[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=767329=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-767329 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 06/May/22 17:30
Start Date: 06/May/22 17:30
Worklog Time Spent: 10m
Work Description: jojochuang merged PR #4104:
URL: https://github.com/apache/hadoop/pull/4104

Issue Time Tracking
---
Worklog Id: (was: 767329)
Time Spent: 4h 20m (was: 4h 10m)

> Improve EC pread: avoid potential reading whole block
> -
>
> Key: HDFS-16520
> URL: https://issues.apache.org/jira/browse/HDFS-16520
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: dfsclient, ec
> Affects Versions: 3.3.1, 3.3.2
> Reporter: daimin
> Assignee: daimin
> Priority: Major
> Labels: pull-request-available
> Time Spent: 4h 20m
> Remaining Estimate: 0h
>
> In the HDFS client, 'pread' stands for 'position read': this kind of read needs
> only a range of data rather than the whole file/block. Through
> BlockReaderFactory#setLength, the client tells the datanode how much of the
> block to read from disk and send to the client.
> For EC files, the length to read is not set well: both pread and sread default
> to 'block.getBlockSize() - offsetInBlock'. As a result, the datanode reads and
> sends much more data than needed and aborts only when the client closes the
> connection, which wastes a lot of resources.

--
This message was sent by Atlassian Jira (v8.20.7#820007)
-
To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=766415=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-766415

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 05/May/22 04:52

Start Date: 05/May/22 04:52

Worklog Time Spent: 10m

Work Description: jojochuang commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r865553016

##

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSStripedInputStream.java:

##

@@ -664,4 +666,70 @@ public void testUnbuffer() throws Exception {
     assertNull(in.parityBuf);
     in.close();
   }
+
+  @Test
+  public void testBlockReader() throws Exception {
+    ecPolicy = StripedFileTestUtil.getDefaultECPolicy(); // RS-6-3-1024k

Review Comment:

OK, thank you. That makes sense.

Issue Time Tracking

---

Worklog Id: (was: 766415)

Time Spent: 4h 10m (was: 4h)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=766396=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-766396

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 05/May/22 02:54

Start Date: 05/May/22 02:54

Worklog Time Spent: 10m

Work Description: cndaimin commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r865523712

##

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSStripedInputStream.java:

##

@@ -664,4 +666,70 @@ public void testUnbuffer() throws Exception {
     assertNull(in.parityBuf);
     in.close();
   }
+
+  @Test
+  public void testBlockReader() throws Exception {
+    ecPolicy = StripedFileTestUtil.getDefaultECPolicy(); // RS-6-3-1024k

Review Comment:

@jojochuang Thanks for your review. Because
`TestDFSStripedInputStreamWithRandomECPolicy` extends
`TestDFSStripedInputStream`, `testBlockReader` would fail when it runs under the
random EC policy chosen by `TestDFSStripedInputStreamWithRandomECPolicy`. One
option is to pin a fixed EC policy, as this line does. Another is to extend the
test case so that it adapts to any EC policy, which could also be considered.
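To illustrate why a test that checks per-block reads is sensitive to the EC policy, here is a minimal sketch of striped-layout arithmetic. It assumes the RS-6-3-1024k parameters named in the diff comment (6 data units, 1024 KiB cells) and round-robin cell placement; `StripeLayoutSketch` and its methods are hypothetical names, not Hadoop APIs.

```java
// Hypothetical helper, not part of Hadoop: maps a logical offset inside a
// striped block group to the internal data block that stores it.
class StripeLayoutSketch {
    // Assumed parameters of RS-6-3-1024k: 6 data units, 1024 KiB cells.
    static final int DATA_UNITS = 6;
    static final long CELL_SIZE = 1024L * 1024L;

    /** Index (0..DATA_UNITS-1) of the internal data block holding this byte. */
    static int dataBlockIndex(long offsetInGroup) {
        // Cells are assumed to be placed round-robin across the data blocks.
        return (int) ((offsetInGroup / CELL_SIZE) % DATA_UNITS);
    }

    /** Position of this byte inside that internal block. */
    static long offsetInInternalBlock(long offsetInGroup) {
        long stripeIndex = offsetInGroup / (CELL_SIZE * DATA_UNITS);
        return stripeIndex * CELL_SIZE + offsetInGroup % CELL_SIZE;
    }

    public static void main(String[] args) {
        long offset = 7 * CELL_SIZE + 5; // 5 bytes into the eighth cell
        System.out.println(dataBlockIndex(offset));
        System.out.println(offsetInInternalBlock(offset));
    }
}
```

Under these assumptions the sample offset falls in internal block 1, at 1 MiB + 5 bytes. With a different cell size or data-unit count the same logical offset maps elsewhere, so assertions on per-block offsets and lengths would need to be derived from the policy rather than hard-coded.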

Issue Time Tracking

---

Worklog Id: (was: 766396)

Time Spent: 4h (was: 3h 50m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=764730=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-764730

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 01/May/22 05:34

Start Date: 01/May/22 05:34

Worklog Time Spent: 10m

Work Description: jojochuang commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r862421383

##

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSStripedInputStream.java:

##

@@ -664,4 +666,70 @@ public void testUnbuffer() throws Exception {
     assertNull(in.parityBuf);
     in.close();
   }
+
+  @Test
+  public void testBlockReader() throws Exception {
+    ecPolicy = StripedFileTestUtil.getDefaultECPolicy(); // RS-6-3-1024k

Review Comment:

The variable doesn't seem to be used. Remove this line?

Issue Time Tracking

---

Worklog Id: (was: 764730)

Time Spent: 3h 50m (was: 3h 40m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

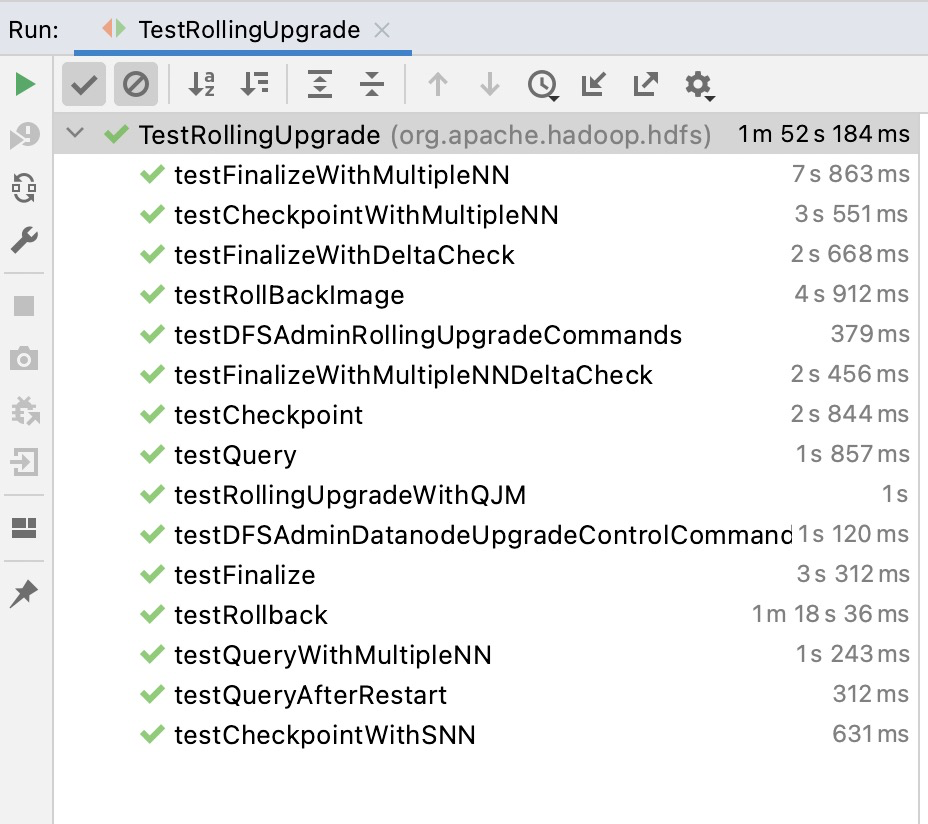

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=763480=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-763480 ] ASF GitHub Bot logged work on HDFS-16520: - Author: ASF GitHub Bot Created on: 28/Apr/22 12:37 Start Date: 28/Apr/22 12:37 Worklog Time Spent: 10m Work Description: cndaimin commented on PR #4104: URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1112151903 @ferhui The failed test `hadoop.hdfs.TestRollingUpgrade` seems unrelated, and it runs success on my local PC:  Issue Time Tracking --- Worklog Id: (was: 763480) Time Spent: 3h 40m (was: 3.5h) > Improve EC pread: avoid potential reading whole block > - > > Key: HDFS-16520 > URL: https://issues.apache.org/jira/browse/HDFS-16520 > Project: Hadoop HDFS > Issue Type: Improvement > Components: dfsclient, ec >Affects Versions: 3.3.1, 3.3.2 >Reporter: daimin >Assignee: daimin >Priority: Major > Labels: pull-request-available > Time Spent: 3h 40m > Remaining Estimate: 0h > > HDFS client 'pread' represents 'position read', this kind of read just need a > range of data instead of reading the whole file/block. By using > BlockReaderFactory#setLength, client tells datanode the block length to be > read from disk and sent to client. > To EC file, the block length to read is not well set, by default using > 'block.getBlockSize() - offsetInBlock' to both pread and sread. Thus datanode > read much more data and send to client, and abort when client closes > connection. There is a lot waste of resource to this situation. -- This message was sent by Atlassian Jira (v8.20.7#820007) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=763424=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-763424 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 28/Apr/22 11:03
Start Date: 28/Apr/22 11:03
Worklog Time Spent: 10m
Work Description: ferhui commented on PR #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1112070060

@cndaimin Can you check and confirm whether the failed test is related to this PR?

Issue Time Tracking
---
Worklog Id: (was: 763424)
Time Spent: 3.5h (was: 3h 20m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=762192=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-762192

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 26/Apr/22 09:10

Start Date: 26/Apr/22 09:10

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1109548468

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 44s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 16m 39s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 23s | | trunk passed |
| +1 :green_heart: | compile | 6m 20s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 6m 1s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 36s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 4s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 28s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 49s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 25s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 55s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 30s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 18s | | the patch passed |
| +1 :green_heart: | compile | 6m 9s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 6m 9s | | the patch passed |
| +1 :green_heart: | compile | 5m 46s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 46s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 15s | | the patch passed |
| +1 :green_heart: | mvnsite | 2m 29s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 44s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 15s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 4s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 44s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 42s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 245m 41s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/8/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 16s | | The patch does not generate ASF License warnings. |
| | | 394m 14s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/8/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 0fd20f4378da 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 719ae6e859d2af3d72e0a75c4854b3f6734ec6f8 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761941=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761941

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 25/Apr/22 17:59

Start Date: 25/Apr/22 17:59

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1108871769

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 42s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 15m 54s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 23s | | trunk passed |
| +1 :green_heart: | compile | 6m 23s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 6m 4s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 36s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 6s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 24s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 47s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 24s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 51s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 31s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 19s | | the patch passed |
| +1 :green_heart: | compile | 6m 2s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 6m 2s | | the patch passed |
| +1 :green_heart: | compile | 5m 47s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 47s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 14s | | the patch passed |
| +1 :green_heart: | mvnsite | 2m 30s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 46s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 17s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 2s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 46s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 37s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 241m 21s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/7/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 18s | | The patch does not generate ASF License warnings. |
| | | 388m 55s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestDFSStripedInputStreamWithRandomECPolicy |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 57b83b423bd6 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / ba75850a01e1369a49239199bf7f4c748ddef6cf |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761720=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761720

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 25/Apr/22 11:30

Start Date: 25/Apr/22 11:30

Worklog Time Spent: 10m

Work Description: cndaimin commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r857526838

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DFSStripedInputStream.java:

##

@@ -250,9 +255,16 @@ boolean createBlockReader(LocatedBlock block, long offsetInBlock,
         if (dnInfo == null) {
           break;
         }
+        if (readTo < 0 || readTo > block.getBlockSize()) {
+          readTo = block.getBlockSize();
+        }
         reader = getBlockReader(block, offsetInBlock,
-            block.getBlockSize() - offsetInBlock,
+            readTo - offsetInBlock,
             dnInfo.addr, dnInfo.storageType, dnInfo.info);
+        if (blockReaderListener != null) {

Review Comment:

Updated. Thanks @ferhui
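The length computation changed by the hunk above can be sketched in isolation as follows. This is an illustration only: `ReadLengthSketch` and `lengthToRequest` are hypothetical names (in the patch the logic sits inline in `createBlockReader`), and the byte values are made up for the example.

```java
// Hypothetical, standalone restatement of the clamp shown in the diff.
final class ReadLengthSketch {
    /**
     * Number of bytes to request from the datanode.
     * A negative or too-large readTo falls back to the end of the block,
     * which matches the old behaviour (blockSize - offsetInBlock).
     */
    static long lengthToRequest(long blockSize, long offsetInBlock, long readTo) {
        if (readTo < 0 || readTo > blockSize) {
            readTo = blockSize;
        }
        return readTo - offsetInBlock;
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        long blockSize = 128 * mib;      // assumed internal block size
        long offset = mib;               // read starts 1 MiB into the block
        // Bounded read: only the 64 KiB range is requested.
        System.out.println(lengthToRequest(blockSize, offset, offset + 64 * 1024));
        // No bound given (-1): the rest of the block is requested.
        System.out.println(lengthToRequest(blockSize, offset, -1));
    }
}
```

In this sketch a bounded read asks for 65536 bytes, while an unbounded one asks for the remaining 127 MiB, which is the over-read the issue describes.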

Issue Time Tracking

---

Worklog Id: (was: 761720)

Time Spent: 3h (was: 2h 50m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761711=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761711

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 25/Apr/22 11:16

Start Date: 25/Apr/22 11:16

Worklog Time Spent: 10m

Work Description: ferhui commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r857516983

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DFSStripedInputStream.java:

##

@@ -250,9 +255,16 @@ boolean createBlockReader(LocatedBlock block, long offsetInBlock,
         if (dnInfo == null) {
           break;
         }
+        if (readTo < 0 || readTo > block.getBlockSize()) {
+          readTo = block.getBlockSize();
+        }
         reader = getBlockReader(block, offsetInBlock,
-            block.getBlockSize() - offsetInBlock,
+            readTo - offsetInBlock,
             dnInfo.addr, dnInfo.storageType, dnInfo.info);
+        if (blockReaderListener != null) {

Review Comment:

Thanks, it looks good.

But please check the checkstyle results again.

Issue Time Tracking

---

Worklog Id: (was: 761711)

Time Spent: 2h 50m (was: 2h 40m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761685=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761685

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 25/Apr/22 09:59

Start Date: 25/Apr/22 09:59

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1108352875

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 16m 47s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 24s | | trunk passed |
| +1 :green_heart: | compile | 6m 21s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 6m 1s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 37s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 3s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 26s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 47s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 25s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 58s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 32s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 17s | | the patch passed |
| +1 :green_heart: | compile | 6m 0s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 6m 0s | | the patch passed |
| +1 :green_heart: | compile | 5m 48s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 48s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 1m 16s | [/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/6/artifact/out/results-checkstyle-hadoop-hdfs-project.txt) | hadoop-hdfs-project: The patch generated 2 new + 31 unchanged - 0 fixed = 33 total (was 31) |
| +1 :green_heart: | mvnsite | 2m 34s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 45s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 14s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 10s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 55s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 37s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 246m 7s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/6/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 16s | | The patch does not generate ASF License warnings. |
| | | 394m 51s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestClientProtocolForPipelineRecovery |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux cb6cf665c582 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk /

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761571=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761571

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 25/Apr/22 03:26

Start Date: 25/Apr/22 03:26

Worklog Time Spent: 10m

Work Description: cndaimin commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r857234796

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DFSStripedInputStream.java:

##

@@ -250,9 +255,16 @@ boolean createBlockReader(LocatedBlock block, long offsetInBlock,
         if (dnInfo == null) {
           break;
         }
+        if (readTo < 0 || readTo > block.getBlockSize()) {
+          readTo = block.getBlockSize();
+        }
         reader = getBlockReader(block, offsetInBlock,
-            block.getBlockSize() - offsetInBlock,
+            readTo - offsetInBlock,
             dnInfo.addr, dnInfo.storageType, dnInfo.info);
+        if (blockReaderListener != null) {

Review Comment:

> This is for testing, right? Can we use a fault injector here? Refer to DFSClientFaultInjector.

Yes, `DFSClientFaultInjector` is better here. Thanks, updated.
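For readers unfamiliar with the pattern being suggested, a fault injector is a swappable singleton whose hooks do nothing in production and are overridden in tests. The sketch below shows the general idea only; `ReaderFaultInjector`, `RecordingInjector`, `StripedReaderSketch`, and the `onCreateBlockReader(long, long)` hook are hypothetical names for illustration and are not taken from the patch or from `DFSClientFaultInjector` itself.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical injector: production code calls the hook, which is a no-op by default.
class ReaderFaultInjector {
    private static ReaderFaultInjector instance = new ReaderFaultInjector();

    static ReaderFaultInjector get() {
        return instance;
    }

    static void set(ReaderFaultInjector injector) {
        instance = injector;
    }

    /** Hook invoked when a block reader is created; does nothing by default. */
    void onCreateBlockReader(long offset, long length) {
    }
}

// Test-side subclass that records every (offset, length) pair it sees.
class RecordingInjector extends ReaderFaultInjector {
    final List<long[]> calls = new ArrayList<>();

    @Override
    void onCreateBlockReader(long offset, long length) {
        calls.add(new long[] {offset, length});
    }
}

// Stand-in for the code under test.
class StripedReaderSketch {
    static void createBlockReader(long offsetInBlock, long length) {
        ReaderFaultInjector.get().onCreateBlockReader(offsetInBlock, length);
        // A real implementation would open the block reader here.
    }
}
```

A test would install a `RecordingInjector` with `ReaderFaultInjector.set(...)`, perform the read, and then assert on the recorded lengths, without the production class needing a dedicated listener field such as `blockReaderListener`.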

Issue Time Tracking

---

Worklog Id: (was: 761571)

Time Spent: 2.5h (was: 2h 20m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=761400=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-761400

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 24/Apr/22 06:53

Start Date: 24/Apr/22 06:53

Worklog Time Spent: 10m

Work Description: ferhui commented on code in PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#discussion_r849327618

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DFSStripedInputStream.java:

##

@@ -250,9 +255,16 @@ boolean createBlockReader(LocatedBlock block, long offsetInBlock,
         if (dnInfo == null) {
           break;
         }
+        if (readTo < 0 || readTo > block.getBlockSize()) {
+          readTo = block.getBlockSize();
+        }
         reader = getBlockReader(block, offsetInBlock,
-            block.getBlockSize() - offsetInBlock,
+            readTo - offsetInBlock,
             dnInfo.addr, dnInfo.storageType, dnInfo.info);
+        if (blockReaderListener != null) {

Review Comment:

It is for test here, right? Can we use fault injector here? Refer to DFSClientFaultInjector
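
The fault-injector approach suggested in this comment replaces a test-only listener field with a swappable singleton whose hooks do nothing in production. The following is a rough sketch of that pattern only: `ReaderFaultInjector`, `onCreateBlockReader`, `createReader` and `recordLengths` are hypothetical names chosen for illustration, and none of this is the actual `DFSClientFaultInjector` API.

```java
import java.util.ArrayList;
import java.util.List;

final class InjectorSketch {
  /** Stand-in for the production path: computes the length and notifies the injector. */
  static long createReader(long blockSize, long offsetInBlock, long readTo) {
    if (readTo < 0 || readTo > blockSize) {
      readTo = blockSize;
    }
    long length = readTo - offsetInBlock;
    // In production the default injector is installed, so this call is a no-op.
    ReaderFaultInjector.get().onCreateBlockReader(offsetInBlock, length);
    return length;
  }

  /** Test-side usage: install a recording injector, trigger a read, restore the default. */
  static List<Long> recordLengths() {
    final List<Long> seen = new ArrayList<>();
    ReaderFaultInjector previous = ReaderFaultInjector.get();
    ReaderFaultInjector.set(new ReaderFaultInjector() {
      @Override
      void onCreateBlockReader(long offsetInBlock, long length) {
        seen.add(length);
      }
    });
    try {
      createReader(1024L * 1024, 0, 8192); // assumed 1 MiB block, 8 KiB read
    } finally {
      ReaderFaultInjector.set(previous);
    }
    return seen;
  }

  public static void main(String[] args) {
    System.out.println(recordLengths()); // prints [8192]
  }
}

/** Hypothetical injector: hooks are empty by default and overridden only in tests. */
class ReaderFaultInjector {
  private static ReaderFaultInjector instance = new ReaderFaultInjector();

  static ReaderFaultInjector get() {
    return instance;
  }

  static void set(ReaderFaultInjector injector) {
    instance = injector;
  }

  /** Called by the production code path whenever a reader is created. */
  void onCreateBlockReader(long offsetInBlock, long length) {
    // Intentionally empty in production.
  }
}
```

Compared with a nullable listener field, this keeps the production class free of test-only state and avoids the `!= null` check at the call site.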

Issue Time Tracking

---

Worklog Id: (was: 761400)

Time Spent: 2h 20m (was: 2h 10m)


[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=760665=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-760665 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 22/Apr/22 07:30
Start Date: 22/Apr/22 07:30
Worklog Time Spent: 10m
Work Description: cndaimin commented on PR #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1106106812

@tasanuma Thanks for your review.

Issue Time Tracking
---
Worklog Id: (was: 760665)
Time Spent: 2h 10m (was: 2h)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=759745=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-759745 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 21/Apr/22 04:01
Start Date: 21/Apr/22 04:01
Worklog Time Spent: 10m
Work Description: cndaimin commented on PR #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1104683519

@Hexiaoqiao @jojochuang Could you please take a look at this PR? In practice, we saved a lot of client memory, as well as network traffic for both the client and the datanode, by avoiding reading extra data.

Issue Time Tracking
---
Worklog Id: (was: 759745)
Time Spent: 2h (was: 1h 50m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=759744=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-759744 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 21/Apr/22 04:01
Start Date: 21/Apr/22 04:01
Worklog Time Spent: 10m
Work Description: cndaimin commented on PR #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1104683461

@Hexiaoqiao @jojochuang Could you please take a look at this PR? In practice, we saved a lot of client memory, as well as network traffic for both the client and the datanode, by avoiding reading extra data.

Issue Time Tracking
---
Worklog Id: (was: 759744)
Time Spent: 1h 50m (was: 1h 40m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=755344=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755344

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 17:42

Start Date: 11/Apr/22 17:42

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1095344815

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 15m 46s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 2s | | trunk passed |
| +1 :green_heart: | compile | 5m 59s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 5m 44s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 17s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 30s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 49s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 16s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 59s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 45s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 5s | | the patch passed |
| +1 :green_heart: | compile | 5m 54s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 5m 54s | | the patch passed |
| +1 :green_heart: | compile | 5m 37s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 37s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 5s | | the patch passed |
| +1 :green_heart: | mvnsite | 2m 10s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 29s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 0s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 47s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 29s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 23s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | unit | 228m 54s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 50s | | The patch does not generate ASF License warnings. |
| | | 369m 3s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 480a7fa0fba0 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 2ce6ac6e6c7161fa9dd6d84bfb3645c4127ae533 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/5/testReport/ |
| Max. process+thread count | 3437 (vs. ulimit of 5500) |

| modules | C: hadoop-hdfs-project/hadoop-hdfs-client

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=755156=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-755156

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 11/Apr/22 09:54

Start Date: 11/Apr/22 09:54

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on PR #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1094839139

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 42s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 16m 13s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 18s | | trunk passed |
| +1 :green_heart: | compile | 6m 1s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 5m 45s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 17s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 25s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 52s | | trunk passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 2m 20s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 54s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 1s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 7s | | the patch passed |
| +1 :green_heart: | compile | 5m 54s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 5m 54s | | the patch passed |
| +1 :green_heart: | compile | 5m 33s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 33s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 1m 6s | [/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/4/artifact/out/results-checkstyle-hadoop-hdfs-project.txt) | hadoop-hdfs-project: The patch generated 1 new + 29 unchanged - 0 fixed = 30 total (was 29) |
| +1 :green_heart: | mvnsite | 2m 11s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 29s | | the patch passed with JDK Ubuntu-11.0.14.1+1-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 57s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 45s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 26s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 22s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 229m 20s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 50s | | The patch does not generate ASF License warnings. |
| | | 370m 31s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestDFSStripedInputStreamWithRandomECPolicy |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 9f7e91d244d9 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk /

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=750089=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-750089 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 30/Mar/22 13:14
Start Date: 30/Mar/22 13:14
Worklog Time Spent: 10m
Work Description: cndaimin commented on pull request #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1083126362

@ferhui I updated this PR by adding a test to verify the length of block readers. Could you please take a look again? Thanks a lot.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking
---
Worklog Id: (was: 750089)
Time Spent: 1h 20m (was: 1h 10m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=749392=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-749392

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 29/Mar/22 15:21

Start Date: 29/Mar/22 15:21

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1082009485

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 39s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 59s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 23m 19s | | trunk passed |
| +1 :green_heart: | compile | 6m 5s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 5m 37s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 12s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 32s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 49s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 16s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 45s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 41s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 7s | | the patch passed |
| +1 :green_heart: | compile | 5m 44s | | the patch passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 5m 44s | | the patch passed |
| +1 :green_heart: | compile | 5m 32s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 5m 32s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 1m 2s | [/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/3/artifact/out/results-checkstyle-hadoop-hdfs-project.txt) | hadoop-hdfs-project: The patch generated 3 new + 29 unchanged - 0 fixed = 32 total (was 29) |
| +1 :green_heart: | mvnsite | 2m 11s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 29s | | the patch passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 0s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 49s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 41s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 23s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | unit | 226m 1s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 47s | | The patch does not generate ASF License warnings. |
| | | 361m 8s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 259ffc61732c 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 3dac78493d53795dbe739c9942e9594b1b84da6a |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

|

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=749099=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-749099 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 29/Mar/22 03:19
Start Date: 29/Mar/22 03:19
Worklog Time Spent: 10m
Work Description: cndaimin commented on pull request #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1081358576

@jojochuang @ferhui Thanks for your review. The correctness of pread looks well covered by `TestDFSStripedInputStream#testPread`. I will try to add some extra tests to verify the length of block readers.

Issue Time Tracking
---
Worklog Id: (was: 749099)
Time Spent: 1h (was: 50m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=748447=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-748447 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 28/Mar/22 06:57
Start Date: 28/Mar/22 06:57
Worklog Time Spent: 10m
Work Description: ferhui commented on pull request #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1080269124

Good catch! Overall this looks great. @cndaimin, could you please add a test case?

Issue Time Tracking
---
Worklog Id: (was: 748447)
Time Spent: 50m (was: 40m)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[ https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=747582=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-747582 ]

ASF GitHub Bot logged work on HDFS-16520:
-
Author: ASF GitHub Bot
Created on: 25/Mar/22 02:32
Start Date: 25/Mar/22 02:32
Worklog Time Spent: 10m
Work Description: jojochuang commented on pull request #4104:
URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1078594592

This looks like a great improvement. @sodonnel, @umamaheswararao, @ferhui, @tasanuma, you may be interested.

Issue Time Tracking
---
Worklog Id: (was: 747582)
Time Spent: 40m (was: 0.5h)

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=747170=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-747170

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 24/Mar/22 12:11

Start Date: 24/Mar/22 12:11

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1077561251

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 39s | | trunk passed |
| +1 :green_heart: | compile | 1m 3s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 0m 55s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 30s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 1s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 46s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 40s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 31s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 58s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 50s | | the patch passed |
| +1 :green_heart: | compile | 0m 54s | | the patch passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 0m 54s | | the patch passed |
| +1 :green_heart: | compile | 0m 47s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 47s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 20s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 49s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 35s | | the patch passed with JDK

Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 0m 32s | | the patch passed with JDK

Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | spotbugs | 2m 32s | | the patch passed |

| +1 :green_heart: | shadedclient | 22m 59s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 2m 24s | | hadoop-hdfs-client in the patch

passed. |

| +1 :green_heart: | asflicense | 0m 35s | | The patch does not

generate ASF License warnings. |

| | | 96m 3s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 993df16c3cc5 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / ca5aafce9f31472ec2b9b58bfdb7349371a98aa6 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/2/testReport/ |
| Max. process+thread count | 726 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs-client U: hadoop-hdfs-project/hadoop-hdfs-client |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/2/console |

|

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block

[

https://issues.apache.org/jira/browse/HDFS-16520?focusedWorklogId=747022=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-747022

]

ASF GitHub Bot logged work on HDFS-16520:

-

Author: ASF GitHub Bot

Created on: 24/Mar/22 08:40

Start Date: 24/Mar/22 08:40

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4104:

URL: https://github.com/apache/hadoop/pull/4104#issuecomment-1077373154

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 43s | | trunk passed |
| +1 :green_heart: | compile | 1m 2s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 0m 57s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 0m 30s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 4s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 46s | | trunk passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 39s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 36s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 49s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 51s | | the patch passed |
| +1 :green_heart: | compile | 0m 53s | | the patch passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 0m 53s | | the patch passed |
| +1 :green_heart: | compile | 0m 47s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 0m 47s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 20s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs-client.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/1/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs-client.txt) | hadoop-hdfs-project/hadoop-hdfs-client: The patch generated 3 new + 16 unchanged - 0 fixed = 19 total (was 16) |
| +1 :green_heart: | mvnsite | 0m 50s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 35s | | the patch passed with JDK Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 33s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 30s | | the patch passed |
| +1 :green_heart: | shadedclient | 21m 43s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 23s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | asflicense | 0m 34s | | The patch does not generate ASF License warnings. |
| | | 94m 54s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4104/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4104 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux ef7ab1a1ea82 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / afaa5ded02cde8eac9a6521f3fdea4be314e011c |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.14+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results |

[jira] [Work logged] (HDFS-16520) Improve EC pread: avoid potential reading whole block