[GitHub] [flink] flinkbot edited a comment on issue #11871: [FLINK-17333][doc] add doc for 'create catalog' ddl

flinkbot edited a comment on issue #11871: URL: https://github.com/apache/flink/pull/11871#issuecomment-618188399 ## CI report: * ac40522a46f3f22f747e1196d45d91543cc6a87a Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161564583) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=102) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11872: [FLINK-17227][metrics]Remove Datadog shade-plugin relocations

flinkbot edited a comment on issue #11872: URL: https://github.com/apache/flink/pull/11872#issuecomment-618188449 ## CI report: * 6ee34138baa74abb4b7c1f71ea98e360340a2b8c Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161564598) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=103) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11749: [FLINK-16669][python][table] Support Python UDF in SQL function DDL.

flinkbot edited a comment on issue #11749: URL: https://github.com/apache/flink/pull/11749#issuecomment-613901508 ## CI report: * e3255e2c9750fa3c457bc853849c5c02ad463c2d Travis: [FAILURE](https://travis-ci.com/github/flink-ci/flink/builds/161384844) Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=43) * af2712838656f4c716a40d0248d2d9b0129b29cd UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #10059: [FLINK-14543][FLINK-15901][table] Support partition for temporary table and HiveCatalog

flinkbot edited a comment on issue #10059: URL: https://github.com/apache/flink/pull/10059#issuecomment-548289939 ## CI report: * 5f91592c6f010dbb52511c54568c5d3c82082433 UNKNOWN * 13dab75e74ed139bb8802dcf2de0ef87464f046b Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161549021) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=93) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-17335) JDBCUpsertTableSink Upsert mysql exception No value specified for parameter 1

[

https://issues.apache.org/jira/browse/FLINK-17335?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

yutao updated FLINK-17335:

--

Description:

JDBCUpsertTableSink build = JDBCUpsertTableSink.builder()

.setTableSchema(results.getSchema())

.setOptions(JDBCOptions.builder()

.setDBUrl("MultiQueries=true=true=UTF-8")

.setDriverName("com.mysql.jdbc.Driver")

.setUsername("***")

.setPassword("***")

.setTableName("xkf_join_result")

.build())

.setFlushIntervalMills(1000)

.setFlushMaxSize(100)

.setMaxRetryTimes(3)

.build();

DataStream> retract = bsTableEnv.toRetractStream(results,

Row.class);

retract.print();

build.emitDataStream(retract);

java.sql.SQLException: No value specified for parameter 1

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:965)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:898)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:887)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:861)

at

com.mysql.jdbc.PreparedStatement.checkAllParametersSet(PreparedStatement.java:2211)

at com.mysql.jdbc.PreparedStatement.fillSendPacket(PreparedStatement.java:2191)

at com.mysql.jdbc.PreparedStatement.fillSendPacket(PreparedStatement.java:2121)

at com.mysql.jdbc.PreparedStatement.execute(PreparedStatement.java:1162)

at

org.apache.flink.api.java.io.jdbc.writer.UpsertWriter.executeBatch(UpsertWriter.java:118)

at

org.apache.flink.api.java.io.jdbc.JDBCUpsertOutputFormat.flush(JDBCUpsertOutputFormat.java:159)

at

org.apache.flink.api.java.io.jdbc.JDBCUpsertSinkFunction.snapshotState(JDBCUpsertSinkFunction.java:56)

at

org.apache.flink.streaming.util.functions.StreamingFunctionUtils.trySnapshotFunctionState(StreamingFunctionUtils.java:118)

at

org.apache.flink.streaming.util.functions.StreamingFunctionUtils.snapshotFunctionState(StreamingFunctionUtils.java:99)

at

org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.snapshotState(AbstractUdfStreamOperator.java:90)

at

org.apache.flink.streaming.api.operators.AbstractStreamOperator.snapshotState(AbstractStreamOperator.java:402)

at

org.apache.flink.streaming.runtime.tasks.StreamTask$CheckpointingOperation.checkpointStreamOperator(StreamTask.java:1420)

at

org.apache.flink.streaming.runtime.tasks.StreamTask$CheckpointingOperation.executeCheckpointing(StreamTask.java:1354)

in code UpsertWriter you can see executeBatch() method ;when only one record

and tuple2’s first element is true then end for round

;deleteStatement.executeBatch(); get error

@Override

public void executeBatch() throws SQLException {

if (keyToRows.size() > 0) {

for (Map.Entry> entry : keyToRows.entrySet()) {

Row pk = entry.getKey();

Tuple2 tuple = entry.getValue();

if (tuple.f0) {

processOneRowInBatch(pk, tuple.f1);

} else {

setRecordToStatement(deleteStatement, pkTypes, pk);

deleteStatement.addBatch();

}

}

internalExecuteBatch();

deleteStatement.executeBatch();

keyToRows.clear();

}

> JDBCUpsertTableSink Upsert mysql exception No value specified for parameter 1

> -

>

> Key: FLINK-17335

> URL: https://issues.apache.org/jira/browse/FLINK-17335

> Project: Flink

> Issue Type: Bug

> Components: Connectors / JDBC

>Affects Versions: 1.10.0

>Reporter: yutao

>Priority: Major

>

> JDBCUpsertTableSink build = JDBCUpsertTableSink.builder()

> .setTableSchema(results.getSchema())

> .setOptions(JDBCOptions.builder()

> .setDBUrl("MultiQueries=true=true=UTF-8")

> .setDriverName("com.mysql.jdbc.Driver")

> .setUsername("***")

> .setPassword("***")

> .setTableName("xkf_join_result")

> .build())

> .setFlushIntervalMills(1000)

> .setFlushMaxSize(100)

> .setMaxRetryTimes(3)

> .build();

> DataStream> retract =

> bsTableEnv.toRetractStream(results, Row.class);

> retract.print();

> build.emitDataStream(retract);

> java.sql.SQLException: No value specified for parameter 1

> at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:965)

> at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:898)

> at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:887)

> at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:861)

> at

> com.mysql.jdbc.PreparedStatement.checkAllParametersSet(PreparedStatement.java:2211)

> at

> com.mysql.jdbc.PreparedStatement.fillSendPacket(PreparedStatement.java:2191)

> at

> com.mysql.jdbc.PreparedStatement.fillSendPacket(PreparedStatement.java:2121)

> at com.mysql.jdbc.PreparedStatement.execute(PreparedStatement.java:1162)

> at

> org.apache.flink.api.java.io.jdbc.writer.UpsertWriter.executeBatch(UpsertWriter.java:118)

> at

> org.apache.flink.api.java.io.jdbc.JDBCUpsertOutputFormat.flush(JDBCUpsertOutputFormat.java:159)

> at

> org.apache.flink.api.java.io.jdbc.JDBCUpsertSinkFunction.snapshotState(JDBCUpsertSinkFunction.java:56)

> at

>

[jira] [Created] (FLINK-17335) JDBCUpsertTableSink Upsert mysql exception No value specified for parameter 1

yutao created FLINK-17335: - Summary: JDBCUpsertTableSink Upsert mysql exception No value specified for parameter 1 Key: FLINK-17335 URL: https://issues.apache.org/jira/browse/FLINK-17335 Project: Flink Issue Type: Bug Components: Connectors / JDBC Affects Versions: 1.10.0 Reporter: yutao -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot commented on issue #11872: [FLINK-17227][metrics]Remove Datadog shade-plugin relocations

flinkbot commented on issue #11872: URL: https://github.com/apache/flink/pull/11872#issuecomment-618188449 ## CI report: * 6ee34138baa74abb4b7c1f71ea98e360340a2b8c UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11870: [FLINK-17117][SQL-Blink]Remove useless cast class code for processElement method in SourceCon…

flinkbot edited a comment on issue #11870: URL: https://github.com/apache/flink/pull/11870#issuecomment-618183162 ## CI report: * c4c6356dfbd46e26171b6128482eecab13fe7d96 Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161563127) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=101) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11797: [FLINK-17169][table-blink] Refactor BaseRow to use RowKind instead of byte header

flinkbot edited a comment on issue #11797: URL: https://github.com/apache/flink/pull/11797#issuecomment-615294694 ## CI report: * 85f40e3041783b1dbda1eb3b812f23e77936f7b3 UNKNOWN * b0730cb05f9d77f9d34ab7221020931ef5d2532d Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161547746) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=91) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11869: [FLINK-17111][table] Support SHOW VIEWS in Flink SQL

flinkbot edited a comment on issue #11869: URL: https://github.com/apache/flink/pull/11869#issuecomment-618183108 ## CI report: * 28223e277ee06677a7c973300e3e1c85902874fd Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161563117) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=100) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11766: [FLINK-16812][jdbc] support array types in PostgresRowConverter

flinkbot edited a comment on issue #11766: URL: https://github.com/apache/flink/pull/11766#issuecomment-614431072 ## CI report: * 3d58ff0f0f2f4caac54b5bc38dac153ae4f4ecf2 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/160495187) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=7558) * 8f39828265ace10973087d314a1efe93c08c1ea0 Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161563026) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=98) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on issue #11871: [FLINK-17333][doc] add doc for 'create catalog' ddl

flinkbot commented on issue #11871: URL: https://github.com/apache/flink/pull/11871#issuecomment-618188399 ## CI report: * ac40522a46f3f22f747e1196d45d91543cc6a87a UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11859: [FLINK-16485][python] Support vectorized Python UDF in batch mode of old planner

flinkbot edited a comment on issue #11859: URL: https://github.com/apache/flink/pull/11859#issuecomment-617655293 ## CI report: * d7d37b26b6dc41871ee56900f8e9b6ed16b3fcf6 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161549162) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=94) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11867: [FLINK-17309][e2e tests][WIP]TPC-DS fail to run data generator

flinkbot edited a comment on issue #11867: URL: https://github.com/apache/flink/pull/11867#issuecomment-617863974 ## CI report: * 9c3d9347f989a84184f598190271f5e0b4703ba0 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161547778) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=92) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11804: [FLINK-16473][doc][jdbc] add documentation for JDBCCatalog and PostgresCatalog

flinkbot edited a comment on issue #11804: URL: https://github.com/apache/flink/pull/11804#issuecomment-615960634 ## CI report: * d467bd31393f9dc171b6625f9053360b73bfd64d Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161487842) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=86) * b87a6c85fdcb0e63257ae3ee917837bff41c68ed Travis: [PENDING](https://travis-ci.com/github/flink-ci/flink/builds/161563068) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=99) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on issue #11870: [FLINK-17117][SQL-Blink]Remove useless cast class code for processElement method in SourceCon…

flinkbot commented on issue #11870: URL: https://github.com/apache/flink/pull/11870#issuecomment-618183162 ## CI report: * c4c6356dfbd46e26171b6128482eecab13fe7d96 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11804: [FLINK-16473][doc][jdbc] add documentation for JDBCCatalog and PostgresCatalog

flinkbot edited a comment on issue #11804: URL: https://github.com/apache/flink/pull/11804#issuecomment-615960634 ## CI report: * d467bd31393f9dc171b6625f9053360b73bfd64d Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161487842) Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=86) * b87a6c85fdcb0e63257ae3ee917837bff41c68ed UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on issue #11869: [FLINK-17111][table] Support SHOW VIEWS in Flink SQL

flinkbot commented on issue #11869: URL: https://github.com/apache/flink/pull/11869#issuecomment-618183108 ## CI report: * 28223e277ee06677a7c973300e3e1c85902874fd UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11859: [FLINK-16485][python] Support vectorized Python UDF in batch mode of old planner

flinkbot edited a comment on issue #11859: URL: https://github.com/apache/flink/pull/11859#issuecomment-617655293 ## CI report: * d7d37b26b6dc41871ee56900f8e9b6ed16b3fcf6 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161549162) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=94) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11766: [FLINK-16812][jdbc] support array types in PostgresRowConverter

flinkbot edited a comment on issue #11766: URL: https://github.com/apache/flink/pull/11766#issuecomment-614431072 ## CI report: * 3d58ff0f0f2f4caac54b5bc38dac153ae4f4ecf2 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/160495187) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=7558) * 8f39828265ace10973087d314a1efe93c08c1ea0 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on issue #11871: [FLINK-17333][doc] add doc for 'create catalog' ddl

flinkbot commented on issue #11871: URL: https://github.com/apache/flink/pull/11871#issuecomment-618182406 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit ac40522a46f3f22f747e1196d45d91543cc6a87a (Thu Apr 23 05:14:31 UTC 2020) ✅no warnings Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-17289) Translate tutorials/etl.md to chinese

[ https://issues.apache.org/jira/browse/FLINK-17289?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee reassigned FLINK-17289: Assignee: Li Ying > Translate tutorials/etl.md to chinese > - > > Key: FLINK-17289 > URL: https://issues.apache.org/jira/browse/FLINK-17289 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation / Training >Reporter: David Anderson >Assignee: Li Ying >Priority: Major > > This is one of the new tutorials, and it needs translation. > docs/tutorials/etl.zh.md does not exist yet. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot commented on issue #11872: [FLINK-17227][metrics]Remove Datadog shade-plugin relocations

flinkbot commented on issue #11872: URL: https://github.com/apache/flink/pull/11872#issuecomment-618182391 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 6ee34138baa74abb4b7c1f71ea98e360340a2b8c (Thu Apr 23 05:14:29 UTC 2020) **Warnings:** * **1 pom.xml files were touched**: Check for build and licensing issues. * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-17289) Translate tutorials/etl.md to chinese

[ https://issues.apache.org/jira/browse/FLINK-17289?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17090254#comment-17090254 ] Jingsong Lee commented on FLINK-17289: -- [~lyee] Assigned. > Translate tutorials/etl.md to chinese > - > > Key: FLINK-17289 > URL: https://issues.apache.org/jira/browse/FLINK-17289 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation / Training >Reporter: David Anderson >Assignee: Li Ying >Priority: Major > > This is one of the new tutorials, and it needs translation. > docs/tutorials/etl.zh.md does not exist yet. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (FLINK-17289) Translate tutorials/etl.md to chinese

[ https://issues.apache.org/jira/browse/FLINK-17289?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17090188#comment-17090188 ] Li Ying edited comment on FLINK-17289 at 4/23/20, 5:11 AM: --- Hi David, I'd like to do the translation. Could you please assign this job to me :) was (Author: lyee): Hi David, I'd like to do the translation. Could you please assigh this job to me :) > Translate tutorials/etl.md to chinese > - > > Key: FLINK-17289 > URL: https://issues.apache.org/jira/browse/FLINK-17289 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation / Training >Reporter: David Anderson >Priority: Major > > This is one of the new tutorials, and it needs translation. > docs/tutorials/etl.zh.md does not exist yet. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-17334) Flink does not support HIVE UDFs with primitive return types

[ https://issues.apache.org/jira/browse/FLINK-17334?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17090249#comment-17090249 ] Jingsong Lee commented on FLINK-17334: -- Hi [~royruan] thanks for reporting. Can you provide more information? Like what hive UDF? Maybe you can show the code. > Flink does not support HIVE UDFs with primitive return types > - > > Key: FLINK-17334 > URL: https://issues.apache.org/jira/browse/FLINK-17334 > Project: Flink > Issue Type: Bug > Components: Connectors / Hive >Affects Versions: 1.10.0 >Reporter: xin.ruan >Priority: Major > Fix For: 1.10.1 > > Original Estimate: 72h > Remaining Estimate: 72h > > We are currently migrating Hive UDF to Flink. While testing compatibility, we > found that Flink cannot support primitive types like boolean, int, etc. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-17333) add doc for "create ddl"

[ https://issues.apache.org/jira/browse/FLINK-17333?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-17333: --- Labels: pull-request-available (was: ) > add doc for "create ddl" > > > Key: FLINK-17333 > URL: https://issues.apache.org/jira/browse/FLINK-17333 > Project: Flink > Issue Type: Improvement > Components: Documentation, Table SQL / API >Reporter: Bowen Li >Assignee: Bowen Li >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-17333) add doc for 'create catalog' ddl

[ https://issues.apache.org/jira/browse/FLINK-17333?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Bowen Li updated FLINK-17333: - Summary: add doc for 'create catalog' ddl (was: add doc for "create ddl") > add doc for 'create catalog' ddl > > > Key: FLINK-17333 > URL: https://issues.apache.org/jira/browse/FLINK-17333 > Project: Flink > Issue Type: Improvement > Components: Documentation, Table SQL / API >Reporter: Bowen Li >Assignee: Bowen Li >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (FLINK-17209) Allow users to specify dialect in sql-client yaml

[ https://issues.apache.org/jira/browse/FLINK-17209?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee closed FLINK-17209. Resolution: Fixed master: aa489269a1429f25136765af94b05d10ef5b7fd3 > Allow users to specify dialect in sql-client yaml > - > > Key: FLINK-17209 > URL: https://issues.apache.org/jira/browse/FLINK-17209 > Project: Flink > Issue Type: Sub-task > Components: Table SQL / Client >Reporter: Rui Li >Assignee: Rui Li >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > Time Spent: 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (FLINK-17198) DDL and DML compatibility for Hive connector

[ https://issues.apache.org/jira/browse/FLINK-17198?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee reassigned FLINK-17198: Assignee: Rui Li > DDL and DML compatibility for Hive connector > > > Key: FLINK-17198 > URL: https://issues.apache.org/jira/browse/FLINK-17198 > Project: Flink > Issue Type: New Feature > Components: Connectors / Hive, Table SQL / Client >Reporter: Rui Li >Assignee: Rui Li >Priority: Major > Fix For: 1.11.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-17227) Remove Datadog relocations

[ https://issues.apache.org/jira/browse/FLINK-17227?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-17227: --- Labels: pull-request-available (was: ) > Remove Datadog relocations > -- > > Key: FLINK-17227 > URL: https://issues.apache.org/jira/browse/FLINK-17227 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Metrics >Reporter: Chesnay Schepler >Assignee: molsion mo >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > > Now that we load the Datadog reporter as a plugin we should remove the > shade-plugin configuration/relocations. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-17209) Allow users to specify dialect in sql-client yaml

[ https://issues.apache.org/jira/browse/FLINK-17209?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee updated FLINK-17209: - Fix Version/s: 1.11.0 > Allow users to specify dialect in sql-client yaml > - > > Key: FLINK-17209 > URL: https://issues.apache.org/jira/browse/FLINK-17209 > Project: Flink > Issue Type: Sub-task > Components: Table SQL / Client >Reporter: Rui Li >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > Time Spent: 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] molsionmo opened a new pull request #11872: [FLINK-17227][metrics]Remove Datadog shade-plugin relocations

molsionmo opened a new pull request #11872: URL: https://github.com/apache/flink/pull/11872 ## What is the purpose of the change *Now that we load the Datadog reporters as plugins we should remove the shade-plugin configuration/relocations.* ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (not documented) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-17209) Allow users to specify dialect in sql-client yaml

[ https://issues.apache.org/jira/browse/FLINK-17209?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee reassigned FLINK-17209: Assignee: Rui Li > Allow users to specify dialect in sql-client yaml > - > > Key: FLINK-17209 > URL: https://issues.apache.org/jira/browse/FLINK-17209 > Project: Flink > Issue Type: Sub-task > Components: Table SQL / Client >Reporter: Rui Li >Assignee: Rui Li >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > Time Spent: 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] bowenli86 opened a new pull request #11871: [FLINK-17333][doc] add doc for 'create catalog' ddl

bowenli86 opened a new pull request #11871: URL: https://github.com/apache/flink/pull/11871 ## What is the purpose of the change add doc for "create catalog" ddl ## Brief change log ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: n/a ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (docs) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] bowenli86 commented on issue #11766: [FLINK-16812][jdbc] support array types in PostgresRowConverter

bowenli86 commented on issue #11766: URL: https://github.com/apache/flink/pull/11766#issuecomment-618180474 @wuchong addressed comments. pls take another look This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] bowenli86 commented on issue #11804: [FLINK-16473][doc][jdbc] add documentation for JDBCCatalog and PostgresCatalog

bowenli86 commented on issue #11804: URL: https://github.com/apache/flink/pull/11804#issuecomment-618180336 @wuchong can you take another look? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-17334) Flink does not support HIVE UDFs with primitive return types

[ https://issues.apache.org/jira/browse/FLINK-17334?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] xin.ruan updated FLINK-17334: - Summary: Flink does not support HIVE UDFs with primitive return types (was: Flink does not support UDFs with primitive return types) > Flink does not support HIVE UDFs with primitive return types > - > > Key: FLINK-17334 > URL: https://issues.apache.org/jira/browse/FLINK-17334 > Project: Flink > Issue Type: Bug > Components: Connectors / Hive >Affects Versions: 1.10.0 >Reporter: xin.ruan >Priority: Major > Fix For: 1.10.1 > > Original Estimate: 72h > Remaining Estimate: 72h > > We are currently migrating Hive UDF to Flink. While testing compatibility, we > found that Flink cannot support primitive types like boolean, int, etc. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (FLINK-17334) Flink does not support UDFs with primitive return types

xin.ruan created FLINK-17334: Summary: Flink does not support UDFs with primitive return types Key: FLINK-17334 URL: https://issues.apache.org/jira/browse/FLINK-17334 Project: Flink Issue Type: Bug Components: Connectors / Hive Affects Versions: 1.10.0 Reporter: xin.ruan Fix For: 1.10.1 We are currently migrating Hive UDF to Flink. While testing compatibility, we found that Flink cannot support primitive types like boolean, int, etc. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (FLINK-17138) LocalExecutorITCase.testParameterizedTypes failed on travis

[

https://issues.apache.org/jira/browse/FLINK-17138?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jingsong Lee resolved FLINK-17138.

--

Resolution: Fixed

master: 27d1a48cc5c1ef7baf506d7c0db4d01ebdca6b70

Feel free to re-open if it is reproduced.

> LocalExecutorITCase.testParameterizedTypes failed on travis

> ---

>

> Key: FLINK-17138

> URL: https://issues.apache.org/jira/browse/FLINK-17138

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.10.0

>Reporter: Piotr Nowojski

>Assignee: Rui Li

>Priority: Major

> Labels: pull-request-available

> Fix For: 1.11.0

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> https://api.travis-ci.org/v3/job/674770944/log.txt

> release-1.10 branch build failed with

> {code}

> 11:49:51.608 [INFO] Running

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase

> 11:52:40.202 [ERROR] Tests run: 64, Failures: 0, Errors: 1, Skipped: 5, Time

> elapsed: 168.589 s <<< FAILURE! - in

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase

> 11:52:40.209 [ERROR] testParameterizedTypes[Planner:

> blink](org.apache.flink.table.client.gateway.local.LocalExecutorITCase) Time

> elapsed: 5.609 s <<< ERROR!

> org.apache.flink.table.client.gateway.SqlExecutionException: Invalid SQL

> statement.

> at

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:903)

> Caused by: org.apache.flink.table.api.ValidationException: SQL validation

> failed. Failed to get PrimaryKey constraints

> at

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:903)

> Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Failed

> to get PrimaryKey constraints

> at

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:903)

> Caused by: java.lang.reflect.InvocationTargetException

> at

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:903)

> Caused by: org.apache.hadoop.hive.metastore.api.MetaException: No current

> connection.

> at

> org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:903)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] flinkbot commented on issue #11870: [FLINK-17117][SQL-Blink]Remove useless cast class code for processElement method in SourceCon…

flinkbot commented on issue #11870: URL: https://github.com/apache/flink/pull/11870#issuecomment-618179221 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit c4c6356dfbd46e26171b6128482eecab13fe7d96 (Thu Apr 23 05:02:05 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-17333) add doc for "create ddl"

Bowen Li created FLINK-17333: Summary: add doc for "create ddl" Key: FLINK-17333 URL: https://issues.apache.org/jira/browse/FLINK-17333 Project: Flink Issue Type: Improvement Components: Documentation, Table SQL / API Reporter: Bowen Li Assignee: Bowen Li Fix For: 1.11.0 -- This message was sent by Atlassian Jira (v8.3.4#803005)

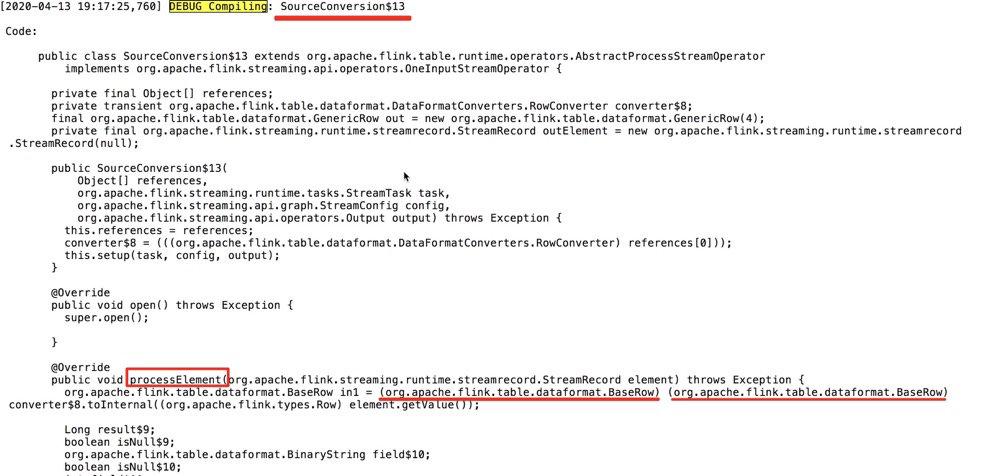

[GitHub] [flink] hehuiyuan opened a new pull request #11870: [FLINK-17117][SQL-Blink]Remove useless cast class code for processElement method in SourceCon…

hehuiyuan opened a new pull request #11870:

URL: https://github.com/apache/flink/pull/11870

This method `generateOneInputStreamOperator` when OperatorCodeGenerator

generates SourceConversion:

```

@Override

public void processElement($STREAM_RECORD $ELEMENT) throws Exception {

$inputTypeTerm $inputTerm = ($inputTypeTerm)

${converter(s"$ELEMENT.getValue()")};

${ctx.reusePerRecordCode()}

${ctx.reuseLocalVariableCode()}

${if (lazyInputUnboxingCode) "" else ctx.reuseInputUnboxingCode()}

$processCode

}

$inputTypeTerm $inputTerm = ($inputTypeTerm)

${converter(s"$ELEMENT.getValue()")};

ScanUtil calls generateOneInputStreamOperator

val generatedOperator =

OperatorCodeGenerator.generateOneInputStreamOperator[Any, BaseRow](

ctx,

convertName,

processCode,

outputRowType,

converter = inputTermConverter)

//inputTermConverter

val (inputTermConverter, inputRowType) = {

val convertFunc = CodeGenUtils.genToInternal(ctx, inputType)

internalInType match {

case rt: RowType => (convertFunc, rt)

case _ => ((record: String) =>

s"$GENERIC_ROW.of(${convertFunc(record)})",

RowType.of(internalInType))

}

}

```

There is an useless cast class code:

```

$inputTypeTerm $inputTerm = ($inputTypeTerm)

${converter(s"$ELEMENT.getValue()")};

```

CodeGenUtils.scala : genToInternal

```

def genToInternal(ctx: CodeGeneratorContext, t: DataType): String => String

= {

val iTerm = boxedTypeTermForType(fromDataTypeToLogicalType(t))

if (isConverterIdentity(t)) {

term => s"($iTerm) $term"

} else {

val eTerm = boxedTypeTermForExternalType(t)

val converter = ctx.addReusableObject(

DataFormatConverters.getConverterForDataType(t),

"converter")

term => s"($iTerm) $converter.toInternal(($eTerm) $term)"

}

}

```

The code `($iTerm) ` and `($inputTypeTerm)` are same.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (FLINK-17117) There are an useless cast operation for sql on blink when generate code

[

https://issues.apache.org/jira/browse/FLINK-17117?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-17117:

---

Labels: pull-request-available (was: )

> There are an useless cast operation for sql on blink when generate code

>

>

> Key: FLINK-17117

> URL: https://issues.apache.org/jira/browse/FLINK-17117

> Project: Flink

> Issue Type: Wish

> Components: Table SQL / Planner

>Reporter: hehuiyuan

>Assignee: hehuiyuan

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2020-04-13-19-44-19-174.png

>

>

> !image-2020-04-13-19-44-19-174.png|width=641,height=305!

>

> This mehthod `generateOneInputStreamOperator` when OperatorCodeGenerator

> generates SourceConversion:

> {code:java}

> @Override

> public void processElement($STREAM_RECORD $ELEMENT) throws Exception {

> $inputTypeTerm $inputTerm = ($inputTypeTerm)

> ${converter(s"$ELEMENT.getValue()")};

> ${ctx.reusePerRecordCode()}

> ${ctx.reuseLocalVariableCode()}

> ${if (lazyInputUnboxingCode) "" else ctx.reuseInputUnboxingCode()}

> $processCode

> }

> {code}

>

> {code:java}

> $inputTypeTerm $inputTerm = ($inputTypeTerm)

> ${converter(s"$ELEMENT.getValue()")};

> {code}

> ScanUtil calls generateOneInputStreamOperator

> {code:java}

> val generatedOperator =

> OperatorCodeGenerator.generateOneInputStreamOperator[Any, BaseRow](

> ctx,

> convertName,

> processCode,

> outputRowType,

> converter = inputTermConverter)

> //inputTermConverter

> val (inputTermConverter, inputRowType) = {

> val convertFunc = CodeGenUtils.genToInternal(ctx, inputType)

> internalInType match {

> case rt: RowType => (convertFunc, rt)

> case _ => ((record: String) => s"$GENERIC_ROW.of(${convertFunc(record)})",

> RowType.of(internalInType))

> }

> }

> {code}

> CodeGenUtils.scala : genToInternal

> {code:java}

> def genToInternal(ctx: CodeGeneratorContext, t: DataType): String => String =

> {

> val iTerm = boxedTypeTermForType(fromDataTypeToLogicalType(t))

> if (isConverterIdentity(t)) {

> term => s"($iTerm) $term"

> } else {

> val eTerm = boxedTypeTermForExternalType(t)

> val converter = ctx.addReusableObject(

> DataFormatConverters.getConverterForDataType(t),

> "converter")

> term => s"($iTerm) $converter.toInternal(($eTerm) $term)"

> }

> }

> {code}

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on issue #10059: [FLINK-14543][FLINK-15901][table] Support partition for temporary table and HiveCatalog

flinkbot edited a comment on issue #10059: URL: https://github.com/apache/flink/pull/10059#issuecomment-548289939 ## CI report: * 5f91592c6f010dbb52511c54568c5d3c82082433 UNKNOWN * 13dab75e74ed139bb8802dcf2de0ef87464f046b Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161549021) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=93) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on issue #11869: [FLINK-17111][table] Support SHOW VIEWS in Flink SQL

flinkbot commented on issue #11869: URL: https://github.com/apache/flink/pull/11869#issuecomment-618175194 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 28223e277ee06677a7c973300e3e1c85902874fd (Thu Apr 23 04:47:15 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! * **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-17111).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work. Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-17111) Support SHOW VIEWS in Flink SQL

[ https://issues.apache.org/jira/browse/FLINK-17111?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-17111: --- Labels: pull-request-available (was: ) > Support SHOW VIEWS in Flink SQL > > > Key: FLINK-17111 > URL: https://issues.apache.org/jira/browse/FLINK-17111 > Project: Flink > Issue Type: Sub-task > Components: Table SQL / API, Table SQL / Planner >Affects Versions: 1.10.0 >Reporter: Zhenghua Gao >Priority: Major > Labels: pull-request-available > Fix For: 1.11.0 > > > SHOW TABLES and SHOW VIEWS are not SQL standard-compliant commands. > MySQL supports SHOW TABLES which lists the non-TEMPORARY tables(and views) in > a given database, and doesn't support SHOW VIEWS. > Oracle/SQL Server/PostgreSQL don't support SHOW TABLES and SHOW VIEWS. A > workaround is to query a system table which stores metadata of tables and > views. > Hive supports both SHOW TABLES and SHOW VIEWS. > We follows the Hive style which lists all tables and views with SHOW TABLES > and lists only views with SHOW VIEWS. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] docete opened a new pull request #11869: [FLINK-17111][table] Support SHOW VIEWS in Flink SQL

docete opened a new pull request #11869: URL: https://github.com/apache/flink/pull/11869 ## What is the purpose of the change FLINK-17106 introduces create/drop view in Flink SQL. But we can't list views from TableEnvironment or SQL. This PR supports SHOW VIEWS in Flink SQL. BTW: We follows the Hive style which lists all tables and views with SHOW TABLES and lists only views with SHOW VIEWS. ## Brief change log - 546d367 Add show views syntax in sql parser - fe6f4d9 Add listViews interface in TableEnvironment - 39fb3f9 hotfix create/drop view in batch mode for legacy planner - 05288fe Support SHOW VIEWS in blink planner - 28223e2 Support SHOW VIEWS in legacy planner ## Verifying this change This change added tests ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (yes / **no**) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (**yes** / no) - The serializers: (yes / **no** / don't know) - The runtime per-record code paths (performance sensitive): (yes / **no** / don't know) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (yes / **no** / don't know) - The S3 file system connector: (yes / **no** / don't know) ## Documentation - Does this pull request introduce a new feature? (**yes** / no) - If yes, how is the feature documented? (not applicable / docs / **JavaDocs** / not documented) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on issue #11867: [FLINK-17309][e2e tests][WIP]TPC-DS fail to run data generator

flinkbot edited a comment on issue #11867: URL: https://github.com/apache/flink/pull/11867#issuecomment-617863974 ## CI report: * 9c3d9347f989a84184f598190271f5e0b4703ba0 Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161547778) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=92) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-17332) Fix restart policy not equals to Never for native task manager pods

Canbin Zheng created FLINK-17332:

Summary: Fix restart policy not equals to Never for native task

manager pods

Key: FLINK-17332

URL: https://issues.apache.org/jira/browse/FLINK-17332

Project: Flink

Issue Type: Bug

Components: Deployment / Kubernetes

Affects Versions: 1.10.0, 1.10.1

Reporter: Canbin Zheng

Fix For: 1.11.0

Currently, we do not explicitly set the {{RestartPolicy}} for the TaskManager

Pod in native K8s setups so that it is {{Always}} by default. The task manager

pod itself should not restart the failed Container, the decision should always

made by the job manager.

Therefore, this ticket proposes to set the {{RestartPolicy}} to {{Never}} for

the task manager pods.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-17332) Fix restart policy not equals to Never for native task manager pods

[

https://issues.apache.org/jira/browse/FLINK-17332?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Canbin Zheng updated FLINK-17332:

-

Description:

Currently, we do not explicitly set the {{RestartPolicy}} for the task manager

pods in the native K8s setups so that it is {{Always}} by default. The task

manager pod itself should not restart the failed Container, the decision should

always be made by the job manager.

Therefore, this ticket proposes to set the {{RestartPolicy}} to {{Never}} for

the task manager pods.

was:

Currently, we do not explicitly set the {{RestartPolicy}} for the TaskManager

Pod in native K8s setups so that it is {{Always}} by default. The task manager

pod itself should not restart the failed Container, the decision should always

made by the job manager.

Therefore, this ticket proposes to set the {{RestartPolicy}} to {{Never}} for

the task manager pods.

> Fix restart policy not equals to Never for native task manager pods

> ---

>

> Key: FLINK-17332

> URL: https://issues.apache.org/jira/browse/FLINK-17332

> Project: Flink

> Issue Type: Bug

> Components: Deployment / Kubernetes

>Affects Versions: 1.10.0, 1.10.1

>Reporter: Canbin Zheng

>Priority: Major

> Fix For: 1.11.0

>

>

> Currently, we do not explicitly set the {{RestartPolicy}} for the task

> manager pods in the native K8s setups so that it is {{Always}} by default.

> The task manager pod itself should not restart the failed Container, the

> decision should always be made by the job manager.

> Therefore, this ticket proposes to set the {{RestartPolicy}} to {{Never}} for

> the task manager pods.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on issue #11797: [FLINK-17169][table-blink] Refactor BaseRow to use RowKind instead of byte header

flinkbot edited a comment on issue #11797: URL: https://github.com/apache/flink/pull/11797#issuecomment-615294694 ## CI report: * 85f40e3041783b1dbda1eb3b812f23e77936f7b3 UNKNOWN * b0730cb05f9d77f9d34ab7221020931ef5d2532d Travis: [SUCCESS](https://travis-ci.com/github/flink-ci/flink/builds/161547746) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=91) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-17331) Add NettyMessageContent interface for all the class which could be write to NettyMessage

Yangze Guo created FLINK-17331:

--

Summary: Add NettyMessageContent interface for all the class which

could be write to NettyMessage

Key: FLINK-17331

URL: https://issues.apache.org/jira/browse/FLINK-17331

Project: Flink

Issue Type: Improvement

Reporter: Yangze Guo

Currently, there are some classes, e.g. {{JobVertexID}}, {{ExecutionAttemptID}}

need to write to {{NettyMessage}}. However, the size of these classes in

{{ByteBuf}} are directly written in {{NettyMessage}} class, which is

error-prone. If someone edits those classes, there would be no warning or error

during the compile phase. I think it would be better to add a

{{NettyMessageContent}}(the name could be discussed) interface:

{code:java}

public interface NettyMessageContent {

void writeTo(ByteBuf bug)

int getContentLen();

}

{code}

Regarding the {{fromByteBuf}}, since it is a static method, we could not add it

to the interface. We might explain it in the javaDoc of {{NettyMessageContent}}.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-17330) Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking edges

[

https://issues.apache.org/jira/browse/FLINK-17330?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Zhu Zhu updated FLINK-17330:

Description:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1 = {A1, B1, C1, D1}

R2 = {A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). This is

because R1 can be scheduled only if R2 finishes, while R2 can be scheduled only

if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

was:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1 = {A1, B1, C1, D1}

R2 = {A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

> Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking

> edges

> ---

>

> Key: FLINK-17330

> URL: https://issues.apache.org/jira/browse/FLINK-17330

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Priority: Major

> Fix For: 1.11.0

>

>

> Imagine a job like this:

> A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

> A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

> parallelism=2 for all vertices.

> We will have 2 execution pipelined regions:

> R1 = {A1, B1, C1, D1}

> R2 = {A2, B2, C2, D2}

> R1 has a cross-region input edge (B2->D1).

> R2 has a cross-region input edge (B1->D2).

> Scheduling deadlock will happen since we schedule a region only when all its

> inputs are consumable (i.e. blocking partitions to be finished). This is

> because R1 can be scheduled only if R2 finishes, while R2 can be scheduled

> only if R1 finishes.

> To avoid this, one solution is to force a logical pipelined region with

> intra-region ALL-to-ALL blocking edges to form one only execution pipelined

> region, so that there would not be cyclic input dependency between regions.

> Besides that, we should also pay attention to avoid cyclic cross-region

> POINTWISE blocking edges.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (FLINK-17330) Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking edges

[

https://issues.apache.org/jira/browse/FLINK-17330?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17090210#comment-17090210

]

Zhu Zhu commented on FLINK-17330:

-

cc [~gjy] [~trohrmann]

Sorry this case was neglected.

What do you think of the proposal to "make logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region" to avoid cyclic input dependencies between regions?

> Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking

> edges

> ---

>

> Key: FLINK-17330

> URL: https://issues.apache.org/jira/browse/FLINK-17330

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Priority: Major

> Fix For: 1.11.0

>

>

> Imagine a job like this:

> A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

> A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

> parallelism=2 for all vertices.

> We will have 2 execution pipelined regions:

> R1 = {A1, B1, C1, D1}

> R2 = {A2, B2, C2, D2}

> R1 has a cross-region input edge (B2->D1).

> R2 has a cross-region input edge (B1->D2).

> Scheduling deadlock will happen since we schedule a region only when all its

> inputs are consumable (i.e. blocking partitions to be finished). Because R1

> can be scheduled only if R2 finishes, while R2 can be scheduled only if R1

> finishes.

> To avoid this, one solution is to force a logical pipelined region with

> intra-region ALL-to-ALL blocking edges to form one only execution pipelined

> region, so that there would not be cyclic input dependency between regions.

> Besides that, we should also pay attention to avoid cyclic cross-region

> POINTWISE blocking edges.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-17330) Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking edges

[

https://issues.apache.org/jira/browse/FLINK-17330?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Zhu Zhu updated FLINK-17330:

Description:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1={A1, B1, C1, D1}

R2={A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

was:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1={A1, B1, C1, D1}, R2={A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

> Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking

> edges

> ---

>

> Key: FLINK-17330

> URL: https://issues.apache.org/jira/browse/FLINK-17330

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Priority: Major

> Fix For: 1.11.0

>

>

> Imagine a job like this:

> A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

> A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

> parallelism=2 for all vertices.

> We will have 2 execution pipelined regions:

> R1={A1, B1, C1, D1}

> R2={A2, B2, C2, D2}

> R1 has a cross-region input edge (B2->D1).

> R2 has a cross-region input edge (B1->D2).

> Scheduling deadlock will happen since we schedule a region only when all its

> inputs are consumable (i.e. blocking partitions to be finished). Because R1

> can be scheduled only if R2 finishes, while R2 can be scheduled only if R1

> finishes.

> To avoid this, one solution is to force a logical pipelined region with

> intra-region ALL-to-ALL blocking edges to form one only execution pipelined

> region, so that there would not be cyclic input dependency between regions.

> Besides that, we should also pay attention to avoid cyclic cross-region

> POINTWISE blocking edges.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-17330) Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking edges

[

https://issues.apache.org/jira/browse/FLINK-17330?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Zhu Zhu updated FLINK-17330:

Description:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1 = {A1, B1, C1, D1}

R2 = {A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

was:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1={A1, B1, C1, D1}

R2={A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

> Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking

> edges

> ---

>

> Key: FLINK-17330

> URL: https://issues.apache.org/jira/browse/FLINK-17330

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Priority: Major

> Fix For: 1.11.0

>

>

> Imagine a job like this:

> A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

> A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

> parallelism=2 for all vertices.

> We will have 2 execution pipelined regions:

> R1 = {A1, B1, C1, D1}

> R2 = {A2, B2, C2, D2}

> R1 has a cross-region input edge (B2->D1).

> R2 has a cross-region input edge (B1->D2).

> Scheduling deadlock will happen since we schedule a region only when all its

> inputs are consumable (i.e. blocking partitions to be finished). Because R1

> can be scheduled only if R2 finishes, while R2 can be scheduled only if R1

> finishes.

> To avoid this, one solution is to force a logical pipelined region with

> intra-region ALL-to-ALL blocking edges to form one only execution pipelined

> region, so that there would not be cyclic input dependency between regions.

> Besides that, we should also pay attention to avoid cyclic cross-region

> POINTWISE blocking edges.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-17330) Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking edges

[

https://issues.apache.org/jira/browse/FLINK-17330?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Zhu Zhu updated FLINK-17330:

Description:

Imagine a job like this:

A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1={A1, B1, C1, D1}, R2={A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

was:

Imagine a job like this:

A --(pipelined FORWARD)--> B --(blocking ALL-to-ALL)--> D

A --(pipelined FORWARD)--> C --(pipelined FORWARD)--> D

parallelism=2 for all vertices.

We will have 2 execution pipelined regions:

R1={A1, B1, C1, D1}, R2={A2, B2, C2, D2}

R1 has a cross-region input edge (B2->D1).

R2 has a cross-region input edge (B1->D2).

Scheduling deadlock will happen since we schedule a region only when all its

inputs are consumable (i.e. blocking partitions to be finished). Because R1 can

be scheduled only if R2 finishes, while R2 can be scheduled only if R1 finishes.

To avoid this, one solution is to force a logical pipelined region with

intra-region ALL-to-ALL blocking edges to form one only execution pipelined

region, so that there would not be cyclic input dependency between regions.

Besides that, we should also pay attention to avoid cyclic cross-region

POINTWISE blocking edges.

> Avoid scheduling deadlocks caused by intra-logical-region ALL-to-ALL blocking

> edges

> ---

>

> Key: FLINK-17330

> URL: https://issues.apache.org/jira/browse/FLINK-17330

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Priority: Major

> Fix For: 1.11.0

>

>

> Imagine a job like this:

> A -- (pipelined FORWARD) --> B -- (blocking ALL-to-ALL) --> D

> A -- (pipelined FORWARD) --> C -- (pipelined FORWARD) --> D

> parallelism=2 for all vertices.

> We will have 2 execution pipelined regions:

> R1={A1, B1, C1, D1}, R2={A2, B2, C2, D2}

> R1 has a cross-region input edge (B2->D1).

> R2 has a cross-region input edge (B1->D2).