[jira] [Updated] (HIVE-24473) Update HBase version to 2.1.10

[ https://issues.apache.org/jira/browse/HIVE-24473?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Istvan Toth updated HIVE-24473:
---
Attachment: (was: HIVE-24473.patch)

> Update HBase version to 2.1.10
> --
>
> Key: HIVE-24473
> URL: https://issues.apache.org/jira/browse/HIVE-24473
> Project: Hive
> Issue Type: Improvement
> Components: HBase Handler
>Affects Versions: 4.0.0
>Reporter: Istvan Toth
>Assignee: Istvan Toth
>Priority: Major
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> Hive currently builds with a 2.0.0 pre-release.
> Update HBase to more recent version.
> We cannot use anything later than 2.2.4 because of HBASE-22394
> So the options are 2.1.10 and 2.2.4
> I suggest 2.1.10 because it's a chronologically later release, and it
> maximises compatibility with HBase server deployments.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Updated] (HIVE-24473) Update HBase version to 2.1.10

[ https://issues.apache.org/jira/browse/HIVE-24473?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Istvan Toth updated HIVE-24473:
---
Attachment: (was: HIVE-24473.02.patch)

> Update HBase version to 2.1.10
> --
>
> Key: HIVE-24473
> URL: https://issues.apache.org/jira/browse/HIVE-24473
> Project: Hive
> Issue Type: Improvement
> Components: HBase Handler
>Affects Versions: 4.0.0
>Reporter: Istvan Toth
>Assignee: Istvan Toth
>Priority: Major
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> Hive currently builds with a 2.0.0 pre-release.
> Update HBase to more recent version.
> We cannot use anything later than 2.2.4 because of HBASE-22394
> So the options are 2.1.10 and 2.2.4
> I suggest 2.1.10 because it's a chronologically later release, and it
> maximises compatibility with HBase server deployments.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Work logged] (HIVE-24433) AutoCompaction is not getting triggered for CamelCase Partition Values

[

https://issues.apache.org/jira/browse/HIVE-24433?focusedWorklogId=520054&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-520054

]

ASF GitHub Bot logged work on HIVE-24433:

-

Author: ASF GitHub Bot

Created on: 04/Dec/20 07:49

Start Date: 04/Dec/20 07:49

Worklog Time Spent: 10m

Work Description: nareshpr commented on a change in pull request #1712:

URL: https://github.com/apache/hive/pull/1712#discussion_r535899436

##

File path:

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -2725,7 +2725,7 @@ private void insertTxnComponents(long txnid, LockRequest rqst, Connection dbConn
       }
       String dbName = normalizeCase(lc.getDbname());
       String tblName = normalizeCase(lc.getTablename());
-      String partName = normalizeCase(lc.getPartitionname());
+      String partName = lc.getPartitionname();

Review comment:

I changed it to split the partition name and convert the partition key to lower case.
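A minimal sketch of what "split and lower-case the key" could look like, for readers following the thread. It is not the code from pull request #1712. The class name `PartitionNameCase` and the helper `lowerCasePartitionKeys` are hypothetical, and the sketch assumes partition names have the form `key=value` with levels joined by `/`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public class PartitionNameCase {
    /**
     * Lower-cases only the key of each "key=value" segment of a partition
     * name such as "CitY=Bangalore", leaving the value untouched.
     * Hypothetical helper for illustration; not the method from the PR.
     */
    static String lowerCasePartitionKeys(String partName) {
        if (partName == null) {
            return null;
        }
        List<String> out = new ArrayList<>();
        // Assumption: multi-level partition names are joined with "/".
        for (String segment : partName.split("/")) {
            int eq = segment.indexOf('=');
            if (eq < 0) {
                out.add(segment); // not in key=value shape, leave as-is
            } else {
                String key = segment.substring(0, eq).toLowerCase(Locale.ROOT);
                out.add(key + segment.substring(eq)); // keeps "=" and the value
            }
        }
        return String.join("/", out);
    }

    public static void main(String[] args) {
        // prints "city=Bangalore"
        System.out.println(lowerCasePartitionKeys("CitY=Bangalore"));
    }
}
```

With this shape, `CitY='Bangalore'` and `city='Bangalore'` both map to the same partition name, while the value keeps the case it was stored with.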

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 520054)

Time Spent: 2h (was: 1h 50m)

> AutoCompaction is not getting triggered for CamelCase Partition Values

> --

>

> Key: HIVE-24433

> URL: https://issues.apache.org/jira/browse/HIVE-24433

> Project: Hive

> Issue Type: Bug

>Reporter: Naresh P R

>Assignee: Naresh P R

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2h

> Remaining Estimate: 0h

>

> PartitionKeyValue is converted to lowercase in the two places below.

> [https://github.com/apache/hive/blob/master/standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java#L2728]

> [https://github.com/apache/hive/blob/master/standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java#L2851]

> As a result, the TXN_COMPONENTS & HIVE_LOCKS tables do not have entries
> with the proper partition values.
> When the query completes, the entry moves from TXN_COMPONENTS to
> COMPLETED_TXN_COMPONENTS. Hive AutoCompaction then does not recognize the
> partition and treats it as an invalid partition.

> {code:java}

> create table abc(name string) partitioned by(city string) stored as orc

> tblproperties('transactional'='true');

> insert into abc partition(city='Bangalore') values('aaa');

> {code}

> Example entry in COMPLETED_TXN_COMPONENTS

> {noformat}

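> +-----------+--------------+-----------+----------------+---------------------+-------------+-------------------+
> | CTC_TXNID | CTC_DATABASE | CTC_TABLE | CTC_PARTITION  | CTC_TIMESTAMP       | CTC_WRITEID | CTC_UPDATE_DELETE |
> +-----------+--------------+-----------+----------------+---------------------+-------------+-------------------+
> | 2         | default      | abc       | city=bangalore | 2020-11-25 09:26:59 | 1           | N                 |
> +-----------+--------------+-----------+----------------+---------------------+-------------+-------------------+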

> {noformat}

>

> AutoCompaction fails to get triggered, with the error below:

> {code:java}

> 2020-11-25T09:35:10,364 INFO [Thread-9]: compactor.Initiator

> (Initiator.java:run(98)) - Checking to see if we should compact

> default.abc.city=bangalore

> 2020-11-25T09:35:10,380 INFO [Thread-9]: compactor.Initiator

> (Initiator.java:run(155)) - Can't find partition

> default.compaction_test.city=bangalore, assuming it has been dropped and

> moving on{code}

> I verified the 4 SQL statements below with my PR; they all produced the correct
> PartitionKeyValue,
> i.e. COMPLETED_TXN_COMPONENTS.CTC_PARTITION="city=Bangalore"

> {code:java}

> insert into table abc PARTITION(CitY='Bangalore') values('Dan');

> insert overwrite table abc partition(CiTy='Bangalore') select Name from abc;

> update table abc set Name='xy' where CiTy='Bangalore';

> delete from abc where CiTy='Bangalore';{code}


[jira] [Assigned] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

guojh reassigned HIVE-24467:

Assignee: guojh

> ConditionalTask remove tasks that not selected exists thread safety problem

> ---

>

> Key: HIVE-24467

> URL: https://issues.apache.org/jira/browse/HIVE-24467

> Project: Hive

> Issue Type: Bug

> Components: Hive

>Affects Versions: 2.3.4

>Reporter: guojh

>Assignee: guojh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> When Hive executes jobs in parallel (controlled by the “hive.exec.parallel”
> parameter), ConditionalTasks remove the tasks that were not selected in parallel.
> Because this removal is not thread-safe, some tasks may not be removed from the
> dependent task tree. This is a very serious bug: it causes some stage tasks
> never to be triggered for execution.
> In our production cluster, a query ran three conditional tasks in parallel.
> After applying the patch from HIVE-21638, we found that Stage-3 was missed and
> not submitted to the runnable list because its parent Stage-31 was not done.
> But Stage-31 should have been removed, because it was not selected.

> The stage dependencies are below:

> {code:java}

> STAGE DEPENDENCIES:

> Stage-41 is a root stage

> Stage-26 depends on stages: Stage-41

> Stage-25 depends on stages: Stage-26 , consists of Stage-39, Stage-40,

> Stage-2

> Stage-39 has a backup stage: Stage-2

> Stage-23 depends on stages: Stage-39

> Stage-3 depends on stages: Stage-2, Stage-12, Stage-16, Stage-20, Stage-23,

> Stage-24, Stage-27, Stage-28, Stage-31, Stage-32, Stage-35, Stage-36

> Stage-8 depends on stages: Stage-3 , consists of Stage-5, Stage-4, Stage-6

> Stage-5

> Stage-0 depends on stages: Stage-5, Stage-4, Stage-7

> Stage-51 depends on stages: Stage-0

> Stage-4

> Stage-6

> Stage-7 depends on stages: Stage-6

> Stage-40 has a backup stage: Stage-2

> Stage-24 depends on stages: Stage-40

> Stage-2

> Stage-44 is a root stage

> Stage-30 depends on stages: Stage-44

> Stage-29 depends on stages: Stage-30 , consists of Stage-42, Stage-43,

> Stage-12

> Stage-42 has a backup stage: Stage-12

> Stage-27 depends on stages: Stage-42

> Stage-43 has a backup stage: Stage-12

> Stage-28 depends on stages: Stage-43

> Stage-12

> Stage-47 is a root stage

> Stage-34 depends on stages: Stage-47

> Stage-33 depends on stages: Stage-34 , consists of Stage-45, Stage-46,

> Stage-16

> Stage-45 has a backup stage: Stage-16

> Stage-31 depends on stages: Stage-45

> Stage-46 has a backup stage: Stage-16

> Stage-32 depends on stages: Stage-46

> Stage-16

> Stage-50 is a root stage

> Stage-38 depends on stages: Stage-50

> Stage-37 depends on stages: Stage-38 , consists of Stage-48, Stage-49,

> Stage-20

> Stage-48 has a backup stage: Stage-20

> Stage-35 depends on stages: Stage-48

> Stage-49 has a backup stage: Stage-20

> Stage-36 depends on stages: Stage-49

> Stage-20

> {code}

> The stage task execution log is below. Stage-33 is a conditional task that
> consists of Stage-45, Stage-46, and Stage-16. Stage-16 is launched, so Stage-45
> and Stage-46 should be removed from the dependency tree. Stage-31 is a child of
> Stage-45 and a parent of Stage-3, so Stage-31 should be removed too. As the
> log below shows, Stage-31 is still in the parent list of Stage-3, which
> should not happen.

> {code:java}

> 2020-12-03T01:09:50,939 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Launching Job 1 out of 17

> 2020-12-03T01:09:50,940 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-26:MAPRED] in parallel

> 2020-12-03T01:09:50,941 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Launching Job 2 out of 17

> 2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-30:MAPRED] in parallel

> 2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Launching Job 3 out of 17

> 2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-34:MAPRED] in parallel

> 2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Launching Job 4 out of 17

> 2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-38:MAPRED] in parallel

> 2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-29:CONDITIONAL] in parallel

> 2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting task [Stage-33:CONDITIONAL] in parallel

> 2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

> ql.Driver: Starting

[jira] [Work logged] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?focusedWorklogId=519995&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519995

]

ASF GitHub Bot logged work on HIVE-24467:

-

Author: ASF GitHub Bot

Created on: 04/Dec/20 04:29

Start Date: 04/Dec/20 04:29

Worklog Time Spent: 10m

Work Description: anishek commented on pull request #1743:

URL: https://github.com/apache/hive/pull/1743#issuecomment-738557480

may be someone with more experience on the execution side should look at

this, @maheshk114 can you help here ?


Issue Time Tracking

---

Worklog Id: (was: 519995)

Time Spent: 0.5h (was: 20m)

> ConditionalTask remove tasks that not selected exists thread safety problem

> ---

>

> Key: HIVE-24467

> URL: https://issues.apache.org/jira/browse/HIVE-24467

> Project: Hive

> Issue Type: Bug

> Components: Hive

>Affects Versions: 2.3.4

>Reporter: guojh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 0.5h

> Remaining Estimate: 0h


[jira] [Work logged] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?focusedWorklogId=519985&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519985

]

ASF GitHub Bot logged work on HIVE-24467:

-

Author: ASF GitHub Bot

Created on: 04/Dec/20 03:38

Start Date: 04/Dec/20 03:38

Worklog Time Spent: 10m

Work Description: gjhkael commented on pull request #1743:

URL: https://github.com/apache/hive/pull/1743#issuecomment-738545155

@anishek Please review this pr. Thanks.


Issue Time Tracking

---

Worklog Id: (was: 519985)

Time Spent: 20m (was: 10m)

> ConditionalTask remove tasks that not selected exists thread safety problem

> ---

>

> Key: HIVE-24467

> URL: https://issues.apache.org/jira/browse/HIVE-24467

> Project: Hive

> Issue Type: Bug

> Components: Hive

>Affects Versions: 2.3.4

>Reporter: guojh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 20m

> Remaining Estimate: 0h


[jira] [Updated] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HIVE-24467:

--

Labels: pull-request-available (was: )

> ConditionalTask remove tasks that not selected exists thread safety problem

> ---

>

> Key: HIVE-24467

> URL: https://issues.apache.org/jira/browse/HIVE-24467

> Project: Hive

> Issue Type: Bug

> Components: Hive

>Affects Versions: 2.3.4

>Reporter: guojh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h


[jira] [Work logged] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?focusedWorklogId=519984&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519984

]

ASF GitHub Bot logged work on HIVE-24467:

-

Author: ASF GitHub Bot

Created on: 04/Dec/20 03:37

Start Date: 04/Dec/20 03:37

Worklog Time Spent: 10m

Work Description: gjhkael opened a new pull request #1743:

URL: https://github.com/apache/hive/pull/1743


Issue Time Tracking

---

Worklog Id: (was: 519984)

Remaining Estimate: 0h

Time Spent: 10m

> ConditionalTask remove tasks that not selected exists thread safety problem

> ---

>

> Key: HIVE-24467

> URL: https://issues.apache.org/jira/browse/HIVE-24467

> Project: Hive

> Issue Type: Bug

> Components: Hive

>Affects Versions: 2.3.4

>Reporter: guojh

>Priority: Major

> Time Spent: 10m

> Remaining Estimate: 0h


[jira] [Updated] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

guojh updated HIVE-24467:

-

Description:

When Hive executes jobs in parallel (controlled by the “hive.exec.parallel” parameter),
ConditionalTasks remove the tasks that were not selected in parallel. Because this
removal is not thread-safe, some tasks may not be removed from the dependent task
tree. This is a very serious bug: it causes some stage tasks never to be triggered
for execution.
In our production cluster, a query ran three conditional tasks in parallel.
After applying the patch from HIVE-21638, we found that Stage-3 was missed and not
submitted to the runnable list because its parent Stage-31 was not done. But Stage-31
should have been removed, because it was not selected.
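To make the failure mode concrete, here is a minimal sketch of pruning unselected conditional branches from a shared dependency graph under a single lock. It is only an illustration of one way to serialize the removal, not the change in pull request #1743. The `Stage` class, `GRAPH_LOCK`, and all method names are hypothetical stand-ins, not Hive's own classes.

```java
import java.util.ArrayList;
import java.util.List;

public class ConditionalPruneSketch {
    /** Minimal stand-in for a plan stage; this is not Hive's Task class. */
    static final class Stage {
        final String name;
        final List<Stage> parents = new ArrayList<>();
        final List<Stage> children = new ArrayList<>();

        Stage(String name) {
            this.name = name;
        }

        void addChild(Stage child) {
            children.add(child);
            child.parents.add(this);
        }
    }

    // A single lock guards every parent/child list in the graph, so two
    // conditional tasks resolving at once cannot interleave list updates.
    private static final Object GRAPH_LOCK = new Object();

    /** Detaches an unselected stage, and any stage left parentless by it. */
    static void removeUnselected(Stage stage) {
        synchronized (GRAPH_LOCK) {
            detach(stage);
        }
    }

    private static void detach(Stage stage) {
        for (Stage child : new ArrayList<>(stage.children)) {
            child.parents.remove(stage);
            if (child.parents.isEmpty()) {
                detach(child); // e.g. Stage-31 once Stage-45 is gone
            }
        }
        stage.children.clear();
    }

    /** Builds two conditional branches feeding Stage-3 and prunes both in parallel. */
    static List<String> pruneInParallel() {
        Stage s3 = new Stage("Stage-3");
        Stage s16 = new Stage("Stage-16");
        Stage s45 = new Stage("Stage-45");
        Stage s31 = new Stage("Stage-31");
        Stage s12 = new Stage("Stage-12");
        Stage s42 = new Stage("Stage-42");
        Stage s27 = new Stage("Stage-27");
        s16.addChild(s3);
        s45.addChild(s31);
        s31.addChild(s3);
        s12.addChild(s3);
        s42.addChild(s27);
        s27.addChild(s3);

        // Stage-16 and Stage-12 were selected; their unselected siblings must go.
        Thread t1 = new Thread(() -> removeUnselected(s45));
        Thread t2 = new Thread(() -> removeUnselected(s42));
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }

        List<String> names = new ArrayList<>();
        for (Stage parent : s3.parents) {
            names.add(parent.name);
        }
        return names;
    }

    public static void main(String[] args) {
        // prints "[Stage-16, Stage-12]"
        System.out.println(pruneInParallel());
    }
}
```

Without the lock, both threads could modify Stage-3's parent list at the same time. An unsynchronized `ArrayList` gives no guarantees in that case, which is consistent with the symptom reported here: a parent such as Stage-31 is left behind and Stage-3 never becomes runnable.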

The stage dependencies are below:

{code:java}

STAGE DEPENDENCIES:

Stage-41 is a root stage

Stage-26 depends on stages: Stage-41

Stage-25 depends on stages: Stage-26 , consists of Stage-39, Stage-40, Stage-2

Stage-39 has a backup stage: Stage-2

Stage-23 depends on stages: Stage-39

Stage-3 depends on stages: Stage-2, Stage-12, Stage-16, Stage-20, Stage-23,

Stage-24, Stage-27, Stage-28, Stage-31, Stage-32, Stage-35, Stage-36

Stage-8 depends on stages: Stage-3 , consists of Stage-5, Stage-4, Stage-6

Stage-5

Stage-0 depends on stages: Stage-5, Stage-4, Stage-7

Stage-51 depends on stages: Stage-0

Stage-4

Stage-6

Stage-7 depends on stages: Stage-6

Stage-40 has a backup stage: Stage-2

Stage-24 depends on stages: Stage-40

Stage-2

Stage-44 is a root stage

Stage-30 depends on stages: Stage-44

Stage-29 depends on stages: Stage-30 , consists of Stage-42, Stage-43,

Stage-12

Stage-42 has a backup stage: Stage-12

Stage-27 depends on stages: Stage-42

Stage-43 has a backup stage: Stage-12

Stage-28 depends on stages: Stage-43

Stage-12

Stage-47 is a root stage

Stage-34 depends on stages: Stage-47

Stage-33 depends on stages: Stage-34 , consists of Stage-45, Stage-46,

Stage-16

Stage-45 has a backup stage: Stage-16

Stage-31 depends on stages: Stage-45

Stage-46 has a backup stage: Stage-16

Stage-32 depends on stages: Stage-46

Stage-16

Stage-50 is a root stage

Stage-38 depends on stages: Stage-50

Stage-37 depends on stages: Stage-38 , consists of Stage-48, Stage-49,

Stage-20

Stage-48 has a backup stage: Stage-20

Stage-35 depends on stages: Stage-48

Stage-49 has a backup stage: Stage-20

Stage-36 depends on stages: Stage-49

Stage-20

{code}

The stage task execution log is below. Stage-33 is a conditional task consisting of
Stage-45, Stage-46, and Stage-16. Stage-16 was launched, so Stage-45 and Stage-46
should be removed from the dependency tree. Stage-31 is a child of Stage-45 and a
parent of Stage-3, so Stage-31 should be removed too. As the log below shows,
Stage-31 is still in the parent list of Stage-3, which should not happen.

{code:java}

2020-12-03T01:09:50,939 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 1 out of 17

2020-12-03T01:09:50,940 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-26:MAPRED] in parallel

2020-12-03T01:09:50,941 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 2 out of 17

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-30:MAPRED] in parallel

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 3 out of 17

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-34:MAPRED] in parallel

2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 4 out of 17

2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-38:MAPRED] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-29:CONDITIONAL] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-33:CONDITIONAL] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-37:CONDITIONAL] in parallel

2020-12-03T01:10:34,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 5 out of 17

2020-12-03T01:10:34,947 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-16:MAPRED] in parallel

2020-12-03T01:10:34,948 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 6 out of 17

2020-12-03T01:10:34,948 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-12:MAPRED] in parallel

2020-12-03T01:10:34,949 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 7 out of 17

2020-12-03T01:10:34,950 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task

[jira] [Updated] (HIVE-24467) ConditionalTask remove tasks that not selected exists thread safety problem

[

https://issues.apache.org/jira/browse/HIVE-24467?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

guojh updated HIVE-24467:

-

Description:

When Hive executes jobs in parallel (controlled by the “hive.exec.parallel” parameter),
ConditionalTasks remove their unselected tasks in parallel. Because this removal is
not thread-safe, some tasks may not be removed from the dependent task tree. This is
a very serious bug: it causes some stage tasks never to be triggered for execution.

In our production cluster, a query ran three conditional tasks in parallel. After
applying the patch for HIVE-21638, we found that Stage-3 was missing: it was never
submitted to the runnable list because its parent, Stage-31, was not done. But
Stage-31 should have been removed, because it was not selected.

The stage dependencies are below:

{code:java}

STAGE DEPENDENCIES:

Stage-41 is a root stage

Stage-26 depends on stages: Stage-41

Stage-25 depends on stages: Stage-26 , consists of Stage-39, Stage-40, Stage-2

Stage-39 has a backup stage: Stage-2

Stage-23 depends on stages: Stage-39

Stage-3 depends on stages: Stage-2, Stage-12, Stage-16, Stage-20, Stage-23,

Stage-24, Stage-27, Stage-28, Stage-31, Stage-32, Stage-35, Stage-36

Stage-8 depends on stages: Stage-3 , consists of Stage-5, Stage-4, Stage-6

Stage-5

Stage-0 depends on stages: Stage-5, Stage-4, Stage-7

Stage-51 depends on stages: Stage-0

Stage-4

Stage-6

Stage-7 depends on stages: Stage-6

Stage-40 has a backup stage: Stage-2

Stage-24 depends on stages: Stage-40

Stage-2

Stage-44 is a root stage

Stage-30 depends on stages: Stage-44

Stage-29 depends on stages: Stage-30 , consists of Stage-42, Stage-43,

Stage-12

Stage-42 has a backup stage: Stage-12

Stage-27 depends on stages: Stage-42

Stage-43 has a backup stage: Stage-12

Stage-28 depends on stages: Stage-43

Stage-12

Stage-47 is a root stage

Stage-34 depends on stages: Stage-47

Stage-33 depends on stages: Stage-34 , consists of Stage-45, Stage-46,

Stage-16

Stage-45 has a backup stage: Stage-16

Stage-31 depends on stages: Stage-45

Stage-46 has a backup stage: Stage-16

Stage-32 depends on stages: Stage-46

Stage-16

Stage-50 is a root stage

Stage-38 depends on stages: Stage-50

Stage-37 depends on stages: Stage-38 , consists of Stage-48, Stage-49,

Stage-20

Stage-48 has a backup stage: Stage-20

Stage-35 depends on stages: Stage-48

Stage-49 has a backup stage: Stage-20

Stage-36 depends on stages: Stage-49

Stage-20

{code}

The stage task execution log is below. Stage-33 is a conditional task consisting of
Stage-45, Stage-46, and Stage-16. Stage-16 was launched, so Stage-45 and Stage-46
should be removed from the dependency tree. Stage-31 is a child of Stage-45 and a
parent of Stage-3, so Stage-31 should be removed too.

{code:java}

2020-12-03T01:09:50,939 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 1 out of 17

2020-12-03T01:09:50,940 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-26:MAPRED] in parallel

2020-12-03T01:09:50,941 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 2 out of 17

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-30:MAPRED] in parallel

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 3 out of 17

2020-12-03T01:09:50,943 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-34:MAPRED] in parallel

2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 4 out of 17

2020-12-03T01:09:50,944 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-38:MAPRED] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-29:CONDITIONAL] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-33:CONDITIONAL] in parallel

2020-12-03T01:10:32,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-37:CONDITIONAL] in parallel

2020-12-03T01:10:34,946 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 5 out of 17

2020-12-03T01:10:34,947 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-16:MAPRED] in parallel

2020-12-03T01:10:34,948 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 6 out of 17

2020-12-03T01:10:34,948 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-12:MAPRED] in parallel

2020-12-03T01:10:34,949 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Launching Job 7 out of 17

2020-12-03T01:10:34,950 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver: Starting task [Stage-20:MAPRED] in parallel

2020-12-03T01:10:34,950 INFO [HiveServer2-Background-Pool: Thread-87372]

ql.Driver:

[jira] [Updated] (HIVE-24484) Upgrade Hadoop to 3.2.1

[ https://issues.apache.org/jira/browse/HIVE-24484?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HIVE-24484: -- Labels: pull-request-available (was: ) > Upgrade Hadoop to 3.2.1 > --- > > Key: HIVE-24484 > URL: https://issues.apache.org/jira/browse/HIVE-24484 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24484) Upgrade Hadoop to 3.2.1

[ https://issues.apache.org/jira/browse/HIVE-24484?focusedWorklogId=519956=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519956 ] ASF GitHub Bot logged work on HIVE-24484: - Author: ASF GitHub Bot Created on: 04/Dec/20 00:48 Start Date: 04/Dec/20 00:48 Worklog Time Spent: 10m Work Description: belugabehr opened a new pull request #1742: URL: https://github.com/apache/hive/pull/1742 ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519956) Remaining Estimate: 0h Time Spent: 10m > Upgrade Hadoop to 3.2.1 > --- > > Key: HIVE-24484 > URL: https://issues.apache.org/jira/browse/HIVE-24484 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Time Spent: 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-23891) Using UNION sql clause and speculative execution can cause file duplication in Tez

[

https://issues.apache.org/jira/browse/HIVE-23891?focusedWorklogId=519955=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519955

]

ASF GitHub Bot logged work on HIVE-23891:

-

Author: ASF GitHub Bot

Created on: 04/Dec/20 00:47

Start Date: 04/Dec/20 00:47

Worklog Time Spent: 10m

Work Description: github-actions[bot] closed pull request #1294:

URL: https://github.com/apache/hive/pull/1294

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 519955)

Time Spent: 2h 20m (was: 2h 10m)

> Using UNION sql clause and speculative execution can cause file duplication

> in Tez

> --

>

> Key: HIVE-23891

> URL: https://issues.apache.org/jira/browse/HIVE-23891

> Project: Hive

> Issue Type: Bug

>Reporter: George Pachitariu

>Assignee: George Pachitariu

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-23891.1.patch

>

> Time Spent: 2h 20m

> Remaining Estimate: 0h

>

> Hello,

> the specific scenario when this can happen:

> - the execution engine is Tez;

> - speculative execution is on;

> - the query inserts into a table and the last step is a UNION sql clause;

> The problem is that Tez creates an extra layer of subdirectories when there

> is a UNION. Later, when deduplicating, Hive doesn't take that into account

> and only deduplicates folders but not the files inside.

> So for a query like this:

> {code:sql}

> insert overwrite table union_all

> select * from union_first_part

> union all

> select * from union_second_part;

> {code}

> The folder structure afterwards will be like this (a possible example):

> {code:java}

> .../union_all/HIVE_UNION_SUBDIR_1/00_0

> .../union_all/HIVE_UNION_SUBDIR_1/00_1

> .../union_all/HIVE_UNION_SUBDIR_2/00_1

> {code}

> The attached patch increases the number of folder levels that Hive will check

> recursively for duplicates when we have a UNION in Tez.

> Feel free to reach out if you have any questions :).
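The effect described in the quoted report can be sketched in Java on plain path strings. This is an illustration only, not the attached patch and not Hive's own deduplication code. It assumes file names have the form `<taskId>_<attemptId>`, and keeping the first attempt seen is an arbitrary choice made for the example. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class UnionDedupSketch {
    /**
     * Keeps one file per (parent directory, task id).
     * Assumes names look like "<taskId>_<attemptId>"; which attempt
     * survives (here: the first one seen) is an arbitrary choice.
     */
    static List<String> dedupe(List<String> paths) {
        Map<String, String> kept = new LinkedHashMap<>();
        for (String path : paths) {
            int slash = path.lastIndexOf('/');
            String dir = slash < 0 ? "" : path.substring(0, slash);
            String file = path.substring(slash + 1);
            int underscore = file.lastIndexOf('_');
            String taskId = underscore < 0 ? file : file.substring(0, underscore);
            // Keying on the full parent directory means files inside each
            // union subdirectory are compared with each other, instead of
            // the subdirectories themselves being treated as the units.
            kept.putIfAbsent(dir + "/" + taskId, path);
        }
        return new ArrayList<>(kept.values());
    }

    public static void main(String[] args) {
        List<String> files = List.of(
            "union_all/HIVE_UNION_SUBDIR_1/00_0",
            "union_all/HIVE_UNION_SUBDIR_1/00_1",
            "union_all/HIVE_UNION_SUBDIR_2/00_1");
        System.out.println(dedupe(files));
    }
}
```

With the three files from the example listing, the second attempt in `HIVE_UNION_SUBDIR_1` is dropped and the single file in `HIVE_UNION_SUBDIR_2` is kept.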

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Assigned] (HIVE-24484) Upgrade Hadoop to 3.2.1

[ https://issues.apache.org/jira/browse/HIVE-24484?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] David Mollitor reassigned HIVE-24484: - > Upgrade Hadoop to 3.2.1 > --- > > Key: HIVE-24484 > URL: https://issues.apache.org/jira/browse/HIVE-24484 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-21588) Remove HBase dependency from hive-metastore

[ https://issues.apache.org/jira/browse/HIVE-21588?focusedWorklogId=519923=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519923 ] ASF GitHub Bot logged work on HIVE-21588: - Author: ASF GitHub Bot Created on: 04/Dec/20 00:02 Start Date: 04/Dec/20 00:02 Worklog Time Spent: 10m Work Description: sunchao commented on pull request #1723: URL: https://github.com/apache/hive/pull/1723#issuecomment-738461352 Merged. Thanks @wangyum ! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519923) Time Spent: 1h 40m (was: 1.5h) > Remove HBase dependency from hive-metastore > --- > > Key: HIVE-21588 > URL: https://issues.apache.org/jira/browse/HIVE-21588 > Project: Hive > Issue Type: Task > Components: HBase Metastore >Affects Versions: 4.0.0 >Reporter: Yuming Wang >Assignee: Yuming Wang >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21588.01.patch, HIVE-21588.02.patch > > Time Spent: 1h 40m > Remaining Estimate: 0h > > HIVE-17234 has removed HBase metastore from master. But maven dependency have > not been removed. We should remove it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-21588) Remove HBase dependency from hive-metastore

[ https://issues.apache.org/jira/browse/HIVE-21588?focusedWorklogId=519922=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519922 ] ASF GitHub Bot logged work on HIVE-21588: - Author: ASF GitHub Bot Created on: 04/Dec/20 00:01 Start Date: 04/Dec/20 00:01 Worklog Time Spent: 10m Work Description: sunchao merged pull request #1723: URL: https://github.com/apache/hive/pull/1723 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519922) Time Spent: 1.5h (was: 1h 20m) > Remove HBase dependency from hive-metastore > --- > > Key: HIVE-21588 > URL: https://issues.apache.org/jira/browse/HIVE-21588 > Project: Hive > Issue Type: Task > Components: HBase Metastore >Affects Versions: 4.0.0 >Reporter: Yuming Wang >Assignee: Yuming Wang >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21588.01.patch, HIVE-21588.02.patch > > Time Spent: 1.5h > Remaining Estimate: 0h > > HIVE-17234 has removed HBase metastore from master. But maven dependency have > not been removed. We should remove it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-21588) Remove HBase dependency from hive-metastore

[ https://issues.apache.org/jira/browse/HIVE-21588?focusedWorklogId=519921=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519921 ] ASF GitHub Bot logged work on HIVE-21588: - Author: ASF GitHub Bot Created on: 04/Dec/20 00:01 Start Date: 04/Dec/20 00:01 Worklog Time Spent: 10m Work Description: sunchao commented on a change in pull request #1723: URL: https://github.com/apache/hive/pull/1723#discussion_r535735693 ## File path: ql/src/test/org/apache/hadoop/hive/ql/txn/compactor/TestWorker.java ## @@ -17,7 +17,6 @@ */ package org.apache.hadoop.hive.ql.txn.compactor; -import it.unimi.dsi.fastutil.booleans.AbstractBooleanBidirectionalIterator; Review comment: Gotcha, cool. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519921) Time Spent: 1h 20m (was: 1h 10m) > Remove HBase dependency from hive-metastore > --- > > Key: HIVE-21588 > URL: https://issues.apache.org/jira/browse/HIVE-21588 > Project: Hive > Issue Type: Task > Components: HBase Metastore >Affects Versions: 4.0.0 >Reporter: Yuming Wang >Assignee: Yuming Wang >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21588.01.patch, HIVE-21588.02.patch > > Time Spent: 1h 20m > Remaining Estimate: 0h > > HIVE-17234 has removed HBase metastore from master. But maven dependency have > not been removed. We should remove it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

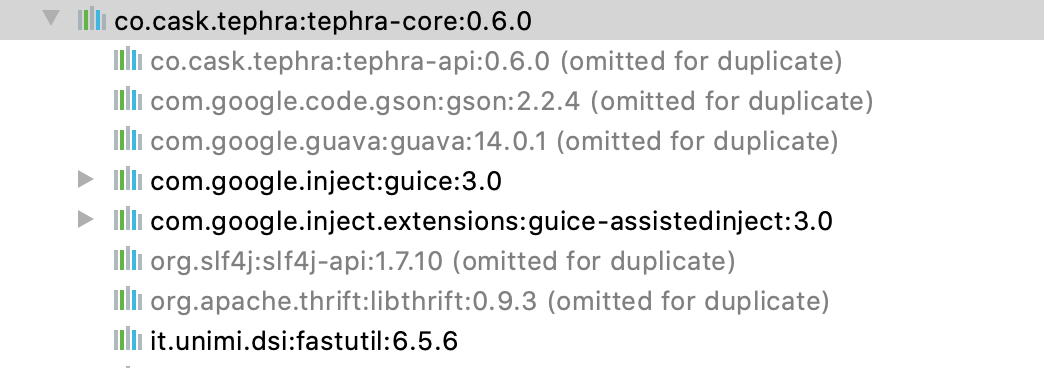

[jira] [Work logged] (HIVE-21588) Remove HBase dependency from hive-metastore

[ https://issues.apache.org/jira/browse/HIVE-21588?focusedWorklogId=519918=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519918 ] ASF GitHub Bot logged work on HIVE-21588: - Author: ASF GitHub Bot Created on: 03/Dec/20 23:36 Start Date: 03/Dec/20 23:36 Worklog Time Spent: 10m Work Description: wangyum commented on a change in pull request #1723: URL: https://github.com/apache/hive/pull/1723#discussion_r535725208 ## File path: ql/src/test/org/apache/hadoop/hive/ql/txn/compactor/TestWorker.java ## @@ -17,7 +17,6 @@ */ package org.apache.hadoop.hive.ql.txn.compactor; -import it.unimi.dsi.fastutil.booleans.AbstractBooleanBidirectionalIterator; Review comment: Yes, this need `it.unimi.dsi:fastutil`, we have removed this dependency:  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519918) Time Spent: 1h 10m (was: 1h) > Remove HBase dependency from hive-metastore > --- > > Key: HIVE-21588 > URL: https://issues.apache.org/jira/browse/HIVE-21588 > Project: Hive > Issue Type: Task > Components: HBase Metastore >Affects Versions: 4.0.0 >Reporter: Yuming Wang >Assignee: Yuming Wang >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21588.01.patch, HIVE-21588.02.patch > > Time Spent: 1h 10m > Remaining Estimate: 0h > > HIVE-17234 has removed HBase metastore from master. But maven dependency have > not been removed. We should remove it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HIVE-24220) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24220?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra resolved HIVE-24220. Resolution: Won't Fix > Unable to reopen a closed bug report > > > Key: HIVE-24220 > URL: https://issues.apache.org/jira/browse/HIVE-24220 > Project: Hive > Issue Type: Bug >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Reopened] (HIVE-24220) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24220?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra reopened HIVE-24220: > Unable to reopen a closed bug report > > > Key: HIVE-24220 > URL: https://issues.apache.org/jira/browse/HIVE-24220 > Project: Hive > Issue Type: Bug >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HIVE-24220) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24220?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra resolved HIVE-24220. Resolution: Fixed > Unable to reopen a closed bug report > > > Key: HIVE-24220 > URL: https://issues.apache.org/jira/browse/HIVE-24220 > Project: Hive > Issue Type: Bug >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Reopened] (HIVE-24220) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24220?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra reopened HIVE-24220: > Unable to reopen a closed bug report > > > Key: HIVE-24220 > URL: https://issues.apache.org/jira/browse/HIVE-24220 > Project: Hive > Issue Type: Bug >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24432) Delete Notification Events in Batches

[ https://issues.apache.org/jira/browse/HIVE-24432?focusedWorklogId=519874=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519874 ] ASF GitHub Bot logged work on HIVE-24432: - Author: ASF GitHub Bot Created on: 03/Dec/20 21:21 Start Date: 03/Dec/20 21:21 Worklog Time Spent: 10m Work Description: belugabehr commented on pull request #1710: URL: https://github.com/apache/hive/pull/1710#issuecomment-738326173 @nrg4878 Review please for HMS work? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519874) Time Spent: 1h 20m (was: 1h 10m) > Delete Notification Events in Batches > - > > Key: HIVE-24432 > URL: https://issues.apache.org/jira/browse/HIVE-24432 > Project: Hive > Issue Type: Improvement >Affects Versions: 3.2.0 >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Labels: pull-request-available > Time Spent: 1h 20m > Remaining Estimate: 0h > > Notification events are loaded in batches (reduces memory pressure on the > HMS), but all of the deletes happen under a single transactions and, when > deleting many records, can put a lot of pressure on the backend database. > Instead, delete events in batches (in different transactions) as well. -- This message was sent by Atlassian Jira (v8.3.4#803005)
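The idea of deleting in batches, each in its own transaction, can be sketched in Java as follows. `FakeStore` is a hypothetical in-memory stand-in for the backend database, and its `commits` counter only models "one transaction per batch". None of these names come from the HMS code or from the pull request.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

class BatchDeleteSketch {
    /** Hypothetical stand-in for the backend database; counts commits. */
    static final class FakeStore {
        final List<Long> eventIds = new ArrayList<>();
        int commits = 0;

        /** Deletes at most batchSize events with id below cutoffId, then commits. */
        int deleteBatch(long cutoffId, int batchSize) {
            int deleted = 0;
            Iterator<Long> it = eventIds.iterator();
            while (it.hasNext() && deleted < batchSize) {
                if (it.next() < cutoffId) {
                    it.remove();
                    deleted++;
                }
            }
            commits++; // each batch is committed on its own
            return deleted;
        }
    }

    /** Repeats small deletes until a batch comes back short. */
    static int deleteAll(FakeStore store, long cutoffId, int batchSize) {
        int total = 0;
        int deleted;
        do {
            deleted = store.deleteBatch(cutoffId, batchSize);
            total += deleted;
        } while (deleted == batchSize);
        return total;
    }

    public static void main(String[] args) {
        FakeStore store = new FakeStore();
        for (long id = 1; id <= 10; id++) {
            store.eventIds.add(id);
        }
        int removed = deleteAll(store, 8L, 3);
        System.out.println(removed + " events removed in " + store.commits + " commits");
    }
}
```

Each commit touches at most `batchSize` rows, so no single transaction has to hold all of the deletes at once.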

[jira] [Work logged] (HIVE-21737) Upgrade Avro to version 1.10.1

[ https://issues.apache.org/jira/browse/HIVE-21737?focusedWorklogId=519872=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519872 ] ASF GitHub Bot logged work on HIVE-21737: - Author: ASF GitHub Bot Created on: 03/Dec/20 21:12 Start Date: 03/Dec/20 21:12 Worklog Time Spent: 10m Work Description: iemejia commented on pull request #1635: URL: https://github.com/apache/hive/pull/1635#issuecomment-738315389 Thanks @sunchao eager to see this finally happening ! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519872) Time Spent: 3h 40m (was: 3.5h) > Upgrade Avro to version 1.10.1 > -- > > Key: HIVE-21737 > URL: https://issues.apache.org/jira/browse/HIVE-21737 > Project: Hive > Issue Type: Improvement > Components: Hive >Reporter: Ismaël Mejía >Assignee: Fokko Driesprong >Priority: Major > Labels: pull-request-available > Attachments: > 0001-HIVE-21737-Make-Avro-use-in-Hive-compatible-with-Avr.patch > > Time Spent: 3h 40m > Remaining Estimate: 0h > > Avro >= 1.9.x bring a lot of fixes including a leaner version of Avro without > Jackson in the public API and Guava as a dependency. Worth the update. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HIVE-24220) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24220?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra resolved HIVE-24220. Resolution: Fixed > Unable to reopen a closed bug report > > > Key: HIVE-24220 > URL: https://issues.apache.org/jira/browse/HIVE-24220 > Project: Hive > Issue Type: Bug >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24468) Use Event Time instead of Current Time in Notification Log DB Entry

[

https://issues.apache.org/jira/browse/HIVE-24468?focusedWorklogId=519861=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519861

]

ASF GitHub Bot logged work on HIVE-24468:

-

Author: ASF GitHub Bot

Created on: 03/Dec/20 20:39

Start Date: 03/Dec/20 20:39

Worklog Time Spent: 10m

Work Description: belugabehr commented on pull request #1728:

URL: https://github.com/apache/hive/pull/1728#issuecomment-738293741

@pvary Just so we are clear, "now()" is not an SQL function. It's
implemented in the DbNotificationListener class:

```
private int now() {
  long millis = System.currentTimeMillis();
  millis /= 1000;
  if (millis > Integer.MAX_VALUE) {
    LOG.warn("We've passed max int value in seconds since the epoch, " +
        "all notification times will be the same!");
    return Integer.MAX_VALUE;
  }
  return (int)millis;
}
```

https://github.com/apache/hive/blob/master/hcatalog/server-extensions/src/main/java/org/apache/hive/hcatalog/listener/DbNotificationListener.java#L941-L950
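For context on the warning in that method, the Java sketch below shows when a seconds-since-epoch value stops fitting in an `int`, and repeats the same clamp on a value passed in as a parameter. The class and method names are hypothetical and only mirror the quoted logic. Nothing here is taken from `DbNotificationListener` beyond what the snippet above shows.

```java
import java.time.Instant;

class EpochSecondsLimit {
    /** The last instant whose epoch-second count still fits in an int. */
    static Instant lastRepresentable() {
        return Instant.ofEpochSecond(Integer.MAX_VALUE);
    }

    /** Same clamp as the quoted now(), but on a caller-supplied time. */
    static int clampToInt(long epochMillis) {
        long seconds = epochMillis / 1000;
        return seconds > Integer.MAX_VALUE ? Integer.MAX_VALUE : (int) seconds;
    }

    public static void main(String[] args) {
        System.out.println(lastRepresentable()); // prints 2038-01-19T03:14:07Z
        System.out.println(clampToInt(System.currentTimeMillis()));
    }
}
```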

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 519861)

Time Spent: 1.5h (was: 1h 20m)

> Use Event Time instead of Current Time in Notification Log DB Entry

> ---

>

> Key: HIVE-24468

> URL: https://issues.apache.org/jira/browse/HIVE-24468

> Project: Hive

> Issue Type: Improvement

>Reporter: David Mollitor

>Assignee: David Mollitor

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1.5h

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Work logged] (HIVE-24468) Use Event Time instead of Current Time in Notification Log DB Entry

[ https://issues.apache.org/jira/browse/HIVE-24468?focusedWorklogId=519849=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519849 ] ASF GitHub Bot logged work on HIVE-24468: - Author: ASF GitHub Bot Created on: 03/Dec/20 20:01 Start Date: 03/Dec/20 20:01 Worklog Time Spent: 10m Work Description: belugabehr edited a comment on pull request #1728: URL: https://github.com/apache/hive/pull/1728#issuecomment-738274162 @pvary I agree with your understanding that the SELECT FOR UPDATE is a lock, and therefore the timestamps should be always increasing, but imagine if the HMS clock on two instances were off by 5s (or more). The HMS with the slower clock would generate events that were earlier in time, but with a higher ID. So there is not strong enforcement of the time being sequential. It's all based on the HMS clocks being in sync and trusting those clocks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519849) Time Spent: 1h 20m (was: 1h 10m) > Use Event Time instead of Current Time in Notification Log DB Entry > --- > > Key: HIVE-24468 > URL: https://issues.apache.org/jira/browse/HIVE-24468 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Labels: pull-request-available > Time Spent: 1h 20m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)
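The clock-skew scenario in the comment above can be sketched in a few lines of Java. Everything here is hypothetical: two writers share a sequential ID counter but stamp events from their own clocks, and the base time is an arbitrary value. It illustrates the argument only and is not HMS code.

```java
import java.util.ArrayList;
import java.util.List;

class ClockSkewSketch {
    /** One notification log row: a sequential id plus the writer's local time in seconds. */
    static final class Event {
        final long id;
        final int eventTime;
        Event(long id, int eventTime) {
            this.id = id;
            this.eventTime = eventTime;
        }
    }

    /** True if event times never decrease as ids increase. */
    static boolean timesFollowIds(List<Event> eventsInIdOrder) {
        for (int i = 1; i < eventsInIdOrder.size(); i++) {
            if (eventsInIdOrder.get(i).eventTime < eventsInIdOrder.get(i - 1).eventTime) {
                return false;
            }
        }
        return true;
    }

    /** Instance A writes first; instance B writes one real second later with a slow clock. */
    static List<Event> simulate(int skewSeconds) {
        int trueTime = 1_607_025_600; // arbitrary epoch seconds
        long nextId = 1;
        List<Event> log = new ArrayList<>();
        log.add(new Event(nextId++, trueTime));                   // A: accurate clock
        log.add(new Event(nextId++, trueTime + 1 - skewSeconds)); // B: clock runs behind
        return log;
    }

    public static void main(String[] args) {
        System.out.println(timesFollowIds(simulate(0))); // prints true
        System.out.println(timesFollowIds(simulate(5))); // prints false
    }
}
```

With a 5-second skew, the event with the higher ID carries the earlier timestamp, even though the IDs were handed out strictly in order.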

[jira] [Work logged] (HIVE-24468) Use Event Time instead of Current Time in Notification Log DB Entry

[ https://issues.apache.org/jira/browse/HIVE-24468?focusedWorklogId=519847=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519847 ] ASF GitHub Bot logged work on HIVE-24468: - Author: ASF GitHub Bot Created on: 03/Dec/20 20:00 Start Date: 03/Dec/20 20:00 Worklog Time Spent: 10m Work Description: belugabehr commented on pull request #1728: URL: https://github.com/apache/hive/pull/1728#issuecomment-738274162 @pvary I agree with your understanding that the SELECT FOR UPDATE is a lock, and therefore the time is the same, but imagine if the HMS clock on two instances were off by 5s (or more). The HMS with the slower clock would generate events that were earlier in time, but with a higher ID. So there is not strong enforcement of the time being sequential. It's all based on the HMS clocks being in sync and trusting those clocks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519847) Time Spent: 1h 10m (was: 1h) > Use Event Time instead of Current Time in Notification Log DB Entry > --- > > Key: HIVE-24468 > URL: https://issues.apache.org/jira/browse/HIVE-24468 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Labels: pull-request-available > Time Spent: 1h 10m > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-21588) Remove HBase dependency from hive-metastore

[ https://issues.apache.org/jira/browse/HIVE-21588?focusedWorklogId=519825=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519825 ] ASF GitHub Bot logged work on HIVE-21588: - Author: ASF GitHub Bot Created on: 03/Dec/20 19:03 Start Date: 03/Dec/20 19:03 Worklog Time Spent: 10m Work Description: sunchao commented on a change in pull request #1723: URL: https://github.com/apache/hive/pull/1723#discussion_r535502356 ## File path: ql/src/test/org/apache/hadoop/hive/ql/txn/compactor/TestWorker.java ## @@ -17,7 +17,6 @@ */ package org.apache.hadoop.hive.ql.txn.compactor; -import it.unimi.dsi.fastutil.booleans.AbstractBooleanBidirectionalIterator; Review comment: is this related? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519825) Time Spent: 1h (was: 50m) > Remove HBase dependency from hive-metastore > --- > > Key: HIVE-21588 > URL: https://issues.apache.org/jira/browse/HIVE-21588 > Project: Hive > Issue Type: Task > Components: HBase Metastore >Affects Versions: 4.0.0 >Reporter: Yuming Wang >Assignee: Yuming Wang >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21588.01.patch, HIVE-21588.02.patch > > Time Spent: 1h > Remaining Estimate: 0h > > HIVE-17234 has removed HBase metastore from master. But maven dependency have > not been removed. We should remove it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24470) Separate HiveMetastore Thrift and Driver logic

[ https://issues.apache.org/jira/browse/HIVE-24470?focusedWorklogId=519813=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519813 ] ASF GitHub Bot logged work on HIVE-24470: - Author: ASF GitHub Bot Created on: 03/Dec/20 18:44 Start Date: 03/Dec/20 18:44 Worklog Time Spent: 10m Work Description: fenglu-g commented on pull request #1740: URL: https://github.com/apache/hive/pull/1740#issuecomment-738212050 @nrg4878 and others, PTAL, thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519813) Time Spent: 20m (was: 10m) > Separate HiveMetastore Thrift and Driver logic > -- > > Key: HIVE-24470 > URL: https://issues.apache.org/jira/browse/HIVE-24470 > Project: Hive > Issue Type: Improvement > Components: Standalone Metastore >Reporter: Cameron Moberg >Assignee: Cameron Moberg >Priority: Minor > Labels: pull-request-available > Time Spent: 20m > Remaining Estimate: 0h > > In the file HiveMetastore.java the majority of the code is a thrift interface > rather than the actual logic behind starting hive metastore, this should be > moved out into a separate file to clean up the file. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24468) Use Event Time instead of Current Time in Notification Log DB Entry

[ https://issues.apache.org/jira/browse/HIVE-24468?focusedWorklogId=519812&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519812 ] ASF GitHub Bot logged work on HIVE-24468: - Author: ASF GitHub Bot Created on: 03/Dec/20 18:42 Start Date: 03/Dec/20 18:42 Worklog Time Spent: 10m Work Description: pvary commented on pull request #1728: URL: https://github.com/apache/hive/pull/1728#issuecomment-738211024 > So, the answer is yes. The timestamps could be out of order. Before this patch the timestamps were in order because we locked the NEXT_EVENT_ID table with SELECT FOR UPDATE, so the timestamp was aligned with the EVENT_ID. (There might be some exceptions if a backend RDBMS reuses the value returned by the function now() within a single transaction, but I think we should overlook this for now.) After this PR the timestamps could become out of order, which is IMHO an API change even if the ordering requirement is not documented. So the users should be aware of this change, and we should seriously consider this before proceeding. Good to have you back and starting to clean up this stuff! Thanks, Peter This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519812) Time Spent: 1h (was: 50m) > Use Event Time instead of Current Time in Notification Log DB Entry > --- > > Key: HIVE-24468 > URL: https://issues.apache.org/jira/browse/HIVE-24468 > Project: Hive > Issue Type: Improvement >Reporter: David Mollitor >Assignee: David Mollitor >Priority: Major > Labels: pull-request-available > Time Spent: 1h > Remaining Estimate: 0h > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HIVE-24470) Separate HiveMetastore Thrift and Driver logic

[ https://issues.apache.org/jira/browse/HIVE-24470?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HIVE-24470: -- Labels: pull-request-available (was: ) > Separate HiveMetastore Thrift and Driver logic > -- > > Key: HIVE-24470 > URL: https://issues.apache.org/jira/browse/HIVE-24470 > Project: Hive > Issue Type: Improvement > Components: Standalone Metastore >Reporter: Cameron Moberg >Assignee: Cameron Moberg >Priority: Minor > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > In the file HiveMetastore.java the majority of the code is a thrift interface > rather than the actual logic behind starting hive metastore, this should be > moved out into a separate file to clean up the file. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24470) Separate HiveMetastore Thrift and Driver logic

[ https://issues.apache.org/jira/browse/HIVE-24470?focusedWorklogId=519811&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519811 ] ASF GitHub Bot logged work on HIVE-24470: - Author: ASF GitHub Bot Created on: 03/Dec/20 18:41 Start Date: 03/Dec/20 18:41 Worklog Time Spent: 10m Work Description: Noremac201 opened a new pull request #1740: URL: https://github.com/apache/hive/pull/1740 ### What changes were proposed in this pull request? 1. Refactor HiveMetastore.HMSHandler into its own class ### Why are the changes needed? This will pave the way for cleaner changes, since the driver class is no longer nested with the 10,000-line HMSHandler in one file, giving a clearer separation of duties. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing unit tests, building/running manually. No additional tests were added since this was a pure refactoring. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519811) Remaining Estimate: 0h Time Spent: 10m > Separate HiveMetastore Thrift and Driver logic > -- > > Key: HIVE-24470 > URL: https://issues.apache.org/jira/browse/HIVE-24470 > Project: Hive > Issue Type: Improvement > Components: Standalone Metastore >Reporter: Cameron Moberg >Assignee: Cameron Moberg >Priority: Minor > Time Spent: 10m > Remaining Estimate: 0h > > In the file HiveMetastore.java the majority of the code is a thrift interface > rather than the actual logic behind starting hive metastore, this should be > moved out into a separate file to clean up the file. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HIVE-24281) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24281?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra resolved HIVE-24281. Resolution: Fixed > Unable to reopen a closed bug report > > > Key: HIVE-24281 > URL: https://issues.apache.org/jira/browse/HIVE-24281 > Project: Hive > Issue Type: Bug > Components: API >Affects Versions: 1.2.0 >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Trivial > > Unable to reopen a closed bug report -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (HIVE-24394) Enable printing explain to console at query start

[ https://issues.apache.org/jira/browse/HIVE-24394?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Johan Gustavsson reassigned HIVE-24394: --- Assignee: Jesus Camacho Rodriguez > Enable printing explain to console at query start > - > > Key: HIVE-24394 > URL: https://issues.apache.org/jira/browse/HIVE-24394 > Project: Hive > Issue Type: Improvement > Components: Hive, Query Processor >Affects Versions: 2.3.7, 3.1.2 >Reporter: Johan Gustavsson >Assignee: Jesus Camacho Rodriguez >Priority: Minor > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > Currently there is a hive.log.explain.output option that prints extended > explain to log. While this is helpful for internal investigations, it limits > the information that is available to users. So we should add options to make > this print non-extended explain to console, for general user consumption, to > make it easier for users to debug queries and workflows without having to > resubmit queries with explain. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Reopened] (HIVE-24281) Unable to reopen a closed bug report

[ https://issues.apache.org/jira/browse/HIVE-24281?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ankur Tagra reopened HIVE-24281: > Unable to reopen a closed bug report > > > Key: HIVE-24281 > URL: https://issues.apache.org/jira/browse/HIVE-24281 > Project: Hive > Issue Type: Bug > Components: API >Affects Versions: 1.2.0 >Reporter: Ankur Tagra >Assignee: Ankur Tagra >Priority: Trivial > Fix For: 0.11.1 > > > Unable to reopen a closed bug report -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-17709) remove sun.misc.Cleaner references

[ https://issues.apache.org/jira/browse/HIVE-17709?focusedWorklogId=519785&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-519785 ] ASF GitHub Bot logged work on HIVE-17709: - Author: ASF GitHub Bot Created on: 03/Dec/20 17:29 Start Date: 03/Dec/20 17:29 Worklog Time Spent: 10m Work Description: abstractdog opened a new pull request #1739: URL: https://github.com/apache/hive/pull/1739 ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 519785) Remaining Estimate: 0h Time Spent: 10m > remove sun.misc.Cleaner references > -- > > Key: HIVE-17709 > URL: https://issues.apache.org/jira/browse/HIVE-17709 > Project: Hive > Issue Type: Sub-task > Components: Build Infrastructure >Reporter: Zoltan Haindrich >Assignee: László Bodor >Priority: Major > Time Spent: 10m > Remaining Estimate: 0h > > according to: > https://github.com/apache/hive/blob/188f7fb47aec3f98ef53965ba6ae84e23bd26f59/llap-server/src/java/org/apache/hadoop/hive/llap/cache/SimpleAllocator.java#L36 > HADOOP-12760 will be the long term fix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HIVE-17709) remove sun.misc.Cleaner references

[ https://issues.apache.org/jira/browse/HIVE-17709?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HIVE-17709: -- Labels: pull-request-available (was: ) > remove sun.misc.Cleaner references > -- > > Key: HIVE-17709 > URL: https://issues.apache.org/jira/browse/HIVE-17709 > Project: Hive > Issue Type: Sub-task > Components: Build Infrastructure >Reporter: Zoltan Haindrich >Assignee: László Bodor >Priority: Major > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > according to: > https://github.com/apache/hive/blob/188f7fb47aec3f98ef53965ba6ae84e23bd26f59/llap-server/src/java/org/apache/hadoop/hive/llap/cache/SimpleAllocator.java#L36 > HADOOP-12760 will be the long term fix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-24481) Skipped compaction can cause data corruption with streaming