[jira] [Work logged] (HIVE-25268) date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

[

https://issues.apache.org/jira/browse/HIVE-25268?focusedWorklogId=612959=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612959

]

ASF GitHub Bot logged work on HIVE-25268:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 05:29

Start Date: 22/Jun/21 05:29

Worklog Time Spent: 10m

Work Description: guptanikhil007 commented on a change in pull request #2409:

URL: https://github.com/apache/hive/pull/2409#discussion_r655886832

##

File path: ql/src/test/queries/clientpositive/udf_date_format.q

##

@@ -78,3 +78,16 @@ select date_format("2015-04-08 10:30:45","yyyy-MM-dd HH:mm:ss.SSS z");

--julian date

set hive.local.time.zone=UTC;

select date_format("1001-01-05","dd---MM--");

+

+--dates prior to 1900

+set hive.local.time.zone=Asia/Bangkok;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Europe/Berlin;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Africa/Johannesburg;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

Review comment:

Once this patch is merged, I will update the Hive wiki as well.
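The new test cases format the same pre-1900 wall-clock values under three session zones. The property they appear to check is that the output keeps the input's wall-clock fields, milliseconds included. A minimal sketch of that property using java.time alone; it is not Hive's UDF code, the class and method names are hypothetical, and the trailing `z` zone-name field is left out of the pattern.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class DateFormatRoundTripSketch {
    // Pattern from the new test cases, minus the trailing zone-name field "z".
    static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS", Locale.ROOT);

    /** Parse a wall-clock value, pin it to an instant in `zone`, then format it back in the same zone. */
    static String roundTrip(String input, String zone) {
        ZoneId zoneId = ZoneId.of(zone);
        LocalDateTime local = LocalDateTime.parse(input, FMT);
        long epochMilli = local.atZone(zoneId).toInstant().toEpochMilli();
        // Both directions use the same java.time zone rules and the proleptic
        // Gregorian calendar, so the wall-clock fields come back unchanged.
        return Instant.ofEpochMilli(epochMilli).atZone(zoneId).format(FMT);
    }

    public static void main(String[] args) {
        String[] zones = {"Asia/Bangkok", "Europe/Berlin", "Africa/Johannesburg"};
        String[] inputs = {"1400-01-14 01:01:10.123", "1800-01-14 01:01:10.123"};
        for (String zone : zones) {
            for (String input : inputs) {
                System.out.println(zone + ": " + roundTrip(input, zone));
            }
        }
    }
}
```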

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 612959)

Time Spent: 3h 50m (was: 3h 40m)

> date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

> --

>

> Key: HIVE-25268

> URL: https://issues.apache.org/jira/browse/HIVE-25268

> Project: Hive

> Issue Type: Bug

> Components: UDF

>Affects Versions: 3.1.0, 3.1.1, 3.1.2, 4.0.0

>Reporter: Nikhil Gupta

>Assignee: Nikhil Gupta

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 3h 50m

> Remaining Estimate: 0h

>

> *Hive 1.2.1*:

> {code:java}

> select date_format('1400-01-14 01:00:00', 'yyyy-MM-dd HH:mm:ss z');

> +--+--+

> | _c0|

> +--+--+

> | 1400-01-14 01:00:00 ICT |

> +--+--+

> select date_format('1800-01-14 01:00:00', 'yyyy-MM-dd HH:mm:ss z');

> +--+--+

> | _c0|

> +--+--+

> | 1800-01-14 01:00:00 ICT |

> +--+--+

> {code}

> *Hive 3.1, Hive 4.0:*

> {code:java}

> select date_format('1400-01-14 01:00:00', 'yyyy-MM-dd HH:mm:ss z');

> +--+

> | _c0|

> +--+

> | 1400-01-06 01:17:56 ICT |

> +--+

> select date_format('1800-01-14 01:00:00', 'yyyy-MM-dd HH:mm:ss z');

> +--+

> | _c0|

> +--+

> | 1800-01-14 01:17:56 ICT |

> +--+

> {code}

> VM timezone is set to 'Asia/Bangkok'
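The shifted results can be reproduced outside Hive with a small model. The extra 17:56 matches the gap between the tz database's Local Mean Time for Asia/Bangkok (+06:42:04, which java.time applies to these dates) and the modern +07:00 offset, and the additional 8-day shift for 1400 matches the Julian calendar that java.util.GregorianCalendar (behind SimpleDateFormat) uses before 1582. The sketch assumes, as a simplification, that the legacy formatter rendered these pre-1900 instants with a fixed +07:00 offset; the class and method names are hypothetical, the `z` field is omitted, and this is not Hive's code.

```java
import java.text.SimpleDateFormat;
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

public class Pre1900DateFormatModel {
    static final ZoneId SESSION_ZONE = ZoneId.of("Asia/Bangkok");
    static final String PATTERN = "yyyy-MM-dd HH:mm:ss";

    /** Wall-clock time -> epoch millis via java.time (proleptic Gregorian, LMT +06:42:04 for these years). */
    static long toEpochMilli(LocalDateTime local) {
        return local.atZone(SESSION_ZONE).toInstant().toEpochMilli();
    }

    /** Modelled legacy path: hybrid Julian/Gregorian calendar plus an assumed fixed +07:00 offset. */
    static String legacyFormat(LocalDateTime local) {
        SimpleDateFormat formatter = new SimpleDateFormat(PATTERN, Locale.ROOT);
        formatter.setTimeZone(TimeZone.getTimeZone("GMT+07:00"));
        return formatter.format(new Date(toEpochMilli(local)));
    }

    /** java.time on both sides: same calendar and zone rules, so the input wall time comes back. */
    static String javaTimeFormat(LocalDateTime local) {
        return Instant.ofEpochMilli(toEpochMilli(local))
                .atZone(SESSION_ZONE)
                .format(DateTimeFormatter.ofPattern(PATTERN, Locale.ROOT));
    }

    public static void main(String[] args) {
        for (int year : new int[] {1400, 1800}) {
            LocalDateTime input = LocalDateTime.of(year, 1, 14, 1, 0, 0);
            System.out.println("legacy model: " + legacyFormat(input));
            System.out.println("java.time:    " + javaTimeFormat(input));
        }
    }
}
```

Under these assumptions the legacy model prints 1400-01-06 01:17:56 and 1800-01-14 01:17:56, the same wall-clock values as the Hive 3.1 output above, while the java.time path returns the input unchanged.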

--

This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-25268) date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

[

https://issues.apache.org/jira/browse/HIVE-25268?focusedWorklogId=612958=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612958

]

ASF GitHub Bot logged work on HIVE-25268:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 05:28

Start Date: 22/Jun/21 05:28

Worklog Time Spent: 10m

Work Description: guptanikhil007 commented on a change in pull request #2409:

URL: https://github.com/apache/hive/pull/2409#discussion_r655886389

##

File path: ql/src/java/org/apache/hadoop/hive/ql/udf/generic/GenericUDFDateFormat.java

##

@@ -111,17 +123,18 @@ public Object evaluate(DeferredObject[] arguments) throws HiveException {

// the function should support both short date and full timestamp format

// time part of the timestamp should not be skipped

Timestamp ts = getTimestampValue(arguments, 0, tsConverters);

+

if (ts == null) {

Date d = getDateValue(arguments, 0, dtInputTypes, dtConverters);

if (d == null) {

return null;

}

ts = Timestamp.ofEpochMilli(d.toEpochMilli(id), id);

}

-

-

-date.setTime(ts.toEpochMilli(id));

-String res = formatter.format(date);

+Timestamp ts2 = TimestampTZUtil.convertTimestampToZone(ts, timeZone, ZoneId.of("UTC"));

Review comment:

done
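The replacement line converts the timestamp between the session zone and UTC before formatting. The sketch below shows, in plain java.time, what converting a zone-less wall-clock value from one zone to another means; the helper name is hypothetical and this is an illustration, not the implementation of Hive's `TimestampTZUtil.convertTimestampToZone`.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;

public class ZoneConversionSketch {
    /**
     * Interpret `wallTime` as a local date-time in `fromZone` and return the
     * wall-clock reading of the same instant in `toZone`.
     */
    static LocalDateTime convertToZone(LocalDateTime wallTime, ZoneId fromZone, ZoneId toZone) {
        return wallTime.atZone(fromZone)        // pin to an instant using fromZone's rules
                .withZoneSameInstant(toZone)    // same instant, viewed in toZone
                .toLocalDateTime();             // drop the zone again
    }

    public static void main(String[] args) {
        ZoneId bangkok = ZoneId.of("Asia/Bangkok");
        ZoneId utc = ZoneId.of("UTC");
        // Modern date: Bangkok is UTC+07:00.
        System.out.println(convertToZone(LocalDateTime.of(2021, 6, 22, 12, 0), bangkok, utc));
        // 1800: java.time applies Bangkok's Local Mean Time offset (+06:42:04).
        System.out.println(convertToZone(LocalDateTime.of(1800, 1, 14, 1, 0), bangkok, utc));
    }
}
```

Converting back with the two zones swapped returns the original value, because both directions consult the same zone rules.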


Issue Time Tracking

---

Worklog Id: (was: 612958)

Time Spent: 3h 40m (was: 3.5h)

> date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

> --

>

> Key: HIVE-25268

> URL: https://issues.apache.org/jira/browse/HIVE-25268

> Project: Hive

> Issue Type: Bug

> Components: UDF

>Affects Versions: 3.1.0, 3.1.1, 3.1.2, 4.0.0

>Reporter: Nikhil Gupta

>Assignee: Nikhil Gupta

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 3h 40m

> Remaining Estimate: 0h


[jira] [Work logged] (HIVE-25268) date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

[

https://issues.apache.org/jira/browse/HIVE-25268?focusedWorklogId=612955=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612955

]

ASF GitHub Bot logged work on HIVE-25268:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 04:42

Start Date: 22/Jun/21 04:42

Worklog Time Spent: 10m

Work Description: guptanikhil007 commented on a change in pull request #2409:

URL: https://github.com/apache/hive/pull/2409#discussion_r655870639

##

File path: ql/src/test/queries/clientpositive/udf_date_format.q

##

@@ -78,3 +78,16 @@ select date_format("2015-04-08 10:30:45","yyyy-MM-dd HH:mm:ss.SSS z");

--julian date

set hive.local.time.zone=UTC;

select date_format("1001-01-05","dd---MM--");

+

+--dates prior to 1900

+set hive.local.time.zone=Asia/Bangkok;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Europe/Berlin;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Africa/Johannesburg;

+select date_format('1400-01-14 01:01:10.123', 'yyyy-MM-dd HH:mm:ss.SSS z');

Review comment:

All the existing tests with the SimpleDateFormat formatter are passing, except for the milliseconds change, which I have mentioned in my comment.


Issue Time Tracking

---

Worklog Id: (was: 612955)

Time Spent: 3.5h (was: 3h 20m)

> date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

> --

>

> Key: HIVE-25268

> URL: https://issues.apache.org/jira/browse/HIVE-25268

> Project: Hive

> Issue Type: Bug

> Components: UDF

>Affects Versions: 3.1.0, 3.1.1, 3.1.2, 4.0.0

>Reporter: Nikhil Gupta

>Assignee: Nikhil Gupta

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 3.5h

> Remaining Estimate: 0h


[jira] [Resolved] (HIVE-25231) Add an ability to migrate CSV generated to hive table in replstats

[ https://issues.apache.org/jira/browse/HIVE-25231?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Ayush Saxena resolved HIVE-25231.

-

Hadoop Flags: Reviewed

Resolution: Fixed

> Add an ability to migrate CSV generated to hive table in replstats

> --

>

> Key: HIVE-25231

> URL: https://issues.apache.org/jira/browse/HIVE-25231

> Project: Hive

> Issue Type: Improvement

> Reporter: Ayush Saxena

> Assignee: Ayush Saxena

> Priority: Major

> Labels: pull-request-available

> Time Spent: 40m

> Remaining Estimate: 0h

>

> Add an option to replstats.sh to load the CSV generated using the replication policy into a hive table/view.

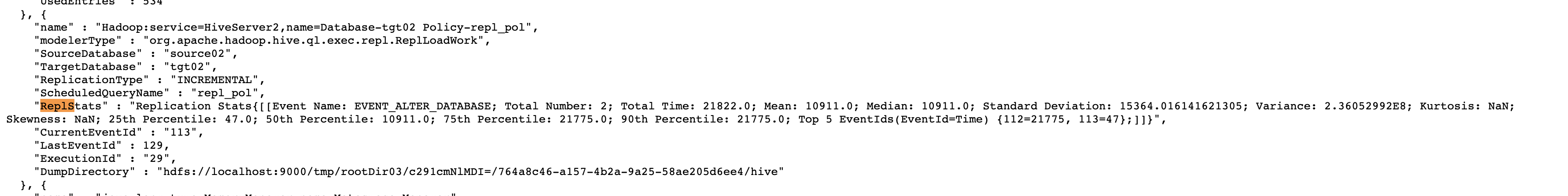

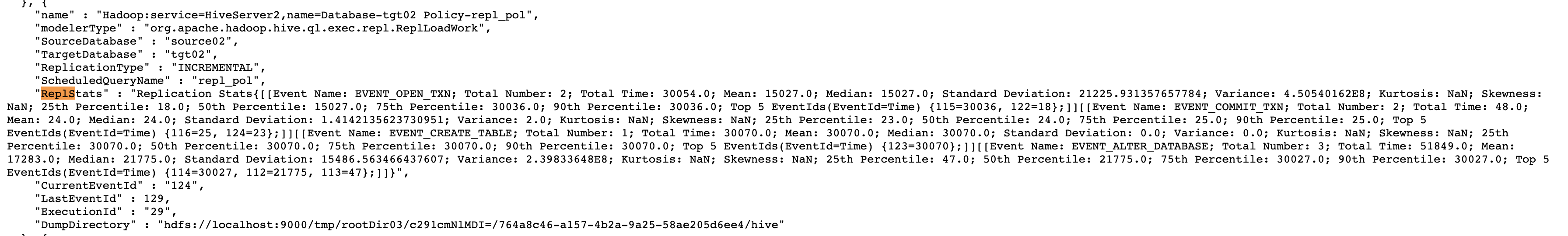

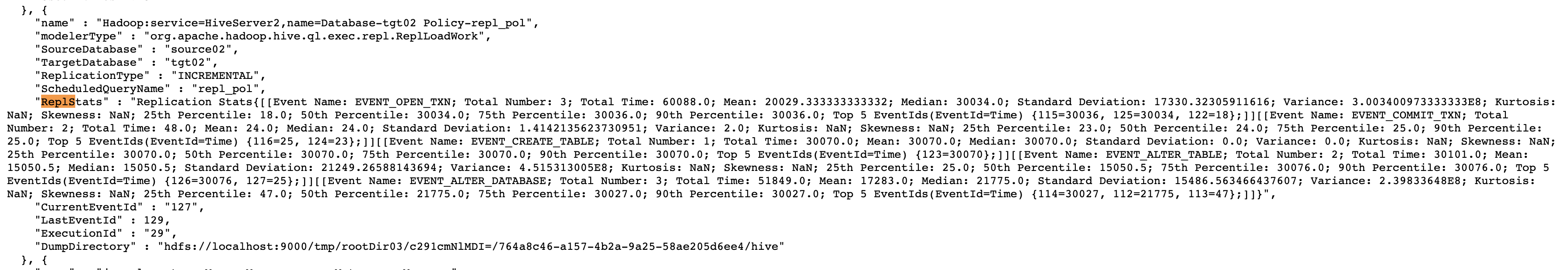

[jira] [Work logged] (HIVE-25207) Expose incremental load statistics via JMX

[ https://issues.apache.org/jira/browse/HIVE-25207?focusedWorklogId=612952=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612952 ]

ASF GitHub Bot logged work on HIVE-25207:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 04:01

Start Date: 22/Jun/21 04:01

Worklog Time Spent: 10m

Work Description: ayushtkn commented on pull request #2356:

URL: https://github.com/apache/hive/pull/2356#issuecomment-865509568

**First Snap:**

**Second Snap**

**Third Snap**

Issue Time Tracking

---

Worklog Id: (was: 612952)

Time Spent: 1h 20m (was: 1h 10m)

> Expose incremental load statistics via JMX

> --

>

> Key: HIVE-25207

> URL: https://issues.apache.org/jira/browse/HIVE-25207

> Project: Hive

> Issue Type: Improvement

> Reporter: Ayush Saxena

> Assignee: Ayush Saxena

> Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> Expose the incremental load details and statistics at a per-policy level in JMX.
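Exposing per-policy statistics through JMX typically means registering one MBean per policy with the platform MBean server. A minimal sketch using the standard `javax.management` API; the interface, attribute names, and `ObjectName` domain are hypothetical and are not the ones used in pull request #2356.

```java
import java.lang.management.ManagementFactory;
import java.util.concurrent.atomic.AtomicLong;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

public class IncrementalLoadJmxSketch {
    /** Management interface: each getter becomes a read-only JMX attribute (Policy, EventsLoaded). */
    public interface IncrementalLoadStatsMXBean {
        String getPolicy();
        long getEventsLoaded();
    }

    /** Per-policy counters, updated by a (hypothetical) incremental load loop. */
    public static class IncrementalLoadStats implements IncrementalLoadStatsMXBean {
        private final String policy;
        private final AtomicLong eventsLoaded = new AtomicLong();

        public IncrementalLoadStats(String policy) { this.policy = policy; }
        @Override public String getPolicy() { return policy; }
        @Override public long getEventsLoaded() { return eventsLoaded.get(); }
        public void recordEvent() { eventsLoaded.incrementAndGet(); }
    }

    /** Register the stats under a policy-specific ObjectName. */
    public static ObjectName register(IncrementalLoadStats stats) {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName name = new ObjectName(
                    "sketch.repl:type=IncrementalLoad,policy=" + ObjectName.quote(stats.getPolicy()));
            server.registerMBean(stats, name);
            return name;
        } catch (JMException e) {
            throw new IllegalStateException("Could not register MBean", e);
        }
    }

    /** Read an attribute back the way a JMX client such as JConsole would. */
    public static long readEventsLoaded(ObjectName name) {
        try {
            return (Long) ManagementFactory.getPlatformMBeanServer().getAttribute(name, "EventsLoaded");
        } catch (JMException e) {
            throw new IllegalStateException("Could not read attribute", e);
        }
    }

    public static void main(String[] args) {
        IncrementalLoadStats stats = new IncrementalLoadStats("demo_policy");
        ObjectName name = register(stats);
        stats.recordEvent();
        stats.recordEvent();
        System.out.println(name + " EventsLoaded=" + readEventsLoaded(name));
    }
}
```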

[jira] [Updated] (HIVE-24078) result rows not equal in mr and tez

[ https://issues.apache.org/jira/browse/HIVE-24078?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

dailong updated HIVE-24078:

---

Summary: result rows not equal in mr and tez (was: result rows not equal in mr and tez.)

> result rows not equal in mr and tez

> ---

>

> Key: HIVE-24078

> URL: https://issues.apache.org/jira/browse/HIVE-24078

> Project: Hive

> Issue Type: Bug

> Components: HiveServer2, Tez

> Affects Versions: 3.1.2

> Reporter: kuqiqi

> Priority: Blocker

>

> select
> rank_num,
> province_name,
> programset_id,
> programset_name,
> programset_type,
> cv,
> uv,
> pt,
> rank_num2,
> rank_num3,
> city_name,
> level,
> cp_code,
> cp_name,
> version_type,
> zz.city_code,
> zz.province_alias,
> '20200815' dt
> from
> (SELECT row_number() over(partition BY a1.province_alias,a1.city_code,a1.version_type ORDER BY cast(a1.cv AS bigint) DESC) AS rank_num,
> province_name(a1.province_alias) AS province_name,
> a1.program_set_id AS programset_id,
> a2.programset_name,
> a2.type_name AS programset_type,
> a1.cv,
> a1.uv,
> cast(a1.pt/360 as decimal(20,2)) pt,
> row_number() over (partition by a1.province_alias,a1.city_code,a1.version_type order by cast(a1.uv as bigint) desc ) as rank_num2,
> row_number() over (partition by a1.province_alias,a1.city_code,a1.version_type order by cast(a1.pt as bigint) desc ) as rank_num3,
> a1.city_code,
> a1.city_name,
> '3' as level,
> a2.cp_code,
> a2.cp_name,
> '20200815'as dt,
> a1.province_alias,
> a1.version_type
> FROM temp.dmp_device_vod_valid_day_v1_20200815_hn a1
> LEFT JOIN temp.dmp_device_vod_valid_day_v2_20200815_hn a2 ON a1.program_set_id=a2.programset_id
> WHERE a2.programset_name IS NOT NULL ) zz
> where rank_num<1000 or rank_num2<1000 or rank_num3<1000
> ;

>

> This SQL returns 76742 rows in MR but 76681 rows in Tez. How can this be fixed?

> I think the problem may lie in row_number.
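One possible mechanism, not confirmed in the issue: `row_number()` numbers rows that tie on the `ORDER BY` key in an arbitrary order, so two engines can number tied rows differently, and when several rankings are combined with `OR` the size of the surviving set can change, not just its membership. A minimal Java model of that effect, treating each ranking as a stable sort over a differently ordered input; the rows, values, and names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class RowNumberTieSketch {
    static final class Row {
        final String id;
        final long cv;
        final long uv;
        Row(String id, long cv, long uv) { this.id = id; this.cv = cv; this.uv = uv; }
    }

    /** IDs whose row_number() under `order` is <= limit; ties keep the input order (stable sort). */
    static Set<String> topIds(List<Row> input, Comparator<Row> order, int limit) {
        List<Row> sorted = new ArrayList<>(input);
        sorted.sort(order);
        Set<String> ids = new HashSet<>();
        for (int i = 0; i < Math.min(limit, sorted.size()); i++) {
            ids.add(sorted.get(i).id);
        }
        return ids;
    }

    /** Models "where rank_num <= limit or rank_num2 <= limit" over a single partition. */
    static int countSurvivors(List<Row> input, int limit) {
        Set<String> keep = topIds(input, Comparator.comparingLong((Row r) -> r.cv).reversed(), limit);
        keep.addAll(topIds(input, Comparator.comparingLong((Row r) -> r.uv).reversed(), limit));
        return keep.size();
    }

    public static void main(String[] args) {
        Row a = new Row("A", 10, 1);
        Row b = new Row("B", 10, 5); // ties with A on cv
        Row c = new Row("C", 5, 3);
        // Same rows in a different arrival order, as two engines might see them.
        System.out.println(countSurvivors(Arrays.asList(a, b, c), 1)); // 2
        System.out.println(countSurvivors(Arrays.asList(b, a, c), 1)); // 1
    }
}
```

If ties are indeed the cause, adding a unique column as a final tie-breaker to each window's `ORDER BY` would make the numbering reproducible across engines.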

[jira] [Updated] (HIVE-24078) result rows not equal in mr and tez.

[ https://issues.apache.org/jira/browse/HIVE-24078?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

dailong updated HIVE-24078:

---

Summary: result rows not equal in mr and tez. (was: result rows not equal in mr and tez)


[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612942=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612942

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 03:23

Start Date: 22/Jun/21 03:23

Worklog Time Spent: 10m

Work Description: hmangla98 commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655847667

##

File path: standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " + sourceTxnId + " and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " + JavaUtils.txnIdToString(sourceTxnId)

Review comment:

"abort txn txnId" would not have replPolicy set, so its execution would not reach this point.


Issue Time Tracking

---

Worklog Id: (was: 612942)

Time Spent: 2h 10m (was: 2h)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2h 10m

> Remaining Estimate: 0h

>


[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612939=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612939

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 03:06

Start Date: 22/Jun/21 03:06

Worklog Time Spent: 10m

Work Description: pkumarsinha commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655842392

##

File path: standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " + sourceTxnId + " and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " + JavaUtils.txnIdToString(sourceTxnId)

Review comment:

Didn't get this. Don't we do "abort txn txnId" here? The txn id there is of the target and not of the source, right?


Issue Time Tracking

---

Worklog Id: (was: 612939)

Time Spent: 2h (was: 1h 50m)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2h

> Remaining Estimate: 0h

>


[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612938=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612938

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 03:05

Start Date: 22/Jun/21 03:05

Worklog Time Spent: 10m

Work Description: pkumarsinha commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655842392

##

File path: standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " + sourceTxnId + " and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " + JavaUtils.txnIdToString(sourceTxnId)

Review comment:

Didn't get this. Don't we do "abort txn " here? The txn id there is of the target and not of the source, right?


Issue Time Tracking

---

Worklog Id: (was: 612938)

Time Spent: 1h 50m (was: 1h 40m)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 50m

> Remaining Estimate: 0h

>


[jira] [Work logged] (HIVE-25272) READ transactions are getting logged in NOTIFICATION LOG

[

https://issues.apache.org/jira/browse/HIVE-25272?focusedWorklogId=612937=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612937

]

ASF GitHub Bot logged work on HIVE-25272:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 03:03

Start Date: 22/Jun/21 03:03

Worklog Time Spent: 10m

Work Description: pkumarsinha commented on a change in pull request #2413:

URL: https://github.com/apache/hive/pull/2413#discussion_r655841503

##

File path: itests/hive-unit/src/test/java/org/apache/hadoop/hive/ql/parse/TestReplicationScenariosAcidTables.java

##

@@ -176,6 +177,28 @@ public void testReplOperationsNotCapturedInNotificationLog() throws Throwable {

assert lastEventId == currentEventId;

}

+ @Test

+ public void testREADOperationsNotCapturedInNotificationLog() throws Throwable {

Review comment:

Done


Issue Time Tracking

---

Worklog Id: (was: 612937)

Time Spent: 1h 20m (was: 1h 10m)

> READ transactions are getting logged in NOTIFICATION LOG

>

>

> Key: HIVE-25272

> URL: https://issues.apache.org/jira/browse/HIVE-25272

> Project: Hive

> Issue Type: Bug

>Reporter: Pravin Sinha

>Assignee: Pravin Sinha

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> Although READ transactions are already excluded from the NOTIFICATION log, a few are still being logged. Those transactions need to be skipped as well.
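The intended rule is that read-only transactions leave the notification log untouched, which the new test appears to check by comparing event IDs before and after. A minimal in-memory Java model of that rule; the enum, class, and method names are hypothetical and are not the metastore's `TxnHandler` API.

```java
import java.util.ArrayList;
import java.util.List;

public class NotificationLogSketch {
    /** Hypothetical transaction kinds for this model. */
    enum TxnKind { READ_ONLY, WRITE }

    private final List<String> events = new ArrayList<>();

    /** Event IDs are modelled as a counter that grows by one per logged event. */
    long lastEventId() {
        return events.size();
    }

    /** Log an open/commit/abort event unless the transaction is read-only. */
    void onTxnEvent(String eventType, long txnId, TxnKind kind) {
        if (kind == TxnKind.READ_ONLY) {
            return; // in this model a read-only txn changes no data, so there is nothing to replicate
        }
        events.add(eventType + " txnid:" + txnId);
    }

    public static void main(String[] args) {
        NotificationLogSketch log = new NotificationLogSketch();
        long before = log.lastEventId();
        log.onTxnEvent("OPEN_TXN", 1, TxnKind.READ_ONLY);
        log.onTxnEvent("COMMIT_TXN", 1, TxnKind.READ_ONLY);
        System.out.println(before == log.lastEventId()); // true
        log.onTxnEvent("OPEN_TXN", 2, TxnKind.WRITE);
        System.out.println(log.lastEventId()); // 1
    }
}
```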


[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612934=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612934

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 02:56

Start Date: 22/Jun/21 02:56

Worklog Time Spent: 10m

Work Description: hmangla98 commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655839436

##

File path: standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " + sourceTxnId + " and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " + JavaUtils.txnIdToString(sourceTxnId)

Review comment:

No, we have only the source txn id and the replPolicy. Using this info, we query the REPL_TXN_MAP table to get the corresponding target txn id and then commit that txn.
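The lookup described in this thread can be modelled as a map keyed by replication policy and source transaction ID. The sketch mirrors the diff by throwing instead of silently returning when no target transaction is found, and it returns the given ID unchanged when no replPolicy is set, in line with the earlier remark that "abort txn txnId" never takes the lookup branch. The map stands in for REPL_TXN_MAP; the class, method, and exception names are hypothetical, and the exception is a local stand-in rather than the metastore's `NoSuchTxnException`.

```java
import java.util.HashMap;
import java.util.Map;

public class ReplTxnLookupSketch {
    /** Local stand-in for a "no such transaction" error. */
    static final class MissingTxnException extends Exception {
        MissingTxnException(String message) { super(message); }
    }

    // Stand-in for REPL_TXN_MAP: "policy#sourceTxnId" -> target txn id.
    private final Map<String, Long> replTxnMap = new HashMap<>();

    private static String key(String replPolicy, long sourceTxnId) {
        return replPolicy + "#" + sourceTxnId;
    }

    /** Record the mapping created when a replicated open-txn event is applied. */
    void recordOpenTxn(String replPolicy, long sourceTxnId, long targetTxnId) {
        replTxnMap.put(key(replPolicy, sourceTxnId), targetTxnId);
    }

    /**
     * Resolve the transaction to commit. Without a replPolicy the id is already a
     * target-side id; with one, it is a source id that must be translated.
     */
    long resolveTxnToCommit(long txnId, String replPolicy) throws MissingTxnException {
        if (replPolicy == null) {
            return txnId;
        }
        Long target = replTxnMap.get(key(replPolicy, txnId));
        if (target == null) {
            // Surface the missing mapping to the caller instead of returning silently.
            throw new MissingTxnException("Source transaction " + txnId
                    + " has no target transaction for repl policy " + replPolicy);
        }
        return target;
    }

    public static void main(String[] args) throws MissingTxnException {
        ReplTxnLookupSketch handler = new ReplTxnLookupSketch();
        handler.recordOpenTxn("db1.*", 5, 105);
        System.out.println(handler.resolveTxnToCommit(5, "db1.*")); // 105
        System.out.println(handler.resolveTxnToCommit(42, null));   // 42
        try {
            handler.resolveTxnToCommit(6, "db1.*");
        } catch (MissingTxnException e) {
            System.out.println(e.getMessage());
        }
    }
}
```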


Issue Time Tracking

---

Worklog Id: (was: 612934)

Time Spent: 1h 40m (was: 1.5h)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 40m

> Remaining Estimate: 0h

>

[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612933=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612933

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 02:54

Start Date: 22/Jun/21 02:54

Worklog Time Spent: 10m

Work Description: pkumarsinha commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655838555

##

File path:

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " +

sourceTxnId +

" and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " +

JavaUtils.txnIdToString(sourceTxnId)

Review comment:

Aren't we aborting based on the target txn id, so we know which target txn id we are looking for?

Issue Time Tracking

---

Worklog Id: (was: 612933)

Time Spent: 1.5h (was: 1h 20m)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1.5h

> Remaining Estimate: 0h

>

[jira] [Work logged] (HIVE-25231) Add an ability to migrate CSV generated to hive table in replstats

[ https://issues.apache.org/jira/browse/HIVE-25231?focusedWorklogId=612931=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612931 ] ASF GitHub Bot logged work on HIVE-25231: - Author: ASF GitHub Bot Created on: 22/Jun/21 02:27 Start Date: 22/Jun/21 02:27 Worklog Time Spent: 10m Work Description: aasha merged pull request #2379: URL: https://github.com/apache/hive/pull/2379 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 612931) Time Spent: 40m (was: 0.5h) > Add an ability to migrate CSV generated to hive table in replstats > -- > > Key: HIVE-25231 > URL: https://issues.apache.org/jira/browse/HIVE-25231 > Project: Hive > Issue Type: Improvement >Reporter: Ayush Saxena >Assignee: Ayush Saxena >Priority: Major > Labels: pull-request-available > Time Spent: 40m > Remaining Estimate: 0h > > Add an option to replstats.sh to load the CSV generated using the replication > policy into a hive table/view. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-25243) Llap external client - Handle nested values when the parent struct is null

[

https://issues.apache.org/jira/browse/HIVE-25243?focusedWorklogId=612928=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612928

]

ASF GitHub Bot logged work on HIVE-25243:

-

Author: ASF GitHub Bot

Created on: 22/Jun/21 01:51

Start Date: 22/Jun/21 01:51

Worklog Time Spent: 10m

Work Description: maheshk114 commented on a change in pull request #2391:

URL: https://github.com/apache/hive/pull/2391#discussion_r655817539

##

File path: ql/src/java/org/apache/hadoop/hive/ql/io/arrow/Serializer.java

##

@@ -347,6 +347,21 @@ private void writeStruct(NonNullableStructVector arrowVector, StructColumnVector

final ColumnVector[] hiveFieldVectors = hiveVector == null ? null : hiveVector.fields;

final int fieldSize = fieldTypeInfos.size();

+// This is to handle following scenario -

+// if any struct value itself is NULL, we get structVector.isNull[i]=true

+// but we don't get the same for its child fields, which later causes exceptions while setting to arrow vectors

+// see - https://issues.apache.org/jira/browse/HIVE-25243

+if (hiveVector != null && hiveFieldVectors != null) {

+ for (int i = 0; i < size; i++) {

+if (hiveVector.isNull[i]) {

+ for (ColumnVector fieldVector : hiveFieldVectors) {

+fieldVector.isNull[i] = true;

Review comment:

To me, it looks like if one of the fields is null, then all fields are set to null.
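One way to read the loop in this hunk, sketched with plain `boolean[]` arrays standing in for Hive's `ColumnVector.isNull` (the class and method names below are hypothetical): the outer check is on the parent struct's null flag, so child fields are forced to null only for rows where the whole struct is null, and a null field never nulls its siblings. Hive's `ColumnVector` also carries a `noNulls` flag, which this sketch does not model.

```java
final class StructNullSketch {
    // For each row where the parent struct is null, mark every child field null
    // at that same row. Rows where the struct itself is non-null are left as-is,
    // so a null value in one field does not affect the other fields.
    static void propagateStructNulls(boolean[] structIsNull, boolean[][] fieldIsNull, int size) {
        if (structIsNull == null || fieldIsNull == null) {
            return; // nothing to propagate
        }
        for (int i = 0; i < size; i++) {
            if (structIsNull[i]) {
                for (boolean[] field : fieldIsNull) {
                    field[i] = true;
                }
            }
        }
    }
}
```

In the usage below, rows 0 to 2 mirror the table in the issue description (a NULL struct, a struct with all-null fields, a fully populated struct); row 3 is an extra, hypothetical row where only field `r` is null.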

Issue Time Tracking

---

Worklog Id: (was: 612928)

Time Spent: 1h (was: 50m)

> Llap external client - Handle nested values when the parent struct is null

> --

>

> Key: HIVE-25243

> URL: https://issues.apache.org/jira/browse/HIVE-25243

> Project: Hive

> Issue Type: Bug

> Components: Serializers/Deserializers

>Reporter: Shubham Chaurasia

>Assignee: Shubham Chaurasia

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> Consider the following table in text format -

> {code}

> +---+

> | c8 |

> +---+

> | NULL |

> | {"r":null,"s":null,"t":null} |

> | {"r":"a","s":9,"t":2.2} |

> +---+

> {code}

> When we query the above table via the LLAP external client, it throws the following exception:

> {code:java}

> Caused by: java.lang.NullPointerException: src
> at io.netty.util.internal.ObjectUtil.checkNotNull(ObjectUtil.java:33)
> at io.netty.buffer.UnsafeByteBufUtil.setBytes(UnsafeByteBufUtil.java:537)
> at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:199)
> at io.netty.buffer.WrappedByteBuf.setBytes(WrappedByteBuf.java:486)
> at io.netty.buffer.UnsafeDirectLittleEndian.setBytes(UnsafeDirectLittleEndian.java:34)
> at io.netty.buffer.ArrowBuf.setBytes(ArrowBuf.java:933)
> at org.apache.arrow.vector.BaseVariableWidthVector.setBytes(BaseVariableWidthVector.java:1191)
> at org.apache.arrow.vector.BaseVariableWidthVector.setSafe(BaseVariableWidthVector.java:1026)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.lambda$static$15(Serializer.java:834)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.writeGeneric(Serializer.java:777)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.writePrimitive(Serializer.java:581)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.write(Serializer.java:290)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.writeStruct(Serializer.java:359)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.write(Serializer.java:296)
> at org.apache.hadoop.hive.ql.io.arrow.Serializer.serializeBatch(Serializer.java:213)
> at org.apache.hadoop.hive.ql.exec.vector.filesink.VectorFileSinkArrowOperator.process(VectorFileSinkArrowOperator.java:135)

> {code}

> Created a test to repro it -

> {code:java}

> /**
> * TestMiniLlapVectorArrowWithLlapIODisabled - turns off LLAP IO while testing the LLAP external client flow.
> * LLAP IO is turned off because, when we create a table through this test, LLAP caches it and serves the
> * cached data on a read query; due to this, we would miss some code paths that may otherwise be hit.
> */
> public class TestMiniLlapVectorArrowWithLlapIODisabled extends BaseJdbcWithMiniLlap

[jira] [Commented] (HIVE-23556) Support hive.metastore.limit.partition.request for get_partitions_ps

[ https://issues.apache.org/jira/browse/HIVE-23556?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17366918#comment-17366918 ] Toshihiko Uchida commented on HIVE-23556: - [~kgyrtkirk] Thanks for taking a look at the issue! Got it. > Support hive.metastore.limit.partition.request for get_partitions_ps > > > Key: HIVE-23556 > URL: https://issues.apache.org/jira/browse/HIVE-23556 > Project: Hive > Issue Type: Improvement >Reporter: Toshihiko Uchida >Assignee: Toshihiko Uchida >Priority: Minor > Attachments: HIVE-23556.2.patch, HIVE-23556.3.patch, > HIVE-23556.4.patch, HIVE-23556.patch > > > HIVE-13884 added the configuration hive.metastore.limit.partition.request to > limit the number of partitions that can be requested. > Currently, it takes effect for the following MetaStore APIs: > * get_partitions, > * get_partitions_with_auth, > * get_partitions_by_filter, > * get_partitions_spec_by_filter, > * get_partitions_by_expr, > but not for > * get_partitions_ps, > * get_partitions_ps_with_auth. > This issue proposes to apply the configuration also to get_partitions_ps and > get_partitions_ps_with_auth. -- This message was sent by Atlassian Jira (v8.3.4#803005)
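The limit HIVE-13884 introduced amounts to comparing the number of partitions a call would return against the configured value before returning them. A minimal sketch, assuming a negative value means "no limit" (our understanding of the default, -1) and using hypothetical class, method, and message names; the metastore's real check, its exception type, and its exact wording may differ.

```java
final class PartitionLimitSketch {
    // Hypothetical guard run before a partition-listing call (e.g. get_partitions_ps)
    // returns its results. A negative limit is treated as "no limit".
    static void checkLimit(String tableName, int requestedPartitions, int limit) {
        if (limit >= 0 && requestedPartitions > limit) {
            throw new IllegalStateException("Number of partitions requested (=" + requestedPartitions
                + ") on table '" + tableName + "' exceeds limit (=" + limit + ")");
        }
    }
}
```

Applying the same guard to get_partitions_ps and get_partitions_ps_with_auth is what the issue proposes; where the real check sits inside the metastore is not shown here.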

[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612908=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612908

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 23:10

Start Date: 21/Jun/21 23:10

Worklog Time Spent: 10m

Work Description: hmangla98 commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655760894

##

File path: ql/src/test/org/apache/hadoop/hive/ql/lockmgr/TestDbTxnManager.java

##

@@ -516,10 +516,17 @@ public void testHeartbeaterReplicationTxn() throws

Exception {

} catch (LockException e) {

exception = e;

}

-Assert.assertNotNull("Txn should have been aborted", exception);

-Assert.assertEquals(ErrorMsg.TXN_ABORTED,

exception.getCanonicalErrorMsg());

+Assert.assertNotNull("Source transaction with txnId: 1, missing from REPL_TXN_MAP", exception);

Review comment:

If this entry is missing, the exception would be thrown and caught at line 517, so e would not be null.

Issue Time Tracking

---

Worklog Id: (was: 612908)

Time Spent: 1h 20m (was: 1h 10m)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612905=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612905

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 23:05

Start Date: 21/Jun/21 23:05

Worklog Time Spent: 10m

Work Description: hmangla98 commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655759104

##

File path:

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " +

sourceTxnId +

" and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " +

JavaUtils.txnIdToString(sourceTxnId)

Review comment:

The target txn id is not present; that's the reason this exception is being thrown.

Issue Time Tracking

---

Worklog Id: (was: 612905)

Time Spent: 1h 10m (was: 1h)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

[jira] [Resolved] (HIVE-25229) Hive lineage is not generated for columns on CREATE MATERIALIZED VIEW

[ https://issues.apache.org/jira/browse/HIVE-25229?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jesus Camacho Rodriguez resolved HIVE-25229. Fix Version/s: 4.0.0 Resolution: Fixed Pushed to master, thanks [~soumyakanti.das]! > Hive lineage is not generated for columns on CREATE MATERIALIZED VIEW > - > > Key: HIVE-25229 > URL: https://issues.apache.org/jira/browse/HIVE-25229 > Project: Hive > Issue Type: Bug >Reporter: Soumyakanti Das >Assignee: Soumyakanti Das >Priority: Major > Labels: pull-request-available > Fix For: 4.0.0 > > Time Spent: 20m > Remaining Estimate: 0h > > While creating a materialized view, the HookContext is supposed to send lineage info, > which is missing. > CREATE MATERIALIZED VIEW tbl1_view as select * from tbl1; > The HookContext passed from hive.ql.Driver to the Hive hook of Atlas through the > hookRunner.runPostExecHooks call doesn't have lineage info. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-25229) Hive lineage is not generated for columns on CREATE MATERIALIZED VIEW

[ https://issues.apache.org/jira/browse/HIVE-25229?focusedWorklogId=612867=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612867 ] ASF GitHub Bot logged work on HIVE-25229: - Author: ASF GitHub Bot Created on: 21/Jun/21 20:45 Start Date: 21/Jun/21 20:45 Worklog Time Spent: 10m Work Description: jcamachor merged pull request #2377: URL: https://github.com/apache/hive/pull/2377 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 612867) Time Spent: 20m (was: 10m) > Hive lineage is not generated for columns on CREATE MATERIALIZED VIEW > - > > Key: HIVE-25229 > URL: https://issues.apache.org/jira/browse/HIVE-25229 > Project: Hive > Issue Type: Bug >Reporter: Soumyakanti Das >Assignee: Soumyakanti Das >Priority: Major > Labels: pull-request-available > Time Spent: 20m > Remaining Estimate: 0h > > While creating a materialized view, the HookContext is supposed to send lineage info, > which is missing. > CREATE MATERIALIZED VIEW tbl1_view as select * from tbl1; > The HookContext passed from hive.ql.Driver to the Hive hook of Atlas through the > hookRunner.runPostExecHooks call doesn't have lineage info. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HIVE-24425) Create table in REMOTE db should fail

[ https://issues.apache.org/jira/browse/HIVE-24425?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Naveen Gangam resolved HIVE-24425. -- Fix Version/s: 4.0.0 Resolution: Fixed This fix has been merged to master. Closing the jira. Thank you for the contribution, [~dantongdong], and welcome to the Hive community. > Create table in REMOTE db should fail > - > > Key: HIVE-24425 > URL: https://issues.apache.org/jira/browse/HIVE-24425 > Project: Hive > Issue Type: Sub-task >Reporter: Naveen Gangam >Assignee: Dantong Dong >Priority: Major > Labels: pull-request-available > Fix For: 4.0.0 > > Time Spent: 40m > Remaining Estimate: 0h > > Currently, it creates the table in that DB, but SHOW TABLES does not show > anything. Preventing the creation of the table will resolve this inconsistency > too. -- This message was sent by Atlassian Jira (v8.3.4#803005)
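The fix described for HIVE-24425 amounts to rejecting the request before any table metadata is written. A minimal sketch, assuming a two-valued database type; the enum, class, and method names are hypothetical stand-ins, not the metastore API, and `UnsupportedOperationException` is used purely for illustration.

```java
final class RemoteDbGuardSketch {
    // Hypothetical stand-in for the database type the metastore records.
    enum DbType { NATIVE, REMOTE }

    // Fails fast for REMOTE databases, so no table is registered that
    // SHOW TABLES would then fail to list.
    static void checkCanCreateTable(String dbName, DbType type) {
        if (type == DbType.REMOTE) {
            throw new UnsupportedOperationException(
                "Cannot create a table in REMOTE database " + dbName);
        }
    }
}
```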

[jira] [Work logged] (HIVE-24425) Create table in REMOTE db should fail

[ https://issues.apache.org/jira/browse/HIVE-24425?focusedWorklogId=612828=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612828 ] ASF GitHub Bot logged work on HIVE-24425: - Author: ASF GitHub Bot Created on: 21/Jun/21 19:00 Start Date: 21/Jun/21 19:00 Worklog Time Spent: 10m Work Description: nrg4878 commented on pull request #2393: URL: https://github.com/apache/hive/pull/2393#issuecomment-865270113 Fix has been committed to master. Please close this PR. Thank you for your work on this. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 612828) Time Spent: 40m (was: 0.5h) > Create table in REMOTE db should fail > - > > Key: HIVE-24425 > URL: https://issues.apache.org/jira/browse/HIVE-24425 > Project: Hive > Issue Type: Sub-task >Reporter: Naveen Gangam >Assignee: Dantong Dong >Priority: Major > Labels: pull-request-available > Time Spent: 40m > Remaining Estimate: 0h > > Currently, it creates the table in that DB, but SHOW TABLES does not show > anything. Preventing the creation of the table will resolve this inconsistency > too. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-25246) Fix the clean up of open repl created transactions

[

https://issues.apache.org/jira/browse/HIVE-25246?focusedWorklogId=612817=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612817

]

ASF GitHub Bot logged work on HIVE-25246:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 18:26

Start Date: 21/Jun/21 18:26

Worklog Time Spent: 10m

Work Description: pkumarsinha commented on a change in pull request #2396:

URL: https://github.com/apache/hive/pull/2396#discussion_r655604672

##

File path: ql/src/test/org/apache/hadoop/hive/metastore/txn/TestTxnHandler.java

##

@@ -1690,6 +1701,19 @@ private void checkReplTxnForTest(Long startTxnId, Long

endTxnId, String replPoli

}

}

+ private boolean targetTxnsPresentInReplTxnMap(Long startTxnId, Long endTxnId, List targetTxnId) throws Exception {

+String[] output = TestTxnDbUtil.queryToString(conf, "SELECT \"RTM_TARGET_TXN_ID\" FROM \"REPL_TXN_MAP\" WHERE " +

+" \"RTM_SRC_TXN_ID\" >= " + startTxnId + "AND \"RTM_SRC_TXN_ID\" <= " + endTxnId).split("\n");

+List replayedTxns = new ArrayList<>();

+for (int idx = 1; idx < output.length; idx++) {

+ Long txnId = Long.parseLong(output[idx].trim());

+ if (targetTxnId.contains(txnId)) {

Review comment:

Do you really need this check?
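For reference, the helper's intent (collect the target ids returned for the source-txn range and check that the expected ones are present) can be expressed without a per-row `contains` check. A minimal sketch, assuming, as the diff's loop starting at index 1 implies, that the first line of the query output is a column header and each following line holds one id; `ReplTxnMapCheckSketch`, `buildQuery`, and `allTargetsPresent` are hypothetical names. The sketch also puts a space before `AND`: in the diff, `startTxnId + "AND ..."` appears to produce SQL such as `>= 5AND`.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class ReplTxnMapCheckSketch {
    // Builds the range query with explicit spaces around AND.
    static String buildQuery(long startTxnId, long endTxnId) {
        return "SELECT \"RTM_TARGET_TXN_ID\" FROM \"REPL_TXN_MAP\" WHERE "
            + "\"RTM_SRC_TXN_ID\" >= " + startTxnId
            + " AND \"RTM_SRC_TXN_ID\" <= " + endTxnId;
    }

    // Skips the header line, parses each remaining non-empty line as a txn id,
    // and checks that every expected target id was returned.
    static boolean allTargetsPresent(String queryOutput, List<Long> expectedTargets) {
        String[] lines = queryOutput.split("\n");
        Set<Long> found = new HashSet<>();
        for (int i = 1; i < lines.length; i++) {
            String line = lines[i].trim();
            if (!line.isEmpty()) {
                found.add(Long.parseLong(line));
            }
        }
        return found.containsAll(expectedTargets);
    }
}
```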

##

File path:

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " +

sourceTxnId +

Review comment:

This isn't an info-level log. We can actually remove it, as the exception is being thrown and would appear anyway.

##

File path: ql/src/test/org/apache/hadoop/hive/ql/lockmgr/TestDbTxnManager.java

##

@@ -516,10 +516,17 @@ public void testHeartbeaterReplicationTxn() throws

Exception {

} catch (LockException e) {

exception = e;

}

-Assert.assertNotNull("Txn should have been aborted", exception);

-Assert.assertEquals(ErrorMsg.TXN_ABORTED,

exception.getCanonicalErrorMsg());

+Assert.assertNotNull("Source transaction with txnId: 1, missing from REPL_TXN_MAP", exception);

Review comment:

This is the message you get if the assertion fails. If this entry is missing, the exception object would be null. Is that expected?

##

File path:

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/txn/TxnHandler.java

##

@@ -1339,7 +1340,8 @@ public void commitTxn(CommitTxnRequest rqst)

// corresponding open txn event.

LOG.info("Target txn id is missing for source txn id : " +

sourceTxnId +

" and repl policy " + rqst.getReplPolicy());

-return;

+throw new NoSuchTxnException("Source transaction: " +

JavaUtils.txnIdToString(sourceTxnId)

Review comment:

Add target txn id as well.

Issue Time Tracking

---

Worklog Id: (was: 612817)

Time Spent: 1h (was: 50m)

> Fix the clean up of open repl created transactions

> --

>

> Key: HIVE-25246

> URL: https://issues.apache.org/jira/browse/HIVE-25246

> Project: Hive

> Issue Type: Improvement

>Reporter: Haymant Mangla

>Assignee: Haymant Mangla

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

[jira] [Work logged] (HIVE-25268) date_format udf doesn't work for dates prior to 1900 if the timezone is different from UTC

[

https://issues.apache.org/jira/browse/HIVE-25268?focusedWorklogId=612797=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612797

]

ASF GitHub Bot logged work on HIVE-25268:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 17:14

Start Date: 21/Jun/21 17:14

Worklog Time Spent: 10m

Work Description: sankarh commented on a change in pull request #2409:

URL: https://github.com/apache/hive/pull/2409#discussion_r655565647

##

File path: ql/src/test/queries/clientpositive/udf_date_format.q

##

@@ -78,3 +78,16 @@ select date_format("2015-04-08 10:30:45","-MM-dd

HH:mm:ss.SSS z");

--julian date

set hive.local.time.zone=UTC;

select date_format("1001-01-05","dd---MM--");

+

+--dates prior to 1900

+set hive.local.time.zone=Asia/Bangkok;

+select date_format('1400-01-14 01:01:10.123', '-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', '-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Europe/Berlin;

+select date_format('1400-01-14 01:01:10.123', '-MM-dd HH:mm:ss.SSS z');

+select date_format('1800-01-14 01:01:10.123', '-MM-dd HH:mm:ss.SSS z');

+

+set hive.local.time.zone=Africa/Johannesburg;

+select date_format('1400-01-14 01:01:10.123', '-MM-dd HH:mm:ss.SSS z');

Review comment:

Do we have tests for other formats (to ensure DateTimeFormat doesn't break anything)? The wiki doc also needs an update.

##

File path:

ql/src/java/org/apache/hadoop/hive/ql/udf/generic/GenericUDFDateFormat.java

##

@@ -111,17 +123,18 @@ public Object evaluate(DeferredObject[] arguments) throws

HiveException {

// the function should support both short date and full timestamp format

// time part of the timestamp should not be skipped

Timestamp ts = getTimestampValue(arguments, 0, tsConverters);

+

if (ts == null) {

Date d = getDateValue(arguments, 0, dtInputTypes, dtConverters);

if (d == null) {

return null;

}

ts = Timestamp.ofEpochMilli(d.toEpochMilli(id), id);

}

-

-

-date.setTime(ts.toEpochMilli(id));

-String res = formatter.format(date);

+Timestamp ts2 = TimestampTZUtil.convertTimestampToZone(ts, timeZone,

ZoneId.of("UTC"));

Review comment:

Add comments on why this conversion is needed.

Issue Time Tracking

---

Worklog Id: (was: 612797)

Time Spent: 3h 20m (was: 3h 10m)

> date_format udf doesn't work for dates prior to 1900 if the timezone is

> different from UTC

> --

>

> Key: HIVE-25268

> URL: https://issues.apache.org/jira/browse/HIVE-25268

> Project: Hive

> Issue Type: Bug

> Components: UDF

>Affects Versions: 3.1.0, 3.1.1, 3.1.2, 4.0.0

>Reporter: Nikhil Gupta

>Assignee: Nikhil Gupta

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 3h 20m

> Remaining Estimate: 0h

>

> *Hive 1.2.1*:

> {code:java}

> select date_format('1400-01-14 01:00:00', '-MM-dd HH:mm:ss z');

> +--+--+

> | _c0|

> +--+--+

> | 1400-01-14 01:00:00 ICT |

> +--+--+

> select date_format('1800-01-14 01:00:00', '-MM-dd HH:mm:ss z');

> +--+--+

> | _c0|

> +--+--+

> | 1800-01-14 01:00:00 ICT |

> +--+--+

> {code}

> *Hive 3.1, Hive 4.0:*

> {code:java}

> select date_format('1400-01-14 01:00:00', '-MM-dd HH:mm:ss z');

> +--+

> | _c0|

> +--+

> | 1400-01-06 01:17:56 ICT |

> +--+

> select date_format('1800-01-14 01:00:00', '-MM-dd HH:mm:ss z');

> +--+

> | _c0|

> +--+

> | 1800-01-14 01:17:56 ICT |

> +--+

> {code}

> VM timezone is set to 'Asia/Bangkok'
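The shifted Hive 3.1/4.0 output appears to combine two separate effects, each of which can be checked with the JDK alone. This is a minimal sketch under stated assumptions, not the patch: it assumes the value passes through epoch milliseconds into `java.util`'s hybrid Julian/Gregorian calendar, and that the formatter uses +07:00 (ICT) where `java.time` uses the tzdb local mean time for Asia/Bangkok (+06:42:04). Under those assumptions, 1400-01-14 (proleptic Gregorian) is 1400-01-06 in the Julian calendar, an 8-day shift, and 07:00:00 minus 06:42:04 gives the 17m56s offset seen in both outputs; 1800 is after the Gregorian cutover, so only the 17m56s remains. `PreGregorianSketch` and its methods are hypothetical names.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Calendar;
import java.util.GregorianCalendar;
import java.util.TimeZone;

final class PreGregorianSketch {

    // Effect 1: take the instant java.time assigns to a proleptic-Gregorian date
    // (midnight UTC) and read it back through java.util's hybrid calendar, which
    // switches to the Julian calendar before October 1582.
    static int hybridDayOfMonth(LocalDate prolepticDate) {
        long millis = prolepticDate.atStartOfDay(ZoneOffset.UTC).toInstant().toEpochMilli();
        GregorianCalendar cal = new GregorianCalendar(TimeZone.getTimeZone("UTC"));
        cal.setTimeInMillis(millis);
        return cal.get(Calendar.DAY_OF_MONTH);
    }

    // Effect 2: seconds between a fixed offset (e.g. +07:00) and the offset the
    // tzdb rules give the zone at that wall-clock time.
    static int offsetGapSeconds(LocalDateTime wallClock, ZoneId zone, ZoneOffset fixed) {
        ZoneOffset historical = zone.getRules().getOffset(wallClock);
        return fixed.getTotalSeconds() - historical.getTotalSeconds();
    }

    // Formatting the wall-clock value with java.time only, with no epoch round trip,
    // keeps the date and time as written.
    static String formatWallClock(LocalDateTime wallClock, ZoneId zone, String pattern) {
        return ZonedDateTime.of(wallClock, zone).format(DateTimeFormatter.ofPattern(pattern));
    }
}
```

Whether the committed patch formats exactly this way is not confirmed here; the review thread only shows a conversion to UTC via `TimestampTZUtil.convertTimestampToZone` before formatting.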

[jira] [Resolved] (HIVE-25235) Remove ThreadPoolExecutorWithOomHook

[

https://issues.apache.org/jira/browse/HIVE-25235?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

David Mollitor resolved HIVE-25235.

---

Fix Version/s: 4.0.0

Resolution: Fixed

Pushed to master. Thanks [~mgergely] and [~dengzh] for the reviews!

> Remove ThreadPoolExecutorWithOomHook

>

>

> Key: HIVE-25235

> URL: https://issues.apache.org/jira/browse/HIVE-25235

> Project: Hive

> Issue Type: Improvement

> Components: HiveServer2

>Reporter: David Mollitor

>Assignee: David Mollitor

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

> While looking at [HIVE-24846] to improve OOM logging, I realized that this is not a good way to handle OOM.

> https://stackoverflow.com/questions/1692230/is-it-possible-to-catch-out-of-memory-exception-in-java

> bq. there's likely no easy way for you to recover from it if you do catch it

> If we want to handle OOM, it's best to do it from outside, with the JVM facilities:

> {{-XX:+ExitOnOutOfMemoryError}}

> {{-XX:OnOutOfMemoryError}}

> It seems odd that the OOM handler attempts to load a handler and then do more work when the server is clearly hosed at this point; requesting more work only adds to the memory pressure.

> The current OOM logic in {{HiveServer2OomHookRunner}} causes HiveServer2 to shut down, but we already have that with the JVM shutdown hook. This JVM shutdown hook is triggered if {{-XX:OnOutOfMemoryError="kill -9 %p"}} is set, which is the appropriate thing to do.

> https://github.com/apache/hive/blob/328d197431b2ff1000fd9c56ce758013eff81ad8/service/src/java/org/apache/hive/service/server/HiveServer2.java#L443-L444

> https://github.com/apache/hive/blob/cb0541a31b87016fae8e4c0e7130532c6e5f8de7/service/src/java/org/apache/hive/service/server/HiveServer2OomHookRunner.java#L42-L44
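The JVM-level approach recommended in this description can be verified at startup by inspecting the process's own arguments. A minimal sketch with a hypothetical class and method name; it checks only the two flags quoted above. In a running JVM, the argument list would come from `ManagementFactory.getRuntimeMXBean().getInputArguments()`.

```java
import java.util.List;

final class OomFlagCheckSketch {
    // True if the JVM was started with one of the flags the description recommends,
    // i.e. the JVM itself, not application code, reacts to OutOfMemoryError.
    static boolean hasJvmOomHandling(List<String> jvmArgs) {
        for (String arg : jvmArgs) {
            if (arg.equals("-XX:+ExitOnOutOfMemoryError")
                    || arg.startsWith("-XX:OnOutOfMemoryError=")) {
                return true;
            }
        }
        return false;
    }
}
```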

[jira] [Work logged] (HIVE-25235) Remove ThreadPoolExecutorWithOomHook

[

https://issues.apache.org/jira/browse/HIVE-25235?focusedWorklogId=612791=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612791

]

ASF GitHub Bot logged work on HIVE-25235:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 17:07

Start Date: 21/Jun/21 17:07

Worklog Time Spent: 10m

Work Description: belugabehr merged pull request #2383:

URL: https://github.com/apache/hive/pull/2383

Issue Time Tracking

---

Worklog Id: (was: 612791)

Time Spent: 1h (was: 50m)

> Remove ThreadPoolExecutorWithOomHook

>

>

> Key: HIVE-25235

> URL: https://issues.apache.org/jira/browse/HIVE-25235

> Project: Hive

> Issue Type: Improvement

> Components: HiveServer2

>Reporter: David Mollitor

>Assignee: David Mollitor

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> While looking at [HIVE-24846] to improve OOM logging, I realized that this is not a good way to handle OOM.

> https://stackoverflow.com/questions/1692230/is-it-possible-to-catch-out-of-memory-exception-in-java

> bq. there's likely no easy way for you to recover from it if you do catch it

> If we want to handle OOM, it's best to do it from outside, with the JVM facilities:

> {{-XX:+ExitOnOutOfMemoryError}}

> {{-XX:OnOutOfMemoryError}}

> It seems odd that the OOM handler attempts to load a handler and then do more work when the server is clearly hosed at this point; requesting more work only adds to the memory pressure.

> The current OOM logic in {{HiveServer2OomHookRunner}} causes HiveServer2 to shut down, but we already have that with the JVM shutdown hook. This JVM shutdown hook is triggered if {{-XX:OnOutOfMemoryError="kill -9 %p"}} is set, which is the appropriate thing to do.

> https://github.com/apache/hive/blob/328d197431b2ff1000fd9c56ce758013eff81ad8/service/src/java/org/apache/hive/service/server/HiveServer2.java#L443-L444

> https://github.com/apache/hive/blob/cb0541a31b87016fae8e4c0e7130532c6e5f8de7/service/src/java/org/apache/hive/service/server/HiveServer2OomHookRunner.java#L42-L44

[jira] [Resolved] (HIVE-24951) Table created with Uppercase name using CTAS does not produce result for select queries

[

https://issues.apache.org/jira/browse/HIVE-24951?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Naveen Gangam resolved HIVE-24951.

--

Fix Version/s: 4.0.0

Resolution: Fixed

Fix has been committed to master. Closing the jira. Thank you for the fix

[~Rajkumar Singh]

> Table created with Uppercase name using CTAS does not produce result for

> select queries

> ---

>

> Key: HIVE-24951

> URL: https://issues.apache.org/jira/browse/HIVE-24951

> Project: Hive

> Issue Type: Bug

> Components: Metastore

>Affects Versions: 4.0.0

>Reporter: Rajkumar Singh

>Assignee: Rajkumar Singh

>Priority: Major

> Labels: pull-request-available

> Fix For: 4.0.0

>

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> Steps to repro:

> {code:java}

> CREATE EXTERNAL TABLE MY_TEST AS SELECT * FROM source

> Table created with Location but does not have any data moved to it.

> /warehouse/tablespace/external/hive/MY_TEST

> {code}

[jira] [Work logged] (HIVE-24951) Table created with Uppercase name using CTAS does not produce result for select queries

[

https://issues.apache.org/jira/browse/HIVE-24951?focusedWorklogId=612775=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612775

]

ASF GitHub Bot logged work on HIVE-24951:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 16:46

Start Date: 21/Jun/21 16:46

Worklog Time Spent: 10m

Work Description: nrg4878 commented on pull request #2125:

URL: https://github.com/apache/hive/pull/2125#issuecomment-865187692

Fix has been merged to master.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 612775)

Time Spent: 0.5h (was: 20m)

> Table created with Uppercase name using CTAS does not produce result for

> select queries

> ---

>

> Key: HIVE-24951

> URL: https://issues.apache.org/jira/browse/HIVE-24951

> Project: Hive

> Issue Type: Bug

> Components: Metastore

>Affects Versions: 4.0.0

>Reporter: Rajkumar Singh

>Assignee: Rajkumar Singh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> Steps to repro:

> {code:java}

> CREATE EXTERNAL TABLE MY_TEST AS SELECT * FROM source

> Table created with Location but does not have any data moved to it.

> /warehouse/tablespace/external/hive/MY_TEST

> {code}


[jira] [Work logged] (HIVE-25272) READ transactions are getting logged in NOTIFICATION LOG

[

https://issues.apache.org/jira/browse/HIVE-25272?focusedWorklogId=612747=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612747

]

ASF GitHub Bot logged work on HIVE-25272:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 16:07

Start Date: 21/Jun/21 16:07

Worklog Time Spent: 10m

Work Description: pvary commented on pull request #2413:

URL: https://github.com/apache/hive/pull/2413#issuecomment-865160700

@deniskuzZ: This PR extends the scope of the READ-ONLY transactions. If you have time, could you please take a look?

Thanks,

Peter

Issue Time Tracking

---

Worklog Id: (was: 612747)

Time Spent: 1h (was: 50m)

> READ transactions are getting logged in NOTIFICATION LOG

>

>

> Key: HIVE-25272

> URL: https://issues.apache.org/jira/browse/HIVE-25272

> Project: Hive

> Issue Type: Bug

>Reporter: Pravin Sinha

>Assignee: Pravin Sinha

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> While READ transactions are already skipped from getting logged in

> NOTIFICATION logs, few are still getting logged. Need to skip those

> transactions as well.

[jira] [Work logged] (HIVE-25272) READ transactions are getting logged in NOTIFICATION LOG

[

https://issues.apache.org/jira/browse/HIVE-25272?focusedWorklogId=612745=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612745

]

ASF GitHub Bot logged work on HIVE-25272:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 16:05

Start Date: 21/Jun/21 16:05

Worklog Time Spent: 10m

Work Description: pvary commented on a change in pull request #2413:

URL: https://github.com/apache/hive/pull/2413#discussion_r655517089

##

File path: itests/hive-unit/src/test/java/org/apache/hadoop/hive/ql/parse/TestReplicationScenariosAcidTables.java

##

@@ -176,6 +177,28 @@ public void testReplOperationsNotCapturedInNotificationLog() throws Throwable {

assert lastEventId == currentEventId;

}

+ @Test

+ public void testREADOperationsNotCapturedInNotificationLog() throws Throwable {

+//Perform empty bootstrap dump and load

+primary.hiveConf.set("hive.txn.readonly.enabled", "true");

+primary.run("create table " + primaryDbName + ".t1 (id int)");

+primary.dump(primaryDbName);

+replica.run("REPL LOAD " + primaryDbName + " INTO " + replicatedDbName);

+//Perform empty incremental dump and load so that all db level properties are altered.

+primary.dump(primaryDbName);

+replica.run("REPL LOAD " + primaryDbName + " INTO " + replicatedDbName);

+primary.run("insert into " + primaryDbName + ".t1 values(1)");

+long lastEventId = primary.getCurrentNotificationEventId().getEventId();

+primary.run("DESCRIBE DATABASE " + primaryDbName );

+primary.run("SELECT * from " + primaryDbName + ".t1");

+primary.run("SHOW tables " + primaryDbName);

Review comment:

What is the reason behind running these commands but discarding the results?


Issue Time Tracking

---

Worklog Id: (was: 612745)

Time Spent: 40m (was: 0.5h)

> READ transactions are getting logged in NOTIFICATION LOG

>

>

> Key: HIVE-25272

> URL: https://issues.apache.org/jira/browse/HIVE-25272

> Project: Hive

> Issue Type: Bug

>Reporter: Pravin Sinha

>Assignee: Pravin Sinha

>Priority: Major

> Labels: pull-request-available

> Time Spent: 40m

> Remaining Estimate: 0h

>

> While READ transactions are already skipped from getting logged in

> NOTIFICATION logs, few are still getting logged. Need to skip those

> transactions as well.


[jira] [Work logged] (HIVE-25272) READ transactions are getting logged in NOTIFICATION LOG

[

https://issues.apache.org/jira/browse/HIVE-25272?focusedWorklogId=612746=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-612746

]

ASF GitHub Bot logged work on HIVE-25272:

-

Author: ASF GitHub Bot

Created on: 21/Jun/21 16:05

Start Date: 21/Jun/21 16:05

Worklog Time Spent: 10m

Work Description: pvary commented on a change in pull request #2413:

URL: https://github.com/apache/hive/pull/2413#discussion_r655517694

##

File path: itests/hive-unit/src/test/java/org/apache/hadoop/hive/ql/parse/TestReplicationScenariosAcidTables.java

##

@@ -176,6 +177,28 @@ public void testReplOperationsNotCapturedInNotificationLog() throws Throwable {

assert lastEventId == currentEventId;

}

+ @Test

+ public void testREADOperationsNotCapturedInNotificationLog() throws Throwable {

+//Perform empty bootstrap dump and load

+primary.hiveConf.set("hive.txn.readonly.enabled", "true");

+primary.run("create table " + primaryDbName + ".t1 (id int)");

+primary.dump(primaryDbName);

+replica.run("REPL LOAD " + primaryDbName + " INTO " + replicatedDbName);

+//Perform empty incremental dump and load so that all db level properties are altered.

+primary.dump(primaryDbName);

+replica.run("REPL LOAD " + primaryDbName + " INTO " + replicatedDbName);

+primary.run("insert into " + primaryDbName + ".t1 values(1)");

+long lastEventId = primary.getCurrentNotificationEventId().getEventId();

+primary.run("DESCRIBE DATABASE " + primaryDbName );

+primary.run("SELECT * from " + primaryDbName + ".t1");

+primary.run("SHOW tables " + primaryDbName);

Review comment:

never mind, I got it
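The discarded results in the test above can be explained with a minimal sketch. Assuming the new test follows the same pattern as the existing `assert lastEventId == currentEventId;` check, the read-only statements (`DESCRIBE DATABASE`, `SELECT`, `SHOW tables`) are run only to confirm that they leave the notification log untouched, so their query results are not needed. The `NotificationLog` and `Txn` classes below are hypothetical stand-ins for illustration, not Hive's metastore API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model (not Hive's metastore API): a notification log that hands
// out increasing event IDs for committed transactions but skips read-only ones.
public class ReadOnlyTxnLogSketch {

    // Hypothetical transaction: the statement text plus a read-only flag.
    static final class Txn {
        final String statement;
        final boolean readOnly;

        Txn(String statement, boolean readOnly) {
            this.statement = statement;
            this.readOnly = readOnly;
        }
    }

    static final class NotificationLog {
        private final List<String> events = new ArrayList<>();

        // Records an event for every committed write; read-only transactions are skipped.
        void onCommit(Txn txn) {
            if (txn.readOnly) {
                return;
            }
            events.add(txn.statement);
        }

        // In this model the latest event ID equals the number of events logged so far.
        long currentEventId() {
            return events.size();
        }
    }

    public static void main(String[] args) {
        NotificationLog log = new NotificationLog();
        log.onCommit(new Txn("insert into db.t1 values(1)", false));
        long lastEventId = log.currentEventId();

        // The results of these statements are discarded; only their effect
        // (none expected) on the notification log is checked.
        log.onCommit(new Txn("DESCRIBE DATABASE db", true));
        log.onCommit(new Txn("SELECT * from db.t1", true));
        log.onCommit(new Txn("SHOW tables db", true));

        long currentEventId = log.currentEventId();
        if (lastEventId != currentEventId) {
            throw new IllegalStateException("read-only statements produced notification events");
        }
        System.out.println("lastEventId == currentEventId: " + (lastEventId == currentEventId));
    }
}
```

The check compares event IDs rather than query output because, under this assumption, the only observable effect that matters is whether the log advanced.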


Issue Time Tracking

---

Worklog Id: (was: 612746)

Time Spent: 50m (was: 40m)

> READ transactions are getting logged in NOTIFICATION LOG

>

>

> Key: HIVE-25272

> URL: https://issues.apache.org/jira/browse/HIVE-25272

> Project: Hive

> Issue Type: Bug

>Reporter: Pravin Sinha

>Assignee: Pravin Sinha

>Priority: Major

> Labels: pull-request-available

> Time Spent: 50m

> Remaining Estimate: 0h

>

> While READ transactions are already skipped from getting logged in

> NOTIFICATION logs, few are still getting logged. Need to skip those

> transactions as well.


[jira] [Commented] (HIVE-25173) Fix build failure of hive-pre-upgrade due to missing dependency on pentaho-aggdesigner-algorithm

[

https://issues.apache.org/jira/browse/HIVE-25173?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17366693#comment-17366693

]

Stamatis Zampetakis commented on HIVE-25173:

From the Hive side, it's fine; there is no need to bring back the conjars repo. I just wanted to understand the root cause and the implications. Thanks, Julian!

> Fix build failure of hive-pre-upgrade due to missing dependency on

> pentaho-aggdesigner-algorithm

>

>

> Key: HIVE-25173