[GitHub] [kafka] dajac commented on pull request #7265: Don't merge this, just testing something in the build

dajac commented on pull request #7265: URL: https://github.com/apache/kafka/pull/7265#issuecomment-715752369 @mumrah I guess that we could close this one, isn't it? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dajac closed pull request #7336: KAFKA-8107:Flaky Test kafka.api.ClientIdQuotaTest.testQuotaOverrideDe…

dajac closed pull request #7336: URL: https://github.com/apache/kafka/pull/7336

[GitHub] [kafka] dajac commented on pull request #7336: KAFKA-8107:Flaky Test kafka.api.ClientIdQuotaTest.testQuotaOverrideDe…

dajac commented on pull request #7336: URL: https://github.com/apache/kafka/pull/7336#issuecomment-715749977 Closing as this has been fixed by https://github.com/apache/kafka/pull/8394.

[jira] [Commented] (KAFKA-10639) There should be an EnvironmentConfigProvider that will do variable substitution using environment variable.

[ https://issues.apache.org/jira/browse/KAFKA-10639?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17220011#comment-17220011 ] Brad Davis commented on KAFKA-10639: I've actually already locally implemented this, but want to contribute it back upstream. The limiting factor is primarily making sure that there are appropriate tests around it and ensuring conformance to existing coding standards. > There should be an EnvironmentConfigProvider that will do variable > substitution using environment variable. > --- > > Key: KAFKA-10639 > URL: https://issues.apache.org/jira/browse/KAFKA-10639 > Project: Kafka > Issue Type: Improvement > Components: config >Affects Versions: 2.5.1 >Reporter: Brad Davis >Priority: Major > > Running Kafka Connect in the same docker container in multiple stages (like > dev vs production) means that a file based approach to secret hiding using > the file config provider isn't viable. However, docker container instances > can have their environment variables customized on a per-container basis, and > our existing tech stack typically exposes per-stage secrets (like the dev DB > password vs the prod DB password) through env vars within the containers. > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (KAFKA-10639) There should be an EnvironmentConfigProvider that will do variable substitution using environment variable.

Brad Davis created KAFKA-10639: -- Summary: There should be an EnvironmentConfigProvider that will do variable substitution using environment variable. Key: KAFKA-10639 URL: https://issues.apache.org/jira/browse/KAFKA-10639 Project: Kafka Issue Type: Improvement Components: config Affects Versions: 2.5.1 Reporter: Brad Davis Running Kafka Connect in the same docker container in multiple stages (like dev vs production) means that a file based approach to secret hiding using the file config provider isn't viable. However, docker container instances can have their environment variables customized on a per-container basis, and our existing tech stack typically exposes per-stage secrets (like the dev DB password vs the prod DB password) through env vars within the containers.
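As a rough illustration of the behaviour requested here, the sketch below resolves placeholders against environment variables. It assumes a `${env:NAME}` placeholder shape, loosely modelled on the `${provider:path:key}` placeholders used with the existing file config provider; the `env` prefix and the `EnvPlaceholderResolver` class are hypothetical and are not Kafka's `ConfigProvider` API. The environment is passed in as a `Map` so the logic can be exercised without touching the real process environment.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not a Kafka API: replaces ${env:NAME} placeholders with
// values looked up in a supplied map (for example System.getenv()).
final class EnvPlaceholderResolver {
    // Matches ${env:NAME} and captures NAME (a conventional env var name).
    private static final Pattern ENV_PLACEHOLDER =
            Pattern.compile("\\$\\{env:([A-Za-z_][A-Za-z0-9_]*)\\}");

    private final Map<String, String> env;

    EnvPlaceholderResolver(Map<String, String> env) {
        this.env = env;
    }

    /** Substitutes every placeholder in value; fails fast if a variable is unset. */
    String resolve(String value) {
        Matcher matcher = ENV_PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String replacement = env.get(name);
            if (replacement == null) {
                throw new IllegalArgumentException("Environment variable not set: " + name);
            }
            // quoteReplacement stops '$' or '\' inside a secret from being
            // interpreted as a group reference.
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

In a container, `new EnvPlaceholderResolver(System.getenv()).resolve("${env:DB_PASSWORD}")` would pick up whatever per-stage value that container was started with. Throwing on an unset variable is a deliberate choice in this sketch: silently substituting an empty string could start a connector with a blank password.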

[GitHub] [kafka] RamanVerma commented on a change in pull request #9364: KAFKA-10471 Mark broker crash during log loading as unclean shutdown

RamanVerma commented on a change in pull request #9364:

URL: https://github.com/apache/kafka/pull/9364#discussion_r511277713

##

File path: core/src/main/scala/kafka/log/LogManager.scala

##

@@ -298,26 +300,38 @@ class LogManager(logDirs: Seq[File],

/**

* Recover and load all logs in the given data directories

*/

- private def loadLogs(): Unit = {

+ private[log] def loadLogs(): Unit = {

info(s"Loading logs from log dirs $liveLogDirs")

val startMs = time.hiResClockMs()

val threadPools = ArrayBuffer.empty[ExecutorService]

val offlineDirs = mutable.Set.empty[(String, IOException)]

-val jobs = mutable.Map.empty[File, Seq[Future[_]]]

+val jobs = ArrayBuffer.empty[Seq[Future[_]]]

var numTotalLogs = 0

for (dir <- liveLogDirs) {

val logDirAbsolutePath = dir.getAbsolutePath

+ var hadCleanShutdown: Boolean = false

try {

val pool = Executors.newFixedThreadPool(numRecoveryThreadsPerDataDir)

threadPools.append(pool)

val cleanShutdownFile = new File(dir, Log.CleanShutdownFile)

if (cleanShutdownFile.exists) {

info(s"Skipping recovery for all logs in $logDirAbsolutePath since clean shutdown file was found")

+ // Cache the clean shutdown status and use that for rest of log loading workflow. Delete the CleanShutdownFile

+ // so that if broker crashes while loading the log, it is considered hard shutdown during the next boot up. KAFKA-10471

+ try {

+cleanShutdownFile.delete()

+ } catch {

+case e: IOException =>

Review comment:

The `java.nio.file.Files` API throws `IOException`, but `java.io.File`, which is used here, does not. Will remove this exception handling.
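The review point is that `java.io.File#delete()` reports failure through its `boolean` return value, whereas `java.nio.file.Files#deleteIfExists` throws `IOException`. Below is a minimal Java sketch of the marker-file pattern this PR applies in Scala inside `LogManager.loadLogs()`: read the clean-shutdown marker once, cache the result, then delete the marker so that a crash during log loading is treated as an unclean shutdown on the next boot. The `CleanShutdownMarker` class and `consume` method are hypothetical names, and the marker file name is passed in rather than assumed.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical sketch of the KAFKA-10471 idea; not Kafka's LogManager code.
final class CleanShutdownMarker {
    /**
     * Returns whether the previous shutdown was clean, then deletes the marker so
     * that a crash before the next clean shutdown is detected as unclean.
     */
    static boolean consume(Path logDir, String markerName) {
        Path marker = logDir.resolve(markerName);
        // Cache the status once; the rest of log loading should rely on this value.
        boolean hadCleanShutdown = Files.exists(marker);
        if (hadCleanShutdown) {
            try {
                // java.io.File#delete() would only return false on failure; the NIO
                // call below throws IOException, so catching it actually means something.
                Files.deleteIfExists(marker);
            } catch (IOException e) {
                // Failing loudly is safer than loading with a stale "clean" marker.
                throw new UncheckedIOException("Could not delete " + marker, e);
            }
        }
        return hadCleanShutdown;
    }
}
```

If the Scala code keeps `File.delete()`, the analogous check would be on its `false` return value rather than in a `catch` block.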


[GitHub] [kafka] stan-confluent commented on pull request #9488: Pin ducktape to version 0.7.10

stan-confluent commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715656921 https://github.com/apache/kafka/pull/9490

[GitHub] [kafka] stan-confluent commented on pull request #9490: Pin ducktape to version 0.7.10

stan-confluent commented on pull request #9490: URL: https://github.com/apache/kafka/pull/9490#issuecomment-715656906 cc @omkreddy @edenhill @sdanduConf @andrewegel

[GitHub] [kafka] stan-confluent opened a new pull request #9490: Pin ducktape to version 0.7.10

stan-confluent opened a new pull request #9490: URL: https://github.com/apache/kafka/pull/9490 Ducktape version 0.7.10 pinned paramiko to version 2.3.2 to deal with random SSHExceptions confluent had been seeing since ducktape was updated to a later version of paramiko. The idea is that we can backport ducktape 0.7.10 change as far back as possible, while 2.7 and trunk can update to 0.8.0 and python3 separately. Tested: In progress, but unlikely to affect anything, since the only difference between ducktape 0.7.9 and 0.7.10 is paramiko version downgrade. ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] warrenzhu25 opened a new pull request #9491: KAFKA-10623: Refactor code to avoid discovery conflicts for admin.VersionRange

warrenzhu25 opened a new pull request #9491: URL: https://github.com/apache/kafka/pull/9491 Rename admin.VersionRange into admin.Versions to avoid discovery conflicts ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] stan-confluent commented on pull request #9488: Pin ducktape to version 0.7.10

stan-confluent commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715656527 @omkreddy sounds good, let me also re-run the tests on 2.6, just to be sure.

[GitHub] [kafka] stan-confluent closed pull request #9488: Pin ducktape to version 0.7.10

stan-confluent closed pull request #9488: URL: https://github.com/apache/kafka/pull/9488

[GitHub] [kafka] omkreddy edited a comment on pull request #9488: Pin ducktape to version 0.7.10

omkreddy edited a comment on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715651991 @stan-confluent Yes, Please update the PR and base against 2.6.

[GitHub] [kafka] omkreddy edited a comment on pull request #9488: Pin ducktape to version 0.7.10

omkreddy edited a comment on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715651991 @stan-confluent Yes, Please update the PR and re-point to 2.6.

[GitHub] [kafka] omkreddy commented on pull request #9488: Pin ducktape to version 0.7.10

omkreddy commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715651991 @stan-confluent Yes, Pls update the PR and re-point to 2.6.

[GitHub] [kafka] stan-confluent commented on pull request #9488: Pin ducktape to version 0.7.10

stan-confluent commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715651165 > We have PR #9480 to update the version in setup.py. I plan to commit #9480 to trunk and 2.7 branches. > > I think, we can update ducktape 0.7.10 to 2.6 and below. Awesome, thanks Manikumar. Do you want me to re-point this PR to 2.6 branch?

[GitHub] [kafka] stan-confluent commented on a change in pull request #9480: KAFKA-10592: Fix vagrant for a system tests with python3

stan-confluent commented on a change in pull request #9480: URL: https://github.com/apache/kafka/pull/9480#discussion_r511233150 ## File path: tests/setup.py ## @@ -51,7 +51,7 @@ def run_tests(self): license="apache2.0", packages=find_packages(), include_package_data=True, - install_requires=["ducktape==0.7.9", "requests==2.22.0"], + install_requires=["ducktape==0.8.0", "requests==2.24.0"], Review comment: Why do we need to pin `requests` explicitly?

[GitHub] [kafka] omkreddy edited a comment on pull request #9488: Pin ducktape to version 0.7.10

omkreddy edited a comment on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715649754 We have PR https://github.com/apache/kafka/pull/9480 to update the version in setup.py. I plan to commit #9480 to trunk and 2.7 branches. I think, we can update ducktape 0.7.10 to 2.6 and below.

[GitHub] [kafka] omkreddy edited a comment on pull request #9488: Pin ducktape to version 0.7.10

omkreddy edited a comment on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715649754 We have a PR https://github.com/apache/kafka/pull/9480 to update the version in setup.py. I plan to commit #9480 to trunk and 2.7 branches. I think, we can update ducktape 0.7.10 to 2.6 and below.

[GitHub] [kafka] omkreddy commented on pull request #9488: Pin ducktape to version 0.7.10

omkreddy commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715649754 We have a PR https://github.com/apache/kafka/pull/9480 to update the version in setup.py. I plan to commit #9480 to trunk and 2.7 branches. I think, we can update ducktape 0.7.10 to 2.6 and below.

[GitHub] [kafka] ableegoldman commented on pull request #9489: MINOR: demote "Committing task offsets" log to DEBUG

ableegoldman commented on pull request #9489: URL: https://github.com/apache/kafka/pull/9489#issuecomment-715639072 @guozhangwang @vvcephei WDYT? I'm generally all for more logs but this is pretty extreme

[GitHub] [kafka] ableegoldman opened a new pull request #9489: MINOR: demote "Committing task offsets" log to DEBUG

ableegoldman opened a new pull request #9489: URL: https://github.com/apache/kafka/pull/9489 This message absolutely floods the logs, especially in an eos application where the commit interval is just 100ms. It's definitely a useful message but I don't think there's any justification for it being at the INFO level when it's logged 10 times a second.
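To put a number on "floods the logs": assuming the line is emitted exactly once per commit per stream thread, its rate is 1000 / `commit.interval.ms` per second. The helper below is purely illustrative (the class and method are hypothetical) and just does that arithmetic per hour.

```java
// Hypothetical helper, assuming exactly one "Committing task offsets" INFO line
// per commit per stream thread.
final class CommitLogVolume {
    static long linesPerHour(long commitIntervalMs, int streamThreads) {
        if (commitIntervalMs <= 0 || streamThreads <= 0) {
            throw new IllegalArgumentException("interval and thread count must be positive");
        }
        // Milliseconds in an hour divided by the commit interval.
        long commitsPerHourPerThread = 3_600_000L / commitIntervalMs;
        return commitsPerHourPerThread * streamThreads;
    }
}
```

With the 100 ms interval mentioned above, `linesPerHour(100, 1)` is 36,000 lines per thread per hour, compared with 120 at the 30,000 ms interval Streams uses by default when EOS is not enabled.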

[jira] [Updated] (KAFKA-10545) Create topic IDs and propagate to brokers

[ https://issues.apache.org/jira/browse/KAFKA-10545?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Justine Olshan updated KAFKA-10545: --- Description: First step for KIP-516 The goals are: * Create and store topic IDs in a ZK Node and controller memory. * Propagate topic ID to brokers with updated LeaderAndIsrRequest, UpdateMetadata * Store topic ID in memory on broker, persistent file in log was: First step for KIP-516 The goals are: * Create and store topic IDs in a ZK Node and controller memory. * Propagate topic ID to brokers with updated LeaderAndIsrRequest * Store topic ID in memory on broker, persistent file in log > Create topic IDs and propagate to brokers > - > > Key: KAFKA-10545 > URL: https://issues.apache.org/jira/browse/KAFKA-10545 > Project: Kafka > Issue Type: Sub-task >Reporter: Justine Olshan >Assignee: Justine Olshan >Priority: Major > > First step for KIP-516 > The goals are: > * Create and store topic IDs in a ZK Node and controller memory. > * Propagate topic ID to brokers with updated LeaderAndIsrRequest, > UpdateMetadata > * Store topic ID in memory on broker, persistent file in log

[jira] [Updated] (KAFKA-10547) Add topic IDs to MetadataResponse

[ https://issues.apache.org/jira/browse/KAFKA-10547?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Justine Olshan updated KAFKA-10547: --- Summary: Add topic IDs to MetadataResponse (was: Add topic IDs to MetadataResponse, UpdateMetadata) > Add topic IDs to MetadataResponse > - > > Key: KAFKA-10547 > URL: https://issues.apache.org/jira/browse/KAFKA-10547 > Project: Kafka > Issue Type: Sub-task >Reporter: Justine Olshan >Priority: Major > > Prevent reads from deleted topics > Will be able to use TopicDescription to identify the topic ID
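One goal listed in this ticket is to prevent reads from deleted topics: a topic name alone cannot distinguish a deleted topic from a newly created one with the same name, but an ID can. Below is a minimal sketch of how a client might use that, assuming it caches the last ID it saw per topic name. `java.util.UUID` stands in for whatever ID type the brokers actually return, and `TopicIdCache` is a hypothetical class, not part of the `MetadataResponse` API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical client-side cache; java.util.UUID stands in for a topic ID.
final class TopicIdCache {
    private final Map<String, UUID> idsByName = new HashMap<>();

    /**
     * Records the ID from fresh metadata and returns true if the name now maps
     * to a different ID, i.e. the topic was deleted and re-created meanwhile.
     */
    boolean updateAndCheckRecreated(String topic, UUID idFromMetadata) {
        UUID previous = idsByName.put(topic, idFromMetadata);
        return previous != null && !previous.equals(idFromMetadata);
    }
}
```

A client seeing `true` here would know that any position it holds for that topic name refers to the old, deleted topic.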

[GitHub] [kafka] stan-confluent commented on pull request #9488: Pin ducktape to version 0.7.10

stan-confluent commented on pull request #9488: URL: https://github.com/apache/kafka/pull/9488#issuecomment-715636766 cc @omkreddy @edenhill - folks, I think you've been working on updating kafkatest to python 3, so adding you as reviewers. also cc @andrewegel and @sdanduConf

[GitHub] [kafka] stan-confluent opened a new pull request #9488: Pin ducktape to version 0.7.10

stan-confluent opened a new pull request #9488: URL: https://github.com/apache/kafka/pull/9488 Ducktape version 0.7.10 pinned paramiko to version 2.3.2 to deal with random `SSHException`s confluent had been seeing since ducktape was updated to a later version of paramiko. This PR pins both the version in `setup.py` and in `ducker-ak`'s `Dockerfile` to the same version. Previously ducker version was pinned to 0.8.0. I'll send a separate PR that pins both versions to 0.8.0 (unless someone is already working on that separately) - the idea is that we can backport ducktape 0.7.10 change as far back as possible, while keeping the ducktape 0.8.0 change in trunk only (unless we plan to backport python3 changes). Tested: http://confluent-kafka-branch-builder-system-test-results.s3-us-west-2.amazonaws.com/2020-10-23--001.1603440055--stan-confluent--ducktape-710--3c51234d0/report.html There are 10 failing tests, 9 of which had been failing before this change, and 1 (streams_upgrade_test) I've seen failing on different branches recently as well, though it was passing on trunk as of a couple of days ago. Since ducktape version only changed the paramiko dependencies, I don't think it could've affected it. ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] hachikuji commented on a change in pull request #9418: KAFKA-10601; Add support for append linger to Raft implementation

hachikuji commented on a change in pull request #9418:

URL: https://github.com/apache/kafka/pull/9418#discussion_r511200238

##

File path: raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -0,0 +1,294 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.raft.internals;

+

+import org.apache.kafka.common.memory.MemoryPool;

+import org.apache.kafka.common.record.CompressionType;

+import org.apache.kafka.common.record.MemoryRecords;

+import org.apache.kafka.common.record.RecordBatch;

+import org.apache.kafka.common.utils.Time;

+import org.apache.kafka.common.utils.Timer;

+import org.apache.kafka.raft.RecordSerde;

+

+import java.io.Closeable;

+import java.nio.ByteBuffer;

+import java.util.ArrayList;

+import java.util.List;

+import java.util.concurrent.locks.ReentrantLock;

+

+/**

+ * TODO: Also flush after minimum size limit is reached?

+ */

+public class BatchAccumulator implements Closeable {

+private final int epoch;

+private final Time time;

+private final Timer lingerTimer;

+private final int lingerMs;

+private final int maxBatchSize;

+private final CompressionType compressionType;

+private final MemoryPool memoryPool;

+private final ReentrantLock lock;

+private final RecordSerde serde;

+

+private long nextOffset;

+private BatchBuilder currentBatch;

+private List> completed;

+

+public BatchAccumulator(

+int epoch,

+long baseOffset,

+int lingerMs,

+int maxBatchSize,

+MemoryPool memoryPool,

+Time time,

+CompressionType compressionType,

+RecordSerde serde

+) {

+this.epoch = epoch;

+this.lingerMs = lingerMs;

+this.maxBatchSize = maxBatchSize;

+this.memoryPool = memoryPool;

+this.time = time;

+this.lingerTimer = time.timer(lingerMs);

+this.compressionType = compressionType;

+this.serde = serde;

+this.nextOffset = baseOffset;

+this.completed = new ArrayList<>();

+this.lock = new ReentrantLock();

+}

+

+/**

+ * Append a list of records into an atomic batch. We guarantee all records

+ * are included in the same underlying record batch so that either all of

+ * the records become committed or none of them do.

+ *

+ * @param epoch the expected leader epoch

+ * @param records the list of records to include in a batch

+ * @return the offset of the last message or {@link Long#MAX_VALUE} if the epoch

+ * does not match

+ */

+public Long append(int epoch, List records) {

+if (epoch != this.epoch) {

+// If the epoch does not match, then the state machine probably

+// has not gotten the notification about the latest epoch change.

+// In this case, ignore the append and return a large offset value

+// which will never be committed.

Review comment:

Right. It is important to ensure that the state machine has observed the latest leader epoch. Otherwise there may be committed data inflight which the state machine has yet to see.
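The epoch check under review can be illustrated with a much-simplified, single-threaded sketch. Appends carrying a stale epoch are ignored and answered with `Long.MAX_VALUE`, an offset that can never become committed; records from one call are never split across batches; and the open batch becomes drainable once `lingerMs` has elapsed since its first append. Everything here is an assumption for illustration: `SimpleBatchAccumulator` is hypothetical, time is passed in explicitly, records are plain strings, `maxBatchSize` counts records, and the memory pool, compression, serde and locking of the real `BatchAccumulator` are left out.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical, much-simplified model of append-with-linger; not Kafka's BatchAccumulator.
final class SimpleBatchAccumulator {
    private final int epoch;
    private final long lingerMs;
    private final int maxBatchSize; // counted in records in this sketch
    private final List<List<String>> completed = new ArrayList<>();
    private List<String> currentBatch = new ArrayList<>();
    private long currentBatchStartMs;
    private long nextOffset;

    SimpleBatchAccumulator(int epoch, long baseOffset, long lingerMs, int maxBatchSize) {
        this.epoch = epoch;
        this.nextOffset = baseOffset;
        this.lingerMs = lingerMs;
        this.maxBatchSize = maxBatchSize;
    }

    /** Appends records atomically; returns the last offset, or Long.MAX_VALUE on epoch mismatch. */
    long append(int epoch, List<String> records, long nowMs) {
        if (epoch != this.epoch) {
            // The caller has not yet seen the latest epoch change: ignore the
            // append and return an offset that can never be committed.
            return Long.MAX_VALUE;
        }
        if (records.isEmpty() || records.size() > maxBatchSize) {
            throw new IllegalArgumentException("records must fit in a single batch");
        }
        // Never split the records: close the open batch if they would not fit.
        if (currentBatch.size() + records.size() > maxBatchSize) {
            completeCurrentBatch();
        }
        if (currentBatch.isEmpty()) {
            currentBatchStartMs = nowMs; // linger is measured from the first append
        }
        currentBatch.addAll(records);
        nextOffset += records.size();
        return nextOffset - 1;
    }

    /** Returns completed batches, first closing the open batch if its linger expired. */
    List<List<String>> drain(long nowMs) {
        if (!currentBatch.isEmpty() && nowMs - currentBatchStartMs >= lingerMs) {
            completeCurrentBatch();
        }
        List<List<String>> drained = new ArrayList<>(completed);
        completed.clear();
        return drained;
    }

    private void completeCurrentBatch() {
        completed.add(Collections.unmodifiableList(currentBatch));
        currentBatch = new ArrayList<>();
    }
}
```

Returning a sentinel offset rather than throwing lets a lagging state machine keep running until it processes the epoch change; because the sentinel is never reached by the high watermark, the caller simply never sees that append committed.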


[jira] [Commented] (KAFKA-10633) Constant probing rebalances in Streams 2.6

[ https://issues.apache.org/jira/browse/KAFKA-10633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219963#comment-17219963 ] A. Sophie Blee-Goldman commented on KAFKA-10633: The issue with directory contents being deleted but not the directories themselves sounds like KAFKA-10564 (also fixed in 2.7.0/2.6.1). I don't believe that particular bug has any real implications, other than being annoying/misleading in the logs – it should still delete everything in the task directory, including the checkpoint file which is how the assignor determines which persistent state is/isn't on an instance. I'm kind of surprised that you would still get a rebalance after redeploying like that when using static membership. Unless it takes longer than the static group membership timeout I guess. To be honest, I'm not that familiar with the specifics of static membership in general – maybe [~bchen225242] can chime in here. But I suppose I would start by checking out the logs on the Streams side after it comes back up, and see if it's logged any reason for triggering a rebalance explicitly. (There are a few reasons to trigger a rebalance after a static member is bounced, for example if its hostname changed for IQ. If anything like that happened it should be logged clearly) > Constant probing rebalances in Streams 2.6 > -- > > Key: KAFKA-10633 > URL: https://issues.apache.org/jira/browse/KAFKA-10633 > Project: Kafka > Issue Type: Bug > Components: streams >Affects Versions: 2.6.0 >Reporter: Bradley Peterson >Priority: Major > Attachments: Discover 2020-10-21T23 34 03.867Z - 2020-10-21T23 44 > 46.409Z.csv > > > We are seeing a few issues with the new rebalancing behavior in Streams 2.6. > This ticket is for constant probing rebalances on one StreamThread, but I'll > mention the other issues, as they may be related. > First, when we redeploy the application we see tasks being moved, even though > the task assignment was stable before redeploying.
We would expect to see > tasks assigned back to the same instances and no movement. The application is > in EC2, with persistent EBS volumes, and we use static group membership to > avoid rebalancing. To redeploy the app we terminate all EC2 instances. The > new instances will reattach the EBS volumes and use the same group member id. > After redeploying, we sometimes see the group leader go into a tight probing > rebalance loop. This doesn't happen immediately, it could be several hours > later. Because the redeploy caused task movement, we see expected probing > rebalances every 10 minutes. But, then one thread will go into a tight loop > logging messages like "Triggering the followup rebalance scheduled for > 1603323868771 ms.", handling the partition assignment (which doesn't change), > then "Requested to schedule probing rebalance for 1603323868771 ms." This > repeats several times a second until the app is restarted again. I'll attach > a log export from one such incident.

[GitHub] [kafka] bbejeck edited a comment on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

bbejeck edited a comment on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715620710 Need to test locally that the `uploadArchives` task triggers `archive` blocks in the build.gradle file. If correct then the `kafka-streams-scala-scaladoc.jar` should get created properly, and with scaladoc disabled for core, we won't publish non-public scaladoc

[GitHub] [kafka] bbejeck commented on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

bbejeck commented on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715620710 Need to test locally that the `uploadArchives` task triggers `archive` blocks in the build.gradle file. If correct then the `kafka-streams-scala-scaladoc.jar` should get created properly

[jira] [Commented] (KAFKA-10633) Constant probing rebalances in Streams 2.6

[ https://issues.apache.org/jira/browse/KAFKA-10633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219958#comment-17219958 ] Bradley Peterson commented on KAFKA-10633: -- Sophie (et al.), do you have any thoughts about what would cause rebalances (and task movements) after redeploying? If we could fix that, then this would be less of a problem, because we would rarely have probing rebalances. We do have another problem where state directories are not deleted, but their contents are (almost like KAFKA-6647, but not quite the same). Is it possible that is confusing the task assignor?

[GitHub] [kafka] rajinisivaram commented on a change in pull request #9382: KAFKA-10554; Perform follower truncation based on diverging epochs in Fetch response

rajinisivaram commented on a change in pull request #9382: URL: https://github.com/apache/kafka/pull/9382#discussion_r511174838 ## File path: core/src/main/scala/kafka/server/ReplicaManager.scala ## @@ -770,7 +770,7 @@ class ReplicaManager(val config: KafkaConfig, logManager.abortAndPauseCleaning(topicPartition) val initialFetchState = InitialFetchState(BrokerEndPoint(config.brokerId, "localhost", -1), - partition.getLeaderEpoch, futureLog.highWatermark) + partition.getLeaderEpoch, futureLog.highWatermark, lastFetchedEpoch = None) Review comment: Looking at this again, I think a bit more work is required to set the offsets and epoch correctly for AlterLogDirsThread in order to use `lastFetchedEpoch`. So I have reverted the changes for ReplicaAlterLogDirsThread. Will do that in a follow-on PR instead. In this PR, we will use the old truncation path in this case.

[GitHub] [kafka] wcarlson5 commented on a change in pull request #9487: KAFKA-9331 add a streams handler

wcarlson5 commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r511149017

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -346,26 +351,92 @@ public void setStateListener(final KafkaStreams.StateListener listener) {
      * Set the handler invoked when a {@link StreamsConfig#NUM_STREAM_THREADS_CONFIG internal thread} abruptly
      * terminates due to an uncaught exception.
      *
-     * @param eh the uncaught exception handler for all internal threads; {@code null} deletes the current handler
+     * @param uncaughtExceptionHandler the uncaught exception handler for all internal threads; {@code null} deletes the current handler
      * @throws IllegalStateException if this {@code KafkaStreams} instance is not in state {@link State#CREATED CREATED}.
+     *
+     * @Deprecated Since 2.7.0. Use {@link KafkaStreams#setUncaughtExceptionHandler(StreamsUncaughtExceptionHandler)} instead.
+     *
      */
-    public void setUncaughtExceptionHandler(final Thread.UncaughtExceptionHandler eh) {
+    public void setUncaughtExceptionHandler(final Thread.UncaughtExceptionHandler uncaughtExceptionHandler) {
         synchronized (stateLock) {
             if (state == State.CREATED) {
                 for (final StreamThread thread : threads) {
-                    thread.setUncaughtExceptionHandler(eh);
+                    thread.setUncaughtExceptionHandler(uncaughtExceptionHandler);
                 }
                 if (globalStreamThread != null) {
-                    globalStreamThread.setUncaughtExceptionHandler(eh);
+                    globalStreamThread.setUncaughtExceptionHandler(uncaughtExceptionHandler);
                 }
             } else {
                 throw new IllegalStateException("Can only set UncaughtExceptionHandler in CREATED state. " +
-                        "Current state is: " + state);
+                    "Current state is: " + state);
+            }
+        }
+    }
+
+    /**
+     * Set the handler invoked when a {@link StreamsConfig#NUM_STREAM_THREADS_CONFIG internal thread}
+     * throws an unexpected exception.
+     * These might be exceptions indicating rare bugs in Kafka Streams, or they
+     * might be exceptions thrown by your code, for example a NullPointerException thrown from your processor
+     * logic.
+     *
+     * Note, this handler must be threadsafe, since it will be shared among all threads, and invoked from any
+     * thread that encounters such an exception.
+     *
+     * @param streamsUncaughtExceptionHandler the uncaught exception handler of type {@link StreamsUncaughtExceptionHandler} for all internal threads; {@code null} deletes the current handler
+     * @throws IllegalStateException if this {@code KafkaStreams} instance is not in state {@link State#CREATED CREATED}.
+     * @throws NullPointerException @NotNull if streamsUncaughtExceptionHandler is null.
+     */
+    public void setUncaughtExceptionHandler(final StreamsUncaughtExceptionHandler streamsUncaughtExceptionHandler) {
+        final StreamsUncaughtExceptionHandler handler = exception -> handleStreamsUncaughtException(exception, streamsUncaughtExceptionHandler);
+        synchronized (stateLock) {
+            if (state == State.CREATED) {
+                Objects.requireNonNull(streamsUncaughtExceptionHandler);
+                for (final StreamThread thread : threads) {
+                    thread.setStreamsUncaughtExceptionHandler(handler);
+                }
+                if (globalStreamThread != null) {
+                    globalStreamThread.setUncaughtExceptionHandler(handler);
+                }
+            } else {
+                throw new IllegalStateException("Can only set UncaughtExceptionHandler in CREATED state. " +
+                    "Current state is: " + state);
             }
         }
     }
+    private StreamsUncaughtExceptionHandler.StreamThreadExceptionResponse handleStreamsUncaughtException(final Throwable e,
+                                                                                                         final StreamsUncaughtExceptionHandler streamsUncaughtExceptionHandler) {
+        final StreamsUncaughtExceptionHandler.StreamThreadExceptionResponse action = streamsUncaughtExceptionHandler.handle(e);
+        switch (action) {
+//            case REPLACE_STREAM_THREAD:
+//                log.error("Encountered the following exception during processing " +
+//                    "and the the stream thread will be replaced: ", e);
+//                this.addStreamsThread();
+//                break;
+            case SHUTDOWN_CLIENT:
+                log.error("Encountered the following exception during processing " +
+                    "and the client is going to shut down: ", e);
+                close(Duration.ZERO);
+                break;
+            case SHUTDOWN_APPLICATION:
+
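The registration pattern in the diff above (reject the call unless the client is still CREATED, reject a null handler, then let the handler's response decide whether to shut down the client or the whole application) can be exercised without Kafka on the classpath. This toy is a sketch under those assumptions only: `ToyStreamsClient`, its `State` and `Response` enums, and the use of `Function<Throwable, Response>` in place of `StreamsUncaughtExceptionHandler` are hypothetical stand-ins, and the SHUTDOWN_APPLICATION branch is left empty because the diff is cut off there.

```java
import java.util.Objects;
import java.util.function.Function;

// Toy model with hypothetical names; it is not the Kafka Streams API.
final class ToyStreamsClient {
    enum State { CREATED, RUNNING, PENDING_SHUTDOWN }
    enum Response { SHUTDOWN_CLIENT, SHUTDOWN_APPLICATION }

    private final Object stateLock = new Object();
    private State state = State.CREATED;
    private Function<Throwable, Response> handler;

    // Only allowed before start(); a null handler is rejected.
    void setUncaughtExceptionHandler(Function<Throwable, Response> newHandler) {
        synchronized (stateLock) {
            if (state != State.CREATED) {
                throw new IllegalStateException(
                    "Can only set UncaughtExceptionHandler in CREATED state. Current state is: " + state);
            }
            handler = Objects.requireNonNull(newHandler);
        }
    }

    void start() {
        synchronized (stateLock) {
            state = State.RUNNING;
        }
    }

    // Called by a (simulated) stream thread that hit an unexpected exception.
    Response onUncaughtException(Throwable e) {
        Response action = handler.apply(e);
        switch (action) {
            case SHUTDOWN_CLIENT:
                // In the diff, this is where close(Duration.ZERO) is called.
                synchronized (stateLock) {
                    state = State.PENDING_SHUTDOWN;
                }
                break;
            case SHUTDOWN_APPLICATION:
                // Not modelled: the quoted diff is truncated at this case.
                break;
        }
        return action;
    }

    State state() {
        synchronized (stateLock) {
            return state;
        }
    }

    public static void main(String[] args) {
        ToyStreamsClient client = new ToyStreamsClient();
        client.setUncaughtExceptionHandler(ex -> Response.SHUTDOWN_CLIENT);
        client.start();
        System.out.println(client.onUncaughtException(new NullPointerException())); // SHUTDOWN_CLIENT
        System.out.println(client.state());                                       // PENDING_SHUTDOWN
    }
}
```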

[GitHub] [kafka] wcarlson5 commented on a change in pull request #9487: KAFKA-9331 add a streams handler

wcarlson5 commented on a change in pull request #9487: URL: https://github.com/apache/kafka/pull/9487#discussion_r511148601 ## File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java ## @@ -782,7 +849,12 @@ private KafkaStreams(final InternalTopologyBuilder internalTopologyBuilder, cacheSizePerThread, stateDirectory, delegatingStateRestoreListener, -i + 1); +i + 1, +KafkaStreams.this::close, Review comment: This will call closeToError but I am testing if that has a problem. So far it does not.

[GitHub] [kafka] wcarlson5 commented on pull request #9273: KAFKA-9331: changes for Streams uncaught exception handler

wcarlson5 commented on pull request #9273: URL: https://github.com/apache/kafka/pull/9273#issuecomment-715581699 Moved to https://github.com/apache/kafka/pull/9487

[GitHub] [kafka] wcarlson5 closed pull request #9273: KAFKA-9331: changes for Streams uncaught exception handler

wcarlson5 closed pull request #9273: URL: https://github.com/apache/kafka/pull/9273

[GitHub] [kafka] wcarlson5 opened a new pull request #9487: KAFKA-9331 add a streams handler

wcarlson5 opened a new pull request #9487: URL: https://github.com/apache/kafka/pull/9487 *More detailed description of your change, if necessary. The PR title and PR message become the squashed commit message, so use a separate comment to ping reviewers.* *Summary of testing strategy (including rationale) for the feature or bug fix. Unit and/or integration tests are expected for any behaviour change and system tests should be considered for larger changes.* ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] mjsax commented on a change in pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

mjsax commented on a change in pull request #9486:

URL: https://github.com/apache/kafka/pull/9486#discussion_r511135914

##

File path: build.gradle

##

@@ -942,6 +942,7 @@ project(':core') {
from(project(':streams').jar) { into("libs/") }
from(project(':streams').configurations.runtime) { into("libs/") }
from(project(':streams:streams-scala').jar) { into("libs/") }
+from(project(':streams:streams-scala').configurations.archives.artifacts.files.filter { file -> file.name.matches(".*scaladoc.*")}) { into("libs/") }

Review comment:

Do we actually need to do this? I don't see any other line here that copies the Javadoc jar into the `libs/` directory.

\cc @ijuma


[GitHub] [kafka] jsancio commented on a change in pull request #9418: KAFKA-10601; Add support for append linger to Raft implementation

jsancio commented on a change in pull request #9418:

URL: https://github.com/apache/kafka/pull/9418#discussion_r52281

##

File path: raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -0,0 +1,294 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one or more
+ * contributor license agreements. See the NOTICE file distributed with
+ * this work for additional information regarding copyright ownership.
+ * The ASF licenses this file to You under the Apache License, Version 2.0
+ * (the "License"); you may not use this file except in compliance with
+ * the License. You may obtain a copy of the License at
+ *
+ *    http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+package org.apache.kafka.raft.internals;
+
+import org.apache.kafka.common.memory.MemoryPool;
+import org.apache.kafka.common.record.CompressionType;
+import org.apache.kafka.common.record.MemoryRecords;
+import org.apache.kafka.common.record.RecordBatch;
+import org.apache.kafka.common.utils.Time;
+import org.apache.kafka.common.utils.Timer;
+import org.apache.kafka.raft.RecordSerde;
+
+import java.io.Closeable;
+import java.nio.ByteBuffer;
+import java.util.ArrayList;
+import java.util.List;
+import java.util.concurrent.locks.ReentrantLock;
+
+/**
+ * TODO: Also flush after minimum size limit is reached?
+ */
+public class BatchAccumulator implements Closeable {
+    private final int epoch;
+    private final Time time;
+    private final Timer lingerTimer;
+    private final int lingerMs;
+    private final int maxBatchSize;
+    private final CompressionType compressionType;
+    private final MemoryPool memoryPool;
+    private final ReentrantLock lock;
+    private final RecordSerde serde;
+
+    private long nextOffset;
+    private BatchBuilder currentBatch;
+    private List> completed;
+
+    public BatchAccumulator(
+        int epoch,
+        long baseOffset,
+        int lingerMs,
+        int maxBatchSize,
+        MemoryPool memoryPool,
+        Time time,
+        CompressionType compressionType,
+        RecordSerde serde
+    ) {
+        this.epoch = epoch;
+        this.lingerMs = lingerMs;
+        this.maxBatchSize = maxBatchSize;
+        this.memoryPool = memoryPool;
+        this.time = time;
+        this.lingerTimer = time.timer(lingerMs);
+        this.compressionType = compressionType;
+        this.serde = serde;
+        this.nextOffset = baseOffset;
+        this.completed = new ArrayList<>();
+        this.lock = new ReentrantLock();
+    }
+
+    /**
+     * Append a list of records into an atomic batch. We guarantee all records
+     * are included in the same underlying record batch so that either all of
+     * the records become committed or none of them do.
+     *
+     * @param epoch the expected leader epoch
+     * @param records the list of records to include in a batch
+     * @return the offset of the last message or {@link Long#MAX_VALUE} if the epoch
+     *         does not match
+     */
+    public Long append(int epoch, List records) {
+        if (epoch != this.epoch) {
+            // If the epoch does not match, then the state machine probably
+            // has not gotten the notification about the latest epoch change.
+            // In this case, ignore the append and return a large offset value
+            // which will never be committed.
+            return Long.MAX_VALUE;
+        }
+
+        Object serdeContext = serde.newWriteContext();
+        int batchSize = 0;
+        for (T record : records) {
+            batchSize += serde.recordSize(record, serdeContext);
+        }
+
+        if (batchSize > maxBatchSize) {
+            throw new IllegalArgumentException("The total size of " + records + " is " + batchSize +
+                ", which exceeds the maximum allowed batch size of " + maxBatchSize);
+        }
+
+        lock.lock();
+        try {
+            BatchBuilder batch = maybeAllocateBatch(batchSize);
+            if (batch == null) {
+                return null;
+            }
+
+            if (isEmpty()) {
+                lingerTimer.update();
+                lingerTimer.reset(lingerMs);
+            }
+
+            for (T record : records) {
+                batch.appendRecord(record, serdeContext);
+                nextOffset += 1;
+            }
+
+            return nextOffset - 1;
+        } finally {
+            lock.unlock();
+        }
+    }
+
+    private BatchBuilder maybeAllocateBatch(int batchSize) {
+        if (currentBatch == null) {
+            startNewBatch();
+        } else if (!currentBatch.hasRoomFor(batchSize)) {
+
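The append contract spelled out in the Javadoc above (all records of one call land in the same batch, a stale epoch yields `Long.MAX_VALUE`, an oversized append is rejected, and the linger clock starts with the first pending record) can be illustrated with a toy, single-threaded model. It is not the PR's class: `ToyBatchAccumulator` is hypothetical, records are plain strings whose size is assumed to be their length, there is no lock, memory pool, or compression, the `null` return for a failed allocation is not modelled, and it assumes, as the truncated `maybeAllocateBatch` suggests, that a batch without room is sealed and a new one started.

```java
import java.util.ArrayList;
import java.util.List;

// Toy, single-threaded model (hypothetical; not the Raft BatchAccumulator).
final class ToyBatchAccumulator {
    private final int epoch;
    private final int maxBatchSize;
    private final long lingerMs;
    private final List<List<String>> completed = new ArrayList<>();
    private List<String> currentBatch = null;
    private int currentBatchBytes = 0;
    private long nextOffset;
    private long lingerStartMs = -1L; // -1 means nothing is pending

    ToyBatchAccumulator(int epoch, long baseOffset, long lingerMs, int maxBatchSize) {
        this.epoch = epoch;
        this.nextOffset = baseOffset;
        this.lingerMs = lingerMs;
        this.maxBatchSize = maxBatchSize;
    }

    // Returns the offset of the last appended record.
    long append(int epoch, List<String> records, long nowMs) {
        if (epoch != this.epoch) {
            return Long.MAX_VALUE; // stale epoch: an offset that will never be committed
        }
        int size = 0;
        for (String record : records) {
            size += record.length(); // assumed size function
        }
        if (size > maxBatchSize) {
            throw new IllegalArgumentException("Batch size " + size + " exceeds " + maxBatchSize);
        }
        if (currentBatch != null && currentBatchBytes + size > maxBatchSize) {
            completeCurrentBatch(); // no room: seal the open batch
        }
        if (currentBatch == null) {
            currentBatch = new ArrayList<>();
            currentBatchBytes = 0;
        }
        if (lingerStartMs < 0) {
            lingerStartMs = nowMs; // linger clock starts with the first pending record
        }
        currentBatch.addAll(records); // the whole call stays in one batch
        currentBatchBytes += size;
        nextOffset += records.size();
        return nextOffset - 1;
    }

    // True once the oldest pending record has lingered for lingerMs.
    boolean needsDrain(long nowMs) {
        return lingerStartMs >= 0 && nowMs - lingerStartMs >= lingerMs;
    }

    List<List<String>> drain() {
        completeCurrentBatch();
        List<List<String>> out = new ArrayList<>(completed);
        completed.clear();
        lingerStartMs = -1L;
        return out;
    }

    private void completeCurrentBatch() {
        if (currentBatch != null && !currentBatch.isEmpty()) {
            completed.add(currentBatch);
        }
        currentBatch = null;
        currentBatchBytes = 0;
    }
}
```

Keeping each call's records together is what makes the "all committed or none" guarantee possible: a batch boundary never falls inside a single `append`.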

[GitHub] [kafka] bbejeck commented on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

bbejeck commented on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715558972 >We probably only want to publish scaladoc for kafka streams since it's the only module that has a public Scala API. ack, working on that now

[GitHub] [kafka] mjsax commented on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

mjsax commented on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715556674 @ijuma Correct! That is what KAFKA-9381 actually is all about. LGTM.

[GitHub] [kafka] ijuma commented on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

ijuma commented on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715554159 We probably only want to publish scaladoc for kafka streams since it's the only module that has a public Scala API.

[jira] [Updated] (KAFKA-10638) QueryableStateIntegrationTest fails due to stricter store checking

[ https://issues.apache.org/jira/browse/KAFKA-10638?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bill Bejeck updated KAFKA-10638:

Fix Version/s: 2.7.0

> QueryableStateIntegrationTest fails due to stricter store checking
> --
>
> Key: KAFKA-10638
> URL: https://issues.apache.org/jira/browse/KAFKA-10638
> Project: Kafka
> Issue Type: Bug
> Components: streams
> Affects Versions: 2.7.0
> Reporter: John Roesler
> Assignee: John Roesler
> Priority: Blocker
> Fix For: 2.7.0
>
>
> Observed:
> {code:java}
> org.apache.kafka.streams.errors.InvalidStateStoreException: Cannot get state store source-table because the stream thread is PARTITIONS_ASSIGNED, not RUNNING
> at org.apache.kafka.streams.state.internals.StreamThreadStateStoreProvider.stores(StreamThreadStateStoreProvider.java:81)
> at org.apache.kafka.streams.state.internals.WrappingStoreProvider.stores(WrappingStoreProvider.java:50)
> at org.apache.kafka.streams.state.internals.CompositeReadOnlyKeyValueStore.get(CompositeReadOnlyKeyValueStore.java:52)
> at org.apache.kafka.streams.integration.StoreQueryIntegrationTest.shouldQuerySpecificActivePartitionStores(StoreQueryIntegrationTest.java:200)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
> at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
> at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
> at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
> at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
> at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
> at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:61)
> at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)
> at org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)
> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)
> at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)
> at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)
> at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)
> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)
> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)
> at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)
> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)
> at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)
> at org.junit.runners.ParentRunner.run(ParentRunner.java:413)
> at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.runTestClass(JUnitTestClassExecutor.java:110)
> at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:58)
> at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:38)
> at org.gradle.api.internal.tasks.testing.junit.AbstractJUnitTestClassProcessor.processTestClass(AbstractJUnitTestClassProcessor.java:62)
> at org.gradle.api.internal.tasks.testing.SuiteTestClassProcessor.processTestClass(SuiteTestClassProcessor.java:51)
> at sun.reflect.GeneratedMethodAccessor23.invoke(Unknown Source)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:36)
> at org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:24)
> at org.gradle.internal.dispatch.ContextClassLoaderDispatch.dispatch(ContextClassLoaderDispatch.java:33)
> at org.gradle.internal.dispatch.ProxyDispatchAdapter$DispatchingInvocationHandler.invoke(ProxyDispatchAdapter.java:94)
> at com.sun.proxy.$Proxy2.processTestClass(Unknown Source)
> at org.gradle.api.internal.tasks.testing.worker.TestWorker.processTestClass(TestWorker.java:119)
> at

[GitHub] [kafka] bbejeck commented on pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

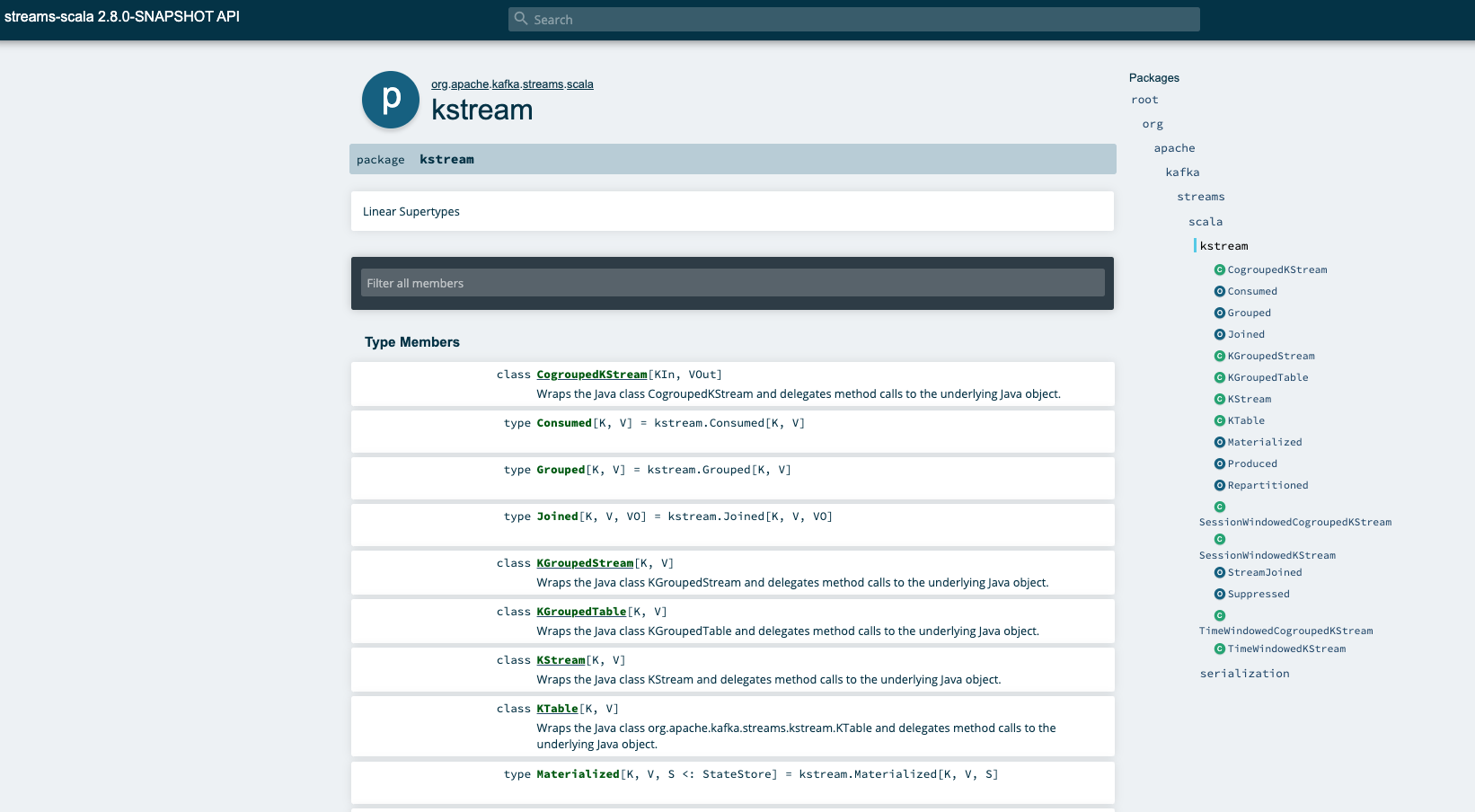

bbejeck commented on pull request #9486: URL: https://github.com/apache/kafka/pull/9486#issuecomment-715526717 Results of rendering contents of scaladoc jar

[GitHub] [kafka] bbejeck commented on a change in pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

bbejeck commented on a change in pull request #9486:

URL: https://github.com/apache/kafka/pull/9486#discussion_r511082358

##

File path: build.gradle

##

@@ -1439,7 +1440,7 @@ project(':streams:streams-scala') {
}
jar {
-dependsOn 'copyDependantLibs'
+dependsOn 'copyDependantLibs' , 'scaladocJar'

Review comment:

@ijuma good point, I've removed it and still get the required doc jars


[jira] [Commented] (KAFKA-10638) QueryableStateIntegrationTest fails due to stricter store checking

[ https://issues.apache.org/jira/browse/KAFKA-10638?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219873#comment-17219873 ]

John Roesler commented on KAFKA-10638:

--

Hey [~bbejeck], I've marked this as a 2.7.0 blocker. It's a regression (due to KAFKA-10598), but fortunately only a regression in the store query test. Still, we should get the fix in.


[jira] [Created] (KAFKA-10638) QueryableStateIntegrationTest fails due to stricter store checking

John Roesler created KAFKA-10638:

Summary: QueryableStateIntegrationTest fails due to stricter store checking
Key: KAFKA-10638
URL: https://issues.apache.org/jira/browse/KAFKA-10638
Project: Kafka
Issue Type: Bug
Components: streams
Affects Versions: 2.7.0
Reporter: John Roesler
Assignee: John Roesler

Observed:
{code:java}
org.apache.kafka.streams.errors.InvalidStateStoreException: Cannot get state store source-table because the stream thread is PARTITIONS_ASSIGNED, not RUNNING
at org.apache.kafka.streams.state.internals.StreamThreadStateStoreProvider.stores(StreamThreadStateStoreProvider.java:81)
at org.apache.kafka.streams.state.internals.WrappingStoreProvider.stores(WrappingStoreProvider.java:50)
at org.apache.kafka.streams.state.internals.CompositeReadOnlyKeyValueStore.get(CompositeReadOnlyKeyValueStore.java:52)
at org.apache.kafka.streams.integration.StoreQueryIntegrationTest.shouldQuerySpecificActivePartitionStores(StoreQueryIntegrationTest.java:200)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:61)
at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)
at org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)
at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)
at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)
at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)
at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)
at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)
at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)
at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)
at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)
at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)
at org.junit.runners.ParentRunner.run(ParentRunner.java:413)
at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.runTestClass(JUnitTestClassExecutor.java:110)
at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:58)
at org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:38)
at org.gradle.api.internal.tasks.testing.junit.AbstractJUnitTestClassProcessor.processTestClass(AbstractJUnitTestClassProcessor.java:62)
at org.gradle.api.internal.tasks.testing.SuiteTestClassProcessor.processTestClass(SuiteTestClassProcessor.java:51)
at sun.reflect.GeneratedMethodAccessor23.invoke(Unknown Source)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:36)
at org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:24)
at org.gradle.internal.dispatch.ContextClassLoaderDispatch.dispatch(ContextClassLoaderDispatch.java:33)
at org.gradle.internal.dispatch.ProxyDispatchAdapter$DispatchingInvocationHandler.invoke(ProxyDispatchAdapter.java:94)
at com.sun.proxy.$Proxy2.processTestClass(Unknown Source)
at org.gradle.api.internal.tasks.testing.worker.TestWorker.processTestClass(TestWorker.java:119)
at sun.reflect.GeneratedMethodAccessor22.invoke(Unknown Source)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:36)
at
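The failure comes from querying a store while the stream thread is still PARTITIONS_ASSIGNED rather than RUNNING. One common way for a test to tolerate that window is to retry the query until it stops throwing or a deadline passes. This is a generic sketch, not necessarily the fix adopted for KAFKA-10638: `RetryUntilQueryable` and its parameters are hypothetical, and in a Streams test the supplier would presumably wrap the store lookup, with `InvalidStateStoreException.class` as the retriable type.

```java
import java.util.function.Supplier;

// Hypothetical test helper: calls `query` until it returns normally, or rethrows once
// the failure is not of the `retriable` type or `timeoutMs` has elapsed.
final class RetryUntilQueryable {
    private RetryUntilQueryable() { }

    static <T> T retryUntil(Supplier<T> query,
                            Class<? extends RuntimeException> retriable,
                            long timeoutMs,
                            long backoffMs) {
        final long deadline = System.currentTimeMillis() + timeoutMs;
        while (true) {
            try {
                return query.get();
            } catch (RuntimeException e) {
                // Unrelated failures, or retriable ones past the deadline, propagate.
                if (!retriable.isInstance(e) || System.currentTimeMillis() >= deadline) {
                    throw e;
                }
            }
            try {
                Thread.sleep(backoffMs);
            } catch (InterruptedException ie) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                throw new IllegalStateException("Interrupted while waiting for the query to succeed", ie);
            }
        }
    }
}
```

Retrying only the named exception type keeps unrelated failures visible instead of hiding them behind the timeout.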

[GitHub] [kafka] ijuma commented on a change in pull request #9486: KAFKA-9381: Fix releaseTarGz to publish valid scaladoc files

ijuma commented on a change in pull request #9486:

URL: https://github.com/apache/kafka/pull/9486#discussion_r511073744

##

File path: build.gradle

##

@@ -1439,7 +1440,7 @@ project(':streams:streams-scala') {
}
jar {
-dependsOn 'copyDependantLibs'
+dependsOn 'copyDependantLibs' , 'scaladocJar'

Review comment:

Hmm, seems weird to require scaladoc when building jars.


[jira] [Commented] (KAFKA-10633) Constant probing rebalances in Streams 2.6

[ https://issues.apache.org/jira/browse/KAFKA-10633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219866#comment-17219866 ] Bradley Peterson commented on KAFKA-10633: -- Thank you, Sophie! I'll do a build with the PR for KAFKA-10455 and confirm that it fixes this issue.

[jira] [Updated] (KAFKA-10284) Group membership update due to static member rejoin should be persisted

[ https://issues.apache.org/jira/browse/KAFKA-10284?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] John Roesler updated KAFKA-10284: Fix Version/s: 2.5.2

> Group membership update due to static member rejoin should be persisted
> Key: KAFKA-10284
> URL: https://issues.apache.org/jira/browse/KAFKA-10284
> Project: Kafka
> Issue Type: Bug
> Components: consumer
> Affects Versions: 2.3.0, 2.4.0, 2.5.0, 2.6.0
> Reporter: Boyang Chen
> Assignee: feyman
> Priority: Critical
> Labels: help-wanted
> Fix For: 2.7.0, 2.5.2, 2.6.1
> Attachments: How to reproduce the issue in KAFKA-10284.md
>
> When a known static member rejoins, we update its corresponding member.id without triggering a new rebalance. This avoids an unnecessary rebalance for static membership and also fences any client that still uses the old member.id.
> The bug is that we don't actually persist the membership update. If no subsequent rebalance is triggered, the new member.id is lost during group coordinator migration, which brings the zombie member identity back.
> Credit for finding the bug goes to [~hachikuji].
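The failure mode described in this ticket can be illustrated with a toy model. This is a hypothetical sketch, not the group coordinator's actual implementation. `ToyGroupCoordinator`, `zombie_fenced_after_migration`, and the `persist_on_rejoin` flag are invented names. The model assumes only one thing: after a coordinator migration, membership is rebuilt solely from what was persisted.

```python
import uuid


class ToyGroupCoordinator:
    """Hypothetical, heavily simplified group coordinator (not Kafka's implementation).

    `members` is the in-memory map group.instance.id -> member.id.
    `log` is the persisted copy that a new coordinator reloads after migration.
    """

    def __init__(self, log=None, persist_on_rejoin=False):
        self.log = dict(log or {})
        self.members = dict(self.log)  # in-memory state rebuilt from the persisted state
        self.persist_on_rejoin = persist_on_rejoin  # False models the KAFKA-10284 bug

    def join(self, instance_id):
        """Static member (re)join: hand out a fresh member.id without a rebalance."""
        member_id = f"{instance_id}-{uuid.uuid4()}"
        self.members[instance_id] = member_id
        if self.persist_on_rejoin:
            self.log = dict(self.members)  # the fix: persist the update right away
        return member_id

    def rebalance(self):
        """A completed rebalance writes the current membership to the log."""
        self.log = dict(self.members)

    def is_fenced(self, instance_id, member_id):
        return self.members.get(instance_id) != member_id

    def migrate(self):
        """Coordinator migration: only the persisted state survives."""
        return ToyGroupCoordinator(log=self.log, persist_on_rejoin=self.persist_on_rejoin)


def zombie_fenced_after_migration(persist_on_rejoin):
    coord = ToyGroupCoordinator(persist_on_rejoin=persist_on_rejoin)
    old_id = coord.join("instance-a")
    coord.rebalance()          # old_id is now persisted
    coord.join("instance-a")   # static rejoin; no rebalance follows
    assert coord.is_fenced("instance-a", old_id)  # fenced while the coordinator stays put
    coord = coord.migrate()    # coordinator moves before any rebalance
    return coord.is_fenced("instance-a", old_id)
```

With the bug modeled, `zombie_fenced_after_migration(False)` returns `False`: after migration the stale member.id is accepted again. Persisting the update on rejoin makes it return `True`.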

[GitHub] [kafka] vvcephei commented on pull request #9414: KAFKA-10585: Kafka Streams should clean up the state store directory from cleanup

vvcephei commented on pull request #9414: URL: https://github.com/apache/kafka/pull/9414#issuecomment-715502309 Thanks for that fix, @dongjinleekr ! Unfortunately, there are still 61 test failures: https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-9414/6/?cloudbees-analytics-link=scm-reporting%2Fstage%2Ffailure#showFailuresLink Do you mind taking another look?

[jira] [Commented] (KAFKA-10633) Constant probing rebalances in Streams 2.6

[ https://issues.apache.org/jira/browse/KAFKA-10633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219861#comment-17219861 ] A. Sophie Blee-Goldman commented on KAFKA-10633: A thread should always reset its scheduled rebalance after triggering one, and it only sets the rebalance schedule when it receives its assignment after a rebalance. The probing rebalance is always scheduled for 10 minutes past the current time. So if you see the same time printed in the "Triggering the followup rebalance" and "Requested to schedule probing rebalance" messages, the member did not actually go through a rebalance; it just received its previous assignment directly from the broker. Also, a rebalance will never complete in under a second. Seeing `Triggering the followup rebalance scheduled for 1603323868771 ms` followed immediately by `Requested to schedule probing rebalance for 1603323868771 ms` therefore seems to confirm that it did not in fact go through a rebalance. [~thebearmayor] this issue is fixed in 2.7.0 and 2.6.1. I'm not sure when 2.6.1 will be released, but the 2.7.0 release is currently in progress, so it should hopefully be available soon. Apologies for our oversight in designing KIP-441.
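The timestamp reasoning above can be illustrated with a toy model. This is a hypothetical sketch, not Kafka Streams code: `ToyStreamThread`, `run`, and `PROBING_INTERVAL_MS` are invented names. The model also assumes that a replayed previous assignment carries the old probing timestamp with it. It shows the contrast between two cases:

- A genuine rebalance always ends with a new, later timestamp.
- A replayed assignment re-arms the same past timestamp, which fires again on the next poll.

```python
PROBING_INTERVAL_MS = 10 * 60 * 1000  # probing rebalances are scheduled 10 minutes out


class ToyStreamThread:
    """Hypothetical model of a thread's follow-up rebalance schedule (not Streams code)."""

    def __init__(self, scheduled_ms):
        self.scheduled_ms = scheduled_ms  # set by an earlier, genuine rebalance
        self.log = []

    def on_assignment(self, scheduled_ms):
        # The schedule is only (re)set when an assignment is received.
        self.scheduled_ms = scheduled_ms
        self.log.append(f"Requested to schedule probing rebalance for {scheduled_ms} ms.")

    def maybe_trigger(self, now_ms):
        if self.scheduled_ms is not None and now_ms >= self.scheduled_ms:
            self.log.append(f"Triggering the followup rebalance scheduled for {self.scheduled_ms} ms.")
            self.scheduled_ms = None  # always reset after triggering
            return True
        return False


def run(real_rebalance, start_ms=1603323868771, steps=3):
    thread = ToyStreamThread(start_ms)
    now = start_ms
    for _ in range(steps):
        if thread.maybe_trigger(now):
            if real_rebalance:
                now += 2_000  # a real rebalance takes well over a second
                thread.on_assignment(now + PROBING_INTERVAL_MS)  # fresh time from the leader
            else:
                thread.on_assignment(start_ms)  # previous assignment replayed with the old timestamp
        now += 100  # next poll, a fraction of a second later
    return thread.log
```

`run(False)` reproduces the tight loop: alternating trigger/schedule messages that all carry the same timestamp. `run(True)` logs one trigger, followed by a schedule roughly 10 minutes later.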

[jira] [Commented] (KAFKA-10633) Constant probing rebalances in Streams 2.6

[ https://issues.apache.org/jira/browse/KAFKA-10633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17219859#comment-17219859 ] A. Sophie Blee-Goldman commented on KAFKA-10633: I'm like 99% sure this will turn out to be KAFKA-10455

[GitHub] [kafka] vvcephei merged pull request #9481: KAFKA-10284: Disable static membership test in 2.4

vvcephei merged pull request #9481: URL: https://github.com/apache/kafka/pull/9481

[GitHub] [kafka] vvcephei commented on pull request #9481: KAFKA-10284: Disable static membership test in 2.4

vvcephei commented on pull request #9481: URL: https://github.com/apache/kafka/pull/9481#issuecomment-715499343 Thanks @abbccdda !

[GitHub] [kafka] vvcephei commented on a change in pull request #9481: KAFKA-10284: Disable static membership test in 2.4

vvcephei commented on a change in pull request #9481: URL: https://github.com/apache/kafka/pull/9481#discussion_r511058225

## File path: tests/kafkatest/tests/streams/streams_static_membership_test.py
## @@ -48,6 +49,7 @@ def __init__(self, test_context):
                throughput=1000,
                acks=1)

+@ignore

Review comment:
```suggestion
# This test fails due to a bug that is fixed in 2.5+ (KAFKA-10284). We opted not to backport
# the fix to 2.4 and instead marked this test as ignored. If desired, the fix can be backported,
# but it is non-trivial to do so.
@ignore
```

[GitHub] [kafka] rajinisivaram commented on pull request #9382: KAFKA-10554; Perform follower truncation based on diverging epochs in Fetch response

rajinisivaram commented on pull request #9382: URL: https://github.com/apache/kafka/pull/9382#issuecomment-715493391 @hachikuji Thanks for the review, have addressed the comments.

[GitHub] [kafka] rajinisivaram commented on a change in pull request #9382: KAFKA-10554; Perform follower truncation based on diverging epochs in Fetch response