[GitHub] [kafka] satishd commented on a change in pull request #10173: KAFKA-9548 Added SPIs and public classes/interfaces introduced in KIP-405 for tiered storage feature in Kafka.

satishd commented on a change in pull request #10173:

URL: https://github.com/apache/kafka/pull/10173#discussion_r585333787

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogSegmentMetadata.java

##

@@ -0,0 +1,283 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.apache.kafka.common.annotation.InterfaceStability;

+

+import java.io.Serializable;

+import java.util.Collections;

+import java.util.Map;

+import java.util.NavigableMap;

+import java.util.Objects;

+import java.util.concurrent.ConcurrentSkipListMap;

+

+/**

+ * It describes the metadata about a topic partition's remote log segment in

the remote storage. This is uniquely

+ * represented with {@link RemoteLogSegmentId}.

+ *

+ * New instance is always created with the state as {@link

RemoteLogSegmentState#COPY_SEGMENT_STARTED}. This can be

+ * updated by applying {@link RemoteLogSegmentMetadataUpdate} for the

respective {@link RemoteLogSegmentId} of the

+ * {@code RemoteLogSegmentMetadata}.

+ */

+@InterfaceStability.Evolving

+public class RemoteLogSegmentMetadata implements Serializable {

Review comment:

No, this is not strictly needed.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10173: KAFKA-9548 Added SPIs and public classes/interfaces introduced in KIP-405 for tiered storage feature in Kafka.

satishd commented on a change in pull request #10173:

URL: https://github.com/apache/kafka/pull/10173#discussion_r585282329

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogMetadataManager.java

##

@@ -0,0 +1,196 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.apache.kafka.common.Configurable;

+import org.apache.kafka.common.TopicIdPartition;

+import org.apache.kafka.common.annotation.InterfaceStability;

+

+import java.io.Closeable;

+import java.util.Iterator;

+import java.util.Map;

+import java.util.Optional;

+import java.util.Set;

+

+/**

+ * This interface provides storing and fetching remote log segment metadata

with strongly consistent semantics.

+ *

+ * This class can be plugged in to Kafka cluster by adding the implementation

class as

+ * remote.log.metadata.manager.class.name property value. There

is an inbuilt implementation backed by

+ * topic storage in the local cluster. This is used as the default

implementation if

+ * remote.log.metadata.manager.class.name is not configured.

+ *

+ *

+ * remote.log.metadata.manager.class.path property is about the class path of the RemoteLogStorageManager

+ * implementation. If specified, the RemoteLogStorageManager implementation and its dependent libraries will be loaded

+ * by a dedicated classloader which searches this class path before the Kafka broker class path. The syntax of this

+ * parameter is the same as the standard Java class path string.

+ *

+ *

+ * remote.log.metadata.manager.listener.name property is about

listener name of the local broker to which

+ * it should get connected if needed by RemoteLogMetadataManager

implementation. When this is configured all other

+ * required properties can be passed as properties with prefix of 'remote.log.metadata.manager.listener.'

+ *

+ * "cluster.id", "broker.id" and all other properties prefixed with

"remote.log.metadata." are passed when

+ * {@link #configure(Map)} is invoked on this instance.

+ *

+ */

+@InterfaceStability.Evolving

+public interface RemoteLogMetadataManager extends Configurable, Closeable {

+

+/**

+ * Stores {@link RemoteLogSegmentMetadata} with the containing {@link RemoteLogSegmentId} into {@link RemoteLogMetadataManager}.

+ *

+ * RemoteLogSegmentMetadata is identified by RemoteLogSegmentId.

+ *

+ * @param remoteLogSegmentMetadata metadata about the remote log segment.

+ * @throws RemoteStorageException if any storage-related errors occur.

+ */

+void putRemoteLogSegmentMetadata(RemoteLogSegmentMetadata

remoteLogSegmentMetadata) throws RemoteStorageException;

+

+/**

+ * This method is used to update the {@link RemoteLogSegmentMetadata}. Currently, it allows updating with the new

+ * state based on the life cycle of the segment. It can go through the state transitions below.

+ *

+ *

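+ * +---------------------+            +----------------------+

+ * |COPY_SEGMENT_STARTED |----------->|COPY_SEGMENT_FINISHED |

+ * +-------------------+-+            +--+-------------------+

+ *                     |                 |

+ *                     |                 |

+ *                     v                 v

+ *                  +--+-----------------+-+

+ *                  |DELETE_SEGMENT_STARTED|

+ *                  +-----------+----------+

+ *                              |

+ *                              |

+ *                              v

+ *                  +-----------+-----------+

+ *                  |DELETE_SEGMENT_FINISHED|

+ *                  +-----------------------+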

+ *

+ *

+ * {@link RemoteLogSegmentState#COPY_SEGMENT_STARTED} - This state indicates that the segment copying to remote storage is started but not yet finished.

+ * {@link RemoteLogSegmentState#COPY_SEGMENT_FINISHED} - This state indicates that the segment copying to remote storage is finished.

+ *

+ * The leader broker copies the log segments to the remote storage and

puts the remote log segment metadata with the

+ * state as
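The segment life cycle described in the Javadoc above can be sketched as a small transition table. This is only an illustration: `SegmentState` and `SegmentStateTransitions.canTransition` are hypothetical names, not the `RemoteLogSegmentState` API from the pull request, and the allowed edges are read off the diagram (including the direct edge from COPY_SEGMENT_STARTED to DELETE_SEGMENT_STARTED).

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for RemoteLogSegmentState; the constants mirror the diagram only.
enum SegmentState {
    COPY_SEGMENT_STARTED,
    COPY_SEGMENT_FINISHED,
    DELETE_SEGMENT_STARTED,
    DELETE_SEGMENT_FINISHED
}

final class SegmentStateTransitions {
    // Allowed next states for each state, as read from the quoted diagram.
    private static final Map<SegmentState, Set<SegmentState>> ALLOWED =
            new EnumMap<>(SegmentState.class);

    static {
        ALLOWED.put(SegmentState.COPY_SEGMENT_STARTED,
                EnumSet.of(SegmentState.COPY_SEGMENT_FINISHED, SegmentState.DELETE_SEGMENT_STARTED));
        ALLOWED.put(SegmentState.COPY_SEGMENT_FINISHED,
                EnumSet.of(SegmentState.DELETE_SEGMENT_STARTED));
        ALLOWED.put(SegmentState.DELETE_SEGMENT_STARTED,
                EnumSet.of(SegmentState.DELETE_SEGMENT_FINISHED));
        // Terminal state: no outgoing transitions.
        ALLOWED.put(SegmentState.DELETE_SEGMENT_FINISHED,
                EnumSet.noneOf(SegmentState.class));
    }

    private SegmentStateTransitions() { }

    // Returns true when the diagram has an arrow from 'from' to 'to'.
    static boolean canTransition(SegmentState from, SegmentState to) {
        return ALLOWED.get(from).contains(to);
    }
}
```

An implementation of the update method could use a check of this kind to reject an update that moves a segment backwards, for example from DELETE_SEGMENT_STARTED to COPY_SEGMENT_FINISHED. Whether the real implementation validates transitions this way is not stated in the quoted diff.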

[GitHub] [kafka] satishd commented on a change in pull request #10173: KAFKA-9548 Added SPIs and public classes/interfaces introduced in KIP-405 for tiered storage feature in Kafka.

satishd commented on a change in pull request #10173:

URL: https://github.com/apache/kafka/pull/10173#discussion_r585331289

##

File path: clients/src/main/java/org/apache/kafka/common/TopicIdPartition.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common;

+

+import java.io.Serializable;

+import java.util.Objects;

+import java.util.UUID;

+

+/**

+ * This represents universally unique identifier with topic id for a topic

partition. This makes sure that topics

+ * recreated with the same name will always have unique topic identifiers.

+ */

+public class TopicIdPartition implements Serializable {

Review comment:

Sure, I will open a follow-up PR once this class is updated with Uuid.

[jira] [Commented] (KAFKA-12393) Document multi-tenancy considerations

[ https://issues.apache.org/jira/browse/KAFKA-12393?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17293471#comment-17293471 ]

ASF GitHub Bot commented on KAFKA-12393:

miguno commented on pull request #334:

URL: https://github.com/apache/kafka-site/pull/334#issuecomment-788696584

@bbejeck wrote in https://github.com/apache/kafka-site/pull/334#pullrequestreview-600990037:

> Also, @miguno, can you create an identical PR to go against docs in AK trunk?

Yes, I will do this once the content review of this PR is completed.

> Document multi-tenancy considerations

> -

>

> Key: KAFKA-12393

> URL: https://issues.apache.org/jira/browse/KAFKA-12393

> Project: Kafka

> Issue Type: Bug

> Components: documentation

> Reporter: Michael G. Noll

> Assignee: Michael G. Noll

> Priority: Minor

>

> We should provide an overview of multi-tenancy consideration (e.g., user

> spaces, security) as the current documentation lacks such information.

-- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] satishd commented on a change in pull request #10173: KAFKA-9548 Added SPIs and public classes/interfaces introduced in KIP-405 for tiered storage feature in Kafka.

satishd commented on a change in pull request #10173:

URL: https://github.com/apache/kafka/pull/10173#discussion_r585283720

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogSegmentMetadata.java

##

@@ -0,0 +1,283 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.apache.kafka.common.annotation.InterfaceStability;

+

+import java.io.Serializable;

+import java.util.Collections;

+import java.util.Map;

+import java.util.NavigableMap;

+import java.util.Objects;

+import java.util.concurrent.ConcurrentSkipListMap;

+

+/**

+ * It describes the metadata about a topic partition's remote log segment in

the remote storage. This is uniquely

+ * represented with {@link RemoteLogSegmentId}.

+ *

+ * New instance is always created with the state as {@link

RemoteLogSegmentState#COPY_SEGMENT_STARTED}. This can be

+ * updated by applying {@link RemoteLogSegmentMetadataUpdate} for the

respective {@link RemoteLogSegmentId} of the

+ * {@code RemoteLogSegmentMetadata}.

+ */

+@InterfaceStability.Evolving

+public class RemoteLogSegmentMetadata implements Serializable {

Review comment:

It is not strictly required. I was using Java serialization earlier (POC)

before I added Kafka protocol serdes for remote log segment or partition

metadata.

[GitHub] [kafka] satishd commented on a change in pull request #10173: KAFKA-9548 Added SPIs and public classes/interfaces introduced in KIP-405 for tiered storage feature in Kafka.

satishd commented on a change in pull request #10173:

URL: https://github.com/apache/kafka/pull/10173#discussion_r585269658

##

File path: clients/src/main/java/org/apache/kafka/common/TopicIdPartition.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common;

+

+import java.io.Serializable;

+import java.util.Objects;

+import java.util.UUID;

+

+/**

+ * This represents universally unique identifier with topic id for a topic

partition. This makes sure that topics

+ * recreated with the same name will always have unique topic identifiers.

+ */

+public class TopicIdPartition implements Serializable {

Review comment:

Sure, I will open a follow-up PR once this class is updated with Uuid.

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogSegmentMetadata.java

##

@@ -0,0 +1,283 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.apache.kafka.common.annotation.InterfaceStability;

+

+import java.io.Serializable;

+import java.util.Collections;

+import java.util.Map;

+import java.util.NavigableMap;

+import java.util.Objects;

+import java.util.concurrent.ConcurrentSkipListMap;

+

+/**

+ * It describes the metadata about a topic partition's remote log segment in

the remote storage. This is uniquely

+ * represented with {@link RemoteLogSegmentId}.

+ *

+ * New instance is always created with the state as {@link

RemoteLogSegmentState#COPY_SEGMENT_STARTED}. This can be

+ * updated by applying {@link RemoteLogSegmentMetadataUpdate} for the

respective {@link RemoteLogSegmentId} of the

+ * {@code RemoteLogSegmentMetadata}.

+ */

+@InterfaceStability.Evolving

+public class RemoteLogSegmentMetadata implements Serializable {

Review comment:

It is not strictly required. I was using Java serialization earlier

before I added Kafka protocol serdes for remote log segment or partition

metadata.

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogMetadataManager.java

##

@@ -0,0 +1,196 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *    http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.apache.kafka.common.Configurable;

+import org.apache.kafka.common.TopicIdPartition;

+import org.apache.kafka.common.annotation.InterfaceStability;

+

+import java.io.Closeable;

+import java.util.Iterator;

+import java.util.Map;

+import java.util.Optional;

+import java.util.Set;

+

+/**

+ * This interface provides storing and fetching remote log segment metadata

with strongly consistent semantics.

+ *

+ * This class can be

[GitHub] [kafka] chia7712 commented on a change in pull request #10223: KAFKA-12394: Return `TOPIC_AUTHORIZATION_FAILED` in delete topic response if no describe permission

chia7712 commented on a change in pull request #10223:

URL: https://github.com/apache/kafka/pull/10223#discussion_r585322244

##

File path: core/src/main/scala/kafka/server/KafkaApis.scala

##

@@ -1884,20 +1884,26 @@ class KafkaApis(val requestChannel: RequestChannel,

val authorizedDeleteTopics =

authHelper.filterByAuthorized(request.context, DELETE, TOPIC,

results.asScala.filter(result => result.name() != null))(_.name)

results.forEach { topic =>

-val unresolvedTopicId = !(topic.topicId() == Uuid.ZERO_UUID) &&

topic.name() == null

- if (!config.usesTopicId &&

topicIdsFromRequest.contains(topic.topicId)) {

- topic.setErrorCode(Errors.UNSUPPORTED_VERSION.code)

- topic.setErrorMessage("Topic IDs are not supported on the server.")

- } else if (unresolvedTopicId)

- topic.setErrorCode(Errors.UNKNOWN_TOPIC_ID.code)

- else if (topicIdsFromRequest.contains(topic.topicId) &&

!authorizedDescribeTopics(topic.name))

- topic.setErrorCode(Errors.UNKNOWN_TOPIC_ID.code)

- else if (!authorizedDeleteTopics.contains(topic.name))

- topic.setErrorCode(Errors.TOPIC_AUTHORIZATION_FAILED.code)

- else if (!metadataCache.contains(topic.name))

- topic.setErrorCode(Errors.UNKNOWN_TOPIC_OR_PARTITION.code)

- else

- toDelete += topic.name

+val unresolvedTopicId = topic.topicId() != Uuid.ZERO_UUID &&

topic.name() == null

+if (!config.usesTopicId &&

topicIdsFromRequest.contains(topic.topicId)) {

+ topic.setErrorCode(Errors.UNSUPPORTED_VERSION.code)

+ topic.setErrorMessage("Topic IDs are not supported on the server.")

+} else if (unresolvedTopicId) {

+ topic.setErrorCode(Errors.UNKNOWN_TOPIC_ID.code)

+} else if (topicIdsFromRequest.contains(topic.topicId) &&

!authorizedDescribeTopics(topic.name)) {

+ // Because the client does not have Describe permission, the name

should

+ // not be returned in the response. Note, however, that we do not

consider

+ // the topicId itself to be sensitive, so there is no reason to

obscure

+ // this case with `UNKNOWN_TOPIC_ID`.

+ topic.setName(null)

+ topic.setErrorCode(Errors.TOPIC_AUTHORIZATION_FAILED.code)

+} else if (!authorizedDeleteTopics.contains(topic.name)) {

Review comment:

According to https://github.com/apache/kafka/pull/10184#discussion_r585086425,

should it handle the case `name provided, topic missing, describable =>

UNKNOWN_TOPIC_OR_PARTITION`?
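The branch ordering in the quoted hunk can be sketched as a plain decision function, which makes it easier to see which case wins. This is a simplified sketch under stated assumptions: `DeleteOutcome`, `DeleteTopicDecision.classify` and its boolean parameters are hypothetical names, each broker-side lookup is reduced to a boolean, and the last three branches follow the removed lines of the diff because the replacement lines are cut off in the quoted hunk.

```java
// Hypothetical outcome labels; the names mirror the Errors constants quoted in the diff.
enum DeleteOutcome {
    UNSUPPORTED_VERSION,
    UNKNOWN_TOPIC_ID,
    TOPIC_AUTHORIZATION_FAILED,
    UNKNOWN_TOPIC_OR_PARTITION,
    DELETE
}

final class DeleteTopicDecision {
    private DeleteTopicDecision() { }

    // Assumed simplification: requestedById stands in for
    // topicIdsFromRequest.contains(topic.topicId) with a non-zero topic ID,
    // and nameResolved stands in for topic.name() != null.
    static DeleteOutcome classify(boolean brokerUsesTopicIds,
                                  boolean requestedById,
                                  boolean nameResolved,
                                  boolean canDescribe,
                                  boolean canDelete,
                                  boolean inMetadataCache) {
        if (!brokerUsesTopicIds && requestedById) {
            return DeleteOutcome.UNSUPPORTED_VERSION;
        } else if (requestedById && !nameResolved) {
            return DeleteOutcome.UNKNOWN_TOPIC_ID;
        } else if (requestedById && !canDescribe) {
            // The diff also clears the topic name here so it is not returned.
            return DeleteOutcome.TOPIC_AUTHORIZATION_FAILED;
        } else if (!canDelete) {
            return DeleteOutcome.TOPIC_AUTHORIZATION_FAILED;
        } else if (!inMetadataCache) {
            return DeleteOutcome.UNKNOWN_TOPIC_OR_PARTITION;
        } else {
            return DeleteOutcome.DELETE;
        }
    }
}
```

Under this ordering, the delete-authorization check runs before the existence check, so a topic requested by name that is describable but not deletable and missing from the metadata cache is classified as TOPIC_AUTHORIZATION_FAILED. That appears to be the case the review comment is asking about.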

[GitHub] [kafka] dongjinleekr commented on pull request #10244: KAFKA-12399: Add log4j2 Appender

dongjinleekr commented on pull request #10244:

URL: https://github.com/apache/kafka/pull/10244#issuecomment-788692068

@omkreddy @huxihx Could you have a look? :smile:

[GitHub] [kafka] dongjinleekr opened a new pull request #10244: KAFKA-12399: Add log4j2 Appender

dongjinleekr opened a new pull request #10244:

URL: https://github.com/apache/kafka/pull/10244

This PR implements `log4j2-appender`, a log4j2 equivalent of the traditional `log4j-appender`. All `log4j-appender` configuration properties are supported, with some additions:

1. `brokerList` is deprecated for consistency with the other CLI tools. `bootstrapServers` is added instead.

2. `requiredNumAcks` is deprecated for consistency with `Producer`'s `Properties` instance. `acks` is added instead.

3. A new configuration option, `producerClass`, is added. Any `Producer` implementation with the `Properties` argument is supported. The unit test itself uses this feature with `MockProducer`.

4. In `log4j-appender`, the default values of `retries`, `requiredNumAcks`, `deliveryTimeoutMs`, `lingerMs`, and `batchSize` are redundantly defined in the `Log4jAppender` implementation. Since their default values are already defined in `ProducerConfig`, `log4j2-appender` does not define their default values.
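The renamed options in items 1, 2 and 4 can be illustrated with a small mapping from appender options to producer properties. This is a sketch, not the PR's code: `AppenderOptions.toProducerProperties` is a hypothetical helper, and the fallback from the new option names to the deprecated ones is an assumption about how such an appender might behave. Only the producer keys `bootstrap.servers` and `acks` are real Kafka configuration names.

```java
import java.util.Map;
import java.util.Properties;

final class AppenderOptions {
    private AppenderOptions() { }

    // Builds producer properties from appender options (hypothetical helper).
    // New names win; deprecated names are used only as a fallback (assumed behavior).
    static Properties toProducerProperties(Map<String, String> options) {
        Properties props = new Properties();

        String servers = options.getOrDefault("bootstrapServers", options.get("brokerList"));
        if (servers != null) {
            props.setProperty("bootstrap.servers", servers);
        }

        String acks = options.getOrDefault("acks", options.get("requiredNumAcks"));
        if (acks != null) {
            props.setProperty("acks", acks);
        }

        // Options that are not set are left out, so the producer's own defaults apply.
        return props;
    }
}
```

Leaving unset options out of the `Properties` object matches the intent of item 4: defaults live in one place, the producer configuration, instead of being repeated in the appender.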

[jira] [Commented] (KAFKA-8154) Buffer Overflow exceptions between brokers and with clients

[ https://issues.apache.org/jira/browse/KAFKA-8154?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17293463#comment-17293463 ]

Gordon commented on KAFKA-8154:

---

Rushed, and grabbed the wrong file. Sorry about that. Added the kafka-clients jar, in case that saves you any effort.

> Buffer Overflow exceptions between brokers and with clients

> ---

>

> Key: KAFKA-8154

> URL: https://issues.apache.org/jira/browse/KAFKA-8154

> Project: Kafka

> Issue Type: Bug

> Components: clients

> Affects Versions: 2.1.0

> Reporter: Rajesh Nataraja

> Priority: Major

> Attachments: server.properties.txt

>

>

> https://github.com/apache/kafka/pull/6495

> https://github.com/apache/kafka/pull/5785

[jira] [Created] (KAFKA-12399) Add log4j2 Appender

Dongjin Lee created KAFKA-12399:

---

Summary: Add log4j2 Appender

Key: KAFKA-12399

URL: https://issues.apache.org/jira/browse/KAFKA-12399

Project: Kafka

Issue Type: Improvement

Components: logging

Reporter: Dongjin Lee

Assignee: Dongjin Lee

As a follow-up to KAFKA-9366, we have to provide a log4j2 counterpart to log4j-appender.

[jira] [Commented] (KAFKA-8206) A consumer can't discover new group coordinator when the cluster was partly restarted

[

https://issues.apache.org/jira/browse/KAFKA-8206?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17293424#comment-17293424

]

Ivan Yurchenko commented on KAFKA-8206:

---

Sorry, unfortunately, I didn't proceed to making a KIP. (BTW, I stopped seeing

this issue after some upgrade.)

> A consumer can't discover new group coordinator when the cluster was partly

> restarted

> -

>

> Key: KAFKA-8206

> URL: https://issues.apache.org/jira/browse/KAFKA-8206

> Project: Kafka

> Issue Type: Bug

>Affects Versions: 1.0.0, 2.0.0, 2.2.0

>Reporter: alex gabriel

>Priority: Critical

> Labels: needs-kip

>

> *A consumer can't discover new group coordinator when the cluster was partly

> restarted*

> Preconditions:

> I use Kafka server and Java kafka-client lib 2.2 version

> I have 2 Kafka nodes running locally (localhost:9092, localhost:9093) and 1

> ZK (localhost:2181)

> I have replication factor 2 for all my topics and

> '_unclean.leader.election.enable=true_' on both Kafka nodes.

> Steps to reproduce:

> 1) Start 2nodes (localhost:9092/localhost:9093)

> 2) Start consumer with 'bootstrap.servers=localhost:9092,localhost:9093'

> {noformat}

> // discovered group coordinator (0-node)

> 2019-04-09 16:23:18,963 INFO

> [org.apache.kafka.clients.consumer.internals.AbstractCoordinator$FindCoordinatorResponseHandler.onSuccess]

> - [Consumer clientId=events-consumer0, groupId=events-group-gabriel]

> Discovered group coordinator localhost:9092 (id: 2147483647 rack: null)>

> ...metadatacache is updated (2 nodes in the cluster list)

> 2019-04-09 16:23:18,928 DEBUG

> [org.apache.kafka.clients.NetworkClient$DefaultMetadataUpdater.maybeUpdate] -

> [Consumer clientId=events-consumer0, groupId=events-group-gabriel] Sending

> metadata request (type=MetadataRequest, topics=) to node localhost:9092

> (id: -1 rack: null)>

> 2019-04-09 16:23:18,940 DEBUG [org.apache.kafka.clients.Metadata.update] -

> Updated cluster metadata version 2 to MetadataCache{cluster=Cluster(id =

> P3pz1xU0SjK-Dhy6h2G5YA, nodes = [localhost:9092 (id: 0 rack: null),

> localhost:9093 (id: 1 rack: null)], partitions = [], controller =

> localhost:9092 (id: 0 rack: null))}>

> {noformat}

> 3) Shutdown 1-node (localhost:9093)

> {noformat}

> // metadata was updated to the 4 version (but for some reasons it still had 2

> alive nodes inside cluster)

> 2019-04-09 16:23:46,981 DEBUG [org.apache.kafka.clients.Metadata.update] -

> Updated cluster metadata version 4 to MetadataCache{cluster=Cluster(id =

> P3pz1xU0SjK-Dhy6h2G5YA, nodes = [localhost:9093 (id: 1 rack: null),

> localhost:9092 (id: 0 rack: null)], partitions = [Partition(topic =

> events-sorted, partition = 1, leader = 0, replicas = [0,1], isr = [0,1],

> offlineReplicas = []), Partition(topic = events-sorted, partition = 0, leader

> = 0, replicas = [0,1], isr = [0,1], offlineReplicas = [])], controller =

> localhost:9092 (id: 0 rack: null))}>

> //consumers thinks that node-1 is still alive and try to send coordinator

> lookup to it but failed

> 2019-04-09 16:23:46,981 INFO

> [org.apache.kafka.clients.consumer.internals.AbstractCoordinator$FindCoordinatorResponseHandler.onSuccess]

> - [Consumer clientId=events-consumer0, groupId=events-group-gabriel]

> Discovered group coordinator localhost:9093 (id: 2147483646 rack: null)>

> 2019-04-09 16:23:46,981 INFO

> [org.apache.kafka.clients.consumer.internals.AbstractCoordinator.markCoordinatorUnknown]

> - [Consumer clientId=events-consumer0, groupId=events-group-gabriel] Group

> coordinator localhost:9093 (id: 2147483646 rack: null) is unavailable or

> invalid, will attempt rediscovery>

> 2019-04-09 16:24:01,117 DEBUG

> [org.apache.kafka.clients.NetworkClient.handleDisconnections] - [Consumer

> clientId=events-consumer0, groupId=events-group-gabriel] Node 1 disconnected.>

> 2019-04-09 16:24:01,117 WARN

> [org.apache.kafka.clients.NetworkClient.processDisconnection] - [Consumer

> clientId=events-consumer0, groupId=events-group-gabriel] Connection to node 1

> (localhost:9093) could not be established. Broker may not be available.>

> // refreshing metadata again

> 2019-04-09 16:24:01,117 DEBUG

> [org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient$RequestFutureCompletionHandler.fireCompletion]

> - [Consumer clientId=events-consumer0, groupId=events-group-gabriel]

> Cancelled request with header RequestHeader(apiKey=FIND_COORDINATOR,

> apiVersion=2, clientId=events-consumer0, correlationId=112) due to node 1

> being disconnected>

> 2019-04-09 16:24:01,117 DEBUG

> [org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureCoordinatorReady]

> - [Consumer clientId=events-consumer0, groupId=events-group-gabriel]

> Coordinator discovery failed, refreshing metadata>

> // metadata

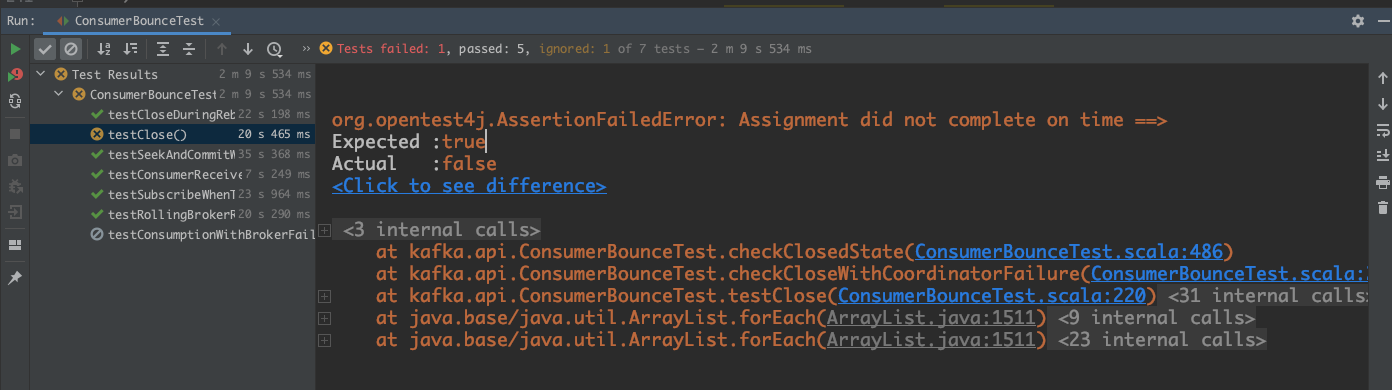

[GitHub] [kafka] dengziming opened a new pull request #10243: KAFKA-12398: Fix flaky test `ConsumerBounceTest.testClose`

dengziming opened a new pull request #10243:

URL: https://github.com/apache/kafka/pull/10243

*More detailed description of your change*

The test sometimes fails as follows:

We'd better use `TestUtils.waitUntilTrue` instead of waiting for 1 second, because 1 second is sometimes too long and sometimes too short.

*Summary of testing strategy (including rationale) for the feature or bug fix. Unit and/or integration tests are expected for any behaviour change and system tests should be considered for larger changes.*

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation

- [ ] Verify test coverage and CI build status

- [ ] Verify documentation (including upgrade notes)
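The idea behind replacing a fixed one-second wait with a polling wait can be sketched as follows. This is a standalone illustration, not Kafka's `TestUtils.waitUntilTrue`: the class `Polling`, the method `waitUntil`, and its parameters are hypothetical names chosen for the example.

```java
import java.util.function.BooleanSupplier;

final class Polling {
    private Polling() { }

    // Polls the condition until it holds or the timeout elapses.
    // Returns true as soon as the condition is met, false on timeout or interruption.
    static boolean waitUntil(BooleanSupplier condition, long timeoutMs, long pollIntervalMs) {
        long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(pollIntervalMs);
            } catch (InterruptedException e) {
                // Preserve the interrupt status for the caller and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }
}
```

Compared with a fixed sleep, a test using this pattern returns as soon as the condition holds, and it can afford a generous timeout because the full timeout is only spent when the condition never becomes true.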

[GitHub] [kafka] showuon opened a new pull request #10242: MINOR: format the revoking active log output

showuon opened a new pull request #10242:

URL: https://github.com/apache/kafka/pull/10242

Missed one new line symbol.

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation

- [ ] Verify test coverage and CI build status

- [ ] Verify documentation (including upgrade notes)

[jira] [Created] (KAFKA-12398) Fix flaky test `ConsumerBounceTest.testClose`

dengziming created KAFKA-12398:

--

Summary: Fix flaky test `ConsumerBounceTest.testClose`

Key: KAFKA-12398

URL: https://issues.apache.org/jira/browse/KAFKA-12398

Project: Kafka

Issue Type: Improvement

Reporter: dengziming

Assignee: dengziming

Attachments: image-2021-03-02-14-22-34-367.png

Sometimes it failed with the following error:

!image-2021-03-02-14-22-34-367.png!

[GitHub] [kafka] lbradstreet opened a new pull request #10241: MINOR: time and log producer state recovery phases

lbradstreet opened a new pull request #10241:

URL: https://github.com/apache/kafka/pull/10241

During a slow log recovery it's easy to think that loading `.snapshot` files is a multi-second process. Often it isn't the snapshot loading that takes most of the time, rather it's the time taken to further rebuild the producer state from segment files.

This PR times both snapshot load and segment recovery phases to better indicate what is taking time.

Example test output:

```
[2021-03-01 22:35:28,129] INFO [Log partition=foo-0, dir=/var/folders/cb/5my51vjd1js380qcr_v245bhgp/T/kafka-16876782135717603479] Reloading from producer snapshot and rebuilding producer state from offset 0
[2021-03-01 22:35:28,129] INFO [Log partition=foo-0, dir=/var/folders/cb/5my51vjd1js380qcr_v245bhgp/T/kafka-16876782135717603479] Producer state recovery took 0ms for snapshot load and 0ms for segment recovery from offset 0
[2021-03-01 22:35:28,131] INFO Completed load of Log(dir=/var/folders/cb/5my51vjd1js380qcr_v245bhgp/T/kafka-
```
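Timing the two phases separately, as the PR description outlines, can be sketched as below. This is an illustration only: `PhaseTimer`, `timeMs` and `recoveryMessage` are hypothetical names, and the two `Runnable` phases stand in for the real snapshot load and segment recovery steps. The message text follows the example log output quoted above.

```java
import java.util.concurrent.TimeUnit;

final class PhaseTimer {
    private PhaseTimer() { }

    // Runs one phase and returns its wall-clock duration in milliseconds.
    static long timeMs(Runnable phase) {
        long start = System.nanoTime();
        phase.run();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    // Formats a message shaped like the example output in the PR description.
    static String recoveryMessage(long snapshotLoadMs, long segmentRecoveryMs, long offset) {
        return String.format(
                "Producer state recovery took %dms for snapshot load and %dms for segment recovery from offset %d",
                snapshotLoadMs, segmentRecoveryMs, offset);
    }
}
```

A caller would time each phase on its own, for example `long loadMs = PhaseTimer.timeMs(loadSnapshot);` followed by `long recoverMs = PhaseTimer.timeMs(recoverSegments);`, and then log `PhaseTimer.recoveryMessage(loadMs, recoverMs, offset)`. Here `loadSnapshot` and `recoverSegments` are placeholder `Runnable` values.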

[jira] [Comment Edited] (KAFKA-8154) Buffer Overflow exceptions between brokers and with clients

[ https://issues.apache.org/jira/browse/KAFKA-8154?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17293371#comment-17293371 ] Ori Popowski edited comment on KAFKA-8154 at 3/2/21, 5:48 AM: -- [~gordonmessmer] thank you very much for publishing this JAR. Edit: this JAR is empty. But I got the idea. Will try to build myself. Thanks was (Author: oripwk): [~gordonmessmer] thank you very much for publishing this JAR > Buffer Overflow exceptions between brokers and with clients > --- > > Key: KAFKA-8154 > URL: https://issues.apache.org/jira/browse/KAFKA-8154 > Project: Kafka > Issue Type: Bug > Components: clients >Affects Versions: 2.1.0 >Reporter: Rajesh Nataraja >Priority: Major > Attachments: server.properties.txt > > > https://github.com/apache/kafka/pull/6495 > https://github.com/apache/kafka/pull/5785 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-8154) Buffer Overflow exceptions between brokers and with clients

[ https://issues.apache.org/jira/browse/KAFKA-8154?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17293371#comment-17293371 ] Ori Popowski commented on KAFKA-8154: - [~gordonmessmer] thank you very much for publishing this JAR > Buffer Overflow exceptions between brokers and with clients > --- > > Key: KAFKA-8154 > URL: https://issues.apache.org/jira/browse/KAFKA-8154 > Project: Kafka > Issue Type: Bug > Components: clients >Affects Versions: 2.1.0 >Reporter: Rajesh Nataraja >Priority: Major > Attachments: server.properties.txt > > > https://github.com/apache/kafka/pull/6495 > https://github.com/apache/kafka/pull/5785 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] chia7712 commented on a change in pull request #10206: KAFKA-12369; Implement `ListTransactions` API

chia7712 commented on a change in pull request #10206:

URL: https://github.com/apache/kafka/pull/10206#discussion_r585252099

##

File path:

clients/src/main/resources/common/message/ListTransactionsResponse.json

##

@@ -0,0 +1,35 @@

+// Licensed to the Apache Software Foundation (ASF) under one or more

+// contributor license agreements. See the NOTICE file distributed with

+// this work for additional information regarding copyright ownership.

+// The ASF licenses this file to You under the Apache License, Version 2.0

+// (the "License"); you may not use this file except in compliance with

+// the License. You may obtain a copy of the License at

+//

+//http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+{

+ "apiKey": 66,

+ "type": "response",

+ "name": "ListTransactionsResponse",

+ "validVersions": "0",

+ "flexibleVersions": "0+",

+ "fields": [

+ { "name": "ThrottleTimeMs", "type": "int32", "versions": "0+",

+"about": "The duration in milliseconds for which the request was

throttled due to a quota violation, or zero if the request did not violate any

quota." },

+ { "name": "ErrorCode", "type": "int16", "versions": "0+" },

+ { "name": "UnknownStateFilters", "type": "[]string", "default": "null",

"versions": "0+", "nullableVersions": "0+",

Review comment:

The field in the request is named `StatesFilter`. Could you make the naming consistent?

##

File path: core/src/main/scala/kafka/server/KafkaApis.scala

##

@@ -3303,6 +3304,28 @@ class KafkaApis(val requestChannel: RequestChannel,

new

DescribeTransactionsResponse(response.setThrottleTimeMs(requestThrottleMs)))

}

+ def handleListTransactionsRequest(request: RequestChannel.Request): Unit = {

+val listTransactionsRequest = request.body[ListTransactionsRequest]

+val filteredProducerIds =

listTransactionsRequest.data.producerIdFilter.asScala.map(Long.unbox).toSet

+val filteredStates =

listTransactionsRequest.data.statesFilter.asScala.toSet

+val response = txnCoordinator.handleListTransactions(filteredProducerIds,

filteredStates)

+

+// The response should contain only transactionalIds that the principal

+// has `Describe` permission to access.

+if (response.transactionStates != null) {

Review comment:

Is this null check necessary? The field is NOT nullable, so it would be a bug if production code ever set it to null.
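
To illustrate the point about the null check, here is a minimal sketch of filtering a non-nullable list by permission. Everything in it is hypothetical: `ListingFilter`, `Listing`, and the `canDescribe` predicate stand in for the response entries and the authorizer, and are not Kafka APIs. The assumption is that "no results" is represented by an empty list, so a null value can only come from a bug and is better surfaced than silently skipped.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Predicate;

// Hypothetical sketch: keep only the entries the caller may describe.
final class ListingFilter {

    /** Illustrative stand-in for one listed transaction. */
    static final class Listing {
        final String transactionalId;
        final long producerId;

        Listing(String transactionalId, long producerId) {
            this.transactionalId = transactionalId;
            this.producerId = producerId;
        }
    }

    static List<Listing> authorized(List<Listing> listings, Predicate<String> canDescribe) {
        // The list is declared non-nullable, so fail fast instead of guarding with "!= null".
        Objects.requireNonNull(listings, "listings must not be null");
        List<Listing> visible = new ArrayList<>();
        for (Listing listing : listings) {
            if (canDescribe.test(listing.transactionalId)) {
                visible.add(listing);
            }
        }
        return visible;
    }
}
```

An empty input simply yields an empty output, which is why no separate null branch is needed in this sketch.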

##

File path:

clients/src/main/resources/common/message/ListTransactionsResponse.json

##

@@ -0,0 +1,35 @@

+// Licensed to the Apache Software Foundation (ASF) under one or more

+// contributor license agreements. See the NOTICE file distributed with

+// this work for additional information regarding copyright ownership.

+// The ASF licenses this file to You under the Apache License, Version 2.0

+// (the "License"); you may not use this file except in compliance with

+// the License. You may obtain a copy of the License at

+//

+//http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+{

+ "apiKey": 66,

+ "type": "response",

+ "name": "ListTransactionsResponse",

+ "validVersions": "0",

+ "flexibleVersions": "0+",

+ "fields": [

+ { "name": "ThrottleTimeMs", "type": "int32", "versions": "0+",

+"about": "The duration in milliseconds for which the request was

throttled due to a quota violation, or zero if the request did not violate any

quota." },

+ { "name": "ErrorCode", "type": "int16", "versions": "0+" },

+ { "name": "UnknownStateFilters", "type": "[]string", "default": "null",

"versions": "0+", "nullableVersions": "0+",

Review comment:

Why does it require `nullableVersions`?

##

File path: core/src/main/scala/kafka/server/KafkaApis.scala

##

@@ -3303,6 +3304,28 @@ class KafkaApis(val requestChannel: RequestChannel,

new

DescribeTransactionsResponse(response.setThrottleTimeMs(requestThrottleMs)))

}

+ def handleListTransactionsRequest(request: RequestChannel.Request): Unit = {

+val listTransactionsRequest = request.body[ListTransactionsRequest]

+val filteredProducerIds =

listTransactionsRequest.data.producerIdFilter.asScala.map(Long.unbox).toSet

+val filteredStates =

listTransactionsRequest.data.statesFilter.asScala.toSet

+val response =

[GitHub] [kafka] chia7712 commented on a change in pull request #10203: MINOR: Prepare for Gradle 7.0

chia7712 commented on a change in pull request #10203:

URL: https://github.com/apache/kafka/pull/10203#discussion_r585246882

##

File path: build.gradle

##

@@ -1586,15 +1605,17 @@ project(':streams:test-utils') {

archivesBaseName = "kafka-streams-test-utils"

dependencies {

-compile project(':streams')

-compile project(':clients')

+implementation project(':streams')

Review comment:

I guess this is similar to `connect-runtime` in that users can easily pull it into their own test scope. Maybe we should keep exposing both modules.

##

File path: release.py

##

@@ -631,7 +631,7 @@ def select_gpg_key():

contents = f.read()

if not user_ok("Going to build and upload mvn artifacts based on these

settings:\n" + contents + '\nOK (y/n)?: '):

fail("Retry again later")

-cmd("Building and uploading archives", "./gradlewAll uploadArchives",

cwd=kafka_dir, env=jdk8_env, shell=True)

+cmd("Building and uploading archives", "./gradlewAll publish", cwd=kafka_dir,

env=jdk8_env, shell=True)

Review comment:

The root `README.md` still uses `./gradlewAll uploadArchives`. It would be great to make them consistent.

##

File path: build.gradle

##

@@ -1468,7 +1461,7 @@ project(':streams') {

include('log4j*jar')

include('*hamcrest*')

}

-from (configurations.runtime) {

+from (configurations.runtimeClasspath) {

Review comment:

Is there a jira already?

[GitHub] [kafka] chia7712 commented on a change in pull request #10193: MINOR: correct the error message of validating uint32

chia7712 commented on a change in pull request #10193:

URL: https://github.com/apache/kafka/pull/10193#discussion_r585237560

##

File path:

clients/src/main/java/org/apache/kafka/common/protocol/types/Type.java

##

@@ -320,7 +320,7 @@ public Long validate(Object item) {

if (item instanceof Long)

return (Long) item;

else

-throw new SchemaException(item + " is not a Long.");

+throw new SchemaException(item + " is not an unsigned

integer.");

Review comment:

Makes sense. Will copy that.
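
As background for the message change in the diff above: an unsigned 32-bit value does not fit in a Java `int`, so it is carried in a `Long`, which is why "is not a Long" was a misleading error text for this type. The sketch below is an assumption-laden illustration, not the code in `Type.java`: the `Uint32` class is hypothetical, it throws `IllegalArgumentException` instead of Kafka's `SchemaException` to stay self-contained, and the range check is an addition that the quoted diff does not contain.

```java
// Hypothetical sketch of validating an unsigned 32-bit value held in a Long.
final class Uint32 {
    /** Largest value representable in 32 unsigned bits: 2^32 - 1. */
    static final long MAX = 0xFFFFFFFFL;

    static Long validate(Object item) {
        if (item instanceof Long) {
            long value = (Long) item;
            // Extra check (not in the quoted diff): reject values outside [0, 2^32 - 1].
            if (value < 0 || value > MAX) {
                throw new IllegalArgumentException(
                    item + " is out of range for an unsigned 32-bit integer.");
            }
            return value;
        }
        throw new IllegalArgumentException(item + " is not an unsigned integer.");
    }
}
```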

[GitHub] [kafka] chia7712 commented on pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on pull request #9758: URL: https://github.com/apache/kafka/pull/9758#issuecomment-788567775 @ijuma Thanks for all your great comments. I have updated this PR. Please take a look.

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585235586

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/FetchResponse.java

##

@@ -365,17 +126,92 @@ public int sessionId() {

* @param partIterator The partition iterator.

* @return The response size in bytes.

*/

-public static int sizeOf(short version,

-

Iterator>> partIterator) {

+public static int sizeOf(short version,

+ Iterator> partIterator) {

// Since the throttleTimeMs and metadata field sizes are constant and

fixed, we can

// use arbitrary values here without affecting the result.

-FetchResponseData data = toMessage(0, Errors.NONE, partIterator,

INVALID_SESSION_ID);

+LinkedHashMap data =

new LinkedHashMap<>();

+partIterator.forEachRemaining(entry -> data.put(entry.getKey(),

entry.getValue()));

ObjectSerializationCache cache = new ObjectSerializationCache();

-return 4 + data.size(cache, version);

+return 4 + FetchResponse.of(Errors.NONE, 0, INVALID_SESSION_ID,

data).data.size(cache, version);

}

@Override

public boolean shouldClientThrottle(short version) {

return version >= 8;

}

-}

+

+public static Optional

divergingEpoch(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() < 0 ?

Optional.empty()

+: Optional.of(partitionResponse.divergingEpoch());

+}

+

+public static boolean isDivergingEpoch(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() >= 0;

+}

+

+public static Optional

preferredReadReplica(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.preferredReadReplica() ==

INVALID_PREFERRED_REPLICA_ID ? Optional.empty()

+: Optional.of(partitionResponse.preferredReadReplica());

+}

+

+public static boolean isPreferredReplica(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.preferredReadReplica() !=

INVALID_PREFERRED_REPLICA_ID;

+}

+

+public static FetchResponseData.PartitionData partitionResponse(int

partition, Errors error) {

+return new FetchResponseData.PartitionData()

+.setPartitionIndex(partition)

+.setErrorCode(error.code())

+.setHighWatermark(FetchResponse.INVALID_HIGH_WATERMARK);

+}

+

+/**

+ * cast the BaseRecords of PartitionData to Records. KRPC converts the

byte array to MemoryRecords so this method

+ * never fail if the data is from KRPC.

+ *

+ * @param partition partition data

+ * @return Records or empty record if the records in PartitionData is null.

+ */

+public static Records records(FetchResponseData.PartitionData partition) {

Review comment:

good point. will copy that

[GitHub] [kafka] ableegoldman commented on a change in pull request #10163: KAFKA-10357: Extract setup of changelog from Streams partition assignor

ableegoldman commented on a change in pull request #10163:

URL: https://github.com/apache/kafka/pull/10163#discussion_r583907570

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ChangelogTopics.java

##

@@ -0,0 +1,132 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.processor.internals;

+

+import org.apache.kafka.common.TopicPartition;

+import org.apache.kafka.common.utils.LogContext;

+import org.apache.kafka.streams.processor.TaskId;

+import

org.apache.kafka.streams.processor.internals.InternalTopologyBuilder.TopicsInfo;

+import org.slf4j.Logger;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+import static

org.apache.kafka.streams.processor.internals.assignment.StreamsAssignmentProtocolVersions.UNKNOWN;

+

+public class ChangelogTopics {

+

+private final InternalTopicManager internalTopicManager;

+private final Map topicGroups;

+private final Map> tasksForTopicGroup;

+private final Map> changelogPartitionsForTask

= new HashMap<>();

+private final Map>

preExistingChangelogPartitionsForTask = new HashMap<>();

+private final Set

preExistingNonSourceTopicBasedChangelogPartitions = new HashSet<>();

+private final Set sourceTopicBasedChangelogTopics = new

HashSet<>();

+private final Set sourceTopicBasedChangelogTopicPartitions

= new HashSet<>();

+private final Logger log;

+

+public ChangelogTopics(final InternalTopicManager internalTopicManager,

+ final Map topicGroups,

+ final Map> tasksForTopicGroup,

+ final String logPrefix) {

+this.internalTopicManager = internalTopicManager;

+this.topicGroups = topicGroups;

+this.tasksForTopicGroup = tasksForTopicGroup;

+final LogContext logContext = new LogContext(logPrefix);

+log = logContext.logger(getClass());

+}

+

+public void setup() {

Review comment:

Is there a specific reason we need to make an explicit call to `setup()` rather than just doing this in the constructor? I'm always worried we'll end up forgetting to call `setup` again after some refactoring, and someone will waste a day debugging their code because they tried to use a `ChangelogTopics` object before/without first calling `setup()`.
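
One way to address this concern, sketched here under stated assumptions, is to do the setup work in a static factory so that no instance can exist in a half-initialized state. `EagerChangelogs` and its `create` method are hypothetical and much simpler than `ChangelogTopics`; the topic naming used is only for illustration.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch: the object is fully set up by the time a caller can hold it.
final class EagerChangelogs {
    private final Set<String> changelogTopics;

    // Private constructor: the only way to get an instance is through create().
    private EagerChangelogs(Set<String> changelogTopics) {
        this.changelogTopics = Collections.unmodifiableSet(changelogTopics);
    }

    /** Performs the work a separate setup() call would otherwise do. */
    static EagerChangelogs create(Set<String> stateStoreNames, String applicationId) {
        Set<String> topics = new HashSet<>();
        for (String store : stateStoreNames) {
            topics.add(applicationId + "-" + store + "-changelog");
        }
        return new EagerChangelogs(topics);
    }

    Set<String> changelogTopics() {
        return changelogTopics;
    }
}
```

A factory rather than a plain constructor is a deliberate choice in this sketch: if the setup step involves remote calls or can fail, keeping it out of the constructor leaves construction cheap while still making an un-setup instance impossible to obtain.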

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ChangelogTopics.java

##

@@ -0,0 +1,132 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.processor.internals;

+

+import org.apache.kafka.common.TopicPartition;

+import org.apache.kafka.common.utils.LogContext;

+import org.apache.kafka.streams.processor.TaskId;

+import

org.apache.kafka.streams.processor.internals.InternalTopologyBuilder.TopicsInfo;

+import org.slf4j.Logger;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+import static

org.apache.kafka.streams.processor.internals.assignment.StreamsAssignmentProtocolVersions.UNKNOWN;

+

+public class ChangelogTopics {

+

+private final InternalTopicManager internalTopicManager;

+private final Map topicGroups;

+private final Map> tasksForTopicGroup;

+private final Map>

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585229860

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/FetchResponse.java

##

@@ -365,17 +126,92 @@ public int sessionId() {

* @param partIterator The partition iterator.

* @return The response size in bytes.

*/

-public static int sizeOf(short version,

-

Iterator>> partIterator) {

+public static int sizeOf(short version,

+ Iterator> partIterator) {

// Since the throttleTimeMs and metadata field sizes are constant and

fixed, we can

// use arbitrary values here without affecting the result.

-FetchResponseData data = toMessage(0, Errors.NONE, partIterator,

INVALID_SESSION_ID);

+LinkedHashMap data =

new LinkedHashMap<>();

+partIterator.forEachRemaining(entry -> data.put(entry.getKey(),

entry.getValue()));

ObjectSerializationCache cache = new ObjectSerializationCache();

-return 4 + data.size(cache, version);

+return 4 + FetchResponse.of(Errors.NONE, 0, INVALID_SESSION_ID,

data).data.size(cache, version);

}

@Override

public boolean shouldClientThrottle(short version) {

return version >= 8;

}

-}

+

+public static Optional

divergingEpoch(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() < 0 ?

Optional.empty()

+: Optional.of(partitionResponse.divergingEpoch());

+}

+

+public static boolean isDivergingEpoch(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() >= 0;

+}

+

+public static Optional

preferredReadReplica(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.preferredReadReplica() ==

INVALID_PREFERRED_REPLICA_ID ? Optional.empty()

+: Optional.of(partitionResponse.preferredReadReplica());

+}

+

+public static boolean isPreferredReplica(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.preferredReadReplica() !=

INVALID_PREFERRED_REPLICA_ID;

+}

+

+public static FetchResponseData.PartitionData partitionResponse(int

partition, Errors error) {

+return new FetchResponseData.PartitionData()

+.setPartitionIndex(partition)

+.setErrorCode(error.code())

+.setHighWatermark(FetchResponse.INVALID_HIGH_WATERMARK);

+}

+

+/**

+ * cast the BaseRecords of PartitionData to Records. KRPC converts the

byte array to MemoryRecords so this method

+ * never fail if the data is from KRPC.

+ *

+ * @param partition partition data

+ * @return Records or empty record if the records in PartitionData is null.

+ */

+public static Records records(FetchResponseData.PartitionData partition) {

+return partition.records() == null ? MemoryRecords.EMPTY : (Records)

partition.records();

Review comment:

will copy that

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585226663

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/FetchResponse.java

##

@@ -365,17 +126,92 @@ public int sessionId() {

* @param partIterator The partition iterator.

* @return The response size in bytes.

*/

-public static int sizeOf(short version,

-

Iterator>> partIterator) {

+public static int sizeOf(short version,

+ Iterator> partIterator) {

// Since the throttleTimeMs and metadata field sizes are constant and

fixed, we can

// use arbitrary values here without affecting the result.

-FetchResponseData data = toMessage(0, Errors.NONE, partIterator,

INVALID_SESSION_ID);

+LinkedHashMap data =

new LinkedHashMap<>();

+partIterator.forEachRemaining(entry -> data.put(entry.getKey(),

entry.getValue()));

ObjectSerializationCache cache = new ObjectSerializationCache();

-return 4 + data.size(cache, version);

+return 4 + FetchResponse.of(Errors.NONE, 0, INVALID_SESSION_ID,

data).data.size(cache, version);

}

@Override

public boolean shouldClientThrottle(short version) {

return version >= 8;

}

-}

+

+public static Optional

divergingEpoch(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() < 0 ?

Optional.empty()

+: Optional.of(partitionResponse.divergingEpoch());

+}

+

+public static boolean isDivergingEpoch(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.divergingEpoch().epoch() >= 0;

+}

+

+public static Optional

preferredReadReplica(FetchResponseData.PartitionData partitionResponse) {

+return partitionResponse.preferredReadReplica() ==

INVALID_PREFERRED_REPLICA_ID ? Optional.empty()

+: Optional.of(partitionResponse.preferredReadReplica());

+}

+

+public static boolean isPreferredReplica(FetchResponseData.PartitionData

partitionResponse) {

+return partitionResponse.preferredReadReplica() !=

INVALID_PREFERRED_REPLICA_ID;

+}

+

+public static FetchResponseData.PartitionData partitionResponse(int

partition, Errors error) {

+return new FetchResponseData.PartitionData()

+.setPartitionIndex(partition)

+.setErrorCode(error.code())

+.setHighWatermark(FetchResponse.INVALID_HIGH_WATERMARK);

+}

+

+/**

+ * cast the BaseRecords of PartitionData to Records. KRPC converts the

byte array to MemoryRecords so this method

+ * never fail if the data is from KRPC.

Review comment:

good one. will copy that

[GitHub] [kafka] ableegoldman commented on pull request #10215: KAFKA-12375: don't reuse thread.id until a thread has fully shut down

ableegoldman commented on pull request #10215: URL: https://github.com/apache/kafka/pull/10215#issuecomment-788556426 This should be ready for a final review @cadonna @wcarlson5

[GitHub] [kafka] ableegoldman commented on a change in pull request #10215: KAFKA-12375: don't reuse thread.id until a thread has fully shut down

ableegoldman commented on a change in pull request #10215:

URL: https://github.com/apache/kafka/pull/10215#discussion_r585225255

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -463,9 +464,8 @@ private void replaceStreamThread(final Throwable throwable)

{

closeToError();

}

final StreamThread deadThread = (StreamThread) Thread.currentThread();

-threads.remove(deadThread);

Review comment:

Yeah I think swapping the names would make the code unnecessarily

complicated, and it would definitely make reading the logs more difficult.

Just to note: in the current rebalance protocol, the thread name should not

impact the task assignment since within a client tasks are always just assigned

to their previous owner (we maximize stickiness & balance)

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585224829

##

File path: core/src/main/scala/kafka/server/KafkaApis.scala

##

@@ -761,79 +754,84 @@ class KafkaApis(val requestChannel: RequestChannel,

// For fetch requests from clients, check if down-conversion is

disabled for the particular partition

if (!fetchRequest.isFromFollower &&

!logConfig.forall(_.messageDownConversionEnable)) {

trace(s"Conversion to message format ${downConvertMagic.get} is

disabled for partition $tp. Sending unsupported version response to $clientId.")

- errorResponse(Errors.UNSUPPORTED_VERSION)

+ FetchResponse.partitionResponse(tp.partition,

Errors.UNSUPPORTED_VERSION)

} else {

try {

trace(s"Down converting records from partition $tp to message

format version $magic for fetch request from $clientId")

// Because down-conversion is extremely memory intensive, we

want to try and delay the down-conversion as much

// as possible. With KIP-283, we have the ability to lazily

down-convert in a chunked manner. The lazy, chunked

// down-conversion always guarantees that at least one batch

of messages is down-converted and sent out to the

// client.

-val error = maybeDownConvertStorageError(partitionData.error)

-new FetchResponse.PartitionData[BaseRecords](error,

partitionData.highWatermark,

- partitionData.lastStableOffset, partitionData.logStartOffset,

- partitionData.preferredReadReplica,

partitionData.abortedTransactions,

- new LazyDownConversionRecords(tp, unconvertedRecords, magic,

fetchContext.getFetchOffset(tp).get, time))

+new FetchResponseData.PartitionData()

+ .setPartitionIndex(tp.partition)

+

.setErrorCode(maybeDownConvertStorageError(Errors.forCode(partitionData.errorCode)).code)

+ .setHighWatermark(partitionData.highWatermark)

+ .setLastStableOffset(partitionData.lastStableOffset)

+ .setLogStartOffset(partitionData.logStartOffset)

+ .setAbortedTransactions(partitionData.abortedTransactions)

+ .setRecords(new LazyDownConversionRecords(tp,

unconvertedRecords, magic, fetchContext.getFetchOffset(tp).get, time))

+

.setPreferredReadReplica(partitionData.preferredReadReplica())

} catch {

case e: UnsupportedCompressionTypeException =>

trace("Received unsupported compression type error during

down-conversion", e)

- errorResponse(Errors.UNSUPPORTED_COMPRESSION_TYPE)

+ FetchResponse.partitionResponse(tp.partition,

Errors.UNSUPPORTED_COMPRESSION_TYPE)

}

}

case None =>

-val error = maybeDownConvertStorageError(partitionData.error)

-new FetchResponse.PartitionData[BaseRecords](error,

- partitionData.highWatermark,

- partitionData.lastStableOffset,

- partitionData.logStartOffset,

- partitionData.preferredReadReplica,

- partitionData.abortedTransactions,

- partitionData.divergingEpoch,

- unconvertedRecords)

+new FetchResponseData.PartitionData()

+ .setPartitionIndex(tp.partition)

+

.setErrorCode(maybeDownConvertStorageError(Errors.forCode(partitionData.errorCode)).code)

+ .setHighWatermark(partitionData.highWatermark)

+ .setLastStableOffset(partitionData.lastStableOffset)

+ .setLogStartOffset(partitionData.logStartOffset)

+ .setAbortedTransactions(partitionData.abortedTransactions)

+ .setRecords(unconvertedRecords)

+ .setPreferredReadReplica(partitionData.preferredReadReplica)

+ .setDivergingEpoch(partitionData.divergingEpoch)

}

}

}

// the callback for process a fetch response, invoked before throttling

def processResponseCallback(responsePartitionData: Seq[(TopicPartition,

FetchPartitionData)]): Unit = {

- val partitions = new util.LinkedHashMap[TopicPartition,

FetchResponse.PartitionData[Records]]

+ val partitions = new util.LinkedHashMap[TopicPartition,

FetchResponseData.PartitionData]

val reassigningPartitions = mutable.Set[TopicPartition]()

responsePartitionData.foreach { case (tp, data) =>

val abortedTransactions = data.abortedTransactions.map(_.asJava).orNull

val lastStableOffset =

data.lastStableOffset.getOrElse(FetchResponse.INVALID_LAST_STABLE_OFFSET)

-if (data.isReassignmentFetch)

- reassigningPartitions.add(tp)

-val error =

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585224204

##

File path: core/src/test/scala/unit/kafka/server/FetchSessionTest.scala

##

@@ -534,15 +588,21 @@ class FetchSessionTest {

Optional.empty()))

val session2context = fetchManager.newContext(JFetchMetadata.INITIAL,

session1req, EMPTY_PART_LIST, false)

assertEquals(classOf[FullFetchContext], session2context.getClass)

-val session2RespData = new util.LinkedHashMap[TopicPartition,

FetchResponse.PartitionData[Records]]

-session2RespData.put(new TopicPartition("foo", 0), new

FetchResponse.PartitionData(

- Errors.NONE, 100, 100, 100, null, null))

-session2RespData.put(new TopicPartition("foo", 1), new

FetchResponse.PartitionData(

- Errors.NONE, 10, 10, 10, null, null))

+val session2RespData = new util.LinkedHashMap[TopicPartition,

FetchResponseData.PartitionData]

+session2RespData.put(new TopicPartition("foo", 0),

+ new FetchResponseData.PartitionData()

+.setHighWatermark(100)

+.setLastStableOffset(100)

+.setLogStartOffset(100))

+session2RespData.put(new TopicPartition("foo", 1),

+ new FetchResponseData.PartitionData()

+.setHighWatermark(10)

Review comment:

good catch. will fix all similar issues in next commit.

[GitHub] [kafka] ableegoldman commented on a change in pull request #10215: KAFKA-12375: don't reuse thread.id until a thread has fully shut down

ableegoldman commented on a change in pull request #10215:

URL: https://github.com/apache/kafka/pull/10215#discussion_r585223909

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -1047,9 +1047,15 @@ private int getNumStreamThreads(final boolean

hasGlobalTopology) {

if

(!streamThread.waitOnThreadState(StreamThread.State.DEAD, timeoutMs - begin)) {

log.warn("Thread " + streamThread.getName() +

" did not shutdown in the allotted time");

timeout = true;

+// Don't remove from threads until shutdown is

complete. We will trim it from the

+// list once it reaches DEAD, and if for some

reason it's hanging indefinitely in the

+// shutdown then we should just consider this

thread.id to be burned

+} else {

+threads.remove(streamThread);

}

}

-threads.remove(streamThread);

+// Don't remove from threads until shutdown is

complete since this will let another thread

+// reuse its thread.id. We will trim any DEAD threads

from the list later

final long cacheSizePerThread =

getCacheSizePerThread(threads.size());

Review comment:

Good catch. I added a method, `getNumLiveStreamThreads`, to use instead of

plain `threads.size()`; it trims any DEAD threads from the list and returns

the actual number of living threads.
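A minimal sketch of what such a helper could look like. Everything here except the name `getNumLiveStreamThreads` is hypothetical (the `LiveThreadCounter` class, the `WorkerThread` type, and the reduced `State` enum are stand-ins, not the actual `KafkaStreams` code), and it assumes a thread still in shutdown counts as living until it reaches DEAD:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified stand-in for the thread bookkeeping discussed above.
class LiveThreadCounter {
    // Reduced state set for illustration only.
    enum State { RUNNING, PENDING_SHUTDOWN, DEAD }

    static final class WorkerThread {
        final String name;
        volatile State state;

        WorkerThread(String name, State state) {
            this.name = name;
            this.state = state;
        }
    }

    private final List<WorkerThread> threads = new ArrayList<>();

    synchronized void add(WorkerThread thread) {
        threads.add(thread);
    }

    // Trim every DEAD entry first, then report how many threads remain.
    // Assumption: a thread that is still shutting down counts as living.
    synchronized int getNumLiveStreamThreads() {
        threads.removeIf(thread -> thread.state == State.DEAD);
        return threads.size();
    }
}
```

Callers that previously read `threads.size()` directly would go through the helper instead, so a stale DEAD entry never inflates the count.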

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585221282

##

File path: core/src/test/scala/unit/kafka/server/AbstractFetcherThreadTest.scala

##

@@ -1144,8 +1143,14 @@ class AbstractFetcherThreadTest {

(Errors.NONE, records)

}

-(partition, new FetchData(error, leaderState.highWatermark,

leaderState.highWatermark, leaderState.logStartOffset,

- Optional.empty[Integer], List.empty.asJava, divergingEpoch.asJava,

records))

+(partition, new FetchResponseData.PartitionData()

+ .setErrorCode(error.code)

+ .setHighWatermark(leaderState.highWatermark)

+ .setLastStableOffset(leaderState.highWatermark)

+ .setLogStartOffset(leaderState.logStartOffset)

+ .setAbortedTransactions(Collections.emptyList())

+ .setRecords(records)

+ .setDivergingEpoch(divergingEpoch.getOrElse(new

FetchResponseData.EpochEndOffset)))

Review comment:

The type `FetchablePartitionResponse` is gone, and its replacement,

`FetchResponseData.PartitionData` (generated data), can't accept an `Optional` type.
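To illustrate the pattern, here is a rough sketch of unwrapping an `Optional` into a default-constructed value before handing it to a fluent setter. The classes below are hypothetical stand-ins, not the generated Kafka classes, and the `-1` sentinels are an assumption made for the example:

```java
import java.util.Optional;

// Hypothetical stand-ins for generated message classes; not the real Kafka types.
class OptionalToGeneratedSketch {
    static final class EpochEndOffset {
        int epoch = -1;        // assumed sentinel meaning "not set"
        long endOffset = -1L;  // assumed sentinel meaning "not set"
    }

    static final class PartitionData {
        private EpochEndOffset divergingEpoch = new EpochEndOffset();

        // Fluent setter that takes a plain value, never an Optional.
        PartitionData setDivergingEpoch(EpochEndOffset value) {
            this.divergingEpoch = value;
            return this;
        }

        EpochEndOffset divergingEpoch() {
            return divergingEpoch;
        }
    }

    // The caller unwraps the Optional and falls back to a default instance.
    static PartitionData build(Optional<EpochEndOffset> maybeDiverging) {
        return new PartitionData()
            .setDivergingEpoch(maybeDiverging.orElseGet(EpochEndOffset::new));
    }

    // Readers then detect "absent" by checking the sentinel.
    static boolean hasDivergingEpoch(PartitionData data) {
        return data.divergingEpoch().epoch >= 0;
    }
}
```

The cost of this style is that "absent" is encoded as a sentinel value, so every reader has to know the convention.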

[GitHub] [kafka] ableegoldman commented on a change in pull request #10215: KAFKA-12375: don't reuse thread.id until a thread has fully shut down

ableegoldman commented on a change in pull request #10215:

URL: https://github.com/apache/kafka/pull/10215#discussion_r585220510

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -1047,9 +1047,15 @@ private int getNumStreamThreads(final boolean

hasGlobalTopology) {

if

(!streamThread.waitOnThreadState(StreamThread.State.DEAD, timeoutMs - begin)) {

log.warn("Thread " + streamThread.getName() +

" did not shutdown in the allotted time");

timeout = true;

+// Don't remove from threads until shutdown is

complete. We will trim it from the

+// list once it reaches DEAD, and if for some

reason it's hanging indefinitely in the

Review comment:

We're trimming it in `getNextThreadIndex`. But if we're going to rely on

`threads.size()` elsewhere, which it seems we do, then yeah, we should trim it

more aggressively.

[GitHub] [kafka] chia7712 commented on a change in pull request #9758: MINOR: remove FetchResponse.AbortedTransaction and redundant construc…

chia7712 commented on a change in pull request #9758:

URL: https://github.com/apache/kafka/pull/9758#discussion_r585219789

##

File path: core/src/main/scala/kafka/server/AbstractFetcherThread.scala

##

@@ -416,9 +412,8 @@ abstract class AbstractFetcherThread(name: String,

"expected to persist.")

partitionsWithError += topicPartition

-case _ =>

- error(s"Error for partition $topicPartition at offset

${currentFetchState.fetchOffset}",

-partitionData.error.exception)

+case partitionError: Errors =>

Review comment:

You are right. Fixed.

[GitHub] [kafka] ableegoldman commented on a change in pull request #10215: KAFKA-12375: don't reuse thread.id until a thread has fully shut down

ableegoldman commented on a change in pull request #10215:

URL: https://github.com/apache/kafka/pull/10215#discussion_r585219164

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -1047,9 +1047,15 @@ private int getNumStreamThreads(final boolean

hasGlobalTopology) {

if

(!streamThread.waitOnThreadState(StreamThread.State.DEAD, timeoutMs - begin)) {

log.warn("Thread " + streamThread.getName() +

" did not shutdown in the allotted time");

timeout = true;

+// Don't remove from threads until shutdown is

complete. We will trim it from the

+// list once it reaches DEAD, and if for some

reason it's hanging indefinitely in the

+// shutdown then we should just consider this

thread.id to be burned

+} else {

+threads.remove(streamThread);

Review comment:

Yeah, I was mainly trying to keep things simple. There's definitely a

tradeoff in when we resize the cache: either we resize it right away and risk

an OOM, or we resize it whenever we find newly DEAD threads but potentially have

to wait to "reclaim" the memory of a thread.

Both scenarios run into trouble when a thread is hanging in shutdown, but if

that occurs something has already gone wrong, so I don't think we need to

guarantee Streams will continue running perfectly. But the downside to resizing

the cache only once a thread reaches DEAD is that a user could call

`removeStreamThread()` with a timeout of 0 and then never call add/remove

thread again, and they'd never get back the memory of the removed thread, since

we only trim `threads` inside these methods (or the exception handler). I.e.,

it seems OK to lazily remove DEAD threads if we only use the `threads` list to

find a unique `threadId`, but not to lazily resize the cache. WDYT?
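A rough sketch of that split, assuming the eager side of the tradeoff (and therefore accepting the OOM risk mentioned above while a removed thread is still shutting down). All names here are hypothetical and simplified; this is not the `KafkaStreams` implementation:

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical registry: thread ids are reclaimed lazily, cache shares eagerly.
class ThreadRegistrySketch {
    enum State { RUNNING, PENDING_SHUTDOWN, DEAD }

    static final class Worker {
        final int id;
        volatile State state = State.RUNNING;

        Worker(int id) {
            this.id = id;
        }
    }

    private final List<Worker> threads = new ArrayList<>();
    private final long totalCacheBytes;

    ThreadRegistrySketch(long totalCacheBytes) {
        this.totalCacheBytes = totalCacheBytes;
    }

    // Lazy part: DEAD entries are trimmed only when a new id is needed, so the
    // id of a thread that has not finished shutting down stays reserved.
    synchronized int nextThreadIndex() {
        threads.removeIf(worker -> worker.state == State.DEAD);
        Set<Integer> used = new HashSet<>();
        for (Worker worker : threads) {
            used.add(worker.id);
        }
        int candidate = 1;
        while (used.contains(candidate)) {
            candidate++;
        }
        return candidate;
    }

    synchronized Worker addThread() {
        Worker worker = new Worker(nextThreadIndex());
        threads.add(worker);
        return worker;
    }

    // Eager part: the per-thread share counts only RUNNING threads, so it
    // changes as soon as a thread is asked to shut down.
    synchronized long cacheSizePerThread() {
        long running = threads.stream()
            .filter(worker -> worker.state == State.RUNNING)
            .count();
        return running == 0 ? totalCacheBytes : totalCacheBytes / running;
    }
}
```

With this shape, a `removeStreamThread()`-style call with a zero timeout would still return the removed thread's cache share to the others immediately, while its id is only reused once it is observed as DEAD.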

[GitHub] [kafka] abbccdda commented on pull request #10240: KAFKA-12381: only return leader not available for internal topic creation