[GitHub] [airflow] potiuk commented on pull request #10280: Enable Sphinx spellcheck for docs generation

potiuk commented on pull request #10280:

URL: https://github.com/apache/airflow/pull/10280#issuecomment-672112122

> @potiuk Do we need to bump the EPOCH number so that the CI uses the updated Dockerfile?

Absolutely not. It's only needed in case stuff is removed.

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on pull request #10280: URL: https://github.com/apache/airflow/pull/10280#issuecomment-672111000 @potiuk Do we need to bump the EPOCH number so that the CI uses the updated Dockerfile?

[GitHub] [airflow] potiuk commented on pull request #10280: Enable Sphinx spellcheck for docs generation

potiuk commented on pull request #10280: URL: https://github.com/apache/airflow/pull/10280#issuecomment-672112284 And only for pip installs.

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388:

URL: https://github.com/apache/airflow/issues/8388#issuecomment-672153377

I ran into this in AWS, running Amazon Linux 2. `uname -a` shows:

Linux ip-172-20-2-227.us-west-2.compute.internal 4.14.165-131.185.amzn2.x86_64 #1 SMP Wed Jan 15 14:19:56 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

[GitHub] [airflow] kaxil commented on a change in pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on a change in pull request #10280: URL: https://github.com/apache/airflow/pull/10280#discussion_r468780812 ## File path: docs/spelling_wordlist.txt ## @@ -0,0 +1,1366 @@ +Ack +Acyclic +Airbnb +AirflowException +Aizhamal +Alphasort +Analytics +Anand +Ansible +AppBuilder +Arg +Args +Async +Auth +Autoscale +Avro +Azkaban +Azri +Backend +Backends +Backfill +BackfillJobTest +Backfills +Banco +Bas +BaseClient +BaseOperator +BaseView +Beauchemin +Behaviour +Bigquery +Bigtable +Bitshift +Bluecore +Bolke +Bool +Booleans +Boto +BounceX +Boxel +Bq +Breguła +CLoud +CSRFProtect +Cancelled +Cassanda +Catchup +Celect +Cgroups +Changelog +Chao +CheckOperator +Checklicence +Checkr +Cinimex +Cloudant +Cloudwatch +ClusterManagerClient +Codecov +Colour +ComputeNodeState +Computenodes +Config +Configs +Cron +Ctrl +Daemonize +DagFileProcessorManager +DagRun +Dagbag +Dagre +Dask +Databricks +Datadog +Dataflow +Dataframe +Datalake +Datanodes +Dataprep +Dataproc +Dataset +Datasets +Datastore +Datasync +DateFrame +Datetimepicker +Datetimes +Davydov +De +Decrypt +Decrypts +Deserialize +Deserialized +Dingding +Dlp +Dockerfile +Dockerhub +Dockerise +Docstring +Docstrings +Dont +Driesprong +Drivy +Dsn +Dynamodb +EDITMSG +ETag +Eg +EmrAddSteps +EmrCreateJobFlow +Enum +Env +Exasol +Failover +Feng +Fernet +FileSensor +Filebeat +Filepath +Fileshare +Fileshares +Filesystem +Firehose +Firestore +Fokko +Formaturas +Fundera +GCP +GCS +GH +GSoD +Gannt +Gantt +Gao +Gcp +Gentner +GetPartitions +GiB +Github +Gitter +Glassdoor +Gmail +Groupalia +Groupon +Grpc +Gunicorn +Guziel +Gzip +HCatalog +HTTPBasicAuth +Harenslak +Hashable +Hashicorp +Highcharts +Hitesh +Hiveserver +Hoc +Homan +Hostname +Hou +Http +HttpError +HttpRequest +IdP +Imap +Imberman +InsecureClient +InspectContentResponse +Investorise +JPype +Jakob +Jarek +Jdbc +Jiajie +Jinja +Jinjafied +Jinjafy +Jira +JobComplete +JobExists +JobRunning +Json +Jupyter +KYLIN +Kalibrr +Kamil +Kaxil +Kengo +Kerberized +Kerberos +KerberosClient 
+KevinYang +KeyManagementServiceClient +Keyfile +Kibana +Kinesis +Kombu +Kube +Kubernetes +Kusto +Kwargs +Kylin +LaunchTemplateParameters +Letsbonus +Lifecycle +LineItem +ListGenerator +Logstash +Lowin +Lyft +Maheshwari +Makefile +Mapreduce +Masternode +Maxime +Memorystore +Mesos +MessageAttributes +Metastore +Metatsore +Mixin +Mongo +Moto +Mysql +NFS +NaN +Naik +Namenode +Namespace +Nextdoor +Nones +NotFound +Nullable +Nurmamat +OAuth +Oauth +Oauthlib +Okta +Oozie +Opsgenie +Optimise +PEM +PTarget +Pagerduty +Papermill +Parallelize +Parameterizing +Paramiko +Params +Pem +Pinot +Popen +Postgres +Postgresql +Potiuk +Pre +Precommit +Preprocessed +Proc +Protobuf +PublisherClient +Pubsub +Py +PyPI +Pylint +Pyspark +PythonOperator +Qingping +Qplum +Quantopian +Qubole +Quboles +Quoble +RBAC +Readme +Realtime +Rebasing +Rebrand +Reddit +Redhat +Reinitialising +Retrives +Riccomini +Roadmap +Robinhood +SIGTERM +SSHClient +SSHTunnelForwarder +SaaS +Sagemaker +Sasl +SecretManagerClient +Seedlist +Seki +Sendgrid +Siddharth +SlackHook +SparkPi +SparkR +SparkSQL +Sql +Sqlalchemy +Sqlite +Sqoop +Stackdriver +Standarization +StatsD +Statsd +Stringified +Subclasses +Subdirectory +Submodules +Subpackages +Subpath +SubscriberClient +Subtasks +Sumit +Swtch +Systemd +TCP +TLS +TTY +TThe +TZ +TaskInstance +Taskfail +Templated +Templating +Teradata +TextToSpeechClient +Tez +Thinknear +ToC +Tomasz +Tooltip +Tsai +UA +UNload +Uellendall +Umask +Un +Undead +Undeads +Unpausing +Unpickle +Upsert +Upsight +Uptime +Urbaszek +Url +Utils +Vendorize +Vertica +Vevo +WTF +WaiterModel +Wasb +WebClient +Webhook +Webserver +Werkzeug +Wiedmer +XCom +XComs +Xcom +Xero +Xiaodong +Yamllint +Yandex +Yieldr +Zego +Zendesk +Zhong +Zsh +Zymergen +aIRFLOW +abc +accessor +accountmaking +acessible +ack +ackIds +acknowledgement +actionCard +acurate +acyclic +adhoc +aijamalnk +airbnb +airfl +airflowignore +ajax +alertPolicies +alexvanboxel +allAuthenticatedUsers +allUsers +allowinsert +analyse +analytics 
+analyticsreporting +analyzeEntities +analyzeSentiment +analyzeSyntax +aoen +apache +api +apikey +apis +appbuilder +approle +arg +args +arn +arraysize +artwr +asc +ascii +asciiart +asia +assertEqualIgnoreMultipleSpaces +ast +async +athena +attemping +attr +attrs +auth +authMechanism +authenication +authenticator +authorised +autoclass +autocommit +autocomplete +autodetect +autodetected +autoenv +autogenerated +autorestart +autoscale +autoscaling +avro +aws +awsbatch +backend +backends +backfill +backfilled +backfilling +backfills +backoff +backport +backreference +backtick +backticks +balancer +baseOperator +basedn +basestring +basetaskrunner +bashrc +basph +batchGet +bc +bcc +beeen +behaviour +behaviours +behavoiur +bicket +bigquery +bigtable +bitshift +bolkedebruin +booktabs +boolean +booleans +bootDiskType +boto +botocore +bowser +bq +bugfix +bugfixes +buildType +bytestring +cacert +callables +cancelled +carbonite +cas +cassandra +casted +catchup +cattrs +ccache +celeryd

[GitHub] [airflow] kaxil commented on a change in pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on a change in pull request #10280: URL: https://github.com/apache/airflow/pull/10280#discussion_r468780276 ## File path: docs/spelling_wordlist.txt ## @@ -0,0 +1,1366 @@

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388:

URL: https://github.com/apache/airflow/issues/8388#issuecomment-672169136

Thank you for re-opening this issue. I've checked the master version:

```

[airflow@ip-172-20-2-227 airflow]$ airflow version

2.0.0.dev0

[airflow@ip-172-20-2-227 airflow]$

```

I can't log in through the Web UI, though. It's asking me for a username and password.

When I started the webserver, it said:

[2020-08-11 18:25:27,417] {manager.py:719} WARNING - No user yet created, use flask fab command to do it.

I tried, but it didn't work. Help?

```

[airflow@ip-172-20-2-227 airflow]$ flask fab create-user

Role [Public]:

Username: admin

User first name: admin

User last name: admin

Email: ad...@example.com

Password:

Repeat for confirmation:

Usage: flask fab create-user [OPTIONS]

Error: Could not locate a Flask application. You did not provide the

"FLASK_APP" environment variable, and a "wsgi.py" or "app.py" module was not

found in the current directory.

[airflow@ip-172-20-2-227 airflow]$

```

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388:

URL: https://github.com/apache/airflow/issues/8388#issuecomment-672185365

I see this on my console:

```
Please make sure to build the frontend in static/ directory and restart the server
```

[GitHub] [airflow] vlasvlasvlas commented on pull request #9715: create CODE_OF_CONDUCT.md

vlasvlasvlas commented on pull request #9715: URL: https://github.com/apache/airflow/pull/9715#issuecomment-672185359 yeayyy! :)

[GitHub] [airflow] ryanrichholt commented on pull request #6317: [AIRFLOW-5644] Simplify TriggerDagRunOperator usage

ryanrichholt commented on pull request #6317:

URL: https://github.com/apache/airflow/pull/6317#issuecomment-672277149

In the DagRun model list view there is an option to search by run_id. Since we set the run_id when the dag is triggered externally, this field can be used when we need to check on progress for a specific case. This seems to work alright, but there are some downsides:

- run_id doesn't propagate to subdags, so we basically can't use subdags
- run_id can't be set when triggering the dag from some of the quick buttons in the ui
- with the create dag_run form, the run_id can be set, but the conf cannot

So, I'm also exploring options for moving away from using run_ids, and just adding this info to the run conf. But I think this is going to require updating the DagRun model views, or a custom view plugin.
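The trade-off described in this comment can be illustrated with a small sketch. It uses plain dictionaries as hypothetical stand-ins for DagRun rows; the `case_id` key and the `find_runs_by_conf` helper are assumptions made for illustration and are not part of Airflow. It shows looking up runs by a value stored in the run conf instead of encoding that value in run_id.

```python
from typing import Any, Dict, List

# Hypothetical stand-in for a DagRun row; real rows live in the Airflow metadata DB.
DagRunRow = Dict[str, Any]


def find_runs_by_conf(runs: List[DagRunRow], key: str, value: Any) -> List[DagRunRow]:
    """Return the runs whose conf dict carries key == value."""
    return [run for run in runs if (run.get("conf") or {}).get(key) == value]


runs = [
    # Triggered externally: the tracking info was encoded in run_id.
    {"dag_id": "parent", "run_id": "case-42", "conf": None},
    # Triggered with a conf: run_id no longer has to carry the tracking info.
    {"dag_id": "parent", "run_id": "manual__2020-08-11", "conf": {"case_id": 42}},
    {"dag_id": "parent", "run_id": "manual__2020-08-12", "conf": {"case_id": 7}},
]

matches = find_runs_by_conf(runs, "case_id", 42)
print([run["run_id"] for run in matches])
```

A filter like this only helps if the view exposes conf for searching, which is the gap the comment points out.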

[jira] [Commented] (AIRFLOW-5644) Simplify TriggerDagRunOperator usage

[ https://issues.apache.org/jira/browse/AIRFLOW-5644?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17175840#comment-17175840 ]

ASF GitHub Bot commented on AIRFLOW-5644:

-

ryanrichholt commented on pull request #6317:

URL: https://github.com/apache/airflow/pull/6317#issuecomment-672277149

In the DagRun model list view there is an option to search by run_id. Since we set the run_id when the dag is triggered externally, this field can be used when we need to check on progress for a specific case. This seems to work alright, but there are some downsides:

- run_id doesn't propagate to subdags, so we basically can't use subdags
- run_id can't be set when triggering the dag from some of the quick buttons in the ui
- with the create dag_run form, the run_id can be set, but the conf cannot

So, I'm also exploring options for moving away from using run_ids, and just adding this info to the run conf. But I think this is going to require updating the DagRun model views, or a custom view plugin.

> Simplify TriggerDagRunOperator usage

>

> Key: AIRFLOW-5644

> URL: https://issues.apache.org/jira/browse/AIRFLOW-5644

> Project: Apache Airflow

> Issue Type: Improvement

> Components: operators

>Affects Versions: 2.0.0

>Reporter: Bas Harenslak

>Priority: Major

> Fix For: 2.0.0

>

> The TriggerDagRunOperator usage is rather odd at the moment, especially the way to pass a conf.

-- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] maganaluis commented on pull request #8256: updated _write_args on PythonVirtualenvOperator

maganaluis commented on pull request #8256: URL: https://github.com/apache/airflow/pull/8256#issuecomment-672291543 @feluelle Sorry for the late response, should be up to date now.

[GitHub] [airflow] darwinyip commented on issue #10275: Trigger rule for "always"

darwinyip commented on issue #10275: URL: https://github.com/apache/airflow/issues/10275#issuecomment-672091385 That's true. Closing issue.

[GitHub] [airflow] mik-laj commented on issue #8388: Task instance details page blows up when no dagrun

mik-laj commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672213162 Is the issue discussed in this ticket still present in this version?

[jira] [Commented] (AIRFLOW-3964) Consolidate and de-duplicate sensor tasks

[ https://issues.apache.org/jira/browse/AIRFLOW-3964?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17175710#comment-17175710 ]

ASF GitHub Bot commented on AIRFLOW-3964:

-

YingboWang commented on a change in pull request #5499:

URL: https://github.com/apache/airflow/pull/5499#discussion_r468732624

## File path: airflow/models/taskinstance.py ##

@@ -280,7 +281,8 @@ def try_number(self):
         database, in all other cases this will be incremented.
         """
         # This is designed so that task logs end up in the right file.
-        if self.state == State.RUNNING:
+        # TODO: whether we need sensing here or not (in sensor and task_instance state machine)
+        if self.state in State.running():

Review comment:

"sensing" state means this TI is currently being poked by smart sensor.

> Consolidate and de-duplicate sensor tasks

> --

>

> Key: AIRFLOW-3964

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3964

> Project: Apache Airflow

> Issue Type: Improvement

> Components: dependencies, operators, scheduler

>Affects Versions: 1.10.0

>Reporter: Yingbo Wang

>Assignee: Yingbo Wang

>Priority: Critical

>

> h2. Problem

> h3. Airflow Sensor:

> Sensors are a certain type of operator that will keep running until a certain

> criterion is met. Examples include a specific file landing in HDFS or S3, a

> partition appearing in Hive, or a specific time of the day. Sensors are

> derived from BaseSensorOperator and run a poke method at a specified

> poke_interval until it returns True.

> Sensor duplication is a common problem for large-scale Airflow projects. Duplicated partitions need to be detected from the same or different DAGs. At Airbnb there are 88 boxes running four different types of sensors every day. The number of running sensor tasks ranges from 8k to 16k, which takes a great amount of resources. Although the Airflow team has redirected all sensors to a specific queue to allocate relatively minor resources, there is still large room to reduce the number of workers and relieve DB pressure by optimizing the sensor mechanism.

> The existing sensor implementation creates an identical task for any sensor task with a specific dag_id, task_id and execution_date. This task is responsible for repeatedly querying the DB until the specified partitions exist. Even if two tasks are waiting for the same partition in the DB, they create two connections with the DB and check the status in two separate processes. On one hand, the DB needs to run duplicate jobs in multiple processes, which takes both CPU and memory resources. At the same time, Airflow needs to maintain a process for each sensor to query and wait for the partition/table to be created.

> h1. *Design*

> There are several issues that need to be resolved for our smart sensor.

> h2. Persist sensor info in DB and avoid file parsing before running

> The current Airflow implementation needs to parse the DAG Python file before running a task. Parsing multiple Python files in a smart sensor is inefficient and overloads it. Since sensor tasks need relatively “lightweight” execution information (fewer properties with simple structure, mostly built-in types instead of functions or objects), we propose to skip the parsing for the smart sensor. The easiest way is to persist all task instance information in the Airflow metaDB.

> h3. Solution:

> It will be hard to dump the whole task instance object dictionary, and we do not really need that much information.

> We add two sets to the base sensor class: “persist_fields” and “execute_fields”.

> h4. “persist_fields” dump to airflow.task_instance column “attr_dict”

> saves the attribute names that should be used to accomplish a sensor poking job. For example:

> # the “NamedHivePartitionSensor” defines its persist_fields as ('partition_names', 'metastore_conn_id', 'hook'), since these properties are enough for its poking function.

> # the HivePartitionSensor is slightly different and uses persist_fields ('schema', 'table', 'partition', 'metastore_conn_id').

> If two tasks have the same property value for every field in persist_fields, these two tasks are poking the same item and they are holding duplicate poking jobs in the sensor.

> *The persist_fields can help us deduplicate sensor tasks*. In a broader way. If we can list
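The de-duplication idea in the description above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the smart sensor implementation: tasks are plain dictionaries, the sample field values are made up, and `poke_key` and `group_duplicates` are hypothetical helper names. Only the `persist_fields` tuple for NamedHivePartitionSensor is taken from the text.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

# Field names quoted in the description for NamedHivePartitionSensor.
PERSIST_FIELDS = ("partition_names", "metastore_conn_id", "hook")


def _freeze(value: Any) -> Any:
    """Turn lists into tuples so the value can be used inside a dict key."""
    return tuple(value) if isinstance(value, list) else value


def poke_key(task: Dict[str, Any], persist_fields: Tuple[str, ...]) -> Tuple[Any, ...]:
    """Build a hashable key from the values of the persisted fields."""
    return tuple(_freeze(task[field]) for field in persist_fields)


def group_duplicates(
    tasks: List[Dict[str, Any]], persist_fields: Tuple[str, ...]
) -> Dict[Tuple[Any, ...], List[str]]:
    """Group task ids whose persisted field values are all equal."""
    groups: Dict[Tuple[Any, ...], List[str]] = defaultdict(list)
    for task in tasks:
        groups[poke_key(task, persist_fields)].append(task["task_id"])
    return dict(groups)


# Made-up sample tasks: the first two wait on the same partition.
tasks = [
    {"task_id": "dag_a.wait", "partition_names": ["db.tbl/ds=2020-08-11"],
     "metastore_conn_id": "metastore_default", "hook": "hive"},
    {"task_id": "dag_b.wait", "partition_names": ["db.tbl/ds=2020-08-11"],
     "metastore_conn_id": "metastore_default", "hook": "hive"},
    {"task_id": "dag_c.wait", "partition_names": ["db.other/ds=2020-08-11"],
     "metastore_conn_id": "metastore_default", "hook": "hive"},
]

for key, task_ids in group_duplicates(tasks, PERSIST_FIELDS).items():
    print(len(task_ids), task_ids)
```

Each group would need only one poke, whose result could then be shared by every task id in the group.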

[GitHub] [airflow] YingboWang commented on a change in pull request #5499: [AIRFLOW-3964][AIP-17] Build smart sensor

YingboWang commented on a change in pull request #5499:

URL: https://github.com/apache/airflow/pull/5499#discussion_r468732624

## File path: airflow/models/taskinstance.py ##

@@ -280,7 +281,8 @@ def try_number(self):
         database, in all other cases this will be incremented.
         """
         # This is designed so that task logs end up in the right file.
-        if self.state == State.RUNNING:
+        # TODO: whether we need sensing here or not (in sensor and task_instance state machine)
+        if self.state in State.running():

Review comment:

"sensing" state means this TI is currently being poked by smart sensor.
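To make the effect of the changed condition concrete, here is a minimal sketch using toy stand-ins for `State` and `TaskInstance`. The set returned by `running()` is an assumption (the diff does not show it), and the `+ 1` branch is inferred from the docstring fragment "in all other cases this will be incremented".

```python
class State:
    """Toy stand-in for Airflow's State class (not the real one)."""

    RUNNING = "running"
    SENSING = "sensing"  # per the review comment: the TI is being poked by the smart sensor
    SUCCESS = "success"

    @classmethod
    def running(cls):
        # Assumption: both states count as "in progress" for try_number purposes.
        return frozenset({cls.RUNNING, cls.SENSING})


class TaskInstance:
    """Minimal model of the try_number property touched by the diff."""

    def __init__(self, state, try_number=0):
        self.state = state
        self._try_number = try_number

    @property
    def try_number(self):
        # While the task is in progress, report the stored value so that
        # task logs end up in the right file; otherwise report the next attempt.
        if self.state in State.running():
            return self._try_number
        return self._try_number + 1


for state in (State.RUNNING, State.SENSING, State.SUCCESS):
    print(state, TaskInstance(state, try_number=1).try_number)
```

With the old equality check, a task in the "sensing" state would have fallen into the incrementing branch.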

[GitHub] [airflow] kaxil commented on pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on pull request #10280:

URL: https://github.com/apache/airflow/pull/10280#issuecomment-672117470

> > @potiuk Do we need to bump the EPOCH number so that the CI uses the updated Dockerfile?

> Absolutely not. It's only needed in case stuff is removed.

Any ideas on how we fix the failing test: https://github.com/apache/airflow/pull/10280/checks?check_run_id=969045276#step:5:6023

```
THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE. If you have updated the package versions, please update the hashes. Otherwise, examine the package contents carefully; someone may have tampered with them.
```
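For context on the error above: in hash-checking mode, pip compares the digest of each downloaded archive with the `sha256:...` values pinned in the requirements file. The sketch below only illustrates that comparison; the helper names and the sample bytes are hypothetical, and it does not diagnose why this particular CI run failed.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def matches_pinned_hash(data: bytes, pinned: str) -> bool:
    """Check data against a pinned value of the form 'sha256:<hex digest>'."""
    algorithm, _, expected = pinned.partition(":")
    if algorithm != "sha256":
        raise ValueError("only sha256 is handled in this sketch")
    return sha256_of(data) == expected.lower()


# Hypothetical archive contents standing in for a downloaded package.
archive = b"example package contents"
pinned = "sha256:" + sha256_of(archive)

print(matches_pinned_hash(archive, pinned))
print(matches_pinned_hash(archive + b" (changed)", pinned))
```

Any change to the archive bytes, including a legitimately rebuilt package, produces a different digest, so the pinned hashes have to be refreshed whenever the pinned artifacts change.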

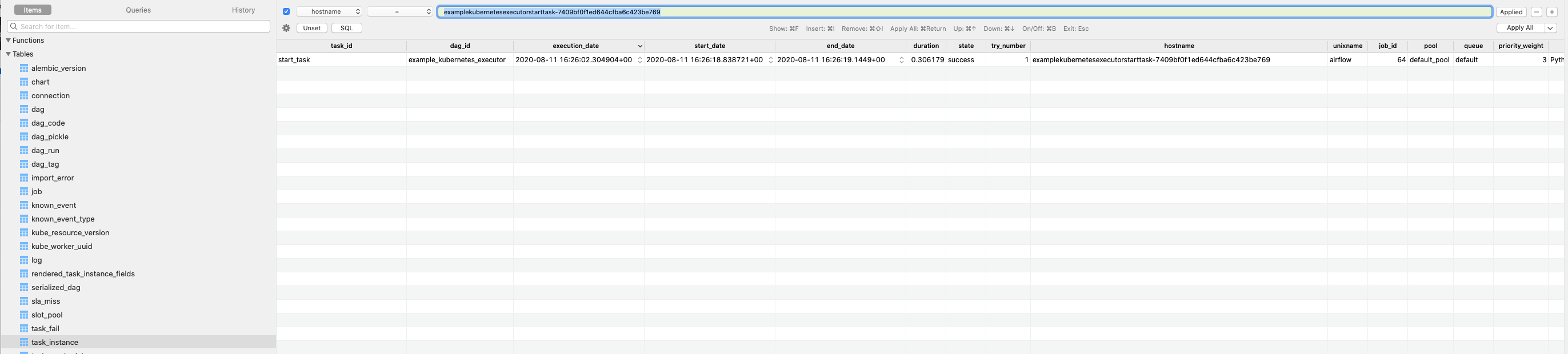

[GitHub] [airflow] Labbs opened a new issue #10292: Kubernetes Executor - invalid hostname in the database

Labbs opened a new issue #10292:

URL: https://github.com/apache/airflow/issues/10292

**Apache Airflow version**: 1.10.11

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`):

```

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.1",

GitCommit:"7879fc12a63337efff607952a323df90cdc7a335", GitTreeState:"clean",

BuildDate:"2020-04-10T21:53:51Z", GoVersion:"go1.14.2", Compiler:"gc",

Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"17+",

GitVersion:"v1.17.6-eks-4e7f64",

GitCommit:"4e7f642f9f4cbb3c39a4fc6ee84fe341a8ade94c", GitTreeState:"clean",

BuildDate:"2020-06-11T13:55:35Z", GoVersion:"go1.13.9", Compiler:"gc",

Platform:"linux/amd64"}

```

**Environment**:

- **Cloud provider or hardware configuration**: AWS

**What happened**:

When I want to retrieve the logs from the GUI, Airflow indicates that it has

not found the pod

```

HTTP response body:

b'{"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"pods

\\"examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769\\" not

found","reason":"NotFound","details":{"name":"examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769","kind":"pods"},"code":404}\n'

```

After checking in Kubernetes and in the database, the hostname stored in the database is not complete.

```

kubectl get po -n airflow-testing | grep examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769

examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769a8c4   0/1   Completed   0   107m

```

It's missing 4 characters in the hostname.

If I update the database with the complete hostname, Airflow retrieves the log without issue.
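A quick way to check the observation above is to compare the two names directly. The sketch below uses only the values quoted in this report. The stored value turns out to be exactly 63 characters long, which is the maximum length of a single DNS label; whether that limit is the actual cause of the truncation is an assumption, not something the report confirms.

```python
# Values copied from the report above.
STORED_HOSTNAME = "examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769"
ACTUAL_POD_NAME = "examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769a8c4"

DNS_LABEL_MAX = 63  # maximum length of a single DNS label


def describe_mismatch(stored: str, actual: str) -> dict:
    """Summarise how the stored hostname differs from the real pod name."""
    is_prefix = actual.startswith(stored)
    return {
        "stored_length": len(stored),
        "actual_length": len(actual),
        "stored_is_prefix": is_prefix,
        "missing_suffix": actual[len(stored):] if is_prefix else None,
    }


info = describe_mismatch(STORED_HOSTNAME, ACTUAL_POD_NAME)
print(info)
print("stored length equals DNS label limit:", info["stored_length"] == DNS_LABEL_MAX)
```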

**What you expected to happen**:

The hostname must be complete in the database so that airflow can retrieve

the logs in kubernetes with the correct pod name.

**How to reproduce it**:

1- configure airflow to use KubernetesExecutor

```

AIRFLOW__KUBERNETES__DELETE_WORKER_PODS=False

AIRFLOW__CORE__EXECUTOR=KubernetesExecutor

```

2- add dag

```

from airflow import DAG
from airflow.operators.python_operator import PythonOperator
from airflow.utils.dates import days_ago

args = {
    "owner": "airflow",
}


def print_stuff():
    print("ok")


dag = DAG(
    dag_id="example_kubernetes_executor",
    default_args=args,
    schedule_interval=None,
    start_date=days_ago(2),
    tags=["example"],
)

start_task = PythonOperator(
    dag=dag,
    task_id="start_task",
    python_callable=print_stuff,
)

one_task = PythonOperator(
    dag=dag,
    task_id="one_task",
    python_callable=print_stuff,
    executor_config={"KubernetesExecutor": {"image": "apache/airflow:latest"}},
)

start_task >> one_task

```

3- trigger execution

4- get logs from UI

Result:

```

*** Trying to get logs (last 100 lines) from worker pod

examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769 ***

*** Unable to fetch logs from worker pod

examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769 ***

(404)

Reason: Not Found

HTTP response headers: HTTPHeaderDict({'Audit-Id':

'28495ca5-86f3-4935-9cef-f825b79cdff6', 'Cache-Control': 'no-cache, private',

'Content-Type': 'application/json', 'Date': 'Tue, 11 Aug 2020 16:27:43 GMT',

'Content-Length': '294'})

HTTP response body:

b'{"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"pods

\\"examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769\\" not

found","reason":"NotFound","details":{"name":"examplekubernetesexecutorstarttask-7409bf0f1ed644cfba6c423be769","kind":"pods"},"code":404}\n'

```

**Anything else we need to know**:

The problem is permanent regardless of the dag used.

[GitHub] [airflow] kaxil commented on a change in pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on a change in pull request #10280: URL: https://github.com/apache/airflow/pull/10280#discussion_r468780072 ## File path: docs/spelling_wordlist.txt ## @@ -0,0 +1,1366 @@

[GitHub] [airflow] kaxil commented on a change in pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on a change in pull request #10280: URL: https://github.com/apache/airflow/pull/10280#discussion_r468779562 ## File path: docs/spelling_wordlist.txt ## @@ -0,0 +1,1366 @@ +Ack +Acyclic +Airbnb +AirflowException +Aizhamal +Alphasort +Analytics +Anand +Ansible +AppBuilder +Arg +Args +Async +Auth +Autoscale +Avro +Azkaban +Azri +Backend +Backends +Backfill +BackfillJobTest +Backfills +Banco +Bas +BaseClient +BaseOperator +BaseView +Beauchemin +Behaviour +Bigquery +Bigtable +Bitshift +Bluecore +Bolke +Bool +Booleans +Boto +BounceX +Boxel +Bq +Breguła +CLoud +CSRFProtect +Cancelled +Cassanda +Catchup +Celect +Cgroups +Changelog +Chao +CheckOperator +Checklicence +Checkr +Cinimex +Cloudant +Cloudwatch +ClusterManagerClient +Codecov +Colour +ComputeNodeState +Computenodes +Config +Configs +Cron +Ctrl +Daemonize +DagFileProcessorManager +DagRun +Dagbag +Dagre +Dask +Databricks +Datadog +Dataflow +Dataframe +Datalake +Datanodes +Dataprep +Dataproc +Dataset +Datasets +Datastore +Datasync +DateFrame +Datetimepicker +Datetimes +Davydov +De +Decrypt +Decrypts +Deserialize +Deserialized +Dingding +Dlp +Dockerfile +Dockerhub +Dockerise +Docstring +Docstrings +Dont +Driesprong +Drivy +Dsn +Dynamodb +EDITMSG +ETag +Eg +EmrAddSteps +EmrCreateJobFlow +Enum +Env +Exasol +Failover +Feng +Fernet +FileSensor +Filebeat +Filepath +Fileshare +Fileshares +Filesystem +Firehose +Firestore +Fokko +Formaturas +Fundera +GCP +GCS +GH +GSoD +Gannt +Gantt +Gao +Gcp +Gentner +GetPartitions +GiB +Github +Gitter +Glassdoor +Gmail +Groupalia +Groupon +Grpc +Gunicorn +Guziel +Gzip +HCatalog +HTTPBasicAuth +Harenslak +Hashable +Hashicorp +Highcharts +Hitesh +Hiveserver +Hoc +Homan +Hostname +Hou +Http +HttpError +HttpRequest +IdP +Imap +Imberman +InsecureClient +InspectContentResponse +Investorise +JPype +Jakob +Jarek +Jdbc +Jiajie +Jinja +Jinjafied +Jinjafy +Jira +JobComplete +JobExists +JobRunning +Json +Jupyter +KYLIN +Kalibrr +Kamil +Kaxil +Kengo +Kerberized +Kerberos +KerberosClient 
+KevinYang +KeyManagementServiceClient +Keyfile +Kibana +Kinesis +Kombu +Kube +Kubernetes +Kusto +Kwargs +Kylin +LaunchTemplateParameters +Letsbonus +Lifecycle +LineItem +ListGenerator +Logstash +Lowin +Lyft +Maheshwari +Makefile +Mapreduce +Masternode +Maxime +Memorystore +Mesos +MessageAttributes +Metastore +Metatsore +Mixin +Mongo +Moto +Mysql +NFS +NaN +Naik +Namenode +Namespace +Nextdoor +Nones +NotFound +Nullable +Nurmamat +OAuth +Oauth +Oauthlib +Okta +Oozie +Opsgenie +Optimise +PEM +PTarget +Pagerduty +Papermill +Parallelize +Parameterizing +Paramiko +Params +Pem +Pinot +Popen +Postgres +Postgresql +Potiuk +Pre +Precommit +Preprocessed +Proc +Protobuf +PublisherClient +Pubsub +Py +PyPI +Pylint +Pyspark +PythonOperator +Qingping +Qplum +Quantopian +Qubole +Quboles +Quoble +RBAC +Readme +Realtime +Rebasing +Rebrand +Reddit +Redhat +Reinitialising +Retrives +Riccomini +Roadmap +Robinhood +SIGTERM +SSHClient +SSHTunnelForwarder +SaaS +Sagemaker +Sasl +SecretManagerClient +Seedlist +Seki +Sendgrid +Siddharth +SlackHook +SparkPi +SparkR +SparkSQL +Sql +Sqlalchemy +Sqlite +Sqoop +Stackdriver +Standarization +StatsD +Statsd +Stringified +Subclasses +Subdirectory +Submodules +Subpackages +Subpath +SubscriberClient +Subtasks +Sumit +Swtch +Systemd +TCP +TLS +TTY +TThe +TZ +TaskInstance +Taskfail +Templated +Templating +Teradata +TextToSpeechClient +Tez +Thinknear +ToC +Tomasz +Tooltip +Tsai +UA +UNload +Uellendall +Umask +Un +Undead +Undeads +Unpausing +Unpickle +Upsert +Upsight +Uptime +Urbaszek +Url +Utils +Vendorize +Vertica +Vevo +WTF +WaiterModel +Wasb +WebClient +Webhook +Webserver +Werkzeug +Wiedmer +XCom +XComs +Xcom +Xero +Xiaodong +Yamllint +Yandex +Yieldr +Zego +Zendesk +Zhong +Zsh +Zymergen +aIRFLOW +abc +accessor +accountmaking +acessible +ack +ackIds +acknowledgement +actionCard +acurate +acyclic +adhoc +aijamalnk +airbnb +airfl +airflowignore +ajax +alertPolicies +alexvanboxel +allAuthenticatedUsers +allUsers +allowinsert +analyse +analytics 
+analyticsreporting +analyzeEntities +analyzeSentiment +analyzeSyntax +aoen +apache +api +apikey +apis +appbuilder +approle +arg +args +arn +arraysize +artwr +asc +ascii +asciiart +asia +assertEqualIgnoreMultipleSpaces +ast +async +athena +attemping +attr +attrs +auth +authMechanism +authenication +authenticator +authorised +autoclass +autocommit +autocomplete +autodetect +autodetected +autoenv +autogenerated +autorestart +autoscale +autoscaling +avro +aws +awsbatch +backend +backends +backfill +backfilled +backfilling +backfills +backoff +backport +backreference +backtick +backticks +balancer +baseOperator +basedn +basestring +basetaskrunner +bashrc +basph +batchGet +bc +bcc +beeen +behaviour +behaviours +behavoiur +bicket +bigquery +bigtable +bitshift +bolkedebruin +booktabs +boolean +booleans +bootDiskType +boto +botocore +bowser Review comment: This actually is a typo. It is used in `privacy_notice.rst` -> It should be `browser`

[GitHub] [airflow] boring-cyborg[bot] commented on issue #10292: Kubernetes Executor - invalid hostname in the database

boring-cyborg[bot] commented on issue #10292: URL: https://github.com/apache/airflow/issues/10292#issuecomment-672167998 Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] houqp commented on a change in pull request #10267: Add Authentication for Stable API

houqp commented on a change in pull request #10267: URL: https://github.com/apache/airflow/pull/10267#discussion_r468873335 ## File path: airflow/api_connexion/endpoints/dag_run_endpoint.py ## @@ -151,6 +156,7 @@ def get_dag_runs_batch(session): total_entries=total_entries)) +@security.requires_authentication Review comment: if no auth is the exception here (i.e. only /health and /version), wouldn't it be safer to make auth required the default, then turn off auth specifically for those two endpoints?
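The suggestion in the review comment above (require authentication by default and opt out only for a few endpoints) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Airflow's or connexion's actual API: `public`, `dispatch`, `PUBLIC_ENDPOINTS`, `Unauthorized`, and the dict-shaped `request` are all hypothetical names invented for the example.

```python
class Unauthorized(Exception):
    """Raised when an unauthenticated request reaches a protected endpoint."""


# Hypothetical registry of endpoints that explicitly opt out of authentication.
PUBLIC_ENDPOINTS = set()


def public(func):
    """Mark an endpoint as public; everything else stays protected by default."""
    PUBLIC_ENDPOINTS.add(func.__name__)
    return func


def dispatch(func, request):
    """Call an endpoint, enforcing authentication unless it opted out."""
    if func.__name__ not in PUBLIC_ENDPOINTS and not request.get("user"):
        raise Unauthorized(func.__name__)
    return func(request)


@public
def get_health(request):
    return {"status": "healthy"}


# No decorator needed: forgetting to annotate an endpoint leaves it protected.
def get_dag_runs_batch(request):
    return {"dag_runs": []}
```

With this shape, a newly added endpoint that nobody remembered to annotate fails closed rather than open, which is the safety property the comment is asking about.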

[GitHub] [airflow] kaxil commented on pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on pull request #10280: URL: https://github.com/apache/airflow/pull/10280#issuecomment-672130815 Got it, I restarted the tests again

[GitHub] [airflow] mik-laj commented on issue #8388: Task instance details page blows up when no dagrun

mik-laj commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672155750 This fix may not have been officially released. Can you check to see if the problem is in the master version?

[GitHub] [airflow] nadflinn opened a new issue #8388: Task instance details page blows up when no dagrun

nadflinn opened a new issue #8388:
URL: https://github.com/apache/airflow/issues/8388

**Apache Airflow version**: 1.10.9 although I think it would apply to 1.10.10, 1.10.8, 1.10.7, and 1.10.6

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`):

**Environment**:

- **Cloud provider or hardware configuration**: aws
- **OS** (e.g. from /etc/os-release): Ubuntu 18.04.3 LTS
- **Kernel** (e.g. `uname -a`): `Linux 567ff872fc2d 3.13.0-170-generic #220-Ubuntu SMP Thu May 9 12:40:49 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux`
- **Install tools**:
- **Others**:

**What happened**:

If you have a DAG that has not been turned on before and you click on that DAG in the admin page and then in the DAG graph click on one of the tasks and then click on `Task Instance Details` you get the "Ooops" explosion error page. The error is:

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.7/dist-packages/flask/app.py", line 2447, in wsgi_app
    response = self.full_dispatch_request()
  File "/usr/local/lib/python3.7/dist-packages/flask/app.py", line 1952, in full_dispatch_request
    rv = self.handle_user_exception(e)
  File "/usr/local/lib/python3.7/dist-packages/flask/app.py", line 1821, in handle_user_exception
    reraise(exc_type, exc_value, tb)
  File "/usr/local/lib/python3.7/dist-packages/flask/_compat.py", line 39, in reraise
    raise value
  File "/usr/local/lib/python3.7/dist-packages/flask/app.py", line 1950, in full_dispatch_request
    rv = self.dispatch_request()
  File "/usr/local/lib/python3.7/dist-packages/flask/app.py", line 1936, in dispatch_request
    return self.view_functions[rule.endpoint](**req.view_args)
  File "/usr/local/lib/python3.7/dist-packages/flask_admin/base.py", line 69, in inner
    return self._run_view(f, *args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/flask_admin/base.py", line 368, in _run_view
    return fn(self, *args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/flask_login/utils.py", line 258, in decorated_view
    return func(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/airflow/www/utils.py", line 290, in wrapper
    return f(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/airflow/www/views.py", line 1041, in task
    dep_context=dep_context)]
  File "/usr/local/lib/python3.7/dist-packages/airflow/www/views.py", line 1039, in
    failed_dep_reasons = [(dep.dep_name, dep.reason) for dep in
  File "/usr/local/lib/python3.7/dist-packages/airflow/models/taskinstance.py", line 668, in get_failed_dep_statuses
    dep_context):
  File "/usr/local/lib/python3.7/dist-packages/airflow/ti_deps/deps/base_ti_dep.py", line 106, in get_dep_statuses
    for dep_status in self._get_dep_statuses(ti, session, dep_context):
  File "/usr/local/lib/python3.7/dist-packages/airflow/ti_deps/deps/dagrun_id_dep.py", line 51, in _get_dep_statuses
    if not dagrun.run_id or not match(BackfillJob.ID_PREFIX + '.*', dagrun.run_id):
AttributeError: 'NoneType' object has no attribute 'run_id'
```

**What you expected to happen**:

No error page. It should have loaded the standard task instance details page.

I believe the reason that this is happening is because there is no check to see if a dagrun exists in `DagrunIdDep`:
https://github.com/apache/airflow/blob/master/airflow/ti_deps/deps/dagrun_id_dep.py#L50

This is a bit of an edge case because there isn't really any reason someone should be checking out task instance details for a DAG that has never been run. That said we should probably avoid the error page.

**How to reproduce it**:

If you have a DAG that has not been turned on before and you click on that DAG in the admin page and then in the DAG graph click on one of the tasks and then click on `Task Instance Details` you get the "Ooops" explosion error page.

**Anything else we need to know**:
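The failure reported above comes from reading `dagrun.run_id` when no DagRun exists. The following is a minimal sketch of one possible guard, not the fix that was actually merged: `dagrun_id_dep_passes` and `BACKFILL_ID_PREFIX` are hypothetical names standing in for the check in `dagrun_id_dep.py` and for `BackfillJob.ID_PREFIX` (whose value is assumed here), and treating a missing DagRun as "passing" is itself an assumption.

```python
import re
from types import SimpleNamespace

# Assumed stand-in for BackfillJob.ID_PREFIX; the real value is not shown in the issue.
BACKFILL_ID_PREFIX = "backfill_"


def dagrun_id_dep_passes(dagrun):
    """Return True when the run is not a backfill run (the dependency passes)."""
    # Guard first: a DAG that has never been turned on has no DagRun at all,
    # so there is no run_id to inspect. This sketch treats that case as passing.
    if dagrun is None:
        return True
    # Same condition as the line in the traceback, now only reached with a real DagRun.
    return not dagrun.run_id or not re.match(BACKFILL_ID_PREFIX + ".*", dagrun.run_id)


# Usage: SimpleNamespace stands in for a DagRun row.
never_run = dagrun_id_dep_passes(None)
scheduled = dagrun_id_dep_passes(SimpleNamespace(run_id="scheduled__2020-01-01"))
backfill = dagrun_id_dep_passes(SimpleNamespace(run_id="backfill_2020-01-01"))
```

Whether a missing DagRun should count as passing or failing is a design decision for the maintainers; the point of the sketch is only that the `None` case is handled before any attribute access.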

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672214778 I'm sorry, I can't tell. I don't seem to have a fully functional Airflow installation. The console (CLI) keeps telling me: ``` Please make sure to build the frontend in static/ directory and restart the server ``` but I don't know how to do that. And the example bash operator disappeared from the UI after I did the "yarn prod run".

[GitHub] [airflow] potiuk commented on pull request #10280: Enable Sphinx spellcheck for docs generation

potiuk commented on pull request #10280: URL: https://github.com/apache/airflow/pull/10280#issuecomment-672125831 I re-ran it. It looks like a transient error. Since we are modifying the dependencies pretty early in the Dockerfile, the image gets rebuilt from the point where it is modified, and sometimes there is a problem when downloading the packages: the checksum does not match because of some transient 404 Not Found error (usually this happens when apt-get gets an error during download but this error is somehow swallowed by a firewall or something). And since we are rebuilding the image in all steps that need it, this might happen rather frequently. You will notice that in this PR each "Build CI image" step takes much longer than the usual 3-4 minutes (it might be 12 minutes or more because almost the whole image is rebuilt). BTW, this is one of the problems my (soon to be submitted) PR is going to address. We are going to build each image only once, so even if the Dockerfile is modified at the beginning, the image will be built once and that pre-built image will be used by all the subsequent steps.
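The comment above relies on how Docker layer caching behaves: changing an early instruction invalidates every layer after it. Here is a toy model of that behaviour, assuming each layer's cache key depends only on its own instruction text and its parent's key (real Docker also checksums copied files). `layer_keys`, `rebuilt_layers`, and the example instructions are made up for illustration.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key also covers all earlier ones."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(old, new):
    """Count layers of `new` that miss the cache populated by building `old`."""
    cached = set(layer_keys(old))
    return sum(1 for key in layer_keys(new) if key not in cached)


old = [
    "FROM python:3.6",
    "RUN apt-get install -y build-essential",
    "COPY setup.py .",
    "RUN pip install .",
]
# Changing an early instruction (e.g. adding a system package) ...
early_change = [old[0], "RUN apt-get install -y build-essential enchant"] + old[2:]
# ... versus changing only the last one.
late_change = old[:3] + ["RUN pip install -e ."]
```

In this model `rebuilt_layers(old, early_change)` counts three layers while `rebuilt_layers(old, late_change)` counts one, which matches the observation that an early Dockerfile change makes every "Build CI image" step redo almost the whole image.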

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388:
URL: https://github.com/apache/airflow/issues/8388#issuecomment-672150672

Hello,

I just installed Airflow for the first time, following the [Quick Start](https://airflow.apache.org/docs/stable/start.html) guide.

I ran the bash example operator (still following the guide):

```
# run your first task instance
airflow run example_bash_operator runme_0 2015-01-01
```

And then, when I went to see how the run went, I got the error page with the mushroom cloud and the error.

Now, this is on Apache Airflow v1.10.11 (latest stable), which I see was released on July 10, 2020. And this pull request was merged on April 15th. And this issue is closed. But I'm running into the same broken behavior.

Here is my error page:

```
Ooops.
[ASCII-art mushroom cloud]
---
Node: ip-172-20-2-227.us-west-2.compute.internal
---
Traceback (most recent call last):
  File "/usr/local/lib64/python3.7/site-packages/flask/app.py", line 2447, in wsgi_app
    response = self.full_dispatch_request()
  File "/usr/local/lib64/python3.7/site-packages/flask/app.py", line 1952, in full_dispatch_request
    rv = self.handle_user_exception(e)
  File "/usr/local/lib64/python3.7/site-packages/flask/app.py", line 1821, in handle_user_exception
    reraise(exc_type, exc_value, tb)
  File "/usr/local/lib64/python3.7/site-packages/flask/_compat.py", line 39, in reraise
    raise value
  File "/usr/local/lib64/python3.7/site-packages/flask/app.py", line 1950, in full_dispatch_request
    rv = self.dispatch_request()
  File "/usr/local/lib64/python3.7/site-packages/flask/app.py", line 1936, in dispatch_request
    return self.view_functions[rule.endpoint](**req.view_args)
  File "/home/airflow/.local/lib/python3.7/site-packages/flask_admin/base.py", line 69, in inner
    return self._run_view(f, *args, **kwargs)
  File "/home/airflow/.local/lib/python3.7/site-packages/flask_admin/base.py", line 368, in _run_view
    return fn(self, *args, **kwargs)
  File "/usr/local/lib/python3.7/site-packages/flask_login/utils.py", line 258, in decorated_view
    return func(*args, **kwargs)
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/www/utils.py", line 286, in wrapper
    return f(*args, **kwargs)
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/www/views.py", line 1055, in task
    dep_context=dep_context)]
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/www/views.py", line 1053, in
    failed_dep_reasons = [(dep.dep_name, dep.reason) for dep in
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/models/taskinstance.py", line 683, in get_failed_dep_statuses
    dep_context):
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/ti_deps/deps/base_ti_dep.py", line 106, in get_dep_statuses
    for dep_status in self._get_dep_statuses(ti, session, dep_context):
  File "/home/airflow/.local/lib/python3.7/site-packages/airflow/ti_deps/deps/dagrun_id_dep.py", line 51, in _get_dep_statuses
    if not dagrun.run_id or not match(BackfillJob.ID_PREFIX + '.*', dagrun.run_id):
AttributeError: 'NoneType' object has no attribute 'run_id'
```

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672183000 Here is a screenshot, showing there is no toggle switch like there was in 1.10.11

[GitHub] [airflow] atsalolikhin-spokeo edited a comment on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo edited a comment on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672183000 Here is a screenshot, showing there is no toggle switch like there was in 1.10.11

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388:
URL: https://github.com/apache/airflow/issues/8388#issuecomment-672180803

Thank you! I figured it out, actually.

```
Error: Could not locate a Flask application. You did not provide the "FLASK_APP" environment variable, and a "wsgi.py" or "app.py" module was not found in the current directory.
[airflow@ip-172-20-2-227 airflow]$ find . -name wsgi.py
[airflow@ip-172-20-2-227 airflow]$ find . -name app.py
./airflow/www/app.py
[airflow@ip-172-20-2-227 airflow]$ cd airflow/www
[airflow@ip-172-20-2-227 www]$ flask fab create-user
Role [Public]: Admin
Username: admin
User first name: admin
User last name: admin
Email: ad...@example.com
Password:
Repeat for confirmation:
[2020-08-11 18:34:09,566] {dagbag.py:409} INFO - Filling up the DagBag from /home/airflow/airflow/dags
Please make sure to build the frontend in static/ directory and restart the server
[2020-08-11 18:34:09,735] {manager.py:719} WARNING - No user yet created, use flask fab command to do it.
User admin created.
[airflow@ip-172-20-2-227 www]$
```

(I did read the documentation section you linked to, thank you; just after I already created an `admin` user and logged in.)

Don't laugh, but I can't figure out how to unpause the bash example operator. Instead of a sliding toggle switch, there is a checkbox.

I installed airflow by running "pip3 install --user ." in the top directory of the top of master.


[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672201902 I've installed JS using yarn as per the linked instructions. Now Airflow is showing 18 DAGs (before it was 22). The bash example operator DAG is gone.

[airflow] branch constraints-1-10 updated: Updating constraints. GH run id:204255384

This is an automated email from the ASF dual-hosted git repository.

github-bot pushed a commit to branch constraints-1-10
in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/constraints-1-10 by this push:
     new 04c58ae  Updating constraints. GH run id:204255384
04c58ae is described below

commit 04c58ae6ad92be90c8775d4c47f34f84e4428edb
Author: Automated Github Actions commit
AuthorDate: Tue Aug 11 20:21:44 2020 +

    Updating constraints. GH run id:204255384

    This update in constraints is automatically committed by CI pushing
    reference 'refs/heads/v1-10-test' to apache/airflow with commit sha
    a281faa00627ebdbde3c947e2857ae3e60b9273e.

    All tests passed in this build so we determined we can push the updated constraints.

    See https://github.com/apache/airflow/blob/master/README.md#installing-from-pypi for details.
---
 constraints-3.5.txt | 1 +
 constraints-3.6.txt | 2 +-
 constraints-3.8.txt | 2 +-
 3 files changed, 3 insertions(+), 2 deletions(-)

diff --git a/constraints-3.5.txt b/constraints-3.5.txt
index 441cd41..38877ff 100644
--- a/constraints-3.5.txt
+++ b/constraints-3.5.txt
@@ -150,6 +150,7 @@ imagesize==1.2.0
 importlib-metadata==1.7.0
 importlib-resources==3.0.0
 inflection==0.5.0
+iniconfig==1.0.1
 ipdb==0.13.3
 ipython-genutils==0.2.0
 ipython==7.9.0
diff --git a/constraints-3.6.txt b/constraints-3.6.txt
index 78cbf28..49a473f 100644
--- a/constraints-3.6.txt
+++ b/constraints-3.6.txt
@@ -96,7 +96,7 @@ docker-pycreds==0.4.0
 docker==3.7.3
 docopt==0.6.2
 docutils==0.16
-ecdsa==0.15
+ecdsa==0.14.1
 elasticsearch-dsl==5.4.0
 elasticsearch==5.5.3
 email-validator==1.1.1
diff --git a/constraints-3.8.txt b/constraints-3.8.txt
index bff0628..c7d89e5 100644
--- a/constraints-3.8.txt
+++ b/constraints-3.8.txt
@@ -95,7 +95,7 @@ docker-pycreds==0.4.0
 docker==3.7.3
 docopt==0.6.2
 docutils==0.16
-ecdsa==0.14.1
+ecdsa==0.15
 elasticsearch-dsl==5.4.0
 elasticsearch==5.5.3
 email-validator==1.1.1


[GitHub] [airflow] jaketf opened a new issue #10293: Add Dataproc Job Sensor

jaketf opened a new issue #10293: URL: https://github.com/apache/airflow/issues/10293 **Description** The Dataproc hook now has separate methods for [submitting](https://github.com/apache/airflow/blob/master/airflow/providers/google/cloud/hooks/dataproc.py#L778) and [waiting for job completion](https://github.com/apache/airflow/blob/master/airflow/providers/google/cloud/hooks/dataproc.py#L705). We should improve this further by adding a method to simply poke the status of the job. Finally, implement a `DataprocJobSensor` so we can use reschedule mode for jobs known to run for a long time. **Use case / motivation** For Dataproc jobs that are known to run for a long time (e.g. > 1 hr), we should not block a slot for the entire duration of the job. **Related Issues** N/A **Other notes** @varundhussa is currently working on this for a customer with this use case.
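The proposal above, a hook method that checks job status once plus a sensor built on it, might look roughly like the sketch below. It is deliberately self-contained and does not import Airflow: `FakeDataprocHook`, its `get_job_state` method, and `DataprocJobSensorSketch` are hypothetical names, and the state strings are assumed to follow the Dataproc job states `RUNNING`, `DONE`, `ERROR`, and `CANCELLED`.

```python
class FakeDataprocHook:
    """Hypothetical stand-in for the Dataproc hook; replays canned job states."""

    def __init__(self, states):
        self._states = iter(states)

    def get_job_state(self, job_id):
        # Hypothetical "poke the status" method: one cheap status lookup, no waiting.
        return next(self._states)


class DataprocJobSensorSketch:
    """Sketch of a sensor: poke() returns True once the job has finished."""

    FAILED_STATES = ("ERROR", "CANCELLED")

    def __init__(self, hook, job_id):
        self.hook = hook
        self.job_id = job_id

    def poke(self):
        state = self.hook.get_job_state(self.job_id)
        if state in self.FAILED_STATES:
            raise RuntimeError("Job {} ended in state {}".format(self.job_id, state))
        # Returning False means "not done yet"; in reschedule mode the worker
        # slot would be released until the next poke instead of being blocked.
        return state == "DONE"


sensor = DataprocJobSensorSketch(FakeDataprocHook(["RUNNING", "DONE"]), "job-1")
first_poke = sensor.poke()
second_poke = sensor.poke()
```

Because each `poke()` is a single non-blocking check, a scheduler can call it at intervals rather than holding a slot for the job's whole duration, which is the motivation stated in the issue.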

[jira] [Commented] (AIRFLOW-5071) Thousand os Executor reports task instance X finished (success) although the task says its queued. Was the task killed externally?

[ https://issues.apache.org/jira/browse/AIRFLOW-5071?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17175866#comment-17175866 ]

Nikolay commented on AIRFLOW-5071:
--

I was observing this issue when multiple parallel instances of DAGs were running. I'm using KubernetesExecutor. Also, it was not only sensors that were failing (PythonOperator tasks, for example, failed too).
I decreased the max_active_runs property and increased min_file_process_interval, and after that I haven't seen this issue for a while.

> Thousand os Executor reports task instance X finished (success) although the task says its queued. Was the task killed externally?
> --
>
> Key: AIRFLOW-5071
> URL: https://issues.apache.org/jira/browse/AIRFLOW-5071
> Project: Apache Airflow
> Issue Type: Bug
> Components: DAG, scheduler
> Affects Versions: 1.10.3
> Reporter: msempere
> Priority: Critical
> Fix For: 1.10.12
>
> Attachments: image-2020-01-27-18-10-29-124.png, image-2020-07-08-07-58-42-972.png
>
>
> I'm opening this issue because since I updated to 1.10.3 I'm seeing thousands of daily messages like the following in the logs:
>
> ```
> {{__init__.py:1580}} ERROR - Executor reports task instance 2019-07-29 00:00:00+00:00 [queued]> finished (success) although the task says its queued. Was the task killed externally?
> {{jobs.py:1484}} ERROR - Executor reports task instance 2019-07-29 00:00:00+00:00 [queued]> finished (success) although the task says its queued. Was the task killed externally?
> ```
> -And looks like this is triggering also thousands of daily emails because the flag to send email in case of failure is set to True.-
> I have Airflow setup to use Celery and Redis as a backend queue service.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[GitHub] [airflow] darwinyip closed issue #10275: Trigger rule for "always"

darwinyip closed issue #10275: URL: https://github.com/apache/airflow/issues/10275

[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672152435 I don't have the rights to re-open this issue. Is it enough for me to comment on it here?

[GitHub] [airflow] kaxil commented on a change in pull request #10280: Enable Sphinx spellcheck for docs generation

kaxil commented on a change in pull request #10280: URL: https://github.com/apache/airflow/pull/10280#discussion_r468781032 ## File path: docs/spelling_wordlist.txt ## @@ -0,0 +1,1366 @@ +Ack +Acyclic +Airbnb +AirflowException +Aizhamal +Alphasort +Analytics +Anand +Ansible +AppBuilder +Arg +Args +Async +Auth +Autoscale +Avro +Azkaban +Azri +Backend +Backends +Backfill +BackfillJobTest +Backfills +Banco +Bas +BaseClient +BaseOperator +BaseView +Beauchemin +Behaviour +Bigquery +Bigtable +Bitshift +Bluecore +Bolke +Bool +Booleans +Boto +BounceX +Boxel +Bq +Breguła +CLoud +CSRFProtect +Cancelled +Cassanda +Catchup +Celect +Cgroups +Changelog +Chao +CheckOperator +Checklicence +Checkr +Cinimex +Cloudant +Cloudwatch +ClusterManagerClient +Codecov +Colour +ComputeNodeState +Computenodes +Config +Configs +Cron +Ctrl +Daemonize +DagFileProcessorManager +DagRun +Dagbag +Dagre +Dask +Databricks +Datadog +Dataflow +Dataframe +Datalake +Datanodes +Dataprep +Dataproc +Dataset +Datasets +Datastore +Datasync +DateFrame +Datetimepicker +Datetimes +Davydov +De +Decrypt +Decrypts +Deserialize +Deserialized +Dingding +Dlp +Dockerfile +Dockerhub +Dockerise +Docstring +Docstrings +Dont +Driesprong +Drivy +Dsn +Dynamodb +EDITMSG +ETag +Eg +EmrAddSteps +EmrCreateJobFlow +Enum +Env +Exasol +Failover +Feng +Fernet +FileSensor +Filebeat +Filepath +Fileshare +Fileshares +Filesystem +Firehose +Firestore +Fokko +Formaturas +Fundera +GCP +GCS +GH +GSoD +Gannt +Gantt +Gao +Gcp +Gentner +GetPartitions +GiB +Github +Gitter +Glassdoor +Gmail +Groupalia +Groupon +Grpc +Gunicorn +Guziel +Gzip +HCatalog +HTTPBasicAuth +Harenslak +Hashable +Hashicorp +Highcharts +Hitesh +Hiveserver +Hoc +Homan +Hostname +Hou +Http +HttpError +HttpRequest +IdP +Imap +Imberman +InsecureClient +InspectContentResponse +Investorise +JPype +Jakob +Jarek +Jdbc +Jiajie +Jinja +Jinjafied +Jinjafy +Jira +JobComplete +JobExists +JobRunning +Json +Jupyter +KYLIN +Kalibrr +Kamil +Kaxil +Kengo +Kerberized +Kerberos +KerberosClient 
+KevinYang +KeyManagementServiceClient +Keyfile +Kibana +Kinesis +Kombu +Kube +Kubernetes +Kusto +Kwargs +Kylin +LaunchTemplateParameters +Letsbonus +Lifecycle +LineItem +ListGenerator +Logstash +Lowin +Lyft +Maheshwari +Makefile +Mapreduce +Masternode +Maxime +Memorystore +Mesos +MessageAttributes +Metastore +Metatsore +Mixin +Mongo +Moto +Mysql +NFS +NaN +Naik +Namenode +Namespace +Nextdoor +Nones +NotFound +Nullable +Nurmamat +OAuth +Oauth +Oauthlib +Okta +Oozie +Opsgenie +Optimise +PEM +PTarget +Pagerduty +Papermill +Parallelize +Parameterizing +Paramiko +Params +Pem +Pinot +Popen +Postgres +Postgresql +Potiuk +Pre +Precommit +Preprocessed +Proc +Protobuf +PublisherClient +Pubsub +Py +PyPI +Pylint +Pyspark +PythonOperator +Qingping +Qplum +Quantopian +Qubole +Quboles +Quoble +RBAC +Readme +Realtime +Rebasing +Rebrand +Reddit +Redhat +Reinitialising +Retrives +Riccomini +Roadmap +Robinhood +SIGTERM +SSHClient +SSHTunnelForwarder +SaaS +Sagemaker +Sasl +SecretManagerClient +Seedlist +Seki +Sendgrid +Siddharth +SlackHook +SparkPi +SparkR +SparkSQL +Sql +Sqlalchemy +Sqlite +Sqoop +Stackdriver +Standarization +StatsD +Statsd +Stringified +Subclasses +Subdirectory +Submodules +Subpackages +Subpath +SubscriberClient +Subtasks +Sumit +Swtch +Systemd +TCP +TLS +TTY +TThe +TZ +TaskInstance +Taskfail +Templated +Templating +Teradata +TextToSpeechClient +Tez +Thinknear +ToC +Tomasz +Tooltip +Tsai +UA +UNload +Uellendall +Umask +Un +Undead +Undeads +Unpausing +Unpickle +Upsert +Upsight +Uptime +Urbaszek +Url +Utils +Vendorize +Vertica +Vevo +WTF +WaiterModel +Wasb +WebClient +Webhook +Webserver +Werkzeug +Wiedmer +XCom +XComs +Xcom +Xero +Xiaodong +Yamllint +Yandex +Yieldr +Zego +Zendesk +Zhong +Zsh +Zymergen +aIRFLOW +abc +accessor +accountmaking +acessible +ack +ackIds +acknowledgement +actionCard +acurate +acyclic +adhoc +aijamalnk +airbnb +airfl +airflowignore +ajax +alertPolicies +alexvanboxel +allAuthenticatedUsers +allUsers +allowinsert +analyse +analytics 
+analyticsreporting +analyzeEntities +analyzeSentiment +analyzeSyntax +aoen +apache +api +apikey +apis +appbuilder +approle +arg +args +arn +arraysize +artwr +asc +ascii +asciiart +asia +assertEqualIgnoreMultipleSpaces +ast +async +athena +attemping +attr +attrs +auth +authMechanism +authenication +authenticator +authorised +autoclass +autocommit +autocomplete +autodetect +autodetected +autoenv +autogenerated +autorestart +autoscale +autoscaling +avro +aws +awsbatch +backend +backends +backfill +backfilled +backfilling +backfills +backoff +backport +backreference +backtick +backticks +balancer +baseOperator +basedn +basestring +basetaskrunner +bashrc +basph +batchGet +bc +bcc +beeen +behaviour +behaviours +behavoiur +bicket +bigquery +bigtable +bitshift +bolkedebruin +booktabs +boolean +booleans +bootDiskType +boto +botocore +bowser +bq +bugfix +bugfixes +buildType +bytestring +cacert +callables +cancelled +carbonite +cas +cassandra +casted +catchup +cattrs +ccache +celeryd

[GitHub] [airflow] atsalolikhin-spokeo edited a comment on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo edited a comment on issue #8388:

URL: https://github.com/apache/airflow/issues/8388#issuecomment-672169136

Thank you for re-opening this issue. I've installed the master version:

```

[airflow@ip-172-20-2-227 airflow]$ airflow version

2.0.0.dev0

[airflow@ip-172-20-2-227 airflow]$

```

I can't log in through the Web UI, though. It's asking me for a username and

password.

When I started the webserver, it said:

[2020-08-11 18:25:27,417] {manager.py:719} WARNING - No user yet created,

use flask fab command to do it.

I tried, but it didn't work. Help?

```

[airflow@ip-172-20-2-227 airflow]$ flask fab create-user

Role [Public]:

Username: admin

User first name: admin

User last name: admin

Email: ad...@example.com

Password:

Repeat for confirmation:

Usage: flask fab create-user [OPTIONS]

Error: Could not locate a Flask application. You did not provide the

"FLASK_APP" environment variable, and a "wsgi.py" or "app.py" module was not

found in the current directory.

[airflow@ip-172-20-2-227 airflow]$

```


[GitHub] [airflow] kaxil commented on a change in pull request #10227: Use Hash of Serialized DAG to determine DAG is changed or not

kaxil commented on a change in pull request #10227:

URL: https://github.com/apache/airflow/pull/10227#discussion_r468781710

##

File path: airflow/models/serialized_dag.py

##

@@ -102,9 +109,11 @@ def write_dag(cls, dag: DAG, min_update_interval: Optional[int] = None, session:
             return

         log.debug("Checking if DAG (%s) changed", dag.dag_id)
-        serialized_dag_from_db: SerializedDagModel = session.query(cls).get(dag.dag_id)
         new_serialized_dag = cls(dag)
-        if serialized_dag_from_db and (serialized_dag_from_db.data == new_serialized_dag.data):
+        serialized_dag_hash_from_db = session.query(
+            cls.dag_hash).filter(cls.dag_id == dag.dag_id).scalar()
+
+        if serialized_dag_hash_from_db and (serialized_dag_hash_from_db == new_serialized_dag.dag_hash):

Review comment:

Updated
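
The idea behind the change under review, comparing a stored hash instead of the full serialized payload, can be sketched as follows. This is an illustration under stated assumptions, not the code from the pull request: the function names `dag_hash` and `needs_write`, the use of MD5 over key-sorted JSON, and the sample dictionaries are all hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, Optional


def dag_hash(serialized: Dict[str, Any]) -> str:
    """Return a stable hex digest of a JSON-serializable DAG representation."""
    # sort_keys makes the digest independent of dictionary insertion order.
    payload = json.dumps(serialized, sort_keys=True).encode("utf-8")
    return hashlib.md5(payload).hexdigest()


def needs_write(stored_hash: Optional[str], serialized: Dict[str, Any]) -> bool:
    """True when no hash is stored yet or the stored hash differs."""
    return not stored_hash or stored_hash != dag_hash(serialized)


# Hypothetical serialized DAGs used only to exercise the helpers.
dag_v1 = {"dag_id": "example", "tasks": ["a", "b"]}
dag_v1_reordered = {"tasks": ["a", "b"], "dag_id": "example"}
dag_v2 = {"dag_id": "example", "tasks": ["a", "b", "c"]}

stored = dag_hash(dag_v1)
```

With this approach only a short digest has to be fetched from the database to decide whether a row needs rewriting, rather than the whole serialized blob.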


[GitHub] [airflow] atsalolikhin-spokeo commented on issue #8388: Task instance details page blows up when no dagrun

atsalolikhin-spokeo commented on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672194980

Okay. I used `sqlite3` to change the setting in `airflow.db` via CLI as you suggested. Now the UI is showing all 22 DAGs are unpaused.

```sql
update dag set is_paused = '0';
```
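
For readers who prefer to script the same update, here is a rough Python equivalent using the standard-library `sqlite3` module. It runs against an in-memory stand-in rather than a real `airflow.db`: the two-column `dag` table and the sample DAG ids are assumptions, and the real table has many more columns.

```python
import sqlite3

# In-memory stand-in for airflow.db (hypothetical, simplified schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dag (dag_id TEXT PRIMARY KEY, is_paused BOOLEAN)")
conn.executemany(
    "INSERT INTO dag (dag_id, is_paused) VALUES (?, ?)",
    [("example_a", 1), ("example_b", 1), ("example_c", 0)],
)

# Unpause every DAG, mirroring the SQL statement in the comment.
cursor = conn.execute("UPDATE dag SET is_paused = 0")
conn.commit()
updated_rows = cursor.rowcount

# Count DAGs that are still paused after the update.
still_paused = conn.execute(
    "SELECT COUNT(*) FROM dag WHERE is_paused = 1"
).fetchone()[0]
```

Against a real deployment one would connect to the path of the metadata database instead of `":memory:"` and skip the table creation; editing the metadata database by hand is a workaround, not a supported interface.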

[GitHub] [airflow] mik-laj edited a comment on issue #8388: Task instance details page blows up when no dagrun

mik-laj edited a comment on issue #8388: URL: https://github.com/apache/airflow/issues/8388#issuecomment-672186288 You should compile JS files now, but this is a problematic process. https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#installing-yarn-and-its-packages Another solution: can you try to unpause the DAG with CLI and then go directly to the URL?

[GitHub] [airflow] kaxil closed issue #10116: Use Hash / SHA to compare Serialized DAGs

kaxil closed issue #10116: URL: https://github.com/apache/airflow/issues/10116

[GitHub] [airflow] kaxil merged pull request #10227: Use Hash of Serialized DAG to determine DAG is changed or not

kaxil merged pull request #10227: URL: https://github.com/apache/airflow/pull/10227

[airflow] branch master updated: Use Hash of Serialized DAG to determine DAG is changed or not (#10227)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/master by this push:

new adce6f0 Use Hash of Serialized DAG to determine DAG is changed or not (#10227)

adce6f0 is described below

commit adce6f029609e89f3651a89df40700589ec16237

Author: Kaxil Naik

AuthorDate: Tue Aug 11 22:31:55 2020 +0100

Use Hash of Serialized DAG to determine DAG is changed or not (#10227)

closes #10116

---

...c3a5a_add_dag_hash_column_to_serialized_dag_.py | 46 ++

airflow/models/serialized_dag.py | 11 --

tests/models/test_serialized_dag.py| 17

3 files changed, 62 insertions(+), 12 deletions(-)

diff --git a/airflow/migrations/versions/da3f683c3a5a_add_dag_hash_column_to_serialized_dag_.py b/airflow/migrations/versions/da3f683c3a5a_add_dag_hash_column_to_serialized_dag_.py

new file mode 100644

index 000..4dbc77a

--- /dev/null

+++ b/airflow/migrations/versions/da3f683c3a5a_add_dag_hash_column_to_serialized_dag_.py

@@ -0,0 +1,46 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+"""Add dag_hash Column to serialized_dag table

+

+Revision ID: da3f683c3a5a

+Revises: 8d48763f6d53

+Create Date: 2020-08-07 20:52:09.178296

+

+"""

+

+import sqlalchemy as sa

+from alembic import op

+

+# revision identifiers, used by Alembic.

+revision = 'da3f683c3a5a'

+down_revision = '8d48763f6d53'

+branch_labels = None

+depends_on = None

+

+

+def upgrade():

+"""Apply Add dag_hash Column to serialized_dag table"""

+op.add_column(

+'serialized_dag',