This is an automated email from the ASF dual-hosted git repository.

hvanhovell pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new c9878a21295 [MINOR][CONNECT] Adding Proto Debug String to Job Description

c9878a21295 is described below

commit c9878a212958bc54be529ef99f5e5d1ddf513ec8

Author: Martin Grund <martin.gr...@databricks.com>

AuthorDate: Mon Apr 3 22:09:26 2023 -0400

[MINOR][CONNECT] Adding Proto Debug String to Job Description

### What changes were proposed in this pull request?

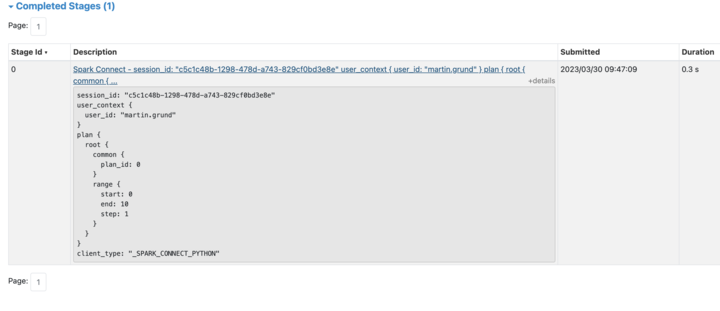

Instead of showing only the Scala call site, show an abbreviated version of the proto message as the job description in the Spark UI.

### Why are the changes needed?

To aid debugging: jobs in the Spark UI can be traced back to the Spark Connect request that triggered them.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manual

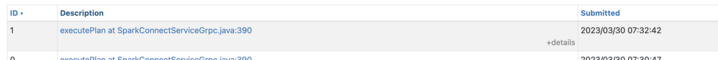

Before:

After:

Closes #40603 from grundprinzip/debug_info.

Authored-by: Martin Grund <martin.gr...@databricks.com>

Signed-off-by: Herman van Hovell <her...@databricks.com>

---

.../spark/sql/connect/service/SparkConnectStreamHandler.scala | 11 +++++++++++

1 file changed, 11 insertions(+)

diff --git a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectStreamHandler.scala b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectStreamHandler.scala

index 74983eeecf3..96e16623222 100644

--- a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectStreamHandler.scala

+++ b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectStreamHandler.scala

@@ -21,6 +21,7 @@ import scala.collection.JavaConverters._

import com.google.protobuf.ByteString

import io.grpc.stub.StreamObserver

+import org.apache.commons.lang3.StringUtils

import org.apache.spark.SparkEnv

import org.apache.spark.connect.proto

@@ -49,6 +50,16 @@ class SparkConnectStreamHandler(responseObserver: StreamObserver[ExecutePlanResp

.getOrCreateIsolatedSession(v.getUserContext.getUserId, v.getSessionId)

.session

session.withActive {

+

+ // Add debug information to the query execution so that the jobs are traceable.

+ val debugString = v.toString

+ session.sparkContext.setLocalProperty(

+ "callSite.short",

+ s"Spark Connect - ${StringUtils.abbreviate(debugString, 128)}")

+ session.sparkContext.setLocalProperty(

+ "callSite.long",

+ StringUtils.abbreviate(debugString, 2048))

+

v.getPlan.getOpTypeCase match {

case proto.Plan.OpTypeCase.COMMAND => handleCommand(session, v)

case proto.Plan.OpTypeCase.ROOT => handlePlan(session, v)
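
For illustration only, the truncation performed in the diff above can be sketched without Spark or commons-lang3 on the classpath. The `abbreviate` helper below is a hypothetical stand-in that approximates `StringUtils.abbreviate` for widths of at least 4 (keep short strings unchanged, otherwise cut to `maxWidth - 3` characters and append `...`). The names `CallSiteSketch` and `callSiteProperties` are invented for this sketch and are not part of the commit.

```scala
object CallSiteSketch {
  // Hypothetical stand-in for org.apache.commons.lang3.StringUtils.abbreviate.
  // Assumes maxWidth >= 4; null handling of the real method is not reproduced.
  def abbreviate(s: String, maxWidth: Int): String = {
    require(maxWidth >= 4, "maxWidth must be at least 4")
    if (s.length <= maxWidth) s else s.take(maxWidth - 3) + "..."
  }

  // Builds the two property values the diff sets, keyed by property name.
  def callSiteProperties(debugString: String): Map[String, String] = Map(
    "callSite.short" -> s"Spark Connect - ${abbreviate(debugString, 128)}",
    "callSite.long" -> abbreviate(debugString, 2048)
  )

  def main(args: Array[String]): Unit = {
    // A long dummy string stands in for the proto message's toString output.
    val props = callSiteProperties("x" * 5000)
    props.foreach { case (key, value) =>
      println(s"$key -> ${value.length} characters")
    }
  }
}
```

In the commit itself, the resulting strings are passed to `session.sparkContext.setLocalProperty`, as shown in the diff; the sketch only reproduces how the values are shortened.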
