spark git commit: [SPARK-25425][SQL] Extra options should override session options in DataSource V2

Repository: spark

Updated Branches:

refs/heads/master bb2f069cf -> e06da95cd

[SPARK-25425][SQL] Extra options should override session options in DataSource

V2

## What changes were proposed in this pull request?

In the PR, I propose overriding session options by extra options in DataSource

V2. Extra options are more specific and set via `.option()`, and should

overwrite more generic session options. Entries from the second map overwrite

entries with the same key from the first map, for example:

```Scala

scala> Map("option" -> false) ++ Map("option" -> true)

res0: scala.collection.immutable.Map[String,Boolean] = Map(option -> true)

```
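The same precedence rule carries over to the three-way merge on the read path (`sessionOptions ++ extraOptions.toMap + pathsOption`). The following is a minimal sketch with plain Scala maps; the object name, option keys, and values are hypothetical and only illustrate the ordering, not the actual Spark code path.

```scala
object OptionPrecedence {
  // Hypothetical option values, for illustration only.
  val sessionOptions: Map[String, String] =
    Map("compression" -> "snappy", "batchSize" -> "1000")
  val extraOptions: Map[String, String] =
    Map("compression" -> "gzip") // as if set via .option()
  val pathsOption: (String, String) = "paths" -> """["/tmp/data"]"""

  // Right-hand operands win on key collisions, so extra options
  // override session options, and the paths entry is added last.
  val merged: Map[String, String] = sessionOptions ++ extraOptions + pathsOption

  def main(args: Array[String]): Unit = {
    println(merged("compression")) // gzip (from extraOptions)
    println(merged("batchSize"))   // 1000 (only in sessionOptions)
  }
}
```

Swapping the operands back to `extraOptions ++ sessionOptions` would make `merged("compression")` evaluate to `snappy`, which is the behavior this commit removes.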

## How was this patch tested?

Added a test for checking which option is propagated to a data source in

`load()`.

Closes #22413 from MaxGekk/session-options.

Lead-authored-by: Maxim Gekk

Co-authored-by: Dongjoon Hyun

Co-authored-by: Maxim Gekk

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/e06da95c

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/e06da95c

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/e06da95c

Branch: refs/heads/master

Commit: e06da95cd9423f55cdb154a2778b0bddf7be984c

Parents: bb2f069

Author: Maxim Gekk

Authored: Sat Sep 15 17:24:11 2018 -0700

Committer: Dongjoon Hyun

Committed: Sat Sep 15 17:24:11 2018 -0700

--

.../org/apache/spark/sql/DataFrameReader.scala | 2 +-

.../org/apache/spark/sql/DataFrameWriter.scala | 8 +++--

.../sql/sources/v2/DataSourceV2Suite.scala | 35 +++-

.../sources/v2/SimpleWritableDataSource.scala | 6 +++-

4 files changed, 45 insertions(+), 6 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/e06da95c/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

index e6c2cba..fe69f25 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

@@ -202,7 +202,7 @@ class DataFrameReader private[sql](sparkSession:

SparkSession) extends Logging {

DataSourceOptions.PATHS_KEY ->

objectMapper.writeValueAsString(paths.toArray)

}

Dataset.ofRows(sparkSession, DataSourceV2Relation.create(

- ds, extraOptions.toMap ++ sessionOptions + pathsOption,

+ ds, sessionOptions ++ extraOptions.toMap + pathsOption,

userSpecifiedSchema = userSpecifiedSchema))

} else {

loadV1Source(paths: _*)

http://git-wip-us.apache.org/repos/asf/spark/blob/e06da95c/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index dfb8c47..188fce7 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -241,10 +241,12 @@ final class DataFrameWriter[T] private[sql](ds:

Dataset[T]) {

val source = cls.newInstance().asInstanceOf[DataSourceV2]

source match {

case provider: BatchWriteSupportProvider =>

- val options = extraOptions ++

- DataSourceV2Utils.extractSessionConfigs(source,

df.sparkSession.sessionState.conf)

+ val sessionOptions = DataSourceV2Utils.extractSessionConfigs(

+source,

+df.sparkSession.sessionState.conf)

+ val options = sessionOptions ++ extraOptions

- val relation = DataSourceV2Relation.create(source, options.toMap)

+ val relation = DataSourceV2Relation.create(source, options)

if (mode == SaveMode.Append) {

runCommand(df.sparkSession, "save") {

AppendData.byName(relation, df.logicalPlan)

http://git-wip-us.apache.org/repos/asf/spark/blob/e06da95c/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

--

diff --git

a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

b/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

index f6c3e0c..7cc8abc 100644

---

a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

+++

b/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

@@ -17,6 +17,8 @@

package org.apache.sp

[2/2] spark git commit: [SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

[SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

## What changes were proposed in this pull request?

This PR aims to fix three things in `FilterPushdownBenchmark`.

**1. Use the same memory assumption.**

The following configurations are used in ORC and Parquet.

- Memory buffer for writing
  - parquet.block.size (default: 128MB)
  - orc.stripe.size (default: 64MB)

- Compression chunk size
  - parquet.page.size (default: 1MB)
  - orc.compress.size (default: 256KB)

SPARK-24692 used 1MB, the default value of `parquet.page.size`, for `parquet.block.size` and `orc.stripe.size`, but it did not set `orc.compress.size` to match. As a result, the current benchmark shows ORC results with a 256KB compression chunk and Parquet results with 1MB. To compare correctly, the settings need to be consistent.

**2. Dictionary encoding should not be enforced for all cases.**

SPARK-24206 enforced dictionary encoding for all test cases. This PR restores the default behavior in general and enforces dictionary encoding only for `prepareStringDictTable`.

**3. Generate test result on AWS r3.xlarge**

SPARK-24206 generated the result on AWS so that it is easy to reproduce and compare. This PR updates the result on the same machine again for the same reason. Specifically, AWS r3.xlarge with Instance Store is used.

## How was this patch tested?

Manual. Enable the test cases and run `FilterPushdownBenchmark` on `AWS r3.xlarge`. It takes about 4 hours 15 minutes.

Closes #22427 from dongjoon-hyun/SPARK-25438.
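As a rough illustration of point 1, the sketch below collects the four writer settings named in the message and pins them all to 1MB so that ORC and Parquet run under the same memory assumption. The object and value names are hypothetical, it assumes the fix brings `orc.compress.size` up to the same 1MB, and it leaves out how the options are handed to the writers; it is not the benchmark's actual code.

```scala
object BenchmarkMemorySettings {
  // 1MB, the default of parquet.page.size according to the commit message.
  val oneMB: Int = 1024 * 1024

  // Hypothetical grouping of the Parquet settings listed in the message.
  val parquetOptions: Map[String, String] = Map(
    "parquet.block.size" -> oneMB.toString, // write buffer (default: 128MB)
    "parquet.page.size" -> oneMB.toString   // compression chunk (default: 1MB)
  )

  // Hypothetical grouping of the ORC settings listed in the message.
  val orcOptions: Map[String, String] = Map(
    "orc.stripe.size" -> oneMB.toString,    // write buffer (default: 64MB)
    "orc.compress.size" -> oneMB.toString   // compression chunk (default: 256KB)
  )

  def main(args: Array[String]): Unit = {
    // Both formats now use the same buffer and chunk sizes.
    assert(parquetOptions.values.toSet == orcOptions.values.toSet)
    (parquetOptions ++ orcOptions).foreach { case (k, v) => println(s"$k=$v") }
  }
}
```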
Authored-by: Dongjoon Hyun
Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo
Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/fefaa3c3
Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/fefaa3c3
Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/fefaa3c3

Branch: refs/heads/master
Commit: fefaa3c30df2c56046370081cb51bfe68d26976b
Parents: e06da95
Author: Dongjoon Hyun
Authored: Sat Sep 15 17:48:39 2018 -0700
Committer: Dongjoon Hyun
Committed: Sat Sep 15 17:48:39 2018 -0700

--
.../FilterPushdownBenchmark-results.txt | 912 +--
.../benchmark/FilterPushdownBenchmark.scala | 11 +-
2 files changed, 428 insertions(+), 495 deletions(-)
--

-
To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org
For additional commands, e-mail: commits-h...@spark.apache.org

[2/2] spark git commit: [SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

[SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

## What changes were proposed in this pull request?

This PR aims to fix three things in `FilterPushdownBenchmark`.

**1. Use the same memory assumption.**

The following configurations are used in ORC and Parquet.

- Memory buffer for writing
  - parquet.block.size (default: 128MB)
  - orc.stripe.size (default: 64MB)

- Compression chunk size
  - parquet.page.size (default: 1MB)
  - orc.compress.size (default: 256KB)

SPARK-24692 used 1MB, the default value of `parquet.page.size`, for `parquet.block.size` and `orc.stripe.size`, but it did not set `orc.compress.size` to match. As a result, the current benchmark shows ORC results with a 256KB compression chunk and Parquet results with 1MB. To compare correctly, the settings need to be consistent.

**2. Dictionary encoding should not be enforced for all cases.**

SPARK-24206 enforced dictionary encoding for all test cases. This PR restores the default behavior in general and enforces dictionary encoding only for `prepareStringDictTable`.

**3. Generate test result on AWS r3.xlarge**

SPARK-24206 generated the result on AWS so that it is easy to reproduce and compare. This PR updates the result on the same machine again for the same reason. Specifically, AWS r3.xlarge with Instance Store is used.

## How was this patch tested?

Manual. Enable the test cases and run `FilterPushdownBenchmark` on `AWS r3.xlarge`. It takes about 4 hours 15 minutes.

Closes #22427 from dongjoon-hyun/SPARK-25438.
Authored-by: Dongjoon Hyun
Signed-off-by: Dongjoon Hyun
(cherry picked from commit fefaa3c30df2c56046370081cb51bfe68d26976b)
Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo
Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/b40e5fee
Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/b40e5fee
Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/b40e5fee

Branch: refs/heads/branch-2.4
Commit: b40e5feec2660891590e21807133a508cbd004d3
Parents: ae2ca0e
Author: Dongjoon Hyun
Authored: Sat Sep 15 17:48:39 2018 -0700
Committer: Dongjoon Hyun
Committed: Sat Sep 15 17:48:53 2018 -0700

--
.../FilterPushdownBenchmark-results.txt | 912 +--
.../benchmark/FilterPushdownBenchmark.scala | 11 +-
2 files changed, 428 insertions(+), 495 deletions(-)
--

[1/2] spark git commit: [SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

Repository: spark

Updated Branches:

refs/heads/master e06da95cd -> fefaa3c30

http://git-wip-us.apache.org/repos/asf/spark/blob/fefaa3c3/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

--

diff --git a/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

b/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

index a75a15c..e680ddf 100644

--- a/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

+++ b/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

@@ -2,737 +2,669 @@
Pushdown for many distinct value case

-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
-
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 0 string row (value IS NULL):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     8970 / 9122        1.8        570.3      1.0X
-Parquet Vectorized (Pushdown)            471 / 491       33.4         30.0     19.0X
-Native ORC Vectorized                  7661 / 7853        2.1        487.0      1.2X
-Native ORC Vectorized (Pushdown)       1134 / 1161       13.9         72.1      7.9X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11405 / 11485        1.4        725.1      1.0X
+Parquet Vectorized (Pushdown)            675 / 690       23.3         42.9     16.9X
+Native ORC Vectorized                  7127 / 7170        2.2        453.1      1.6X
+Native ORC Vectorized (Pushdown)         519 / 541       30.3         33.0     22.0X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 0 string row ('7864320' < value < '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     9246 / 9297        1.7        587.8      1.0X
-Parquet Vectorized (Pushdown)            480 / 488       32.8         30.5     19.3X
-Native ORC Vectorized                  7838 / 7850        2.0        498.3      1.2X
-Native ORC Vectorized (Pushdown)       1054 / 1118       14.9         67.0      8.8X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11457 / 11473        1.4        728.4      1.0X
+Parquet Vectorized (Pushdown)            656 / 686       24.0         41.7     17.5X
+Native ORC Vectorized                  7328 / 7342        2.1        465.9      1.6X
+Native ORC Vectorized (Pushdown)         539 / 565       29.2         34.2     21.3X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 1 string row (value = '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     8989 / 9100        1.7        571.5      1.0X
-Parquet Vectorized (Pushdown)            448 / 467       35.1         28.5     20.1X
-Native ORC Vectorized                  7680 / 7768        2.0        488.3      1.2X
-Native ORC Vectorized (Pushdown)       1067 / 1118       14.7         67.8      8.4X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11878 / 11888        1.3        755.2      1.0X
+Parquet Vectorized (Pushdown)            630 / 654       25.0         40.1     18.9X
+Native ORC Vectorized                  7342 / 7362        2.1        466.8      1.6X
+Native ORC Vectorized (Pushdown)         519 / 537       30.3         33.0     22.9X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 1 string row (value <=> '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     9115 / 9266        1.7        579.5      1.0X
-Parquet Vectorized (Pushdown)            466 / 492       33.7         29.7     19.5X
-N

[1/2] spark git commit: [SPARK-25438][SQL][TEST] Fix FilterPushdownBenchmark to use the same memory assumption

Repository: spark

Updated Branches:

refs/heads/branch-2.4 ae2ca0e5d -> b40e5feec

http://git-wip-us.apache.org/repos/asf/spark/blob/b40e5fee/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

--

diff --git a/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

b/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

index a75a15c..e680ddf 100644

--- a/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

+++ b/sql/core/benchmarks/FilterPushdownBenchmark-results.txt

@@ -2,737 +2,669 @@
Pushdown for many distinct value case

-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
-
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 0 string row (value IS NULL):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     8970 / 9122        1.8        570.3      1.0X
-Parquet Vectorized (Pushdown)            471 / 491       33.4         30.0     19.0X
-Native ORC Vectorized                  7661 / 7853        2.1        487.0      1.2X
-Native ORC Vectorized (Pushdown)       1134 / 1161       13.9         72.1      7.9X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11405 / 11485        1.4        725.1      1.0X
+Parquet Vectorized (Pushdown)            675 / 690       23.3         42.9     16.9X
+Native ORC Vectorized                  7127 / 7170        2.2        453.1      1.6X
+Native ORC Vectorized (Pushdown)         519 / 541       30.3         33.0     22.0X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 0 string row ('7864320' < value < '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     9246 / 9297        1.7        587.8      1.0X
-Parquet Vectorized (Pushdown)            480 / 488       32.8         30.5     19.3X
-Native ORC Vectorized                  7838 / 7850        2.0        498.3      1.2X
-Native ORC Vectorized (Pushdown)       1054 / 1118       14.9         67.0      8.8X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11457 / 11473        1.4        728.4      1.0X
+Parquet Vectorized (Pushdown)            656 / 686       24.0         41.7     17.5X
+Native ORC Vectorized                  7328 / 7342        2.1        465.9      1.6X
+Native ORC Vectorized (Pushdown)         539 / 565       29.2         34.2     21.3X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 1 string row (value = '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     8989 / 9100        1.7        571.5      1.0X
-Parquet Vectorized (Pushdown)            448 / 467       35.1         28.5     20.1X
-Native ORC Vectorized                  7680 / 7768        2.0        488.3      1.2X
-Native ORC Vectorized (Pushdown)       1067 / 1118       14.7         67.8      8.4X
-
-Java HotSpot(TM) 64-Bit Server VM 1.8.0_151-b12 on Mac OS X 10.12.6
-Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz
+Parquet Vectorized                   11878 / 11888        1.3        755.2      1.0X
+Parquet Vectorized (Pushdown)            630 / 654       25.0         40.1     18.9X
+Native ORC Vectorized                  7342 / 7362        2.1        466.8      1.6X
+Native ORC Vectorized (Pushdown)         519 / 537       30.3         33.0     22.9X
+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64
+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
Select 1 string row (value <=> '7864320'):  Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
-Parquet Vectorized                     9115 / 9266        1.7        579.5      1.0X
-Parquet Vectorized (Pushdown)            466 / 492       33.7         29.7     19.5

spark git commit: [SPARK-25423][SQL] Output "dataFilters" in DataSourceScanExec.metadata

Repository: spark

Updated Branches:

refs/heads/master 30aa37fca -> 4b9542e3a

[SPARK-25423][SQL] Output "dataFilters" in DataSourceScanExec.metadata

## What changes were proposed in this pull request?

Output `dataFilters` in `DataSourceScanExec.metadata`.
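To make the change concrete, the sketch below models the metadata of a file scan as a plain map and adds a `DataFilters` entry next to the existing filter entries. It does not use Spark: the object name, the filter strings, and the location are made up, and `seqToString` here is a stand-in whose bracketed output format is an assumption.

```scala
object ScanMetadataSketch {
  // Stand-in helper; the bracketed, comma-separated format is an assumption.
  def seqToString(seq: Seq[Any]): String = seq.mkString("[", ", ", "]")

  // Made-up filters, for illustration only.
  val partitionFilters: Seq[String] = Seq.empty
  val pushedDownFilters: Seq[String] = Seq("IsNotNull(id)", "GreaterThan(id,5)")
  val dataFilters: Seq[String] = Seq("isnotnull(id)", "(id > 5)")

  val metadata: Map[String, String] = Map(
    "PartitionFilters" -> seqToString(partitionFilters),
    "PushedFilters" -> seqToString(pushedDownFilters),
    "DataFilters" -> seqToString(dataFilters), // the newly reported entry
    "Location" -> "/tmp/example")

  def main(args: Array[String]): Unit =
    metadata.foreach { case (k, v) => println(s"$k: $v") }
}
```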

## How was this patch tested?

unit tests

Closes #22435 from wangyum/SPARK-25423.

Authored-by: Yuming Wang

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/4b9542e3

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/4b9542e3

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/4b9542e3

Branch: refs/heads/master

Commit: 4b9542e3a3d0c493a05061be5a9f8d278c0ac980

Parents: 30aa37f

Author: Yuming Wang

Authored: Mon Sep 17 11:26:08 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 17 11:26:08 2018 -0700

--

.../spark/sql/execution/DataSourceScanExec.scala| 1 +

.../DataSourceScanExecRedactionSuite.scala | 16

2 files changed, 17 insertions(+)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/4b9542e3/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala

b/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala

index 36ed016..738c066 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala

@@ -284,6 +284,7 @@ case class FileSourceScanExec(

"Batched" -> supportsBatch.toString,

"PartitionFilters" -> seqToString(partitionFilters),

"PushedFilters" -> seqToString(pushedDownFilters),

+"DataFilters" -> seqToString(dataFilters),

"Location" -> locationDesc)

val withOptPartitionCount =

relation.partitionSchemaOption.map { _ =>

http://git-wip-us.apache.org/repos/asf/spark/blob/4b9542e3/sql/core/src/test/scala/org/apache/spark/sql/execution/DataSourceScanExecRedactionSuite.scala

--

diff --git

a/sql/core/src/test/scala/org/apache/spark/sql/execution/DataSourceScanExecRedactionSuite.scala

b/sql/core/src/test/scala/org/apache/spark/sql/execution/DataSourceScanExecRedactionSuite.scala

index c8d045a..11a1c9a 100644

---

a/sql/core/src/test/scala/org/apache/spark/sql/execution/DataSourceScanExecRedactionSuite.scala

+++

b/sql/core/src/test/scala/org/apache/spark/sql/execution/DataSourceScanExecRedactionSuite.scala

@@ -83,4 +83,20 @@ class DataSourceScanExecRedactionSuite extends QueryTest

with SharedSQLContext {

}

}

+ test("FileSourceScanExec metadata") {

+withTempPath { path =>

+ val dir = path.getCanonicalPath

+ spark.range(0, 10).write.parquet(dir)

+ val df = spark.read.parquet(dir)

+

+ assert(isIncluded(df.queryExecution, "Format"))

+ assert(isIncluded(df.queryExecution, "ReadSchema"))

+ assert(isIncluded(df.queryExecution, "Batched"))

+ assert(isIncluded(df.queryExecution, "PartitionFilters"))

+ assert(isIncluded(df.queryExecution, "PushedFilters"))

+ assert(isIncluded(df.queryExecution, "DataFilters"))

+ assert(isIncluded(df.queryExecution, "Location"))

+}

+ }

+

}


spark git commit: [SPARK-16323][SQL] Add IntegralDivide expression

Repository: spark

Updated Branches:

refs/heads/master 4b9542e3a -> 553af22f2

[SPARK-16323][SQL] Add IntegralDivide expression

## What changes were proposed in this pull request?

The PR takes over #14036 and it introduces a new expression `IntegralDivide` in

order to avoid the several unneeded casts added previously.

In order to prove the performance gain, the following benchmark has been run:

```

test("Benchmark IntegralDivide") {

val r = new scala.util.Random(91)

val nData = 100

val testDataInt = (1 to nData).map(_ => (r.nextInt(), r.nextInt()))

val testDataLong = (1 to nData).map(_ => (r.nextLong(), r.nextLong()))

val testDataShort = (1 to nData).map(_ => (r.nextInt().toShort,

r.nextInt().toShort))

// old code

val oldExprsInt = testDataInt.map(x =>

Cast(Divide(Cast(Literal(x._1), DoubleType), Cast(Literal(x._2),

DoubleType)), LongType))

val oldExprsLong = testDataLong.map(x =>

Cast(Divide(Cast(Literal(x._1), DoubleType), Cast(Literal(x._2),

DoubleType)), LongType))

val oldExprsShort = testDataShort.map(x =>

Cast(Divide(Cast(Literal(x._1), DoubleType), Cast(Literal(x._2),

DoubleType)), LongType))

// new code

val newExprsInt = testDataInt.map(x => IntegralDivide(x._1, x._2))

val newExprsLong = testDataLong.map(x => IntegralDivide(x._1, x._2))

val newExprsShort = testDataShort.map(x => IntegralDivide(x._1, x._2))

Seq(("Long", "old", oldExprsLong),

("Long", "new", newExprsLong),

("Int", "old", oldExprsInt),

("Int", "new", newExprsShort),

("Short", "old", oldExprsShort),

("Short", "new", oldExprsShort)).foreach { case (dt, t, ds) =>

val start = System.nanoTime()

ds.foreach(e => e.eval(EmptyRow))

val endNoCodegen = System.nanoTime()

println(s"Running $nData op with $t code on $dt (no-codegen): ${(endNoCodegen - start) / 100} ms")

}

}

```

The results on my laptop are:

```

Running 100 op with old code on Long (no-codegen): 600 ms

Running 100 op with new code on Long (no-codegen): 112 ms

Running 100 op with old code on Int (no-codegen): 560 ms

Running 100 op with new code on Int (no-codegen): 135 ms

Running 100 op with old code on Short (no-codegen): 317 ms

Running 100 op with new code on Short (no-codegen): 153 ms

```

This shows a 2-5X improvement. The benchmark does not cover code generation,

because for such simple operations most of the time there is spent in the code

generation/compilation process itself, which makes the performance hard to measure.
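The difference between the two evaluation strategies can be illustrated outside Spark with plain Scala arithmetic. This is only a sketch: the object and function names are hypothetical, it ignores Spark's null handling and division by zero, and it is not the `IntegralDivide` implementation.

```scala
object IntegralDivideSketch {
  // Old strategy: cast both operands to Double, divide, cast back to Long.
  def divideViaDouble(a: Long, b: Long): Long = (a.toDouble / b.toDouble).toLong

  // New strategy: divide the integral values directly, with no casts.
  def divideIntegral(a: Long, b: Long): Long = a / b

  def main(args: Array[String]): Unit = {
    println(divideViaDouble(7L, 2L)) // 3
    println(divideIntegral(7L, 2L))  // 3

    // Besides the extra work, a Double cannot represent every Long exactly.
    val big = 9007199254740993L // 2^53 + 1
    println(divideViaDouble(big, 1L)) // 9007199254740992
    println(divideIntegral(big, 1L))  // 9007199254740993
  }
}
```

For small operands both strategies agree; the direct division simply skips the three casts per evaluation that the old expression tree required.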

## How was this patch tested?

added UTs

Closes #22395 from mgaido91/SPARK-16323.

Authored-by: Marco Gaido

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/553af22f

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/553af22f

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/553af22f

Branch: refs/heads/master

Commit: 553af22f2c8ecdc039c8d06431564b1432e60d2d

Parents: 4b9542e

Author: Marco Gaido

Authored: Mon Sep 17 11:33:50 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 17 11:33:50 2018 -0700

--

.../catalyst/analysis/FunctionRegistry.scala| 1 +

.../apache/spark/sql/catalyst/dsl/package.scala | 1 +

.../sql/catalyst/expressions/arithmetic.scala | 28

.../spark/sql/catalyst/parser/AstBuilder.scala | 2 +-

.../expressions/ArithmeticExpressionSuite.scala | 18 ++---

.../catalyst/parser/ExpressionParserSuite.scala | 4 +--

.../sql-tests/results/operators.sql.out | 8 +++---

7 files changed, 45 insertions(+), 17 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/553af22f/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/FunctionRegistry.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/FunctionRegistry.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/FunctionRegistry.scala

index 77860e1..8b69a47 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/FunctionRegistry.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/FunctionRegistry.scala

@@ -267,6 +267,7 @@ object FunctionRegistry {

expression[Subtract]("-"),

expression[Multiply]("*"),

expression[Divide]("/"),

+expression[IntegralDivide]("div"),

expression[Remainder]("%"),

// aggregate functions

http://git-wip-us.apache.org/repos/asf/spark/blob/

spark git commit: Revert "[SPARK-23173][SQL] rename spark.sql.fromJsonForceNullableSchema"

Repository: spark

Updated Branches:

refs/heads/master a71f6a175 -> cb1b55cf7

Revert "[SPARK-23173][SQL] rename spark.sql.fromJsonForceNullableSchema"

This reverts commit 6c7db7fd1ced1d143b1389d09990a620fc16be46.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/cb1b55cf

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/cb1b55cf

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/cb1b55cf

Branch: refs/heads/master

Commit: cb1b55cf771018f1560f6b173cdd7c6ca8061bc7

Parents: a71f6a1

Author: Dongjoon Hyun

Authored: Wed Sep 19 14:33:40 2018 -0700

Committer: Dongjoon Hyun

Committed: Wed Sep 19 14:33:40 2018 -0700

--

.../sql/catalyst/expressions/jsonExpressions.scala | 4 ++--

.../org/apache/spark/sql/internal/SQLConf.scala | 16

2 files changed, 10 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/cb1b55cf/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

index ade10ab..bd9090a 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

@@ -517,12 +517,12 @@ case class JsonToStructs(

timeZoneId: Option[String] = None)

extends UnaryExpression with TimeZoneAwareExpression with CodegenFallback

with ExpectsInputTypes {

- val forceNullableSchema: Boolean =

SQLConf.get.getConf(SQLConf.FROM_JSON_FORCE_NULLABLE_SCHEMA)

+ val forceNullableSchema =

SQLConf.get.getConf(SQLConf.FROM_JSON_FORCE_NULLABLE_SCHEMA)

// The JSON input data might be missing certain fields. We force the

nullability

// of the user-provided schema to avoid data corruptions. In particular, the

parquet-mr encoder

// can generate incorrect files if values are missing in columns declared as

non-nullable.

- val nullableSchema: DataType = if (forceNullableSchema) schema.asNullable

else schema

+ val nullableSchema = if (forceNullableSchema) schema.asNullable else schema

override def nullable: Boolean = true

http://git-wip-us.apache.org/repos/asf/spark/blob/cb1b55cf/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

index 4499a35..b1e9b17 100644

--- a/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

+++ b/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

@@ -608,6 +608,14 @@ object SQLConf {

.stringConf

.createWithDefault("_corrupt_record")

+ val FROM_JSON_FORCE_NULLABLE_SCHEMA =

buildConf("spark.sql.fromJsonForceNullableSchema")

+.internal()

+.doc("When true, force the output schema of the from_json() function to be

nullable " +

+ "(including all the fields). Otherwise, the schema might not be

compatible with" +

+ "actual data, which leads to curruptions.")

+.booleanConf

+.createWithDefault(true)

+

val BROADCAST_TIMEOUT = buildConf("spark.sql.broadcastTimeout")

.doc("Timeout in seconds for the broadcast wait time in broadcast joins.")

.timeConf(TimeUnit.SECONDS)

@@ -1354,14 +1362,6 @@ object SQLConf {

"When this conf is not set, the value from

`spark.redaction.string.regex` is used.")

.fallbackConf(org.apache.spark.internal.config.STRING_REDACTION_PATTERN)

- val FROM_JSON_FORCE_NULLABLE_SCHEMA =

buildConf("spark.sql.function.fromJson.forceNullable")

-.internal()

-.doc("When true, force the output schema of the from_json() function to be

nullable " +

- "(including all the fields). Otherwise, the schema might not be

compatible with" +

- "actual data, which leads to corruptions.")

-.booleanConf

-.createWithDefault(true)

-

val CONCAT_BINARY_AS_STRING =

buildConf("spark.sql.function.concatBinaryAsString")

.doc("When this option is set to false and all inputs are binary,

`functions.concat` returns " +

"an output as binary. Otherwise, it returns as a string. ")


spark git commit: Revert "[SPARK-23173][SQL] rename spark.sql.fromJsonForceNullableSchema"

Repository: spark

Updated Branches:

refs/heads/branch-2.4 538ae62e0 -> 9fefb47fe

Revert "[SPARK-23173][SQL] rename spark.sql.fromJsonForceNullableSchema"

This reverts commit 6c7db7fd1ced1d143b1389d09990a620fc16be46.

(cherry picked from commit cb1b55cf771018f1560f6b173cdd7c6ca8061bc7)

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9fefb47f

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9fefb47f

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9fefb47f

Branch: refs/heads/branch-2.4

Commit: 9fefb47feab14b865978bdb8e6155a976de72416

Parents: 538ae62

Author: Dongjoon Hyun

Authored: Wed Sep 19 14:33:40 2018 -0700

Committer: Dongjoon Hyun

Committed: Wed Sep 19 14:38:21 2018 -0700

--

.../sql/catalyst/expressions/jsonExpressions.scala | 4 ++--

.../org/apache/spark/sql/internal/SQLConf.scala | 16

2 files changed, 10 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9fefb47f/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

index ade10ab..bd9090a 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

@@ -517,12 +517,12 @@ case class JsonToStructs(

timeZoneId: Option[String] = None)

extends UnaryExpression with TimeZoneAwareExpression with CodegenFallback

with ExpectsInputTypes {

- val forceNullableSchema: Boolean =

SQLConf.get.getConf(SQLConf.FROM_JSON_FORCE_NULLABLE_SCHEMA)

+ val forceNullableSchema =

SQLConf.get.getConf(SQLConf.FROM_JSON_FORCE_NULLABLE_SCHEMA)

// The JSON input data might be missing certain fields. We force the

nullability

// of the user-provided schema to avoid data corruptions. In particular, the

parquet-mr encoder

// can generate incorrect files if values are missing in columns declared as

non-nullable.

- val nullableSchema: DataType = if (forceNullableSchema) schema.asNullable

else schema

+ val nullableSchema = if (forceNullableSchema) schema.asNullable else schema

override def nullable: Boolean = true

http://git-wip-us.apache.org/repos/asf/spark/blob/9fefb47f/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

index 5e2ac02..3e9cde4 100644

--- a/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

+++ b/sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala

@@ -588,6 +588,14 @@ object SQLConf {

.stringConf

.createWithDefault("_corrupt_record")

+ val FROM_JSON_FORCE_NULLABLE_SCHEMA =

buildConf("spark.sql.fromJsonForceNullableSchema")

+.internal()

+.doc("When true, force the output schema of the from_json() function to be

nullable " +

+ "(including all the fields). Otherwise, the schema might not be

compatible with" +

+ "actual data, which leads to curruptions.")

+.booleanConf

+.createWithDefault(true)

+

val BROADCAST_TIMEOUT = buildConf("spark.sql.broadcastTimeout")

.doc("Timeout in seconds for the broadcast wait time in broadcast joins.")

.timeConf(TimeUnit.SECONDS)

@@ -1334,14 +1342,6 @@ object SQLConf {

"When this conf is not set, the value from

`spark.redaction.string.regex` is used.")

.fallbackConf(org.apache.spark.internal.config.STRING_REDACTION_PATTERN)

- val FROM_JSON_FORCE_NULLABLE_SCHEMA =

buildConf("spark.sql.function.fromJson.forceNullable")

-.internal()

-.doc("When true, force the output schema of the from_json() function to be

nullable " +

- "(including all the fields). Otherwise, the schema might not be

compatible with" +

- "actual data, which leads to corruptions.")

-.booleanConf

-.createWithDefault(true)

-

val CONCAT_BINARY_AS_STRING =

buildConf("spark.sql.function.concatBinaryAsString")

.doc("When this option is set to false and all inputs are binary,

`functions.concat` returns " +

"an output as binary. Otherwise, it returns as a string. ")

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

spark git commit: [SPARK-25425][SQL][BACKPORT-2.4] Extra options should override session options in DataSource V2

Repository: spark

Updated Branches:

refs/heads/branch-2.4 9031c7848 -> a9a8d3a4b

[SPARK-25425][SQL][BACKPORT-2.4] Extra options should override session options

in DataSource V2

## What changes were proposed in this pull request?

In this PR, I propose that extra options override session options in DataSource

V2. Extra options are more specific, being set via `.option()`, and should

take precedence over the more generic session options.
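
The precedence rule can be illustrated with plain Scala maps. This is only a sketch: `OptionPrecedence` and `merge` are hypothetical names, and the option keys and values are made up. It relies on the fact that `++` on immutable maps keeps the right-hand entry when both maps contain the same key.

```scala
object OptionPrecedence {
  // Hypothetical helper: combines session-level and per-call options.
  // With `++`, entries from the right-hand map win on key collisions,
  // so extra options override session options.
  def merge(
      sessionOptions: Map[String, String],
      extraOptions: Map[String, String]): Map[String, String] =
    sessionOptions ++ extraOptions

  def main(args: Array[String]): Unit = {
    // Made-up keys and values, for illustration only.
    val session = Map("path" -> "/session/default", "batchSize" -> "100")
    val extra = Map("path" -> "/explicit/override")
    println(merge(session, extra))
  }
}
```

Swapping the operands (`extraOptions ++ sessionOptions`) would give session options the last word, which is the behavior this change removes.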

## How was this patch tested?

Added tests for read and write paths.

Closes #22474 from MaxGekk/session-options-2.4.

Authored-by: Maxim Gekk

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/a9a8d3a4

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/a9a8d3a4

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/a9a8d3a4

Branch: refs/heads/branch-2.4

Commit: a9a8d3a4b92be89defd82d5f2eeb3f9af45c687d

Parents: 9031c78

Author: Maxim Gekk

Authored: Wed Sep 19 16:53:26 2018 -0700

Committer: Dongjoon Hyun

Committed: Wed Sep 19 16:53:26 2018 -0700

--

.../org/apache/spark/sql/DataFrameReader.scala | 2 +-

.../org/apache/spark/sql/DataFrameWriter.scala | 8 +++--

.../sql/sources/v2/DataSourceV2Suite.scala | 33

.../sources/v2/SimpleWritableDataSource.scala | 7 -

4 files changed, 45 insertions(+), 5 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/a9a8d3a4/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

index 371ec70..27a1af2 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

@@ -202,7 +202,7 @@ class DataFrameReader private[sql](sparkSession:

SparkSession) extends Logging {

DataSourceOptions.PATHS_KEY ->

objectMapper.writeValueAsString(paths.toArray)

}

Dataset.ofRows(sparkSession, DataSourceV2Relation.create(

- ds, extraOptions.toMap ++ sessionOptions + pathsOption,

+ ds, sessionOptions ++ extraOptions.toMap + pathsOption,

userSpecifiedSchema = userSpecifiedSchema))

} else {

loadV1Source(paths: _*)

http://git-wip-us.apache.org/repos/asf/spark/blob/a9a8d3a4/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index 4aeddfd..80ade7c 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -241,10 +241,12 @@ final class DataFrameWriter[T] private[sql](ds:

Dataset[T]) {

val source = cls.newInstance().asInstanceOf[DataSourceV2]

source match {

case ws: WriteSupport =>

- val options = extraOptions ++

- DataSourceV2Utils.extractSessionConfigs(source,

df.sparkSession.sessionState.conf)

+ val sessionOptions = DataSourceV2Utils.extractSessionConfigs(

+source,

+df.sparkSession.sessionState.conf)

+ val options = sessionOptions ++ extraOptions

+ val relation = DataSourceV2Relation.create(source, options)

- val relation = DataSourceV2Relation.create(source, options.toMap)

if (mode == SaveMode.Append) {

runCommand(df.sparkSession, "save") {

AppendData.byName(relation, df.logicalPlan)

http://git-wip-us.apache.org/repos/asf/spark/blob/a9a8d3a4/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

--

diff --git

a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

b/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

index 12beca2..bafde50 100644

---

a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

+++

b/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

@@ -17,6 +17,7 @@

package org.apache.spark.sql.sources.v2

+import java.io.File

import java.util.{ArrayList, List => JList}

import test.org.apache.spark.sql.sources.v2._

@@ -322,6 +323,38 @@ class DataSourceV2Suite extends QueryTest with

SharedSQLContext {

checkCanonicalizedOutput(df, 2, 2)

checkCanonicalizedOutput(df.select('i), 2, 1)

}

+

+ test("SPARK-25425: extra options should override sessions opti

spark git commit: [SPARK-25489][ML][TEST] Refactor UDTSerializationBenchmark

Repository: spark

Updated Branches:

refs/heads/master a72d118cd -> 9bf04d854

[SPARK-25489][ML][TEST] Refactor UDTSerializationBenchmark

## What changes were proposed in this pull request?

Refactor `UDTSerializationBenchmark` to use a main method and write the output

to a separate file.

Run the command below to generate benchmark results:

```

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "mllib/test:runMain

org.apache.spark.mllib.linalg.UDTSerializationBenchmark"

```
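
A rough sketch of how an environment variable could switch the output target is shown below. It assumes that the value `1` enables file output and that results go to `benchmarks/<name>-results.txt`, as the command above and the Scaladoc in the diff suggest. `BenchmarkOutputSketch` and `resultsFile` are hypothetical names, not the `BenchmarkBase` API.

```scala
import java.io.File

object BenchmarkOutputSketch {
  // Hypothetical helper: returns the results file when generation is
  // requested (env value "1"), or None to keep printing to the console.
  def resultsFile(envValue: Option[String], benchmarkName: String): Option[File] =
    if (envValue.contains("1")) {
      Some(new File("benchmarks", s"$benchmarkName-results.txt"))
    } else {
      None
    }

  def main(args: Array[String]): Unit = {
    val env = sys.env.get("SPARK_GENERATE_BENCHMARK_FILES")
    resultsFile(env, "UDTSerializationBenchmark") match {
      case Some(file) => println(s"would write results to ${file.getPath}")
      case None => println("would print results to stdout")
    }
  }
}
```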

## How was this patch tested?

Manual tests.

Closes #22499 from seancxmao/SPARK-25489.

Authored-by: seancxmao

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9bf04d85

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9bf04d85

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9bf04d85

Branch: refs/heads/master

Commit: 9bf04d8543d70ba8e55c970f2a8e2df872cf74f6

Parents: a72d118

Author: seancxmao

Authored: Sun Sep 23 13:34:06 2018 -0700

Committer: Dongjoon Hyun

Committed: Sun Sep 23 13:34:06 2018 -0700

--

.../UDTSerializationBenchmark-results.txt | 13

.../linalg/UDTSerializationBenchmark.scala | 70 ++--

2 files changed, 49 insertions(+), 34 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9bf04d85/mllib/benchmarks/UDTSerializationBenchmark-results.txt

--

diff --git a/mllib/benchmarks/UDTSerializationBenchmark-results.txt

b/mllib/benchmarks/UDTSerializationBenchmark-results.txt

new file mode 100644

index 000..169f4c6

--- /dev/null

+++ b/mllib/benchmarks/UDTSerializationBenchmark-results.txt

@@ -0,0 +1,13 @@

+

+VectorUDT de/serialization

+

+

+Java HotSpot(TM) 64-Bit Server VM 1.8.0_131-b11 on Mac OS X 10.13.6

+Intel(R) Core(TM) i7-6820HQ CPU @ 2.70GHz

+

+VectorUDT de/serialization:    Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+serialize                            144 / 206          0.0      143979.7       1.0X

+deserialize                          114 / 135          0.0      113802.6       1.3X

+

+

http://git-wip-us.apache.org/repos/asf/spark/blob/9bf04d85/mllib/src/test/scala/org/apache/spark/mllib/linalg/UDTSerializationBenchmark.scala

--

diff --git

a/mllib/src/test/scala/org/apache/spark/mllib/linalg/UDTSerializationBenchmark.scala

b/mllib/src/test/scala/org/apache/spark/mllib/linalg/UDTSerializationBenchmark.scala

index e2976e1..1a2216e 100644

---

a/mllib/src/test/scala/org/apache/spark/mllib/linalg/UDTSerializationBenchmark.scala

+++

b/mllib/src/test/scala/org/apache/spark/mllib/linalg/UDTSerializationBenchmark.scala

@@ -17,53 +17,55 @@

package org.apache.spark.mllib.linalg

-import org.apache.spark.benchmark.Benchmark

+import org.apache.spark.benchmark.{Benchmark, BenchmarkBase}

import org.apache.spark.sql.catalyst.encoders.ExpressionEncoder

/**

* Serialization benchmark for VectorUDT.

+ * To run this benchmark:

+ * {{{

+ * 1. without sbt: bin/spark-submit --class

+ * 2. build/sbt "mllib/test:runMain "

+ * 3. generate result: SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt

"mllib/test:runMain "

+ *Results will be written to

"benchmarks/UDTSerializationBenchmark-results.txt".

+ * }}}

*/

-object UDTSerializationBenchmark {

+object UDTSerializationBenchmark extends BenchmarkBase {

- def main(args: Array[String]): Unit = {

-val iters = 1e2.toInt

-val numRows = 1e3.toInt

+ override def benchmark(): Unit = {

-val encoder = ExpressionEncoder[Vector].resolveAndBind()

+runBenchmark("VectorUDT de/serialization") {

+ val iters = 1e2.toInt

+ val numRows = 1e3.toInt

-val vectors = (1 to numRows).map { i =>

- Vectors.dense(Array.fill(1e5.toInt)(1.0 * i))

-}.toArray

-val rows = vectors.map(encoder.toRow)

+ val encoder = ExpressionEncoder[Vector].resolveAndBind()

-val benchmark = new Benchmark("VectorUDT de/serialization", numRows, iters)

+ val vectors = (1 to numRows).map { i =>

+Vectors.dense(Array.fill(1e5.toInt)(1.0 * i))

+ }.toArray

+ val rows = vectors.map(encoder.toRow)

-benchmark.addCase("serialize") { _ =>

- var sum = 0

- var i = 0

- while (i < numRows) {

-sum += encoder.toRow(vectors(i)).numFields

-i += 1

+ val benchmark = new Benchmark("

spark git commit: [SPARK-25478][SQL][TEST] Refactor CompressionSchemeBenchmark to use main method

Repository: spark

Updated Branches:

refs/heads/master d522a563a -> c79072aaf

[SPARK-25478][SQL][TEST] Refactor CompressionSchemeBenchmark to use main method

## What changes were proposed in this pull request?

Refactor `CompressionSchemeBenchmark` to use main method.

Generate benchmark result:

```sh

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "sql/test:runMain org.apache.spark.sql.execution.columnar.compression.CompressionSchemeBenchmark"

```

## How was this patch tested?

manual tests

Closes #22486 from wangyum/SPARK-25478.

Lead-authored-by: Yuming Wang

Co-authored-by: Dongjoon Hyun

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/c79072aa

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/c79072aa

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/c79072aa

Branch: refs/heads/master

Commit: c79072aafa2f406c342e393e0c61bb5cb3e89a7f

Parents: d522a56

Author: Yuming Wang

Authored: Sun Sep 23 20:46:40 2018 -0700

Committer: Dongjoon Hyun

Committed: Sun Sep 23 20:46:40 2018 -0700

--

.../CompressionSchemeBenchmark-results.txt | 137 ++

.../CompressionSchemeBenchmark.scala| 138 +++

2 files changed, 156 insertions(+), 119 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/c79072aa/sql/core/benchmarks/CompressionSchemeBenchmark-results.txt

--

diff --git a/sql/core/benchmarks/CompressionSchemeBenchmark-results.txt

b/sql/core/benchmarks/CompressionSchemeBenchmark-results.txt

new file mode 100644

index 000..caa9378

--- /dev/null

+++ b/sql/core/benchmarks/CompressionSchemeBenchmark-results.txt

@@ -0,0 +1,137 @@

+

+Compression Scheme Benchmark

+

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64

+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz

+BOOLEAN Encode:              Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+PassThrough(1.000)                   4 /    4      17998.9           0.1       1.0X

+RunLengthEncoding(2.501)           680 /  680         98.7          10.1       0.0X

+BooleanBitSet(0.125)               365 /  365        183.9           5.4       0.0X

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64

+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz

+BOOLEAN Decode:              Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+PassThrough                        144 /  144        466.5           2.1       1.0X

+RunLengthEncoding                  679 /  679         98.9          10.1       0.2X

+BooleanBitSet                     1425 / 1431         47.1          21.2       0.1X

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64

+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz

+SHORT Encode (Lower Skew):   Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+PassThrough(1.000)                   7 /    7      10115.0           0.1       1.0X

+RunLengthEncoding(1.494)          1671 / 1672         40.2          24.9       0.0X

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64

+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz

+SHORT Decode (Lower Skew):   Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+PassThrough                       1128 / 1128         59.5          16.8       1.0X

+RunLengthEncoding                 1630 / 1633         41.2          24.3       0.7X

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x86_64

+Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz

+SHORT Encode (Higher Skew):  Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+PassThrough(1.000)                   7 /    7      10164.2           0.1       1.0X

+RunLengthEncoding(1.989)          1562 / 1563         43.0          23.3       0.0X

+

+OpenJDK 64-Bit Server VM 1.8.0_181-b13 on Linux 3.10.0-862.3.2.el7.x8

spark git commit: [SPARK-25460][BRANCH-2.4][SS] DataSourceV2: SS sources do not respect SessionConfigSupport

Repository: spark

Updated Branches:

refs/heads/branch-2.4 51d5378f8 -> ec384284e

[SPARK-25460][BRANCH-2.4][SS] DataSourceV2: SS sources do not respect

SessionConfigSupport

## What changes were proposed in this pull request?

This PR proposes to backport SPARK-25460 to branch-2.4:

This PR proposes to respect `SessionConfigSupport` in SS datasources as well.

Currently these are only respected in batch sources:

https://github.com/apache/spark/blob/e06da95cd9423f55cdb154a2778b0bddf7be984c/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala#L198-L203

https://github.com/apache/spark/blob/e06da95cd9423f55cdb154a2778b0bddf7be984c/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala#L244-L249

If a developer writes a DataSource V2 source that supports both structured

streaming and batch jobs, batch jobs respect a source-specific configuration,

say, the URL to connect to and fetch data from (which end users might not be

aware of); structured streaming, however, does not pick it up, so the value has

to be set explicitly via options.
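
The intended behavior can be sketched with plain maps. The sketch assumes that session-level keys follow a `spark.datasource.<keyPrefix>.<option>` naming convention; `SessionConfigSketch`, its methods, and the `mysource` prefix are hypothetical stand-ins, not the actual `DataSourceV2Utils` code.

```scala
object SessionConfigSketch {
  // Assumed convention: a session key "spark.datasource.<keyPrefix>.<option>"
  // is handed to the source as plain "<option>". Unrelated keys are dropped.
  def extractSessionConfigs(
      keyPrefix: String,
      sessionConf: Map[String, String]): Map[String, String] = {
    val prefix = s"spark.datasource.$keyPrefix."
    sessionConf.collect {
      case (key, value) if key.startsWith(prefix) && key.length > prefix.length =>
        key.stripPrefix(prefix) -> value
    }
  }

  // Session options are applied first; options set explicitly by the user
  // override them. The same merge applies to batch and streaming paths.
  def effectiveOptions(
      keyPrefix: String,
      sessionConf: Map[String, String],
      extraOptions: Map[String, String]): Map[String, String] =
    extractSessionConfigs(keyPrefix, sessionConf) ++ extraOptions

  def main(args: Array[String]): Unit = {
    // Made-up configuration, for illustration only.
    val sessionConf = Map(
      "spark.datasource.mysource.url" -> "http://session.example",
      "spark.sql.shuffle.partitions" -> "8")
    println(effectiveOptions("mysource", sessionConf, Map.empty))
    println(effectiveOptions("mysource", sessionConf, Map("url" -> "http://explicit.example")))
  }
}
```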

## How was this patch tested?

Unit tests were added.

Closes #22529 from HyukjinKwon/SPARK-25460-backport.

Authored-by: hyukjinkwon

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/ec384284

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/ec384284

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/ec384284

Branch: refs/heads/branch-2.4

Commit: ec384284eb427d7573bd94c70e23e4137971

Parents: 51d5378

Author: hyukjinkwon

Authored: Mon Sep 24 08:49:19 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 24 08:49:19 2018 -0700

--

.../spark/sql/streaming/DataStreamReader.scala | 18 ++-

.../spark/sql/streaming/DataStreamWriter.scala | 16 ++-

.../sources/StreamingDataSourceV2Suite.scala| 118 ---

3 files changed, 125 insertions(+), 27 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/ec384284/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

index 7eb5db5..a9cb5e8 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

@@ -26,6 +26,7 @@ import org.apache.spark.internal.Logging

import org.apache.spark.sql.{AnalysisException, DataFrame, Dataset,

SparkSession}

import org.apache.spark.sql.execution.command.DDLUtils

import org.apache.spark.sql.execution.datasources.DataSource

+import org.apache.spark.sql.execution.datasources.v2.DataSourceV2Utils

import org.apache.spark.sql.execution.streaming.{StreamingRelation,

StreamingRelationV2}

import org.apache.spark.sql.sources.StreamSourceProvider

import org.apache.spark.sql.sources.v2.{ContinuousReadSupport,

DataSourceOptions, MicroBatchReadSupport}

@@ -158,7 +159,6 @@ final class DataStreamReader private[sql](sparkSession:

SparkSession) extends Lo

}

val ds = DataSource.lookupDataSource(source,

sparkSession.sqlContext.conf).newInstance()

-val options = new DataSourceOptions(extraOptions.asJava)

// We need to generate the V1 data source so we can pass it to the V2

relation as a shim.

// We can't be sure at this point whether we'll actually want to use V2,

since we don't know the

// writer or whether the query is continuous.

@@ -173,12 +173,16 @@ final class DataStreamReader private[sql](sparkSession:

SparkSession) extends Lo

}

ds match {

case s: MicroBatchReadSupport =>

+val sessionOptions = DataSourceV2Utils.extractSessionConfigs(

+ ds = s, conf = sparkSession.sessionState.conf)

+val options = sessionOptions ++ extraOptions

+val dataSourceOptions = new DataSourceOptions(options.asJava)

var tempReader: MicroBatchReader = null

val schema = try {

tempReader = s.createMicroBatchReader(

Optional.ofNullable(userSpecifiedSchema.orNull),

Utils.createTempDir(namePrefix =

s"temporaryReader").getCanonicalPath,

-options)

+dataSourceOptions)

tempReader.readSchema()

} finally {

// Stop tempReader to avoid side-effect thing

@@ -190,17 +194,21 @@ final class DataStreamReader private[sql](sparkSession:

SparkSession) extends Lo

Dataset.ofRows(

sparkSession,

StreamingRelationV2(

-s, source, extraOptions.toMap,

+s, source, options,

schema.toAttributes, v1Re

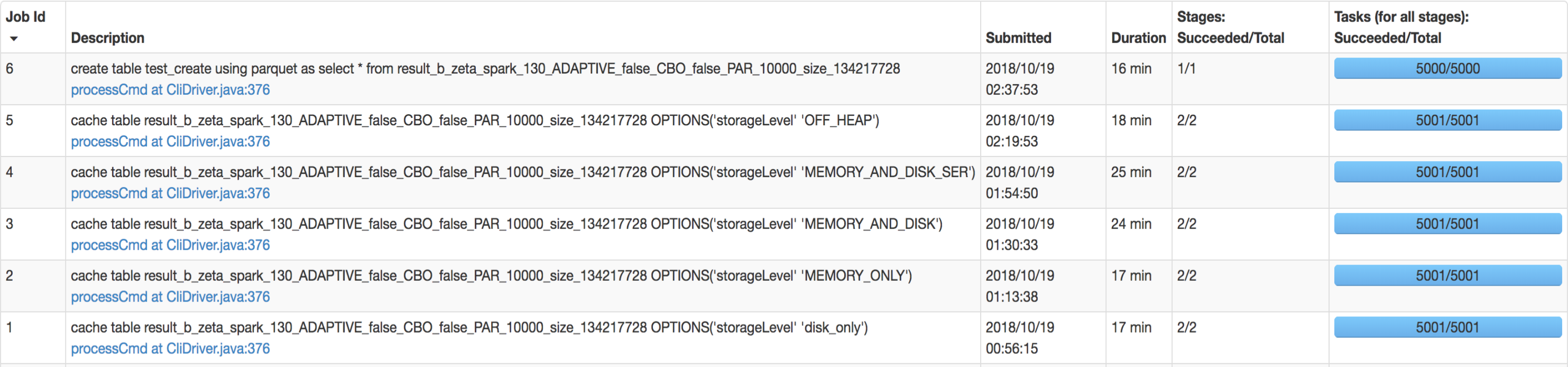

spark git commit: [SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

Repository: spark

Updated Branches:

refs/heads/master 2c9ffda1b -> 615792da4

[SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

## What changes were proposed in this pull request?

Test steps :

1) bin/spark-shell --conf spark.ui.retainedTasks=10

2) val rdd = sc.parallelize(1 to 1000, 1000)

3) rdd.count

The stage page in the UI displays 10 tasks, but the message is wrong: the total

and stored task counts are swapped and should be reversed.
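
The corrected message can be reproduced in isolation. This is a minimal sketch that copies the expression from the diff into a standalone function; the wrapper object, the function signature, and the `Int` parameter types are assumptions made for illustration.

```scala
object TotalTasksMessage {
  // Mirrors the fixed expression: the total count comes first, followed by
  // the number of tasks actually retained and shown in the table.
  def totalTasksNumStr(totalTasks: Int, storedTasks: Int): String =
    if (totalTasks == storedTasks) {
      s"$totalTasks"
    } else {
      s"$totalTasks, showing $storedTasks"
    }

  def main(args: Array[String]): Unit = {
    // Values from the test steps: 1000 tasks, 10 retained.
    println(totalTasksNumStr(totalTasks = 1000, storedTasks = 10))
  }
}
```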

**Before fix :**

**After fix**

## How was this patch tested?

Manually tested

Closes #22525 from shahidki31/SparkUI.

Authored-by: Shahid

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/615792da

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/615792da

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/615792da

Branch: refs/heads/master

Commit: 615792da42b3ee3c5f623c869fada17a3aa92884

Parents: 2c9ffda

Author: Shahid

Authored: Mon Sep 24 20:03:52 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 24 20:03:52 2018 -0700

--

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/615792da/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

--

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index fd6a298..7428bbe 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -133,7 +133,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

val totalTasksNumStr = if (totalTasks == storedTasks) {

s"$totalTasks"

} else {

- s"$storedTasks, showing ${totalTasks}"

+ s"$totalTasks, showing $storedTasks"

}

val summary =


spark git commit: [SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

Repository: spark

Updated Branches:

refs/heads/branch-2.4 ffc081c8f -> e4c03e822

[SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

## What changes were proposed in this pull request?

Test steps :

1) bin/spark-shell --conf spark.ui.retainedTasks=10

2) val rdd = sc.parallelize(1 to 1000, 1000)

3) rdd.count

The stage page in the UI displays 10 tasks, but the message is wrong: the total

and stored task counts are swapped and should be reversed.

**Before fix :**

**After fix**

## How was this patch tested?

Manually tested

Closes #22525 from shahidki31/SparkUI.

Authored-by: Shahid

Signed-off-by: Dongjoon Hyun

(cherry picked from commit 615792da42b3ee3c5f623c869fada17a3aa92884)

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/e4c03e82

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/e4c03e82

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/e4c03e82

Branch: refs/heads/branch-2.4

Commit: e4c03e82278791fcc725600dc5b1f31741340139

Parents: ffc081c

Author: Shahid

Authored: Mon Sep 24 20:03:52 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 24 20:04:26 2018 -0700

--

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/e4c03e82/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

--

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index fd6a298..7428bbe 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -133,7 +133,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

val totalTasksNumStr = if (totalTasks == storedTasks) {

s"$totalTasks"

} else {

- s"$storedTasks, showing ${totalTasks}"

+ s"$totalTasks, showing $storedTasks"

}

val summary =


spark git commit: [SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

Repository: spark

Updated Branches:

refs/heads/branch-2.3 12717ba0e -> 9674d083e

[SPARK-25503][CORE][WEBUI] Total task message in stage page is ambiguous

## What changes were proposed in this pull request?

Test steps :

1) bin/spark-shell --conf spark.ui.retainedTasks=10

2) val rdd = sc.parallelize(1 to 1000, 1000)

3) rdd.count

The stage page in the UI displays 10 tasks, but the message is wrong: the total

and stored task counts are swapped and should be reversed.

**Before fix :**

**After fix**

## How was this patch tested?

Manually tested

Closes #22525 from shahidki31/SparkUI.

Authored-by: Shahid

Signed-off-by: Dongjoon Hyun

(cherry picked from commit 615792da42b3ee3c5f623c869fada17a3aa92884)

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9674d083

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9674d083

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9674d083

Branch: refs/heads/branch-2.3

Commit: 9674d083eca9e1fe0f5e8ea63640f25c907017ec

Parents: 12717ba

Author: Shahid

Authored: Mon Sep 24 20:03:52 2018 -0700

Committer: Dongjoon Hyun

Committed: Mon Sep 24 20:04:52 2018 -0700

--

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9674d083/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

--

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index 365a974..3ba7d4a 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -133,7 +133,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

val totalTasksNumStr = if (totalTasks == storedTasks) {

s"$totalTasks"

} else {

- s"$storedTasks, showing ${totalTasks}"

+ s"$totalTasks, showing $storedTasks"

}

val summary =


spark git commit: [SPARK-25486][TEST] Refactor SortBenchmark to use main method

Repository: spark

Updated Branches:

refs/heads/master 9cbd001e2 -> 04db03537

[SPARK-25486][TEST] Refactor SortBenchmark to use main method

## What changes were proposed in this pull request?

Refactor SortBenchmark to use main method.

Generate benchmark result:

```

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "sql/test:runMain

org.apache.spark.sql.execution.benchmark.SortBenchmark"

```

## How was this patch tested?

manual tests

Closes #22495 from yucai/SPARK-25486.

Authored-by: yucai

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/04db0353

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/04db0353

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/04db0353

Branch: refs/heads/master

Commit: 04db035378012907c93f6e5b4faa6ec11f1fc67b

Parents: 9cbd001

Author: yucai

Authored: Tue Sep 25 11:13:05 2018 -0700

Committer: Dongjoon Hyun

Committed: Tue Sep 25 11:13:05 2018 -0700

--

sql/core/benchmarks/SortBenchmark-results.txt | 17 +

.../sql/execution/benchmark/SortBenchmark.scala | 38 +---

2 files changed, 33 insertions(+), 22 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/04db0353/sql/core/benchmarks/SortBenchmark-results.txt

--

diff --git a/sql/core/benchmarks/SortBenchmark-results.txt

b/sql/core/benchmarks/SortBenchmark-results.txt

new file mode 100644

index 000..0d00a0c

--- /dev/null

+++ b/sql/core/benchmarks/SortBenchmark-results.txt

@@ -0,0 +1,17 @@

+

+radix sort

+

+

+Java HotSpot(TM) 64-Bit Server VM 1.8.0_162-b12 on Mac OS X 10.13.6

+Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz

+

+radix sort 2500:                       Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative

+

+reference TimSort key prefix array        11770 / 11960          2.1         470.8       1.0X

+reference Arrays.sort                      2106 /  2128         11.9          84.3       5.6X

+radix sort one byte                          93 /   100        269.7           3.7     126.9X

+radix sort two bytes                        171 /   179        146.0           6.9      68.7X

+radix sort eight bytes                      659 /   664         37.9          26.4      17.9X

+radix sort key prefix array                1024 /  1053         24.4          41.0      11.5X

+

+

http://git-wip-us.apache.org/repos/asf/spark/blob/04db0353/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/SortBenchmark.scala

--

diff --git

a/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/SortBenchmark.scala

b/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/SortBenchmark.scala

index 17619ec..958a064 100644

---

a/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/SortBenchmark.scala

+++

b/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/SortBenchmark.scala

@@ -19,7 +19,7 @@ package org.apache.spark.sql.execution.benchmark

import java.util.{Arrays, Comparator}

-import org.apache.spark.benchmark.Benchmark

+import org.apache.spark.benchmark.{Benchmark, BenchmarkBase}

import org.apache.spark.unsafe.array.LongArray

import org.apache.spark.unsafe.memory.MemoryBlock

import org.apache.spark.util.collection.Sorter

@@ -28,12 +28,15 @@ import org.apache.spark.util.random.XORShiftRandom

/**

* Benchmark to measure performance for aggregate primitives.

- * To run this:

- * build/sbt "sql/test-only *benchmark.SortBenchmark"

- *

- * Benchmarks in this file are skipped in normal builds.

+ * {{{

+ * To run this benchmark:

+ * 1. without sbt: bin/spark-submit --class

+ * 2. build/sbt "sql/test:runMain "

+ * 3. generate result: SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt

"sql/test:runMain "

+ * Results will be written to "benchmarks/-results.txt".

+ * }}}

*/

-class SortBenchmark extends BenchmarkWithCodegen {

+object SortBenchmark extends BenchmarkBase {

private def referenceKeyPrefixSort(buf: LongArray, lo: Int, hi: Int, refCmp:

PrefixComparator) {

val sortBuffer = new LongArray(MemoryBlock.fromLongArray(new

Array[Long](buf.size().toInt)))

@@ -54,10 +57,10 @@ class SortBenchmark extends BenchmarkWithCodegen {

new LongArray(MemoryBlock.fromLongArray(extended)))

}

- ignore("sort") {

+ def sortBenchmark(): Unit = {

val size = 2500

spark git commit: [SPARK-25534][SQL] Make `SQLHelper` trait

Repository: spark

Updated Branches:

refs/heads/master 473d0d862 -> 81cbcca60

[SPARK-25534][SQL] Make `SQLHelper` trait

## What changes were proposed in this pull request?

Currently, Spark has 7 `withTempPath` and 6 `withSQLConf` functions. This PR

aims to remove duplicated and inconsistent code and reduce them to the

following meaningful implementations.

**withTempPath**

- `SQLHelper.withTempPath`: The one which was used in `SQLTestUtils`.

**withSQLConf**

- `SQLHelper.withSQLConf`: The one which was used in `PlanTest`.

- `ExecutorSideSQLConfSuite.withSQLConf`: The one which doesn't throw

`AnalysisException` on StaticConf changes.

- `SQLTestUtils.withSQLConf`: The one which overrides intentionally to change

the active session.

```scala

protected override def withSQLConf(pairs: (String, String)*)(f: => Unit):

Unit = {

SparkSession.setActiveSession(spark)

super.withSQLConf(pairs: _*)(f)

}

```
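
The set-run-restore pattern behind `withSQLConf` can be sketched without Spark. In the sketch below a mutable map stands in for `SQLConf`; `ConfScopeSketch` and `withConf` are hypothetical names, and the static-config check from the original helper is left out.

```scala
import scala.collection.mutable

object ConfScopeSketch {
  // Stand-in for the session configuration (an assumption of this sketch).
  val conf: mutable.Map[String, String] = mutable.Map.empty

  // Sets all `pairs`, runs `f`, then restores the previous values,
  // removing keys that were unset before the call.
  def withConf(pairs: (String, String)*)(f: => Unit): Unit = {
    val keys = pairs.map(_._1)
    val previous = keys.map(conf.get)
    pairs.foreach { case (k, v) => conf(k) = v }
    try f finally {
      keys.zip(previous).foreach {
        case (k, Some(v)) => conf(k) = v
        case (k, None) => conf.remove(k)
      }
    }
  }

  def main(args: Array[String]): Unit = {
    // Made-up keys, for illustration only.
    conf("example.key") = "old"
    withConf("example.key" -> "new", "example.other" -> "x") {
      println(conf)
    }
    println(conf)
  }
}
```

Restoring inside `finally` means the previous values come back even when the body throws, which matters for tests that share one session.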

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #22548 from dongjoon-hyun/SPARK-25534.

Authored-by: Dongjoon Hyun

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/81cbcca6

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/81cbcca6

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/81cbcca6

Branch: refs/heads/master

Commit: 81cbcca60099fd267492769b465d01e90d7deeac

Parents: 473d0d8

Author: Dongjoon Hyun

Authored: Tue Sep 25 23:03:54 2018 -0700

Committer: Dongjoon Hyun

Committed: Tue Sep 25 23:03:54 2018 -0700

--

.../spark/sql/catalyst/plans/PlanTest.scala | 31 +-

.../spark/sql/catalyst/plans/SQLHelper.scala| 64

.../benchmark/DataSourceReadBenchmark.scala | 23 +--

.../benchmark/FilterPushdownBenchmark.scala | 24 +---

.../datasources/csv/CSVBenchmarks.scala | 12 +---

.../datasources/json/JsonBenchmarks.scala | 11 +---

.../streaming/CheckpointFileManagerSuite.scala | 10 +--

.../apache/spark/sql/test/SQLTestUtils.scala| 13

.../spark/sql/hive/orc/OrcReadBenchmark.scala | 25 ++--

9 files changed, 81 insertions(+), 132 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/81cbcca6/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/PlanTest.scala

--

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/PlanTest.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/PlanTest.scala

index 67740c3..3081ff9 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/PlanTest.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/PlanTest.scala

@@ -22,7 +22,6 @@ import org.scalatest.Suite

import org.scalatest.Tag

import org.apache.spark.SparkFunSuite

-import org.apache.spark.sql.AnalysisException

import org.apache.spark.sql.catalyst.analysis.SimpleAnalyzer

import org.apache.spark.sql.catalyst.expressions._

import org.apache.spark.sql.catalyst.expressions.CodegenObjectFactoryMode

@@ -57,7 +56,7 @@ trait CodegenInterpretedPlanTest extends PlanTest {

* Provides helper methods for comparing plans, but without the overhead of

* mandating a FunSuite.

*/

-trait PlanTestBase extends PredicateHelper { self: Suite =>

+trait PlanTestBase extends PredicateHelper with SQLHelper { self: Suite =>

// TODO(gatorsmile): remove this from PlanTest and all the analyzer rules

protected def conf = SQLConf.get

@@ -174,32 +173,4 @@ trait PlanTestBase extends PredicateHelper { self: Suite =>

plan1 == plan2

}

}

-

- /**

- * Sets all SQL configurations specified in `pairs`, calls `f`, and then restores all SQL

- * configurations.

- */

- protected def withSQLConf(pairs: (String, String)*)(f: => Unit): Unit = {

-val conf = SQLConf.get

-val (keys, values) = pairs.unzip

-val currentValues = keys.map { key =>

- if (conf.contains(key)) {

-Some(conf.getConfString(key))

- } else {

-None

- }

-}

-(keys, values).zipped.foreach { (k, v) =>

- if (SQLConf.staticConfKeys.contains(k)) {

-throw new AnalysisException(s"Cannot modify the value of a static config: $k")

- }

- conf.setConfString(k, v)

-}

-try f finally {

- keys.zip(currentValues).foreach {

-case (key, Some(value)) => conf.setConfString(key, value)

-case (key, None) => conf.unsetConf(key)

- }

-}

- }

}

http://git-wip-us.apache.org/repos/asf/spark/blob/81cbcca6/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/plans/SQLHelper.scala

--

diff --git

a/sql/catalyst/src/t

spark git commit: [SPARK-25425][SQL][BACKPORT-2.3] Extra options should override session options in DataSource V2

Repository: spark

Updated Branches:

refs/heads/branch-2.3 9674d083e -> cbb228e48

[SPARK-25425][SQL][BACKPORT-2.3] Extra options should override session options in DataSource V2

## What changes were proposed in this pull request?

In this PR, I propose that extra options override session options in DataSource

V2. Extra options are more specific, being set via `.option()`, and should

take precedence over the more generic session options.
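
The precedence rule relies on how Scala's `++` merges maps: on a key collision, the entry from the right-hand map wins. A minimal sketch, using plain immutable maps and hypothetical option keys rather than the real `DataSourceOptions` plumbing:

```scala
// Hypothetical stand-ins for the two option sources; keys and values are illustrative only.
object OptionPrecedence {
  val sessionOptions: Map[String, String] =
    Map("path" -> "/session/default", "mode" -> "strict")
  val extraOptions: Map[String, String] =
    Map("path" -> "/explicit/path")

  // The right-hand operand of ++ wins on duplicate keys,
  // so extra options override session options.
  val merged: Map[String, String] = sessionOptions ++ extraOptions

  // The operand order before the fix, for contrast: session options win instead.
  val mergedOldOrder: Map[String, String] = extraOptions ++ sessionOptions

  def main(args: Array[String]): Unit = {
    println(merged)
    println(mergedOldOrder)
  }
}
```

Keys present in only one of the maps (here `mode`) survive the merge either way; only the colliding key depends on operand order.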

## How was this patch tested?

Added tests for read and write paths.

Closes #22489 from MaxGekk/session-options-2.3.

Authored-by: Maxim Gekk

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/cbb228e4

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/cbb228e4

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/cbb228e4

Branch: refs/heads/branch-2.3

Commit: cbb228e48bb046e7d88d6bf1c9b9e3b252241552

Parents: 9674d08

Author: Maxim Gekk

Authored: Tue Sep 25 23:35:57 2018 -0700

Committer: Dongjoon Hyun

Committed: Tue Sep 25 23:35:57 2018 -0700

--

.../org/apache/spark/sql/DataFrameReader.scala | 8 ++--

.../org/apache/spark/sql/DataFrameWriter.scala | 8 ++--

.../sql/sources/v2/DataSourceV2Suite.scala | 50

.../sources/v2/SimpleWritableDataSource.scala | 7 ++-

4 files changed, 56 insertions(+), 17 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/cbb228e4/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

index 395e1c9..1d74b35 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

@@ -190,10 +190,10 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

val cls = DataSource.lookupDataSource(source, sparkSession.sessionState.conf)

if (classOf[DataSourceV2].isAssignableFrom(cls)) {

val ds = cls.newInstance()

- val options = new DataSourceOptions((extraOptions ++

-DataSourceV2Utils.extractSessionConfigs(

- ds = ds.asInstanceOf[DataSourceV2],

- conf = sparkSession.sessionState.conf)).asJava)

+ val sessionOptions = DataSourceV2Utils.extractSessionConfigs(

+ds = ds.asInstanceOf[DataSourceV2],

+conf = sparkSession.sessionState.conf)

+ val options = new DataSourceOptions((sessionOptions ++ extraOptions).asJava)

// Streaming also uses the data source V2 API. So it may be that the data source implements

// v2, but has no v2 implementation for batch reads. In that case, we fall back to loading

http://git-wip-us.apache.org/repos/asf/spark/blob/cbb228e4/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index 6c9fb52..3fcefb1 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -243,10 +243,10 @@ final class DataFrameWriter[T] private[sql](ds: Dataset[T]) {

val ds = cls.newInstance()

ds match {

case ws: WriteSupport =>

- val options = new DataSourceOptions((extraOptions ++

-DataSourceV2Utils.extractSessionConfigs(

- ds = ds.asInstanceOf[DataSourceV2],

- conf = df.sparkSession.sessionState.conf)).asJava)

+ val sessionOptions = DataSourceV2Utils.extractSessionConfigs(

+ds = ds.asInstanceOf[DataSourceV2],

+conf = df.sparkSession.sessionState.conf)

+ val options = new DataSourceOptions((sessionOptions ++ extraOptions).asJava)

// Using a timestamp and a random UUID to distinguish different writing jobs. This is good

// enough as there won't be tons of writing jobs created at the same second.

val jobId = new SimpleDateFormat("MMddHHmmss", Locale.US)

http://git-wip-us.apache.org/repos/asf/spark/blob/cbb228e4/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

--

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala b/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

index 6ad0e5f..ec81e89 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/sources/v2/DataSourceV2Suite.scala

+++

spark git commit: [SPARK-24519][CORE] Compute SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS only once

Repository: spark

Updated Branches:

refs/heads/master bd2ae857d -> e702fb1d5

[SPARK-24519][CORE] Compute SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS only once

## What changes were proposed in this pull request?

Previously, SPARK-24519 created a modifiable config,

SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS. However, the config is parsed

on every creation of a MapStatus, which can be very expensive. Another problem

with the previous approach is that it created the illusion that the value can be

changed dynamically at runtime, which was not true. This PR changes it so that the

config is computed only once.
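
The difference between parsing a config on every use and computing it once can be sketched with a `def` versus a `lazy val`. The `FakeConf` and `Thresholds` classes and the `min.parts` key below are hypothetical; they illustrate the general pattern only, not the actual change to `MapStatus`:

```scala
// Hypothetical config holder that counts how often a value is parsed.
class FakeConf(raw: Map[String, String]) {
  var parseCount = 0

  def getInt(key: String, default: Int): Int = {
    parseCount += 1
    raw.get(key).map(_.toInt).getOrElse(default)
  }
}

// Hypothetical consumer exposing both access styles side by side.
class Thresholds(conf: FakeConf) {
  // Re-parsed on every access: costly when called on a hot path.
  def perCall: Int = conf.getInt("min.parts", 2000)

  // Parsed at most once, on first access; later config changes are not observed.
  lazy val once: Int = conf.getInt("min.parts", 2000)
}
```

The trade-off matches the one described above: the cached value is cheap to read, but it makes explicit that the setting cannot be changed after it has first been read.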

## How was this patch tested?

Removed a test case that's no longer valid.

Closes #22521 from rxin/SPARK-24519.

Authored-by: Reynold Xin

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/e702fb1d

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/e702fb1d

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/e702fb1d

Branch: refs/heads/master

Commit: e702fb1d5218d062fcb8e618b92dad7958eb4062

Parents: bd2ae85

Author: Reynold Xin

Authored: Wed Sep 26 10:15:16 2018 -0700

Committer: Dongjoon Hyun

Committed: Wed Sep 26 10:15:16 2018 -0700

--

.../org/apache/spark/scheduler/MapStatus.scala | 12 ++---

.../apache/spark/scheduler/MapStatusSuite.scala | 28

2 files changed, 9 insertions(+), 31 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/e702fb1d/core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

--

diff --git a/core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala b/core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

index 659694d..0e221ed 100644

--- a/core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

+++ b/core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

@@ -49,10 +49,16 @@ private[spark] sealed trait MapStatus {

private[spark] object MapStatus {

+ /**

+ * Min partition number to use [[HighlyCompressedMapStatus]]. A bit ugly here because in test