This is an automated email from the ASF dual-hosted git repository.

jshao pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-livy.git

The following commit(s) were added to refs/heads/master by this push:

new 25542e4 [LIVY-727] Fix session state always be idle though the yarn application has been killed after restart livy

25542e4 is described below

commit 25542e4e78b39a3c9b9426a70a92ca7c183daea3

Author: runzhiwang <runzhiw...@tencent.com>

AuthorDate: Thu Dec 19 14:29:01 2019 +0800

[LIVY-727] Fix session state always be idle though the yarn application has been killed after restart livy

## What changes were proposed in this pull request?

[LIVY-727] Fix the session state staying idle forever even though the YARN application has been killed after Livy is restarted.

Follows are steps to reproduce the problem:

1. Set livy.server.recovery.mode=recovery, and create a session in

yarn-cluster

2. Restart livy

3. Kill the yarn application of the session.

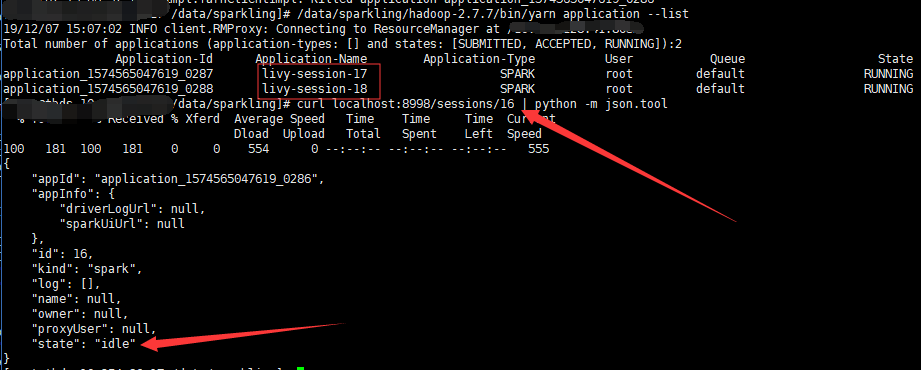

4. The session state will always be idle and never change to killed or

dead. Just as the image, livy-session-16 has been killed in yarn, but the state

is still idle.

The causes of the problem are as follows:

1. When a session is recovered, Livy does not call startDriver again, so driverProcess is None.

2. SparkYarnApp is only created inside `driverProcess.map { _ => SparkApp.create(appTag, appId, driverProcess, livyConf, Some(this)) }`, so it is never created when driverProcess is None.

3. As a result, the session's yarnAppMonitorThread never starts, and the session state never changes.
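The three causes above come down to how `Option.map` behaves on `None`. The Scala sketch below is a hypothetical, heavily simplified model of the pre-fix code path. `FakeProcess` and `FakeApp` are made-up stand-ins for `LineBufferedProcess` and `SparkApp`, not Livy classes.

```scala
object PreFixRecovery {
  // Hypothetical stand-ins for LineBufferedProcess and SparkApp (not Livy classes).
  final case class FakeProcess(name: String)
  final case class FakeApp(appTag: String, driver: Option[FakeProcess])

  // Same shape as `driverProcess.map { _ => SparkApp.create(...) }`:
  // an app (and therefore a YARN monitor) only exists if a driver process exists.
  def createApp(appTag: String, driverProcess: Option[FakeProcess]): Option[FakeApp] =
    driverProcess.map { _ => FakeApp(appTag, driverProcess) }

  def main(args: Array[String]): Unit = {
    // Fresh session: startDriver ran, so a driver process handle is available.
    println(createApp("livy-session-16", Some(FakeProcess("spark-submit"))))
    // Recovered session: startDriver is not called again, so there is no handle.
    println(createApp("livy-session-16", None)) // prints None: nothing watches YARN
  }
}
```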

How to fix the bug:

1. If Livy runs on YARN, SparkApp is created even when driverProcess is None.

2. If Livy does not run on YARN, SparkApp is still not created when driverProcess is None. The code requires driverProcess to be defined at https://github.com/apache/incubator-livy/blob/master/server/src/main/scala/org/apache/livy/utils/SparkApp.scala#L93, and I don't want to change that behavior.
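The patched rule can be modelled in the same way. In this hypothetical sketch, `DriverHandle` and `MonitoredApp` are made-up stand-ins, and the `runningOnYarn` parameter plays the role of `livyConf.isRunningOnYarn()`. Only the condition itself is taken from the patch.

```scala
object PostFixRecovery {
  // Hypothetical stand-ins for LineBufferedProcess and SparkApp (not Livy classes).
  final case class DriverHandle(name: String)
  final case class MonitoredApp(appTag: String, driver: Option[DriverHandle])

  // Condition from the patch: on YARN, create the app even without a local
  // driver process; elsewhere, keep requiring a driver process as before.
  def createApp(
      appTag: String,
      driverProcess: Option[DriverHandle],
      runningOnYarn: Boolean): Option[MonitoredApp] =
    if (runningOnYarn || driverProcess.isDefined) {
      Some(MonitoredApp(appTag, driverProcess))
    } else {
      None
    }

  def main(args: Array[String]): Unit = {
    val handle = Some(DriverHandle("spark-submit"))
    // Print all four combinations of (running on YARN?, driver process present?).
    for (onYarn <- Seq(true, false); driver <- Seq(handle, None)) {
      val created = createApp("livy-session-16", driver, onYarn).isDefined
      println(s"onYarn=$onYarn driverDefined=${driver.isDefined} appCreated=$created")
    }
  }
}
```

Only the non-YARN case without a driver process yields no app. This matches the behavior that point 2 leaves unchanged.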

## How was this patch tested?

1. Set livy.server.recovery.mode=recovery, and create a session in yarn-cluster mode.

2. Restart Livy.

3. Kill the YARN application of the session.

4. The session state changes to killed.
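The expected outcome of these test steps can be illustrated with a toy replay of a monitor thread polling YARN. The state names and the mapping are simplifying assumptions for illustration only: a running application keeps the session `idle`, a killed one becomes `killed`, and a failed one becomes `dead`. This is not Livy's actual `SparkYarnApp` state handling.

```scala
object RecoveredSessionMonitor {
  // Hypothetical, simplified YARN application states (not the Hadoop enum).
  sealed trait YarnAppState
  case object YarnRunning extends YarnAppState
  case object YarnKilled extends YarnAppState
  case object YarnFailed extends YarnAppState

  // Assumed mapping from a polled YARN state to a session state name.
  def toSessionState(s: YarnAppState): String = s match {
    case YarnRunning => "idle"
    case YarnKilled  => "killed"
    case YarnFailed  => "dead"
  }

  // Replays polled YARN states. Without a monitor (the pre-fix recovery path)
  // the session keeps the idle state it was recovered with.
  def replay(monitorStarted: Boolean, polls: Seq[YarnAppState]): String = {
    var state = "idle" // state restored after the Livy restart
    if (monitorStarted) {
      // Stop at the first non-idle (terminal) state, as a monitor thread would.
      val it = polls.iterator
      while (it.hasNext && state == "idle") state = toSessionState(it.next())
    }
    state
  }

  def main(args: Array[String]): Unit = {
    val polls = Seq(YarnRunning, YarnRunning, YarnKilled) // step 3 kills the app
    println(replay(monitorStarted = false, polls)) // before the fix: idle
    println(replay(monitorStarted = true, polls))  // after the fix: killed
  }
}
```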

Author: runzhiwang <runzhiw...@tencent.com>

Closes #265 from runzhiwang/session-state.

---

.../org/apache/livy/server/interactive/InteractiveSession.scala | 7 ++++++-

1 file changed, 6 insertions(+), 1 deletion(-)

diff --git a/server/src/main/scala/org/apache/livy/server/interactive/InteractiveSession.scala b/server/src/main/scala/org/apache/livy/server/interactive/InteractiveSession.scala
index 4b318b8..790bd5a 100644
--- a/server/src/main/scala/org/apache/livy/server/interactive/InteractiveSession.scala
+++ b/server/src/main/scala/org/apache/livy/server/interactive/InteractiveSession.scala

@@ -399,7 +399,12 @@ class InteractiveSession(
     app = mockApp.orElse {
       val driverProcess = client.flatMap { c => Option(c.getDriverProcess) }
         .map(new LineBufferedProcess(_, livyConf.getInt(LivyConf.SPARK_LOGS_SIZE)))
-      driverProcess.map { _ => SparkApp.create(appTag, appId, driverProcess, livyConf, Some(this)) }
+
+      if (livyConf.isRunningOnYarn() || driverProcess.isDefined) {
+        Some(SparkApp.create(appTag, appId, driverProcess, livyConf, Some(this)))
+      } else {
+        None
+      }
     }
 
     if (client.isEmpty) {