[GitHub] [hadoop] JohnZZGithub closed pull request #2204: YARN-10393. Make the heartbeat request from NM to RM consistent across heartbeat ID.

JohnZZGithub closed pull request #2204: URL: https://github.com/apache/hadoop/pull/2204 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ThomasMarquardt closed pull request #2337: ABFS: Backport ABFS updates from trunk to Branch 3.3

ThomasMarquardt closed pull request #2337: URL: https://github.com/apache/hadoop/pull/2337

[GitHub] [hadoop] ThomasMarquardt commented on pull request #2337: ABFS: Backport ABFS updates from trunk to Branch 3.3

ThomasMarquardt commented on pull request #2337: URL: https://github.com/apache/hadoop/pull/2337#issuecomment-708705355 These have been backported to branch-3.3.

[jira] [Updated] (HADOOP-17301) ABFS: read-ahead error reporting breaks buffer management

[ https://issues.apache.org/jira/browse/HADOOP-17301?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17301:
-
Fix Version/s: 3.4.0 3.3.1
Resolution: Fixed
Status: Resolved (was: Patch Available)

Pushed to trunk in commit c4fff74 and backported to branch-3.3 in commit d5b4d04. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: read-ahead error reporting breaks buffer management
> -
>
> Key: HADOOP-17301
> URL: https://issues.apache.org/jira/browse/HADOOP-17301
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Sneha Vijayarajan
> Assignee: Sneha Vijayarajan
> Priority: Critical
> Labels: pull-request-available
> Fix For: 3.3.1, 3.4.0
> Time Spent: 3h 50m
> Remaining Estimate: 0h
>
> When reads done by read-ahead buffers failed, the exceptions were dropped and the failure was not reported to the calling app.
> Jira HADOOP-16852 (Report read-ahead error back) tried to handle the scenario by reporting the error back to the calling app. But that commit introduced a bug which can lead to a ReadBuffer being injected into the read-completed queue twice.

-- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Updated] (HADOOP-17279) ABFS: Test testNegativeScenariosForCreateOverwriteDisabled fails for non-HNS account

[ https://issues.apache.org/jira/browse/HADOOP-17279?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17279:
-
Fix Version/s: 3.3.1

Backported to branch-3.3 in commit da5db6a. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Test testNegativeScenariosForCreateOverwriteDisabled fails for non-HNS account
> -
>
> Key: HADOOP-17279
> URL: https://issues.apache.org/jira/browse/HADOOP-17279
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Sneha Vijayarajan
> Assignee: Sneha Vijayarajan
> Priority: Major
> Labels: pull-request-available
> Fix For: 3.3.1, 3.4.0
> Time Spent: 1h
> Remaining Estimate: 0h
>
> Test testNegativeScenariosForCreateOverwriteDisabled fails when run against a non-HNS account. The test creates a mock AbfsClient to mimic negative scenarios.
> Mock is triggered for valid values that come in for permission and umask while creating a file. Permission and umask get defaulted to null values with driver when creating a file for a nonHNS account. The mock trigger was not enabled for these null parameters.

[jira] [Updated] (HADOOP-17215) ABFS: Support for conditional overwrite

[ https://issues.apache.org/jira/browse/HADOOP-17215?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17215:
-
Fix Version/s: 3.3.1

Backported to branch-3.3 in commit d166420. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Support for conditional overwrite
> ---
>
> Key: HADOOP-17215
> URL: https://issues.apache.org/jira/browse/HADOOP-17215
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Sneha Vijayarajan
> Assignee: Sneha Vijayarajan
> Priority: Major
> Labels: abfsactive
> Fix For: 3.3.1, 3.4.0
>
> Filesystem Create APIs that do not accept an argument for the overwrite flag end up defaulting it to true.
> We are observing that the request count of creates with overwrite=true is higher, primarily because the flag defaults to true in the called Create API. When a create with overwrite ends up timing out, we have observed that it can lead to race conditions between the first create and the retried one running almost in parallel.
> To avoid this scenario for a create request with overwrite=true, the ABFS driver will always first attempt to create without overwrite. If the create fails due to fileAlreadyPresent, it will resend the request with overwrite=true.
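The create-then-retry flow described in HADOOP-17215 can be sketched roughly as follows. This is only an illustration against a hypothetical in-memory store: `ConditionalOverwriteSketch`, `createOnStore`, and `FileAlreadyPresentException` are invented names for this sketch and are not the ABFS driver's classes or API.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch only; all names here are hypothetical, not ABFS driver API. */
class ConditionalOverwriteSketch {

  /** Stand-in for the store's "file already present" failure. */
  static class FileAlreadyPresentException extends Exception {
    FileAlreadyPresentException(String path) {
      super("File already present: " + path);
    }
  }

  private final Map<String, byte[]> files = new HashMap<>();
  int requestCount = 0; // number of create requests sent to the fake store

  /** Simulated store-side create: rejects an existing path unless overwrite is set. */
  void createOnStore(String path, boolean overwrite) throws FileAlreadyPresentException {
    requestCount++;
    if (!overwrite && files.containsKey(path)) {
      throw new FileAlreadyPresentException(path);
    }
    files.put(path, new byte[0]);
  }

  /** Caller asked for overwrite=true: try without overwrite first, then resend with it. */
  void createWithOverwrite(String path) {
    try {
      createOnStore(path, false);
    } catch (FileAlreadyPresentException alreadyThere) {
      try {
        createOnStore(path, true);
      } catch (FileAlreadyPresentException unexpected) {
        // Cannot happen in this sketch: overwrite=true never raises it.
        throw new IllegalStateException(unexpected);
      }
    }
  }
}
```

In this sketch a create on a new path costs one request, while a create on an existing path costs two; the second request is sent only after the store has reported that the file exists.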

[jira] [Updated] (HADOOP-17166) ABFS: configure output stream thread pool

[ https://issues.apache.org/jira/browse/HADOOP-17166?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17166:
-
Fix Version/s: 3.3.1
Resolution: Fixed
Status: Resolved (was: Patch Available)

Pushed to trunk in commit 8511926 and backported to branch-3.3 in commit f208da2. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: configure output stream thread pool
> -
>
> Key: HADOOP-17166
> URL: https://issues.apache.org/jira/browse/HADOOP-17166
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Bilahari T H
> Assignee: Bilahari T H
> Priority: Minor
> Labels: pull-request-available
> Fix For: 3.3.1, 3.4.0
> Time Spent: 1h 50m
> Remaining Estimate: 0h

[jira] [Resolved] (HADOOP-16915) ABFS: Test failure ITestAzureBlobFileSystemRandomRead.testRandomReadPerformance

[ https://issues.apache.org/jira/browse/HADOOP-16915?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt resolved HADOOP-16915.
--
Fix Version/s: 3.4.0 3.3.1
Resolution: Fixed

Pushed to trunk in commit 64f36b9 and backported to branch-3.3 in commit cc73503. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Test failure ITestAzureBlobFileSystemRandomRead.testRandomReadPerformance
> ---
>
> Key: HADOOP-16915
> URL: https://issues.apache.org/jira/browse/HADOOP-16915
> Project: Hadoop Common
> Issue Type: Sub-task
> Reporter: Bilahari T H
> Assignee: Bilahari T H
> Priority: Major
> Labels: abfsactive
> Fix For: 3.3.1, 3.4.0
>
> Ref: https://issues.apache.org/jira/browse/HADOOP-16890
> The following test fails randomly. This test compares the perf between Non HNS account against WASB.
> ITestAzureBlobFileSystemRandomRead.testRandomReadPerformance

[jira] [Updated] (HADOOP-16966) ABFS: Upgrade Store REST API Version to 2019-12-12

[ https://issues.apache.org/jira/browse/HADOOP-16966?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-16966:
-
Fix Version/s: 3.3.1

Backported to branch-3.3 in commit 4072323. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Upgrade Store REST API Version to 2019-12-12
> --
>
> Key: HADOOP-16966
> URL: https://issues.apache.org/jira/browse/HADOOP-16966
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Ishani
> Assignee: Sneha Vijayarajan
> Priority: Major
> Labels: abfsactive
> Fix For: 3.3.1
>
> Store REST API version on the backend clusters has been upgraded to 2019-12-12. This Jira will align the Driver requests to reflect this latest API version.

[jira] [Updated] (HADOOP-17149) ABFS: Test failure: testFailedRequestWhenCredentialsNotCorrect fails when run with SharedKey

[ https://issues.apache.org/jira/browse/HADOOP-17149?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17149:
-
Fix Version/s: 3.3.1

Backported to branch-3.3 in commit e481d01. All tests passing against my account in eastus2euap:

namespace.enabled=true
auth.type=SharedKey
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

namespace.enabled=true
auth.type=OAuth
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Test failure: testFailedRequestWhenCredentialsNotCorrect fails when run with SharedKey
> -
>
> Key: HADOOP-17149
> URL: https://issues.apache.org/jira/browse/HADOOP-17149
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.1
> Reporter: Sneha Vijayarajan
> Assignee: Bilahari T H
> Priority: Minor
> Labels: abfsactive
> Fix For: 3.3.1, 3.4.0
>
> When authentication is set to SharedKey, the test below fails.
>
> [ERROR] ITestGetNameSpaceEnabled.testFailedRequestWhenCredentialsNotCorrect:161 Expecting org.apache.hadoop.fs.azurebfs.contracts.exceptions.AbfsRestOperationException with text "Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.", 403 but got : "void"
>
> This test fails when the newly introduced config "fs.azure.account.hns.enabled" is set. This config avoids the network call that checks whether namespace is enabled, whereas the test expects this call to be made.
>
> The assert on 403 in the test needs checking too. It should ideally be 401.

[jira] [Updated] (HADOOP-17163) ABFS: Add debug log for rename failures

[ https://issues.apache.org/jira/browse/HADOOP-17163?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Thomas Marqardt updated HADOOP-17163:
-
Fix Version/s: 3.3.1

Backported to branch-3.3 in commit f73c90f. All tests passing against my account in eastus2euap:

+namespace.enabled=true+
+auth.type=SharedKey+
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 24
Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

+namespace.enabled=true+
+auth.type=OAuth+
$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify
Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
Tests run: 457, Failures: 0, Errors: 0, Skipped: 66
Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Add debug log for rename failures
> ---
>
> Key: HADOOP-17163
> URL: https://issues.apache.org/jira/browse/HADOOP-17163
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/azure
> Affects Versions: 3.3.0
> Reporter: Bilahari T H
> Assignee: Bilahari T H
> Priority: Major
> Fix For: 3.3.1, 3.4.0
>
> The JIRA [HADOOP-16281|https://issues.apache.org/jira/browse/HADOOP-16281] has not yet been concluded. Until then the log line could help debugging.

[jira] [Updated] (HADOOP-17137) ABFS: Tests ITestAbfsNetworkStatistics need to be config setting agnostic

[

https://issues.apache.org/jira/browse/HADOOP-17137?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Thomas Marqardt updated HADOOP-17137:

-

Fix Version/s: 3.3.1

Backported to branch-3.3 in commit fbf151ef. All tests passing against my

account in eastus2euap:

+namespace.enabled=true+

+auth.type=SharedKey+

$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify

Tests run: 88, Failures: 0, Errors: 0, Skipped: 0

Tests run: 457, Failures: 0, Errors: 0, Skipped: 24

Tests run: 208, Failures: 0, Errors: 0, Skipped: 24

+namespace.enabled=true+

+auth.type=OAuth+

$mvn -T 1C -Dparallel-tests=abfs -Dscale -DtestsThreadCount=8 clean verify

Tests run: 88, Failures: 0, Errors: 0, Skipped: 0

Tests run: 457, Failures: 0, Errors: 0, Skipped: 66

Tests run: 208, Failures: 0, Errors: 0, Skipped: 141

> ABFS: Tests ITestAbfsNetworkStatistics need to be config setting agnostic

> -

>

> Key: HADOOP-17137

> URL: https://issues.apache.org/jira/browse/HADOOP-17137

> Project: Hadoop Common

> Issue Type: Sub-task

> Components: fs/azure, test

>Affects Versions: 3.3.0

>Reporter: Sneha Vijayarajan

>Assignee: Bilahari T H

>Priority: Minor

> Labels: abfsactive

> Fix For: 3.3.1, 3.4.0

>

>

> Tests in ITestAbfsNetworkStatistics assert a static number of

> network calls made from the start of filesystem instance creation. But this

> number of calls depends on certain config settings, such as those that allow

> creation of the container or mark the account as HNS enabled to avoid the GetAcl call.

>

> The tests need to be modified to ensure that count asserts are made for the

> requests made by the tests alone.
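One way to make such count assertions independent of setup-time calls is to snapshot the counter before the operation under test and assert only on the difference. The sketch below illustrates the idea with a hypothetical counter and a simulated setup step; `DeltaAssertSketch`, `initFileSystem`, and `operationUnderTest` are invented for illustration and are not the helpers used in ITestAbfsNetworkStatistics.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Illustrative sketch only; names are hypothetical, not the ABFS test helpers. */
class DeltaAssertSketch {

  /** Stand-in for a filesystem-wide statistic such as connections_made. */
  static final AtomicLong connectionsMade = new AtomicLong();

  /** Simulated filesystem setup whose call count varies with configuration. */
  static void initFileSystem(boolean probeNamespace) {
    connectionsMade.incrementAndGet();     // e.g. container creation
    if (probeNamespace) {
      connectionsMade.incrementAndGet();   // e.g. an extra GetAcl-style probe
    }
  }

  /** The operation whose network calls the test actually wants to count. */
  static void operationUnderTest() {
    connectionsMade.addAndGet(2);
  }

  /** Returns how many calls the operation made, ignoring anything counted earlier. */
  static long measure(Runnable operation) {
    long before = connectionsMade.get();
    operation.run();
    return connectionsMade.get() - before;
  }
}
```

With this shape, a test would assert that `measure(DeltaAssertSketch::operationUnderTest)` equals 2 whether or not the setup step performed the extra probe.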

>

> {code:java}

> [INFO] Running org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics[INFO]

> Running org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics[ERROR] Tests

> run: 2, Failures: 2, Errors: 0, Skipped: 0, Time elapsed: 4.148 s <<<

> FAILURE! - in org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics[ERROR]

> testAbfsHttpResponseStatistics(org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics)

> Time elapsed: 4.148 s <<< FAILURE!java.lang.AssertionError: Mismatch in

> get_responses expected:<8> but was:<7> at

> org.junit.Assert.fail(Assert.java:88) at

> org.junit.Assert.failNotEquals(Assert.java:834) at

> org.junit.Assert.assertEquals(Assert.java:645) at

> org.apache.hadoop.fs.azurebfs.AbstractAbfsIntegrationTest.assertAbfsStatistics(AbstractAbfsIntegrationTest.java:445)

> at

> org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics.testAbfsHttpResponseStatistics(ITestAbfsNetworkStatistics.java:207)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498) at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55) at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:298)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:292)

> at java.util.concurrent.FutureTask.run(FutureTask.java:266) at

> java.lang.Thread.run(Thread.java:748)

> [ERROR]

> testAbfsHttpSendStatistics(org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics)

> Time elapsed: 2.987 s <<< FAILURE!java.lang.AssertionError: Mismatch in

> connections_made expected:<6> but was:<5> at

> org.junit.Assert.fail(Assert.java:88) at

> org.junit.Assert.failNotEquals(Assert.java:834) at

> org.junit.Assert.assertEquals(Assert.java:645) at

> org.apache.hadoop.fs.azurebfs.AbstractAbfsIntegrationTest.assertAbfsStatistics(AbstractAbfsIntegrationTest.java:445)

> at

> org.apache.hadoop.fs.azurebfs.ITestAbfsNetworkStatistics.testAbfsHttpSendStatistics(ITestAbfsNetworkStatistics.java:91)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at

[GitHub] [hadoop] ayushtkn opened a new pull request #2386: HDFS-15633. Avoid redundant RPC calls for getDiskStatus.

ayushtkn opened a new pull request #2386: URL: https://github.com/apache/hadoop/pull/2386 https://issues.apache.org/jira/browse/HDFS-15633

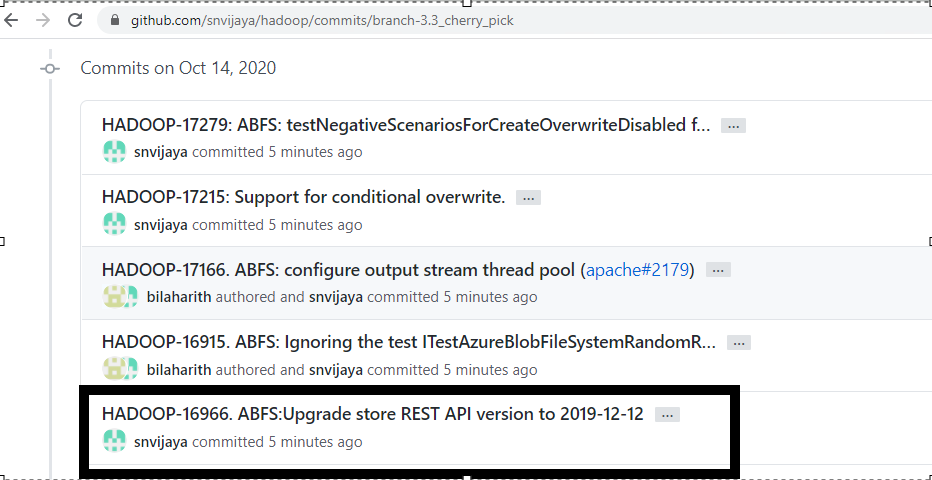

[GitHub] [hadoop] snvijaya commented on pull request #2337: ABFS: Backport ABFS updates from trunk to Branch 3.3

snvijaya commented on pull request #2337: URL: https://github.com/apache/hadoop/pull/2337#issuecomment-708585629 @steveloughran I just modified the commit message of the REST API version commit. But the PR doesn't show any new update, though the update is visible in the PR branch history. https://github.com/snvijaya/hadoop/commits/branch-3.3_cherry_pick Would this update be sufficient to retain the new commit message while merging?

[jira] [Work logged] (HADOOP-17272) ABFS Streams to support IOStatistics API

[

https://issues.apache.org/jira/browse/HADOOP-17272?focusedWorklogId=500776=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500776

]

ASF GitHub Bot logged work on HADOOP-17272:

---

Author: ASF GitHub Bot

Created on: 14/Oct/20 17:42

Start Date: 14/Oct/20 17:42

Worklog Time Spent: 10m

Work Description: steveloughran commented on a change in pull request

#2353:

URL: https://github.com/apache/hadoop/pull/2353#discussion_r504849729

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStream.java

##

@@ -279,7 +289,15 @@ int readRemote(long position, byte[] b, int offset, int

length) throws IOExcepti

AbfsPerfTracker tracker = client.getAbfsPerfTracker();

try (AbfsPerfInfo perfInfo = new AbfsPerfInfo(tracker, "readRemote",

"read")) {

LOG.trace("Trigger client.read for path={} position={} offset={}

length={}", path, position, offset, length);

- op = client.read(path, position, b, offset, length, tolerateOobAppends ?

"*" : eTag, cachedSasToken.get());

+ if (ioStatistics != null) {

Review comment:

the methods in IOStatisticsBinding now all take a null

DurationTrackerFactory, so you don't need the two branches here any more

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/AbfsStatistic.java

##

@@ -73,7 +73,36 @@

READ_THROTTLES("read_throttles",

"Total number of times a read operation is throttled."),

WRITE_THROTTLES("write_throttles",

- "Total number of times a write operation is throttled.");

+ "Total number of times a write operation is throttled."),

+

+ //OutputStream statistics.

+ BYTES_TO_UPLOAD("bytes_upload",

Review comment:

these should all be in hadoop-common *where possible*, so that we have

consistent names everywhere for aggregation

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStream.java

##

@@ -73,6 +78,8 @@

private long bytesFromReadAhead; // bytes read from readAhead; for testing

private long bytesFromRemoteRead; // bytes read remotely; for testing

+ private IOStatistics ioStatistics;

Review comment:

final?

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStreamStatisticsImpl.java

##

@@ -90,9 +107,7 @@ public void seek(long seekTo, long currentPos) {

*/

@Override

public void bytesRead(long bytes) {

-if (bytes > 0) {

- bytesRead += bytes;

-}

+ioStatisticsStore.incrementCounter(STREAM_READ_BYTES, bytes);

Review comment:

One thing to consider here is the cost of the map lookup on every IOP.

You can ask the IOStatisticsStore for a reference to the atomic counter, and

use that direct. I'm doing that for the output stream, reviewing this patch

makes me realise I should be doing it for read as well. at least the read byte

counters which are incremented on every read.

bytesUploaded = store.getCounterReference(

STREAM_WRITE_TOTAL_DATA.getSymbol());

bytesWritten = store.getCounterReference(

StreamStatisticNames.STREAM_WRITE_BYTES);
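The point of the review comment above, looking a counter up once and then incrementing it directly, can be sketched with plain JDK types. `SimpleStore` below is a hypothetical stand-in written for this illustration; it only mirrors the two method names mentioned in the comment and does not reproduce the real IOStatisticsStore interface.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

/** Illustrative sketch only; SimpleStore is hypothetical, not IOStatisticsStore. */
class CounterReferenceSketch {

  /** Minimal keyed counter store. */
  static class SimpleStore {
    private final Map<String, AtomicLong> counters = new ConcurrentHashMap<>();

    /** Returns the live counter for a key, creating it on first use. */
    AtomicLong getCounterReference(String key) {
      return counters.computeIfAbsent(key, k -> new AtomicLong());
    }

    /** Convenience path: pays for a map lookup on every call. */
    void incrementCounter(String key, long value) {
      getCounterReference(key).addAndGet(value);
    }

    long get(String key) {
      AtomicLong counter = counters.get(key);
      return counter == null ? 0L : counter.get();
    }
  }

  /** Stream statistics that resolve the hot counter once, in the constructor. */
  static class ReadStats {
    private final AtomicLong bytesRead;

    ReadStats(SimpleStore store) {
      this.bytesRead = store.getCounterReference("stream_read_bytes");
    }

    /** Hot path: no map lookup, just an atomic add. Non-positive values are ignored. */
    void bytesRead(long bytes) {
      if (bytes > 0) {
        bytesRead.addAndGet(bytes);
      }
    }
  }
}
```

Because `ReadStats` holds the same `AtomicLong` instance that the store keeps in its map, updates made through the reference remain visible to anything that reads the store by key.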

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsOutputStream.java

##

@@ -376,27 +384,31 @@ private synchronized void writeCurrentBufferToService()

throws IOException {

position += bytesLength;

if (threadExecutor.getQueue().size() >= maxRequestsThatCanBeQueued) {

- long start = System.currentTimeMillis();

- waitForTaskToComplete();

- outputStreamStatistics.timeSpentTaskWait(start,

System.currentTimeMillis());

+ //Tracking time spent on waiting for task to complete.

+ try (DurationTracker ignored =

outputStreamStatistics.timeSpentTaskWait()) {

+waitForTaskToComplete();

+ }

}

-final Future job = completionService.submit(new Callable() {

- @Override

- public Void call() throws Exception {

-AbfsPerfTracker tracker = client.getAbfsPerfTracker();

-try (AbfsPerfInfo perfInfo = new AbfsPerfInfo(tracker,

-"writeCurrentBufferToService", "append")) {

- AbfsRestOperation op = client.append(path, offset, bytes, 0,

- bytesLength, cachedSasToken.get(), false);

- cachedSasToken.update(op.getSasToken());

- perfInfo.registerResult(op.getResult());

- byteBufferPool.putBuffer(ByteBuffer.wrap(bytes));

- perfInfo.registerSuccess(true);

- return null;

-}

- }

-});

+final Future job =

+completionService.submit(IOStatisticsBinding

+.trackDurationOfCallable((IOStatisticsStore) ioStatistics,

+

[GitHub] [hadoop] steveloughran commented on a change in pull request #2353: HADOOP-17272. ABFS Streams to support IOStatistics API

steveloughran commented on a change in pull request #2353:

URL: https://github.com/apache/hadoop/pull/2353#discussion_r504849729

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStream.java

##

@@ -279,7 +289,15 @@ int readRemote(long position, byte[] b, int offset, int

length) throws IOExcepti

AbfsPerfTracker tracker = client.getAbfsPerfTracker();

try (AbfsPerfInfo perfInfo = new AbfsPerfInfo(tracker, "readRemote",

"read")) {

LOG.trace("Trigger client.read for path={} position={} offset={}

length={}", path, position, offset, length);

- op = client.read(path, position, b, offset, length, tolerateOobAppends ?

"*" : eTag, cachedSasToken.get());

+ if (ioStatistics != null) {

Review comment:

the methods in IOStatisticsBinding now all take a null

DurationTrackerFactory, so you don't need the two branches here any more

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/AbfsStatistic.java

##

@@ -73,7 +73,36 @@

READ_THROTTLES("read_throttles",

"Total number of times a read operation is throttled."),

WRITE_THROTTLES("write_throttles",

- "Total number of times a write operation is throttled.");

+ "Total number of times a write operation is throttled."),

+

+ //OutputStream statistics.

+ BYTES_TO_UPLOAD("bytes_upload",

Review comment:

these should all be in hadoop-common *where possible*, so that we have

consistent names everywhere for aggregation

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStream.java

##

@@ -73,6 +78,8 @@

private long bytesFromReadAhead; // bytes read from readAhead; for testing

private long bytesFromRemoteRead; // bytes read remotely; for testing

+ private IOStatistics ioStatistics;

Review comment:

final?

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsInputStreamStatisticsImpl.java

##

@@ -90,9 +107,7 @@ public void seek(long seekTo, long currentPos) {

*/

@Override

public void bytesRead(long bytes) {

-if (bytes > 0) {

- bytesRead += bytes;

-}

+ioStatisticsStore.incrementCounter(STREAM_READ_BYTES, bytes);

Review comment:

One thing to consider here is the cost of the map lookup on every IOP.

You can ask the IOStatisticsStore for a reference to the atomic counter, and

use that direct. I'm doing that for the output stream, reviewing this patch

makes me realise I should be doing it for read as well. at least the read byte

counters which are incremented on every read.

bytesUploaded = store.getCounterReference(

STREAM_WRITE_TOTAL_DATA.getSymbol());

bytesWritten = store.getCounterReference(

StreamStatisticNames.STREAM_WRITE_BYTES);

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsOutputStream.java

##

@@ -376,27 +384,31 @@ private synchronized void writeCurrentBufferToService()

throws IOException {

position += bytesLength;

if (threadExecutor.getQueue().size() >= maxRequestsThatCanBeQueued) {

- long start = System.currentTimeMillis();

- waitForTaskToComplete();

- outputStreamStatistics.timeSpentTaskWait(start,

System.currentTimeMillis());

+ //Tracking time spent on waiting for task to complete.

+ try (DurationTracker ignored =

outputStreamStatistics.timeSpentTaskWait()) {

+waitForTaskToComplete();

+ }

}

-final Future job = completionService.submit(new Callable() {

- @Override

- public Void call() throws Exception {

-AbfsPerfTracker tracker = client.getAbfsPerfTracker();

-try (AbfsPerfInfo perfInfo = new AbfsPerfInfo(tracker,

-"writeCurrentBufferToService", "append")) {

- AbfsRestOperation op = client.append(path, offset, bytes, 0,

- bytesLength, cachedSasToken.get(), false);

- cachedSasToken.update(op.getSasToken());

- perfInfo.registerResult(op.getResult());

- byteBufferPool.putBuffer(ByteBuffer.wrap(bytes));

- perfInfo.registerSuccess(true);

- return null;

-}

- }

-});

+final Future job =

+completionService.submit(IOStatisticsBinding

+.trackDurationOfCallable((IOStatisticsStore) ioStatistics,

+AbfsStatistic.TIME_SPENT_ON_PUT_REQUEST.getStatName(),

+() -> {

+ AbfsPerfTracker tracker = client.getAbfsPerfTracker();

Review comment:

Given that we are wrapping callables with callables, maybe the AbfsPerfTracker
could join in. Not needed for this patch, but later...
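
As an illustration of the "callables wrapping callables" pattern mentioned in this comment, here is a minimal sketch under stated assumptions. `SketchDurationTracking.trackDuration` is a hypothetical helper written for this example; it is not the Hadoop `IOStatisticsBinding.trackDurationOfCallable` method and makes no claim about how that method is implemented. It records elapsed time and an invocation count around an inner `Callable`, whether or not the inner call throws.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical helper; names and behaviour are assumptions for illustration. */
class SketchDurationTracking {

  /**
   * Wrap a callable so that each invocation adds its elapsed time to
   * totalNanos and bumps invocations, even when the inner call fails.
   */
  static <T> Callable<T> trackDuration(AtomicLong totalNanos,
      AtomicLong invocations, Callable<T> inner) {
    return () -> {
      long start = System.nanoTime();
      try {
        return inner.call();
      } finally {
        totalNanos.addAndGet(System.nanoTime() - start);
        invocations.incrementAndGet();
      }
    };
  }
}
```

Since the wrapper itself returns a `Callable`, further wrappers (a perf tracker, for example) could be layered on in the same way before the task is submitted to an executor.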

##

File path:

hadoop-tools/hadoop-azure/src/main/java/org/apache/hadoop/fs/azurebfs/services/AbfsOutputStreamStatisticsImpl.java

##

@@

[jira] [Work logged] (HADOOP-17271) S3A statistics to support IOStatistics

[ https://issues.apache.org/jira/browse/HADOOP-17271?focusedWorklogId=500767=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500767 ] ASF GitHub Bot logged work on HADOOP-17271: --- Author: ASF GitHub Bot Created on: 14/Oct/20 17:16 Start Date: 14/Oct/20 17:16 Worklog Time Spent: 10m Work Description: hadoop-yetus removed a comment on pull request #2324: URL: https://github.com/apache/hadoop/pull/2324#issuecomment-704562880 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 500767) Time Spent: 4.5h (was: 4h 20m) > S3A statistics to support IOStatistics > -- > > Key: HADOOP-17271 > URL: https://issues.apache.org/jira/browse/HADOOP-17271 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/s3 >Affects Versions: 3.3.0 >Reporter: Steve Loughran >Assignee: Steve Loughran >Priority: Major > Labels: pull-request-available > Time Spent: 4.5h > Remaining Estimate: 0h > > S3A to rework statistics with > * API + Implementation split of the interfaces used by subcomponents when > reporting stats > * S3A Instrumentation to implement all the interfaces > * streams, etc to all implement IOStatisticsSources and serve to callers > * Add some tracking of durations of remote requests -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus removed a comment on pull request #2324: HADOOP-17271. S3A statistics to support IOStatistics

hadoop-yetus removed a comment on pull request #2324: URL: https://github.com/apache/hadoop/pull/2324#issuecomment-704562880 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] steveloughran commented on pull request #2337: ABFS: Backport ABFS updates from trunk to Branch 3.3

steveloughran commented on pull request #2337: URL: https://github.com/apache/hadoop/pull/2337#issuecomment-708538476 that rest API update patch needs to have a JIRA. I can see that we accidentally got it in to trunk without it...the backports can fix that. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[ https://issues.apache.org/jira/browse/HADOOP-16649?focusedWorklogId=500746=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500746 ] ASF GitHub Bot logged work on HADOOP-16649: --- Author: ASF GitHub Bot Created on: 14/Oct/20 16:18 Start Date: 14/Oct/20 16:18 Worklog Time Spent: 10m Work Description: Taccart opened a new pull request #2385: URL: https://github.com/apache/hadoop/pull/2385 The bug HADOOP-16649 is due to a regexp test : hadoop-azure is detected as already present when hadoop-azure-datalake is present. This PR contains an update for hadoop_add_param function to address the problem, and the corresponding bats test. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 500746) Remaining Estimate: 0h Time Spent: 10m > Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will > ignore hadoop-azure > - > > Key: HADOOP-16649 > URL: https://issues.apache.org/jira/browse/HADOOP-16649 > Project: Hadoop Common > Issue Type: Bug > Components: bin >Affects Versions: 3.2.1 > Environment: Shell, but it also trickles down into all code using > `FileSystem` >Reporter: Tom Lous >Priority: Minor > Time Spent: 10m > Remaining Estimate: 0h > > When defining both `hadoop-azure` and `hadoop-azure-datalake` in > HADOOP_OPTIONAL_TOOLS in `conf/hadoop-env.sh`, `hadoop-azure` will get > ignored. 
> eg setting this: > HADOOP_OPTIONAL_TOOLS="hadoop-azure-datalake,hadoop-azure" > > with debug on: > > DEBUG: Profiles: importing > /opt/hadoop/libexec/shellprofile.d/hadoop-azure-datalake.sh > DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure-datalake > DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh > DEBUG: HADOOP_SHELL_PROFILES > DEBUG: HADOOP_SHELL_PROFILES declined hadoop-azure hadoop-azure > > whereas: > > HADOOP_OPTIONAL_TOOLS="hadoop-azure" > > with debug on: > DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh > DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Updated] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[ https://issues.apache.org/jira/browse/HADOOP-16649?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HADOOP-16649: Labels: pull-request-available (was: ) > Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will > ignore hadoop-azure > - > > Key: HADOOP-16649 > URL: https://issues.apache.org/jira/browse/HADOOP-16649 > Project: Hadoop Common > Issue Type: Bug > Components: bin >Affects Versions: 3.2.1 > Environment: Shell, but it also trickles down into all code using > `FileSystem` >Reporter: Tom Lous >Priority: Minor > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > When defining both `hadoop-azure` and `hadoop-azure-datalake` in > HADOOP_OPTIONAL_TOOLS in `conf/hadoop-env.sh`, `hadoop-azure` will get > ignored. > eg setting this: > HADOOP_OPTIONAL_TOOLS="hadoop-azure-datalake,hadoop-azure" > > with debug on: > > DEBUG: Profiles: importing > /opt/hadoop/libexec/shellprofile.d/hadoop-azure-datalake.sh > DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure-datalake > DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh > DEBUG: HADOOP_SHELL_PROFILES > DEBUG: HADOOP_SHELL_PROFILES declined hadoop-azure hadoop-azure > > whereas: > > HADOOP_OPTIONAL_TOOLS="hadoop-azure" > > with debug on: > DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh > DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] Taccart opened a new pull request #2385: HADOOP-16649. hadoop_add_param function : change regexp test by iterative equality test

Taccart opened a new pull request #2385: URL: https://github.com/apache/hadoop/pull/2385 The bug HADOOP-16649 is due to a regexp test : hadoop-azure is detected as already present when hadoop-azure-datalake is present. This PR contains an update for hadoop_add_param function to address the problem, and the corresponding bats test. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17301) ABFS: read-ahead error reporting breaks buffer management

[ https://issues.apache.org/jira/browse/HADOOP-17301?focusedWorklogId=500733=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500733 ] ASF GitHub Bot logged work on HADOOP-17301: --- Author: ASF GitHub Bot Created on: 14/Oct/20 15:38 Start Date: 14/Oct/20 15:38 Worklog Time Spent: 10m Work Description: snvijaya commented on pull request #2369: URL: https://github.com/apache/hadoop/pull/2369#issuecomment-708485353 > +1, merged to trunk. > > @snvijaya -before I backport it, can you do a cherrypick of this into branch-3.3 and verify all the tests are good? thanks Thanks a lot @steveloughran . I have created a backport PR for this change. Tests went well, have pasted the result in the backport PR. PR: https://github.com/apache/hadoop/pull/2384 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 500733) Time Spent: 3h 50m (was: 3h 40m) > ABFS: read-ahead error reporting breaks buffer management > - > > Key: HADOOP-17301 > URL: https://issues.apache.org/jira/browse/HADOOP-17301 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/azure >Affects Versions: 3.3.0 >Reporter: Sneha Vijayarajan >Assignee: Sneha Vijayarajan >Priority: Critical > Labels: pull-request-available > Time Spent: 3h 50m > Remaining Estimate: 0h > > When reads done by readahead buffers failed, the exceptions where dropped and > the failure was not getting reported to the calling app. > Jira HADOOP-16852: Report read-ahead error back > tried to handle the scenario by reporting the error back to calling app. But > the commit has introduced a bug which can lead to ReadBuffer being injected > into read completed queue twice. 
-- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] snvijaya commented on pull request #2369: HADOOP-17301. ABFS: Fix bug introduced in HADOOP-16852 which reports read-ahead error back

snvijaya commented on pull request #2369: URL: https://github.com/apache/hadoop/pull/2369#issuecomment-708485353 > +1, merged to trunk. > > @snvijaya -before I backport it, can you do a cherrypick of this into branch-3.3 and verify all the tests are good? thanks Thanks a lot @steveloughran . I have created a backport PR for this change. Tests went well, have pasted the result in the backport PR. PR: https://github.com/apache/hadoop/pull/2384 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[

https://issues.apache.org/jira/browse/HADOOP-16649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17213929#comment-17213929

]

thierry accart edited comment on HADOOP-16649 at 10/14/20, 3:37 PM:

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :/

was (Author: taccart):

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :/

But, good news, changing internal order

  hadoop_add_param testvar hadoop-azure hadoop-azure
  hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake

fails as expected :)

> Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will

> ignore hadoop-azure

> -

>

> Key: HADOOP-16649

> URL: https://issues.apache.org/jira/browse/HADOOP-16649

> Project: Hadoop Common

> Issue Type: Bug

> Components: bin

>Affects Versions: 3.2.1

> Environment: Shell, but it also trickles down into all code using

> `FileSystem`

>Reporter: Tom Lous

>Priority: Minor

>

> When defining both `hadoop-azure` and `hadoop-azure-datalake` in

> HADOOP_OPTIONAL_TOOLS in `conf/hadoop-env.sh`, `hadoop-azure` will get

> ignored.

> eg setting this:

> HADOOP_OPTIONAL_TOOLS="hadoop-azure-datalake,hadoop-azure"

>

> with debug on:

>

> DEBUG: Profiles: importing

> /opt/hadoop/libexec/shellprofile.d/hadoop-azure-datalake.sh

> DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure-datalake

> DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh

> DEBUG: HADOOP_SHELL_PROFILES

> DEBUG: HADOOP_SHELL_PROFILES declined hadoop-azure hadoop-azure

>

> whereas:

>

> HADOOP_OPTIONAL_TOOLS="hadoop-azure"

>

> with debug on:

> DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh

> DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure

>
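
The pull request for this issue attributes the behaviour to a regexp test in which `hadoop-azure` is detected as already present once `hadoop-azure-datalake` has been added. A minimal sketch of that failure mode follows, written in Java rather than shell. `SketchAddParam` and both of its methods are hypothetical and are not the `hadoop_add_param` shell function; the substring check is only an assumed analogue of the regexp test, and the exact-match loop an assumed analogue of the "iterative equality test" named in the PR title.

```java
/** Hypothetical Java analogue of an "add if not already present" helper. */
class SketchAddParam {

  /** Buggy variant: any substring occurrence counts as "already present". */
  static String addParamSubstring(String current, String value) {
    if (current.isEmpty()) {
      return value;
    }
    // "hadoop-azure" is found inside "hadoop-azure-datalake", so it is skipped.
    if (current.contains(value)) {
      return current;
    }
    return current + " " + value;
  }

  /** Fixed variant: compare each whitespace-separated entry for equality. */
  static String addParamExact(String current, String value) {
    if (current.isEmpty()) {
      return value;
    }
    for (String entry : current.trim().split("\\s+")) {
      if (entry.equals(value)) {
        return current;
      }
    }
    return current + " " + value;
  }
}
```

With `hadoop-azure-datalake` already in the list, the substring variant declines `hadoop-azure`, while the exact-match variant appends it and still rejects a true duplicate.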


[jira] [Work logged] (HADOOP-17301) ABFS: read-ahead error reporting breaks buffer management

[ https://issues.apache.org/jira/browse/HADOOP-17301?focusedWorklogId=500731=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500731 ] ASF GitHub Bot logged work on HADOOP-17301: --- Author: ASF GitHub Bot Created on: 14/Oct/20 15:37 Start Date: 14/Oct/20 15:37 Worklog Time Spent: 10m Work Description: snvijaya commented on pull request #2384: URL: https://github.com/apache/hadoop/pull/2384#issuecomment-708484638 Test results from accounts on East US 2 region: ### NON-HNS: SharedKey: [INFO] Results: [INFO] [INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0 [INFO] Results: [INFO] [WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 244 [INFO] Results: [INFO] [WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 24 OAuth: [INFO] Results: [INFO] [INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0 [INFO] Results: [INFO] [WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 248 [INFO] Results: [INFO] [WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 140 ### HNS: SharedKey: [INFO] Results: [INFO] [INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0 [INFO] Results: [INFO] [WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 24 [INFO] Results: [INFO] [WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 24 OAuth: [INFO] Results: [INFO] [INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0 [INFO] Results: [INFO] [WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 66 [INFO] Results: [INFO] [WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 140 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. 
For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 500731) Time Spent: 3h 40m (was: 3.5h) > ABFS: read-ahead error reporting breaks buffer management > - > > Key: HADOOP-17301 > URL: https://issues.apache.org/jira/browse/HADOOP-17301 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/azure >Affects Versions: 3.3.0 >Reporter: Sneha Vijayarajan >Assignee: Sneha Vijayarajan >Priority: Critical > Labels: pull-request-available > Time Spent: 3h 40m > Remaining Estimate: 0h > > When reads done by readahead buffers failed, the exceptions where dropped and > the failure was not getting reported to the calling app. > Jira HADOOP-16852: Report read-ahead error back > tried to handle the scenario by reporting the error back to calling app. But > the commit has introduced a bug which can lead to ReadBuffer being injected > into read completed queue twice. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] snvijaya commented on pull request #2384: ABFS: Backport HADOOP-17301 from trunk to Branch 3.3

snvijaya commented on pull request #2384:
URL: https://github.com/apache/hadoop/pull/2384#issuecomment-708484638

Test results from accounts on East US 2 region:

### NON-HNS:

SharedKey:
[INFO] Results:
[INFO]
[INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
[INFO] Results:
[INFO]
[WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 244
[INFO] Results:
[INFO]
[WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 24

OAuth:
[INFO] Results:
[INFO]
[INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
[INFO] Results:
[INFO]
[WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 248
[INFO] Results:
[INFO]
[WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 140

### HNS:

SharedKey:
[INFO] Results:
[INFO]
[INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
[INFO] Results:
[INFO]
[WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 24
[INFO] Results:
[INFO]
[WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 24

OAuth:
[INFO] Results:
[INFO]
[INFO] Tests run: 88, Failures: 0, Errors: 0, Skipped: 0
[INFO] Results:
[INFO]
[WARNING] Tests run: 452, Failures: 0, Errors: 0, Skipped: 66
[INFO] Results:
[INFO]
[WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 140

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17301) ABFS: read-ahead error reporting breaks buffer management

[ https://issues.apache.org/jira/browse/HADOOP-17301?focusedWorklogId=500675=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500675 ] ASF GitHub Bot logged work on HADOOP-17301: --- Author: ASF GitHub Bot Created on: 14/Oct/20 14:23 Start Date: 14/Oct/20 14:23 Worklog Time Spent: 10m Work Description: snvijaya opened a new pull request #2384: URL: https://github.com/apache/hadoop/pull/2384 Backport HADOOP-17301 from trunk to Branch 3.3 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 500675) Time Spent: 3.5h (was: 3h 20m) > ABFS: read-ahead error reporting breaks buffer management > - > > Key: HADOOP-17301 > URL: https://issues.apache.org/jira/browse/HADOOP-17301 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/azure >Affects Versions: 3.3.0 >Reporter: Sneha Vijayarajan >Assignee: Sneha Vijayarajan >Priority: Critical > Labels: pull-request-available > Time Spent: 3.5h > Remaining Estimate: 0h > > When reads done by readahead buffers failed, the exceptions where dropped and > the failure was not getting reported to the calling app. > Jira HADOOP-16852: Report read-ahead error back > tried to handle the scenario by reporting the error back to calling app. But > the commit has introduced a bug which can lead to ReadBuffer being injected > into read completed queue twice. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] snvijaya opened a new pull request #2384: ABFS: Backport HADOOP-17301 from trunk to Branch 3.3

snvijaya opened a new pull request #2384: URL: https://github.com/apache/hadoop/pull/2384 Backport HADOOP-17301 from trunk to Branch 3.3 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-16878) FileUtil.copy() to throw IOException if the source and destination are the same

[

https://issues.apache.org/jira/browse/HADOOP-16878?focusedWorklogId=500669=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-500669

]

ASF GitHub Bot logged work on HADOOP-16878:

---

Author: ASF GitHub Bot

Created on: 14/Oct/20 14:20

Start Date: 14/Oct/20 14:20

Worklog Time Spent: 10m

Work Description: steveloughran commented on pull request #2383:

URL: https://github.com/apache/hadoop/pull/2383#issuecomment-708435662

LGTM, +1


Issue Time Tracking

---

Worklog Id: (was: 500669)

Time Spent: 2h (was: 1h 50m)

> FileUtil.copy() to throw IOException if the source and destination are the

> same

> ---

>

> Key: HADOOP-16878

> URL: https://issues.apache.org/jira/browse/HADOOP-16878

> Project: Hadoop Common

> Issue Type: Improvement

>Affects Versions: 3.3.0

>Reporter: Gabor Bota

>Assignee: Gabor Bota

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: hdfsTest.patch

>

> Time Spent: 2h

> Remaining Estimate: 0h

>

> We encountered an error during a test in our QE when the file destination and

> source path were the same. This happened during an ADLS test, and there were

> no meaningful error messages, so it was hard to find the root cause of the

> failure.

> The error we saw was that file size has changed during the copy operation.

> The new file creation in the destination - which is the same as the source -

> creates a file and sets the file length to zero. After this, getting the

> source file will fail because the file size changed during the operation.

> I propose a solution to at least log in error level in the {{FileUtil}} if

> the source and destination of the copy operation are the same, so debugging

> issues like this will be easier in the future.
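
The check described in this issue can be sketched as follows, assuming a local-filesystem stand-in built on `java.nio.file`. `SketchSafeCopy.copy` is hypothetical and is not Hadoop's `FileUtil.copy()`; it only illustrates rejecting a copy whose normalized source and destination paths are equal before the destination is created, which is the step that would otherwise truncate the source.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

/** Hypothetical local-filesystem sketch; not the Hadoop FileUtil class. */
class SketchSafeCopy {

  /** Copy src to dst, failing fast when both resolve to the same path. */
  static void copy(Path src, Path dst) throws IOException {
    Path source = src.toAbsolutePath().normalize();
    Path destination = dst.toAbsolutePath().normalize();
    if (source.equals(destination)) {
      // Fail with a meaningful message instead of a later size mismatch.
      throw new IOException("Source " + src + " and destination " + dst
          + " are the same");
    }
    Files.copy(source, destination, StandardCopyOption.REPLACE_EXISTING);
  }
}
```

Comparing normalized absolute paths is a design choice for this sketch; it does not detect two different paths that reach the same file through links.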


[GitHub] [hadoop] steveloughran commented on pull request #2383: HADOOP-16878. FileUtil.copy() to throw IOException if the source and destination are the same

steveloughran commented on pull request #2383: URL: https://github.com/apache/hadoop/pull/2383#issuecomment-708435662 LGTM, +1 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] snvijaya commented on pull request #2368: Hadoop-17296. ABFS: Force reads to be always of buffer size

snvijaya commented on pull request #2368:
URL: https://github.com/apache/hadoop/pull/2368#issuecomment-708424773

Re-ran the oAuth tests with the latest changes:

### OAuth:
[INFO] Results:
[INFO]
[INFO] Tests run: 89, Failures: 0, Errors: 0, Skipped: 0
[INFO] Results:
[INFO]
[WARNING] Tests run: 458, Failures: 0, Errors: 0, Skipped: 66
[INFO] Results:
[INFO]
[WARNING] Tests run: 207, Failures: 0, Errors: 0, Skipped: 140

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

-
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[

https://issues.apache.org/jira/browse/HADOOP-16649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17213929#comment-17213929

]

thierry accart edited comment on HADOOP-16649 at 10/14/20, 1:49 PM:

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :/

But, good news, changing internal order

  hadoop_add_param testvar hadoop-azure hadoop-azure
  hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake

fails as expected :)

was (Author: taccart):

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

--> execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :-/

But, good news, changing internal order

  hadoop_add_param testvar hadoop-azure hadoop-azure
  hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake

fails as expected :)


[jira] [Comment Edited] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[

https://issues.apache.org/jira/browse/HADOOP-16649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17213929#comment-17213929

]

thierry accart edited comment on HADOOP-16649 at 10/14/20, 1:49 PM:

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :/

But, good news, changing internal order

  hadoop_add_param testvar hadoop-azure hadoop-azure
  hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake

fails as expected :)

was (Author: taccart):

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a
dedicated test

  @test "hadoop_add_param (positive 2)" {
    hadoop_add_param testvar hadoop-azure hadoop-azure
    hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake
    echo ">${testvar}<"
    [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ]
  }

execution of {{run-bats.sh}}
({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does
*_not_* fail :/

But, good news, changing internal order

  hadoop_add_param testvar hadoop-azure hadoop-azure
  hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake

fails as expected :)

> Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure
> -
>
> Key: HADOOP-16649
> URL: https://issues.apache.org/jira/browse/HADOOP-16649
> Project: Hadoop Common
> Issue Type: Bug
> Components: bin
> Affects Versions: 3.2.1
> Environment: Shell, but it also trickles down into all code using `FileSystem`
> Reporter: Tom Lous
> Priority: Minor
>
> When defining both `hadoop-azure` and `hadoop-azure-datalake` in HADOOP_OPTIONAL_TOOLS in `conf/hadoop-env.sh`, `hadoop-azure` will get ignored.
> eg setting this:
> HADOOP_OPTIONAL_TOOLS="hadoop-azure-datalake,hadoop-azure"
>
> with debug on:
>
> DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure-datalake.sh
> DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure-datalake
> DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh
> DEBUG: HADOOP_SHELL_PROFILES
> DEBUG: HADOOP_SHELL_PROFILES declined hadoop-azure hadoop-azure
>
> whereas:
>
> HADOOP_OPTIONAL_TOOLS="hadoop-azure"
>
> with debug on:
> DEBUG: Profiles: importing /opt/hadoop/libexec/shellprofile.d/hadoop-azure.sh
> DEBUG: HADOOP_SHELL_PROFILES accepted hadoop-azure
>
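
For readers following the thread, the order dependence reported above can be reproduced with a minimal sketch. It assumes the membership check behaves like an unanchored substring match on the existing value; the helper names `add_param_substring` and `add_param_word` are hypothetical and are not the functions shipped in Hadoop's shell library. The second helper shows one possible whole-word comparison for contrast, not the fix adopted for this issue.

```shell
#!/bin/sh
# Hypothetical helper: append item $2 to the variable named $1 unless the
# current value already contains $2 anywhere (unanchored substring check).
add_param_substring() {
  eval "current=\${$1}"
  case "${current}" in
    *"$2"*) ;;  # declined: $2 occurs somewhere in the current value
    *) eval "$1=\"\${current:+\${current} }\$2\"" ;;
  esac
}

# Hypothetical alternative: compare whole space-separated words instead.
add_param_word() {
  eval "current=\${$1}"
  case " ${current} " in
    *" $2 "*) ;;  # declined: $2 is already present as a complete word
    *) eval "$1=\"\${current:+\${current} }\$2\"" ;;
  esac
}

# Same order as in the issue description: datalake first, then azure.
loose=""
add_param_substring loose hadoop-azure-datalake
add_param_substring loose hadoop-azure
echo "substring check:  >${loose}<"

strict=""
add_param_word strict hadoop-azure-datalake
add_param_word strict hadoop-azure
echo "whole-word check: >${strict}<"
```

With the substring check, `hadoop-azure` is declined because it is a prefix of the already accepted `hadoop-azure-datalake`; with the whole-word check both entries are kept.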


[jira] [Commented] (HADOOP-16649) Defining hadoop-azure and hadoop-azure-datalake in HADOOP_OPTIONAL_TOOLS will ignore hadoop-azure

[ https://issues.apache.org/jira/browse/HADOOP-16649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17213929#comment-17213929 ]

thierry accart commented on HADOOP-16649:

-

Hi

for information: I tried to enrich the {{hadoop_add_param.bats}} with a dedicated test

{{@test "hadoop_add_param (positive 2)" {}}

{{ hadoop_add_param testvar hadoop-azure hadoop-azure }}

{{ hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake }}

{{ echo ">${testvar}<" }}

{{ [ "${testvar}" = "hadoop-azure-datalake hadoop-azure" ] }}

{{}}}

--> execution of {{run-bats.sh}} ({{hadoop-common-project/hadoop-common/src/test/scripts/run-bats.sh}}) does *_not_* fail :-/

But, good news, changing internal order

{{ hadoop_add_param testvar hadoop-azure hadoop-azure }}

{{ hadoop_add_param testvar hadoop-azure-datalake hadoop-azure-datalake }}

fails as expected :)



[GitHub] [hadoop] snvijaya commented on pull request #2368: Hadoop-17296. ABFS: Force reads to be always of buffer size

snvijaya commented on pull request #2368: URL: https://github.com/apache/hadoop/pull/2368#issuecomment-708410051 @mukund-thakur - Have addressed the review comments. Please have a look.

[GitHub] [hadoop] snvijaya commented on a change in pull request #2368: Hadoop-17296. ABFS: Force reads to be always of buffer size

snvijaya commented on a change in pull request #2368:

URL: https://github.com/apache/hadoop/pull/2368#discussion_r504685349

##

File path:

hadoop-tools/hadoop-azure/src/test/java/org/apache/hadoop/fs/azurebfs/ITestAzureBlobFileSystemRandomRead.java

##

@@ -448,15 +477,119 @@ public void testRandomReadPerformance() throws Exception {
         ratio < maxAcceptableRatio);
   }
+  /**
+   * With this test we should see a full buffer read being triggered in case
+   * alwaysReadBufferSize is on, else only the requested buffer size.
+   * Hence a seek done few bytes away from last read position will trigger
+   * a network read when alwaysReadBufferSize is off, whereas it will return
+   * from the internal buffer when it is on.
+   * Reading a full buffer size is the Gen1 behaviour.
+   * @throws Throwable
+   */
+  @Test
+  public void testAlwaysReadBufferSizeConfig() throws Throwable {
+    testAlwaysReadBufferSizeConfig(false);
+    testAlwaysReadBufferSizeConfig(true);
+  }
+
+  private void assertStatistics(AzureBlobFileSystem fs,

Review comment:

Redundant method removed.


[GitHub] [hadoop] snvijaya commented on a change in pull request #2368: Hadoop-17296. ABFS: Force reads to be always of buffer size

snvijaya commented on a change in pull request #2368:

URL: https://github.com/apache/hadoop/pull/2368#discussion_r504650837

##

File path:

hadoop-tools/hadoop-azure/src/test/java/org/apache/hadoop/fs/azurebfs/services/TestAbfsInputStream.java

##

@@ -447,4 +490,168 @@ public void testReadAheadManagerForSuccessfulReadAhead() throws Exception {
     checkEvictedStatus(inputStream, 0, true);
   }
+  /**
+   * Test readahead with different config settings for request request size and
+   * readAhead block size
+   * @throws Exception
+   */
+  @Test
+  public void testDiffReadRequestSizeAndRAHBlockSize() throws Exception {
+    // Set requestRequestSize = 4MB and readAheadBufferSize=8MB
+    ReadBufferManager.getBufferManager()
+        .testResetReadBufferManager(FOUR_MB, INCREASED_READ_BUFFER_AGE_THRESHOLD);
+    testReadAheadConfigs(FOUR_MB, TEST_READAHEAD_DEPTH_4, false, EIGHT_MB);
+
+    // Test for requestRequestSize =16KB and readAheadBufferSize=16KB
+    ReadBufferManager.getBufferManager()
+        .testResetReadBufferManager(SIXTEEN_KB, INCREASED_READ_BUFFER_AGE_THRESHOLD);
+    AbfsInputStream inputStream = testReadAheadConfigs(SIXTEEN_KB,
+        TEST_READAHEAD_DEPTH_2, true, SIXTEEN_KB);
+    testReadAheads(inputStream, SIXTEEN_KB, SIXTEEN_KB);
+
+    // Test for requestRequestSize =16KB and readAheadBufferSize=48KB
+    ReadBufferManager.getBufferManager()
+        .testResetReadBufferManager(FORTY_EIGHT_KB, INCREASED_READ_BUFFER_AGE_THRESHOLD);
+    inputStream = testReadAheadConfigs(SIXTEEN_KB, TEST_READAHEAD_DEPTH_2, true,
+        FORTY_EIGHT_KB);
+    testReadAheads(inputStream, SIXTEEN_KB, FORTY_EIGHT_KB);
+
+    // Test for requestRequestSize =48KB and readAheadBufferSize=16KB
+    ReadBufferManager.getBufferManager()
+        .testResetReadBufferManager(FORTY_EIGHT_KB, INCREASED_READ_BUFFER_AGE_THRESHOLD);
+    inputStream = testReadAheadConfigs(FORTY_EIGHT_KB, TEST_READAHEAD_DEPTH_2,
+        true,
+        SIXTEEN_KB);
+    testReadAheads(inputStream, FORTY_EIGHT_KB, SIXTEEN_KB);
+  }

+

+

+  private void testReadAheads(AbfsInputStream inputStream,
+      int readRequestSize,
+      int readAheadRequestSize)
+      throws Exception {
+    if (readRequestSize > readAheadRequestSize) {
+      readAheadRequestSize = readRequestSize;
+    }
+
+    byte[] firstReadBuffer = new byte[readRequestSize];
+    byte[] secondReadBuffer = new byte[readAheadRequestSize];
+
+    // get the expected bytes to compare
+    byte[] expectedFirstReadAheadBufferContents = new byte[readRequestSize];
+    byte[] expectedSecondReadAheadBufferContents = new byte[readAheadRequestSize];
+    getExpectedBufferData(0, readRequestSize, expectedFirstReadAheadBufferContents);
+    getExpectedBufferData(readRequestSize, readAheadRequestSize,
+        expectedSecondReadAheadBufferContents);
+
+    assertTrue("Read should be of exact requested size",
+        inputStream.read(firstReadBuffer, 0, readRequestSize) == readRequestSize);
+    assertTrue("Data mismatch found in RAH1",
+        Arrays.equals(firstReadBuffer,
+            expectedFirstReadAheadBufferContents));
+
+
+    assertTrue("Read should be of exact requested size",
+        inputStream.read(secondReadBuffer, 0, readAheadRequestSize) == readAheadRequestSize);
+    assertTrue("Data mismatch found in RAH2",
+        Arrays.equals(secondReadBuffer,
+            expectedSecondReadAheadBufferContents));
+  }

+

+  public AbfsInputStream testReadAheadConfigs(int readRequestSize,
+      int readAheadQueueDepth,
+      boolean alwaysReadBufferSizeEnabled,
+      int readAheadBlockSize) throws Exception {
+    Configuration
+        config = new Configuration(
+        this.getRawConfiguration());
+    config.set("fs.azure.read.request.size", Integer.toString(readRequestSize));
+    config.set("fs.azure.readaheadqueue.depth",
+        Integer.toString(readAheadQueueDepth));
+    config.set("fs.azure.read.alwaysReadBufferSize",
+        Boolean.toString(alwaysReadBufferSizeEnabled));
+    config.set("fs.azure.read.readahead.blocksize",
+        Integer.toString(readAheadBlockSize));
+    if (readRequestSize > readAheadBlockSize) {
+      readAheadBlockSize = readRequestSize;
+    }
+
+    Path testPath = new Path(
+        "/testReadAheadConfigs");
+    final AzureBlobFileSystem fs = createTestFile(testPath,
+        ALWAYS_READ_BUFFER_SIZE_TEST_FILE_SIZE, config);
+    byte[] byteBuffer = new byte[ONE_MB];
+    AbfsInputStream inputStream = this.getAbfsStore(fs)
+        .openFileForRead(testPath, null);
+
+    assertEquals("Unexpected AbfsInputStream buffer size", readRequestSize,
+        inputStream.getBufferSize());
+    assertEquals("Unexpected ReadAhead queue depth", readAheadQueueDepth,
+        inputStream.getReadAheadQueueDepth());
+    assertEquals("Unexpected AlwaysReadBufferSize settings",
+        alwaysReadBufferSizeEnabled,
+        inputStream.shouldAlwaysReadBufferSize());
+    assertEquals("Unexpected readAhead

[GitHub] [hadoop] snvijaya commented on a change in pull request #2368: Hadoop-17296. ABFS: Force reads to be always of buffer size

snvijaya commented on a change in pull request #2368:

URL: https://github.com/apache/hadoop/pull/2368#discussion_r504649012

##

File path:

hadoop-tools/hadoop-azure/src/test/java/org/apache/hadoop/fs/azurebfs/services/TestAbfsInputStream.java

##

@@ -447,4 +490,168 @@ public void testReadAheadManagerForSuccessfulReadAhead() throws Exception {
     checkEvictedStatus(inputStream, 0, true);
   }
+  /**
+   * Test readahead with different config settings for request request size and
+   * readAhead block size
+   * @throws Exception
+   */
+  @Test
+  public void testDiffReadRequestSizeAndRAHBlockSize() throws Exception {
+    // Set requestRequestSize = 4MB and readAheadBufferSize=8MB
+    ReadBufferManager.getBufferManager()
+        .testResetReadBufferManager(FOUR_MB, INCREASED_READ_BUFFER_AGE_THRESHOLD);
+    testReadAheadConfigs(FOUR_MB, TEST_READAHEAD_DEPTH_4, false, EIGHT_MB);
+
+    // Test for requestRequestSize =16KB and readAheadBufferSize=16KB