[jira] [Commented] (HDFS-16456) EC: Decommission a rack with only on dn will fail when the rack number is equal with replication

[

https://issues.apache.org/jira/browse/HDFS-16456?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17493011#comment-17493011

]

Wei-Chiu Chuang commented on HDFS-16456:

[~tasanuma] [~ferhui] would you be interested in reviewing this?

> EC: Decommission a rack with only on dn will fail when the rack number is

> equal with replication

>

>

> Key: HDFS-16456

> URL: https://issues.apache.org/jira/browse/HDFS-16456

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: ec, namenode

>Affects Versions: 3.4.0

>Reporter: caozhiqiang

>Priority: Critical

> Attachments: HDFS-16456.001.patch

>

>

> In the scenario below, decommissioning fails with the reason TOO_MANY_NODES_ON_RACK:

> # Enable EC policy, such as RS-6-3-1024k.

> # The number of racks in the cluster equals the replication number (9).

> # One rack has only one DN, and that DN is decommissioned.

> The root cause is in the

> BlockPlacementPolicyRackFaultTolerant::getMaxNodesPerRack() function, which

> computes the maxNodesPerRack limit used when choosing targets. In this

> scenario maxNodesPerRack is 1, which means at most one datanode can be chosen

> from each rack.

> {code:java}

> protected int[] getMaxNodesPerRack(int numOfChosen, int numOfReplicas) {

>...

> // If more replicas than racks, evenly spread the replicas.

> // This calculation rounds up.

> int maxNodesPerRack = (totalNumOfReplicas - 1) / numOfRacks + 1;

> return new int[] {numOfReplicas, maxNodesPerRack};

> } {code}

> Here the line int maxNodesPerRack = (totalNumOfReplicas - 1) / numOfRacks + 1;

> is evaluated with totalNumOfReplicas=9 and numOfRacks=9, so maxNodesPerRack =

> 8 / 9 + 1 = 1 (integer division).
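The arithmetic can be checked with a small standalone sketch. `MaxNodesPerRackDemo` is a hypothetical class that copies only the quoted formula; the second call, which counts 8 racks, assumes the single-DN rack being decommissioned is no longer counted, in the spirit of the first proposed change in this issue.

```java
/** Hypothetical demo class: reproduces only the maxNodesPerRack formula quoted in the issue. */
final class MaxNodesPerRackDemo {

    /** Rounds totalNumOfReplicas / numOfRacks up, using integer arithmetic. */
    static int maxNodesPerRack(int totalNumOfReplicas, int numOfRacks) {
        return (totalNumOfReplicas - 1) / numOfRacks + 1;
    }

    public static void main(String[] args) {
        // RS-6-3 stores 9 internal blocks; the cluster has 9 racks.
        System.out.println(maxNodesPerRack(9, 9)); // (8 / 9) + 1 = 0 + 1 = 1
        // Assumption: the rack whose only DN is decommissioning is no longer counted.
        System.out.println(maxNodesPerRack(9, 8)); // (8 / 8) + 1 = 1 + 1 = 2
    }
}
```

With a limit of one node per rack and the decommissioning rack unusable, the remaining 8 racks cannot accept a ninth internal block, which matches the TOO_MANY_NODES_ON_RACK failure.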

> When we decommission a DN that is the only node in its rack,

> chooseOnce() in BlockPlacementPolicyRackFaultTolerant::chooseTargetInOrder()

> throws NotEnoughReplicasException, but the exception is not caught, so the

> code never falls back to chooseEvenlyFromRemainingRacks().

> During decommissioning, after targets are chosen, verifyBlockPlacement()

> returns a total rack count that still includes the invalid rack, so

> BlockPlacementStatusDefault::isPlacementPolicySatisfied() returns false,

> which also causes decommissioning to fail.

> {code:java}

> public BlockPlacementStatus verifyBlockPlacement(DatanodeInfo[] locs,

> int numberOfReplicas) {

> if (locs == null)

> locs = DatanodeDescriptor.EMPTY_ARRAY;

> if (!clusterMap.hasClusterEverBeenMultiRack()) {

> // only one rack

> return new BlockPlacementStatusDefault(1, 1, 1);

> }

> // Count locations on different racks.

> Set<String> racks = new HashSet<>();

> for (DatanodeInfo dn : locs) {

> racks.add(dn.getNetworkLocation());

> }

> return new BlockPlacementStatusDefault(racks.size(), numberOfReplicas,

> clusterMap.getNumOfRacks());

> } {code}

> {code:java}

> public boolean isPlacementPolicySatisfied() {

> return requiredRacks <= currentRacks || currentRacks >= totalRacks;

> }{code}
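A minimal sketch of how the two quoted snippets combine in this scenario. `PlacementCheckDemo` is hypothetical; it assumes the constructor arguments passed by verifyBlockPlacement() map to (currentRacks, requiredRacks, totalRacks) in that order, and that after reconstruction the 9 internal blocks sit on 8 racks, with one rack holding two.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical demo mirroring the rack counting and the satisfaction check quoted above. */
final class PlacementCheckDemo {

    /** Same condition as the quoted isPlacementPolicySatisfied(). */
    static boolean isPlacementPolicySatisfied(int currentRacks, int requiredRacks, int totalRacks) {
        return requiredRacks <= currentRacks || currentRacks >= totalRacks;
    }

    /** Counts distinct network locations, as the loop in verifyBlockPlacement() does. */
    static int countRacks(List<String> networkLocations) {
        Set<String> racks = new HashSet<>(networkLocations);
        return racks.size();
    }

    public static void main(String[] args) {
        // Assumed placement after reconstruction: /rack1 holds two internal blocks.
        List<String> locs = Arrays.asList("/rack1", "/rack1", "/rack2", "/rack3", "/rack4",
                "/rack5", "/rack6", "/rack7", "/rack8");
        int currentRacks = countRacks(locs); // 8 distinct racks
        // totalRacks still counts the rack whose only DN is decommissioning.
        System.out.println(isPlacementPolicySatisfied(currentRacks, 9, 9)); // false
        // If that rack were excluded from totalRacks.
        System.out.println(isPlacementPolicySatisfied(currentRacks, 9, 8)); // true
    }
}
```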

> Based on the analysis above, we propose the following changes to fix it:

> # In startDecommission() or stopDecommission(), also update numOfRacks in the

> NetworkTopology class. Otherwise choosing targets may fail because

> maxNodesPerRack is too small, and even if it succeeds,

> isPlacementPolicySatisfied() still returns false and decommissioning fails.

> # In BlockPlacementPolicyRackFaultTolerant::chooseTargetInOrder(), the first

> chooseOnce() call should also be wrapped in try...catch; otherwise it will not

> fall back to chooseEvenlyFromRemainingRacks() when an exception is thrown.

> # In chooseEvenlyFromRemainingRacks(), the statement numResultsOflastChoose =

> results.size(); should be moved after chooseOnce(); otherwise lastException is

> thrown and choosing targets fails.
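The second proposed change can be illustrated with a heavily simplified, hypothetical model. `RackFallbackDemo` is not Hadoop code: racks are plain strings, every listed rack is assumed to have a free eligible DataNode, the decommissioning rack is simply left out of the map, and the exception is a local unchecked stand-in for NotEnoughReplicasException. It shows only the control flow: a guarded first attempt, then a fallback that raises the per-rack limit one step at a time.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical, heavily simplified model of rack-fault-tolerant target selection. */
final class RackFallbackDemo {

    /** Local stand-in for NotEnoughReplicasException (unchecked here for brevity). */
    static final class NotEnoughReplicasException extends RuntimeException {
        NotEnoughReplicasException(String message) {
            super(message);
        }
    }

    /**
     * Chooses up to {@code needed} racks whose replica count is below {@code maxNodesPerRack},
     * appending each choice to {@code results}; throws if fewer were found (partial choices stay).
     */
    static void chooseOnce(Map<String, Integer> replicasPerRack, int needed,
                           int maxNodesPerRack, List<String> results) {
        int chosen = 0;
        for (Map.Entry<String, Integer> rack : replicasPerRack.entrySet()) {
            if (chosen == needed) {
                break;
            }
            if (rack.getValue() < maxNodesPerRack) {
                rack.setValue(rack.getValue() + 1);
                results.add(rack.getKey());
                chosen++;
            }
        }
        if (chosen < needed) {
            throw new NotEnoughReplicasException(
                "chose " + chosen + " of " + needed + " with maxNodesPerRack=" + maxNodesPerRack);
        }
    }

    /** The first attempt is guarded; on failure the per-rack limit is relaxed step by step. */
    static List<String> chooseTargets(Map<String, Integer> replicasPerRack, int needed,
                                      int maxNodesPerRack, int totalReplicas) {
        List<String> results = new ArrayList<>();
        try {
            chooseOnce(replicasPerRack, needed, maxNodesPerRack, results);
        } catch (NotEnoughReplicasException first) {
            NotEnoughReplicasException lastException = first;
            int limit = maxNodesPerRack;
            while (results.size() < needed && limit < totalReplicas) {
                limit++;
                try {
                    chooseOnce(replicasPerRack, needed - results.size(), limit, results);
                } catch (NotEnoughReplicasException retry) {
                    lastException = retry;
                }
            }
            if (results.size() < needed) {
                throw lastException;
            }
        }
        return results;
    }

    /** Eight racks already hold one internal block each; the ninth rack is left out. */
    static Map<String, Integer> scenario() {
        Map<String, Integer> replicasPerRack = new LinkedHashMap<>();
        for (int i = 1; i <= 8; i++) {
            replicasPerRack.put("/rack" + i, 1);
        }
        return replicasPerRack;
    }

    public static void main(String[] args) {
        System.out.println(chooseTargets(scenario(), 1, 1, 9)); // [/rack1]
    }
}
```

Without the try/catch around the first chooseOnce() call, the exception from the maxNodesPerRack=1 attempt would propagate and the relaxed retry would never run.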

>

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Created] (HDFS-16457) Make fs.getspaceused.classname reconfigurable

yanbin.zhang created HDFS-16457:

---

Summary: Make fs.getspaceused.classname reconfigurable

Key: HDFS-16457

URL: https://issues.apache.org/jira/browse/HDFS-16457

Project: Hadoop HDFS

Issue Type: Improvement

Components: namenode

Affects Versions: 3.3.0

Reporter: yanbin.zhang

Assignee: yanbin.zhang

Currently, switching fs.getspaceused.classname requires restarting the NameNode. It would be convenient to be able to switch it at runtime.

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=728013=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-728013

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 16/Feb/22 02:50

Start Date: 16/Feb/22 02:50

Worklog Time Spent: 10m

Work Description: jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1041040826

Thanks for the suggestion, @jojochuang.

I updated the unit tests again and ran some additional tests.

When I remove the fix, the newly added unit test fails, as expected, and the other unit tests are not affected.

Here is an example of the test when removing the fix:

Here is an example during normal testing:

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 728013)

Time Spent: 3h 50m (was: 3h 40m)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png,

> screenshot-4.png

>

> Time Spent: 3h 50m

> Remaining Estimate: 0h

>

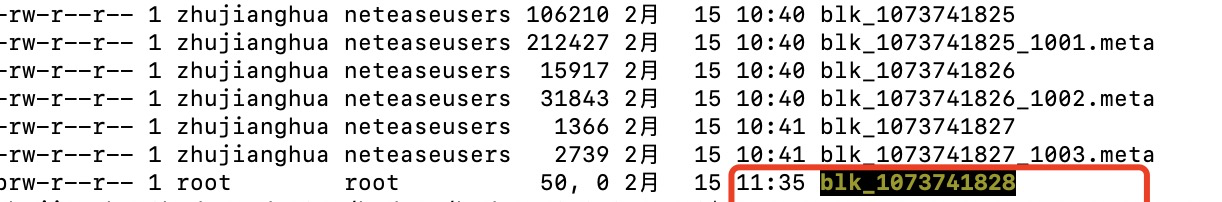

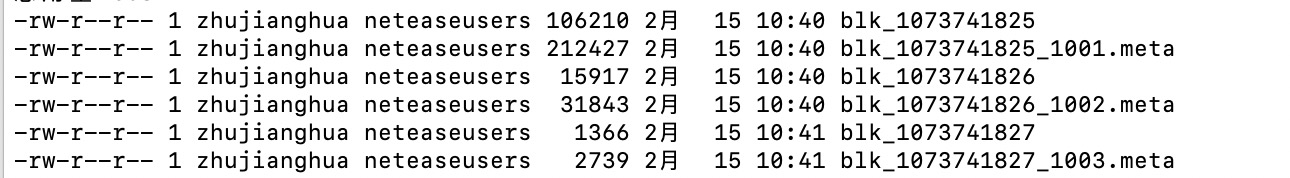

> Something unusual happened in our production environment.

> The DataNode is configured with 11 disks (${dfs.datanode.data.dir}). The used

> capacity is calculated normally for 10 of them, but the value calculated for

> the remaining disk is much larger, which is very strange.

> This is the live view on the NameNode:

> !screenshot-1.png!

> This is the live view on the DataNode:

> !screenshot-2.png!

> And this is the view on Linux:

> !screenshot-3.png!

> There is a big gap for '/mnt/dfs/11/data'. This situation should not be

> allowed to happen.

> I found some abnormal block files: invalid blk_.meta files in some subdir

> directories cause the used space to be computed incorrectly.

> Here are some of the abnormal block files:

> !screenshot-4.png!

> Such files should not be treated as normal blocks. They should be actively

> identified and filtered out, which is good for cluster stability.
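One way to express the proposed filtering is to accept a path only when it is a regular file and its name matches the expected block or meta-file pattern. This is a hypothetical sketch, not the patch from pull request #3861; the class name and the regular expressions (`blk_<id>` for data files, `blk_<id>_<genstamp>.meta` for meta files) are assumptions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.regex.Pattern;

/** Hypothetical filter illustrating a "regular file" check for block files. */
final class BlockFileFilter {

    // Assumed naming conventions: data files "blk_<id>", meta files "blk_<id>_<genstamp>.meta".
    private static final Pattern BLOCK_FILE = Pattern.compile("blk_-?\\d+");
    private static final Pattern META_FILE = Pattern.compile("blk_-?\\d+_\\d+\\.meta");

    /** Accepts a path only if its name matches a block/meta pattern and it is a regular file. */
    static boolean isValidBlockOrMetaFile(Path path) {
        String name = path.getFileName().toString();
        boolean nameMatches = BLOCK_FILE.matcher(name).matches()
                || META_FILE.matcher(name).matches();
        return nameMatches && Files.isRegularFile(path);
    }

    public static void main(String[] args) throws IOException {
        Path subdir = Files.createTempDirectory("subdir0");
        Path block = Files.createFile(subdir.resolve("blk_1073741825"));
        Path meta = Files.createFile(subdir.resolve("blk_1073741825_1001.meta"));
        Path badMeta = Files.createFile(subdir.resolve("blk_.meta"));
        Path dirNamedLikeBlock = Files.createDirectory(subdir.resolve("blk_1073741826"));

        System.out.println(isValidBlockOrMetaFile(block));             // true
        System.out.println(isValidBlockOrMetaFile(meta));              // true
        System.out.println(isValidBlockOrMetaFile(badMeta));           // false: no block id
        System.out.println(isValidBlockOrMetaFile(dirNamedLikeBlock)); // false: not a regular file
    }
}
```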


[jira] [Updated] (HDFS-16396) Reconfig slow peer parameters for datanode

[ https://issues.apache.org/jira/browse/HDFS-16396?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Takanobu Asanuma updated HDFS-16396:

Fix Version/s: 3.3.3

> Reconfig slow peer parameters for datanode

> --

>

> Key: HDFS-16396

> URL: https://issues.apache.org/jira/browse/HDFS-16396

> Project: Hadoop HDFS

> Issue Type: New Feature

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0, 3.3.3

>

> Time Spent: 5h 10m

> Remaining Estimate: 0h

>

> In large clusters, a rolling restart of the DataNodes takes a long time. We can

> make the slow peer and slow disk parameters of the DataNode reconfigurable to

> facilitate cluster operation and maintenance.

[jira] [Updated] (HDFS-15745) Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable

[ https://issues.apache.org/jira/browse/HDFS-15745?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Takanobu Asanuma updated HDFS-15745:

Fix Version/s: 3.3.3

> Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES

> configurable

> --

>

> Key: HDFS-15745

> URL: https://issues.apache.org/jira/browse/HDFS-15745

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haibin Huang

>Assignee: Haibin Huang

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0, 3.3.3

>

> Attachments: HDFS-15745-001.patch, HDFS-15745-002.patch,

> HDFS-15745-003.patch, HDFS-15745-branch-3.1.001.patch,

> HDFS-15745-branch-3.2.001.patch, HDFS-15745-branch-3.3.001.patch,

> image-2020-12-22-17-00-50-796.png

>

> Time Spent: 50m

> Remaining Estimate: 0h

>

> When I enabled DataNodePeerMetrics to find slow peers in the cluster, I found

> many slow peers even though the ReportingNodes' averageDelay was very low, and

> these slow peer nodes were actually normal. I think so many slow peers are

> reported because DataNodePeerMetrics#LOW_THRESHOLD_MS is too small (only 5 ms)

> and is not configurable. The default slow IO warning log threshold is 300 ms

> (DFSConfigKeys.DFS_DATANODE_SLOW_IO_WARNING_THRESHOLD_DEFAULT = 300), so

> DataNodePeerMetrics#LOW_THRESHOLD_MS should not be less than 300 ms; otherwise

> the NameNode receives a lot of invalid slow peer information.

> !image-2020-12-22-17-00-50-796.png!
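To illustrate why a 5 ms floor produces false positives, here is a deliberately simplified, hypothetical detector: a peer is flagged when its average latency exceeds max(lowThresholdMs, 3 * median), and nothing is flagged when fewer than minOutlierDetectionNodes peers reported. Hadoop's actual outlier detection is more involved; the multiplier, class name, and latency values are assumptions chosen only to show the effect of the floor.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical, simplified slow-peer detector showing the effect of a latency floor. */
final class SlowPeerFloorDemo {

    /** Median of an already sorted, non-empty list. */
    static double median(List<Double> sorted) {
        int n = sorted.size();
        return n % 2 == 1 ? sorted.get(n / 2) : (sorted.get(n / 2 - 1) + sorted.get(n / 2)) / 2.0;
    }

    /**
     * Flags peers whose average latency exceeds max(lowThresholdMs, 3 * median).
     * Returns no outliers when fewer than minOutlierDetectionNodes peers reported.
     */
    static List<String> slowPeers(Map<String, Double> avgLatencyMs,
                                  int minOutlierDetectionNodes, double lowThresholdMs) {
        List<String> slow = new ArrayList<>();
        if (avgLatencyMs.size() < minOutlierDetectionNodes) {
            return slow;
        }
        List<Double> sorted = new ArrayList<>(avgLatencyMs.values());
        Collections.sort(sorted);
        double upperLimit = Math.max(lowThresholdMs, 3 * median(sorted));
        for (Map.Entry<String, Double> peer : avgLatencyMs.entrySet()) {
            if (peer.getValue() > upperLimit) {
                slow.add(peer.getKey());
            }
        }
        return slow;
    }

    public static void main(String[] args) {
        Map<String, Double> latencies = new TreeMap<>();
        latencies.put("dn1", 2.0);
        latencies.put("dn2", 3.0);
        latencies.put("dn3", 3.0);
        latencies.put("dn4", 20.0);
        System.out.println(slowPeers(latencies, 3, 5.0));   // [dn4]
        System.out.println(slowPeers(latencies, 3, 300.0)); // []
    }
}
```

With the 5 ms floor, a peer averaging 20 ms is reported as slow even though it is far below the 300 ms slow IO warning threshold; raising the floor to 300 ms suppresses it.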

[jira] [Updated] (HDFS-10650) DFSClient#mkdirs and DFSClient#primitiveMkdir should use default directory permission

[

https://issues.apache.org/jira/browse/HDFS-10650?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Konstantin Shvachko updated HDFS-10650:

---

Fix Version/s: 2.10.2

Merged this into branch-2.10.

Updated fix version.

> DFSClient#mkdirs and DFSClient#primitiveMkdir should use default directory

> permission

> -

>

> Key: HDFS-10650

> URL: https://issues.apache.org/jira/browse/HDFS-10650

> Project: Hadoop HDFS

> Issue Type: Bug

>Affects Versions: 2.6.0

>Reporter: John Zhuge

>Assignee: John Zhuge

>Priority: Minor

> Fix For: 3.0.0-alpha1, 2.10.2

>

> Attachments: HDFS-10650.001.patch, HDFS-10650.002.patch

>

>

> These two DFSClient methods should use the default directory permission when

> creating a directory.

> {code:java}

> public boolean mkdirs(String src, FsPermission permission,

> boolean createParent) throws IOException {

> if (permission == null) {

> permission = FsPermission.getDefault();

> }

> {code}

> {code:java}

> public boolean primitiveMkdir(String src, FsPermission absPermission,

> boolean createParent)

> throws IOException {

> checkOpen();

> if (absPermission == null) {

> absPermission =

> FsPermission.getDefault().applyUMask(dfsClientConf.uMask);

> }

> {code}
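primitiveMkdir() applies dfsClientConf.uMask to the default it picks, so the starting default determines the final mode. As a plain-integer sketch, assuming the usual POSIX semantics (every bit set in the umask is cleared) and a umask of 022, a directory-style default of 0777 becomes 0755 while a file-style default of 0666 becomes 0644. `UmaskDemo` is hypothetical and does not use the FsPermission API.

```java
/** Hypothetical demo: applying a umask clears the permission bits that are set in the mask. */
final class UmaskDemo {

    static int applyUMask(int mode, int umask) {
        return mode & ~umask;
    }

    public static void main(String[] args) {
        int umask = 022; // assumed, commonly used umask
        System.out.println(Integer.toOctalString(applyUMask(0777, umask))); // 755
        System.out.println(Integer.toOctalString(applyUMask(0666, umask))); // 644
    }
}
```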


[jira] [Work logged] (HDFS-15745) Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable

[ https://issues.apache.org/jira/browse/HDFS-15745?focusedWorklogId=727965=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727965 ]

ASF GitHub Bot logged work on HDFS-15745:

-

Author: ASF GitHub Bot

Created on: 16/Feb/22 00:42

Start Date: 16/Feb/22 00:42

Worklog Time Spent: 10m

Work Description: tasanuma merged pull request #3992:

URL: https://github.com/apache/hadoop/pull/3992

Issue Time Tracking

---

Worklog Id: (was: 727965)

Time Spent: 50m (was: 40m)

[jira] [Work logged] (HDFS-15745) Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable

[ https://issues.apache.org/jira/browse/HDFS-15745?focusedWorklogId=727964=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727964 ]

ASF GitHub Bot logged work on HDFS-15745:

-

Author: ASF GitHub Bot

Created on: 16/Feb/22 00:41

Start Date: 16/Feb/22 00:41

Worklog Time Spent: 10m

Work Description: tasanuma commented on pull request #3992:

URL: https://github.com/apache/hadoop/pull/3992#issuecomment-1040943937

The failed tests seem unrelated. I'm merging it.

Issue Time Tracking

---

Worklog Id: (was: 727964)

Time Spent: 40m (was: 0.5h)

[jira] [Work logged] (HDFS-16397) Reconfig slow disk parameters for datanode

[ https://issues.apache.org/jira/browse/HDFS-16397?focusedWorklogId=727951=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727951 ]

ASF GitHub Bot logged work on HDFS-16397:

-

Author: ASF GitHub Bot

Created on: 16/Feb/22 00:16

Start Date: 16/Feb/22 00:16

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3828:

URL: https://github.com/apache/hadoop/pull/3828#issuecomment-1040929250

Hi @tasanuma @ayushtkn @Hexiaoqiao, could you please review this PR? Thanks.

Issue Time Tracking

---

Worklog Id: (was: 727951)

Time Spent: 1h (was: 50m)

> Reconfig slow disk parameters for datanode

> --

>

> Key: HDFS-16397

> URL: https://issues.apache.org/jira/browse/HDFS-16397

> Project: Hadoop HDFS

> Issue Type: New Feature

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> In large clusters, a rolling restart of the DataNodes takes a long time. We can

> make the slow peer and slow disk parameters of the DataNode reconfigurable to

> facilitate cluster operation and maintenance.

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727862=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727862

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 21:56

Start Date: 15/Feb/22 21:56

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1040835489

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 48s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 22s | | trunk passed |
| +1 :green_heart: | compile | 26m 32s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 23m 0s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 4m 8s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 21s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 30s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 36s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 57s | | trunk passed |
| +1 :green_heart: | shadedclient | 24m 8s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 23s | | the patch passed |
| +1 :green_heart: | compile | 23m 11s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 23m 11s | | the patch passed |
| +1 :green_heart: | compile | 21m 53s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 21m 53s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 3m 33s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 22s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 18s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 31s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 34s | | the patch passed |
| +1 :green_heart: | shadedclient | 24m 1s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 17m 55s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 230m 38s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 7s | | The patch does not generate ASF License warnings. |
| | | 471m 50s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux dbbf79d9ac98 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f5e27e408d9aa8f1e563d139d35a001375e19f7f |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/7/testReport/ |
| Max. process+thread count | 3544 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: .

[jira] [Work logged] (HDFS-16397) Reconfig slow disk parameters for datanode

[

https://issues.apache.org/jira/browse/HDFS-16397?focusedWorklogId=727763=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727763

]

ASF GitHub Bot logged work on HDFS-16397:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 19:35

Start Date: 15/Feb/22 19:35

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3828:

URL: https://github.com/apache/hadoop/pull/3828#issuecomment-1040706117

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 18s | | trunk passed |
| +1 :green_heart: | compile | 1m 28s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 30s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 4s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 34s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 19s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 32s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 16s | | the patch passed |
| +1 :green_heart: | compile | 1m 21s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 1m 21s | | the patch passed |
| +1 :green_heart: | compile | 1m 11s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 11s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 51s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/5/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 3 new + 136 unchanged - 2 fixed = 139 total (was 138) |
| +1 :green_heart: | mvnsite | 1m 17s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 50s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 24s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 13s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 6s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 226m 8s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 47s | | The patch does not generate ASF License warnings. |
| | | 325m 11s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3828 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux dcca2c281b61 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 10536590e771f077d4ffdbfb9fe92112fc40254e |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/5/testReport/ |
| Max. process+thread count | 3263 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U:

[jira] [Work logged] (HDFS-15745) Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable

[ https://issues.apache.org/jira/browse/HDFS-15745?focusedWorklogId=727628=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727628 ]

ASF GitHub Bot logged work on HDFS-15745:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 19:06

Start Date: 15/Feb/22 19:06

Worklog Time Spent: 10m

Work Description: tasanuma opened a new pull request #3992:

URL: https://github.com/apache/hadoop/pull/3992

### Description of PR

HDFS-15745. Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable.

### How was this patch tested?

### For code changes:

- [x] Does the title or this PR starts with the corresponding JIRA issue id (e.g. 'HADOOP-17799. Your PR title ...')?
- [ ] Object storage: have the integration tests been executed and the endpoint declared according to the connector-specific documentation?
- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?
- [ ] If applicable, have you updated the `LICENSE`, `LICENSE-binary`, `NOTICE-binary` files?

Issue Time Tracking

---

Worklog Id: (was: 727628)

Time Spent: 0.5h (was: 20m)

[jira] [Work logged] (HDFS-16440) RBF: Support router get HAServiceStatus with Lifeline RPC address

[ https://issues.apache.org/jira/browse/HDFS-16440?focusedWorklogId=727614=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727614 ]

ASF GitHub Bot logged work on HDFS-16440:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 19:05

Start Date: 15/Feb/22 19:05

Worklog Time Spent: 10m

Work Description: goiri merged pull request #3971:

URL: https://github.com/apache/hadoop/pull/3971

Issue Time Tracking

---

Worklog Id: (was: 727614)

Time Spent: 2h 10m (was: 2h)

> RBF: Support router get HAServiceStatus with Lifeline RPC address

> -

>

> Key: HDFS-16440

> URL: https://issues.apache.org/jira/browse/HDFS-16440

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: YulongZ

>Assignee: YulongZ

>Priority: Minor

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: HDFS-16440.001.patch, HDFS-16440.003.patch,

> HDFS-16440.004.patch

>

> Time Spent: 2h 10m

> Remaining Estimate: 0h

>

> NamenodeHeartbeatService gets HAServiceStatus using NNHAServiceTarget.getProxy.

> When a separate dfs.namenode.lifeline.rpc-address is set,

> NamenodeHeartbeatService could get HAServiceStatus using

> NNHAServiceTarget.getHealthMonitorProxy instead.

[jira] [Work logged] (HDFS-15745) Make DataNodePeerMetrics#LOW_THRESHOLD_MS and MIN_OUTLIER_DETECTION_NODES configurable

[

https://issues.apache.org/jira/browse/HDFS-15745?focusedWorklogId=727611=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727611

]

ASF GitHub Bot logged work on HDFS-15745:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 19:05

Start Date: 15/Feb/22 19:05

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3992:

URL: https://github.com/apache/hadoop/pull/3992#issuecomment-1040638017

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 9m 48s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ branch-3.3 Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 37s | | branch-3.3 passed |
| +1 :green_heart: | compile | 1m 13s | | branch-3.3 passed |
| +1 :green_heart: | checkstyle | 0m 49s | | branch-3.3 passed |
| +1 :green_heart: | mvnsite | 1m 19s | | branch-3.3 passed |
| +1 :green_heart: | javadoc | 1m 25s | | branch-3.3 passed |
| +1 :green_heart: | spotbugs | 3m 14s | | branch-3.3 passed |
| +1 :green_heart: | shadedclient | 27m 9s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 11s | | the patch passed |
| +1 :green_heart: | compile | 1m 6s | | the patch passed |
| +1 :green_heart: | javac | 1m 6s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 41s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 15s | | the patch passed |
| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 1m 16s | | the patch passed |
| +1 :green_heart: | spotbugs | 3m 16s | | the patch passed |
| +1 :green_heart: | shadedclient | 26m 59s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 210m 34s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3992/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 37s | | The patch does not generate ASF License warnings. |
| | | 322m 44s | | |

| Reason | Tests |
|---:|:--|
| Failed junit tests | hadoop.hdfs.server.blockmanagement.TestBlockTokenWithDFSStriped |
| | hadoop.hdfs.server.datanode.TestDirectoryScanner |
| | hadoop.hdfs.tools.offlineImageViewer.TestOfflineImageViewer |
| | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3992/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3992 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell xml |
| uname | Linux 84d43b1571d0 4.15.0-163-generic #171-Ubuntu SMP Fri Nov 5 11:55:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | branch-3.3 / 81aa7a942b0af7d854b94431e34dd731bdb343c7 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~18.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3992/1/testReport/ |
| Max. process+thread count | 2163 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3992/1/console |
| versions | git=2.17.1 maven=3.6.0 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[jira] [Work logged] (HDFS-16396) Reconfig slow peer parameters for datanode

[ https://issues.apache.org/jira/browse/HDFS-16396?focusedWorklogId=727563=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727563 ] ASF GitHub Bot logged work on HDFS-16396: - Author: ASF GitHub Bot Created on: 15/Feb/22 19:01 Start Date: 15/Feb/22 19:01 Worklog Time Spent: 10m Work Description: tasanuma merged pull request #3827: URL: https://github.com/apache/hadoop/pull/3827 Issue Time Tracking --- Worklog Id: (was: 727563) Time Spent: 5h 10m (was: 5h) > Reconfig slow peer parameters for datanode > -- > > Key: HDFS-16396 > URL: https://issues.apache.org/jira/browse/HDFS-16396 > Project: Hadoop HDFS > Issue Type: New Feature >Reporter: tomscut >Assignee: tomscut >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 5h 10m > Remaining Estimate: 0h > > In large clusters, a rolling restart of DataNodes takes a long time. Making the slow peer and slow disk parameters of the DataNode reconfigurable would facilitate cluster operation and maintenance.
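One way to picture "reconfigurable" is a holder whose values can be swapped at runtime, so no rolling restart is needed. The sketch below is a hypothetical illustration: the two property keys are assumed to match the DataNode slow-peer settings, the starting values (5 ms, 10 nodes) are assumptions, and the class, its validation rules, and `reconfigure` are inventions for this example, not the DataNode's actual reconfiguration API.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical sketch of runtime-reconfigurable slow-peer parameters. */
class SlowPeerParams {
  // Assumed key names, for illustration only.
  static final String LOW_THRESHOLD_KEY = "dfs.datanode.slowpeer.low.threshold.ms";
  static final String MIN_NODES_KEY = "dfs.datanode.min.outlier.detection.nodes";

  // Atomics let detector threads read current values while an admin updates them.
  private final AtomicLong lowThresholdMs = new AtomicLong(5);
  private final AtomicInteger minOutlierDetectionNodes = new AtomicInteger(10);

  long getLowThresholdMs() { return lowThresholdMs.get(); }

  int getMinOutlierDetectionNodes() { return minOutlierDetectionNodes.get(); }

  /** Applies a new value without a restart; rejects unknown keys and invalid values. */
  void reconfigure(String key, String newValue) {
    switch (key) {
      case LOW_THRESHOLD_KEY: {
        long v = Long.parseLong(newValue.trim());
        if (v <= 0) {
          throw new IllegalArgumentException(key + " must be > 0");
        }
        lowThresholdMs.set(v);
        break;
      }
      case MIN_NODES_KEY: {
        int v = Integer.parseInt(newValue.trim());
        if (v <= 0) {
          throw new IllegalArgumentException(key + " must be > 0");
        }
        minOutlierDetectionNodes.set(v);
        break;
      }
      default:
        throw new IllegalArgumentException("Property " + key + " is not reconfigurable");
    }
  }
}
```

Validating before storing means a bad value leaves the previous setting in place, which is usually what an operator wants during live maintenance.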

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727536=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727536

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:59

Start Date: 15/Feb/22 18:59

Worklog Time Spent: 10m

Work Description: jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r806465949

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/datanode/TestDirectoryScanner.java

##

@@ -507,6 +509,71 @@ public void testDeleteBlockOnTransientStorage() throws

Exception {

}

}

+ @Test(timeout = 60)

+ public void testRegularBlock() throws Exception {

+// add a logger stream to check what has printed to log

+ByteArrayOutputStream loggerStream = new ByteArrayOutputStream();

Review comment:

Yes, it was my mistake.


Issue Time Tracking

---

Worklog Id: (was: 727536)

Time Spent: 3.5h (was: 3h 20m)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png,

> screenshot-4.png

>

> Time Spent: 3.5h

> Remaining Estimate: 0h

>

> Something unusual happened in our production environment.

> The DataNode is configured with 11 disks (${dfs.datanode.data.dir}). The used
> capacity is calculated normally for 10 of the disks, but the value calculated
> for the remaining disk is much larger, which is very strange.

> This is the live view on the NameNode:

> !screenshot-1.png!

> This is the live view on the DataNode:

> !screenshot-2.png!

> This is the view on Linux:

> !screenshot-3.png!

> There is a big gap here for '/mnt/dfs/11/data'. This situation should
> not be allowed to happen.

> I found some abnormal block files: invalid blk_.meta files in some subdir
> directories cause the used space to be computed incorrectly.

> Here are some of the abnormal block files:

> !screenshot-4.png!

> Such files should not be treated as normal blocks. They should be actively
> identified and filtered out, which improves cluster stability.
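The check the issue asks for can be sketched with `java.nio.file.Files.isRegularFile`. This is a hypothetical, standalone illustration: the `blk_` name filter and the `findIrregularBlockFiles` helper are assumptions for the example, not the DirectoryScanner or FsDatasetImpl code from the pull request.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

/** Hypothetical sketch: find block-like entries that are not regular files. */
class RegularBlockFileCheck {
  /**
   * Walks a volume directory and returns every entry whose name starts with
   * "blk_" but which is not a regular file (for example a directory or a
   * device file). Such entries should not be counted as normal block replicas.
   */
  static List<Path> findIrregularBlockFiles(Path volumeDir) throws IOException {
    List<Path> irregular = new ArrayList<>();
    try (Stream<Path> paths = Files.walk(volumeDir)) {
      paths.filter(p -> !p.equals(volumeDir))
           .filter(p -> p.getFileName().toString().startsWith("blk_"))
           .filter(p -> !Files.isRegularFile(p))
           .forEach(irregular::add);
    }
    return irregular;
  }
}
```

`Files.isRegularFile` follows symbolic links by default, so a link pointing at a regular file passes; a stricter scanner could add `LinkOption.NOFOLLOW_LINKS`.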


[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727516=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727516

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:57

Start Date: 15/Feb/22 18:57

Worklog Time Spent: 10m

Work Description: jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039826045


Issue Time Tracking

---

Worklog Id: (was: 727516)

Time Spent: 3h 20m (was: 3h 10m)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png,

> screenshot-4.png

>

> Time Spent: 3h 20m

> Remaining Estimate: 0h


[jira] [Work logged] (HDFS-16455) RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop configuration files like core-site.xml

[

https://issues.apache.org/jira/browse/HDFS-16455?focusedWorklogId=727472=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727472

]

ASF GitHub Bot logged work on HDFS-16455:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:54

Start Date: 15/Feb/22 18:54

Worklog Time Spent: 10m

Work Description: Neilxzn commented on a change in pull request #3983:

URL: https://github.com/apache/hadoop/pull/3983#discussion_r806389802

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/security/token/delegation/ZKDelegationTokenSecretManager.java

##

@@ -199,6 +202,10 @@ public ZKDelegationTokenSecretManager(Configuration conf) {

ZK_DTSM_ZK_SESSION_TIMEOUT_DEFAULT);

int numRetries =

conf.getInt(ZK_DTSM_ZK_NUM_RETRIES,

ZK_DTSM_ZK_NUM_RETRIES_DEFAULT);

+String juteMaxBuffer =

+conf.get(ZK_DTSM_ZK_JUTE_MAXBUFFER,

ZK_DTSM_ZK_JUTE_MAXBUFFER_DEFAULT);

Review comment:

Thank you for your review. Fixed.
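The change under review reads a buffer size from the Hadoop configuration and copies it into the `jute.maxbuffer` system property, which the ZooKeeper client is assumed to read when it is created. Below is a standalone sketch of that idea with a plain map in place of `Configuration`. The `zk-dt-secret-manager.` key prefix and the 4 MB default are assumptions for illustration, and the simplified packet check only reproduces the arithmetic behind the crash logs reported in the issue description (4194553 and 4194478 bytes both exceed 4 MB = 4194304 bytes); ZooKeeper's exact boundary handling may differ.

```java
import java.util.Map;

/** Hypothetical sketch: make jute.maxbuffer configurable via a Hadoop-style config. */
class JuteMaxBufferSketch {
  // Assumed key and default, for illustration only.
  static final String ZK_DTSM_ZK_JUTE_MAXBUFFER = "zk-dt-secret-manager.jute.maxbuffer";
  static final int ZK_DTSM_ZK_JUTE_MAXBUFFER_DEFAULT = 4 * 1024 * 1024; // 4194304 bytes
  // System property name used by the ZooKeeper client.
  static final String JUTE_MAXBUFFER = "jute.maxbuffer";

  /** Copies the configured buffer size into the system property and returns it. */
  static int applyJuteMaxBuffer(Map<String, String> conf) {
    String raw = conf.get(ZK_DTSM_ZK_JUTE_MAXBUFFER);
    int size = (raw == null)
        ? ZK_DTSM_ZK_JUTE_MAXBUFFER_DEFAULT
        : Integer.parseInt(raw.trim());
    // Set before the ZooKeeper client is constructed, or it has no effect.
    System.setProperty(JUTE_MAXBUFFER, Integer.toString(size));
    return size;
  }

  /** Simplified check: true if a packet of this length exceeds the buffer limit. */
  static boolean isOutOfRange(int packetLen, int maxBuffer) {
    return packetLen < 0 || packetLen > maxBuffer;
  }
}
```

Usage: with no override, both logged packet lengths are out of range; doubling the limit to 8388608 bytes via the configuration key would accept them.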


Issue Time Tracking

---

Worklog Id: (was: 727472)

Time Spent: 1h 50m (was: 1h 40m)

> RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop

> configuration files like core-site.xml

>

>

> Key: HDFS-16455

> URL: https://issues.apache.org/jira/browse/HDFS-16455

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: rbf

>Affects Versions: 3.3.0, 3.4.0

>Reporter: Max Xie

>Assignee: Max Xie

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 1h 50m

> Remaining Estimate: 0h

>

> Based on the current design for delegation tokens in a secure Router, all
> tokens are stored and updated in ZooKeeper through
> ZKDelegationTokenSecretManager.

> However, the default value of the system property `jute.maxbuffer` is only 4 MB.
> If the Router stores too many tokens in ZooKeeper, it throws IOException
> `Packet lenxx is out of range` and all Routers crash.

>

> In our cluster, the Routers crashed because of this. The crash logs are below:

> {code:java}

> 2022-02-09 02:15:51,607 INFO

> org.apache.hadoop.security.token.delegation.AbstractDelegationTokenSecretManager:

> Token renewal for identifier: (token for xxx: HDFS_DELEGATION_TOKEN

> owner=xxx/scheduler, renewer=hadoop, realUser=, issueDate=1644344146305,

> maxDate=1644948946305, sequenceNumber=27136070, masterKeyId=1107); total currentTokens 279548

> 2022-02-09 02:16:07,632 WARN org.apache.zookeeper.ClientCnxn: Session 0x1000172775a0012

> for server zkurl:2181, unexpected error, closing socket connection and attempting reconnect

> java.io.IOException: Packet len4194553 is out of range!

> at org.apache.zookeeper.ClientCnxnSocket.readLength(ClientCnxnSocket.java:113)

> at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:79)

> at

> org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)

> at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1145)

> 2022-02-09 02:16:07,733 WARN org.apache.hadoop.ipc.Server: IPC Server handler

> 1254 on default port 9001, call Call#144 Retry#0

> org.apache.hadoop.hdfs.protocol.ClientProtocol.getDelegationToken from

> ip:46534

> java.lang.RuntimeException: Could not increment shared counter !!

> at

> org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager.incrementDelegationTokenSeqNum(ZKDelegationTokenSecretManager.java:582)

> {code}

> When we restarted a Router, it crashed again:

> {code:java}

> 2022-02-09 03:14:17,308 INFO

> org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager:

> Starting to load key cache.

> 2022-02-09 03:14:17,310 INFO

> org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager:

> Loaded key cache.

> 2022-02-09 03:14:32,930 WARN org.apache.zookeeper.ClientCnxn: Session

> 0x205584be35b0001 for server zkurl:2181, unexpected

> error, closing socket connection and attempting reconnect

> java.io.IOException: Packet len4194478 is out of range!

> at

> org.apache.zookeeper.ClientCnxnSocket.readLength(ClientCnxnSocket.java:113)

> at

> org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:79)

> at

> org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)

> at

>

[jira] [Work logged] (HDFS-16440) RBF: Support router get HAServiceStatus with Lifeline RPC address

[ https://issues.apache.org/jira/browse/HDFS-16440?focusedWorklogId=727461=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727461 ] ASF GitHub Bot logged work on HDFS-16440: - Author: ASF GitHub Bot Created on: 15/Feb/22 18:53 Start Date: 15/Feb/22 18:53 Worklog Time Spent: 10m Work Description: goiri commented on pull request #3971: URL: https://github.com/apache/hadoop/pull/3971#issuecomment-1039354689 It would be nice to have a full Yetus run, not sure what happened with the previous one. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 727461) Time Spent: 2h (was: 1h 50m) > RBF: Support router get HAServiceStatus with Lifeline RPC address > - > > Key: HDFS-16440 > URL: https://issues.apache.org/jira/browse/HDFS-16440 > Project: Hadoop HDFS > Issue Type: Improvement > Components: rbf >Reporter: YulongZ >Assignee: YulongZ >Priority: Minor > Labels: pull-request-available > Fix For: 3.4.0 > > Attachments: HDFS-16440.001.patch, HDFS-16440.003.patch, > HDFS-16440.004.patch > > Time Spent: 2h > Remaining Estimate: 0h > > NamenodeHeartbeatService gets HAServiceStatus using > NNHAServiceTarget.getProxy. When we set a special > dfs.namenode.lifeline.rpc-address , NamenodeHeartbeatService may get > HAServiceStatus using NNHAServiceTarget.getHealthMonitorProxy. -- This message was sent by Atlassian Jira (v8.20.1#820001) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727458=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727458

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:52

Start Date: 15/Feb/22 18:52

Worklog Time Spent: 10m

Work Description: jianghuazhu removed a comment on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039826045

Here are some examples from production clusters.

We construct a block device file such as:

This file is non-standard.

DirectoryScanner finds this kind of file while it is running.

log:

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Block:1073741828 is not a regular file.`

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Reporting the block blk_1073741828_0 as corrupt due to length mismatch`

The DataNode then reports these invalid blocks to the NameNode through NameNodeRpcServer#reportBadBlocks(), and the NameNode processes them further.

After a while, the DataNode automatically cleans up these invalid replicas.

@jojochuang, could you help review this PR again?

Thank you so much.


Issue Time Tracking

---

Worklog Id: (was: 727458)

Time Spent: 3h 10m (was: 3h)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png,

> screenshot-4.png

>

> Time Spent: 3h 10m

> Remaining Estimate: 0h


[jira] [Work logged] (HDFS-16396) Reconfig slow peer parameters for datanode

[ https://issues.apache.org/jira/browse/HDFS-16396?focusedWorklogId=727436=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727436 ] ASF GitHub Bot logged work on HDFS-16396: - Author: ASF GitHub Bot Created on: 15/Feb/22 18:50 Start Date: 15/Feb/22 18:50 Worklog Time Spent: 10m Work Description: tomscut commented on pull request #3827: URL: https://github.com/apache/hadoop/pull/3827#issuecomment-1039826206 Thanks @tasanuma and @ayushtkn for the review and confirming this. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 727436) Time Spent: 5h (was: 4h 50m) > Reconfig slow peer parameters for datanode > -- > > Key: HDFS-16396 > URL: https://issues.apache.org/jira/browse/HDFS-16396 > Project: Hadoop HDFS > Issue Type: New Feature >Reporter: tomscut >Assignee: tomscut >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 5h > Remaining Estimate: 0h > > In large clusters, rolling restart datanodes takes a long time. We can make > slow peers parameters and slow disks parameters in datanode reconfigurable to > facilitate cluster operation and maintenance. -- This message was sent by Atlassian Jira (v8.20.1#820001) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727372=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727372

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:44

Start Date: 15/Feb/22 18:44

Worklog Time Spent: 10m

Work Description: jojochuang commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r806448723

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/datanode/TestDirectoryScanner.java

##

@@ -507,6 +509,71 @@ public void testDeleteBlockOnTransientStorage() throws

Exception {

}

}

+ @Test(timeout = 60)

+ public void testRegularBlock() throws Exception {

+// add a logger stream to check what has printed to log

+ByteArrayOutputStream loggerStream = new ByteArrayOutputStream();

Review comment:

Can you use the Hadoop utility class LogCapturer

https://github.com/apache/hadoop/blob/6342d5e523941622a140fd877f06e9b59f48c48b/hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/test/GenericTestUtils.java#L533

for this purpose?
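The capture-and-assert pattern the reviewer points at can be illustrated without Hadoop. The sketch below is a stand-in built on `java.util.logging` with a custom `Handler`; it is not the API of Hadoop's `GenericTestUtils.LogCapturer`, which the reviewer recommends for the real test. The `CapturingHandler` class is hypothetical, and the warning text used in the usage example is taken from the log output quoted in this thread purely for illustration.

```java
import java.util.logging.Handler;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

/** Stand-in sketch: collect log messages so a test can assert on them. */
class CapturingHandler extends Handler {
  private final StringBuilder output = new StringBuilder();

  @Override
  public synchronized void publish(LogRecord record) {
    if (isLoggable(record)) {
      output.append(record.getLevel()).append(' ')
            .append(record.getMessage()).append('\n');
    }
  }

  @Override
  public void flush() {}

  @Override
  public void close() {}

  synchronized String getOutput() {
    return output.toString();
  }

  /** Attaches a new capturing handler to the logger. */
  static CapturingHandler captureLogs(Logger logger) {
    CapturingHandler handler = new CapturingHandler();
    logger.addHandler(handler);
    return handler;
  }

  /** Detaches this handler so later messages are no longer captured. */
  void stopCapturing(Logger logger) {
    logger.removeHandler(this);
  }
}
```

Usage: attach before triggering the scan, detach afterwards, then assert that the output contains `Block:1073741828 is not a regular file.`. Detaching in a `finally` block keeps one test's handler from leaking into the next.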


Issue Time Tracking

---

Worklog Id: (was: 727372)

Time Spent: 3h (was: 2h 50m)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Attachments: screenshot-1.png, screenshot-2.png, screenshot-3.png,

> screenshot-4.png

>

> Time Spent: 3h

> Remaining Estimate: 0h


[jira] [Work logged] (HDFS-16455) RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop configuration files like core-site.xml

[

https://issues.apache.org/jira/browse/HDFS-16455?focusedWorklogId=727325=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727325

]

ASF GitHub Bot logged work on HDFS-16455:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:40

Start Date: 15/Feb/22 18:40

Worklog Time Spent: 10m

Work Description: goiri commented on a change in pull request #3983:

URL: https://github.com/apache/hadoop/pull/3983#discussion_r806077863

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/security/token/delegation/ZKDelegationTokenSecretManager.java

##

@@ -98,6 +98,8 @@

+ "kerberos.keytab";

public static final String ZK_DTSM_ZK_KERBEROS_PRINCIPAL = ZK_CONF_PREFIX

+ "kerberos.principal";

+ public static final String ZK_DTSM_ZK_JUTE_MAXBUFFER = ZK_CONF_PREFIX

+ + "jute.maxbuffer";

Review comment:

The indentation is not correct; please check the checkstyle report.

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/security/token/delegation/ZKDelegationTokenSecretManager.java

##

@@ -199,6 +202,10 @@ public ZKDelegationTokenSecretManager(Configuration conf) {

ZK_DTSM_ZK_SESSION_TIMEOUT_DEFAULT);

int numRetries =

conf.getInt(ZK_DTSM_ZK_NUM_RETRIES,

ZK_DTSM_ZK_NUM_RETRIES_DEFAULT);

+String juteMaxBuffer =

+conf.get(ZK_DTSM_ZK_JUTE_MAXBUFFER,

ZK_DTSM_ZK_JUTE_MAXBUFFER_DEFAULT);

Review comment:

Please fix the indentation.

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/security/token/delegation/ZKDelegationTokenSecretManager.java

##

@@ -199,6 +202,10 @@ public ZKDelegationTokenSecretManager(Configuration conf) {

ZK_DTSM_ZK_SESSION_TIMEOUT_DEFAULT);

int numRetries =

conf.getInt(ZK_DTSM_ZK_NUM_RETRIES,

ZK_DTSM_ZK_NUM_RETRIES_DEFAULT);

+String juteMaxBuffer =

+conf.get(ZK_DTSM_ZK_JUTE_MAXBUFFER,

ZK_DTSM_ZK_JUTE_MAXBUFFER_DEFAULT);

+System.setProperty(ZKClientConfig.JUTE_MAXBUFFER,

+ juteMaxBuffer);

Review comment:

This could go to the previous line.


Issue Time Tracking

---

Worklog Id: (was: 727325)

Time Spent: 1h 40m (was: 1.5h)

> RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop

> configuration files like core-site.xml

>

>

> Key: HDFS-16455

> URL: https://issues.apache.org/jira/browse/HDFS-16455

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: rbf

>Affects Versions: 3.3.0, 3.4.0

>Reporter: Max Xie

>Assignee: Max Xie

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 1h 40m

> Remaining Estimate: 0h


[jira] [Work logged] (HDFS-16396) Reconfig slow peer parameters for datanode

[ https://issues.apache.org/jira/browse/HDFS-16396?focusedWorklogId=727302=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727302 ] ASF GitHub Bot logged work on HDFS-16396: - Author: ASF GitHub Bot Created on: 15/Feb/22 18:38 Start Date: 15/Feb/22 18:38 Worklog Time Spent: 10m Work Description: tasanuma commented on pull request #3827: URL: https://github.com/apache/hadoop/pull/3827#issuecomment-1039851634 Merged it. Thanks for your contribution, @tomscut, and thanks for your review, @ayushtkn! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 727302) Time Spent: 4h 50m (was: 4h 40m) > Reconfig slow peer parameters for datanode > -- > > Key: HDFS-16396 > URL: https://issues.apache.org/jira/browse/HDFS-16396 > Project: Hadoop HDFS > Issue Type: New Feature >Reporter: tomscut >Assignee: tomscut >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 4h 50m > Remaining Estimate: 0h > > In large clusters, rolling restart datanodes takes a long time. We can make > slow peers parameters and slow disks parameters in datanode reconfigurable to > facilitate cluster operation and maintenance. -- This message was sent by Atlassian Jira (v8.20.1#820001) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16455) RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop configuration files like core-site.xml

[

https://issues.apache.org/jira/browse/HDFS-16455?focusedWorklogId=727261=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727261

]

ASF GitHub Bot logged work on HDFS-16455:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:35

Start Date: 15/Feb/22 18:35

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3983:

URL: https://github.com/apache/hadoop/pull/3983#issuecomment-1039875071

Issue Time Tracking

---

Worklog Id: (was: 727261)

Time Spent: 1.5h (was: 1h 20m)

> RBF: Router should explicitly specify the value of `jute.maxbuffer` in hadoop

> configuration files like core-site.xml

>

>

> Key: HDFS-16455

> URL: https://issues.apache.org/jira/browse/HDFS-16455

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: rbf

>Affects Versions: 3.3.0, 3.4.0

>Reporter: Max Xie

>Assignee: Max Xie

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 1.5h

> Remaining Estimate: 0h

>

> Based on the current design for delegation tokens in a secure Router, all

> tokens are stored and updated in ZooKeeper using

> ZKDelegationTokenSecretManager.

> However, the default value of the system property `jute.maxbuffer` is only

> 4MB. If the Router stores too many tokens in ZK, it will throw an IOException

> (`Packet lenxx is out of range`) and all Routers will crash.

>

> In our cluster, Routers crashed because of this. The crash logs are below:

> {code:java}

> 2022-02-09 02:15:51,607 INFO org.apache.hadoop.security.token.delegation.AbstractDelegationTokenSecretManager: Token renewal for identifier: (token for xxx: HDFS_DELEGATION_TOKEN owner=xxx/scheduler, renewer=hadoop, realUser=, issueDate=1644344146305, maxDate=1644948946305, sequenceNumber=27136070, masterKeyId=1107); total currentTokens 279548

> 2022-02-09 02:16:07,632 WARN org.apache.zookeeper.ClientCnxn: Session 0x1000172775a0012 for server zkurl:2181, unexpected error, closing socket connection and attempting reconnect

> java.io.IOException: Packet len4194553 is out of range!

> at org.apache.zookeeper.ClientCnxnSocket.readLength(ClientCnxnSocket.java:113)

> at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:79)

> at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)

> at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1145)

> 2022-02-09 02:16:07,733 WARN org.apache.hadoop.ipc.Server: IPC Server handler 1254 on default port 9001, call Call#144 Retry#0 org.apache.hadoop.hdfs.protocol.ClientProtocol.getDelegationToken from ip:46534

> java.lang.RuntimeException: Could not increment shared counter !!

> at org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager.incrementDelegationTokenSeqNum(ZKDelegationTokenSecretManager.java:582)

> {code}

> When we restarted a Router, it crashed again:

> {code:java}

> 2022-02-09 03:14:17,308 INFO org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager: Starting to load key cache.

> 2022-02-09 03:14:17,310 INFO org.apache.hadoop.security.token.delegation.ZKDelegationTokenSecretManager: Loaded key cache.

> 2022-02-09 03:14:32,930 WARN org.apache.zookeeper.ClientCnxn: Session 0x205584be35b0001 for server zkurl:2181, unexpected error, closing socket connection and attempting reconnect

> java.io.IOException: Packet len4194478 is out of range!

> at org.apache.zookeeper.ClientCnxnSocket.readLength(ClientCnxnSocket.java:113)

> at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:79)

> at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)

> at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1145)

> 2022-02-09 03:14:33,030 ERROR org.apache.hadoop.hdfs.server.federation.router.security.token.ZKDelegationTokenSecretManagerImpl: Error starting threads for zkDelegationTokens

> java.io.IOException: Could not start PathChildrenCache for tokens {code}

> Finally, we configured `-Djute.maxbuffer=1000` in hadoop-env.sh to fix this

> issue.

> After digging into it, we found that the znode `/ZKDTSMRoot/ZKDTSMTokensRoot`

> had more than 25 children, whose total data size was over 4MB.

>

> Maybe we should
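The failure in HDFS-16455 comes down to a single length check on the ZooKeeper client side. A simplified sketch, assuming the check behaves like the one in ZooKeeper's ClientCnxnSocket.readLength() (the top frame in both stack traces above): a response is rejected when its length is negative or at least `jute.maxbuffer`, which defaults to 4096 * 1024 = 4,194,304 bytes. The class and method names are hypothetical; only the `jute.maxbuffer` property name and the lengths come from the report.

```java
/**
 * Hypothetical sketch of a ZooKeeper-client-style packet length check.
 * Assumption: mirrors the readLength() check seen in the stack traces.
 */
public class JuteMaxBufferCheck {
    /** Default limit when -Djute.maxbuffer is not set: 4096 * 1024 bytes. */
    public static final int DEFAULT_MAX_BUFFER = 4096 * 1024;

    /** Reads the limit from the jute.maxbuffer JVM system property, falling back to 4MB. */
    public static int maxBuffer() {
        return Integer.getInteger("jute.maxbuffer", DEFAULT_MAX_BUFFER);
    }

    /** True when a response of len bytes would be rejected as "out of range". */
    public static boolean isOutOfRange(int len, int limit) {
        return len < 0 || len >= limit;
    }

    public static void main(String[] args) {
        int limit = maxBuffer();
        // Packet lengths taken from the two crash logs in the report.
        for (int len : new int[] {4194553, 4194478}) {
            System.out.printf("len=%d limit=%d outOfRange=%b overBy=%d%n",
                    len, limit, isOutOfRange(len, limit), len - limit);
        }
    }
}
```

Run without `-Djute.maxbuffer`, both logged lengths are rejected: 4,194,553 and 4,194,478 bytes exceed the 4,194,304-byte default by only 249 and 174 bytes, so the token list had just outgrown the limit. Because the limit comes from a JVM system property rather than a Hadoop configuration key, it has to be passed as a `-D` option, as the reporters did in hadoop-env.sh.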

[jira] [Work logged] (HDFS-16440) RBF: Support router get HAServiceStatus with Lifeline RPC address

[ https://issues.apache.org/jira/browse/HDFS-16440?focusedWorklogId=727260=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727260 ]

ASF GitHub Bot logged work on HDFS-16440:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:35

Start Date: 15/Feb/22 18:35

Worklog Time Spent: 10m

Work Description: yulongz commented on pull request #3971:

URL: https://github.com/apache/hadoop/pull/3971#issuecomment-1039887623

@goiri The failed unit test is unrelated to my change. All tests pass locally.

Issue Time Tracking

---

Worklog Id: (was: 727260)

Time Spent: 1h 50m (was: 1h 40m)

> RBF: Support router get HAServiceStatus with Lifeline RPC address

> -

>

> Key: HDFS-16440

> URL: https://issues.apache.org/jira/browse/HDFS-16440

> Issue Type: Improvement

> Components: rbf

>Reporter: YulongZ

>Assignee: YulongZ

>Priority: Minor

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: HDFS-16440.001.patch, HDFS-16440.003.patch,

> HDFS-16440.004.patch

>

> Time Spent: 1h 50m

> Remaining Estimate: 0h

>

> NamenodeHeartbeatService gets HAServiceStatus using

> NNHAServiceTarget.getProxy. When a dedicated dfs.namenode.lifeline.rpc-address

> is set, NamenodeHeartbeatService may get HAServiceStatus using

> NNHAServiceTarget.getHealthMonitorProxy instead.
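The HDFS-16440 proposal is essentially a choice between two endpoints. A minimal sketch of that choice, using a hypothetical stand-in Target class rather than Hadoop's real NNHAServiceTarget: when a lifeline address is configured, HA status is read through it (the getHealthMonitorProxy path); otherwise the regular RPC address is used (the getProxy path). Keeping health checks off the busy client RPC queue is the usual rationale for a lifeline address, though the issue text above does not spell it out. The class, field names, host names and ports are made up for illustration.

```java
/**
 * Hypothetical sketch of the proxy choice described in HDFS-16440.
 * Target is a stand-in, not Hadoop's NNHAServiceTarget.
 */
public class HaStatusAddressSelector {
    /** Minimal stand-in for a NameNode's configured addresses. */
    public static final class Target {
        public final String rpcAddress;
        // null or empty when dfs.namenode.lifeline.rpc-address is not set
        public final String lifelineRpcAddress;

        public Target(String rpcAddress, String lifelineRpcAddress) {
            this.rpcAddress = rpcAddress;
            this.lifelineRpcAddress = lifelineRpcAddress;
        }
    }

    /**
     * Returns the address a heartbeat service would query for HA status:
     * the lifeline address when configured, otherwise the regular RPC address.
     */
    public static String statusAddress(Target target) {
        if (target.lifelineRpcAddress != null && !target.lifelineRpcAddress.isEmpty()) {
            return target.lifelineRpcAddress;
        }
        return target.rpcAddress;
    }
}
```

For example, `statusAddress(new Target("nn1:8020", "nn1:8050"))` selects the lifeline address, while a target with no lifeline address falls back to `nn1:8020`.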

[jira] [Work logged] (HDFS-16316) Improve DirectoryScanner: add regular file check related block

[

https://issues.apache.org/jira/browse/HDFS-16316?focusedWorklogId=727252=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-727252

]

ASF GitHub Bot logged work on HDFS-16316:

-

Author: ASF GitHub Bot

Created on: 15/Feb/22 18:34

Start Date: 15/Feb/22 18:34

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039728159

Issue Time Tracking

---

Worklog Id: (was: 727252)

Time Spent: 2h 50m (was: 2h 40m)

> Improve DirectoryScanner: add regular file check related block

> --

>

> Key: HDFS-16316

> URL: https://issues.apache.org/jira/browse/HDFS-16316

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available