[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651425=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651425

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 16/Sep/21 01:06

Start Date: 16/Sep/21 01:06

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-920496593

Thanks @tasanuma @ferhui for your review and merge.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 651425)

Time Spent: 6h 20m (was: 6h 10m)

> Discover datanodes with unbalanced block pool usage by the standard deviation

> -

>

> Key: HDFS-16203

> URL: https://issues.apache.org/jira/browse/HDFS-16203

> Project: Hadoop HDFS

> Issue Type: New Feature

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: image-2021-09-01-19-16-27-172.png

>

> Time Spent: 6h 20m

> Remaining Estimate: 0h

>

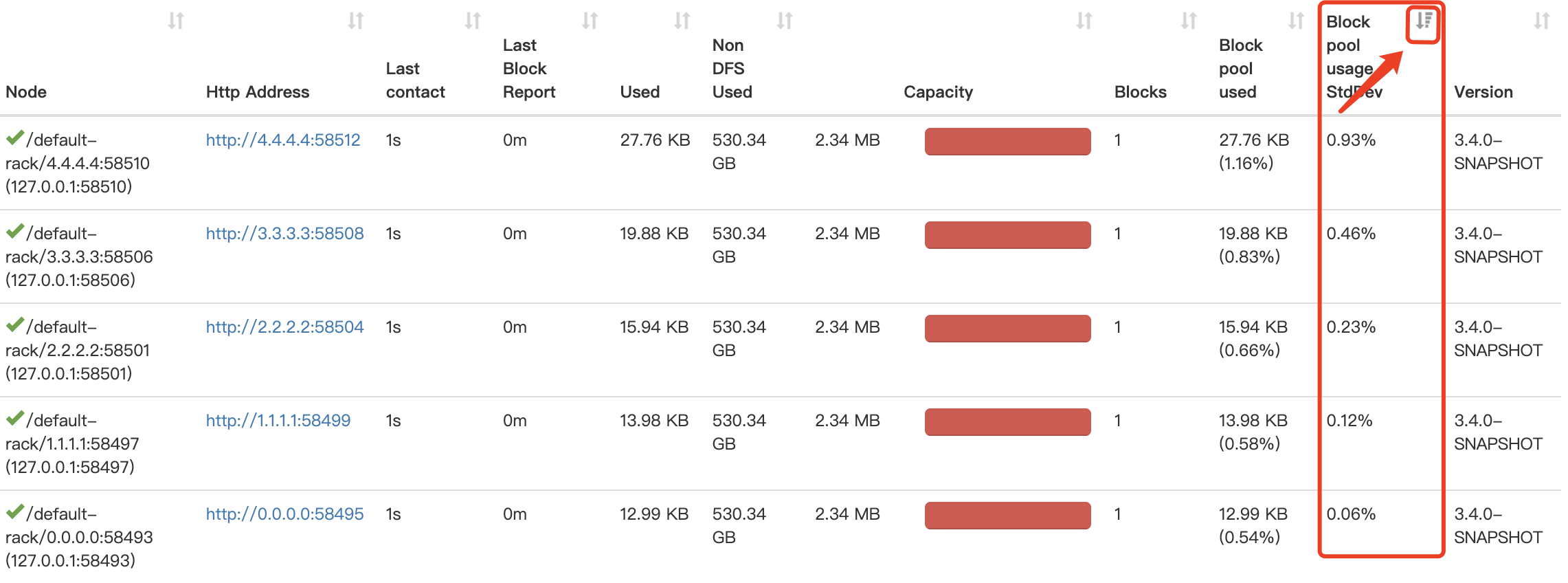

> *Discover datanodes with unbalanced volume usage by the standard deviation.*

> *Some scenarios can cause unbalanced datanode disk usage:*

> 1. A damaged disk is repaired and brought back online.

> 2. Disks are added to some datanodes.

> 3. Some disks are damaged, which slows down writes to them.

> 4. Custom volume choosing policies are in use.

> With unbalanced disk usage, a sudden increase in datanode write traffic may keep the disks of the low-usage volumes busy with I/O, decreasing throughput across datanodes.

> We need to find these nodes promptly so that we can run the disk balancer or take other action.

> Based on the volume usage of each datanode, we can calculate the standard deviation of its volume usage: the more unbalanced the volumes, the higher the standard deviation.

> *We can display the result on the NameNode web UI and then sort by it directly to find the nodes whose volume usage is unbalanced.*

> *{color:#172b4d}This interface is only used to obtain metrics and does not

> adversely affect namenode performance.{color}*

>

> {color:#172b4d}!image-2021-09-01-19-16-27-172.png|width=581,height=216!{color}
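The standard-deviation check described above can be sketched in Java as follows. This is a minimal, hypothetical illustration, not the code merged in pull request #3366: the class and method names (`VolumeUsageStdDev`, `usagePercent`, `stdDev`, `rankByImbalance`) are invented, volume usage is assumed to be a percentage (used bytes / capacity * 100), and the population standard deviation is an assumption.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/**
 * Hypothetical sketch: ranks datanodes by the standard deviation of their
 * per-volume usage. Names and input shapes are illustrative assumptions.
 */
public class VolumeUsageStdDev {

  // Usage of one volume as a percentage of its capacity (0 if capacity is unknown).
  static double usagePercent(long usedBytes, long capacityBytes) {
    return capacityBytes <= 0 ? 0.0 : 100.0 * usedBytes / capacityBytes;
  }

  // Population standard deviation of one datanode's volume usage percentages.
  static double stdDev(double[] usages) {
    if (usages.length == 0) {
      return 0.0;
    }
    double sum = 0.0;
    for (double u : usages) {
      sum += u;
    }
    double mean = sum / usages.length;
    double squaredDiffs = 0.0;
    for (double u : usages) {
      squaredDiffs += (u - mean) * (u - mean);
    }
    return Math.sqrt(squaredDiffs / usages.length);
  }

  // Datanode names ordered from most to least unbalanced (highest std dev first).
  static List<String> rankByImbalance(Map<String, double[]> usagesByNode) {
    List<String> nodes = new ArrayList<>(usagesByNode.keySet());
    nodes.sort(Comparator.comparingDouble(
        (String n) -> stdDev(usagesByNode.get(n))).reversed());
    return nodes;
  }

  public static void main(String[] args) {
    Map<String, double[]> usages = new LinkedHashMap<>();
    usages.put("dn1", new double[] {50.0, 52.0, 48.0}); // fairly even
    usages.put("dn2", new double[] {90.0, 10.0, 50.0}); // e.g. a newly added disk
    usages.put("dn3", new double[] {70.0, 70.0, 70.0}); // perfectly even
    for (String node : rankByImbalance(usages)) {
      System.out.printf(Locale.ROOT, "%s %.2f%n", node, stdDev(usages.get(node)));
    }
  }
}
```

With the sample data in `main`, dn2 (volumes at 90%, 10% and 50%) sorts first with a standard deviation of about 32.66, followed by dn1 (about 1.63) and dn3 (0.00). Sorting in descending order mirrors the idea of sorting the web UI column so that the most unbalanced nodes appear at the top.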

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651418=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651418

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 16/Sep/21 01:00

Start Date: 16/Sep/21 01:00

Worklog Time Spent: 10m

Work Description: tasanuma merged pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366

Issue Time Tracking

---

Worklog Id: (was: 651418)

Time Spent: 6h (was: 5h 50m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651419=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651419

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 16/Sep/21 01:01

Start Date: 16/Sep/21 01:01

Worklog Time Spent: 10m

Work Description: tasanuma commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-920494267

Merged it. Thanks for the contribution, @tomscut. Thanks for reviewing it, @ferhui.

Issue Time Tracking

---

Worklog Id: (was: 651419)

Time Spent: 6h 10m (was: 6h)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651412=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651412

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 16/Sep/21 00:22

Start Date: 16/Sep/21 00:22

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-920480429

The failed unit tests ```TestBlockTokenWithDFSStriped``` and ```TestBalancerWithHANameNodes``` are unrelated to this change and pass locally.

Issue Time Tracking

---

Worklog Id: (was: 651412)

Time Spent: 5h 50m (was: 5h 40m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651276=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651276

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 18:56

Start Date: 15/Sep/21 18:56

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-920294476

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 51s |  | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s |  | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s |  | codespell was not available. |
| +0 :ok: | jshint | 0m 0s |  | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s |  | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s |  | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 33s |  | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 29m 8s |  | trunk passed |
| +1 :green_heart: | compile | 6m 35s |  | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 6m 1s |  | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 33s |  | trunk passed |
| +1 :green_heart: | mvnsite | 3m 29s |  | trunk passed |
| +1 :green_heart: | javadoc | 2m 44s |  | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 22s |  | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 8m 24s |  | trunk passed |
| +1 :green_heart: | shadedclient | 40m 35s |  | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 30s |  | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 3m 8s |  | the patch passed |
| +1 :green_heart: | compile | 5m 39s |  | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 5m 39s |  | the patch passed |
| +1 :green_heart: | compile | 4m 41s |  | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 4m 41s |  | the patch passed |
| +1 :green_heart: | blanks | 0m 0s |  | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 6s |  | hadoop-hdfs-project: The patch generated 0 new + 120 unchanged - 9 fixed = 120 total (was 129) |
| +1 :green_heart: | mvnsite | 2m 40s |  | the patch passed |
| +1 :green_heart: | javadoc | 1m 56s |  | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 44s |  | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 2s |  | the patch passed |
| +1 :green_heart: | shadedclient | 28m 20s |  | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 19s |  | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 242m 39s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/10/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | unit | 21m 58s |  | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 51s |  | The patch does not generate ASF License warnings. |
|  |  | 443m 36s |  |  |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.blockmanagement.TestBlockTokenWithDFSStriped |
|  | hadoop.hdfs.server.balancer.TestBalancerWithHANameNodes |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/10/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3366 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell jshint |
| uname | Linux fcc8b33d75e1 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651059=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651059

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 12:27

Start Date: 15/Sep/21 12:27

Worklog Time Spent: 10m

Work Description: tasanuma commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919974196

+1, pending Jenkins.

Issue Time Tracking

---

Worklog Id: (was: 651059)

Time Spent: 5.5h (was: 5h 20m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=651032=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-651032

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 11:32

Start Date: 15/Sep/21 11:32

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919938103

> @tomscut Sorry for being late, but I have one more request. Could you refactor the existing method?
>
> ```java
> public Map getDatanodeStorageReportMap(
>     DatanodeReportType type) throws IOException {
>   return getDatanodeStorageReportMap(type, true, -1);
> }
> ```

Thanks for your review. I updated the code.

Issue Time Tracking

---

Worklog Id: (was: 651032)

Time Spent: 5h 20m (was: 5h 10m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650993=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650993

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 09:30

Start Date: 15/Sep/21 09:30

Worklog Time Spent: 10m

Work Description: tasanuma commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919856428

@tomscut Sorry for being late, but I have one more request. Could you refactor the existing method?

```java
public Map getDatanodeStorageReportMap(
    DatanodeReportType type) throws IOException {
  return getDatanodeStorageReportMap(type, true, -1);
}
```

Issue Time Tracking

---

Worklog Id: (was: 650993)

Time Spent: 5h 10m (was: 5h)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650938=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650938

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 05:03

Start Date: 15/Sep/21 05:03

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919701384

> @tomscut Thanks.

> Is Related UT fixed? If it's been fixed, this PR looks good to me.

Yes, the related failed UT ```TestRouterRPCClientRetries#testNamenodeMetricsSlow``` has already been fixed.

Thanks for your review again.

Issue Time Tracking

---

Worklog Id: (was: 650938)

Time Spent: 5h (was: 4h 50m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650937=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650937

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 15/Sep/21 04:47

Start Date: 15/Sep/21 04:47

Worklog Time Spent: 10m

Work Description: ferhui commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919696025

@tomscut Thanks.

Is Related UT fixed? If it's been fixed, this PR looks good to me.

Issue Time Tracking

---

Worklog Id: (was: 650937)

Time Spent: 4h 50m (was: 4h 40m)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650543=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650543

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 12:42

Start Date: 14/Sep/21 12:42

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919112684

> > Overall it looks good.

> > DataNode Info shows on namenode UI and Router UI, It is reasonable to add this into router UI.

> > Maybe it is like namenode, just lines code changed. We can review it here.

>

> Hi @ferhui , I added this into router UI and did some tests.

>

> There are two NS in our test cluster. NS1 supports this feature, and NS2 does not. In this case, the Router UI is shown as follows:

>

There are two nameservices in our test cluster: NS1 supports this feature and NS2 does not. In this case, the Router UI looks as shown in this picture.

Issue Time Tracking

---

Worklog Id: (was: 650543)

Time Spent: 4h 40m (was: 4.5h)

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650542=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650542

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 12:39

Start Date: 14/Sep/21 12:39

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-919110352

Those failed unit tests are unrelated to the change, and they pass locally.

@tasanuma @ferhui Could you please help review this PR again? Thank you very much.


Issue Time Tracking

---

Worklog Id: (was: 650542)

Time Spent: 4.5h (was: 4h 20m)


[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650451=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650451

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 09:33

Start Date: 14/Sep/21 09:33

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-918983134

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 50s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | jshint | 0m 0s | | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 54s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 22m 43s | | trunk passed |
| +1 :green_heart: | compile | 5m 39s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 5m 14s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 11s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 13s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 33s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 16s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 25s | | trunk passed |
| +1 :green_heart: | shadedclient | 16m 43s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 52s | | the patch passed |
| +1 :green_heart: | compile | 5m 30s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 5m 30s | | the patch passed |
| +1 :green_heart: | compile | 4m 53s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 4m 53s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 15s | | hadoop-hdfs-project: The patch generated 0 new + 120 unchanged - 9 fixed = 120 total (was 129) |
| +1 :green_heart: | mvnsite | 2m 55s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 0s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 54s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 45s | | the patch passed |
| +1 :green_heart: | shadedclient | 15m 57s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 28s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 299m 59s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/9/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch failed. |
| -1 :x: | unit | 36m 47s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/9/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt) | hadoop-hdfs-rbf in the patch failed. |
| +1 :green_heart: | asflicense | 0m 48s | | The patch does not generate ASF License warnings. |
| | | 470m 39s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestDFSStripedOutputStreamWithRandomECPolicy |
| | hadoop.hdfs.TestRollingUpgrade |
| | hadoop.hdfs.TestErasureCodingExerciseAPIs |
| | hadoop.hdfs.tools.TestECAdmin |
| | hadoop.hdfs.TestFileChecksum |
| | hadoop.hdfs.server.datanode.fsdataset.impl.TestFsDatasetImpl |
| | hadoop.hdfs.TestParallelShortCircuitReadUnCached |
| | hadoop.hdfs.TestDatanodeReport |
| | hadoop.hdfs.TestErasureCodingPolicyWithSnapshotWithRandomECPolicy |
| | hadoop.hdfs.TestDecommissionWithStriped |
|

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650366=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650366

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 06:36

Start Date: 14/Sep/21 06:36

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-918853006

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 44s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | jshint | 0m 1s | | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 49s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 20m 23s | | trunk passed |
| +1 :green_heart: | compile | 4m 57s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 4m 35s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 12s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 5s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 20s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 8s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 49s | | trunk passed |
| +1 :green_heart: | shadedclient | 14m 36s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 36s | | the patch passed |
| +1 :green_heart: | compile | 4m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 4m 51s | | the patch passed |
| +1 :green_heart: | compile | 4m 28s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 4m 28s | | the patch passed |
| +1 :green_heart: | blanks | 0m 1s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 4s | | hadoop-hdfs-project: The patch generated 0 new + 120 unchanged - 9 fixed = 120 total (was 129) |
| +1 :green_heart: | mvnsite | 2m 40s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 57s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 47s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 3s | | the patch passed |
| +1 :green_heart: | shadedclient | 15m 16s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 18s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | unit | 241m 16s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | unit | 21m 27s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 51s | | The patch does not generate ASF License warnings. |
| | | 386m 2s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/8/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3366 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell jshint |
| uname | Linux fdb214245f36 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 70e73b3f3604f5e52328bb692ea38ad9f9d57c2c |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
|

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650316=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650316

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 01:40

Start Date: 14/Sep/21 01:40

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#discussion_r707841118

##

File path:

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/server/protocol/StorageReport.java

##

@@ -48,6 +49,8 @@ public StorageReport(DatanodeStorage storage, boolean failed, long capacity,
     this.nonDfsUsed = nonDfsUsed;
     this.remaining = remaining;
     this.blockPoolUsed = bpUsed;
+    this.blockPoolUsagePercent = capacity == 0 ? 0.0f :

Review comment:

Thanks @tasanuma for your review. This can prevent some anomalies. I will update it soon.


Issue Time Tracking

---

Worklog Id: (was: 650316)

Time Spent: 4h (was: 3h 50m)


[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650304=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650304

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 14/Sep/21 01:04

Start Date: 14/Sep/21 01:04

Worklog Time Spent: 10m

Work Description: tasanuma commented on a change in pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#discussion_r707829233

##

File path:

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/server/protocol/StorageReport.java

##

@@ -48,6 +49,8 @@ public StorageReport(DatanodeStorage storage, boolean failed, long capacity,
     this.nonDfsUsed = nonDfsUsed;
     this.remaining = remaining;
     this.blockPoolUsed = bpUsed;
+    this.blockPoolUsagePercent = capacity == 0 ? 0.0f :

Review comment:

If I remember right, `capacity` can be negative.

```suggestion

this.blockPoolUsagePercent = capacity <= 0 ? 0.0f :

```
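The suggested guard matters because dividing by a zero capacity yields `Infinity` or `NaN` in float arithmetic, and a negative capacity yields a negative percentage. The sketch below is a hypothetical helper, not the PR's code (the rest of the expression after `0.0f :` is elided in this thread); it assumes the percentage is on a 0 to 100 scale.

```java
/** Hypothetical sketch of a guarded block pool usage percentage (0-100 scale assumed). */
public class BlockPoolUsage {

  static float blockPoolUsagePercent(long blockPoolUsed, long capacity) {
    // Without this guard, capacity == 0 gives Infinity (or NaN when used is 0),
    // and a negative capacity gives a negative percentage.
    if (capacity <= 0) {
      return 0.0f;
    }
    return blockPoolUsed * 100.0f / capacity;
  }

  public static void main(String[] args) {
    System.out.println(blockPoolUsagePercent(250L, 1000L)); // 25.0
    System.out.println(blockPoolUsagePercent(250L, 0L));    // 0.0
    System.out.println(blockPoolUsagePercent(250L, -1L));   // 0.0
  }
}
```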


Issue Time Tracking

---

Worklog Id: (was: 650304)

Time Spent: 3h 50m (was: 3h 40m)


[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=650201=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-650201

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 13/Sep/21 19:20

Start Date: 13/Sep/21 19:20

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-918502823

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | jshint | 0m 0s | | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 50s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 20m 12s | | trunk passed |
| +1 :green_heart: | compile | 4m 52s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 4m 31s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 14s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 6s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 18s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 4s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 48s | | trunk passed |
| +1 :green_heart: | shadedclient | 14m 34s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 25s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 37s | | the patch passed |
| +1 :green_heart: | compile | 4m 46s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 4m 46s | | the patch passed |
| +1 :green_heart: | compile | 4m 25s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 4m 25s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 1m 6s | [/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/7/artifact/out/results-checkstyle-hadoop-hdfs-project.txt) | hadoop-hdfs-project: The patch generated 1 new + 120 unchanged - 9 fixed = 121 total (was 129) |
| +1 :green_heart: | mvnsite | 2m 43s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 54s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 42s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 59s | | the patch passed |
| +1 :green_heart: | shadedclient | 14m 19s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 20s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 240m 4s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/7/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch failed. |
| +1 :green_heart: | unit | 22m 26s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 46s | | The patch does not generate ASF License warnings. |
| | | 384m 6s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.balancer.TestBalancerWithHANameNodes |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3366 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell jshint |
| uname | Linux 698a23a1a331 4.15.0-136-generic #140-Ubuntu SMP Thu Jan

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=649982=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-649982

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 13/Sep/21 12:39

Start Date: 13/Sep/21 12:39

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#discussion_r707294263

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/FSNamesystem.java

##

@@ -6537,14 +6537,45 @@ public String getLiveNodes() {
       if (node.getUpgradeDomain() != null) {
         innerinfo.put("upgradeDomain", node.getUpgradeDomain());
       }
+      StorageReport[] storageReports = node.getStorageReports();
+      innerinfo.put("blockPoolUsedPercentStdDev",
+          getBlockPoolUsedPercentStdDev(storageReports));
       info.put(node.getXferAddrWithHostname(), innerinfo.build());
     }
     return JSON.toString(info);
   }
+  /**
+   * Return the standard deviation of storage block pool usage.
+   */
+  @VisibleForTesting
+  public float getBlockPoolUsedPercentStdDev(StorageReport[] storageReports) {
+    ArrayList<Float> usagePercentList = new ArrayList<>();
+    float totalUsagePercent = 0.0f;
+    float dev = 0.0f;
+
+    if (storageReports.length == 0) {
+      return dev;
+    }
+
+    for (StorageReport s : storageReports) {
+      usagePercentList.add(s.getBlockPoolUsagePercent());
+      totalUsagePercent += s.getBlockPoolUsagePercent();
+    }
+
+    totalUsagePercent /= storageReports.length;
+    Collections.sort(usagePercentList);

Review comment:

Thanks @ferhui for your comments; I will try this. At present there is a failing UT related to my changes, and I am fixing it. To save your time, you can review it after I fix it.
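The hunk above stops after computing the mean. A minimal sketch of how a per-datanode standard deviation of block pool usage percentages can be finished is shown below. Assumptions: it uses the population form (divide by n, not n - 1), accumulates in `double` to limit float rounding, and takes a plain `float[]` instead of `StorageReport[]`; the class and method names are hypothetical. Sorting is not required for the standard deviation itself, so the sketch omits it.

```java
/** Hypothetical sketch: population std dev of per-volume usage percentages. */
public class UsageStdDev {

  static float stdDev(float[] usagePercents) {
    if (usagePercents.length == 0) {
      return 0.0f; // same early return as the hunk for an empty report array
    }
    double mean = 0.0;
    for (float p : usagePercents) {
      mean += p;
    }
    mean /= usagePercents.length;

    // Average squared distance from the mean, then the square root.
    double sumSq = 0.0;
    for (float p : usagePercents) {
      double d = p - mean;
      sumSq += d * d;
    }
    return (float) Math.sqrt(sumSq / usagePercents.length);
  }

  public static void main(String[] args) {
    // Evenly used volumes: the std dev is 0.
    System.out.println(stdDev(new float[] {40f, 40f, 40f, 40f})); // 0.0
    // Unevenly used volumes, e.g. after adding an empty disk: a larger value.
    System.out.println(stdDev(new float[] {10f, 20f, 30f, 40f}));
  }
}
```

For `{10, 20, 30, 40}` the mean is 25 and the squared deviations sum to 500, so the result is the square root of 125, roughly 11.18.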


Issue Time Tracking

---

Worklog Id: (was: 649982)

Time Spent: 3.5h (was: 3h 20m)


[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=649969=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-649969

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 13/Sep/21 12:16

Start Date: 13/Sep/21 12:16

Worklog Time Spent: 10m

Work Description: ferhui commented on a change in pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#discussion_r707277187

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/FSNamesystem.java

##

@@ -6537,14 +6537,45 @@ public String getLiveNodes() {
       if (node.getUpgradeDomain() != null) {
         innerinfo.put("upgradeDomain", node.getUpgradeDomain());
       }
+      StorageReport[] storageReports = node.getStorageReports();
+      innerinfo.put("blockPoolUsedPercentStdDev",
+          getBlockPoolUsedPercentStdDev(storageReports));
       info.put(node.getXferAddrWithHostname(), innerinfo.build());
     }
     return JSON.toString(info);
   }
+  /**
+   * Return the standard deviation of storage block pool usage.
+   */
+  @VisibleForTesting
+  public float getBlockPoolUsedPercentStdDev(StorageReport[] storageReports) {
+    ArrayList<Float> usagePercentList = new ArrayList<>();
+    float totalUsagePercent = 0.0f;
+    float dev = 0.0f;
+
+    if (storageReports.length == 0) {
+      return dev;
+    }
+
+    for (StorageReport s : storageReports) {
+      usagePercentList.add(s.getBlockPoolUsagePercent());
+      totalUsagePercent += s.getBlockPoolUsagePercent();
+    }
+
+    totalUsagePercent /= storageReports.length;
+    Collections.sort(usagePercentList);

Review comment:

@tomscut _assertEquals(String message, float expected, float actual, float delta)_: can you try this?
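A float computed by accumulation rarely matches a hand-written literal bit for bit, which is why a tolerance-based assertion is the safer choice in the unit test. The sketch below is a hypothetical stand-in modeled on the pass/fail rule of JUnit 4's `Assert.assertEquals(String message, float expected, float actual, float delta)`, written without a JUnit dependency so it runs on its own: it passes if `Float.compare` reports equality (which also treats NaN as equal to NaN) or if the values differ by at most `delta`.

```java
/** Hypothetical stand-in for a delta-based float assertion (no JUnit needed). */
public class FloatAssert {

  static void assertFloatEquals(String message, float expected, float actual, float delta) {
    if (Float.compare(expected, actual) == 0) {
      return; // exactly equal, including NaN == NaN
    }
    if (!(Math.abs(expected - actual) <= delta)) {
      throw new AssertionError(message + " expected:<" + expected + "> but was:<" + actual + ">");
    }
  }

  public static void main(String[] args) {
    // Adding 0.1f ten times is not guaranteed to produce exactly 1.0f,
    // so an exact comparison would be brittle.
    float sum = 0.0f;
    for (int i = 0; i < 10; i++) {
      sum += 0.1f;
    }
    assertFloatEquals("sum of ten 0.1f", 1.0f, sum, 1e-5f);
    System.out.println("within tolerance: " + sum);
  }
}
```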


Issue Time Tracking

---

Worklog Id: (was: 649969)

Time Spent: 3h 20m (was: 3h 10m)

> Discover datanodes with unbalanced block pool usage by the standard deviation

> -

>

> Key: HDFS-16203

> URL: https://issues.apache.org/jira/browse/HDFS-16203

> Project: Hadoop HDFS

> Issue Type: New Feature

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-09-01-19-16-27-172.png

>

> Time Spent: 3h 20m

> Remaining Estimate: 0h

>

> *Discover datanodes with unbalanced volume usage by the standard deviation.*

> *In some scenarios, we may cause unbalanced datanode disk usage:*

> 1. Repair the damaged disk and make it online again.

> 2. Add disks to some Datanodes.

> 3. Some disks are damaged, resulting in slow data writing.

> 4. Use some custom volume choosing policies.

> When disk usage is unbalanced, a sudden increase in datanode write traffic may keep the low-usage volumes busy with disk I/O, decreasing throughput across datanodes.

> We need to find these nodes promptly so that we can run diskBalance or handle them in other ways.

> From the usage of each volume on a datanode, we can calculate the standard deviation of that datanode's volume usage. The more unbalanced the volumes, the higher the standard deviation.

> *We can display the result on the namenode web UI and then sort by it directly to find the nodes whose volume usage is unbalanced.*

> *This interface is only used to obtain metrics and does not adversely affect namenode performance.*

>

> !image-2021-09-01-19-16-27-172.png|width=581,height=216!
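The per-node metric described above can be sketched as a population standard deviation over a node's volume usage percentages, σ = sqrt(Σ(pᵢ − μ)² / n). The sketch then sorts nodes in descending order so the most unbalanced come first. This is a minimal illustration under stated assumptions, not the code from the patch. It takes plain `float[]` percentages instead of `StorageReport` objects and accumulates in `double` to limit the ordering effects discussed in the review. The class name `UsageStdDevSketch` and the node names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: rank nodes by the spread of their volume usage. */
public class UsageStdDevSketch {

  /**
   * Population standard deviation of the given usage percentages.
   * Returns 0 for a node that reports no volumes.
   */
  static float stdDev(float[] usagePercents) {
    if (usagePercents.length == 0) {
      return 0.0f;
    }
    double sum = 0.0;
    for (float p : usagePercents) {
      sum += p;
    }
    double mean = sum / usagePercents.length;

    double squaredDiffs = 0.0;
    for (float p : usagePercents) {
      double diff = p - mean;
      squaredDiffs += diff * diff;
    }
    return (float) Math.sqrt(squaredDiffs / usagePercents.length);
  }

  /** Returns node names ordered from most to least unbalanced. */
  static List<String> mostUnbalancedFirst(Map<String, float[]> usageByNode) {
    Map<String, Float> devByNode = new LinkedHashMap<>();
    for (Map.Entry<String, float[]> e : usageByNode.entrySet()) {
      devByNode.put(e.getKey(), stdDev(e.getValue()));
    }
    List<String> nodes = new ArrayList<>(devByNode.keySet());
    // Descending by standard deviation; List.sort is stable for ties.
    nodes.sort((x, y) -> Float.compare(devByNode.get(y), devByNode.get(x)));
    return nodes;
  }

  public static void main(String[] args) {
    Map<String, float[]> usage = new LinkedHashMap<>();
    usage.put("dn-a", new float[] {50f, 50f, 50f}); // balanced, stdDev 0
    usage.put("dn-b", new float[] {0f, 100f});      // e.g. a newly added empty disk, stdDev 50
    usage.put("dn-c", new float[] {40f, 60f});      // stdDev 10
    System.out.println(mostUnbalancedFirst(usage)); // [dn-b, dn-c, dn-a]
  }
}
```

All three sample nodes have the same average usage (50%). Ranking by deviation rather than by overall usage is what surfaces `dn-b`.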


[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=649738=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-649738

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 12/Sep/21 20:20

Start Date: 12/Sep/21 20:20

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#issuecomment-917703816

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 40s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | jshint | 0m 1s | | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 44s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 20m 22s | | trunk passed |
| +1 :green_heart: | compile | 4m 58s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 4m 40s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 13s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 7s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 19s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 7s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 46s | | trunk passed |
| +1 :green_heart: | shadedclient | 14m 35s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 33s | | the patch passed |
| +1 :green_heart: | compile | 4m 49s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 4m 49s | | the patch passed |
| +1 :green_heart: | compile | 4m 28s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 4m 28s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 5s | | hadoop-hdfs-project: The patch generated 0 new + 117 unchanged - 9 fixed = 117 total (was 126) |
| +1 :green_heart: | mvnsite | 2m 36s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 55s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 2m 43s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 1s | | the patch passed |
| +1 :green_heart: | shadedclient | 14m 38s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 2m 23s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 234m 54s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/6/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| -1 :x: | unit | 20m 48s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/6/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt) | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 53s | | The patch does not generate ASF License warnings. |
| | | 378m 10s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.balancer.TestBalancerWithHANameNodes |
| | hadoop.hdfs.server.federation.router.TestRouterRPCClientRetries |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3366/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3366 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell

[jira] [Work logged] (HDFS-16203) Discover datanodes with unbalanced block pool usage by the standard deviation

[

https://issues.apache.org/jira/browse/HDFS-16203?focusedWorklogId=649706=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-649706

]

ASF GitHub Bot logged work on HDFS-16203:

-

Author: ASF GitHub Bot

Created on: 12/Sep/21 13:48

Start Date: 12/Sep/21 13:48

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3366:

URL: https://github.com/apache/hadoop/pull/3366#discussion_r706840884

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/FSNamesystem.java

##

@@ -6537,14 +6537,45 @@ public String getLiveNodes() {
       if (node.getUpgradeDomain() != null) {
         innerinfo.put("upgradeDomain", node.getUpgradeDomain());
       }
+      StorageReport[] storageReports = node.getStorageReports();
+      innerinfo.put("blockPoolUsedPercentStdDev",
+          getBlockPoolUsedPercentStdDev(storageReports));
       info.put(node.getXferAddrWithHostname(), innerinfo.build());
     }
     return JSON.toString(info);
   }
+  /**
+   * Return the standard deviation of storage block pool usage.
+   */
+  @VisibleForTesting
+  public float getBlockPoolUsedPercentStdDev(StorageReport[] storageReports) {
+    ArrayList<Float> usagePercentList = new ArrayList<>();
+    float totalUsagePercent = 0.0f;
+    float dev = 0.0f;
+
+    if (storageReports.length == 0) {
+      return dev;
+    }
+
+    for (StorageReport s : storageReports) {
+      usagePercentList.add(s.getBlockPoolUsagePercent());
+      totalUsagePercent += s.getBlockPoolUsagePercent();
+    }
+
+    totalUsagePercent /= storageReports.length;
+    Collections.sort(usagePercentList);

Review comment:

@ferhui A float or double may lose precision when it is evaluated. When multiple values are operated on in a different order, the results may be inconsistent. After removing ```Collections.sort(usagePercentList);```, I only