[jira] [Updated] (ARROW-2296) Add num_rows to file footer

[ https://issues.apache.org/jira/browse/ARROW-2296?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2296: - Description: Maybe I'm overlooking something, but I don't see something on the API surface to get the number of rows in a arrow file without reading all the record batches. This is useful when we want to read into contiguous buffers, because it allows us to allocate the right sizes up front. I'd like to propose that we add `num_rows` as a field in the file footer so it's easy to query without reading the whole file. Meanwhile, before we get that added to the official format fbs, it would be nice to have a method that iterates over the record batch headers and sums up the lengths without reading the actual record batch body. was: Maybe I'm overlooking something, but I don't see something on the API surface to get the number of rows in a arrow file without reading all the record batches. I'd like to propose that we add `num_rows` as a field in the file footer so it's easy to query without reading the whole file. Meanwhile, before we get that added to the official format fbs, it would be nice to have a method that iterates over the record batch headers and sums up the lengths without reading the actual record batch body. > Add num_rows to file footer > --- > > Key: ARROW-2296 > URL: https://issues.apache.org/jira/browse/ARROW-2296 > Project: Apache Arrow > Issue Type: Improvement > Components: C++, Format >Reporter: Lawrence Chan >Priority: Minor > > Maybe I'm overlooking something, but I don't see something on the API surface > to get the number of rows in a arrow file without reading all the record > batches. This is useful when we want to read into contiguous buffers, because > it allows us to allocate the right sizes up front. > I'd like to propose that we add `num_rows` as a field in the file footer so > it's easy to query without reading the whole file. > Meanwhile, before we get that added to the official format fbs, it would be > nice to have a method that iterates over the record batch headers and sums up > the lengths without reading the actual record batch body. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2296) Add num_rows to file footer

[ https://issues.apache.org/jira/browse/ARROW-2296?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2296: - Component/s: C++ > Add num_rows to file footer > --- > > Key: ARROW-2296 > URL: https://issues.apache.org/jira/browse/ARROW-2296 > Project: Apache Arrow > Issue Type: Improvement > Components: C++, Format >Reporter: Lawrence Chan >Priority: Minor > > Maybe I'm overlooking something, but I don't see something on the API surface > to get the number of rows in a arrow file without reading all the record > batches. > I'd like to propose that we add `num_rows` as a field to the footer so it's > easy to query without reading the whole file. > Meanwhile, before we get that added to the official format fbs, it would be > nice to have a method that iterates over the record batch headers and sums up > the lengths without reading the actual record batch body. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2296) Add num_rows to file footer

[ https://issues.apache.org/jira/browse/ARROW-2296?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2296: - Component/s: Format > Add num_rows to file footer > --- > > Key: ARROW-2296 > URL: https://issues.apache.org/jira/browse/ARROW-2296 > Project: Apache Arrow > Issue Type: Improvement > Components: C++, Format >Reporter: Lawrence Chan >Priority: Minor > > Maybe I'm overlooking something, but I don't see something on the API surface > to get the number of rows in a arrow file without reading all the record > batches. > I'd like to propose that we add `num_rows` as a field to the footer so it's > easy to query without reading the whole file. > Meanwhile, before we get that added to the official format fbs, it would be > nice to have a method that iterates over the record batch headers and sums up > the lengths without reading the actual record batch body. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Created] (ARROW-2296) Add num_rows to file footer

Lawrence Chan created ARROW-2296: Summary: Add num_rows to file footer Key: ARROW-2296 URL: https://issues.apache.org/jira/browse/ARROW-2296 Project: Apache Arrow Issue Type: Improvement Reporter: Lawrence Chan Maybe I'm overlooking something, but I don't see something on the API surface to get the number of rows in a arrow file without reading all the record batches. I'd like to propose that we add `num_rows` as a field to the footer so it's easy to query without reading the whole file. Meanwhile, before we get that added to the official format fbs, it would be nice to have a method that iterates over the record batch headers and sums up the lengths without reading the actual record batch body. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2296) Add num_rows to file footer

[ https://issues.apache.org/jira/browse/ARROW-2296?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2296: - Description: Maybe I'm overlooking something, but I don't see something on the API surface to get the number of rows in a arrow file without reading all the record batches. I'd like to propose that we add `num_rows` as a field in the file footer so it's easy to query without reading the whole file. Meanwhile, before we get that added to the official format fbs, it would be nice to have a method that iterates over the record batch headers and sums up the lengths without reading the actual record batch body. was: Maybe I'm overlooking something, but I don't see something on the API surface to get the number of rows in a arrow file without reading all the record batches. I'd like to propose that we add `num_rows` as a field to the footer so it's easy to query without reading the whole file. Meanwhile, before we get that added to the official format fbs, it would be nice to have a method that iterates over the record batch headers and sums up the lengths without reading the actual record batch body. > Add num_rows to file footer > --- > > Key: ARROW-2296 > URL: https://issues.apache.org/jira/browse/ARROW-2296 > Project: Apache Arrow > Issue Type: Improvement > Components: C++, Format >Reporter: Lawrence Chan >Priority: Minor > > Maybe I'm overlooking something, but I don't see something on the API surface > to get the number of rows in a arrow file without reading all the record > batches. > I'd like to propose that we add `num_rows` as a field in the file footer so > it's easy to query without reading the whole file. > Meanwhile, before we get that added to the official format fbs, it would be > nice to have a method that iterates over the record batch headers and sums up > the lengths without reading the actual record batch body. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

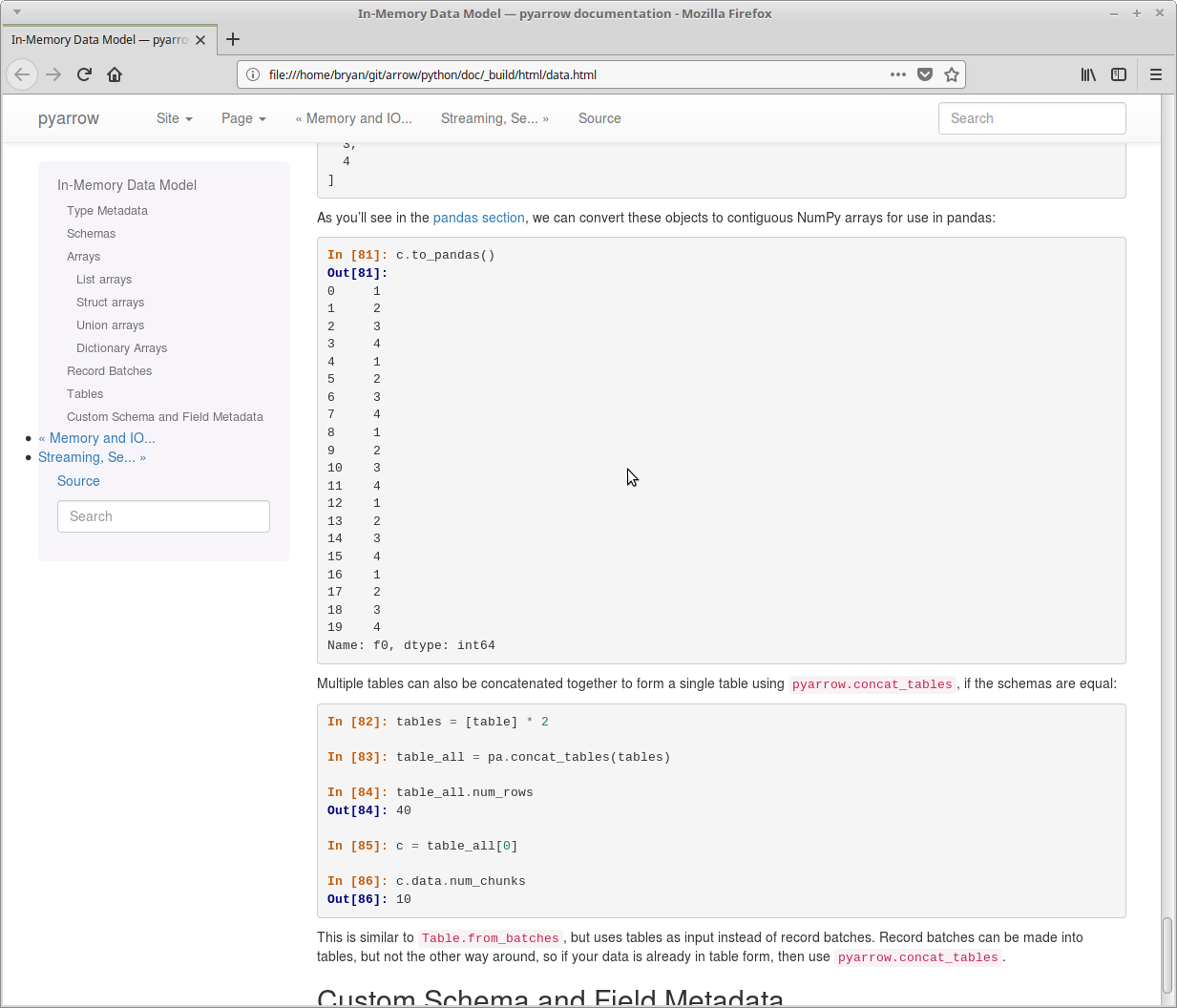

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393861#comment-16393861

]

ASF GitHub Bot commented on ARROW-2181:

---

BryanCutler commented on issue #1733: ARROW-2181: [PYTHON][DOC] Add doc on

usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733#issuecomment-371983425

Screen of doc changes

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2099) [Python] Support DictionaryArray::FromArrays in Python bindings

[

https://issues.apache.org/jira/browse/ARROW-2099?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393590#comment-16393590

]

ASF GitHub Bot commented on ARROW-2099:

---

wesm commented on a change in pull request #1734: ARROW-2099: [Python] Add safe

option to DictionaryArray.from_arrays to do boundschecking of indices by default

URL: https://github.com/apache/arrow/pull/1734#discussion_r173567908

##

File path: python/setup.py

##

@@ -208,8 +207,10 @@ def _run_cmake(self):

cmake_options.append('-DCMAKE_BUILD_TYPE={0}'

.format(self.build_type.lower()))

-cmake_options.append('-DBoost_NAMESPACE={}'.format(

-self.boost_namespace))

+

+if self.boost_namespace is not None:

+cmake_options.append('-DBoost_NAMESPACE={}'

+ .format(self.boost_namespace))

Review comment:

I added this to prevent a CMake warning when Boost isn't being bundled

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Support DictionaryArray::FromArrays in Python bindings

> ---

>

> Key: ARROW-2099

> URL: https://issues.apache.org/jira/browse/ARROW-2099

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Wes McKinney

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> Follow up work from ARROW-1757.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (ARROW-2099) [Python] Support DictionaryArray::FromArrays in Python bindings

[ https://issues.apache.org/jira/browse/ARROW-2099?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated ARROW-2099: -- Labels: pull-request-available (was: ) > [Python] Support DictionaryArray::FromArrays in Python bindings > --- > > Key: ARROW-2099 > URL: https://issues.apache.org/jira/browse/ARROW-2099 > Project: Apache Arrow > Issue Type: Improvement > Components: Python >Reporter: Wes McKinney >Assignee: Wes McKinney >Priority: Major > Labels: pull-request-available > Fix For: 0.9.0 > > > Follow up work from ARROW-1757. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-2099) [Python] Support DictionaryArray::FromArrays in Python bindings

[ https://issues.apache.org/jira/browse/ARROW-2099?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393588#comment-16393588 ] ASF GitHub Bot commented on ARROW-2099: --- wesm opened a new pull request #1734: ARROW-2099: [Python] Add safe option to DictionaryArray.from_arrays to do boundschecking of indices by default URL: https://github.com/apache/arrow/pull/1734 This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > [Python] Support DictionaryArray::FromArrays in Python bindings > --- > > Key: ARROW-2099 > URL: https://issues.apache.org/jira/browse/ARROW-2099 > Project: Apache Arrow > Issue Type: Improvement > Components: Python >Reporter: Wes McKinney >Assignee: Wes McKinney >Priority: Major > Labels: pull-request-available > Fix For: 0.9.0 > > > Follow up work from ARROW-1757. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2295) Add to_numpy functions

[ https://issues.apache.org/jira/browse/ARROW-2295?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2295: - Description: There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to propose that we include both. Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho is very confusing :). I think it would be more intuitive for the `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` objects, and the `to_numpy()` functions to return `numpy.ndarray` and either a dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a flag, for example. The `to_pandas()` function is of course welcome to use the `to_numpy()` func to avoid the additional index and whatnot of the `pandas.Series`. was: There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to propose that we include both. Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho is very confusing :). I think it would be more intuitive for the `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` objects, and the `to_numpy()` functions to return `numpy.ndarray` and either a dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a flag, for example. The `to_pandas()` function is of course welcome to use the `to_numpy()` func to avoid the additional indexes and whatnot of the `pandas.Series`. > Add to_numpy functions > -- > > Key: ARROW-2295 > URL: https://issues.apache.org/jira/browse/ARROW-2295 > Project: Apache Arrow > Issue Type: Improvement > Components: Python >Reporter: Lawrence Chan >Priority: Minor > > There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to > propose that we include both. > Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho > is very confusing :). I think it would be more intuitive for the > `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` > objects, and the `to_numpy()` functions to return `numpy.ndarray` and either > a dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a > flag, for example. The `to_pandas()` function is of course welcome to use the > `to_numpy()` func to avoid the additional index and whatnot of the > `pandas.Series`. > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2295) Add to_numpy functions

[ https://issues.apache.org/jira/browse/ARROW-2295?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Lawrence Chan updated ARROW-2295: - Description: There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to propose that we include both. Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho is very confusing :). I think it would be more intuitive for the `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` objects, and the `to_numpy()` functions to return `numpy.ndarray` and either a ordered dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a flag, for example. The `to_pandas()` function is of course welcome to use the `to_numpy()` func to avoid the additional index and whatnot of the `pandas.Series`. was: There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to propose that we include both. Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho is very confusing :). I think it would be more intuitive for the `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` objects, and the `to_numpy()` functions to return `numpy.ndarray` and either a dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a flag, for example. The `to_pandas()` function is of course welcome to use the `to_numpy()` func to avoid the additional index and whatnot of the `pandas.Series`. > Add to_numpy functions > -- > > Key: ARROW-2295 > URL: https://issues.apache.org/jira/browse/ARROW-2295 > Project: Apache Arrow > Issue Type: Improvement > Components: Python >Reporter: Lawrence Chan >Priority: Minor > > There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to > propose that we include both. > Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho > is very confusing :). I think it would be more intuitive for the > `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` > objects, and the `to_numpy()` functions to return `numpy.ndarray` and either > a ordered dict of `numpy.ndarray` or a structured `numpy.ndarray` depending > on a flag, for example. The `to_pandas()` function is of course welcome to > use the `to_numpy()` func to avoid the additional index and whatnot of the > `pandas.Series`. > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-1491) [C++] Add casting implementations from strings to numbers or boolean

[ https://issues.apache.org/jira/browse/ARROW-1491?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Wes McKinney updated ARROW-1491: Fix Version/s: (was: 0.9.0) 0.10.0 > [C++] Add casting implementations from strings to numbers or boolean > > > Key: ARROW-1491 > URL: https://issues.apache.org/jira/browse/ARROW-1491 > Project: Apache Arrow > Issue Type: New Feature > Components: C++ >Reporter: Wes McKinney >Assignee: Licht Takeuchi >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Created] (ARROW-2295) Add to_numpy functions

Lawrence Chan created ARROW-2295: Summary: Add to_numpy functions Key: ARROW-2295 URL: https://issues.apache.org/jira/browse/ARROW-2295 Project: Apache Arrow Issue Type: Improvement Components: Python Reporter: Lawrence Chan There are `to_pandas()` functions, but no `to_numpy()` functions. I'd like to propose that we include both. Also, `pyarrow.lib.Array.to_pandas()` returns a `numpy.ndarray`, which imho is very confusing :). I think it would be more intuitive for the `to_pandas()` functions to return `pandas.Series` and `pandas.DataFrame` objects, and the `to_numpy()` functions to return `numpy.ndarray` and either a dict of `numpy.ndarray` or a structured `numpy.ndarray` depending on a flag, for example. The `to_pandas()` function is of course welcome to use the `to_numpy()` func to avoid the additional indexes and whatnot of the `pandas.Series`. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2027) [C++] ipc::Message::SerializeTo does not pad the message body

[ https://issues.apache.org/jira/browse/ARROW-2027?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Wes McKinney updated ARROW-2027: Fix Version/s: (was: 0.9.0) 0.10.0 > [C++] ipc::Message::SerializeTo does not pad the message body > - > > Key: ARROW-2027 > URL: https://issues.apache.org/jira/browse/ARROW-2027 > Project: Apache Arrow > Issue Type: Bug > Components: C++ >Reporter: Wes McKinney >Assignee: Panchen Xue >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > > I just want to note this here as a follow-up to ARROW-1860. I think that > padding is the correct behavior, but I wasn't sure enough to make the fix > there -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-2027) [C++] ipc::Message::SerializeTo does not pad the message body

[ https://issues.apache.org/jira/browse/ARROW-2027?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393515#comment-16393515 ] Wes McKinney commented on ARROW-2027: - Deferring this to 0.10.0 to prioritize getting ARROW-1860, ARROW-1996 done > [C++] ipc::Message::SerializeTo does not pad the message body > - > > Key: ARROW-2027 > URL: https://issues.apache.org/jira/browse/ARROW-2027 > Project: Apache Arrow > Issue Type: Bug > Components: C++ >Reporter: Wes McKinney >Assignee: Panchen Xue >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > > I just want to note this here as a follow-up to ARROW-1860. I think that > padding is the correct behavior, but I wasn't sure enough to make the fix > there -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-2282) [Python] Create StringArray from buffers

[

https://issues.apache.org/jira/browse/ARROW-2282?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393510#comment-16393510

]

ASF GitHub Bot commented on ARROW-2282:

---

wesm commented on a change in pull request #1720: ARROW-2282: [Python] Create

StringArray from buffers

URL: https://github.com/apache/arrow/pull/1720#discussion_r173561454

##

File path: python/pyarrow/tests/test_array.py

##

@@ -258,6 +258,26 @@ def test_union_from_sparse():

assert result.to_pylist() == [b'a', 1, b'b', b'c', 2, 3, b'd']

+def test_string_from_buffers():

+array = pa.array(["a", None, "b", "c"])

+

+buffers = array.buffers()

+copied = pa.StringArray.from_buffers(

+len(array), buffers[1], buffers[2], buffers[0], array.null_count,

+array.offset)

+assert copied.to_pylist() == ["a", None, "b", "c"]

+

+copied = pa.StringArray.from_buffers(

+len(array), buffers[1], buffers[2], buffers[0])

+assert copied.to_pylist() == ["a", None, "b", "c"]

+

+sliced = array[1:]

+copied = pa.StringArray.from_buffers(

+len(sliced), buffers[1], buffers[2], buffers[0], -1, sliced.offset)

+buffers = array.buffers()

+assert copied.to_pylist() == [None, "b", "c"]

Review comment:

We need to add checks for the computed null count, and for the case where

the null bitmap is not passed

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Create StringArray from buffers

>

>

> Key: ARROW-2282

> URL: https://issues.apache.org/jira/browse/ARROW-2282

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Uwe L. Korn

>Assignee: Uwe L. Korn

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> While we will add a more general-purpose functionality in

> https://issues.apache.org/jira/browse/ARROW-2281, the interface is more

> complicate then the constructor that explicitly states all arguments:

> {{StringArray(int64_t length, const std::shared_ptr& value_offsets,

> …}}

> Thus I will also expose this explicit constructor.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Created] (ARROW-2294) Fix splitAndTransfer for variable width vector

Siddharth Teotia created ARROW-2294: --- Summary: Fix splitAndTransfer for variable width vector Key: ARROW-2294 URL: https://issues.apache.org/jira/browse/ARROW-2294 Project: Apache Arrow Issue Type: Bug Components: Java - Vectors Reporter: Siddharth Teotia Assignee: Siddharth Teotia When we splitAndTransfer a vector, the value count to set for the target vector should be equal to split length and not the value count of source vector. We have seen cases in operator slike FLATTEN and under low memory conditions, we end up allocating a lot more memory for the target vector because of using a large value in setValueCount after split and transfer is done. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393501#comment-16393501

]

ASF GitHub Bot commented on ARROW-2181:

---

BryanCutler commented on issue #1733: ARROW-2181: [PYTHON][DOC] Add doc on

usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733#issuecomment-371936444

Great thanks, I'll run it

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2262) [Python] Support slicing on pyarrow.ChunkedArray

[

https://issues.apache.org/jira/browse/ARROW-2262?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393495#comment-16393495

]

ASF GitHub Bot commented on ARROW-2262:

---

wesm commented on a change in pull request #1702: ARROW-2262: [Python] Support

slicing on pyarrow.ChunkedArray

URL: https://github.com/apache/arrow/pull/1702#discussion_r173559111

##

File path: python/pyarrow/table.pxi

##

@@ -77,6 +77,52 @@ cdef class ChunkedArray:

self._check_nullptr()

return self.chunked_array.null_count()

+def __getitem__(self, key):

+cdef int64_t item

+cdef int i

+self._check_nullptr()

+if isinstance(key, slice):

+return _normalize_slice(self, key)

+elif isinstance(key, six.integer_types):

+item = key

+if item >= self.chunked_array.length() or item < 0:

+return IndexError("ChunkedArray selection out of bounds")

Review comment:

Agreed, perhaps let's handle this as a follow up patch

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Support slicing on pyarrow.ChunkedArray

>

>

> Key: ARROW-2262

> URL: https://issues.apache.org/jira/browse/ARROW-2262

> Project: Apache Arrow

> Issue Type: New Feature

> Components: Python

>Reporter: Uwe L. Korn

>Assignee: Uwe L. Korn

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Resolved] (ARROW-2262) [Python] Support slicing on pyarrow.ChunkedArray

[ https://issues.apache.org/jira/browse/ARROW-2262?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Wes McKinney resolved ARROW-2262. - Resolution: Fixed Issue resolved by pull request 1702 [https://github.com/apache/arrow/pull/1702] > [Python] Support slicing on pyarrow.ChunkedArray > > > Key: ARROW-2262 > URL: https://issues.apache.org/jira/browse/ARROW-2262 > Project: Apache Arrow > Issue Type: New Feature > Components: Python >Reporter: Uwe L. Korn >Assignee: Uwe L. Korn >Priority: Major > Labels: pull-request-available > Fix For: 0.9.0 > > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-2262) [Python] Support slicing on pyarrow.ChunkedArray

[

https://issues.apache.org/jira/browse/ARROW-2262?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393497#comment-16393497

]

ASF GitHub Bot commented on ARROW-2262:

---

wesm closed pull request #1702: ARROW-2262: [Python] Support slicing on

pyarrow.ChunkedArray

URL: https://github.com/apache/arrow/pull/1702

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/python/pyarrow/includes/libarrow.pxd

b/python/pyarrow/includes/libarrow.pxd

index d95f01661..776b96531 100644

--- a/python/pyarrow/includes/libarrow.pxd

+++ b/python/pyarrow/includes/libarrow.pxd

@@ -387,6 +387,8 @@ cdef extern from "arrow/api.h" namespace "arrow" nogil:

int num_chunks()

shared_ptr[CArray] chunk(int i)

shared_ptr[CDataType] type()

+shared_ptr[CChunkedArray] Slice(int64_t offset, int64_t length) const

+shared_ptr[CChunkedArray] Slice(int64_t offset) const

cdef cppclass CColumn" arrow::Column":

CColumn(const shared_ptr[CField]& field,

diff --git a/python/pyarrow/table.pxi b/python/pyarrow/table.pxi

index c27c0edd9..94041e465 100644

--- a/python/pyarrow/table.pxi

+++ b/python/pyarrow/table.pxi

@@ -77,6 +77,52 @@ cdef class ChunkedArray:

self._check_nullptr()

return self.chunked_array.null_count()

+def __getitem__(self, key):

+cdef int64_t item

+cdef int i

+self._check_nullptr()

+if isinstance(key, slice):

+return _normalize_slice(self, key)

+elif isinstance(key, six.integer_types):

+item = key

+if item >= self.chunked_array.length() or item < 0:

+return IndexError("ChunkedArray selection out of bounds")

+for i in range(self.num_chunks):

+if item < self.chunked_array.chunk(i).get().length():

+return self.chunk(i)[item]

+else:

+item -= self.chunked_array.chunk(i).get().length()

+else:

+raise TypeError("key must either be a slice or integer")

+

+def slice(self, offset=0, length=None):

+"""

+Compute zero-copy slice of this ChunkedArray

+

+Parameters

+--

+offset : int, default 0

+Offset from start of array to slice

+length : int, default None

+Length of slice (default is until end of batch starting from

+offset)

+

+Returns

+---

+sliced : ChunkedArray

+"""

+cdef shared_ptr[CChunkedArray] result

+

+if offset < 0:

+raise IndexError('Offset must be non-negative')

+

+if length is None:

+result = self.chunked_array.Slice(offset)

+else:

+result = self.chunked_array.Slice(offset, length)

+

+return pyarrow_wrap_chunked_array(result)

+

@property

def num_chunks(self):

"""

diff --git a/python/pyarrow/tests/test_table.py

b/python/pyarrow/tests/test_table.py

index e72761d32..356ecb7e0 100644

--- a/python/pyarrow/tests/test_table.py

+++ b/python/pyarrow/tests/test_table.py

@@ -24,6 +24,21 @@

import pyarrow as pa

+def test_chunked_array_getitem():

+data = [

+pa.array([1, 2, 3]),

+pa.array([4, 5, 6])

+]

+data = pa.chunked_array(data)

+assert data[1].as_py() == 2

+

+data_slice = data[2:4]

+assert data_slice.to_pylist() == [3, 4]

+

+data_slice = data[4:-1]

+assert data_slice.to_pylist() == [5]

+

+

def test_column_basics():

data = [

pa.array([-10, -5, 0, 5, 10])

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Support slicing on pyarrow.ChunkedArray

>

>

> Key: ARROW-2262

> URL: https://issues.apache.org/jira/browse/ARROW-2262

> Project: Apache Arrow

> Issue Type: New Feature

> Components: Python

>Reporter: Uwe L. Korn

>Assignee: Uwe L. Korn

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393494#comment-16393494

]

ASF GitHub Bot commented on ARROW-2181:

---

BryanCutler commented on a change in pull request #1733: ARROW-2181:

[PYTHON][DOC] Add doc on usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733#discussion_r173559109

##

File path: python/doc/source/data.rst

##

@@ -393,6 +393,22 @@ objects to contiguous NumPy arrays for use in pandas:

c.to_pandas()

+Multiple tables can also be concatenated together to form a single table using

+``pa.concat_tables``, if the schemas are equal:

+

+.. ipython:: python

+

+ tables = [table] * 2

+ table_all = pa.concat_tables(tables)

+ table_all.num_rows

+ c = table_all[0]

+ c.data.num_chunks

+

+This is similar to ``Table.from_batches``, but uses tables as input instead of

+record batches. Record batches can be made into tables, but not the other way

+around, so if your data is already in table form, then use

+``pa.concat_tables``.

Review comment:

Sure, will do

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (ARROW-2193) [Plasma] plasma_store has runtime dependency on Boost shared libraries when ARROW_BOOST_USE_SHARED=on

[

https://issues.apache.org/jira/browse/ARROW-2193?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Wes McKinney updated ARROW-2193:

Fix Version/s: (was: 0.9.0)

0.10.0

> [Plasma] plasma_store has runtime dependency on Boost shared libraries when

> ARROW_BOOST_USE_SHARED=on

> -

>

> Key: ARROW-2193

> URL: https://issues.apache.org/jira/browse/ARROW-2193

> Project: Apache Arrow

> Issue Type: Bug

> Components: Plasma (C++)

>Reporter: Antoine Pitrou

>Assignee: Wes McKinney

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.10.0

>

>

> I'm not sure why, but when I run the pyarrow test suite (for example

> {{py.test pyarrow/tests/test_plasma.py}}), plasma_store forks endlessly:

> {code:bash}

> $ ps fuwww

> USER PID %CPU %MEMVSZ RSS TTY STAT START TIME COMMAND

> [...]

> antoine 27869 12.0 0.4 863208 68976 pts/7S13:41 0:01

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27885 13.0 0.4 863076 68560 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27901 12.1 0.4 863076 68320 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27920 13.6 0.4 863208 68868 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> [etc.]

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393467#comment-16393467

]

ASF GitHub Bot commented on ARROW-2181:

---

wesm commented on issue #1733: ARROW-2181: [PYTHON][DOC] Add doc on usage of

concat_tables

URL: https://github.com/apache/arrow/pull/1733#issuecomment-371930766

see

https://github.com/apache/arrow/tree/master/python#building-the-documentation

-- if you are able to run the test suite / build the project then you can run

those commands

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2288) [Python] slicing logic defective

[

https://issues.apache.org/jira/browse/ARROW-2288?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393464#comment-16393464

]

ASF GitHub Bot commented on ARROW-2288:

---

wesm closed pull request #1723: ARROW-2288: [Python] Fix slicing logic

URL: https://github.com/apache/arrow/pull/1723

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/python/pyarrow/array.pxi b/python/pyarrow/array.pxi

index e785c0ec5..cc65c0771 100644

--- a/python/pyarrow/array.pxi

+++ b/python/pyarrow/array.pxi

@@ -205,15 +205,25 @@ def asarray(values, type=None):

def _normalize_slice(object arrow_obj, slice key):

-cdef Py_ssize_t n = len(arrow_obj)

+cdef:

+Py_ssize_t start, stop, step

+Py_ssize_t n = len(arrow_obj)

start = key.start or 0

-while start < 0:

+if start < 0:

start += n

+if start < 0:

+start = 0

+elif start >= n:

+start = n

stop = key.stop if key.stop is not None else n

-while stop < 0:

+if stop < 0:

stop += n

+if stop < 0:

+stop = 0

+elif stop >= n:

+stop = n

step = key.step or 1

if step != 1:

diff --git a/python/pyarrow/tests/test_array.py

b/python/pyarrow/tests/test_array.py

index f034d78b3..4a337ad23 100644

--- a/python/pyarrow/tests/test_array.py

+++ b/python/pyarrow/tests/test_array.py

@@ -132,17 +132,18 @@ def test_array_slice():

# Test slice notation

assert arr[2:].equals(arr.slice(2))

-

assert arr[2:5].equals(arr.slice(2, 3))

-

assert arr[-5:].equals(arr.slice(len(arr) - 5))

-

with pytest.raises(IndexError):

arr[::-1]

-

with pytest.raises(IndexError):

arr[::2]

+n = len(arr)

+for start in range(-n * 2, n * 2):

+for stop in range(-n * 2, n * 2):

+assert arr[start:stop].to_pylist() == arr.to_pylist()[start:stop]

+

def test_array_factory_invalid_type():

arr = np.array([datetime.timedelta(1), datetime.timedelta(2)])

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] slicing logic defective

>

>

> Key: ARROW-2288

> URL: https://issues.apache.org/jira/browse/ARROW-2288

> Project: Apache Arrow

> Issue Type: Bug

> Components: Python

>Affects Versions: 0.8.0

>Reporter: Antoine Pitrou

>Assignee: Antoine Pitrou

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> The slicing logic tends to go too far when normalizing large negative bounds,

> which leads to results not in line with Python's slicing semantics:

> {code}

> >>> arr = pa.array([1,2,3,4])

> >>> arr[-99:100]

>

> [

> 2,

> 3,

> 4

> ]

> >>> arr.to_pylist()[-99:100]

> [1, 2, 3, 4]

> >>>

> >>>

> >>> arr[-6:-5]

>

> [

> 3

> ]

> >>> arr.to_pylist()[-6:-5]

> []

> {code}

> Also note this crash:

> {code}

> >>> arr[10:13]

> /home/antoine/arrow/cpp/src/arrow/array.cc:105 Check failed: (offset) <=

> (data.length)

> Abandon (core dumped)

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Resolved] (ARROW-2288) [Python] slicing logic defective

[

https://issues.apache.org/jira/browse/ARROW-2288?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Wes McKinney resolved ARROW-2288.

-

Resolution: Fixed

Fix Version/s: 0.9.0

Issue resolved by pull request 1723

[https://github.com/apache/arrow/pull/1723]

> [Python] slicing logic defective

>

>

> Key: ARROW-2288

> URL: https://issues.apache.org/jira/browse/ARROW-2288

> Project: Apache Arrow

> Issue Type: Bug

> Components: Python

>Affects Versions: 0.8.0

>Reporter: Antoine Pitrou

>Assignee: Antoine Pitrou

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> The slicing logic tends to go too far when normalizing large negative bounds,

> which leads to results not in line with Python's slicing semantics:

> {code}

> >>> arr = pa.array([1,2,3,4])

> >>> arr[-99:100]

>

> [

> 2,

> 3,

> 4

> ]

> >>> arr.to_pylist()[-99:100]

> [1, 2, 3, 4]

> >>>

> >>>

> >>> arr[-6:-5]

>

> [

> 3

> ]

> >>> arr.to_pylist()[-6:-5]

> []

> {code}

> Also note this crash:

> {code}

> >>> arr[10:13]

> /home/antoine/arrow/cpp/src/arrow/array.cc:105 Check failed: (offset) <=

> (data.length)

> Abandon (core dumped)

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393463#comment-16393463

]

ASF GitHub Bot commented on ARROW-2181:

---

wesm commented on a change in pull request #1733: ARROW-2181: [PYTHON][DOC] Add

doc on usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733#discussion_r173553957

##

File path: python/doc/source/data.rst

##

@@ -393,6 +393,22 @@ objects to contiguous NumPy arrays for use in pandas:

c.to_pandas()

+Multiple tables can also be concatenated together to form a single table using

+``pa.concat_tables``, if the schemas are equal:

+

+.. ipython:: python

+

+ tables = [table] * 2

+ table_all = pa.concat_tables(tables)

+ table_all.num_rows

+ c = table_all[0]

+ c.data.num_chunks

+

+This is similar to ``Table.from_batches``, but uses tables as input instead of

+record batches. Record batches can be made into tables, but not the other way

+around, so if your data is already in table form, then use

+``pa.concat_tables``.

Review comment:

Can you spell out `pyarrow` here and above? We might turn these into API

links later

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2193) [Plasma] plasma_store has runtime dependency on Boost shared libraries when ARROW_BOOST_USE_SHARED=on

[

https://issues.apache.org/jira/browse/ARROW-2193?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393461#comment-16393461

]

ASF GitHub Bot commented on ARROW-2193:

---

wesm commented on issue #1711: WIP ARROW-2193: [C++] Do not depend on Boost

libraries at runtime in plasma_store

URL: https://github.com/apache/arrow/pull/1711#issuecomment-371929957

@xhochy It seems that `CHECK_CXX_COMPILER_FLAG` doesn't turn up the right

answer on macOS. I'm afraid we'll have to leave this PR in WIP unless someone

else can figure this out for 0.9.0

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Plasma] plasma_store has runtime dependency on Boost shared libraries when

> ARROW_BOOST_USE_SHARED=on

> -

>

> Key: ARROW-2193

> URL: https://issues.apache.org/jira/browse/ARROW-2193

> Project: Apache Arrow

> Issue Type: Bug

> Components: Plasma (C++)

>Reporter: Antoine Pitrou

>Assignee: Wes McKinney

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> I'm not sure why, but when I run the pyarrow test suite (for example

> {{py.test pyarrow/tests/test_plasma.py}}), plasma_store forks endlessly:

> {code:bash}

> $ ps fuwww

> USER PID %CPU %MEMVSZ RSS TTY STAT START TIME COMMAND

> [...]

> antoine 27869 12.0 0.4 863208 68976 pts/7S13:41 0:01

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27885 13.0 0.4 863076 68560 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27901 12.1 0.4 863076 68320 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27920 13.6 0.4 863208 68868 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> [etc.]

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2193) [Plasma] plasma_store has runtime dependency on Boost shared libraries when ARROW_BOOST_USE_SHARED=on

[

https://issues.apache.org/jira/browse/ARROW-2193?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393456#comment-16393456

]

ASF GitHub Bot commented on ARROW-2193:

---

wesm commented on issue #1711: WIP ARROW-2193: [C++] Do not depend on Boost

libraries at runtime in plasma_store

URL: https://github.com/apache/arrow/pull/1711#issuecomment-371928949

I am not sure why libboost_regex is still a runtime dependency, even with

`--as-needed` -- Plasma doesn't appear to have any symbols with a transitive

dependency on code in arrow/util/decimal.cc (where boost::regex is used)

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Plasma] plasma_store has runtime dependency on Boost shared libraries when

> ARROW_BOOST_USE_SHARED=on

> -

>

> Key: ARROW-2193

> URL: https://issues.apache.org/jira/browse/ARROW-2193

> Project: Apache Arrow

> Issue Type: Bug

> Components: Plasma (C++)

>Reporter: Antoine Pitrou

>Assignee: Wes McKinney

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> I'm not sure why, but when I run the pyarrow test suite (for example

> {{py.test pyarrow/tests/test_plasma.py}}), plasma_store forks endlessly:

> {code:bash}

> $ ps fuwww

> USER PID %CPU %MEMVSZ RSS TTY STAT START TIME COMMAND

> [...]

> antoine 27869 12.0 0.4 863208 68976 pts/7S13:41 0:01

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27885 13.0 0.4 863076 68560 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27901 12.1 0.4 863076 68320 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> antoine 27920 13.6 0.4 863208 68868 pts/7S13:41 0:01 \_

> /home/antoine/miniconda3/envs/pyarrow/bin/python

> /home/antoine/arrow/python/pyarrow/plasma_store -s /tmp/plasma_store40209423

> -m 1

> [etc.]

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393449#comment-16393449

]

ASF GitHub Bot commented on ARROW-2181:

---

BryanCutler commented on issue #1733: ARROW-2181: [PYTHON][DOC] Add doc on

usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733#issuecomment-371926208

cc @wesm @xhochy , I didn't get a chance to build the docs to try this out

yet. Is it done with the gen_api_docs docker image, or is there another easier

way to do it?

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393442#comment-16393442

]

ASF GitHub Bot commented on ARROW-2181:

---

BryanCutler opened a new pull request #1733: ARROW-2181: [PYTHON][DOC] Add doc

on usage of concat_tables

URL: https://github.com/apache/arrow/pull/1733

Adding Python API doc on usage of pa.concat_tables.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (ARROW-2181) [Python] Add concat_tables to API reference, add documentation on use

[

https://issues.apache.org/jira/browse/ARROW-2181?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated ARROW-2181:

--

Labels: pull-request-available (was: )

> [Python] Add concat_tables to API reference, add documentation on use

> -

>

> Key: ARROW-2181

> URL: https://issues.apache.org/jira/browse/ARROW-2181

> Project: Apache Arrow

> Issue Type: Improvement

> Components: Python

>Reporter: Wes McKinney

>Assignee: Bryan Cutler

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> This omission of documentation was mentioned on the mailing list on February

> 13. The documentation should illustrate the contrast between

> {{Table.from_batches}} and {{concat_tables}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2291) [C++] README missing instructions for libboost-regex-dev

[ https://issues.apache.org/jira/browse/ARROW-2291?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393321#comment-16393321 ] ASF GitHub Bot commented on ARROW-2291: --- wesm closed pull request #1732: ARROW-2291: [C++] Add additional libboost-regex-dev to build instructions in README URL: https://github.com/apache/arrow/pull/1732 This is a PR merged from a forked repository. As GitHub hides the original diff on merge, it is displayed below for the sake of provenance: As this is a foreign pull request (from a fork), the diff is supplied below (as it won't show otherwise due to GitHub magic): diff --git a/cpp/README.md b/cpp/README.md index daeeade72..8018efd9e 100644 --- a/cpp/README.md +++ b/cpp/README.md @@ -35,6 +35,7 @@ On Ubuntu/Debian you can install the requirements with: ```shell sudo apt-get install cmake \ libboost-dev \ + libboost-regex-dev \ libboost-filesystem-dev \ libboost-system-dev ``` This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > [C++] README missing instructions for libboost-regex-dev > > > Key: ARROW-2291 > URL: https://issues.apache.org/jira/browse/ARROW-2291 > Project: Apache Arrow > Issue Type: Improvement > Components: C++ > Environment: Ubuntu 16.04 >Reporter: Andy Grove >Assignee: Andy Grove >Priority: Trivial > Labels: pull-request-available > Fix For: 0.9.0 > > > After following the instructions in the README, I could not generate a > makefile using CMake because of a missing dependency. > The README needs to be updated to include installing libboost-regex-dev. > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (ARROW-2291) [C++] README missing instructions for libboost-regex-dev

[ https://issues.apache.org/jira/browse/ARROW-2291?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated ARROW-2291: -- Labels: pull-request-available (was: ) > [C++] README missing instructions for libboost-regex-dev > > > Key: ARROW-2291 > URL: https://issues.apache.org/jira/browse/ARROW-2291 > Project: Apache Arrow > Issue Type: Improvement > Components: C++ > Environment: Ubuntu 16.04 >Reporter: Andy Grove >Assignee: Andy Grove >Priority: Trivial > Labels: pull-request-available > Fix For: 0.9.0 > > > After following the instructions in the README, I could not generate a > makefile using CMake because of a missing dependency. > The README needs to be updated to include installing libboost-regex-dev. > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Created] (ARROW-2293) [JS] Print release vote e-mail template when making source release

Wes McKinney created ARROW-2293: --- Summary: [JS] Print release vote e-mail template when making source release Key: ARROW-2293 URL: https://issues.apache.org/jira/browse/ARROW-2293 Project: Apache Arrow Issue Type: Improvement Components: JavaScript Reporter: Wes McKinney This would help with streamlining the source release process. See https://github.com/apache/parquet-cpp/blob/master/dev/release/release-candidate for an example -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (ARROW-1974) [Python] Segfault when working with Arrow tables with duplicate columns

[

https://issues.apache.org/jira/browse/ARROW-1974?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393313#comment-16393313

]

ASF GitHub Bot commented on ARROW-1974:

---

cpcloud commented on a change in pull request #447: ARROW-1974: Fix creating

Arrow table with duplicate column names

URL: https://github.com/apache/parquet-cpp/pull/447#discussion_r173526240

##

File path: src/parquet/schema.h

##

@@ -264,8 +264,11 @@ class PARQUET_EXPORT GroupNode : public Node {

bool Equals(const Node* other) const override;

NodePtr field(int i) const { return fields_[i]; }

+ // Get the index of a field by its name, or negative value if not found

+ // If several fields share the same name, the smallest index is returned

Review comment:

Right, makes sense.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Segfault when working with Arrow tables with duplicate columns

> ---

>

> Key: ARROW-1974

> URL: https://issues.apache.org/jira/browse/ARROW-1974

> Project: Apache Arrow

> Issue Type: Bug

> Components: C++, Python

>Affects Versions: 0.8.0

> Environment: Linux Mint 18.2

> Anaconda Python distribution + pyarrow installed from the conda-forge channel

>Reporter: Alexey Strokach

>Assignee: Antoine Pitrou

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> I accidentally created a large number of Parquet files with two

> __index_level_0__ columns (through a Spark SQL query).

> PyArrow can read these files into tables, but it segfaults when converting

> the resulting tables to Pandas DataFrames or when saving the tables to

> Parquet files.

> {code:none}

> # Duplicate columns cause segmentation faults

> table = pq.read_table('/path/to/duplicate_column_file.parquet')

> table.to_pandas() # Segmentation fault

> pq.write_table(table, '/some/output.parquet') # Segmentation fault

> {code}

> If I remove the duplicate column using table.remove_column(...) everything

> works without segfaults.

> {code:none}

> # After removing duplicate columns, everything works fine

> table = pq.read_table('/path/to/duplicate_column_file.parquet')

> table.remove_column(34)

> table.to_pandas() # OK

> pq.write_table(table, '/some/output.parquet') # OK

> {code}

> For more concrete examples, see `test_segfault_1.py` and `test_segfault_2.py`

> here: https://gitlab.com/ostrokach/pyarrow_duplicate_column_errors.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2236) [JS] Add more complete set of predicates

[

https://issues.apache.org/jira/browse/ARROW-2236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393062#comment-16393062

]

ASF GitHub Bot commented on ARROW-2236:

---

wesm commented on issue #1683: ARROW-2236: [JS] Add more complete set of

predicates

URL: https://github.com/apache/arrow/pull/1683#issuecomment-371856273

Nope, it should be pretty straightforward. It might be nice to have the

release script generate an e-mail template like

https://github.com/apache/parquet-cpp/blob/master/dev/release/release-candidate#L257

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [JS] Add more complete set of predicates

>

>

> Key: ARROW-2236

> URL: https://issues.apache.org/jira/browse/ARROW-2236

> Project: Apache Arrow

> Issue Type: Task

> Components: JavaScript

>Reporter: Brian Hulette

>Assignee: Brian Hulette

>Priority: Major

> Labels: pull-request-available

> Fix For: JS-0.4.0

>

>

> Right now {{arrow.predicate}} only supports ==, >=, <=, &&, and ||

> We should also support !=, <, > at the very least

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2275) [C++] Buffer::mutable_data_ member uninitialized

[

https://issues.apache.org/jira/browse/ARROW-2275?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393057#comment-16393057

]

ASF GitHub Bot commented on ARROW-2275:

---

wesm commented on a change in pull request #1717: ARROW-2275: [C++] Guard

against bad use of Buffer.mutable_data()

URL: https://github.com/apache/arrow/pull/1717#discussion_r173491445

##

File path: cpp/src/arrow/buffer.h

##

@@ -54,7 +54,11 @@ class ARROW_EXPORT Buffer {

///

/// \note The passed memory must be kept alive through some other means

Buffer(const uint8_t* data, int64_t size)

- : is_mutable_(false), data_(data), size_(size), capacity_(size) {}

+ : is_mutable_(false),

+data_(data),

+mutable_data_(nullptr),

Review comment:

`nullptr` is incompatible C++/CLI, which I believe is a way for C# code to

link to C++ libraries. see

https://msdn.microsoft.com/en-us/library/4ex65770.aspx

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [C++] Buffer::mutable_data_ member uninitialized

>

>

> Key: ARROW-2275

> URL: https://issues.apache.org/jira/browse/ARROW-2275

> Project: Apache Arrow

> Issue Type: Bug

> Components: C++

>Affects Versions: 0.8.0

>Reporter: Antoine Pitrou

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> For immutable buffers (i.e. most of them), the {{mutable_data_}} member is

> uninitialized. If the user calls {{mutable_data()}} by mistake on such a

> buffer, they will get a bogus pointer back.

> This is exacerbated by the Tensor API whose const and non-const

> {{raw_data()}} methods return different things...

> (also an idea: add a DCHECK for mutability before returning from

> {{mutable_data()}}?)

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2236) [JS] Add more complete set of predicates

[

https://issues.apache.org/jira/browse/ARROW-2236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393044#comment-16393044

]

ASF GitHub Bot commented on ARROW-2236:

---

TheNeuralBit commented on issue #1683: ARROW-2236: [JS] Add more complete set

of predicates

URL: https://github.com/apache/arrow/pull/1683#issuecomment-371852964

Thanks Wes! Anything I can do to help out with the release process?

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [JS] Add more complete set of predicates

>

>

> Key: ARROW-2236

> URL: https://issues.apache.org/jira/browse/ARROW-2236

> Project: Apache Arrow

> Issue Type: Task

> Components: JavaScript

>Reporter: Brian Hulette

>Assignee: Brian Hulette

>Priority: Major

> Labels: pull-request-available

> Fix For: JS-0.4.0

>

>

> Right now {{arrow.predicate}} only supports ==, >=, <=, &&, and ||

> We should also support !=, <, > at the very least

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2275) [C++] Buffer::mutable_data_ member uninitialized

[

https://issues.apache.org/jira/browse/ARROW-2275?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393035#comment-16393035

]

ASF GitHub Bot commented on ARROW-2275:

---

pitrou commented on a change in pull request #1717: ARROW-2275: [C++] Guard

against bad use of Buffer.mutable_data()

URL: https://github.com/apache/arrow/pull/1717#discussion_r173487404

##

File path: cpp/src/arrow/buffer.h

##

@@ -54,7 +54,11 @@ class ARROW_EXPORT Buffer {

///

/// \note The passed memory must be kept alive through some other means

Buffer(const uint8_t* data, int64_t size)

- : is_mutable_(false), data_(data), size_(size), capacity_(size) {}

+ : is_mutable_(false),

+data_(data),

+mutable_data_(nullptr),

Review comment:

Can you expand on the NULLPTR macro? Does it do something more then

`nullptr`?

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [C++] Buffer::mutable_data_ member uninitialized

>

>

> Key: ARROW-2275

> URL: https://issues.apache.org/jira/browse/ARROW-2275

> Project: Apache Arrow

> Issue Type: Bug

> Components: C++

>Affects Versions: 0.8.0

>Reporter: Antoine Pitrou

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> For immutable buffers (i.e. most of them), the {{mutable_data_}} member is

> uninitialized. If the user calls {{mutable_data()}} by mistake on such a

> buffer, they will get a bogus pointer back.

> This is exacerbated by the Tensor API whose const and non-const

> {{raw_data()}} methods return different things...

> (also an idea: add a DCHECK for mutability before returning from

> {{mutable_data()}}?)

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-1974) [Python] Segfault when working with Arrow tables with duplicate columns

[

https://issues.apache.org/jira/browse/ARROW-1974?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393031#comment-16393031

]

ASF GitHub Bot commented on ARROW-1974:

---

pitrou commented on a change in pull request #447: ARROW-1974: Fix creating

Arrow table with duplicate column names

URL: https://github.com/apache/parquet-cpp/pull/447#discussion_r173486366

##

File path: src/parquet/schema.h

##

@@ -264,8 +264,11 @@ class PARQUET_EXPORT GroupNode : public Node {

bool Equals(const Node* other) const override;

NodePtr field(int i) const { return fields_[i]; }

+ // Get the index of a field by its name, or negative value if not found

+ // If several fields share the same name, the smallest index is returned

Review comment:

Yes, it was, it just wasn't necessarily the one expected by the caller

according to its semantics.

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [Python] Segfault when working with Arrow tables with duplicate columns

> ---

>

> Key: ARROW-1974

> URL: https://issues.apache.org/jira/browse/ARROW-1974

> Project: Apache Arrow

> Issue Type: Bug

> Components: C++, Python

>Affects Versions: 0.8.0

> Environment: Linux Mint 18.2

> Anaconda Python distribution + pyarrow installed from the conda-forge channel

>Reporter: Alexey Strokach

>Assignee: Antoine Pitrou

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.9.0

>

>

> I accidentally created a large number of Parquet files with two

> __index_level_0__ columns (through a Spark SQL query).

> PyArrow can read these files into tables, but it segfaults when converting

> the resulting tables to Pandas DataFrames or when saving the tables to

> Parquet files.

> {code:none}

> # Duplicate columns cause segmentation faults

> table = pq.read_table('/path/to/duplicate_column_file.parquet')

> table.to_pandas() # Segmentation fault

> pq.write_table(table, '/some/output.parquet') # Segmentation fault

> {code}

> If I remove the duplicate column using table.remove_column(...) everything

> works without segfaults.

> {code:none}

> # After removing duplicate columns, everything works fine

> table = pq.read_table('/path/to/duplicate_column_file.parquet')

> table.remove_column(34)

> table.to_pandas() # OK

> pq.write_table(table, '/some/output.parquet') # OK

> {code}

> For more concrete examples, see `test_segfault_1.py` and `test_segfault_2.py`

> here: https://gitlab.com/ostrokach/pyarrow_duplicate_column_errors.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2236) [JS] Add more complete set of predicates

[

https://issues.apache.org/jira/browse/ARROW-2236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393011#comment-16393011

]

ASF GitHub Bot commented on ARROW-2236:

---

wesm commented on issue #1683: ARROW-2236: [JS] Add more complete set of

predicates

URL: https://github.com/apache/arrow/pull/1683#issuecomment-371846331

I'll get going on a 0.3.1 RC

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> [JS] Add more complete set of predicates

>

>

> Key: ARROW-2236

> URL: https://issues.apache.org/jira/browse/ARROW-2236

> Project: Apache Arrow

> Issue Type: Task

> Components: JavaScript

>Reporter: Brian Hulette

>Assignee: Brian Hulette

>Priority: Major

> Labels: pull-request-available

> Fix For: JS-0.4.0

>

>

> Right now {{arrow.predicate}} only supports ==, >=, <=, &&, and ||

> We should also support !=, <, > at the very least

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (ARROW-2236) [JS] Add more complete set of predicates

[

https://issues.apache.org/jira/browse/ARROW-2236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16393009#comment-16393009

]

ASF GitHub Bot commented on ARROW-2236:

---

wesm closed pull request #1683: ARROW-2236: [JS] Add more complete set of

predicates

URL: https://github.com/apache/arrow/pull/1683

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/js/src/Arrow.externs.js b/js/src/Arrow.externs.js

index cf4db9134..be89be152 100644

--- a/js/src/Arrow.externs.js

+++ b/js/src/Arrow.externs.js

@@ -74,17 +74,24 @@ var custom = function () {};

var Value = function() {};

/** @type {?} */

-Value.prototype.gteq;

+Value.prototype.ge;

/** @type {?} */

-Value.prototype.lteq;

+Value.prototype.le;

/** @type {?} */

Value.prototype.eq;

+/** @type {?} */

+Value.prototype.lt;

+/** @type {?} */

+Value.prototype.gt;

+/** @type {?} */

+Value.prototype.ne;

var Col = function() {};

/** @type {?} */

Col.prototype.bind;

var Or = function() {};

var And = function() {};

+var Not = function() {};

var GTeq = function () {};

/** @type {?} */

GTeq.prototype.and;

@@ -108,6 +115,8 @@ Predicate.prototype.and;

/** @type {?} */

Predicate.prototype.or;

/** @type {?} */

+Predicate.prototype.not;

+/** @type {?} */

Predicate.prototype.ands;

var Literal = function() {};

@@ -209,6 +218,8 @@ Int128.prototype.plus

/** @type {?} */

Int128.prototype.hex

+var packBools = function() {};

+

var Type = function() {};

/** @type {?} */

Type.NONE = function() {};

diff --git a/js/src/Arrow.ts b/js/src/Arrow.ts

index 4a0a2ac6d..23e8b9983 100644

--- a/js/src/Arrow.ts

+++ b/js/src/Arrow.ts

@@ -18,7 +18,8 @@

import * as type_ from './type';

import * as data_ from './data';

import * as vector_ from './vector';

-import * as util_ from './util/int';

+import * as util_int_ from './util/int';

+import * as util_bit_ from './util/bit';

import * as visitor_ from './visitor';

import * as view_ from './vector/view';

import * as predicate_ from './predicate';

@@ -40,9 +41,10 @@ export { Table, DataFrame, NextFunc, BindFunc, CountByResult

};

export { Field, Schema, RecordBatch, Vector, Type };

export namespace util {

-export import Uint64 = util_.Uint64;

-export import Int64 = util_.Int64;

-export import Int128 = util_.Int128;

+export import Uint64 = util_int_.Uint64;

+export import Int64 = util_int_.Int64;

+export import Int128 = util_int_.Int128;

+export import packBools = util_bit_.packBools;

}

export namespace data {

@@ -173,6 +175,7 @@ export namespace predicate {

export import Or = predicate_.Or;

export import Col = predicate_.Col;

export import And = predicate_.And;

+export import Not = predicate_.Not;

export import GTeq = predicate_.GTeq;

export import LTeq = predicate_.LTeq;

export import Value = predicate_.Value;

@@ -222,16 +225,16 @@ Table['empty'] = Table.empty;