[jira] [Created] (FLINK-17900) Listener for when Files are committed in StreamingFileSink

Matthew McMahon created FLINK-17900:

---

Summary: Listener for when Files are committed in StreamingFileSink

Key: FLINK-17900

URL: https://issues.apache.org/jira/browse/FLINK-17900

Project: Flink

Issue Type: Improvement

Affects Versions: 1.10.0

Reporter: Matthew McMahon

I have a scenario where, once a file has been committed to S3 using the StreamingFileSink (I am using Java), I need to notify some downstream services. The idea is to produce a message on a Kafka topic for each file as it is finalized.

I am currently looking into how this might be possible, and am considering using reflection and/or checking the S3 bucket before and after the checkpoint is committed. I am still trying to find a suitable way.

However, I was thinking it would be great if it were possible to register a listener that is fired when the StreamingFileSink commits a file.

Does something like this exist that I'm not aware of (I am new to Flink), or could it be added?

--

This message was sent by Atlassian Jira

(v8.3.4#803005)
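For illustration only, the kind of listener requested above could look roughly like the sketch below. Every name in it (`FileCommitListener`, `ToyFileSink`, `notifyCheckpointComplete`, the example bucket and path) is hypothetical and not part of Flink's API; the toy sink merely mimics committing pending files when a checkpoint completes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical callback, invented for this sketch; not a Flink interface.
interface FileCommitListener {
    void onFileCommitted(String bucketId, String path);
}

// Toy stand-in for a sink that finalizes pending files when a checkpoint completes.
class ToyFileSink {
    private final List<String[]> pending = new ArrayList<>();
    private final List<FileCommitListener> listeners = new ArrayList<>();

    void registerListener(FileCommitListener listener) {
        listeners.add(listener);
    }

    // Records a file that has been written but not yet committed.
    void addPendingFile(String bucketId, String path) {
        pending.add(new String[] {bucketId, path});
    }

    // Commits all pending files and notifies every listener once per file.
    void notifyCheckpointComplete() {
        for (String[] file : pending) {
            for (FileCommitListener listener : listeners) {
                listener.onFileCommitted(file[0], file[1]);
            }
        }
        pending.clear();
    }
}

class FileCommitListenerDemo {
    public static void main(String[] args) {
        ToyFileSink sink = new ToyFileSink();
        // A real listener might produce a Kafka message here instead of printing.
        sink.registerListener((bucket, path) ->
                System.out.println("committed " + path + " in bucket " + bucket));
        sink.addPendingFile("2020-05-23", "s3://example-bucket/2020-05-23/part-0-0");
        sink.notifyCheckpointComplete();
    }
}
```

In this sketch the listener fires only after the checkpoint-complete step, which is the point at which the issue expects files to become final.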

[GitHub] [flink] caozhen1937 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

caozhen1937 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429596017

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=

examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your

cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the

Job Manager ui or submit job to the existing session, you need to start a local

proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view

the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/ 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the

`NodePort`). `:` could be used to contact the Job Manager

Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`:`

可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud

provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load

balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/` to get EXTERNAL-IP and then

construct the load balancer JobManager Web Interface manually

`http://:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web

界面。

+你可以使用 `kubectl get services/`获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager

Web 界面 `http://:8081`。

Review comment:

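Here and in several places below, a punctuation mark sits directly next to Chinese text, so I did not add a space. Should a space be added here?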

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] caozhen1937 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

caozhen1937 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429596017

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=

examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your

cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the

Job Manager ui or submit job to the existing session, you need to start a local

proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view

the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/ 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the

`NodePort`). `:` could be used to contact the Job Manager

Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`:`

可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud

provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load

balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/` to get EXTERNAL-IP and then

construct the load balancer JobManager Web Interface manually

`http://:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web

界面。

+你可以使用 `kubectl get services/`获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager

Web 界面 `http://:8081`。

Review comment:

Here a punctuation mark sits directly next to Chinese text, so I did not add a space.


[GitHub] [flink] caozhen1937 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

caozhen1937 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429595609

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=

examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your

cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the

Job Manager ui or submit job to the existing session, you need to start a local

proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view

the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/ 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the

`NodePort`). `:` could be used to contact the Job Manager

Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`:`

可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud

provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load

balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/` to get EXTERNAL-IP and then

construct the load balancer JobManager Web Interface manually

`http://:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

Review comment:

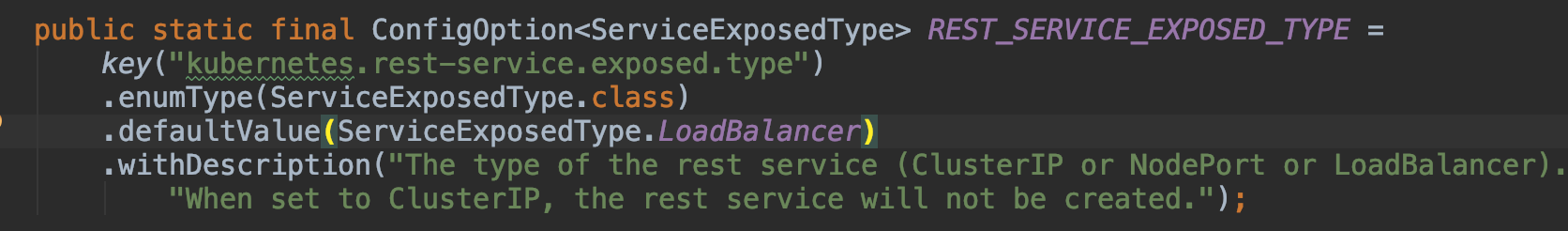

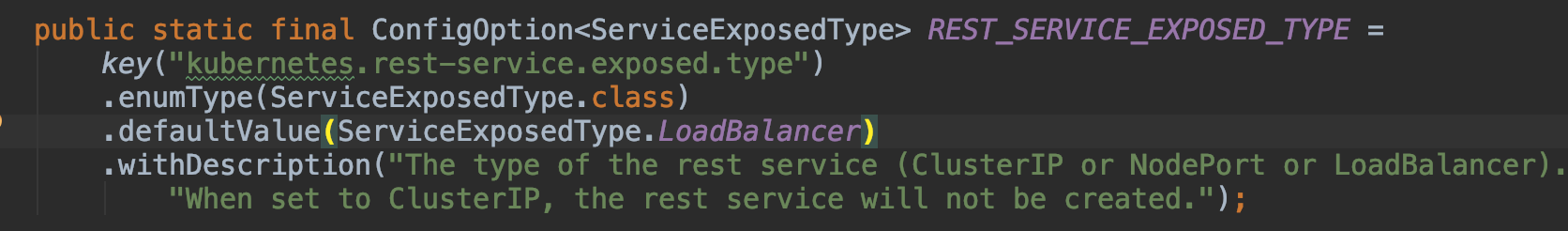

According to the code, the default value is LoadBalancer.



[GitHub] [flink] JingsongLi commented on a change in pull request #12283: [FLINK-16975][documentation] Add docs for FileSystem connector

JingsongLi commented on a change in pull request #12283:

URL: https://github.com/apache/flink/pull/12283#discussion_r429594503

##

File path: docs/dev/table/connectors/filesystem.md

##

@@ -0,0 +1,352 @@

+---

+title: "Hadoop FileSystem Connector"

+nav-title: Hadoop FileSystem

Review comment:

It is for Flink FS; I forgot to update the title.

[jira] [Commented] (FLINK-13733) FlinkKafkaInternalProducerITCase.testHappyPath fails on Travis

[

https://issues.apache.org/jira/browse/FLINK-13733?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17115011#comment-17115011

]

Jiangjie Qin commented on FLINK-13733:

--

I am not completely sure what caused the problem. One guess is that the

consumer was not able to connect to the broker to begin with. If so, this is

more of a connectivity issue in the environment. I am adding some debugging

code here to give more information in case this happens again.
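As a rough illustration of why an empty poll result would be consistent with the quoted stack trace: a "get the only element" helper fails with `NoSuchElementException` when it is handed an empty collection. The sketch below is a plain-Java stand-in written for this note, not the Guava or Kafka code from the trace, and the class name `OnlyElementDemo` is made up.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

class OnlyElementDemo {
    // Plain-Java stand-in for a helper that expects exactly one element.
    static <T> T getOnlyElement(Iterable<T> iterable) {
        Iterator<T> it = iterable.iterator();
        T first = it.next(); // throws NoSuchElementException when the iterable is empty
        if (it.hasNext()) {
            throw new IllegalArgumentException("expected one element but found more");
        }
        return first;
    }

    public static void main(String[] args) {
        // Simulates a poll that returned no records, e.g. because the broker was unreachable.
        List<String> polled = Collections.emptyList();
        try {
            getOnlyElement(polled);
        } catch (NoSuchElementException e) {
            System.out.println("no record was polled: " + e);
        }
    }
}
```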

> FlinkKafkaInternalProducerITCase.testHappyPath fails on Travis

> --

>

> Key: FLINK-13733

> URL: https://issues.apache.org/jira/browse/FLINK-13733

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Tests

>Affects Versions: 1.9.0, 1.10.0, 1.11.0

>Reporter: Till Rohrmann

>Assignee: Jiangjie Qin

>Priority: Critical

> Labels: test-stability

> Fix For: 1.11.0

>

> Attachments: 20200421.13.tar.gz

>

>

> The {{FlinkKafkaInternalProducerITCase.testHappyPath}} fails on Travis with

> {code}

> Test

> testHappyPath(org.apache.flink.streaming.connectors.kafka.FlinkKafkaInternalProducerITCase)

> failed with:

> java.util.NoSuchElementException

> at

> org.apache.kafka.common.utils.AbstractIterator.next(AbstractIterator.java:52)

> at

> org.apache.flink.shaded.guava18.com.google.common.collect.Iterators.getOnlyElement(Iterators.java:302)

> at

> org.apache.flink.shaded.guava18.com.google.common.collect.Iterables.getOnlyElement(Iterables.java:289)

> at

> org.apache.flink.streaming.connectors.kafka.FlinkKafkaInternalProducerITCase.assertRecord(FlinkKafkaInternalProducerITCase.java:169)

> at

> org.apache.flink.streaming.connectors.kafka.FlinkKafkaInternalProducerITCase.testHappyPath(FlinkKafkaInternalProducerITCase.java:70)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:298)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:292)

> at java.util.concurrent.FutureTask.run(FutureTask.java:266)

> at java.lang.Thread.run(Thread.java:748)

> {code}

> https://api.travis-ci.org/v3/job/571870358/log.txt


[GitHub] [flink] klion26 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

klion26 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429517344

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on

[Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

-Flink's native Kubernetes integration is still experimental. There may be

changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services,

configurable via `~/.kube/config`. You can verify permissions by running

`kubectl auth can-i pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以访问列表、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行

`kubectl auth can-i pods` 来验证权限。

Review comment:

```suggestion

- KubeConfig 可以访问列表、删除 pods 和 services,可以通过 `~/.kube/config` 配置。你可以通过运行

`kubectl auth can-i pods` 来验证权限。

```

I think this means that the KubeConfig has permission to `list`, `create`, and `delete` pods, right?

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -168,13 +166,13 @@ appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{-MM-dd HH:mm:ss,SSS} %-5p %-60c %x -

%m%n

{% endhighlight %}

-If the pod is running, you can use `kubectl exec -it bash` to tunnel

in and view the logs or debug the process.

+如果 pod 正在运行,可以使用 `kubectl exec -it bash` 进入 pod 并查看日志或调试进程。

-## Flink Kubernetes Application

+## Flink Kubernetes 应用程序

-### Start Flink Application

+### 启动 Flink 应用程序

-Application mode allows users to create a single image containing their Job

and the Flink runtime, which will automatically create and destroy cluster

components as needed. The Flink community provides base docker images

[customized](docker.html#customize-flink-image) for any use case.

+应用程序模式允许用户创建单个镜像,其中包含他们的作业和 Flink 运行时,该镜像将根据所需自动创建和销毁集群组件。Flink 社区为任何用例提供了基础镜像

[customized](docker.html#customize-flink-image)。

Review comment:

Would `该镜像将按需` read better than `该镜像将根据所需`?

Does `docker images customized for any use case` here mean base images that suit any scenario?

##

File path: docs/ops/deployment/native_kubernetes.zh.md

##

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t

kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes

that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be

added to user classpath.

+注意:镜像的"$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically

destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded

Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application

-Dkubernetes.cluster-id=

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in

Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)

are a way to divide cluster resources between multiple users (via resource

quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can

use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default`

argument when starting a Flink cluster.

+[Kubernetes

中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用`-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/)

provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by

type, as well as the total amount of compute resources that may be consumed by

resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制

-Role-based access control

([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)) is a

method of regulating access to compute or network resources based on the roles

of individual

[jira] [Updated] (FLINK-17899) Integrate FLIP-126 Timestamps and Watermarking with FLIP-27 sources

[

https://issues.apache.org/jira/browse/FLINK-17899?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen updated FLINK-17899:

-

Description:

*Preamble:* This whole discussion is to some extent only necessary because, in

the {{SourceReader}}, we pass the {{SourceOutput}} as a parameter to the

{{pollNext(...)}} method. However, this design follows some deeper runtime

pipeline design, and is not easy to change at this stage.

There are some principal design choices here:

*(1) Do we make Timestamps and Watermarks purely a feature of the library

(ConnectorBase), or do we integrate them with the core (SourceOperator)?*

Making it purely a responsibility of the ConnectorBase would have the advantage

of keeping the SourceOperator simple. However, there is value in integrating

this with the SourceOperator.

- Implementations that are not using the ConnectorBase (like simple

collection- or iterator-based sources) would automatically get access to the

pluggable TimestampExtractors and WatermarkGenerators.

- When executing batch programs, the SourceOperator can transparently inject a

"no-op" WatermarkGenerator to make sure no Watermarks are generated during the

batch execution. Given that batch sources are very performance sensitive, it

seems useful to not even run the watermark generator logic, rather than later

dropping the watermarks.

- In a future version, we may want to implement "global watermark holds"

generated by the Enumerators: The enumerator tells the readers how far they may

advance their local watermarks. This can help to not prematurely advance the

watermark based on a split's records when other splits have data overlapping

with older ranges. An example where this is commonly the case is the streaming

file source.

*(2) Is the per-partition watermarking purely a feature of the library

(ConnectorBase), or do we integrate it with the core (SourceOperator)?*

I believe we need to solve this on the same level as the previous question:

- Once a connector instantiates the per-partition watermark generators, the

main output (through which the SourceReader emits the records) must not run its

watermark generator any more. Otherwise we extract watermarks also on the

merged stream, which messes things up. So having the per-partition watermark

generators simply in the ConnectorBase and emit transparently through an

unchanged main output would not work.

- So, if we decide to implement watermark support in the core

(SourceOperator), we would need to offer the per-partition watermarking

utilities on that level as well.

- Along a similar line of thought as in the previous point, the batch

execution can optimize the watermark extraction by supplying no-op extractors

also for the per-partition extractors (which will most likely bear the bulk of

the load in the connectors).

*(3) What would an integration of WatermarkGenerators with the SourceOperator

look like?*

Rather straightforward: the SourceOperator instantiates a SourceOutput that

internally runs the timestamp extractor and watermark generator and emits to

the DataOutput that the operator emits to.
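A minimal sketch of the idea in (3), under stated assumptions: all interfaces below are simplified stand-ins defined only for this example (the real `SourceOutput`, `DataOutput` and watermark generator interfaces may look different), and `TimestampingSourceOutput` and `MaxTimestampWatermarks` are made-up names. It also shows the "no-op" generator mentioned under (1), with which no watermark logic produces output in a batch-style run.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified stand-ins for illustration; the real Flink interfaces may differ.
interface TimestampExtractor<T> {
    long extractTimestamp(T record);
}

interface WatermarkGenerator<T> {
    // Returns the current watermark after this event, or Long.MIN_VALUE for "none".
    long onEvent(T record, long timestamp);
}

interface DataOutput<T> {
    void emitRecord(T record, long timestamp);
    void emitWatermark(long watermark);
}

interface SourceOutput<T> {
    void collect(T record);
}

// Toy generator: the watermark trails the highest timestamp seen so far by one.
class MaxTimestampWatermarks<T> implements WatermarkGenerator<T> {
    private long maxTimestamp = Long.MIN_VALUE;

    @Override
    public long onEvent(T record, long timestamp) {
        maxTimestamp = Math.max(maxTimestamp, timestamp);
        return maxTimestamp == Long.MIN_VALUE ? Long.MIN_VALUE : maxTimestamp - 1;
    }
}

// The output the operator would hand to the reader: it assigns a timestamp,
// forwards the record, and emits a watermark only when it advances.
class TimestampingSourceOutput<T> implements SourceOutput<T> {
    private final TimestampExtractor<T> extractor;
    private final WatermarkGenerator<T> generator;
    private final DataOutput<T> downstream;
    private long lastWatermark = Long.MIN_VALUE;

    TimestampingSourceOutput(TimestampExtractor<T> extractor,
                             WatermarkGenerator<T> generator,
                             DataOutput<T> downstream) {
        this.extractor = extractor;
        this.generator = generator;
        this.downstream = downstream;
    }

    @Override
    public void collect(T record) {
        long timestamp = extractor.extractTimestamp(record);
        downstream.emitRecord(record, timestamp);
        long watermark = generator.onEvent(record, timestamp);
        if (watermark > lastWatermark) {
            lastWatermark = watermark;
            downstream.emitWatermark(watermark);
        }
    }
}

class TimestampingSourceOutputDemo {
    // Runs three records through the output and returns what reached the DataOutput.
    static List<String> run(WatermarkGenerator<Long> generator) {
        List<String> events = new ArrayList<>();
        DataOutput<Long> downstream = new DataOutput<Long>() {
            @Override
            public void emitRecord(Long record, long timestamp) {
                events.add("record " + record + "@" + timestamp);
            }

            @Override
            public void emitWatermark(long watermark) {
                events.add("watermark " + watermark);
            }
        };
        // Each record is a Long that doubles as its own timestamp.
        SourceOutput<Long> out =
                new TimestampingSourceOutput<Long>(r -> r, generator, downstream);
        out.collect(5L);
        out.collect(3L);
        out.collect(8L);
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run(new MaxTimestampWatermarks<Long>())); // streaming-style
        System.out.println(run((record, ts) -> Long.MIN_VALUE));     // no-op generator
    }
}
```

Swapping the generator is the only difference between the two runs, which is the appeal of letting the operator own this wiring.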

*(4) What would an integration of the per-split WatermarkGenerators look like?*

I would propose to introduce a class {{ReaderMainOutput}} which extends

{{SourceOutput}}. The {{SourceReader}} should accept a {{ReaderMainOutput}}

instead of a {{SourceOutput}}.

{code:java}

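public interface ReaderMainOutput<T> extends SourceOutput<T> {

    // Emits a record without an explicit timestamp.
    @Override
    void collect(T record);

    // Emits a record with the given timestamp.
    @Override
    void collect(T record, long timestamp);

    // Creates a separate output for the given split.
    SourceOutput<T> createOutputForSplit(String splitId);

    // Releases the output previously created for the given split.
    void releaseOutputForSplit(String splitId);
}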

{code}
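To make the per-split idea in (4) more concrete, here is a small self-contained sketch. It is an assumption-laden toy, not the proposed implementation: `SourceOutput` is redeclared in simplified form, `ToyReaderMainOutput`, `combinedWatermark()` and `emittedCount()` are made-up names, and the rule "combined watermark = minimum of the per-split maximum timestamps" is just one plausible way to merge per-split progress. The main output deliberately does not advance any watermark, in line with the point made under (2).

```java
import java.util.HashMap;
import java.util.Map;

// Simplified stand-in for illustration; the real interface may differ.
interface SourceOutput<T> {
    void collect(T record);
    void collect(T record, long timestamp);
}

interface ReaderMainOutput<T> extends SourceOutput<T> {
    SourceOutput<T> createOutputForSplit(String splitId);
    void releaseOutputForSplit(String splitId);
}

// Toy implementation: tracks the highest timestamp per split and derives
// a combined watermark as the minimum over all currently active splits.
class ToyReaderMainOutput<T> implements ReaderMainOutput<T> {
    private final Map<String, Long> maxTimestampPerSplit = new HashMap<>();
    private int emitted = 0;

    // Records emitted on the main output are forwarded without touching watermarks.
    @Override
    public void collect(T record) {
        emitted++;
    }

    @Override
    public void collect(T record, long timestamp) {
        emitted++;
    }

    @Override
    public SourceOutput<T> createOutputForSplit(String splitId) {
        maxTimestampPerSplit.putIfAbsent(splitId, Long.MIN_VALUE);
        return new SourceOutput<T>() {
            @Override
            public void collect(T record) {
                ToyReaderMainOutput.this.collect(record);
            }

            @Override
            public void collect(T record, long timestamp) {
                // Track progress for this split only, then forward the record.
                maxTimestampPerSplit.merge(splitId, timestamp, Long::max);
                ToyReaderMainOutput.this.collect(record, timestamp);
            }
        };
    }

    @Override
    public void releaseOutputForSplit(String splitId) {
        maxTimestampPerSplit.remove(splitId);
    }

    // Minimum of the per-split maxima, or Long.MIN_VALUE when no split is active.
    long combinedWatermark() {
        if (maxTimestampPerSplit.isEmpty()) {
            return Long.MIN_VALUE;
        }
        long min = Long.MAX_VALUE;
        for (long value : maxTimestampPerSplit.values()) {
            min = Math.min(min, value);
        }
        return min;
    }

    int emittedCount() {
        return emitted;
    }
}

class ToyReaderMainOutputDemo {
    public static void main(String[] args) {
        ToyReaderMainOutput<String> main = new ToyReaderMainOutput<>();
        SourceOutput<String> splitA = main.createOutputForSplit("split-a");
        SourceOutput<String> splitB = main.createOutputForSplit("split-b");
        splitA.collect("a1", 10L);
        splitA.collect("a2", 20L);
        splitB.collect("b1", 5L);
        System.out.println("combined watermark: " + main.combinedWatermark());
        main.releaseOutputForSplit("split-b");
        System.out.println("after releasing split-b: " + main.combinedWatermark());
    }
}
```

The slower split holds the combined value back until it is released, which is the behaviour per-split watermarking is meant to provide.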


[jira] [Updated] (FLINK-17899) Integrate FLIP-126 Timestamps and Watermarking with FLIP-27 sources

[

https://issues.apache.org/jira/browse/FLINK-17899?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen updated FLINK-17899:

-

Description:

*Preamble:* This whole discussion is to some extent only necessary because, in

the {{SourceReader}}, we pass the {{SourceOutput}} as a parameter to the

{{pollNext(...)}} method. However, this design follows some deeper runtime

pipeline design, and is not easy to change at this stage.

There are some principal design choices here:

*(1) Do we make Timestamps and Watermarks purely a feature of the library

(ConnectorBase), or do we integrate them with the core (SourceOperator)?*

Making it purely a responsibility of the ConnectorBase would have the advantage

of keeping the SourceOperator simple. However, there is value in integrating

this with the SourceOperator.

- Implementations that are not using the ConnectorBase (like simple

collection- or iterator-based sources) would automatically get access to the

pluggable TimestampExtractors and WatermarkGenerators.

- When executing batch programs, the SourceOperator can transparently inject a

"no-op" WatermarkGenerator to make sure no Watermarks are generated during the

batch execution. Given that batch sources are very performance sensitive, it

seems useful to not even run the watermark generator logic, rather than later

dropping the watermarks.

- In a future version, we may want to implement "global watermark holds"

generated by the Enumerators: The enumerator tells the readers how far they may

advance their local watermarks. This can help to not prematurely advance the

watermark based on a split's records when other splits have data overlapping

with older ranges. An example where this is commonly the case is the streaming

file source.

*(2) Is the per-partition watermarking purely a feature of the library

(ConnectorBase), or do we integrate it with the core (SourceOperator)?*

I believe we need to solve this on the same level as the previous question:

- Once a connector instantiates the per-partition watermark generators, the

main output (through which the SourceReader emits the records) must not run its

watermark generator any more. Otherwise we extract watermarks also on the

merged stream, which messes things up. So having the per-partition watermark

generators simply in the ConnectorBase and emit transparently through an

unchanged main output would not work.

- So, if we decide to implement watermark support in the core

(SourceOperator), we would need to offer the per-partition watermarking

utilities on that level as well.

- Along a similar line of thought as in the previous point, the batch

execution can optimize the watermark extraction by supplying no-op extractors

also for the per-partition extractors (which will most likely bear the bulk of

the load in the connectors).

*(3) What would an integration of WatermarkGenerators with the SourceOperator

look like?*

Rather straightforward: the SourceOperator instantiates a SourceOutput that

internally runs the timestamp extractor and watermark generator and emits to

the DataOutput that the operator emits to.

*(4) What would an integration of the per-split WatermarkGenerators look like?*

I would propose to introduce a class {{ReaderMainOutput}} which

extends {{SourceOutput}}. The {{SourceReader}} should accept a

{{ReaderMainOutput}} instead of a {{SourceOutput}}.

{code:java}

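public interface ReaderMainOutput<T> extends SourceOutput<T> {

    // Emits a record without an explicit timestamp.
    @Override
    void collect(T record);

    // Emits a record with the given timestamp.
    @Override
    void collect(T record, long timestamp);

    // Creates a separate output for the given split.
    SourceOutput<T> createOutputForSplit(String splitId);

    // Releases the output previously created for the given split.
    void releaseOutputForSplit(String splitId);
}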

{code}


[jira] [Assigned] (FLINK-17897) Resolve stability annotations discussion for FLIP-27 in 1.11

[

https://issues.apache.org/jira/browse/FLINK-17897?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen reassigned FLINK-17897:

Assignee: Stephan Ewen (was: Jiangjie Qin)

> Resolve stability annotations discussion for FLIP-27 in 1.11

> -

>

> Key: FLINK-17897

> URL: https://issues.apache.org/jira/browse/FLINK-17897

> Project: Flink

> Issue Type: Sub-task

> Components: API / DataStream

>Reporter: Stephan Ewen

>Assignee: Stephan Ewen

>Priority: Blocker

> Fix For: 1.11.0

>

>

> Currently the interfaces from the FLIP-27 sources are all labeled as

> {{@Public}}.

> Given that FLIP-27 is going to be in a "beta" version in the 1.11 release, we

> are discussing downgrading the stability to {{@PublicEvolving}}.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (FLINK-17899) Integrate FLIP-126 Timestamps and Watermarking with FLIP-27 sources

Stephan Ewen created FLINK-17899:

Summary: Integrate FLIP-126 Timestamps and Watermarking with

FLIP-27 sources

Key: FLINK-17899

URL: https://issues.apache.org/jira/browse/FLINK-17899

Project: Flink

Issue Type: Sub-task

Components: API / DataStream

Reporter: Stephan Ewen

Assignee: Stephan Ewen

*Preamble:* This whole discussion is to some extent only necessary because in

the {{SourceReader}}, we pass the {{SourceOutput}} as a parameter to the

{{pollNext(...)}} method. However, this design follows some deeper runtime

pipeline design, and is not easy to change at this stage.

There are some principal design choices here:

*(1) Do we make Timestamps and Watermarks purely a feature of the library

(ConnectorBase), or do we integrate it with the core (SourceOperator).*

Making it purely a responsibility of the ConnectorBase would have the advantage

of keeping the SourceOperator simple. However, there is value in integrating

this with the SourceOperator.

- Implementations that are not using the ConnectorBase (like simple

collection- or iterator-based sources) would automatically get access to the

pluggable TimestampExtractors and WatermarkGenerators.

- When executing batch programs, the SourceOperator can transparently inject a

"no-op" WatermarkGenerator to make sure no Watermarks are generated during the

batch execution. Given that batch sources are very performance sensitive, it

seems useful to not even run the watermark generator logic, rather than later

dropping the watermarks.

- In a future version, we may want to implement "global watermark holds"

generated by the Enumerators: The enumerator tells the readers how far they may

advance their local watermarks. This can help to not prematurely advance the

watermark based on a split's records when other splits have data overlapping

with older ranges. An example where this is commonly the case is the streaming

file source.
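The batch-execution point in the list above can be sketched as follows. This is only an illustration under assumptions: {{WatermarkGen}}, {{BoundedOutOfOrderGen}}, {{NoOpGen}} and {{WatermarkGenSelector}} are hypothetical names made up for this sketch, not the FLIP-126 interfaces. The idea it shows is that the operator, rather than the connector, picks a generator that does no per-record work in batch mode.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a watermark generator; not the FLIP-126 interface. */
interface WatermarkGen {
    /** Observes one record timestamp and may append a watermark to {@code out}. */
    void onEvent(long timestamp, List<Long> out);
}

/** Streaming case: emits a watermark that trails the highest timestamp seen so far. */
final class BoundedOutOfOrderGen implements WatermarkGen {
    private final long maxOutOfOrderness;
    private long maxTimestamp = Long.MIN_VALUE;

    BoundedOutOfOrderGen(long maxOutOfOrderness) {
        this.maxOutOfOrderness = maxOutOfOrderness;
    }

    @Override
    public void onEvent(long timestamp, List<Long> out) {
        if (timestamp > maxTimestamp) {
            maxTimestamp = timestamp;
            out.add(maxTimestamp - maxOutOfOrderness);
        }
    }
}

/** Batch case: does no work per record and never emits a watermark. */
final class NoOpGen implements WatermarkGen {
    @Override
    public void onEvent(long timestamp, List<Long> out) {
        // Intentionally empty: nothing is computed, nothing is emitted.
    }
}

final class WatermarkGenSelector {
    /** The operator picks the generator based on the execution mode. */
    static WatermarkGen forMode(boolean batch, long maxOutOfOrderness) {
        return batch ? new NoOpGen() : new BoundedOutOfOrderGen(maxOutOfOrderness);
    }

    /** Feeds timestamps through the chosen generator and returns the emitted watermarks. */
    static List<Long> run(boolean batch, long[] timestamps) {
        WatermarkGen gen = forMode(batch, 5L);
        List<Long> emitted = new ArrayList<>();
        for (long ts : timestamps) {
            gen.onEvent(ts, emitted);
        }
        return emitted;
    }
}
```

With the no-op variant, no watermark is ever created, so nothing has to be dropped further down the pipeline.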

*(2) Is the per-partition watermarking purely a feature of the library

(ConnectorBase), or do we integrate it with the core (SourceOperator).*

I believe we need to solve this on the same level as the previous question:

- Once a connector instantiates the per-partition watermark generators, the

main output (through which the SourceReader emits the records) must not run its

watermark generator any more. Otherwise we extract watermarks also on the

merged stream, which messes things up. So having the per-partition watermark

generators simply in the ConnectorBase and emit transparently through an

unchanged main output would not work.

- So, if we decide to implement watermark support in the core

(SourceOperator), we would need to offer the per-partition watermarking

utilities on that level as well.

- Along a similar line of thought as in the previous point, the batch

execution can optimize the watermark extraction by supplying no-op extractors

also for the per-partition extractors (which will most likely bear the bulk of

the load in the connectors).

*(3) What would an integration of WatermarkGenerators with the SourceOperator

look like?*

This is rather straightforward: the SourceOperator instantiates a SourceOutput that

internally runs the timestamp extractor and watermark generator and emits to

the DataOutput that the operator emits to.
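A rough sketch of what such a wrapping output could look like is given here. All names ({{Downstream}}, {{TimestampingOutput}}, {{RecordingDownstream}}) are hypothetical stand-ins for this illustration and not the actual Flink classes; the watermark logic is a simple "highest timestamp minus a fixed bound" chosen only to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.ToLongFunction;

/** Hypothetical stand-in for the DataOutput that the operator emits to. */
interface Downstream<T> {
    void emitRecord(T record, long timestamp);

    void emitWatermark(long watermark);
}

/** Hypothetical output handed to the reader; it hides timestamping and watermarking. */
final class TimestampingOutput<T> {
    private final ToLongFunction<T> timestampExtractor;
    private final long maxOutOfOrderness;
    private final Downstream<T> downstream;
    private long currentWatermark = Long.MIN_VALUE;

    TimestampingOutput(
            ToLongFunction<T> timestampExtractor,
            long maxOutOfOrderness,
            Downstream<T> downstream) {
        this.timestampExtractor = timestampExtractor;
        this.maxOutOfOrderness = maxOutOfOrderness;
        this.downstream = downstream;
    }

    /** Called by the reader, which only ever hands over plain records. */
    void collect(T record) {
        long timestamp = timestampExtractor.applyAsLong(record);
        downstream.emitRecord(record, timestamp);

        // Emit a new watermark only when it advances.
        long candidate = timestamp - maxOutOfOrderness;
        if (candidate > currentWatermark) {
            currentWatermark = candidate;
            downstream.emitWatermark(candidate);
        }
    }
}

/** Records everything emitted downstream so the behavior can be inspected. */
final class RecordingDownstream<T> implements Downstream<T> {
    final List<String> events = new ArrayList<>();

    @Override
    public void emitRecord(T record, long timestamp) {
        events.add("record " + record + " @" + timestamp);
    }

    @Override
    public void emitWatermark(long watermark) {
        events.add("watermark " + watermark);
    }
}
```

The reader only calls {{collect(record)}}; whether a real or a no-op extractor and generator run behind it is decided by whoever constructs the output.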

*(4) What would an integration of the per-split WatermarkGenerators look like?*

I would propose to add a method to the {{SourceReaderContext}}:

{{SplitAwareOutputs createSourceAwareOutputs()}}

The {{SplitAwareOutputs}} interface looks as follows:

{code:java}
public interface SplitAwareOutputs {

    SourceOutput createOutputForSplit(String splitId);

    void releaseOutputForSplit(String splitId);
}
{code}
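To illustrate why the per-split outputs need to be created and released explicitly, here is a minimal sketch of the bookkeeping that could sit behind such an interface. {{PerSplitWatermarks}} and its {{advance}} and {{combinedWatermark}} methods are hypothetical names for this example only. The sketch assumes that the watermark of the merged stream is the minimum over all active splits, and it arbitrarily returns {{Long.MIN_VALUE}} when no split is registered.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical bookkeeping behind createOutputForSplit / releaseOutputForSplit. */
final class PerSplitWatermarks {
    private final Map<String, Long> watermarkPerSplit = new HashMap<>();

    /** Mirrors createOutputForSplit: a new split starts without any watermark progress. */
    void createOutputForSplit(String splitId) {
        watermarkPerSplit.putIfAbsent(splitId, Long.MIN_VALUE);
    }

    /** Mirrors releaseOutputForSplit: a finished split no longer holds back the watermark. */
    void releaseOutputForSplit(String splitId) {
        watermarkPerSplit.remove(splitId);
    }

    /** Called by the split-local watermark generator; watermarks never move backwards. */
    void advance(String splitId, long watermark) {
        watermarkPerSplit.computeIfPresent(
                splitId, (id, current) -> Math.max(current, watermark));
    }

    /** Watermark of the merged stream: the minimum over all active splits. */
    long combinedWatermark() {
        if (watermarkPerSplit.isEmpty()) {
            return Long.MIN_VALUE; // arbitrary choice for this sketch
        }
        long min = Long.MAX_VALUE;
        for (long watermark : watermarkPerSplit.values()) {
            min = Math.min(min, watermark);
        }
        return min;
    }
}
```

Without the release call, a split that has been fully read would keep holding the combined watermark at its last value.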


[jira] [Assigned] (FLINK-17898) Remove Exceptions from signatures of SourceOutput methods

[

https://issues.apache.org/jira/browse/FLINK-17898?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen reassigned FLINK-17898:

Assignee: Stephan Ewen

> Remove Exceptions from signatures of SourceOutput methods

> -

>

> Key: FLINK-17898

> URL: https://issues.apache.org/jira/browse/FLINK-17898

> Project: Flink

> Issue Type: Sub-task

> Components: API / DataStream

>Reporter: Stephan Ewen

>Assignee: Stephan Ewen

>Priority: Blocker

> Fix For: 1.11.0

>

>

> The {{collect(...)}} methods in {{SourceOutput}} currently declare that they

> can {{throw Exception}}.

> That was originally introduced, because it can be the case when pushing

> records into the chain.

> However, one can argue that the Source Reader that calls these methods is in

> no way supposed to handle these exceptions, because they come from downstream

> operator failures. Because of that, we should go for unchecked exceptions and

> handle downstream exceptions via {{ExceptionInChainedOperatorException}}.


[jira] [Updated] (FLINK-17898) Remove Exceptions from signatures of SourceOutput methods

[

https://issues.apache.org/jira/browse/FLINK-17898?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen updated FLINK-17898:

-

Description:

The {{collect(...)}} methods in {{SourceOutput}} currently declare that they

can {{throw Exception}}.

That was originally introduced, because it can be the case when pushing records

into the chain.

However, one can argue that the Source Reader that calls these methods is in no

way supposed to handle these exceptions, because they come from downstream

operator failures. Because of that, we should go for unchecked exceptions and

handle downstream exceptions via {{ExceptionInChainedOperatorException}}.
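As a sketch of the proposed direction (not the actual Flink code): the output's {{collect}} method declares no checked exception and wraps any downstream failure in an unchecked one. {{ChainedOperatorFailure}}, {{DownstreamOperator}} and {{UncheckedOutput}} are hypothetical names for this illustration; {{ChainedOperatorFailure}} merely plays the role that {{ExceptionInChainedOperatorException}} has in the description above.

```java
/** Hypothetical unchecked wrapper, playing the role of ExceptionInChainedOperatorException. */
final class ChainedOperatorFailure extends RuntimeException {
    ChainedOperatorFailure(String message, Throwable cause) {
        super(message, cause);
    }
}

/** Hypothetical downstream operator whose processing may fail with a checked exception. */
interface DownstreamOperator<T> {
    void process(T record) throws Exception;
}

/** Hypothetical output whose collect method has no throws clause. */
final class UncheckedOutput<T> {
    private final DownstreamOperator<T> next;

    UncheckedOutput(DownstreamOperator<T> next) {
        this.next = next;
    }

    /** The caller (the source reader) is not forced to catch or declare anything. */
    void collect(T record) {
        try {
            next.process(record);
        } catch (Exception e) {
            // The failure comes from downstream; surface it as an unchecked exception.
            throw new ChainedOperatorFailure("Downstream operator failed", e);
        }
    }
}
```

A reader implementation can then call {{collect(...)}} inside its polling loop without any try/catch, and the failure still propagates with its original cause attached.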


[jira] [Updated] (FLINK-17898) Remove Exceptions from signatures of SourceOutput methods

[ https://issues.apache.org/jira/browse/FLINK-17898?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Stephan Ewen updated FLINK-17898:

Priority: Blocker (was: Major)

> Remove Exceptions from signatures of SourceOutput methods
>
> Key: FLINK-17898
> URL: https://issues.apache.org/jira/browse/FLINK-17898
> Project: Flink
> Issue Type: Sub-task
> Components: API / DataStream
> Reporter: Stephan Ewen
> Priority: Blocker
> Fix For: 1.11.0

[jira] [Created] (FLINK-17898) Remove Exceptions from signatures of SourceOutput methods

Stephan Ewen created FLINK-17898:

Summary: Remove Exceptions from signatures of SourceOutput methods
Key: FLINK-17898
URL: https://issues.apache.org/jira/browse/FLINK-17898
Project: Flink
Issue Type: Sub-task
Components: API / DataStream
Reporter: Stephan Ewen
Fix For: 1.11.0

[jira] [Created] (FLINK-17897) Resolve stability annotations discussion for FLIP-27 in 1.11

Stephan Ewen created FLINK-17897:

Summary: Resolve stability annotations discussion for FLIP-27 in

1.11

Key: FLINK-17897

URL: https://issues.apache.org/jira/browse/FLINK-17897

Project: Flink

Issue Type: Sub-task

Components: API / DataStream

Reporter: Stephan Ewen

Assignee: Jiangjie Qin

Fix For: 1.11.0

Currently the interfaces from the FLIP-27 sources are all labeled as

{{@Public}}.

Given that FLIP-27 is going to be in a "beta" version in the 1.11 release, we

are discussing downgrading the stability to {{@PublicEvolving}}.
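For readers unfamiliar with stability annotations, the difference can be sketched like this. The annotations {{StablePublic}} and {{EvolvingPublic}} below are hypothetical stand-ins defined only for this example (including the runtime retention, which is an assumption made so that the check can use reflection); they are not Flink's {{@Public}} and {{@PublicEvolving}}.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/** Hypothetical stand-in: the API is promised to stay stable across releases. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface StablePublic {}

/** Hypothetical stand-in: the API is public but may still change between releases. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface EvolvingPublic {}

/** Before the proposed change: a beta interface that promises full stability. */
@StablePublic
interface ReaderBefore {}

/** After the proposed change: the same interface only promises to remain public. */
@EvolvingPublic
interface ReaderAfter {}

final class StabilityCheck {
    /** Returns true if the type is allowed to change in a later release. */
    static boolean mayChange(Class<?> type) {
        return type.isAnnotationPresent(EvolvingPublic.class);
    }
}
```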


[GitHub] [flink] zhijiangW opened a new pull request #12302: [hotfix][formatting] Fix the checkstyle issue of missing a javadoc comment in DummyNoOpOperator

zhijiangW opened a new pull request #12302:
URL: https://github.com/apache/flink/pull/12302

## What is the purpose of the change

Fix the checkstyle issue of missing a javadoc comment in DummyNoOpOperator, which would break the creation of release-1.11-rc1.

## Brief change log

Add javadoc comment for DummyNoOpOperator

## Verifying this change

This change is a trivial rework / code cleanup without any test coverage.

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (yes / **no**)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / **no**)
- The serializers: (yes / **no** / don't know)
- The runtime per-record code paths (performance sensitive): (yes / **no** / don't know)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (yes / **no** / don't know)
- The S3 file system connector: (yes / **no** / don't know)

## Documentation

- Does this pull request introduce a new feature? (yes / **no**)
- If yes, how is the feature documented? (**not applicable** / docs / JavaDocs / not documented)

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] zhijiangW merged pull request #12302: [hotfix][formatting] Fix the checkstyle issue of missing a javadoc comment in DummyNoOpOperator

zhijiangW merged pull request #12302:
URL: https://github.com/apache/flink/pull/12302

[GitHub] [flink] flinkbot edited a comment on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

flinkbot edited a comment on pull request #12301:
URL: https://github.com/apache/flink/pull/12301#issuecomment-633042244

## CI report:

* a3b8f38cc685f05413dde8016aa1714ac1742e20 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2069)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Commented] (FLINK-17820) Memory threshold is ignored for channel state

[

https://issues.apache.org/jira/browse/FLINK-17820?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114859#comment-17114859

]

Stephan Ewen commented on FLINK-17820:

--

Regarding changing the behavior of {{FsCheckpointStateOutputStream#flush()}} -

I cannot say if there is some part of the code at the moment that assumes files

are created upon flushing. The tests guard this behavior, so it may very well

be.

> Memory threshold is ignored for channel state

> -

>

> Key: FLINK-17820

> URL: https://issues.apache.org/jira/browse/FLINK-17820

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing, Runtime / Task

>Affects Versions: 1.11.0

>Reporter: Roman Khachatryan

>Assignee: Roman Khachatryan

>Priority: Critical

> Labels: pull-request-available

> Fix For: 1.11.0

>

>

> Config parameter state.backend.fs.memory-threshold is ignored for channel
> state, causing each subtask to have a file per checkpoint regardless of the
> size of the channel state (of this subtask).
> This also causes slow cleanup and delays the next checkpoint.
>
> The problem is that {{ChannelStateCheckpointWriter.finishWriteAndResult}}
> calls {{flush()}}, which actually flushes the data to disk.
>
> From the FSDataOutputStream.flush Javadoc:
> A completed flush does not mean that the data is necessarily persistent. Data
> persistence can only be assumed after calls to close() or sync().
>
> Possible solutions:
> 1. Do not flush in {{ChannelStateCheckpointWriter.finishWriteAndResult}}
> (which can lead to data loss in a wrapping stream).
> 2. Change the {{FsCheckpointStateOutputStream.flush}} behavior.
> 3. Wrap {{FsCheckpointStateOutputStream}} to prevent flush.


[jira] [Commented] (FLINK-17820) Memory threshold is ignored for channel state

[

https://issues.apache.org/jira/browse/FLINK-17820?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114849#comment-17114849

]

Stephan Ewen commented on FLINK-17820:

--

I see. You cannot rely on {{close()}} here either, because that closes the

underlying stream in a "close for dispose" manner. That is indeed a bit of a

design shortcoming in the {{CheckpointStateOutputStream}} class.

My feeling is that this assumption of {{DataOutputStream}} being non-buffering

is made in some other parts of the code.

What do you think about adding a test that guards this assumption?

We also have the {{DataOutputViewStreamWrapper}} class which is an extension of

{{DataOutputStream}} - we could use that, guard it with a "does not buffer"

test and if we ever find it actually buffers, then we need to implement the

methods directly, rather than inherit from {{DataOutputStream}}.
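A guard of the kind suggested above could look roughly like the following. This is only a sketch using plain {{java.io}} classes; {{NonBufferingCheck}} is a hypothetical name, and a real test would wrap Flink's own stream classes (for example {{DataOutputViewStreamWrapper}}) instead of a {{ByteArrayOutputStream}}.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

final class NonBufferingCheck {
    /**
     * Writes a few values without calling flush() or close() and reports how many
     * bytes have already reached the underlying stream.
     */
    static int bytesVisibleWithoutFlush() {
        ByteArrayOutputStream underlying = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(underlying);
        try {
            out.writeInt(42);   // 4 bytes
            out.writeLong(7L);  // 8 bytes
            out.writeByte(1);   // 1 byte
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // A buffering wrapper would still hold (some of) these 13 bytes at this point.
        return underlying.size();
    }
}
```

If the wrapper does not buffer, all 13 bytes are visible in the underlying stream right away; a test asserting this would fail as soon as buffering is introduced somewhere in the wrapper hierarchy.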


[jira] [Commented] (FLINK-17470) Flink task executor process permanently hangs on `flink-daemon.sh stop`, deletes PID file

[

https://issues.apache.org/jira/browse/FLINK-17470?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114846#comment-17114846

]

Stephan Ewen commented on FLINK-17470:

--

Have you run Flink 1.9 on the exact same version? And does Flink 1.9 still shut

down the JVM properly, while 1.10 does not?

> Flink task executor process permanently hangs on `flink-daemon.sh stop`,

> deletes PID file

> -

>

> Key: FLINK-17470

> URL: https://issues.apache.org/jira/browse/FLINK-17470

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Task

>Affects Versions: 1.10.0

> Environment:

> {code:java}

> $ uname -a

> Linux hostname.local 3.10.0-1062.9.1.el7.x86_64 #1 SMP Fri Dec 6 15:49:49 UTC

> 2019 x86_64 x86_64 x86_64 GNU/Linux

> $ lsb_release -a

> LSB Version: :core-4.1-amd64:core-4.1-noarch

> Distributor ID: CentOS

> Description: CentOS Linux release 7.7.1908 (Core)

> Release: 7.7.1908

> Codename: Core

> {code}

> Flink version 1.10

>

>Reporter: Hunter Herman

>Priority: Major

> Attachments: flink_jstack.log, flink_mixed_jstack.log

>

>

> Hi Flink team!

> We've attempted to upgrade our flink 1.9 cluster to 1.10, but are

> experiencing reproducible instability on shutdown. Specifically, it appears

> that the `kill` issued in the `stop` case of flink-daemon.sh is causing the

> task executor process to hang permanently. Specifically, the process seems to

> be hanging in the

> `org.apache.flink.runtime.util.JvmShutdownSafeguard$DelayedTerminator.run` in

> a `Thread.sleep()` call. I think this is a bizarre behavior. Also note that

> every thread in the process is BLOCKED on a `pthread_cond_wait` call. Is

> this an OS level issue? Banging my head on a wall here. See attached stack

> traces for details.


[GitHub] [flink] StephanEwen commented on pull request #12035: [FLINK-17569][FileSystemsems]support ViewFileSystem when wait lease revoke of hadoop filesystem

StephanEwen commented on pull request #12035:
URL: https://github.com/apache/flink/pull/12035#issuecomment-633078631

Thank you for looking into the test.

Unfortunately, this PR now contains a very large number of unrelated code format changes, and we cannot review or merge it like that [1]. These re-formattings hide the really important changes, obscure the root causes of line changes in the git history, etc.

Please make sure your IDE does not auto-format classes on save. The PR must include only the relevant changes, not unrelated additional changes.

[1] https://flink.apache.org/contributing/contribute-code.html

[jira] [Closed] (FLINK-17854) Use InputStatus directly in user-facing async input APIs (like source readers)

[

https://issues.apache.org/jira/browse/FLINK-17854?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen closed FLINK-17854.

> Use InputStatus directly in user-facing async input APIs (like source readers)

> --

>

> Key: FLINK-17854

> URL: https://issues.apache.org/jira/browse/FLINK-17854

> Project: Flink

> Issue Type: Improvement

> Components: API / Core

>Reporter: Stephan Ewen

>Assignee: Stephan Ewen

>Priority: Major

> Labels: pull-request-available

> Fix For: 1.11.0

>

>

> The flink-runtime uses the {{InputStatus}} enum in its

> {{PushingAsyncDataInput}}.

> The flink-core {{SourceReader}} has a separate enum with the same purpose.

> In the {{SourceOperator}} we need to bridge between these two, which is

> clumsy and a bit inefficient.

> We can simply make {{InputStatus}} part of {{flink-core}} I/O packages and

> use it in the {{SourceReader}}, to avoid having to bridge it and the runtime

> part.
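The bridging that this issue removes can be pictured as follows. Both enums and the {{StatusBridge}} class are hypothetical stand-ins written for this sketch; the constant names are assumptions and only meant to show two enums with the same purpose.

```java
/** Hypothetical stand-in for the status enum used by the runtime. */
enum RuntimeStatus { MORE_AVAILABLE, NOTHING_AVAILABLE, END_OF_INPUT }

/** Hypothetical stand-in for the separate enum the reader API used to return. */
enum ReaderStatus { AVAILABLE_NOW, AVAILABLE_LATER, FINISHED }

final class StatusBridge {
    /** Before: the operator has to translate the status on every poll. */
    static RuntimeStatus bridge(ReaderStatus status) {
        switch (status) {
            case AVAILABLE_NOW:
                return RuntimeStatus.MORE_AVAILABLE;
            case AVAILABLE_LATER:
                return RuntimeStatus.NOTHING_AVAILABLE;
            case FINISHED:
                return RuntimeStatus.END_OF_INPUT;
            default:
                throw new IllegalArgumentException("Unknown status: " + status);
        }
    }

    /** After: the reader returns the shared enum and the operator passes it through. */
    static RuntimeStatus passThrough(RuntimeStatus status) {
        return status;
    }
}
```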


[jira] [Resolved] (FLINK-17854) Use InputStatus directly in user-facing async input APIs (like source readers)

[

https://issues.apache.org/jira/browse/FLINK-17854?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Stephan Ewen resolved FLINK-17854.

--

Resolution: Fixed

Fixed in 1.11.0 via

- 17d3f05ff5d2c286610386e751cafaf4837b2151

- a0abaafd8bd066ecaf0040cc0b021e1821156dae

Fixed in 1.12.0 (master) via

- d2559d14ac8f814219a3e4c5e81b77c36b610688

- d0f9892c58fdb819dc66737b49d7a1f04dde2036


[GitHub] [flink] StephanEwen commented on pull request #12271: [FLINK-17854][core] Let SourceReader use InputStatus directly

StephanEwen commented on pull request #12271:
URL: https://github.com/apache/flink/pull/12271#issuecomment-633076719

Manually merged in d0f9892c58fdb819dc66737b49d7a1f04dde2036

[GitHub] [flink] StephanEwen closed pull request #12271: [FLINK-17854][core] Let SourceReader use InputStatus directly

StephanEwen closed pull request #12271:
URL: https://github.com/apache/flink/pull/12271

[GitHub] [flink] StephanEwen commented on pull request #11196: [FLINK-16246][connector kinesis] Exclude AWS SDK MBean registry from Kinesis build

StephanEwen commented on pull request #11196:
URL: https://github.com/apache/flink/pull/11196#issuecomment-633076862

Closing this in favor of an approach based on a cleanup hook.

[GitHub] [flink] StephanEwen closed pull request #11196: [FLINK-16246][connector kinesis] Exclude AWS SDK MBean registry from Kinesis build

StephanEwen closed pull request #11196:
URL: https://github.com/apache/flink/pull/11196

[GitHub] [flink] StephanEwen commented on pull request #12271: [FLINK-17854][core] Let SourceReader use InputStatus directly

StephanEwen commented on pull request #12271:
URL: https://github.com/apache/flink/pull/12271#issuecomment-633076233

Thank you for reviewing this. Merging...

[jira] [Commented] (FLINK-16468) BlobClient rapid retrieval retries on failure opens too many sockets

[

https://issues.apache.org/jira/browse/FLINK-16468?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114792#comment-17114792

]

Gary Yao commented on FLINK-16468:

--

[~longtimer] No problem, take care!

> BlobClient rapid retrieval retries on failure opens too many sockets

>

>

> Key: FLINK-16468

> URL: https://issues.apache.org/jira/browse/FLINK-16468

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.8.3, 1.9.2, 1.10.0

> Environment: Linux ubuntu servers running, patch current latest

> Ubuntu patch current release java 8 JRE

>Reporter: Jason Kania

>Priority: Major

> Fix For: 1.11.0

>

>

> In situations where the BlobClient retrieval fails as in the following log,

> rapid retries will exhaust the open sockets. All the retries happen within a

> few milliseconds.

> {noformat}

> 2020-03-06 17:19:07,116 ERROR org.apache.flink.runtime.blob.BlobClient -

> Failed to fetch BLOB

> cddd17ef76291dd60eee9fd36085647a/p-bcd61652baba25d6863cf17843a2ef64f4c801d5-c1781532477cf65ff1c1e7d72dccabc7

> from aaa-1/10.0.1.1:45145 and store it under

> /tmp/blobStore-7328ed37-8bc7-4af7-a56c-474e264157c9/incoming/temp-0004

> Retrying...

> {noformat}

> The above is output repeatedly until the following error occurs:

> {noformat}

> java.io.IOException: Could not connect to BlobServer at address

> aaa-1/10.0.1.1:45145

> at org.apache.flink.runtime.blob.BlobClient.<init>(BlobClient.java:100)

> at

> org.apache.flink.runtime.blob.BlobClient.downloadFromBlobServer(BlobClient.java:143)

> at

> org.apache.flink.runtime.blob.AbstractBlobCache.getFileInternal(AbstractBlobCache.java:181)

> at

> org.apache.flink.runtime.blob.PermanentBlobCache.getFile(PermanentBlobCache.java:202)

> at

> org.apache.flink.runtime.execution.librarycache.BlobLibraryCacheManager.registerTask(BlobLibraryCacheManager.java:120)

> at

> org.apache.flink.runtime.taskmanager.Task.createUserCodeClassloader(Task.java:915)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:595)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:530)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.net.SocketException: Too many open files

> at java.net.Socket.createImpl(Socket.java:478)

> at java.net.Socket.connect(Socket.java:605)

> at org.apache.flink.runtime.blob.BlobClient.<init>(BlobClient.java:95)

> ... 8 more

> {noformat}

> The retries should have some form of backoff in this situation to avoid

> flooding the logs and exhausting other resources on the server.
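The backoff asked for in the description could be sketched as below. This is a generic illustration, not the BlobClient code and not necessarily how the issue was eventually fixed: {{BackoffRetry}}, {{delayMillis}} and {{retry}} are hypothetical names, and exponential backoff with a cap is just one reasonable choice.

```java
import java.util.concurrent.Callable;

final class BackoffRetry {
    /** Delay before retrying after the given (0-based) failed attempt: base * 2^attempt, capped. */
    static long delayMillis(int attempt, long baseMillis, long maxMillis) {
        long delay = baseMillis;
        for (int i = 0; i < attempt && delay < maxMillis; i++) {
            // Double the delay, but jump straight to the cap instead of overshooting it.
            delay = (delay > maxMillis / 2) ? maxMillis : delay * 2;
        }
        return Math.min(delay, maxMillis);
    }

    /** Runs the action up to maxAttempts times, sleeping with growing delays between failures. */
    static <T> T retry(Callable<T> action, int maxAttempts, long baseMillis, long maxMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Exception last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return action.call();
            } catch (Exception e) {
                last = e;
                if (attempt + 1 < maxAttempts) {
                    Thread.sleep(delayMillis(attempt, baseMillis, maxMillis));
                }
            }
        }
        throw last;
    }
}
```

With a base of 100 ms and a cap of 1 s, the waits between attempts would be 100, 200, 400, 800 and then 1000 ms, so a handful of retries can no longer happen within a few milliseconds.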


[GitHub] [flink] flinkbot edited a comment on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

flinkbot edited a comment on pull request #12301:
URL: https://github.com/apache/flink/pull/12301#issuecomment-633042244

## CI report:

* a3b8f38cc685f05413dde8016aa1714ac1742e20 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2069)

[GitHub] [flink] flinkbot commented on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

flinkbot commented on pull request #12301:
URL: https://github.com/apache/flink/pull/12301#issuecomment-633042244

## CI report:

* a3b8f38cc685f05413dde8016aa1714ac1742e20 UNKNOWN

[jira] [Commented] (FLINK-16572) CheckPubSubEmulatorTest is flaky on Azure

[ https://issues.apache.org/jira/browse/FLINK-16572?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114716#comment-17114716 ]

Richard Deurwaarder commented on FLINK-16572:

Apologies for the delay [~rmetzger], I had some struggles getting Flink to build locally for some reason, which doesn't help in quickly adding some changes. :P

I've added a retry in the test to see whether it finds the missing message after 60s: [https://github.com/apache/flink/pull/12301]

This is not something we should want in permanent testing code, but it should give some information on whether the publishing part or the pulling part is going wrong.

> CheckPubSubEmulatorTest is flaky on Azure
>
> Key: FLINK-16572
> URL: https://issues.apache.org/jira/browse/FLINK-16572
> Project: Flink
> Issue Type: Bug
> Components: Connectors / Google Cloud PubSub, Tests
> Affects Versions: 1.11.0
> Reporter: Aljoscha Krettek
> Assignee: Richard Deurwaarder
> Priority: Critical
> Labels: pull-request-available, test-stability
> Fix For: 1.11.0
> Time Spent: 10m
> Remaining Estimate: 0h
>
> Log:
> https://dev.azure.com/aljoschakrettek/Flink/_build/results?buildId=56=logs=1f3ed471-1849-5d3c-a34c-19792af4ad16=ce095137-3e3b-5f73-4b79-c42d3d5f8283=7842
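The kind of retry described in the comment (keep checking for the missing message until a timeout expires) could be sketched as follows. This is not the code from the pull request; {{AwaitCondition}} and {{await}} are hypothetical names, and the condition is left abstract on purpose.

```java
import java.util.function.BooleanSupplier;

final class AwaitCondition {
    /** Polls the condition until it holds or the timeout elapses; returns whether it held. */
    static boolean await(BooleanSupplier condition, long timeoutMillis, long pollIntervalMillis) {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(pollIntervalMillis);
            } catch (InterruptedException e) {
                // Preserve the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }
}
```

In a test, the condition would be something like "the expected message has been pulled", with a 60 000 ms timeout; whether it succeeds late or never hints at which side (publishing or pulling) is at fault.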

[GitHub] [flink] flinkbot commented on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

flinkbot commented on pull request #12301:
URL: https://github.com/apache/flink/pull/12301#issuecomment-633035319

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit a3b8f38cc685f05413dde8016aa1714ac1742e20 (Sat May 23 11:49:20 UTC 2020)

**Warnings:**

* No documentation files were touched! Remember to keep the Flink docs up to date!

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into to Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] Xeli closed pull request #12300: [FLINK-16572] CheckPubSubEmulatorTest is flaky on Azure

Xeli closed pull request #12300:
URL: https://github.com/apache/flink/pull/12300

[GitHub] [flink] Xeli opened a new pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

Xeli opened a new pull request #12301:

URL: https://github.com/apache/flink/pull/12301

This MR adds some extra logging to the pubsub e2e test. Because the failure is quite hard to reproduce locally, we want to see what these extra logs tell us when it naturally happens again on Azure.

[GitHub] [flink] flinkbot commented on pull request #12300: [FLINK-16572] CheckPubSubEmulatorTest is flaky on Azure

flinkbot commented on pull request #12300:

URL: https://github.com/apache/flink/pull/12300#issuecomment-633032908

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit 9649219b364af728bbefcc59a5aa477b23511b21 (Sat May 23 11:36:10 UTC 2020)

**Warnings:**

* No documentation files were touched! Remember to keep the Flink docs up to date!

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] Xeli opened a new pull request #12300: [FLINK-16572] CheckPubSubEmulatorTest is flaky on Azure

Xeli opened a new pull request #12300:

URL: https://github.com/apache/flink/pull/12300

This MR adds some extra logging to the pubsub e2e test. Because the failure is quite hard to reproduce locally, we want to see what these extra logs tell us when it naturally happens again on Azure.

[jira] [Closed] (FLINK-17303) Return TableResult for Python TableEnvironment

[ https://issues.apache.org/jira/browse/FLINK-17303?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Hequn Cheng closed FLINK-17303.

---

Resolution: Resolved

> Return TableResult for Python TableEnvironment

> --

>

> Key: FLINK-17303

> URL: https://issues.apache.org/jira/browse/FLINK-17303

> Project: Flink

> Issue Type: Improvement

> Components: API / Python

> Reporter: godfrey he

> Assignee: Nicholas Jiang

> Priority: Major

> Labels: pull-request-available

> Fix For: 1.12.0

>

>

> [FLINK-16366|https://issues.apache.org/jira/browse/FLINK-16366] supports

> executing a statement and returning a {{TableResult}} object, which can get the

> {{JobClient}} (to associate it with the submitted Flink job), collect the execution

> result, or print the execution result. In Python, we should also introduce a

> Python TableResult class to stay consistent with Java.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (FLINK-17303) Return TableResult for Python TableEnvironment

[ https://issues.apache.org/jira/browse/FLINK-17303?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114639#comment-17114639 ]

Hequn Cheng commented on FLINK-17303:

-

Resolved in 1.12.0 via 6186069e22dd60757942968a41322f55bce4cbfb

> Return TableResult for Python TableEnvironment

> --

>

> Key: FLINK-17303

> URL: https://issues.apache.org/jira/browse/FLINK-17303

> Project: Flink

> Issue Type: Improvement

> Components: API / Python

> Reporter: godfrey he

> Assignee: Nicholas Jiang

> Priority: Major

> Labels: pull-request-available

> Fix For: 1.12.0

>

>

> [FLINK-16366|https://issues.apache.org/jira/browse/FLINK-16366] supports

> executing a statement and returning a {{TableResult}} object, which can get the

> {{JobClient}} (to associate it with the submitted Flink job), collect the execution

> result, or print the execution result. In Python, we should also introduce a

> Python TableResult class to stay consistent with Java.


[GitHub] [flink] hequn8128 closed pull request #12246: [FLINK-17303][python] Return TableResult for Python TableEnvironment

hequn8128 closed pull request #12246:

URL: https://github.com/apache/flink/pull/12246

[jira] [Commented] (FLINK-17891) FlinkYarnSessionCli sets wrong execution.target type

[ https://issues.apache.org/jira/browse/FLINK-17891?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17114622#comment-17114622 ]

Shangwen Tang commented on FLINK-17891:

---

Hi [~lzljs3620320] [~jark], can you assign this task to me? I'll try to fix it. Thanks~

> FlinkYarnSessionCli sets wrong execution.target type

> -

>

> Key: FLINK-17891

> URL: https://issues.apache.org/jira/browse/FLINK-17891

> Project: Flink

> Issue Type: Bug

> Components: Deployment / YARN

> Affects Versions: 1.11.0

> Reporter: Shangwen Tang

> Priority: Major

> Attachments: image-2020-05-23-00-59-32-702.png, image-2020-05-23-01-00-19-549.png

>

>

> I submitted a Flink session job to the local YARN cluster and found that

> *execution.target* has the wrong type; it should be of the yarn-session type.

> !image-2020-05-23-00-59-32-702.png|width=545,height=75!

> !image-2020-05-23-01-00-19-549.png|width=544,height=94!

[GitHub] [flink] KurtYoung commented on a change in pull request #12283: [FLINK-16975][documentation] Add docs for FileSystem connector

KurtYoung commented on a change in pull request #12283:

URL: https://github.com/apache/flink/pull/12283#discussion_r429526249

##

File path: docs/dev/table/connectors/filesystem.md

##

@@ -0,0 +1,352 @@

+---

+title: "Hadoop FileSystem Connector"

+nav-title: Hadoop FileSystem

+nav-parent_id: connectors

+nav-pos: 5

+---

+

+

+* This will be replaced by the TOC

+{:toc}

+

+This connector provides access to partitioned files in filesystems

+supported by the [Flink FileSystem abstraction]({{ site.baseurl }}/ops/filesystems/index.html).

+

+The file system connector itself is included in Flink and does not require an additional dependency.

+A corresponding format needs to be specified for reading and writing rows from and to a file system.

+

+The file system connector allows for reading and writing from a local or distributed filesystem. A filesystem can be defined as:

+

+

+

+{% highlight sql %}

+CREATE TABLE MyUserTable (

+ column_name1 INT,

+ column_name2 STRING,

+ ...

+ part_name1 INT,

+ part_name2 STRING

+) PARTITIONED BY (part_name1, part_name2) WITH (

+ 'connector' = 'filesystem', -- required: specify the connector type

+ 'path' = 'file:///path/to/whatever', -- required: path to a directory

+ 'format' = '...', -- required: the file system connector requires a format to be specified,

+-- Please refer to the Table Formats

+-- section for more details.

+ 'partition.default-name' = '...', -- optional: default partition name in case the dynamic partition

+-- column value is null/empty string.

+

+ -- optional: whether to shuffle data by dynamic partition fields in the sink phase; this can greatly

+ -- reduce the number of files for the filesystem sink but may lead to data skew. Disabled by default.

+ 'sink.shuffle-by-partition.enable' = '...',

+ ...

+)

+{% endhighlight %}

+

+

+

+Attention Make sure to include [Flink File System specific dependencies]({{ site.baseurl }}/internals/filesystems.html).

+

+Attention File system sources for streaming are only experimental. In the future, we will support actual streaming use cases, i.e., partition and directory monitoring.

+

+## Partition files

+