Repository: spark
Updated Branches:
  refs/heads/branch-2.4 c9bb83a7d -> 2c700ee30

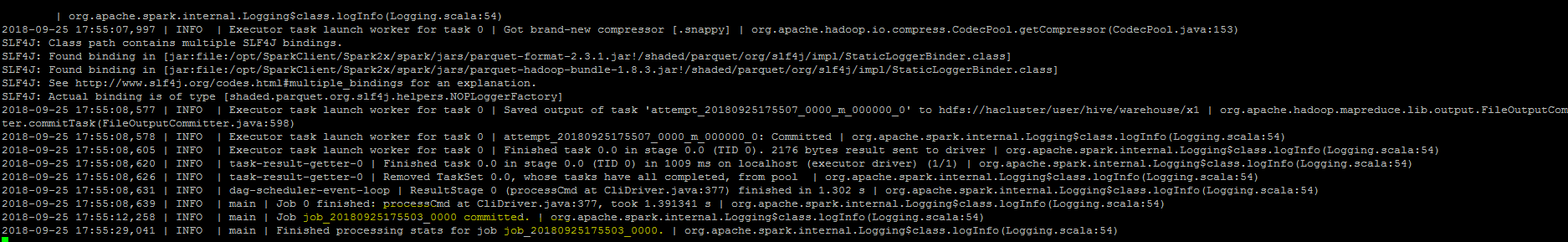

[SPARK-25521][SQL] Job id showing null in the logs when insert into command Job is finished.

## What changes were proposed in this pull request?
``As part of the insert command in FileFormatWriter, a job context is created for handling the write operation. While initializing the job context using the setupJob() API in HadoopMapReduceCommitProtocol, we set the job ID in the JobContext configuration. In FileFormatWriter, since we get the job ID directly from the MapReduce JobContext, the job ID comes out as null when it is logged. As a solution, we get the job ID from the configuration of the MapReduce JobContext.``

## How was this patch tested?
Manually; verified the logs after the changes.

Closes #22572 from sujith71955/master_log_issue.

Authored-by: s71955 <sujithchacko.2...@gmail.com>
Signed-off-by: Wenchen Fan <wenc...@databricks.com>
(cherry picked from commit 459700727fadf3f35a211eab2ffc8d68a4a1c39a)
Signed-off-by: Wenchen Fan <wenc...@databricks.com>

Project: http://git-wip-us.apache.org/repos/asf/spark/repo
Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/2c700ee3
Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/2c700ee3
Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/2c700ee3

Branch: refs/heads/branch-2.4
Commit: 2c700ee30d7fe7c7fdc7dbfe697ef5f41bd17215
Parents: c9bb83a
Author: s71955 <sujithchacko.2...@gmail.com>
Authored: Fri Oct 5 13:09:16 2018 +0800
Committer: Wenchen Fan <wenc...@databricks.com>
Committed: Fri Oct 5 16:51:59 2018 +0800

----------------------------------------------------------------------
 .../spark/sql/execution/datasources/FileFormatWriter.scala | 6 +++---
 1 file changed, 3 insertions(+), 3 deletions(-)
----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/spark/blob/2c700ee3/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala
----------------------------------------------------------------------
diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala
index 7c6ab4b..774fe38 100644
--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala
+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala
@@ -183,15 +183,15 @@ object FileFormatWriter extends Logging {
       val commitMsgs = ret.map(_.commitMsg)
 
       committer.commitJob(job, commitMsgs)
-      logInfo(s"Job ${job.getJobID} committed.")
+      logInfo(s"Write Job ${description.uuid} committed.")
 
       processStats(description.statsTrackers, ret.map(_.summary.stats))
-      logInfo(s"Finished processing stats for job ${job.getJobID}.")
+      logInfo(s"Finished processing stats for write job ${description.uuid}.")
 
       // return a set of all the partition paths that were updated during this job
       ret.map(_.summary.updatedPartitions).reduceOption(_ ++ _).getOrElse(Set.empty)
     } catch { case cause: Throwable =>
-      logError(s"Aborting job ${job.getJobID}.", cause)
+      logError(s"Aborting job ${description.uuid}.", cause)
       committer.abortJob(job)
       throw new SparkException("Job aborted.", cause)
     }

---------------------------------------------------------------------
To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org
For additional commands, e-mail: commits-h...@spark.apache.org
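
The logging problem described in the commit message can be illustrated with a small standalone Scala sketch. It does not use Hadoop or Spark: `SketchJobContext`, `SketchWriteDescription`, the `sketch.job.id` key and the sample job ID are hypothetical stand-ins, introduced only to show why interpolating an unset job ID prints `null`, and how reading the ID from the configuration or logging a description UUID (as the diff does) avoids it.

```scala
import java.util.UUID
import scala.collection.mutable

// Hypothetical stand-in, NOT the Hadoop JobContext: the job-ID field is
// never set, while the configuration map receives the ID during setup.
final class SketchJobContext {
  val conf: mutable.Map[String, String] = mutable.Map.empty
  def getJobID: String = null // stays unset in this sketch
}

// Hypothetical stand-in for a write-job description that carries a UUID.
final case class SketchWriteDescription(uuid: String)

object JobIdLoggingSketch {
  // Assumed configuration key, chosen for illustration only.
  val JobIdKey = "sketch.job.id"

  // Plays the role of job setup: records the ID in the configuration.
  def setupJob(ctx: SketchJobContext, id: String): Unit =
    ctx.conf(JobIdKey) = id

  // Old-style message: interpolates the unset field, so it prints "null".
  def oldMessage(ctx: SketchJobContext): String =
    s"Job ${ctx.getJobID} committed."

  // Approach named in the commit message: read the ID from the configuration.
  def fromConf(ctx: SketchJobContext): String =
    s"Job ${ctx.conf.getOrElse(JobIdKey, "unknown")} committed."

  // Approach shown in the diff: log the description's UUID instead.
  def newMessage(description: SketchWriteDescription): String =
    s"Write Job ${description.uuid} committed."

  def main(args: Array[String]): Unit = {
    val ctx = new SketchJobContext
    setupJob(ctx, "job_20181005_0001") // made-up sample ID
    val description = SketchWriteDescription(UUID.randomUUID().toString)
    println(oldMessage(ctx))
    println(fromConf(ctx))
    println(newMessage(description))
  }
}
```

In this sketch the UUID is fixed when the description is created, so the log message does not depend on any state populated later by job setup.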