juraa14 opened a new issue, #27012: URL: https://github.com/apache/airflow/issues/27012

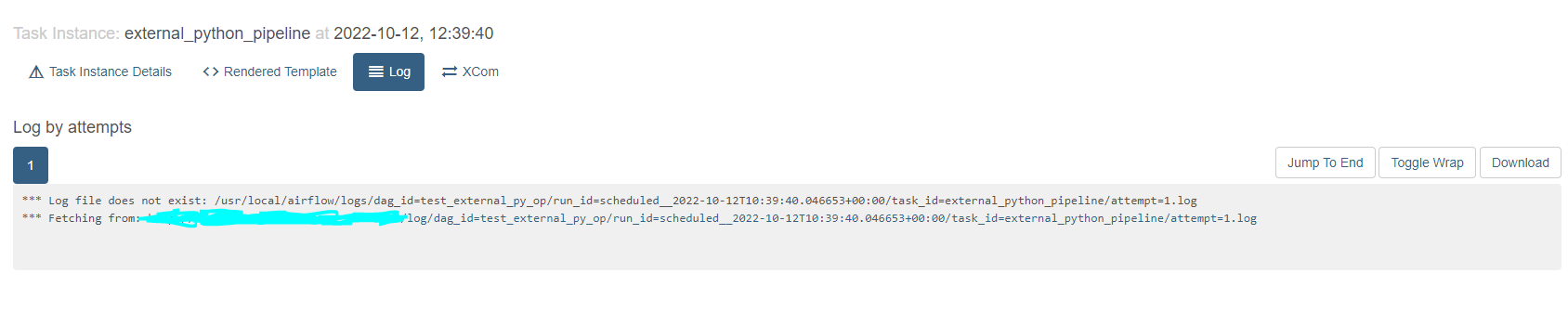

### Apache Airflow version 2.4.1 ### What happened When using the external python operator for running tasks inside a different environment, logs do not appear for the task instance. The log files are always empty if the task is successful. If the task fails, one gets very rudimentary logs about the process command failing. Both environments use the same Python version (3.9.10) and Airflow uses Celery executor ### What you think should happen instead The External Operator is great for cases where you might have multiple environments that require other packages and versions, so they don't mix up with the airflow environment and so other teams could potentially use their own environments inside DAGs. Unfortunately, the External Python Operator does not provide any meaningful logs when the task fails or succeeds. An example of a successful task without logs:  In case a task fails, the following log will appear (regardless of the actual error): ```[2022-10-12, 11:23:05 CEST] {taskinstance.py:1851} ERROR - Task failed with exception Traceback (most recent call last): File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/decorators/base.py", line 188, in execute return_value = super().execute(context) File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/operators/python.py", line 370, in execute return super().execute(context=serializable_context) File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/operators/python.py", line 175, in execute return_value = self.execute_callable() File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/operators/python.py", line 678, in execute_callable return self._execute_python_callable_in_subprocess(python_path, tmp_path) File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/operators/python.py", line 426, in _execute_python_callable_in_subprocess execute_in_subprocess( File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/utils/process_utils.py", line 168, in 
execute_in_subprocess execute_in_subprocess_with_kwargs(cmd, cwd=cwd) File "/usr/local/airflow_env/lib/python3.9/site-packages/airflow/utils/process_utils.py", line 191, in execute_in_subprocess_with_kwargs raise subprocess.CalledProcessError(exit_code, cmd) subprocess.CalledProcessError: Command '['/usr/local/aiops_env/bin/python', '/tmp/tmdclodljc4/script.py', '/tmp/tmdclodljc4/script.in', '/tmp/tmdclodljc4/script.out', '/tmp/tmdclodljc4/string_args.txt']' returned non-zero exit status 1. [2022-10-12, 11:23:05 CEST] {standard_task_runner.py:102} ERROR - Failed to execute job 1976894 for task external_python_pipeline (Command '['/usr/local/aiops_env/bin/python', '/tmp/tmdclodljc4/script.py', '/tmp/tmdclodljc4/script.in', '/tmp/tmdclodljc4/script.out', '/tmp/tmdclodljc4/string_args.txt']' returned non-zero exit status 1.; 23728)``` ### How to reproduce Using celery executor, so the task is ran on another machine. Simply run a DAG that uses the External Python Operator. A simple hello world will reproduce the issue ### Operating System CentOS 7 ### Versions of Apache Airflow Providers apache-airflow-providers-celery==3.0.0 apache-airflow-providers-common-sql==1.2.0 apache-airflow-providers-ftp==3.1.0 apache-airflow-providers-http==4.0.0 apache-airflow-providers-imap==3.0.0 apache-airflow-providers-postgres==5.2.2 ### Deployment Virtualenv installation ### Deployment details _No response_ ### Anything else I have not yet gone into the details, but am willing to contribute! ### Are you willing to submit PR? - [X] Yes I am willing to submit a PR! ### Code of Conduct - [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: [email protected] For queries about this service, please contact Infrastructure at: [email protected]
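To illustrate why the failure log above names only the command and its exit status, here is a minimal standard-library sketch. It is not Airflow's code: `run_and_raise`, `run_and_collect`, and the throwaway `script.py` are hypothetical stand-ins, and the sketch assumes only what the traceback shows, namely that the error is raised as `subprocess.CalledProcessError(exit_code, cmd)`. An exception built that way carries no output from the child process, so the real error is visible only if the child's output lines are kept and logged separately.

```python
import os
import subprocess
import sys
import tempfile

# Hypothetical stand-in for the generated script.py: prints, then fails.
CHILD_SOURCE = "print('hello world')\nraise ValueError('the real error')\n"


def run_and_raise(cmd):
    """Raise with only the exit code and command, as in the traceback above."""
    proc = subprocess.run(
        cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
    )
    if proc.returncode != 0:
        # The child's output is not attached to the exception here.
        raise subprocess.CalledProcessError(proc.returncode, cmd)
    return proc.stdout


def run_and_collect(cmd):
    """Run the same command, but keep the merged stdout/stderr lines."""
    proc = subprocess.run(
        cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
    )
    return proc.returncode, proc.stdout.splitlines()


def demo():
    with tempfile.TemporaryDirectory() as tmp_dir:
        script_path = os.path.join(tmp_dir, "script.py")
        with open(script_path, "w") as handle:
            handle.write(CHILD_SOURCE)
        cmd = [sys.executable, script_path]

        try:
            run_and_raise(cmd)
            message = ""
        except subprocess.CalledProcessError as exc:
            message = str(exc)  # command and exit status only

        exit_code, lines = run_and_collect(cmd)
    return message, exit_code, lines


message, exit_code, lines = demo()
print("exception message:", message)
print("child exit code:", exit_code)
for line in lines:
    print("child output:", line)
```

The exception message matches the shape of the reported log, while the collected lines include both the `hello world` output and the child's own traceback. Whether the missing logs for successful tasks have the same cause is not something this sketch can confirm.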