yebai1105 opened a new issue #12693: URL: https://github.com/apache/pulsar/issues/12693

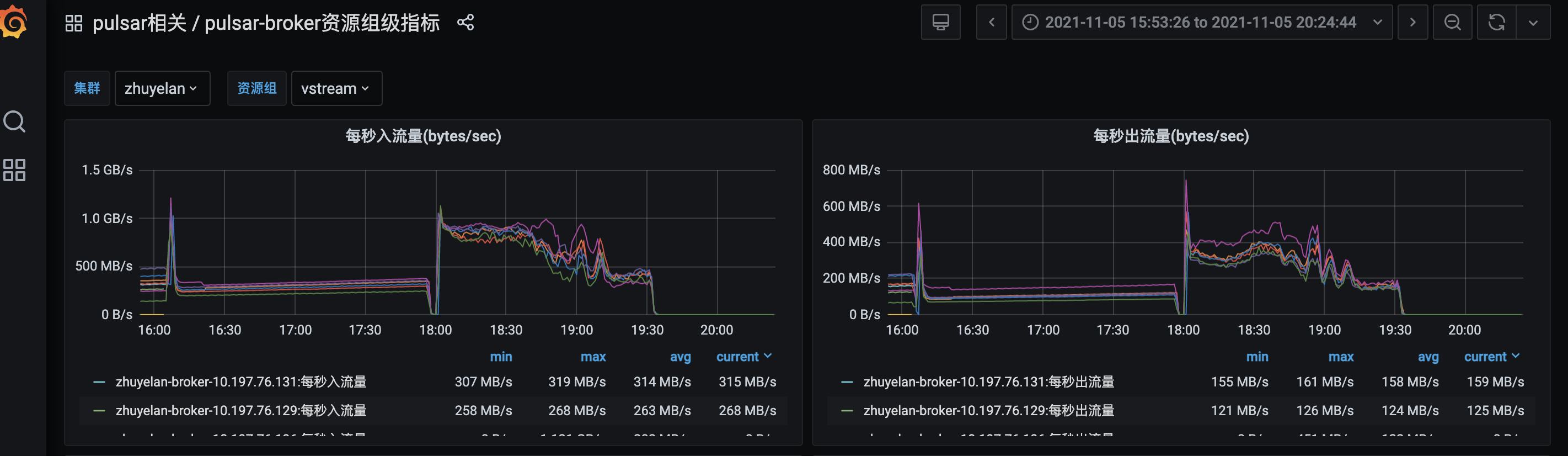

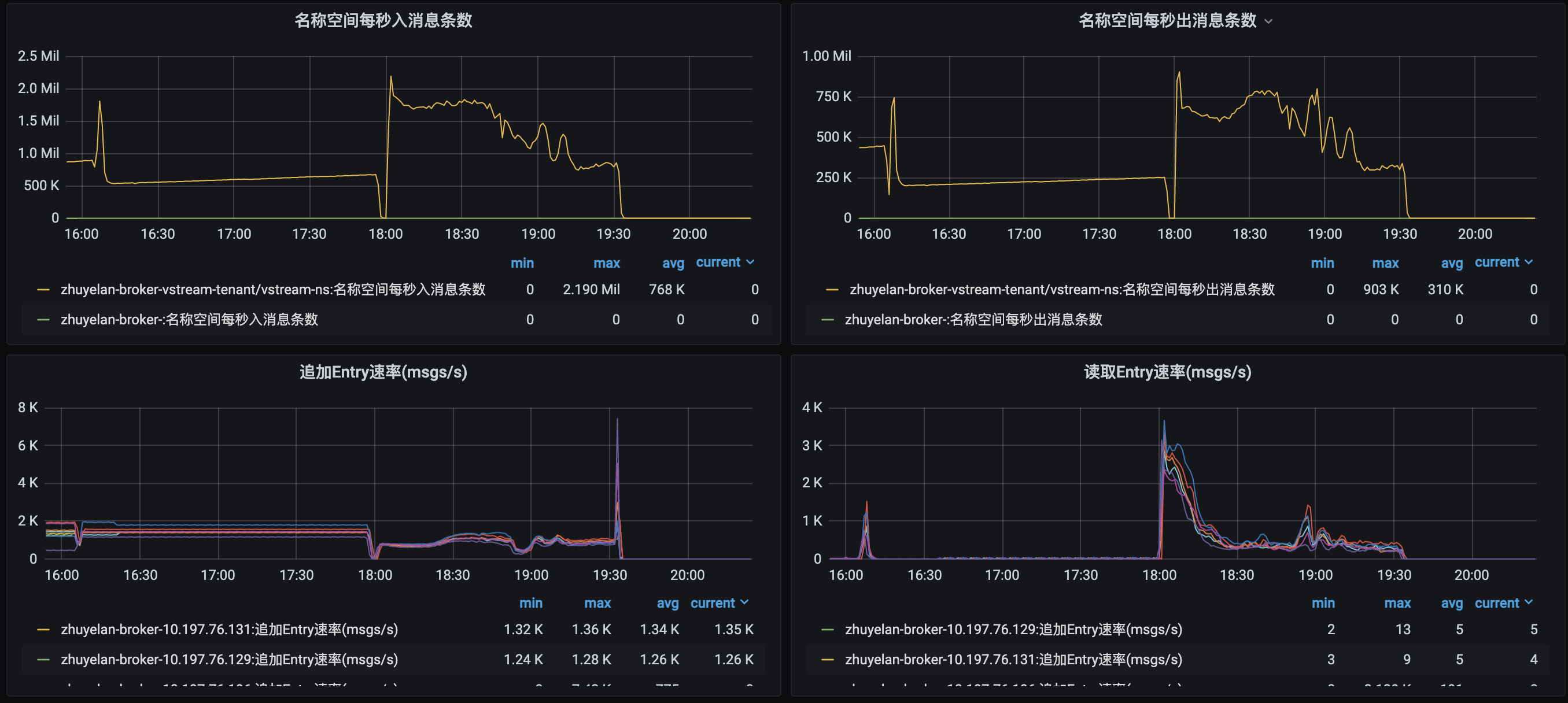

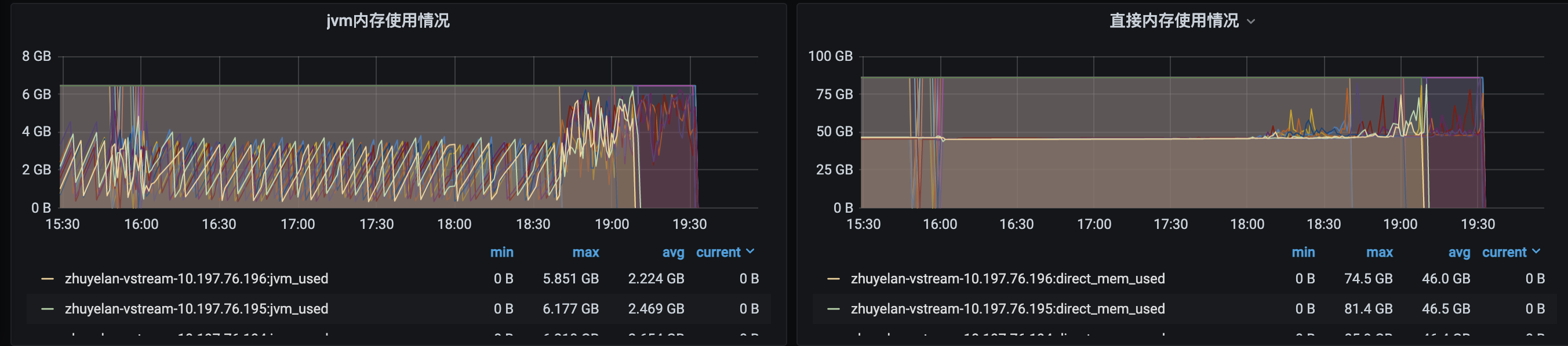

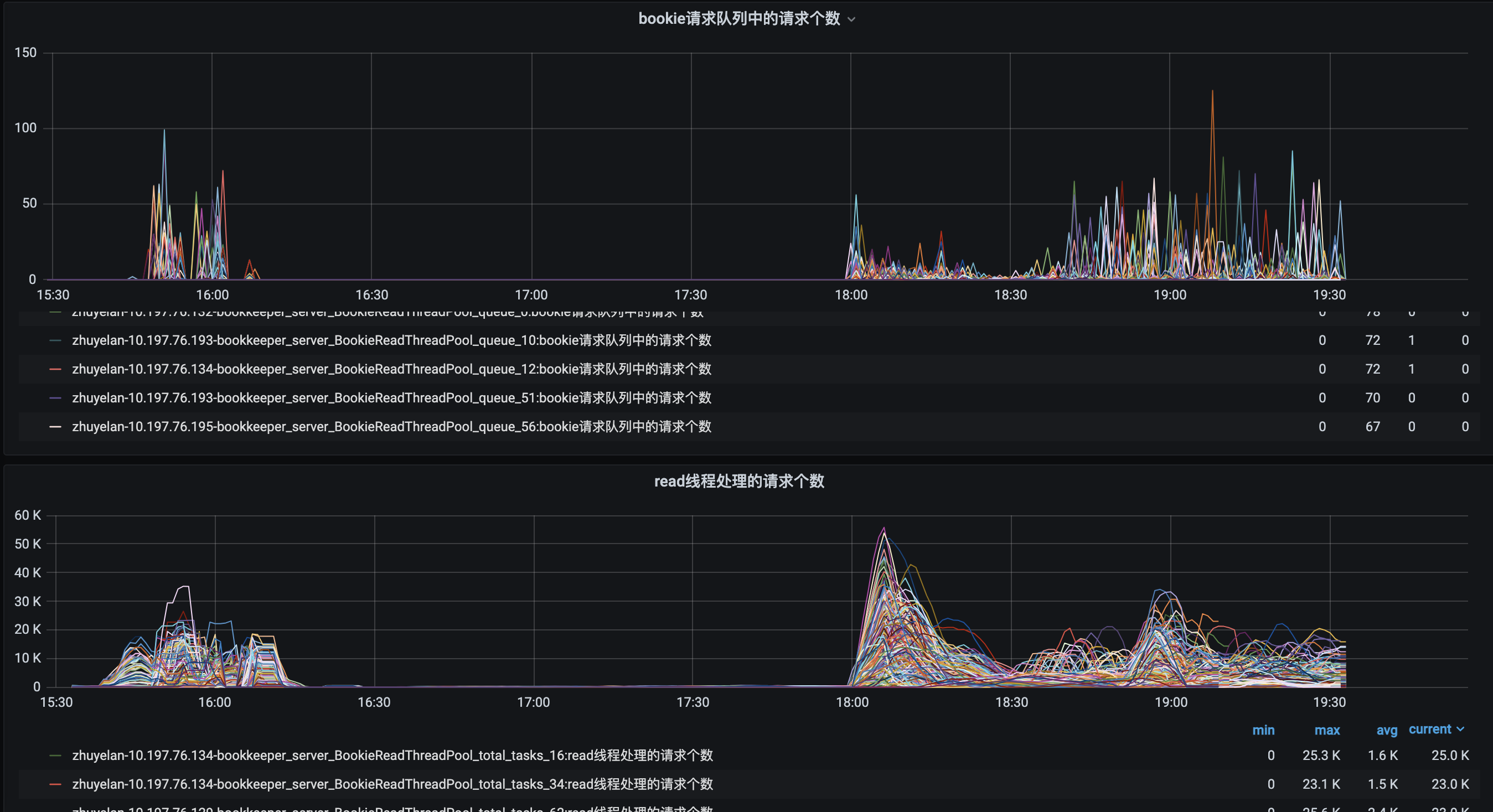

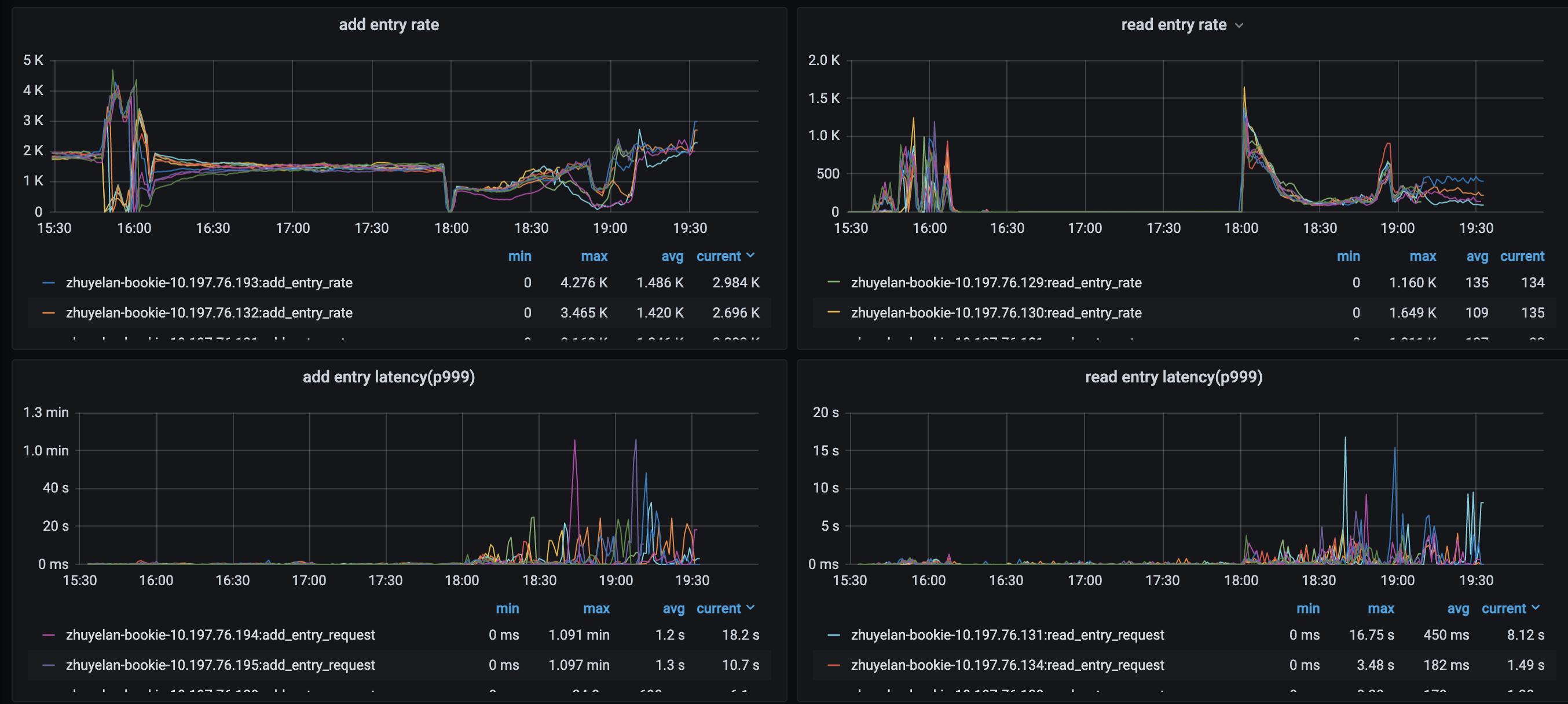

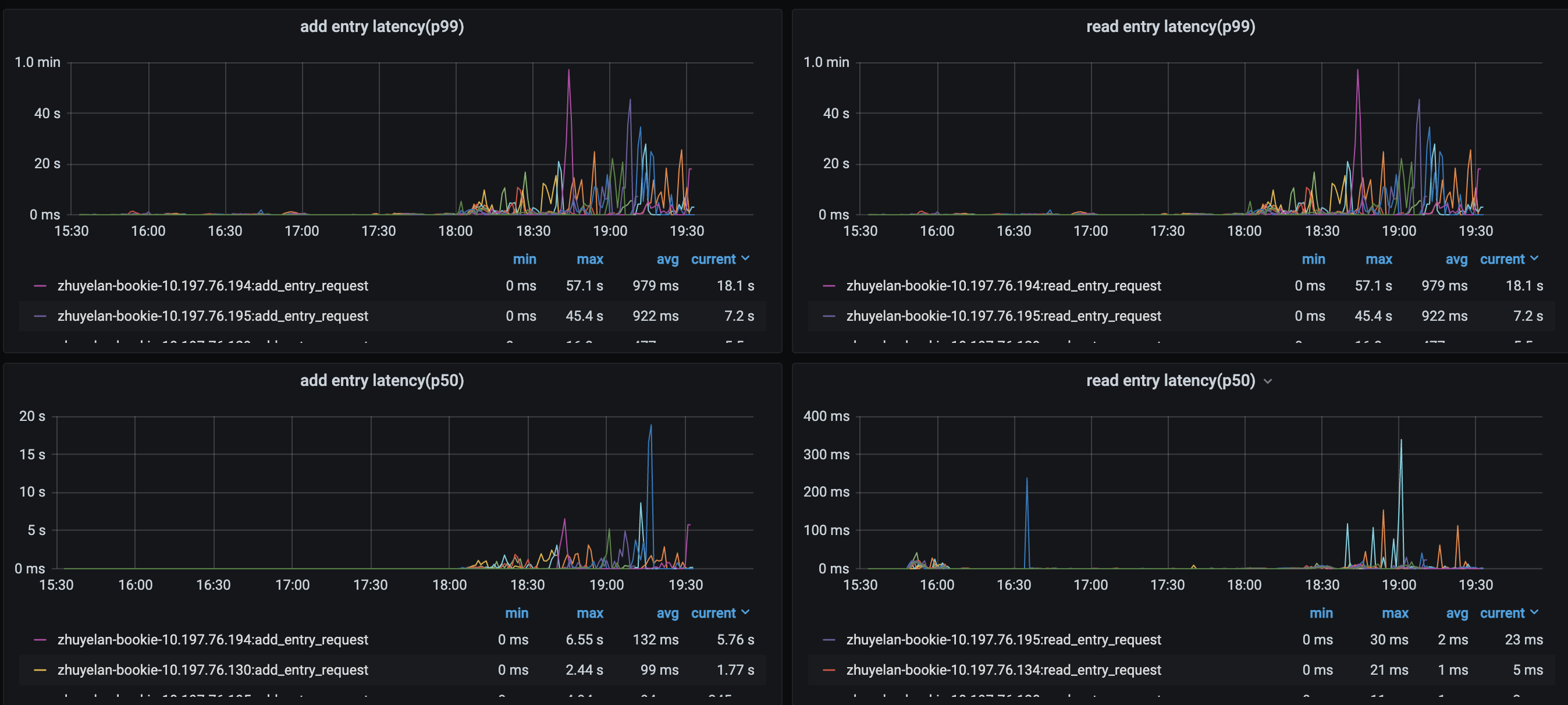

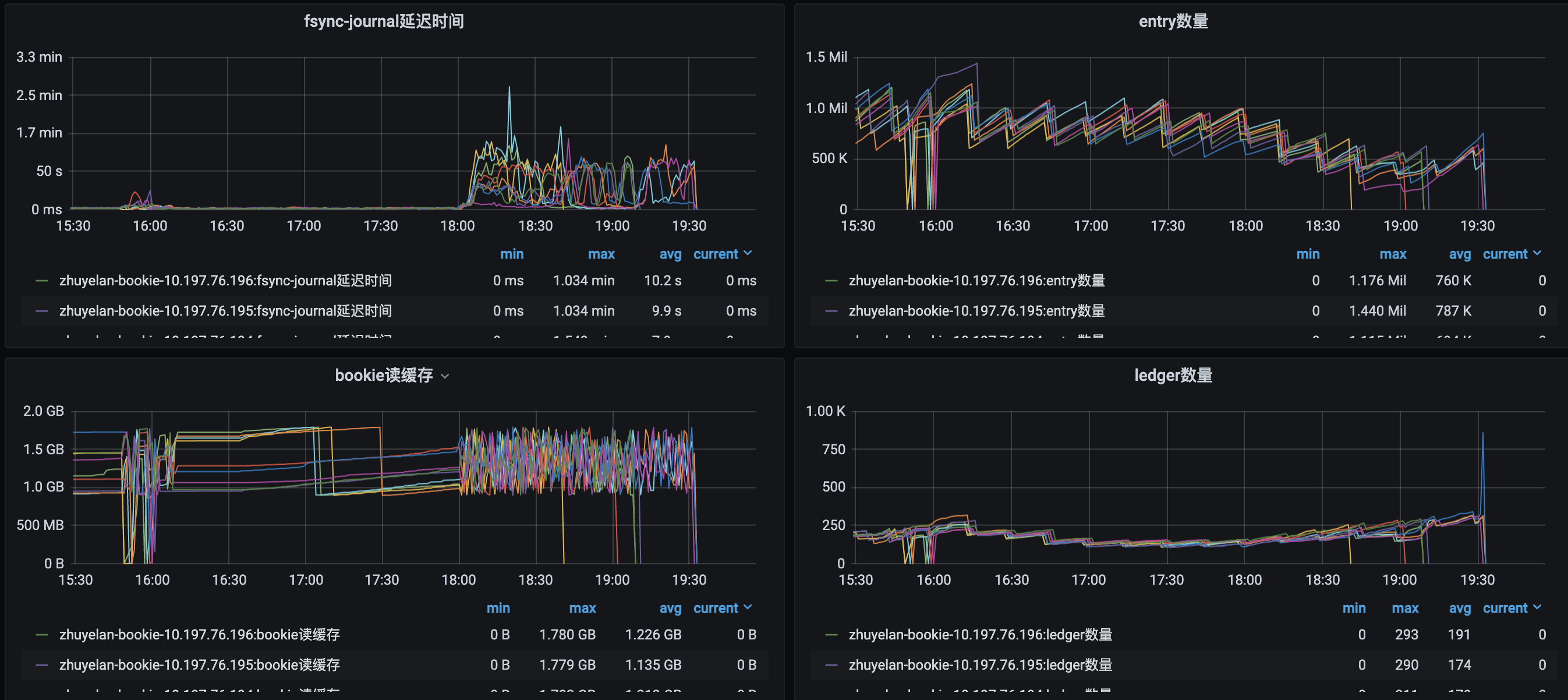

**1. Operation:** The production cluster has 9 broker machines. We ran kill -9 on the broker processes of three of them in sequence: 10.197.76.129, 10.197.76.130, and 10.197.76.131 (time of operation: 2021-11-05 16:05).

**2. Versions** Client: pulsar-flink-connector 1.13.1, Flink 1.13.2. Server: 2.8.1.

**3. Overall symptoms:** The Flink job restarted, and after the restart checkpoints could not be committed. Once the tolerated number of checkpoint failures was reached (10 for the Flink job in this test), the job did a global restart. After that restart, Flink began catching up on the backlog and traffic spiked (bookies from an average of 600M/s to a peak of 2.3G/s, brokers from an average of 300M/s to a peak of 1.1G/s). The bookie cluster (9 machines) then went down node by node with out-of-memory errors until every bookie was dead.

**4. Server-side analysis**

4.1 Broker monitoring: After 2021-11-05 16:05 the client job restarted and could no longer commit checkpoints. After roughly 110 minutes it reached the maximum tolerated number of checkpoint failures (the job's checkpoint timeout is 10 min), and at 17:56 the job did a global restart. After that restart the Flink job started catching up on its lag and traffic doubled (still within what the servers could absorb), which subsequently brought down all bookie nodes one after another.

4.2 Bookie monitoring: out-of-memory errors appear in the bookie logs:

`19:08:27.857 [BookKeeperClientWorker-OrderedExecutor-62-0] ERROR org.apache.bookkeeper.client.LedgerFragmentReplicator - BK error writing entry for ledgerId: 932862, entryId: 5330, bookie: 10.197.76.196:3181 org.apache.bookkeeper.client.BKException$BKTimeoutException: Bookie operation timeout at org.apache.bookkeeper.client.BKException.create(BKException.java:122) ~[org.apache.bookkeeper-bookkeeper-server-4.14.2.jar:4.14.2] at org.apache.bookkeeper.client.LedgerFragmentReplicator$2.writeComplete(LedgerFragmentReplicator.java:318) ~[org.apache.bookkeeper-bookkeeper-server-4.14.2.jar:4.14.2] at org.apache.bookkeeper.proto.PerChannelBookieClient$AddCompletion.writeComplete(PerChannelBookieClient.java:2151) ~[org.apache.bookkeeper-bookkeeper-server-4.14.2.jar:4.14.2] at org.apache.bookkeeper.proto.PerChannelBookieClient$AddCompletion.lambda$errorOut$0(PerChannelBookieClient.java:2174) ~[org.apache.bookkeeper-bookkeeper-server-4.14.2.jar:4.14.2] at org.apache.bookkeeper.proto.PerChannelBookieClient$CompletionValue$1.safeRun(PerChannelBookieClient.java:1663) [org.apache.bookkeeper-bookkeeper-server-4.14.2.jar:4.14.2] at org.apache.bookkeeper.common.util.SafeRunnable.run(SafeRunnable.java:36) [org.apache.bookkeeper-bookkeeper-common-4.14.2.jar:4.14.2] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_192] at 
java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_192] at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) [io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_192] 19:08:27.857 [BookKeeperClientWorker-OrderedExecutor-62-0] ERROR org.apache.bookkeeper.proto.BookkeeperInternalCallbacks - Error in multi callback : -23 19:08:27.862 [BookieWriteThreadPool-OrderedExecutor-38-0] INFO org.apache.bookkeeper.bookie.storage.ldb.SingleDirectoryDbLedgerStorage - Write cache is full, triggering flush 19:08:28.046 [bookie-io-1-41] ERROR org.apache.bookkeeper.proto.BookieRequestHandler - Unhandled exception occurred in I/O thread or handler on [id: 0x548699f5, L:/10.197.76.129:3181 - R:/10.197.76.193:39020] io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 85916123151, max: 85899345920) at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:802) ~[io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final] at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:731) ~[io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:632) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:607) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:202) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena.tcacheAllocateNormal(PoolArena.java:186) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena.allocate(PoolArena.java:136) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.PoolArena.reallocate(PoolArena.java:286) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at 
io.netty.buffer.PooledByteBuf.capacity(PooledByteBuf.java:118) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.AbstractByteBuf.ensureWritable0(AbstractByteBuf.java:305) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.AbstractByteBuf.ensureWritable(AbstractByteBuf.java:280) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1103) ~[io.netty-netty-buffer-4.1.66.Final.jar:4.1.66.Final] at io.netty.handler.codec.ByteToMessageDecoder$1.cumulate(ByteToMessageDecoder.java:99) ~[io.netty-netty-codec-4.1.66.Final.jar:4.1.66.Final] at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:274) ~[io.netty-netty-codec-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919) [io.netty-netty-transport-4.1.66.Final.jar:4.1.66.Final] at 
io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795) [io.netty-netty-transport-native-epoll-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.epoll.AbstractEpollChannel$AbstractEpollUnsafe$1.run(AbstractEpollChannel.java:425) [io.netty-netty-transport-native-epoll-4.1.66.Final.jar:4.1.66.Final] at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:164) [io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final] at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:469) [io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final] at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:384) [io.netty-netty-transport-native-epoll-4.1.66.Final.jar:4.1.66.Final] at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986) [io.netty-netty-common-4.1.66.Final.jar:4.1.66.Final]`

**5. Client-side analysis**

5.1 When the brokers started being killed, the job reported connection closed.

5.2 The job tried to reconnect to the killed nodes but could not establish a connection and kept reporting errors. (Some producers were closed after attempting to reconnect.)

5.3 After attempting to reconnect, some producers hit message send timeouts.

5.4 Producer zhuyelan-30-42884 established a connection to broker 10.197.76.194, but there are no subsequent message-send logs.

5.5 New producers were created:

`2021-11-05 16:06:19,022 INFO org.apache.flink.runtime.taskexecutor.slot.TaskSlotTableImpl [] - Activate slot 80cee93db1b07a700ec59fbede94ac0b. 2021-11-05 16:06:19,023 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Received task Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505), deploy into slot with allocation id 80cee93db1b07a700ec59fbede94ac0b. 2021-11-05 16:06:19,023 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505) switched from CREATED to DEPLOYING. 2021-11-05 16:06:19,023 INFO org.apache.flink.runtime.taskmanager.Task [] - Loading JAR files for task Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505) [DEPLOYING]. 
2021-11-05 16:06:19,024 WARN org.apache.flink.streaming.runtime.tasks.StreamTask [] - filesystem state backend has been deprecated. Please use 'hashmap' state backend instead. 2021-11-05 16:06:19,024 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - State backend is set to heap memory org.apache.flink.runtime.state.hashmap.HashMapStateBackend@19ce20a4 2021-11-05 16:06:19,024 WARN org.apache.flink.configuration.Configuration [] - Config uses deprecated configuration key 'state.backend.fs.memory-threshold' instead of proper key 'state.storage.fs.memory-threshold' 2021-11-05 16:06:19,024 INFO org.apache.flink.streaming.runtime.tasks.StreamTask [] - Checkpoint storage is set to 'filesystem': (checkpoints "hdfs://bj04-yinghua/flink/flink-checkpoints/216348862735") 2021-11-05 16:06:19,024 INFO org.apache.flink.runtime.taskmanager.Task [] - Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505) switched from DEPLOYING to INITIALIZING. 2021-11-05 16:06:19,026 WARN org.apache.flink.runtime.state.StateBackendLoader [] - filesystem state backend has been deprecated. Please use 'hashmap' state backend instead. 
2021-11-05 16:06:19,026 INFO org.apache.flink.runtime.state.StateBackendLoader [] - State backend is set to heap memory org.apache.flink.runtime.state.hashmap.HashMapStateBackend@112be991 2021-11-05 16:06:19,026 INFO org.apache.flink.streaming.api.functions.sink.TwoPhaseCommitSinkFunction [] - FlinkPulsarSink 3/120 - restoring state 2021-11-05 16:06:19,026 INFO org.apache.flink.streaming.api.functions.sink.TwoPhaseCommitSinkFunction [] - FlinkPulsarSink 3/120 committed recovered transaction TransactionHolder{handle=PulsarTransactionState this state is not in transactional mode, transactionStartTime=1636099392673} 2021-11-05 16:06:19,026 INFO org.apache.flink.streaming.api.functions.sink.TwoPhaseCommitSinkFunction [] - FlinkPulsarSink 3/120 aborted recovered transaction TransactionHolder{handle=PulsarTransactionState this state is not in transactional mode, transactionStartTime=1636099452669} 2021-11-05 16:06:19,026 INFO org.apache.flink.streaming.api.functions.sink.TwoPhaseCommitSinkFunction [] - FlinkPulsarSink 3/120 - no state to restore 2021-11-05 16:06:19,026 INFO org.apache.flink.streaming.connectors.pulsar.FlinkPulsarSinkBase [] - current initialize state :TransactionHolder{handle=PulsarTransactionState this state is not in transactional mode, transactionStartTime=1636099452669}-[TransactionHolder{handle=PulsarTransactionState this state is not in transactional mode, transactionStartTime=1636099392673}] 2021-11-05 16:06:19,080 WARN org.apache.flink.runtime.taskmanager.Task [] - Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505) switched from INITIALIZING to FAILED with failure cause: java.lang.RuntimeException: Failed to get schema information for persistent://vstream-tenant/vstream-ns/ng_onrt-stsdk.vivo.com.cn at org.apache.flink.streaming.connectors.pulsar.internal.SchemaUtils.uploadPulsarSchema(SchemaUtils.java:65) at 
org.apache.flink.streaming.connectors.pulsar.FlinkPulsarSinkBase.uploadSchema(FlinkPulsarSinkBase.java:322) at org.apache.flink.streaming.connectors.pulsar.FlinkPulsarSinkBase.open(FlinkPulsarSinkBase.java:287) at org.apache.flink.streaming.connectors.pulsar.FlinkPulsarSink.open(FlinkPulsarSink.java:41) at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:34) at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102) at org.apache.flink.streaming.api.operators.StreamSink.open(StreamSink.java:46) at org.apache.flink.streaming.runtime.tasks.OperatorChain.initializeStateAndOpenOperators(OperatorChain.java:442) at org.apache.flink.streaming.runtime.tasks.StreamTask.restoreGates(StreamTask.java:582) at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$SynchronizedStreamTaskActionExecutor.call(StreamTaskActionExecutor.java:100) at org.apache.flink.streaming.runtime.tasks.StreamTask.executeRestore(StreamTask.java:562) at org.apache.flink.streaming.runtime.tasks.StreamTask.runWithCleanUpOnFail(StreamTask.java:647) at org.apache.flink.streaming.runtime.tasks.StreamTask.restore(StreamTask.java:537) at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:759) at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566) at java.lang.Thread.run(Thread.java:748) Caused by: org.apache.pulsar.client.admin.PulsarAdminException: java.util.concurrent.CompletionException: org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector$RetryException: Could not complete the operation. Number of retries has been exhausted. 
Failed reason: Connection refused: /10.197.76.131:8080 at org.apache.pulsar.client.admin.internal.BaseResource.getApiException(BaseResource.java:247) at org.apache.pulsar.client.admin.internal.SchemasImpl$1.failed(SchemasImpl.java:85) at org.apache.pulsar.shade.org.glassfish.jersey.client.JerseyInvocation$1.failed(JerseyInvocation.java:882) at org.apache.pulsar.shade.org.glassfish.jersey.client.ClientRuntime.processFailure(ClientRuntime.java:247) at org.apache.pulsar.shade.org.glassfish.jersey.client.ClientRuntime.processFailure(ClientRuntime.java:242) at org.apache.pulsar.shade.org.glassfish.jersey.client.ClientRuntime.access$100(ClientRuntime.java:62) at org.apache.pulsar.shade.org.glassfish.jersey.client.ClientRuntime$2.lambda$failure$1(ClientRuntime.java:178) at org.apache.pulsar.shade.org.glassfish.jersey.internal.Errors$1.call(Errors.java:248) at org.apache.pulsar.shade.org.glassfish.jersey.internal.Errors$1.call(Errors.java:244) at org.apache.pulsar.shade.org.glassfish.jersey.internal.Errors.process(Errors.java:292) at org.apache.pulsar.shade.org.glassfish.jersey.internal.Errors.process(Errors.java:274) at org.apache.pulsar.shade.org.glassfish.jersey.internal.Errors.process(Errors.java:244) at org.apache.pulsar.shade.org.glassfish.jersey.process.internal.RequestScope.runInScope(RequestScope.java:288) at org.apache.pulsar.shade.org.glassfish.jersey.client.ClientRuntime$2.failure(ClientRuntime.java:178) at org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector.lambda$apply$1(AsyncHttpConnector.java:204) at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760) at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736) at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474) at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1977) at 
org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector.lambda$retryOperation$4(AsyncHttpConnector.java:247) at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760) at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736) at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474) at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1977) at org.apache.pulsar.shade.org.asynchttpclient.netty.NettyResponseFuture.abort(NettyResponseFuture.java:273) at org.apache.pulsar.shade.org.asynchttpclient.netty.channel.NettyConnectListener.onFailure(NettyConnectListener.java:181) at org.apache.pulsar.shade.org.asynchttpclient.netty.channel.NettyChannelConnector$1.onFailure(NettyChannelConnector.java:108) at org.apache.pulsar.shade.org.asynchttpclient.netty.SimpleChannelFutureListener.operationComplete(SimpleChannelFutureListener.java:28) at org.apache.pulsar.shade.org.asynchttpclient.netty.SimpleChannelFutureListener.operationComplete(SimpleChannelFutureListener.java:20) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:578) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.notifyListeners0(DefaultPromise.java:571) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:550) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:491) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.setValue0(DefaultPromise.java:616) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.setFailure0(DefaultPromise.java:609) at org.apache.pulsar.shade.io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:117) at org.apache.pulsar.shade.io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.fulfillConnectPromise(AbstractNioChannel.java:321) at 
org.apache.pulsar.shade.io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:337) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:707) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:655) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:581) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493) at org.apache.pulsar.shade.io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989) at org.apache.pulsar.shade.io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) at org.apache.pulsar.shade.io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) ... 1 more Caused by: java.util.concurrent.CompletionException: org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector$RetryException: Could not complete the operation. Number of retries has been exhausted. Failed reason: Connection refused: /10.197.76.131:8080 at java.util.concurrent.CompletableFuture.encodeThrowable(CompletableFuture.java:292) at java.util.concurrent.CompletableFuture.completeThrowable(CompletableFuture.java:308) at java.util.concurrent.CompletableFuture.orApply(CompletableFuture.java:1371) at java.util.concurrent.CompletableFuture$OrApply.tryFire(CompletableFuture.java:1350) ... 29 more Caused by: org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector$RetryException: Could not complete the operation. Number of retries has been exhausted. Failed reason: Connection refused: /10.197.76.131:8080 at org.apache.pulsar.client.admin.internal.http.AsyncHttpConnector.lambda$retryOperation$4(AsyncHttpConnector.java:249) ... 
26 more Caused by: java.net.ConnectException: Connection refused: /10.197.76.131:8080 at org.apache.pulsar.shade.org.asynchttpclient.netty.channel.NettyConnectListener.onFailure(NettyConnectListener.java:179) ... 20 more Caused by: org.apache.pulsar.shade.io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: /10.197.76.131:8080 Caused by: java.net.ConnectException: Connection refused at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) at org.apache.pulsar.shade.io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:330) at org.apache.pulsar.shade.io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:707) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:655) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:581) at org.apache.pulsar.shade.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493) at org.apache.pulsar.shade.io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989) at org.apache.pulsar.shade.io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) at org.apache.pulsar.shade.io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) at java.lang.Thread.run(Thread.java:748) 2021-11-05 16:06:19,080 INFO org.apache.flink.runtime.taskmanager.Task [] - Freeing task resources for Source: Custom Source -> Sink: Unnamed (3/120)#19 (d0e0d9d0211a4b935fe9e9cd751a2505). 
2021-11-05 16:06:19,082 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor [] - Un-registering task and sending final execution state FAILED to JobManager for task Source: Custom Source -> Sink: Unnamed (3/120)#19 d0e0d9d0211a4b935fe9e9cd751a2505.`
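
As a rough cross-check of the numbers quoted in this report, the sketch below recomputes the time between the broker kill and the global restart, and decodes the byte counts in the `OutOfDirectMemoryError` line. It is only arithmetic on figures already in the report. The variable names are made up for illustration, and the comparison with "tolerated failures times checkpoint timeout" assumes every failed checkpoint ran to its full timeout, which the report does not state.

```python
from datetime import datetime, timedelta

# Figures quoted in the report (sections 1, 3 and 4.1).
kill_time = datetime(2021, 11, 5, 16, 5)         # brokers killed
global_restart = datetime(2021, 11, 5, 17, 56)   # job-wide restart
checkpoint_timeout = timedelta(minutes=10)
tolerated_failures = 10

observed_minutes = int((global_restart - kill_time).total_seconds() // 60)

# Assumption: each failed checkpoint consumed its full timeout.
timeout_budget_minutes = int(
    (checkpoint_timeout * tolerated_failures).total_seconds() // 60
)

print(f"kill -> global restart: {observed_minutes} min")
print(f"{tolerated_failures} failures x 10 min timeout: {timeout_budget_minutes} min")

# Figures from the OutOfDirectMemoryError log line in section 4.2.
GIB = 1024 ** 3
MIB = 1024 ** 2
max_direct = 85_899_345_920    # "max" in the log line
used_direct = 85_916_123_151   # "used" in the log line
requested = 16_777_216         # failed allocation size

max_gib = max_direct // GIB
requested_mib = requested // MIB
over_limit_bytes = used_direct - max_direct

print(f"direct memory limit: {max_gib} GiB")
print(f"failed allocation:   {requested_mib} MiB")
print(f"used exceeds limit by {over_limit_bytes} bytes")
```

The observed gap is consistent with the "roughly 110 minutes" in section 4.1. The difference from the timeout-only budget is not broken down in the report. The memory figures show the bookie had reached its configured direct memory limit when a 16 MiB chunk allocation failed.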