AnandInguva commented on code in PR #25200:

URL: https://github.com/apache/beam/pull/25200#discussion_r1089176428

##########

sdks/python/apache_beam/ml/inference/base.py:

##########

@@ -62,16 +63,40 @@

_OUTPUT_TYPE = TypeVar('_OUTPUT_TYPE')

KeyT = TypeVar('KeyT')

-PredictionResult = NamedTuple(

- 'PredictionResult', [

- ('example', _INPUT_TYPE),

- ('inference', _OUTPUT_TYPE),

- ])

+

+# We use NamedTuple to define the structure of the PredictionResult,

+# however, as support for generic NamedTuples is not available in Python

+# versions prior to 3.11, we use the __new__ method to provide default

+# values for the fields while maintaining backwards compatibility.

+class PredictionResult(NamedTuple('PredictionResult',

+ [('example', _INPUT_TYPE),

+ ('inference', _OUTPUT_TYPE),

+ ('model_id', Optional[str])])):

+ __slots__ = ()

+

+ def __new__(cls, example, inference, model_id=None):

+ return super().__new__(cls, example, inference, model_id)

+

+

PredictionResult.__doc__ = """A NamedTuple containing both input and output

from the inference."""

PredictionResult.example.__doc__ = """The input example."""

PredictionResult.inference.__doc__ = """Results for the inference on the model

for the given example."""

+PredictionResult.model_id.__doc__ = """Model ID used to run the prediction."""

+

+

+class ModelMetdata(NamedTuple):

Review Comment:

Sounds good. I can add this to the future TODO list. It could be useful for

Pytorch and XGBoost.

Tensorflow on the other hand can load the whole model without requiring any

class, which could use this feature very well.

##########

sdks/python/apache_beam/ml/inference/pytorch_inference.py:

##########

@@ -38,15 +38,21 @@

'PytorchModelHandlerKeyedTensor',

]

-TensorInferenceFn = Callable[

- [Sequence[torch.Tensor], torch.nn.Module, str, Optional[Dict[str, Any]]],

- Iterable[PredictionResult]]

+TensorInferenceFn = Callable[[

+ Sequence[torch.Tensor],

+ torch.nn.Module,

+ torch.device,

+ Optional[Dict[str, Any]],

+ Optional[str]

+],

+ Iterable[PredictionResult]]

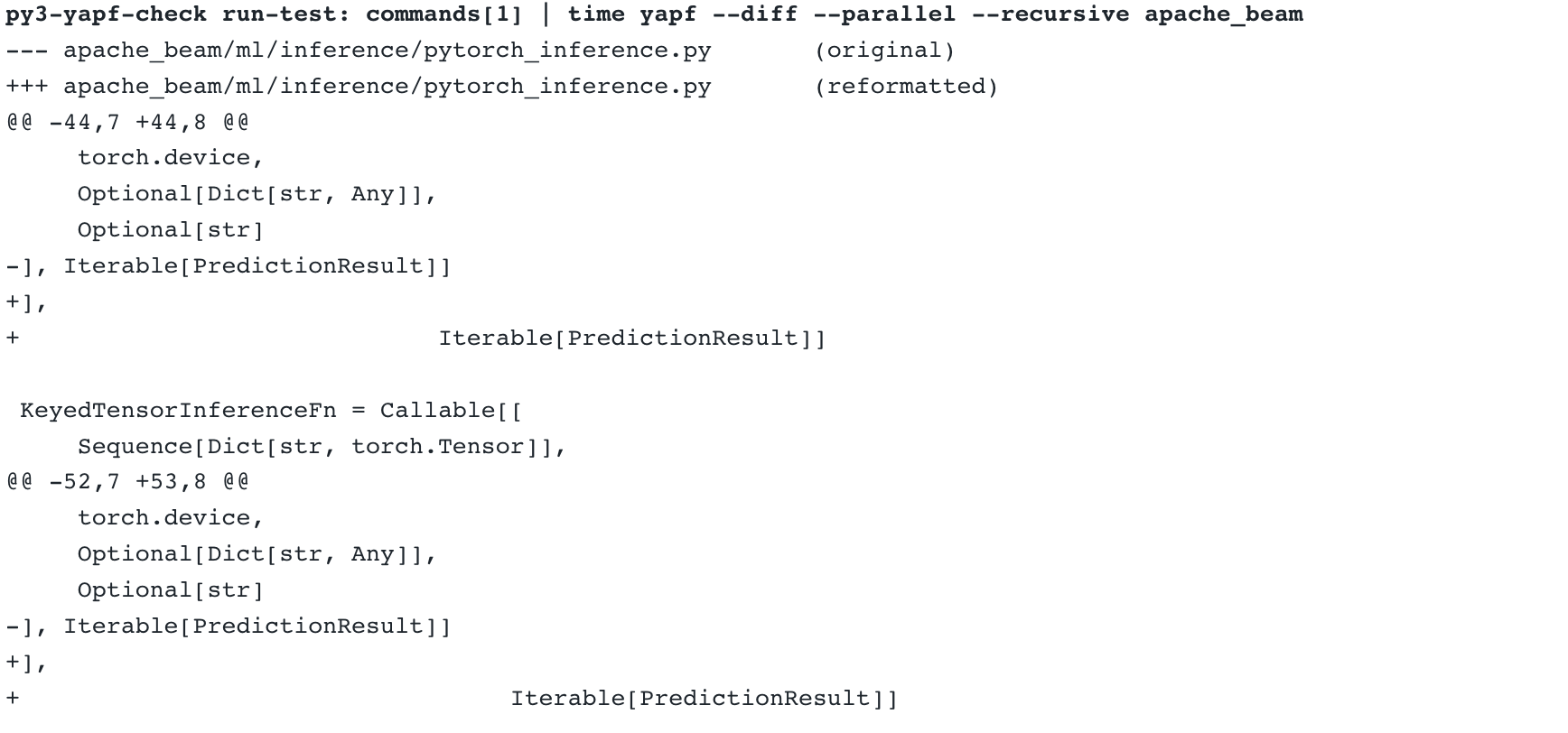

Review Comment:

The formatter is not letting me change it.

##########

sdks/python/apache_beam/ml/inference/base.py:

##########

@@ -62,16 +63,40 @@

_OUTPUT_TYPE = TypeVar('_OUTPUT_TYPE')

KeyT = TypeVar('KeyT')

-PredictionResult = NamedTuple(

- 'PredictionResult', [

- ('example', _INPUT_TYPE),

- ('inference', _OUTPUT_TYPE),

- ])

+

+# We use NamedTuple to define the structure of the PredictionResult,

+# however, as support for generic NamedTuples is not available in Python

+# versions prior to 3.11, we use the __new__ method to provide default

+# values for the fields while maintaining backwards compatibility.

+class PredictionResult(NamedTuple('PredictionResult',

+ [('example', _INPUT_TYPE),

+ ('inference', _OUTPUT_TYPE),

+ ('model_id', Optional[str])])):

+ __slots__ = ()

Review Comment:

Following from here[1] and here[2]

[1]

https://docs.python.org/3/library/collections.html#collections.namedtuple:~:text=The%20subclass%20shown%20above%20sets%20__slots__%20to%20an%20empty%20tuple.%20This%20helps%20keep%20memory%20requirements%20low%20by%20preventing%20the%20creation%20of%20instance%20dictionaries.

[2] go/python-tips/002#default-values

##########

sdks/python/apache_beam/ml/inference/pytorch_inference.py:

##########

@@ -38,15 +38,21 @@

'PytorchModelHandlerKeyedTensor',

]

-TensorInferenceFn = Callable[

- [Sequence[torch.Tensor], torch.nn.Module, str, Optional[Dict[str, Any]]],

- Iterable[PredictionResult]]

+TensorInferenceFn = Callable[[

+ Sequence[torch.Tensor],

+ torch.nn.Module,

+ torch.device,

+ Optional[Dict[str, Any]],

+ Optional[str]

+],

+ Iterable[PredictionResult]]

Review Comment:

Oh, that happened by my auto formatter. I can check it. Thanks

##########

sdks/python/apache_beam/ml/inference/base.py:

##########

@@ -62,16 +63,40 @@

_OUTPUT_TYPE = TypeVar('_OUTPUT_TYPE')

KeyT = TypeVar('KeyT')

-PredictionResult = NamedTuple(

- 'PredictionResult', [

- ('example', _INPUT_TYPE),

- ('inference', _OUTPUT_TYPE),

- ])

+

+# We use NamedTuple to define the structure of the PredictionResult,

+# however, as support for generic NamedTuples is not available in Python

+# versions prior to 3.11, we use the __new__ method to provide default

+# values for the fields while maintaining backwards compatibility.

+class PredictionResult(NamedTuple('PredictionResult',

+ [('example', _INPUT_TYPE),

+ ('inference', _OUTPUT_TYPE),

+ ('model_id', Optional[str])])):

+ __slots__ = ()

+

+ def __new__(cls, example, inference, model_id=None):

+ return super().__new__(cls, example, inference, model_id)

+

+

PredictionResult.__doc__ = """A NamedTuple containing both input and output

from the inference."""

PredictionResult.example.__doc__ = """The input example."""

PredictionResult.inference.__doc__ = """Results for the inference on the model

for the given example."""

+PredictionResult.model_id.__doc__ = """Model ID used to run the prediction."""

Review Comment:

Actually, I am tagging it to the `PredictionResult` even for a standard

batch pipeline. This could add value for the user and it is optional so won't

be a breaking change.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: [email protected]

For queries about this service, please contact Infrastructure at:

[email protected]