[ https://issues.apache.org/jira/browse/HDFS-16972?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17709078#comment-17709078 ]

ASF GitHub Bot commented on HDFS-16972:

---------------------------------------

umamaheswararao commented on PR #5532:

URL: https://github.com/apache/hadoop/pull/5532#issuecomment-1498000753

While debugging, I captured the following call trace:

cleanFile:128, FileWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:755, INodeFile (org.apache.hadoop.hdfs.server.namenode)

destroyDstSubtree:409, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

destroyDstSubtree:405, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

destroyDstSubtree:405, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

destroyDstSubtree:435, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

destroyDstSubtree:435, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

destroyAndCollectBlocks:847, INodeReference$DstReference (org.apache.hadoop.hdfs.server.namenode)

cleanDirectory:762, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:848, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtree:314, INodeReference (org.apache.hadoop.hdfs.server.namenode)

cleanSubtree:659, INodeReference$WithName (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanDirectory:734, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:848, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanDirectory:734, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:848, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanDirectory:734, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:848, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtree:866, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtree:866, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtree:866, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanSubtreeRecursively:819, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

cleanDirectory:734, DirectoryWithSnapshotFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

cleanSubtree:848, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

removeSnapshot:266, DirectorySnapshottableFeature (org.apache.hadoop.hdfs.server.namenode.snapshot)

removeSnapshot:298, INodeDirectory (org.apache.hadoop.hdfs.server.namenode)

deleteSnapshot:541, SnapshotManager (org.apache.hadoop.hdfs.server.namenode.snapshot)

deleteSnapshot:280, FSDirSnapshotOp (org.apache.hadoop.hdfs.server.namenode)

deleteSnapshot:264, FSDirSnapshotOp (org.apache.hadoop.hdfs.server.namenode)

deleteSnapshot:7012, FSNamesystem (org.apache.hadoop.hdfs.server.namenode)

testValidation1:80, TestFsImageValidation (org.apache.hadoop.hdfs.server.namenode)

invoke0:-1, NativeMethodAccessorImpl (sun.reflect)

invoke:62, NativeMethodAccessorImpl (sun.reflect)

invoke:43, DelegatingMethodAccessorImpl (sun.reflect)

invoke:498, Method (java.lang.reflect)

runReflectiveCall:50, FrameworkMethod$1 (org.junit.runners.model)

run:12, ReflectiveCallable (org.junit.internal.runners.model)

invokeExplosively:47, FrameworkMethod (org.junit.runners.model)

evaluate:17, InvokeMethod (org.junit.internal.runners.statements)

runLeaf:325, ParentRunner (org.junit.runners)

runChild:78, BlockJUnit4ClassRunner (org.junit.runners)

runChild:57, BlockJUnit4ClassRunner (org.junit.runners)

run:290, ParentRunner$3 (org.junit.runners)

schedule:71, ParentRunner$1 (org.junit.runners)

runChildren:288, ParentRunner (org.junit.runners)

access$000:58, ParentRunner (org.junit.runners)

evaluate:268, ParentRunner$2 (org.junit.runners)

run:363, ParentRunner (org.junit.runners)

run:137, JUnitCore (org.junit.runner)

startRunnerWithArgs:69, JUnit4IdeaTestRunner (com.intellij.junit4)

execute:38, IdeaTestRunner$Repeater$1 (com.intellij.rt.junit)

repeat:11, TestsRepeater (com.intellij.rt.execution.junit)

startRunnerWithArgs:35, IdeaTestRunner$Repeater (com.intellij.rt.junit)

prepareStreamsAndStart:232, JUnitStarter (com.intellij.rt.junit)

main:55, JUnitStarter (com.intellij.rt.junit)

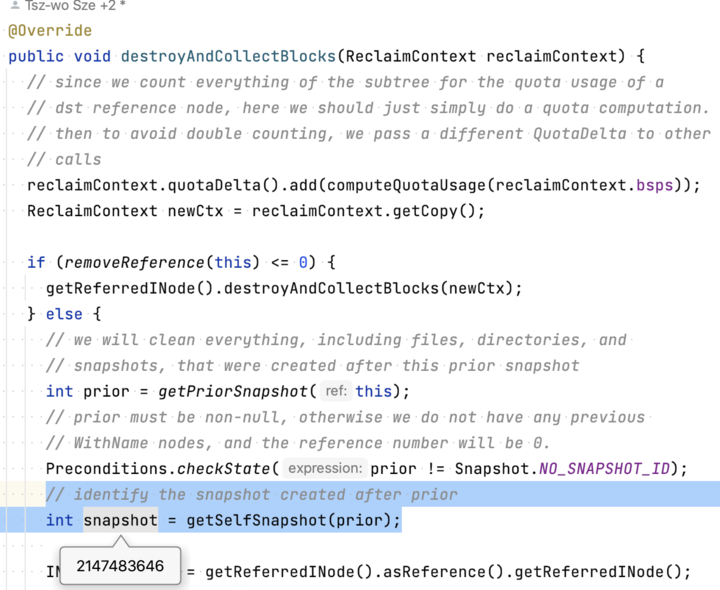

This is the place where the patch tries to fix the issue. My other question is: when the call reaches this point, the snapshot ID is CURRENT_STATE_ID, which represents the current state. Why did we proceed to clean the subtree with CURRENT_STATE_ID in the first place? Could you provide more details on that? The attached image shows where we started using CURRENT_STATE_ID.
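To make the concern concrete, here is a toy Java sketch of why it matters which snapshot ID reaches the file-level cleanup. It is not the HDFS code and not the change in this PR: `ToyFile`, the string results, and the `guard` flag are all hypothetical names for illustration, and the constant only stands in for `Snapshot.CURRENT_STATE_ID`. The assumption it models is that a cleanup called with the current-state sentinel acts on the live file, while one called with a real snapshot ID only drops that snapshot's record.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model only: none of these types are the real HDFS classes. */
class SnapshotCleanSketch {
  // Hypothetical sentinel standing in for Snapshot.CURRENT_STATE_ID.
  static final int CURRENT_STATE_ID = Integer.MAX_VALUE - 1;

  /** A file that remembers which snapshot IDs still capture it. */
  static final class ToyFile {
    final String name;
    final List<Integer> snapshots = new ArrayList<>();
    boolean inCurrentState = true;

    ToyFile(String name, int... snapshotIds) {
      this.name = name;
      for (int id : snapshotIds) {
        snapshots.add(id);
      }
    }
  }

  /**
   * Cleans one file for the given snapshot ID.
   * With the sentinel, the live file is the target; with a real ID,
   * only that snapshot's record is dropped. The guard is a hypothetical
   * safety check, not the fix proposed in the PR.
   */
  static String cleanFile(ToyFile file, int snapshotId, boolean guard) {
    if (snapshotId == CURRENT_STATE_ID) {
      if (guard) {
        return "skipped";
      }
      file.inCurrentState = false; // the current file is gone
      return "deleted-current";
    }
    // remove(Object) so the ID is treated as a value, not a list index
    file.snapshots.remove(Integer.valueOf(snapshotId));
    return "removed-snapshot-record";
  }

  public static void main(String[] args) {
    ToyFile file = new ToyFile("a.txt", 1, 2);
    System.out.println(cleanFile(file, 1, false));
    System.out.println(cleanFile(file, CURRENT_STATE_ID, true));
    System.out.println(cleanFile(file, CURRENT_STATE_ID, false));
    System.out.println(file.inCurrentState);
  }
}
```

In this model, a snapshot deletion that ends up passing the sentinel down the recursion would remove a live file, which is the kind of behaviour the issue title describes. Whether the real call path should ever carry CURRENT_STATE_ID at that point is exactly the open question above.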

> Delete a snapshot may deleteCurrentFile

> ---------------------------------------

>

> Key: HDFS-16972

> URL: https://issues.apache.org/jira/browse/HDFS-16972

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: snapshots

> Reporter: Tsz-wo Sze

> Assignee: Tsz-wo Sze

> Priority: Major

> Labels: pull-request-available

>

> We found one case where deleting a snapshot (with ordered snapshot

> deletion disabled) can incorrectly delete some files in the current state.
