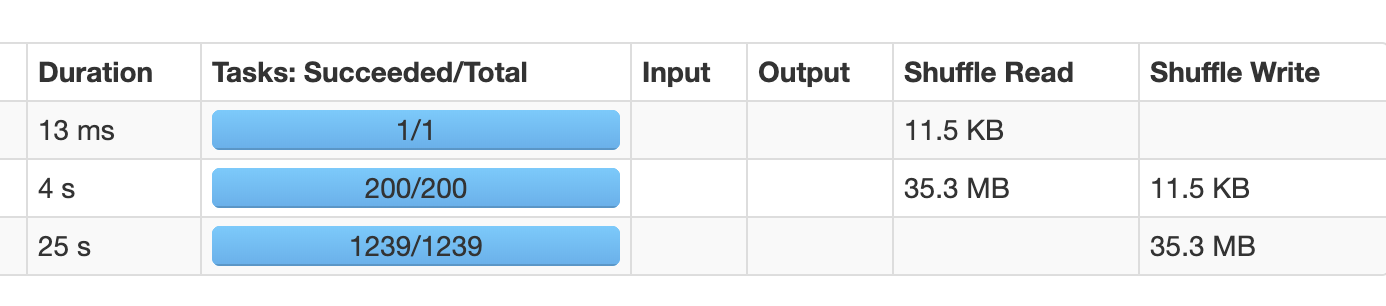

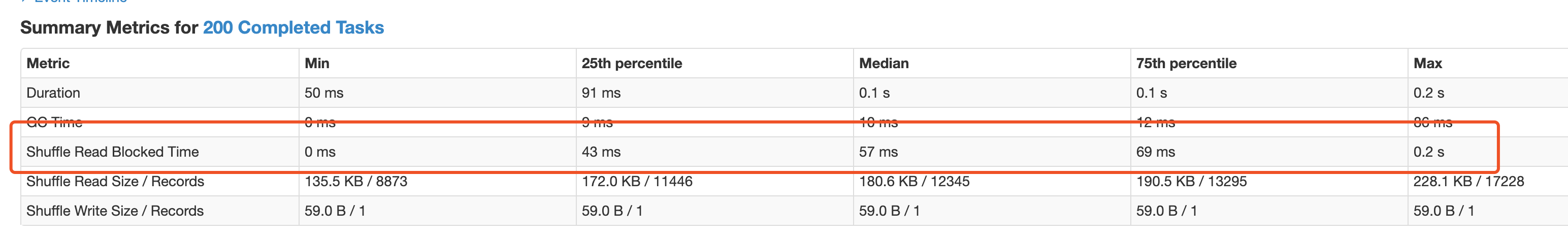

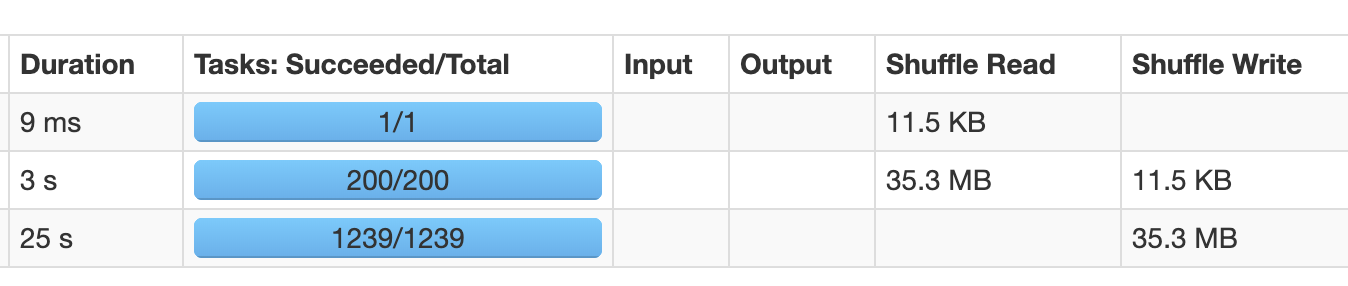

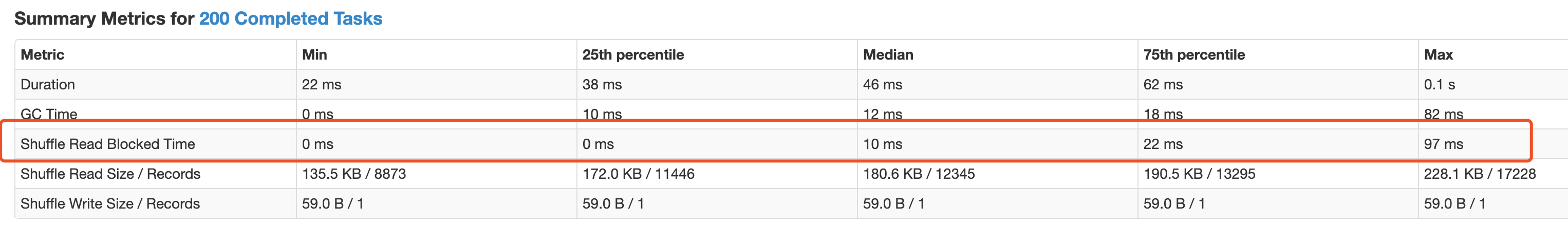

cloud-fan commented on issue #22173: [SPARK-24355] Spark external shuffle server improvement to better handle block fetch requests. URL: https://github.com/apache/spark/pull/22173#issuecomment-572459561 Unfortunately, I'm not able to minimize our internal workload, so I switch to TPCDS to show the perf regression. data: TPCDS table `store_sales` with scale factor 99. It's 3.5GB, 1233 files query: `sql("select count(distinct ss_list_price) from store_sales where ss_quantity == 5").show` spark: latest master, "local-cluster[2, 4, 19968]" env: m4-4xlarge Since it's too many changes to revert this commit, I simply remove the `await` in `ChunkFetchRequestHandler`, which effectively reverts this feature. With `await` removed, the query runs 4% faster, which is not much. But if you look at the web UI and check the task metrics, shuffle read time is significantly reduced if we remove `await`. The master branch:  and the second stage  With `await` removed:  and the second stage  The shuffle read is about 3x faster with `await` removed.

---------------------------------------------------------------- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: [email protected] With regards, Apache Git Services --------------------------------------------------------------------- To unsubscribe, e-mail: [email protected] For additional commands, e-mail: [email protected]