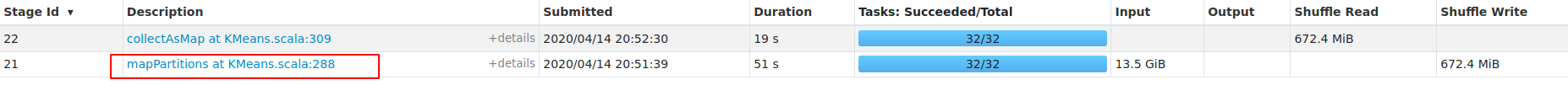

zhengruifeng edited a comment on issue #27758: [SPARK-31007][ML][WIP] KMeans optimization based on triangle-inequality
URL: https://github.com/apache/spark/pull/27758#issuecomment-613425865

I made an update to optimize the computation of the statistics: if `k` and/or `numFeatures` are too large, the statistics are computed in a distributed manner.

I retested this implementation today, using the Spark UI to profile the performance.

Test code:

```scala
import org.apache.spark.ml.linalg._
import org.apache.spark.ml.clustering._

var df = spark.read.format("libsvm").load("/data1/Datasets/webspam/webspam_wc_normalized_trigram.svm.10k").repartition(2)
df.persist()

// Double the dataset four times via self-union (16x the original rows).
(0 until 4).foreach { _ => df = df.union(df) }
df.count

// Fit KMeans for each k, with 5 iterations per run.
Seq(4, 8, 16, 32).foreach { k => new KMeans().setK(k).setMaxIter(5).fit(df) }
```

I recorded both the duration of each iteration and the duration of the prediction _Stage_.

Results:

Test on webspam | This PR (k=4) | This PR (k=8) | This PR (k=16) | This PR (k=32) | Master (k=4) | Master (k=8) | Master (k=16) | Master (k=32)
-- | -- | -- | -- | -- | -- | -- | -- | --
Average iteration (sec) | 9.2+0.0 | 15.8+0.1 | 31.4+0.5 | 63.6+2 | 9.8 | 16.4 | 34.6 | 78.3
Average Prediction Stage | 6 | 10.1 | 20.6 | 44.4 | 6 | 10.8 | 22.8 | 57.2

`63.6+2` here means that it took 2 sec to compute the statistics in a distributed manner. This is faster than the previous commit, which computed the statistics in the driver and took about 9 sec.

When `k=4,8` the speedup is not significant; when `k=16,32` the prediction Stage is about 10%~30% faster. This shows that the larger `k` is, the relatively faster the new implementation is, because for large `k` there are more chances to trigger the short circuits.
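To illustrate what "triggering a short circuit" can look like, here is a minimal sketch of triangle-inequality pruning in plain Scala. It is not the code from this PR: the object and method names are hypothetical, the distance is plain Euclidean, and the "statistics" are assumed to be half of the pairwise center-to-center distances, which may differ from what the PR actually computes. The idea is that if `d(x, c_best) <= d(c_best, c_j) / 2`, then `c_j` cannot be strictly closer to `x` than `c_best`, so its distance need not be computed.

```scala
// Minimal sketch of triangle-inequality pruning for nearest-center search.
// All names are hypothetical; this is not the implementation in the PR.
object TrianglePruningSketch {
  type Vec = Array[Double]

  // Plain Euclidean distance between two vectors of equal length.
  def dist(a: Vec, b: Vec): Double = {
    var sum = 0.0
    var i = 0
    while (i < a.length) {
      val d = a(i) - b(i)
      sum += d * d
      i += 1
    }
    math.sqrt(sum)
  }

  // Assumed "statistics": half of the distance between every pair of centers.
  def halfCenterDistances(centers: Array[Vec]): Array[Array[Double]] =
    centers.map(ci => centers.map(cj => 0.5 * dist(ci, cj)))

  // Returns (index of nearest center, number of point-to-center distances computed).
  def findClosest(point: Vec, centers: Array[Vec], half: Array[Array[Double]]): (Int, Int) = {
    var best = 0
    var bestDist = dist(point, centers(0))
    var computed = 1
    var j = 1
    while (j < centers.length) {
      // Short circuit: if d(x, c_best) <= d(c_best, c_j) / 2, the triangle
      // inequality gives d(x, c_j) >= d(x, c_best), so center j is skipped.
      if (bestDist > half(best)(j)) {
        val d = dist(point, centers(j))
        computed += 1
        if (d < bestDist) { best = j; bestDist = d }
      }
      j += 1
    }
    (best, computed)
  }
}
```

With four centers at the corners of a 10x10 square and the point `(1.0, 1.0)`, this sketch computes only one point-to-center distance, because the other three centers are pruned. The more centers there are, the more candidates each point can potentially skip, which is consistent with the larger gains observed for larger `k`.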
