Fokko commented on a change in pull request #28754:

URL: https://github.com/apache/spark/pull/28754#discussion_r436713728

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

##########

@@ -420,6 +420,10 @@ object DateTimeUtils {

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

+ def daysToInstant(daysSinceEpoch: SQLDate): Instant = {

+ Instant.ofEpochSecond(daysSinceEpoch * SECONDS_PER_DAY)

Review comment:

Can you elaborate? This function is the seconds equivalent of the one

directly above:

```scala

def microsToInstant(us: Long): Instant = {

val secs = Math.floorDiv(us, MICROS_PER_SECOND)

// Unfolded Math.floorMod(us, MICROS_PER_SECOND) to reuse the result of

// the above calculation of `secs` via `floorDiv`.

val mos = us - secs * MICROS_PER_SECOND

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

```

I assume that the data is written as UTC, and we apply the timezone when

reading:

```

def convertTz(ts: SQLTimestamp, fromZone: ZoneId, toZone: ZoneId):

SQLTimestamp = {

val rebasedDateTime =

microsToInstant(ts).atZone(toZone).toLocalDateTime.atZone(fromZone)

instantToMicros(rebasedDateTime.toInstant)

}

```

So if it is 1 hour after midnight, and you're in UTC-2, it will subtract a

single day.

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

##########

@@ -420,6 +420,10 @@ object DateTimeUtils {

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

+ def daysToInstant(daysSinceEpoch: SQLDate): Instant = {

+ Instant.ofEpochSecond(daysSinceEpoch * SECONDS_PER_DAY)

Review comment:

Can you elaborate? This function is the seconds equivalent of the one

directly above:

```scala

def microsToInstant(us: Long): Instant = {

val secs = Math.floorDiv(us, MICROS_PER_SECOND)

// Unfolded Math.floorMod(us, MICROS_PER_SECOND) to reuse the result of

// the above calculation of `secs` via `floorDiv`.

val mos = us - secs * MICROS_PER_SECOND

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

```

I assume that the data is written as UTC, and we apply the timezone when

reading:

```scala

def convertTz(ts: SQLTimestamp, fromZone: ZoneId, toZone: ZoneId):

SQLTimestamp = {

val rebasedDateTime =

microsToInstant(ts).atZone(toZone).toLocalDateTime.atZone(fromZone)

instantToMicros(rebasedDateTime.toInstant)

}

```

So if it is 1 hour after midnight, and you're in UTC-2, it will subtract a

single day.

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

##########

@@ -420,6 +420,10 @@ object DateTimeUtils {

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

+ def daysToInstant(daysSinceEpoch: SQLDate): Instant = {

+ Instant.ofEpochSecond(daysSinceEpoch * SECONDS_PER_DAY)

Review comment:

So, if I understand it correctly, you don't want to compensate for the

timezone while casting from a DoubleType to a DateType?

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

##########

@@ -420,6 +420,10 @@ object DateTimeUtils {

Instant.ofEpochSecond(secs, mos * NANOS_PER_MICROS)

}

+ def daysToInstant(daysSinceEpoch: SQLDate): Instant = {

+ Instant.ofEpochSecond(daysSinceEpoch * SECONDS_PER_DAY)

Review comment:

Thanks for your input, less is more :)

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/aggregate/Average.scala

##########

@@ -40,10 +40,17 @@ case class Average(child: Expression) extends

DeclarativeAggregate with Implicit

override def children: Seq[Expression] = child :: Nil

- override def inputTypes: Seq[AbstractDataType] = Seq(NumericType)

+ override def inputTypes: Seq[AbstractDataType] = Seq(NumericType, DateType)

Review comment:

Sure, that makes sense. See the details below, let me know if I'm

missing something, but I don't think there is a real consensus on the subject.

# Postgres

For postgres, it is just unsupported

```

postgres@366ecc8a0fb9:/$ psql

psql (12.3 (Debian 12.3-1.pgdg100+1))

Type "help" for help.

postgres=# SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

ERROR: cannot cast type date to numeric

LINE 1: SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

^

postgres=# SELECT CAST(CAST('2020-01-01' AS DATE) AS integer);

ERROR: cannot cast type date to integer

LINE 1: SELECT CAST(CAST('2020-01-01' AS DATE) AS integer);

^

The way to get the epoch in days is:

postgres=# SELECT EXTRACT(DAYS FROM (now() - '1970-01-01'));

date_part

-----------

18422

(1 row)

```

# MySQL

For MySQL it will convert it automatically to a YYYYMMDD format:

```

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

+---------------------------------------------+

| CAST(CAST('2020-01-01' AS DATE) AS decimal) |

+---------------------------------------------+

| 20200101 |

+---------------------------------------------+

1 row in set (0.00 sec)

```

Converting to an int is not allowed:

```

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS int);

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual

that corresponds to your MySQL server version for the right syntax to use near

'int)' at line 1

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS bigint);

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual

that corresponds to your MySQL server version for the right syntax to use near

'bigint)' at line 1

```

# BigQuery

Unsupported

https://cloud.google.com/bigquery/docs/reference/standard-sql/conversion_rules

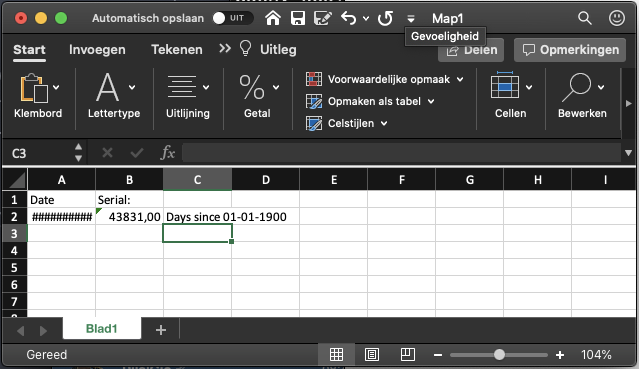

# Excel

The greatest DBMS of them all:

Which is the epoch since 01-01-1900 :)

##########

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/aggregate/Average.scala

##########

@@ -40,10 +40,17 @@ case class Average(child: Expression) extends

DeclarativeAggregate with Implicit

override def children: Seq[Expression] = child :: Nil

- override def inputTypes: Seq[AbstractDataType] = Seq(NumericType)

+ override def inputTypes: Seq[AbstractDataType] = Seq(NumericType, DateType)

Review comment:

Sure, that makes sense. See the details below, let me know if I'm

missing something, but I don't think there is a real consensus on the subject.

# Postgres

For postgres, it is just unsupported

```

postgres@366ecc8a0fb9:/$ psql

psql (12.3 (Debian 12.3-1.pgdg100+1))

Type "help" for help.

postgres=# SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

ERROR: cannot cast type date to numeric

LINE 1: SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

^

postgres=# SELECT CAST(CAST('2020-01-01' AS DATE) AS integer);

ERROR: cannot cast type date to integer

LINE 1: SELECT CAST(CAST('2020-01-01' AS DATE) AS integer);

^

The way to get the epoch in days is:

postgres=# SELECT EXTRACT(DAYS FROM (now() - '1970-01-01'));

date_part

-----------

18422

(1 row)

```

# MySQL

For MySQL it will convert it automatically to a YYYYMMDD format:

```

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS decimal);

+---------------------------------------------+

| CAST(CAST('2020-01-01' AS DATE) AS decimal) |

+---------------------------------------------+

| 20200101 |

+---------------------------------------------+

1 row in set (0.00 sec)

```

Converting to an int is not allowed:

```

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS int);

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual

that corresponds to your MySQL server version for the right syntax to use near

'int)' at line 1

mysql> SELECT CAST(CAST('2020-01-01' AS DATE) AS bigint);

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual

that corresponds to your MySQL server version for the right syntax to use near

'bigint)' at line 1

```

# BigQuery

Unsupported

https://cloud.google.com/bigquery/docs/reference/standard-sql/conversion_rules

# Excel

The greatest DBMS of them all:

Which is the epoch since 01-01-1900 :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

[email protected]

---------------------------------------------------------------------

To unsubscribe, e-mail: [email protected]

For additional commands, e-mail: [email protected]