zhengruifeng commented on pull request #29018:

URL: https://github.com/apache/spark/pull/29018#issuecomment-654587121

test code:

```scala
import org.apache.spark.ml.classification._
import org.apache.spark.sql.functions.col
import org.apache.spark.storage.StorageLevel

// Load the epsilon test set (2000 features) and map labels from {-1, 1} to {0, 1}
val df = spark.read.option("numFeatures", "2000").format("libsvm")
  .load("/data1/Datasets/epsilon/epsilon_normalized.t")
  .withColumn("label", (col("label") + 1) / 2)

// Cache the training data and materialize it
df.persist(StorageLevel.MEMORY_AND_DISK)
df.count

// Initial fit with default parameters (not timed)
new RandomForestClassifier().fit(df)

val dt = new RandomForestClassifier().setMaxDepth(10).setNumTrees(100)

// Total wall-clock time (ms) for 10 consecutive fits
val start = System.currentTimeMillis
Seq.range(0, 10).foreach { _ => dt.fit(df) }
val end = System.currentTimeMillis
end - start
```
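The loop above reports only the total time for all 10 fits. A per-run breakdown can help judge whether a difference between two branches is larger than run-to-run variation. A minimal sketch, assuming a hypothetical helper `timeRuns` (not part of Spark) that can be pasted into the same spark-shell session:

```scala
// Hypothetical helper (not a Spark API): runs `body` n times and returns
// the wall-clock duration of each run in milliseconds.
def timeRuns(n: Int)(body: => Unit): Seq[Long] =
  (0 until n).map { _ =>
    val t0 = System.nanoTime()
    body
    (System.nanoTime() - t0) / 1000000L
  }

// Usage with the `dt` and `df` defined in the test code:
// val runs = timeRuns(10)(dt.fit(df))
// println(s"total = ${runs.sum} ms, min = ${runs.min} ms, max = ${runs.max} ms")
```

Using `System.nanoTime` rather than `System.currentTimeMillis` gives a monotonic clock, which is the usual choice for measuring elapsed time.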

results:

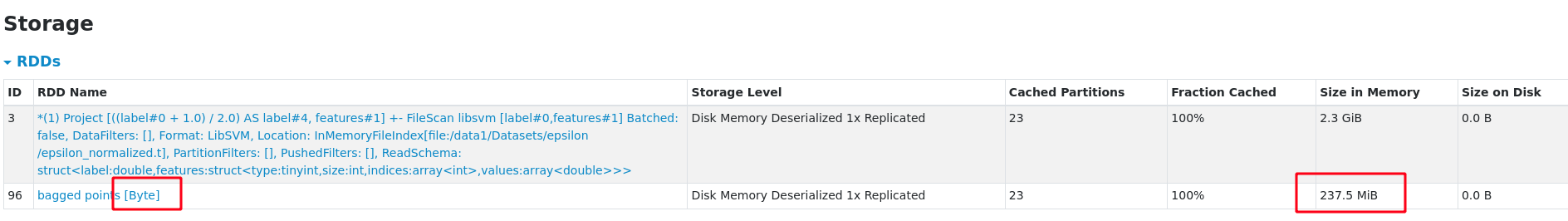

this PR:

duration = 1356042 ms

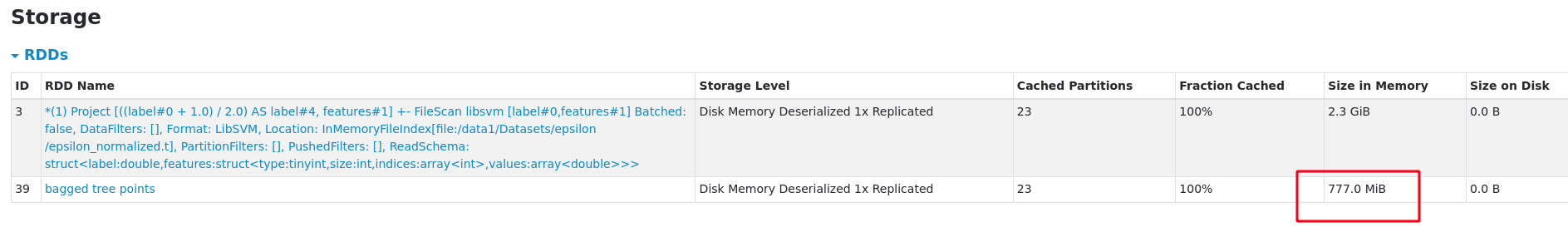

Master:

duration = 1245078 ms

The RAM used to persist the training dataset was reduced from 777M to 237M. However, there is a performance regression: in the above test this PR is about 9% slower than master (1356042 ms vs. 1245078 ms for 10 fits).
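The relative figures can be recomputed from the raw numbers reported above. A small sketch in plain Scala (no Spark needed); note that the slowdown is measured relative to master's time, and relative to this PR's time the same gap is about 8.2%:

```scala
// Figures reported in this comment
val prMs = 1356042L     // total time for 10 fits with this PR (ms)
val masterMs = 1245078L // total time for 10 fits on master (ms)
val prMem = 237.0       // persisted training data with this PR ("237M")
val masterMem = 777.0   // persisted training data on master ("777M")

// Slowdown of this PR relative to master, in percent
val slowdownPct = (prMs - masterMs).toDouble / masterMs * 100
// Memory saved by this PR relative to master, in percent
val memSavingPct = (masterMem - prMem) / masterMem * 100

println(f"slowdown = $slowdownPct%.1f%%, memory saving = $memSavingPct%.1f%%")
// prints: slowdown = 8.9%, memory saving = 69.5%
```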

@srowen @WeichenXu123 @huaxingao What do you think about this? Is there some way to avoid this performance regression?
