SaurabhChawla100 commented on pull request #31974: URL: https://github.com/apache/spark/pull/31974#issuecomment-808298090

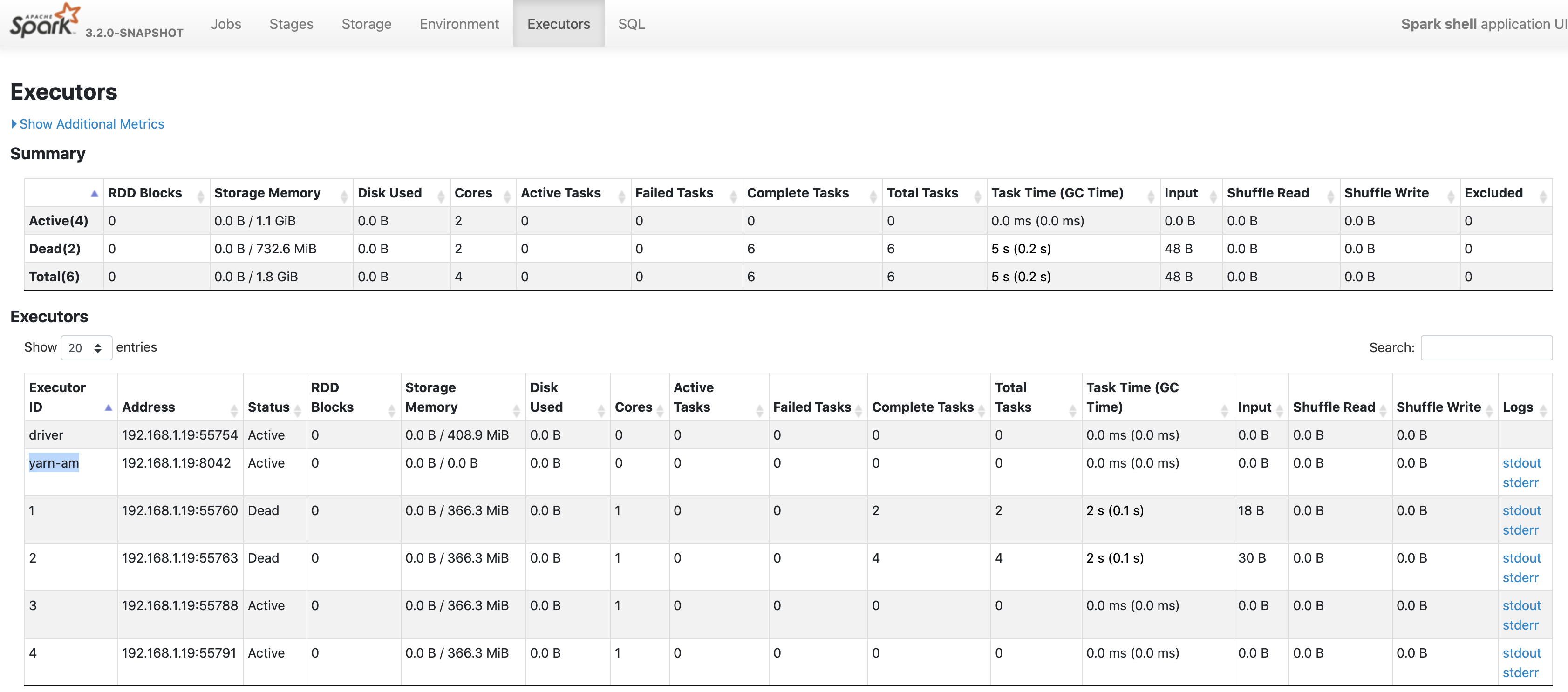

> What if the ApplicationMaster has many failovers, do we also need to check those logs?

As of now, the UI shows only the last active Spark ApplicationMaster. For example:

1. The application is running, with the ApplicationMaster in container `container_1616753530325_0013_01_000001`.
2. The Spark application is manually killed using `kill <ProcessID>`.
3. A new ApplicationMaster is started by the retry/attempt mechanism, in container `container_1616753530325_0013_02_000001`.
4. On receiving the new start event, the Spark UI updates the log link to point only to the new container, `container_1616753530325_0013_02_000001`.
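The failover sequence above can be modeled with a short Python sketch. It assumes the standard YARN container ID layout, in which the second-to-last field is the application attempt number (`01` for the first ApplicationMaster, `02` for the retry). `parse_container_id` and `AmLogState` are hypothetical helpers for illustration, not Spark or Hadoop APIs. `current` mirrors the behavior described here, where each new start event replaces the log link. `history` only illustrates what keeping every attempt would look like.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# String form of a YARN ContainerId (assumed layout):
#   container_[e<epoch>_]<clusterTimestamp>_<appId>_<attemptId>_<containerNum>
_CONTAINER_RE = re.compile(r"^container_(?:e(\d+)_)?(\d+)_(\d+)_(\d+)_(\d+)$")


@dataclass(frozen=True)
class ContainerId:
    cluster_timestamp: int
    app_id: int
    attempt_id: int
    container_num: int


def parse_container_id(s: str) -> ContainerId:
    """Split a YARN container id string into its numeric fields (hypothetical helper)."""
    m = _CONTAINER_RE.match(s)
    if m is None:
        raise ValueError(f"not a YARN container id: {s!r}")
    _epoch, ts, app, attempt, num = m.groups()  # optional epoch is ignored here
    return ContainerId(int(ts), int(app), int(attempt), int(num))


@dataclass
class AmLogState:
    """Hypothetical model of the UI's ApplicationMaster log-link state."""
    current: Optional[str] = None                     # the single link the UI shows
    history: List[str] = field(default_factory=list)  # every AM container seen

    def on_am_start(self, container_id: str) -> None:
        parse_container_id(container_id)  # reject malformed ids
        self.history.append(container_id)
        self.current = container_id       # a new start event replaces the link


state = AmLogState()
state.on_am_start("container_1616753530325_0013_01_000001")  # first attempt
state.on_am_start("container_1616753530325_0013_02_000001")  # retry after kill
print(state.current)  # container_1616753530325_0013_02_000001
print([parse_container_id(c).attempt_id for c in state.history])  # [1, 2]
```

With only `current`, the logs of attempt 1 are no longer linked once attempt 2 starts, which is the situation the question about many failovers raises.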