Github user yaooqinn commented on the issue:

https://github.com/apache/spark/pull/18666

@gatorsmile would you please take a look at this?

This PR mainly closes HiveSessionState explicitly so that the directories it creates get deleted. These are `hive.downloaded.resources.dir`, which defaults to `"${system:java.io.tmpdir}" + File.separator + "${hive.session.id}_resources"`, and `hive.exec.local.scratchdir`, which defaults to `"${system:java.io.tmpdir}" + File.separator + "${system:user.name}"`, as well as some other directories that are used only by Hive and have no delete hook on shutdown.
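
To make the two default templates concrete, here is a rough sketch of how they resolve on a given machine. `HiveTmpDirDefaults` and its methods are hypothetical names made up for this illustration, not Hive or Spark APIs, and the random UUID used as the session id is an assumption.

```java
import java.io.File;
import java.util.UUID;

// Hypothetical helper, not part of Hive or Spark: it only mirrors the default
// templates quoted above using plain Java system properties.
final class HiveTmpDirDefaults {

    // Mirrors "${system:java.io.tmpdir}" + File.separator + "${hive.session.id}_resources".
    static String downloadedResourcesDir(String sessionId) {
        return System.getProperty("java.io.tmpdir") + File.separator + sessionId + "_resources";
    }

    // Mirrors "${system:java.io.tmpdir}" + File.separator + "${system:user.name}".
    static String localScratchDir() {
        return System.getProperty("java.io.tmpdir") + File.separator + System.getProperty("user.name");
    }

    public static void main(String[] args) {
        // Assumption for this sketch: a random UUID stands in for the Hive session id.
        String sessionId = UUID.randomUUID().toString();
        System.out.println(downloadedResourcesDir(sessionId));
        System.out.println(localScratchDir());
    }
}
```

Since the resources dir name contains the session id, every new session gets its own directory under the temp dir, which is why they pile up when nothing deletes them.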

The code below shows how HiveSessionState creates `hive.downloaded.resources.dir`; note that `isCleanUp` is set to `false`.

```java
// 3. Download resources dir
path = new Path(HiveConf.getVar(conf, HiveConf.ConfVars.DOWNLOADED_RESOURCES_DIR));
// The last argument is isCleanUp; false means the dir is not registered for deletion.
createPath(conf, path, scratchDirPermission, true, /* isCleanUp = */ false);
```

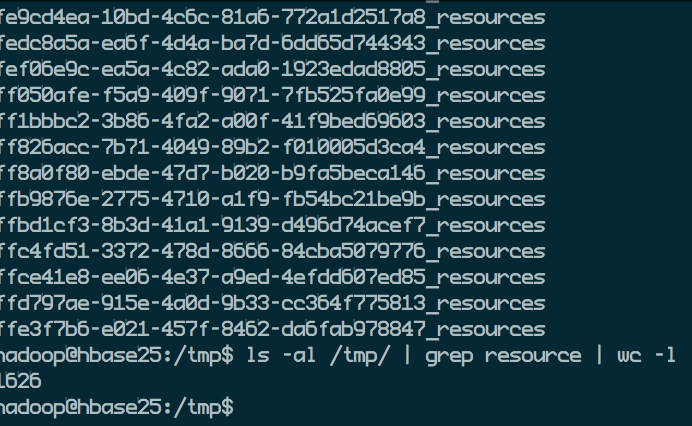

Plenty of unused directories are left behind after submitting a lot of Hive-enabled Spark applications.
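
As a rough illustration of the idea (not the actual change in this PR), the sketch below creates a per-session directory without any delete hook and removes it only when the owner is closed explicitly. `SessionDirDemo` is a hypothetical stand-in written with plain `java.nio.file`; it is not Hive's session class and the file name `udf.jar` is made up.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.UUID;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical stand-in for a session that owns a per-session resources dir.
// It is not Hive's session class; it only illustrates the leak and the fix.
final class SessionDirDemo implements AutoCloseable {
    private final Path resourcesDir;

    SessionDirDemo(Path parent) throws IOException {
        // Created without any delete-on-exit hook, analogous to isCleanUp = false.
        this.resourcesDir = Files.createDirectories(
            parent.resolve(UUID.randomUUID() + "_resources"));
    }

    Path resourcesDir() {
        return resourcesDir;
    }

    // Explicit cleanup: delete the directory tree, children before parents.
    @Override
    public void close() throws IOException {
        if (!Files.exists(resourcesDir)) {
            return;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(resourcesDir)) {
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
    }

    public static void main(String[] args) throws IOException {
        Path parent = Files.createTempDirectory("session-demo");
        Path dir;
        try (SessionDirDemo session = new SessionDirDemo(parent)) {
            dir = session.resourcesDir();
            Files.createFile(dir.resolve("udf.jar")); // pretend a resource was downloaded
        }
        System.out.println("exists after close: " + Files.exists(dir));
        Files.delete(parent);
    }
}
```

Without the `close()` call the directory would simply stay in the temp dir after the JVM exits, which matches the leftover directories described above.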
