GitHub user cloud-fan opened a pull request:

https://github.com/apache/spark/pull/21573

revert [SPARK-21743][SQL] top-most limit should not cause memory leak

## What changes were proposed in this pull request?

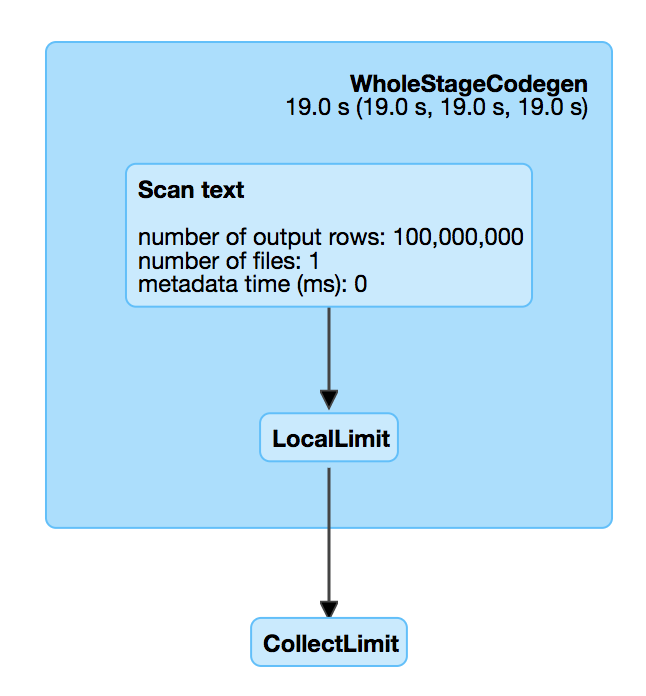

There is a performance regression in Spark 2.3. When we read a big
compressed text file that is not splittable (e.g. gz) and then take the first
record, Spark scans all the data in the file, which is very slow. For
example, after running `spark.read.text("/tmp/test.csv.gz").head(1)`, we can
check the SQL UI and see that the file is fully scanned.

This was introduced by #18955, which adds a LocalLimit to the query when
executing `Dataset.head`. The fundamental problem is that `Limit` is not well
supported in whole-stage codegen: it keeps consuming the input even after the
limit has been reached.
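
The behavior can be pictured with a small Spark-free sketch. The names `scan`, `limit_full_scan`, and `limit_early_stop` are hypothetical and are not Spark APIs; the sketch only models a limit that keeps pulling from its input after it already has enough rows, next to one that stops early.

```python
def scan(n_rows, counter):
    """Hypothetical stand-in for reading an unsplittable file row by row."""
    for i in range(n_rows):
        counter["rows_read"] += 1
        yield i


def limit_full_scan(rows, n):
    """Models the regression: keeps consuming input after reaching the limit."""
    out = []
    for row in rows:
        if len(out) < n:
            out.append(row)
        # No early exit here, so the loop still drains the whole input.
    return out


def limit_early_stop(rows, n):
    """Models the intended behavior: stops pulling once the limit is reached."""
    out = []
    if n <= 0:
        return out
    for row in rows:
        out.append(row)
        if len(out) >= n:
            break
    return out


slow = {"rows_read": 0}
fast = {"rows_read": 0}
first_slow = limit_full_scan(scan(1000, slow), 1)
first_fast = limit_early_stop(scan(1000, fast), 1)
```

Both calls return the same first record, but the counters differ: the first variant reads every row of the input, while the second reads only as many rows as it returns.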

However, if we just fix LIMIT whole-stage-codegen, the memory leak test

will fail, as we don't fully consume the inputs to trigger the resource cleanup.
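
A rough sketch of why stopping early conflicts with the cleanup, assuming the resource is released only when the input is exhausted. `TrackedInput` and `take` are hypothetical names used for illustration, not Spark classes.

```python
class TrackedInput:
    """Hypothetical input that frees its resource only once fully consumed."""

    def __init__(self, rows):
        self._rows = iter(rows)
        self.released = False

    def __iter__(self):
        return self

    def __next__(self):
        try:
            return next(self._rows)
        except StopIteration:
            # Cleanup is tied to exhausting the input.
            self.released = True
            raise


def take(rows, n):
    """Early-stopping limit: pulls at most n rows from the input."""
    out = []
    it = iter(rows)
    while len(out) < n:
        try:
            out.append(next(it))
        except StopIteration:
            break
    return out


partial = TrackedInput(range(5))
first = take(partial, 1)      # stops after one row, cleanup never runs

full = TrackedInput(range(5))
everything = list(full)       # drains the input, cleanup runs
```

After the early-stopping call, `partial.released` is still `False`, which is the situation the memory leak test would catch.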

To fix it completely, we should do the following:

1. Fix LIMIT whole-stage-codegen to stop consuming inputs after hitting the
limit.

2. In whole-stage-codegen, provide a way to release the resources of the parent
operator, and apply it in LIMIT.

3. Automatically release resources when the task ends.
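
The three steps could look roughly like the following Spark-free sketch. `Task`, `Source`, and `limit` are hypothetical names, and the design (an idempotent `release()` plus a per-task registry) is an assumption made for illustration, not the actual fix.

```python
class Task:
    """Hypothetical task context that releases leftover resources at task end."""

    def __init__(self):
        self._open = []

    def register(self, resource):
        self._open.append(resource)

    def end(self):
        # Step 3: release anything still held when the task ends.
        for resource in self._open:
            resource.release()
        self._open.clear()


class Source:
    """Hypothetical parent operator holding a resource while it produces rows."""

    def __init__(self, rows, task):
        self._rows = iter(rows)
        self.rows_read = 0
        self.released = False
        task.register(self)

    def __next__(self):
        row = next(self._rows)
        self.rows_read += 1
        return row

    def release(self):
        # Idempotent, so an early release and a task-end release can both run.
        self.released = True


def limit(parent, n):
    out = []
    while len(out) < n:  # Step 1: stop consuming once the limit is reached.
        try:
            out.append(next(parent))
        except StopIteration:
            break
    parent.release()  # Step 2: release the parent's resource right away.
    return out


task = Task()
limited = Source(range(100), task)
rows = limit(limited, 3)
released_by_limit = limited.released

other = Source(range(100), task)  # never consumed by a limit
released_before_end = other.released
task.end()
```

In this sketch the limited source reads only three rows and is released immediately, while the source that no limit ever touched is still released when the task ends.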

However, this is a non-trivial change and is risky to backport to Spark 2.3.

This PR proposes to revert #18955 in Spark 2.3. The memory leak is not a
big issue: when a task ends, Spark releases all the pages allocated by that
task, which effectively releases most of the resources.

I'll submit an exhaustive fix to master later.

## How was this patch tested?

N/A

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/cloud-fan/spark limit

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21573.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #21573

----

commit 2b20b3c2ac5e7312097ba23e4c3b130317d56f26

Author: Wenchen Fan <wenchen@...>

Date: 2018-06-15T00:51:00Z

revert [SPARK-21743][SQL] top-most limit should not cause memory leak

----
