Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21782#discussion_r203613170

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/FilterPushdownBenchmark.scala

---

@@ -394,6 +394,41 @@ class FilterPushdownBenchmark extends SparkFunSuite

with BenchmarkBeforeAndAfter

}

}

}

+

+ ignore("Pushdown benchmark for RANGE PARTITION BY/DISTRIBUTE BY") {

--- End diff --

The range partition is better sorted, so the RowGroups can be skipped more

when filter. This is an example:

```scala

test("SPARK-24816") {

withTable("tbl") {

withSQLConf(SQLConf.SHUFFLE_PARTITIONS.key -> "4") {

spark.range(100).createTempView("tbl")

spark.sql("select * from tbl DISTRIBUTE BY id SORT BY

id").write.parquet("/tmp/spark/parquet/hash")

spark.sql("select * from tbl RANGE PARTITION BY id SORT BY

id").write.parquet("/tmp/spark/parquet/range")

}

}

}

```

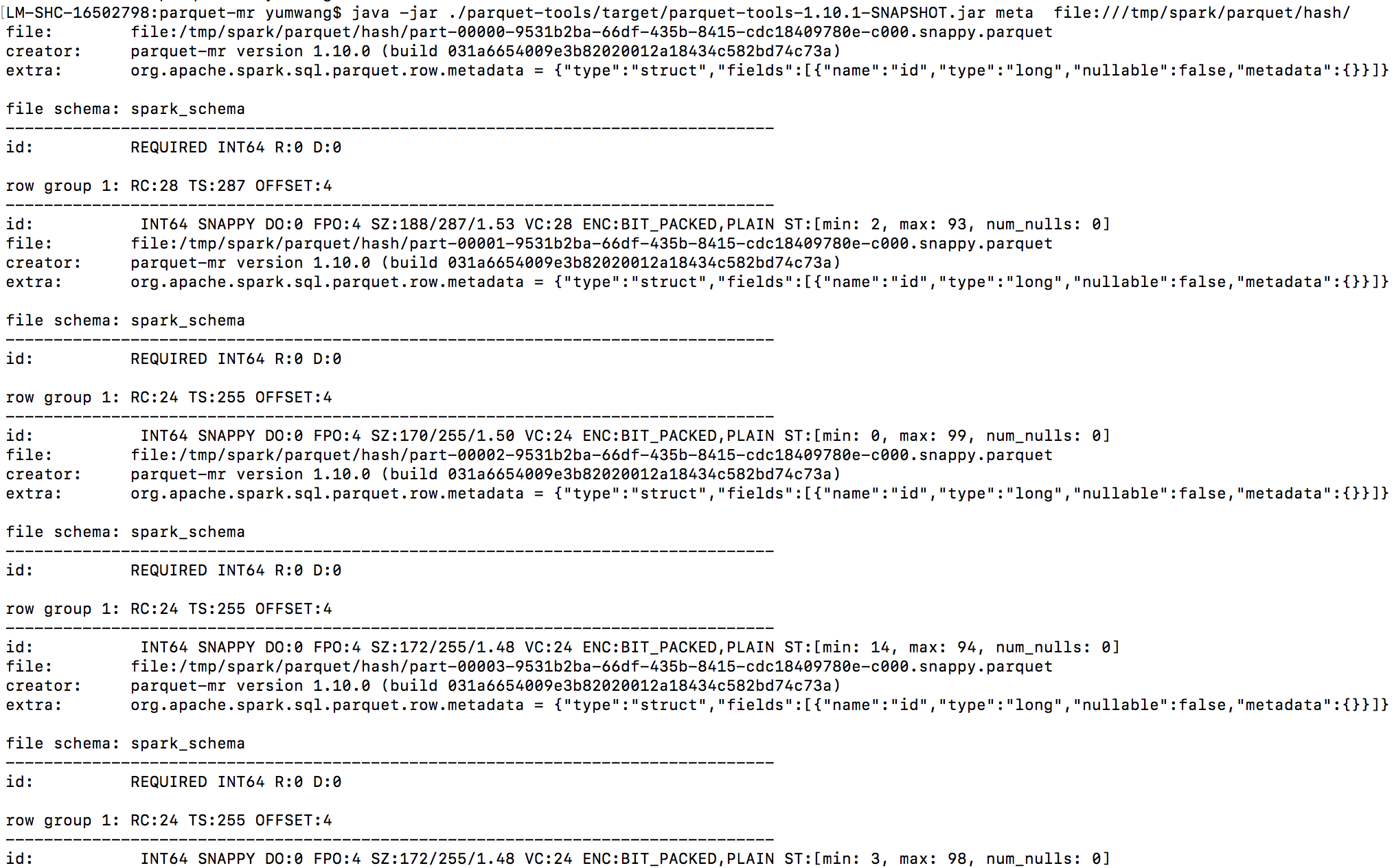

Column statistics info after `HashPartitioning`:

File | id column statistics

--- | ---

part-00000 | min: 2, max: 93

part-00001 | min: 0, max: 99

part-00002 | min: 14, max: 94

part-00003 | min: 3, max: 98

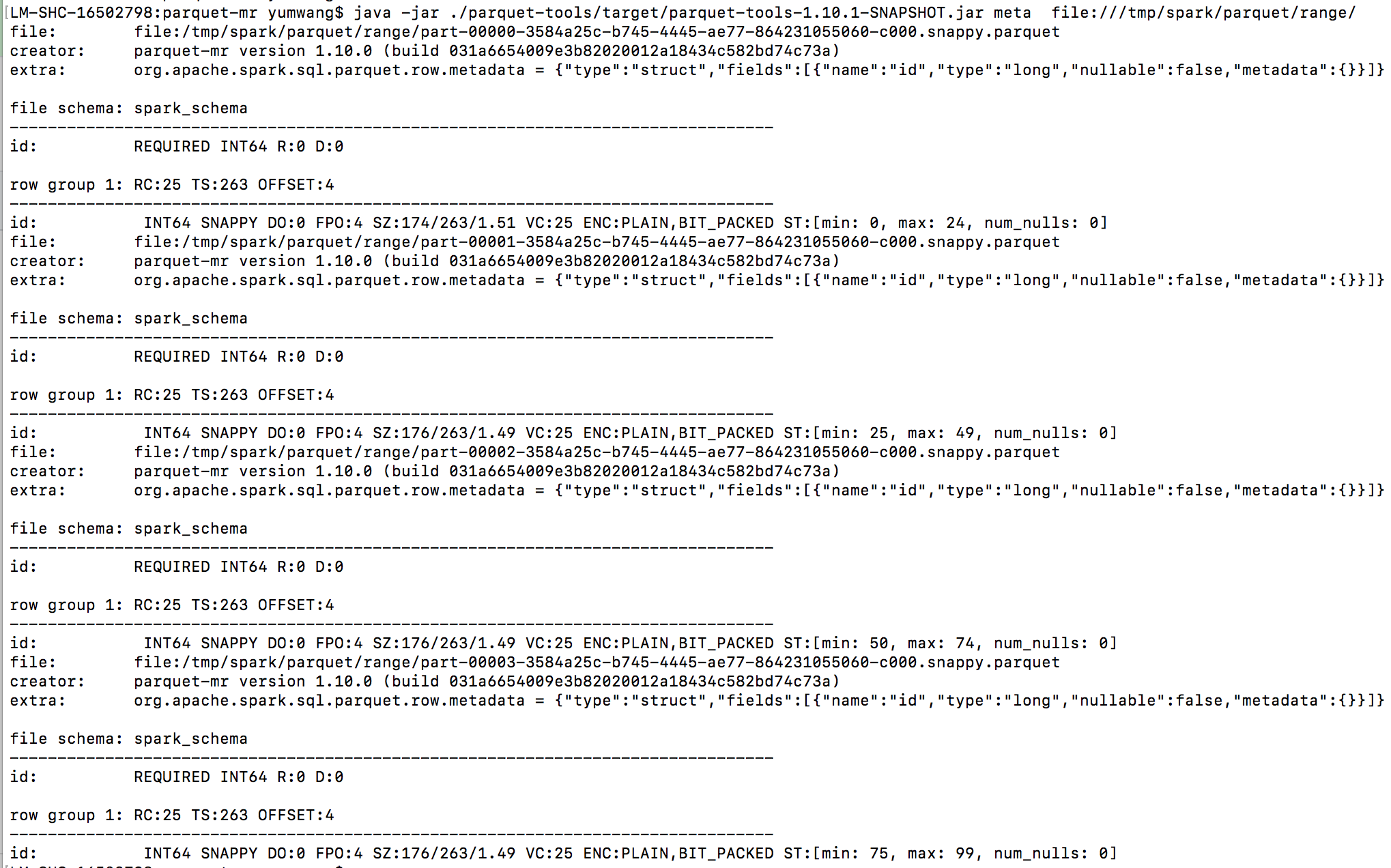

Column statistics info after `RangePartitioning`:

file | id column statistics

--- | ---

part-00000 | min: 0, max: 24

part-00001 | min: 25, max: 49

part-00002 | min: 50, max: 74

part-00003 | min: 75, max: 99

# File meta after `HashPartitioning`:

# File meta after `RangePartitioning`:

---

---------------------------------------------------------------------

To unsubscribe, e-mail: [email protected]

For additional commands, e-mail: [email protected]