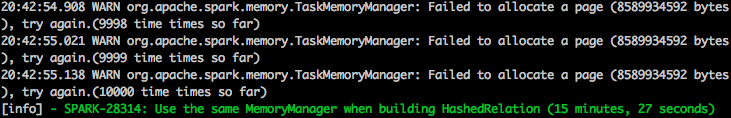

cxzl25 commented on issue #25084: [SPARK-28314][SQL] Use the same MemoryManager when building HashedRelation
URL: https://github.com/apache/spark/pull/25084#issuecomment-510474426

Thanks to @JoshRosen for explaining that we can't use the env's memory manager at the moment.

I implemented a simple change that records the call depth of `TaskMemoryManager#allocatePage` and throws `SparkOutOfMemoryError` once it exceeds 10000. Reaching 10000 attempts does take a while, and the CPU load gets somewhat high in the meantime. It also writes 10000 log entries for the failed allocations. I am not sure what threshold is reasonable before throwing the exception.

Also, could we respect the current configuration when creating the dummy `TaskMemoryManager`, instead of using `Long.MaxValue`?

```scala
// Size the dummy memory manager from the current conf instead of Long.MaxValue limits
val memoryManager: MemoryManager = UnifiedMemoryManager(conf, numUsableCores)
```
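
To make the depth-limited retry idea concrete, here is a minimal, self-contained Scala sketch. It does not use Spark: `BoundedPageAllocator`, `tryAllocate`, `maxAttempts` and `PageAllocationError` are hypothetical names, with `PageAllocationError` standing in for `SparkOutOfMemoryError`, and an `OutOfMemoryError` thrown by `tryAllocate` stands in for a failed page allocation. Each failure retries with `depth + 1`, and once `maxAttempts` failures have been recorded the allocator gives up instead of retrying indefinitely. The default of 10000 only mirrors the threshold mentioned in the comment.

```scala
import scala.annotation.tailrec

/** Hypothetical stand-in for Spark's SparkOutOfMemoryError (not the real class). */
final class PageAllocationError(message: String) extends RuntimeException(message)

/**
 * Hypothetical sketch of a depth-limited allocatePage. `tryAllocate` may throw
 * OutOfMemoryError; every failure is retried with depth + 1 until
 * `maxAttempts` failures have been recorded, at which point we give up.
 */
final class BoundedPageAllocator(
    tryAllocate: Long => Array[Byte],
    maxAttempts: Int = 10000) {

  require(maxAttempts > 0, "maxAttempts must be positive")

  def allocatePage(size: Long): Array[Byte] = allocate(size, depth = 0)

  @tailrec
  private def allocate(size: Long, depth: Int): Array[Byte] = {
    // Stop once the recorded depth reaches the limit instead of retrying forever.
    if (depth >= maxAttempts) {
      throw new PageAllocationError(
        s"Unable to acquire $size bytes after $depth failed allocation attempts")
    }
    // Catch the failure here so the recursive call below stays in tail position.
    val page =
      try Some(tryAllocate(size))
      catch { case _: OutOfMemoryError => None }
    page match {
      case Some(p) => p
      case None    => allocate(size, depth + 1)
    }
  }
}
```

For example, wrapping an allocator that always throws with `maxAttempts = 5` fails with `PageAllocationError` after five attempts rather than looping and logging until the limit is hit at a much higher count.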

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.