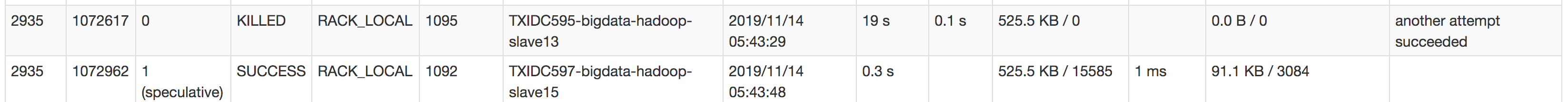

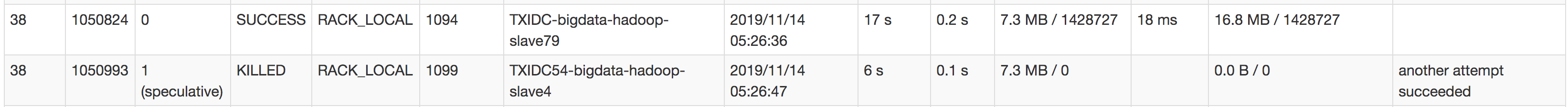

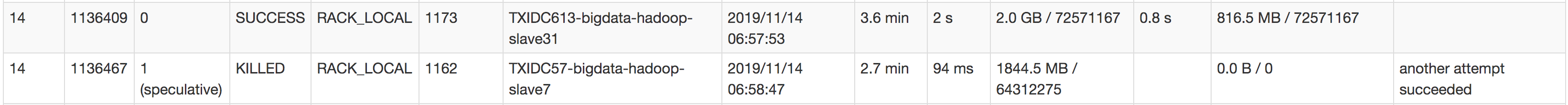

Deegue opened a new pull request #26541: [SPARK-29786][SQL] Optimize speculation performance by minimum runtime limit
URL: https://github.com/apache/spark/pull/26541

### What changes were proposed in this pull request?

The minimum runtime before a task can be speculated used to be a fixed value of 100ms. This means that tasks which finish within seconds are also speculated, and more executors are required as a result.

To resolve this, we add `spark.speculation.minRuntime` to control the minimum runtime limit for speculation. By adjusting `spark.speculation.minRuntime`, we can reduce the number of normal tasks that get speculated.

_**Example:**_

Tasks that don't need to be speculated: short tasks that finish within seconds.

Tasks that are more likely to go wrong and need to be speculated: long-running tasks, especially shuffle tasks with a large amount of data, which can take minutes or even hours.

### Why are the changes needed?

To improve speculation performance by reducing the number of speculated tasks that do not actually need speculation.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Unit tests.
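
To illustrate the effect of the proposed limit, here is a minimal sketch of the decision in Python. It is a simplified model, not Spark's scheduler code: the function names are hypothetical, the rule "threshold = max(multiplier × median duration of finished tasks, minimum runtime)" is an assumption about how the limit is applied, and the multiplier of 1.5 is only an illustrative value.

```python
from statistics import median


def speculation_threshold_ms(finished_durations_ms, multiplier=1.5, min_runtime_ms=100):
    """Return the runtime (ms) a task must exceed before it may be speculated.

    Assumed model: the larger of (multiplier * median finished duration)
    and the configured minimum runtime. Names and defaults are hypothetical.
    """
    return max(multiplier * median(finished_durations_ms), min_runtime_ms)


def is_speculatable(running_ms, finished_durations_ms, multiplier=1.5, min_runtime_ms=100):
    """True if a still-running task has exceeded the speculation threshold."""
    threshold = speculation_threshold_ms(finished_durations_ms, multiplier, min_runtime_ms)
    return running_ms > threshold


# A stage whose tasks finish in a few hundred milliseconds.
short_stage = [200, 300, 400]

# With the fixed 100ms floor, a 500ms task is already a speculation candidate.
fixed_floor = is_speculatable(500, short_stage, min_runtime_ms=100)

# With a floor of 5 seconds (the kind of value the new setting would allow),
# the same task is left alone.
raised_floor = is_speculatable(500, short_stage, min_runtime_ms=5000)
```

Under this model, raising the floor only suppresses speculation for tasks that are short in absolute terms; a long shuffle task that runs well past both the floor and the scaled median is still picked up.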
