[GitHub] spark issue #21863: [SPARK-18874][SQL][FOLLOW-UP] Improvement type mismatche...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21863 Thanks! Merged to master. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21822 **[Test build #93533 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93533/testReport)** for PR 21822 at commit [`f2f1a97`](https://github.com/apache/spark/commit/f2f1a97e447a41e8b9b6c094376d32b32af00991). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21822 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21822 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93533/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21863: [SPARK-18874][SQL][FOLLOW-UP] Improvement type mi...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/21863 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21869 **[Test build #93534 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93534/testReport)** for PR 21869 at commit [`a45bf36`](https://github.com/apache/spark/commit/a45bf360d923e8b187f6579b4a73d24a9222198a). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21834 **[Test build #93532 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93532/testReport)** for PR 21834 at commit [`1041a38`](https://github.com/apache/spark/commit/1041a38571eb4daf66a23d37d5bf51a1abb8d74c). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21834 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93532/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21834 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93534/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21869 retest this please --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21869 cc @maryannxue @gengliangwang --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/21834 retest this please --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21866: [SPARK-24768][FollowUp][SQL]Avro migration followup: cha...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21866 ok to test --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21866: [SPARK-24768][FollowUp][SQL]Avro migration followup: cha...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21866 retest this please --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21834 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1303/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21834 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1302/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21866: [SPARK-24768][FollowUp][SQL]Avro migration followup: cha...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21866 **[Test build #93536 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93536/testReport)** for PR 21866 at commit [`cff6f2a`](https://github.com/apache/spark/commit/cff6f2a0459e8cc4e48f28bde8103ea44ce5a1ab). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21834: [SPARK-22814][SQL] Support Date/Timestamp in a JDBC part...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21834 **[Test build #93537 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93537/testReport)** for PR 21834 at commit [`1041a38`](https://github.com/apache/spark/commit/1041a38571eb4daf66a23d37d5bf51a1abb8d74c). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21869 **[Test build #93535 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93535/testReport)** for PR 21869 at commit [`a45bf36`](https://github.com/apache/spark/commit/a45bf360d923e8b187f6579b4a73d24a9222198a). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21803: [SPARK-24849][SPARK-24911][SQL] Converting a value of St...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21803 @MaxGekk Please include the test case for SHOW CREATE TABLE. Thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21866: [SPARK-24768][FollowUp][SQL]Avro migration followup: cha...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21866 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1304/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21866: [SPARK-24768][FollowUp][SQL]Avro migration followup: cha...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21866 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21850: [SPARK-24892] [SQL] Simplify `CaseWhen` to `If` when the...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21850 Personally, I do not think we need this extra case. > If primitive has more opportunities for further optimization. Could you explain more? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21870: Branch 2.3

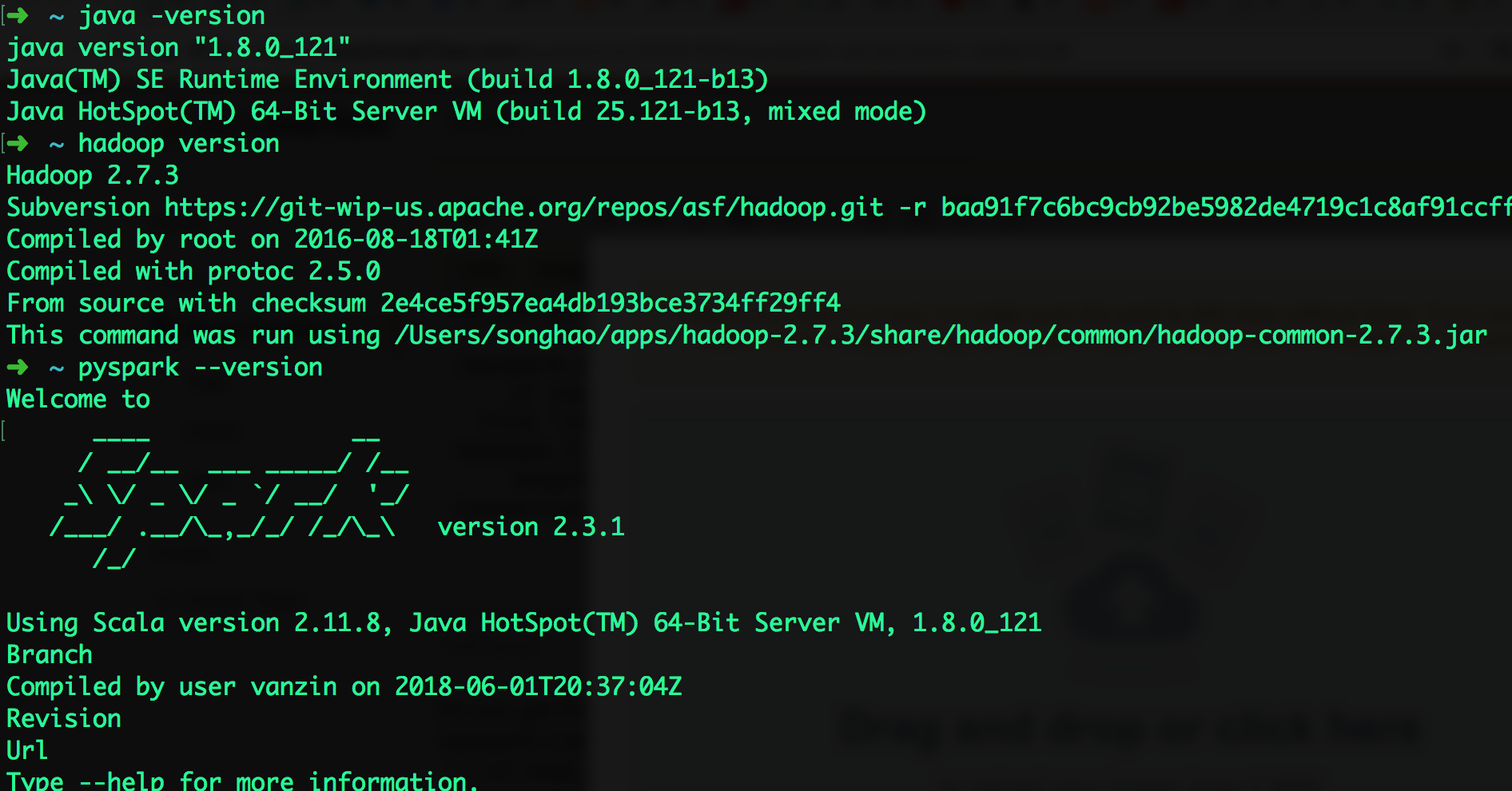

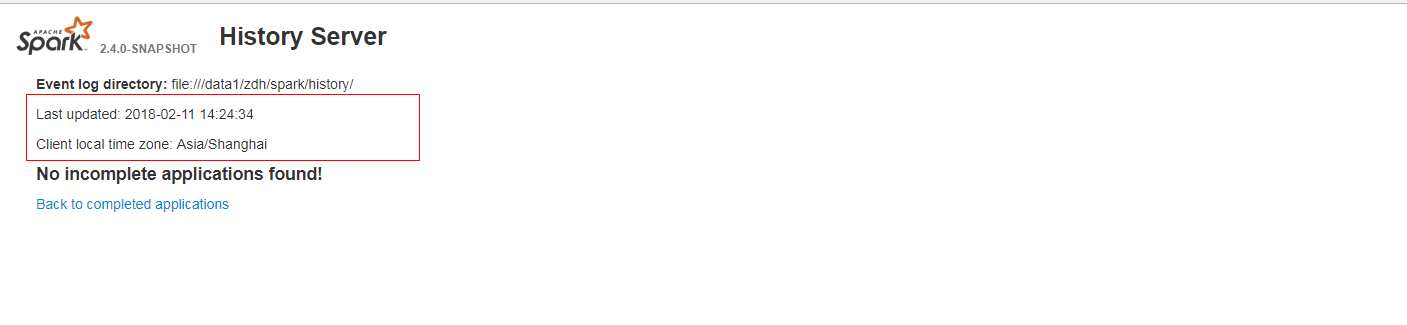

GitHub user lovezeropython opened a pull request: https://github.com/apache/spark/pull/21870 Branch 2.3 EOFError # ConnectionResetError: [Errno 54] Connection reset by peer (Please fill in changes proposed in this fix) ``` /pyspark.zip/pyspark/worker.py", line 255, in main if read_int(infile) == SpecialLengths.END_OF_STREAM: File "/Users/songhao/apps/spark-2.3.1-bin-hadoop2.7/python/lib/pyspark.zip/pyspark/serializers.py", line 683, in read_int length = stream.read(4) ConnectionResetError: [Errno 54] Connection reset by peerenter code here ```  You can merge this pull request into a Git repository by running: $ git pull https://github.com/apache/spark branch-2.3 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21870.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21870 commit 3737c3d32bb92e73cadaf3b1b9759d9be00b288d Author: gatorsmile Date: 2018-02-13T06:05:13Z [SPARK-20090][FOLLOW-UP] Revert the deprecation of `names` in PySpark ## What changes were proposed in this pull request? Deprecating the field `name` in PySpark is not expected. This PR is to revert the change. ## How was this patch tested? N/A Author: gatorsmile Closes #20595 from gatorsmile/removeDeprecate. (cherry picked from commit 407f67249639709c40c46917700ed6dd736daa7d) Signed-off-by: hyukjinkwon commit 1c81c0c626f115fbfe121ad6f6367b695e9f3b5f Author: guoxiaolong Date: 2018-02-13T12:23:10Z [SPARK-23384][WEB-UI] When it has no incomplete(completed) applications found, the last updated time is not formatted and client local time zone is not show in history server web ui. ## What changes were proposed in this pull request? When it has no incomplete(completed) applications found, the last updated time is not formatted and client local time zone is not show in history server web ui. It is a bug. fix before:  fix after:  ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Author: guoxiaolong Closes #20573 from guoxiaolongzte/SPARK-23384. (cherry picked from commit 300c40f50ab4258d697f06a814d1491dc875c847) Signed-off-by: Sean Owen commit dbb1b399b6cf8372a3659c472f380142146b1248 Author: huangtengfei Date: 2018-02-13T15:59:21Z [SPARK-23053][CORE] taskBinarySerialization and task partitions calculate in DagScheduler.submitMissingTasks should keep the same RDD checkpoint status ## What changes were proposed in this pull request? When we run concurrent jobs using the same rdd which is marked to do checkpoint. If one job has finished running the job, and start the process of RDD.doCheckpoint, while another job is submitted, then submitStage and submitMissingTasks will be called. In [submitMissingTasks](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L961), will serialize taskBinaryBytes and calculate task partitions which are both affected by the status of checkpoint, if the former is calculated before doCheckpoint finished, while the latter is calculated after doCheckpoint finished, when run task, rdd.compute will be called, for some rdds with particular partition type such as [UnionRDD](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/rdd/UnionRDD.scala) who will do partition type cast, will get a ClassCastException because the part params is actually a CheckpointRDDPartition. This error occurs because rdd.doCheckpoint occurs in the same thread that called sc.runJob, while the task serialization occurs in the DAGSchedulers event loop. ## How was this patch tested? the exist uts and also add a test case in DAGScheduerSuite to show the exception case. Author: huangtengfei Closes #20244 from ivoson/branch-taskpart-mistype. (cherry picked from commit 091a000d27f324de8c5c527880854ecfcf5de9a4) Signed-off-by: Imran Rashid commit ab01ba718c7752b564e801a1ea546aedc2055dc0 Author: Bogdan Raducanu Date: 2018-02-13T17:49:52Z [SPARK-23316][SQL]

[GitHub] spark issue #21870: Branch 2.3

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21870 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21860: [SPARK-24901][SQL]Merge the codegen of RegularHashMap an...

Github user heary-cao commented on the issue: https://github.com/apache/spark/pull/21860 @kiszk, I have add a ignore test case to verifies the newly added code generation. can you help me to review it if you have some time. thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21870: Branch 2.3

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21870 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21870: Branch 2.3

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21870 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21857: [SPARK-21274][SQL] Implement EXCEPT ALL clause.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21857#discussion_r204977789

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

---

@@ -1275,6 +1276,64 @@ object ReplaceExceptWithAntiJoin extends

Rule[LogicalPlan] {

}

}

+/**

+ * Replaces logical [[ExceptAll]] operator using a combination of Union,

Aggregate

+ * and Generate operator.

+ *

+ * Input Query :

+ * {{{

+ *SELECT c1 FROM ut1 EXCEPT ALL SELECT c1 FROM ut2

+ * }}}

+ *

+ * Rewritten Query:

+ * {{{

+ * SELECT c1

+ * FROM (

+ * SELECT replicate_rows(sum_val, c1) AS (sum_val, c1)

+ * FROM (

+ * SELECT c1, cnt, sum_val

--- End diff --

nit: there is no `cnt`?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19691: [SPARK-14922][SPARK-17732][SQL]ALTER TABLE DROP P...

Github user DazhuangSu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19691#discussion_r205013855

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/command/ddl.scala ---

@@ -510,40 +511,86 @@ case class AlterTableRenamePartitionCommand(

*

* The syntax of this command is:

* {{{

- * ALTER TABLE table DROP [IF EXISTS] PARTITION spec1[, PARTITION spec2,

...] [PURGE];

+ * ALTER TABLE table DROP [IF EXISTS] PARTITION (spec1, expr1)

+ * [, PARTITION (spec2, expr2), ...] [PURGE];

* }}}

*/

case class AlterTableDropPartitionCommand(

tableName: TableIdentifier,

-specs: Seq[TablePartitionSpec],

+partitions: Seq[(TablePartitionSpec, Seq[Expression])],

ifExists: Boolean,

purge: Boolean,

retainData: Boolean)

- extends RunnableCommand {

+ extends RunnableCommand with PredicateHelper {

override def run(sparkSession: SparkSession): Seq[Row] = {

val catalog = sparkSession.sessionState.catalog

val table = catalog.getTableMetadata(tableName)

+val resolver = sparkSession.sessionState.conf.resolver

DDLUtils.verifyAlterTableType(catalog, table, isView = false)

DDLUtils.verifyPartitionProviderIsHive(sparkSession, table, "ALTER

TABLE DROP PARTITION")

-val normalizedSpecs = specs.map { spec =>

- PartitioningUtils.normalizePartitionSpec(

-spec,

-table.partitionColumnNames,

-table.identifier.quotedString,

-sparkSession.sessionState.conf.resolver)

+val toDrop = partitions.flatMap { partition =>

+ if (partition._1.isEmpty && !partition._2.isEmpty) {

+// There are only expressions in this drop condition.

+extractFromPartitionFilter(partition._2, catalog, table, resolver)

+ } else if (!partition._1.isEmpty && partition._2.isEmpty) {

+// There are only partitionSpecs in this drop condition.

+extractFromPartitionSpec(partition._1, table, resolver)

+ } else if (!partition._1.isEmpty && !partition._2.isEmpty) {

+// This drop condition has both partitionSpecs and expressions.

+extractFromPartitionFilter(partition._2, catalog, table,

resolver).intersect(

--- End diff --

@mgaido91 I understand your point, yes it would be inefficient. I will work

on this soon

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user ueshin commented on the issue: https://github.com/apache/spark/pull/21869 LGTM, pending Jenkins. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205020970

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetReadSupport.scala

---

@@ -71,9 +80,22 @@ private[parquet] class ParquetReadSupport(val convertTz:

Option[TimeZone])

StructType.fromString(schemaString)

}

-val parquetRequestedSchema =

+val clippedParquetSchema =

ParquetReadSupport.clipParquetSchema(context.getFileSchema,

catalystRequestedSchema)

+val parquetRequestedSchema = if (parquetMrCompatibility) {

+ // Parquet-mr will throw an exception if we try to read a superset

of the file's schema.

--- End diff --

This change is not part of this PR.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205021140

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetSchemaPruningSuite.scala

---

@@ -0,0 +1,156 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.datasources.parquet

+

+import java.io.File

+

+import org.apache.spark.sql.{QueryTest, Row}

+import org.apache.spark.sql.execution.FileSchemaPruningTest

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SharedSQLContext

+

+class ParquetSchemaPruningSuite

+extends QueryTest

+with ParquetTest

+with FileSchemaPruningTest

+with SharedSQLContext {

--- End diff --

No comment.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205021282

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/planning/ProjectionOverSchema.scala

---

@@ -0,0 +1,62 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.planning

+

+import org.apache.spark.sql.catalyst.expressions._

+import org.apache.spark.sql.types._

+

+/**

+ * A Scala extractor that projects an expression over a given schema. Data

types,

+ * field indexes and field counts of complex type extractors and attributes

+ * are adjusted to fit the schema. All other expressions are left as-is.

This

+ * class is motivated by columnar nested schema pruning.

+ */

+case class ProjectionOverSchema(schema: StructType) {

--- End diff --

Okay. So...

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205021469

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetSchemaPruning.scala

---

@@ -0,0 +1,153 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.datasources.parquet

+

+import org.apache.spark.sql.catalyst.expressions.{And, Attribute,

Expression, NamedExpression}

+import org.apache.spark.sql.catalyst.planning.{PhysicalOperation,

ProjectionOverSchema, SelectedField}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LogicalPlan,

Project}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.execution.datasources.{HadoopFsRelation,

LogicalRelation}

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.types.{ArrayType, DataType, MapType,

StructField, StructType}

+

+/**

+ * Prunes unnecessary Parquet columns given a [[PhysicalOperation]] over a

+ * [[ParquetRelation]]. By "Parquet column", we mean a column as defined

in the

+ * Parquet format. In Spark SQL, a root-level Parquet column corresponds

to a

+ * SQL column, and a nested Parquet column corresponds to a

[[StructField]].

+ */

+private[sql] object ParquetSchemaPruning extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan =

+if (SQLConf.get.nestedSchemaPruningEnabled) {

+ apply0(plan)

+} else {

+ plan

+}

+

+ private def apply0(plan: LogicalPlan): LogicalPlan =

+plan transformDown {

+ case op @ PhysicalOperation(projects, filters,

+ l @ LogicalRelation(hadoopFsRelation @ HadoopFsRelation(_, _,

+dataSchema, _, parquetFormat: ParquetFileFormat, _), _, _, _))

=>

+val projectionRootFields = projects.flatMap(getRootFields)

+val filterRootFields = filters.flatMap(getRootFields)

+val requestedRootFields = (projectionRootFields ++

filterRootFields).distinct

+

+// If [[requestedRootFields]] includes a nested field, continue.

Otherwise,

+// return [[op]]

+if (requestedRootFields.exists { case RootField(_, derivedFromAtt)

=> !derivedFromAtt }) {

+ val prunedSchema = requestedRootFields

+.map { case RootField(field, _) => StructType(Array(field)) }

+.reduceLeft(_ merge _)

+ val dataSchemaFieldNames = dataSchema.fieldNames.toSet

+ val prunedDataSchema =

+StructType(prunedSchema.filter(f =>

dataSchemaFieldNames.contains(f.name)))

+

+ // If the data schema is different from the pruned data schema,

continue. Otherwise,

+ // return [[op]]. We effect this comparison by counting the

number of "leaf" fields in

+ // each schemata, assuming the fields in [[prunedDataSchema]]

are a subset of the fields

+ // in [[dataSchema]].

+ if (countLeaves(dataSchema) > countLeaves(prunedDataSchema)) {

+val prunedParquetRelation =

+ hadoopFsRelation.copy(dataSchema =

prunedDataSchema)(hadoopFsRelation.sparkSession)

+

+// We need to replace the expression ids of the pruned

relation output attributes

+// with the expression ids of the original relation output

attributes so that

+// references to the original relation's output are not broken

+val outputIdMap = l.output.map(att => (att.name,

att.exprId)).toMap

+val prunedRelationOutput =

+ prunedParquetRelation

+.schema

+.toAttributes

+.map {

+ case att if outputIdMap.contains(att.name) =>

+att.withExprId(outputIdMap(att.name))

+ case att => att

+}

+val prunedRelation =

+ l.copy(relation = prunedParquetRe

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request: https://github.com/apache/spark/pull/21320#discussion_r205021712 --- Diff: sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/planning/SelectedFieldSuite.scala --- @@ -0,0 +1,388 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + *http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.spark.sql.catalyst.planning + +import org.scalatest.BeforeAndAfterAll +import org.scalatest.exceptions.TestFailedException + +import org.apache.spark.SparkFunSuite +import org.apache.spark.sql.catalyst.dsl.plans._ +import org.apache.spark.sql.catalyst.expressions.NamedExpression +import org.apache.spark.sql.catalyst.parser.CatalystSqlParser +import org.apache.spark.sql.catalyst.plans.logical.LocalRelation +import org.apache.spark.sql.types._ + +// scalastyle:off line.size.limit --- End diff -- No comment. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205022799

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetSchemaPruningSuite.scala

---

@@ -0,0 +1,156 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.datasources.parquet

+

+import java.io.File

+

+import org.apache.spark.sql.{QueryTest, Row}

+import org.apache.spark.sql.execution.FileSchemaPruningTest

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SharedSQLContext

+

+class ParquetSchemaPruningSuite

+extends QueryTest

+with ParquetTest

+with FileSchemaPruningTest

+with SharedSQLContext {

+ case class FullName(first: String, middle: String, last: String)

+ case class Contact(name: FullName, address: String, pets: Int, friends:

Array[FullName] = Array(),

+relatives: Map[String, FullName] = Map())

+

+ val contacts =

+Contact(FullName("Jane", "X.", "Doe"), "123 Main Street", 1) ::

+Contact(FullName("John", "Y.", "Doe"), "321 Wall Street", 3) :: Nil

+

+ case class Name(first: String, last: String)

+ case class BriefContact(name: Name, address: String)

+

+ val briefContacts =

+BriefContact(Name("Janet", "Jones"), "567 Maple Drive") ::

+BriefContact(Name("Jim", "Jones"), "6242 Ash Street") :: Nil

+

+ case class ContactWithDataPartitionColumn(name: FullName, address:

String, pets: Int,

+friends: Array[FullName] = Array(), relatives: Map[String, FullName] =

Map(), p: Int)

+

+ case class BriefContactWithDataPartitionColumn(name: Name, address:

String, p: Int)

+

+ val contactsWithDataPartitionColumn =

+contacts.map { case Contact(name, address, pets, friends, relatives) =>

+ ContactWithDataPartitionColumn(name, address, pets, friends,

relatives, 1) }

+ val briefContactsWithDataPartitionColumn =

+briefContacts.map { case BriefContact(name: Name, address: String) =>

+ BriefContactWithDataPartitionColumn(name, address, 2) }

+

+ testSchemaPruning("select a single complex field") {

+val query = sql("select name.middle from contacts")

+checkScanSchemata(query, "struct>")

+checkAnswer(query, Row("X.") :: Row("Y.") :: Row(null) :: Row(null) ::

Nil)

+ }

+

+ testSchemaPruning("select a single complex field and the partition

column") {

+val query = sql("select name.middle, p from contacts")

+checkScanSchemata(query, "struct>")

+checkAnswer(query, Row("X.", 1) :: Row("Y.", 1) :: Row(null, 2) ::

Row(null, 2) :: Nil)

+ }

+

+ ignore("partial schema intersection - select missing subfield") {

+val query = sql("select name.middle, address from contacts where p=2")

+checkScanSchemata(query,

"struct,address:string>")

+checkAnswer(query,

+ Row(null, "567 Maple Drive") ::

+ Row(null, "6242 Ash Street") :: Nil)

+ }

+

+ testSchemaPruning("no unnecessary schema pruning") {

+val query =

+ sql("select name.last, name.middle, name.first, relatives[''].last,

" +

+"relatives[''].middle, relatives[''].first, friends[0].last,

friends[0].middle, " +

+"friends[0].first, pets, address from contacts where p=2")

+// We've selected every field in the schema. Therefore, no schema

pruning should be performed.

+// We check this by asserting that the scanned schema of the query is

identical to the schema

+// of the contacts relation, even though the fields are selected in

different orders.

+checkScanSchemata(query,

+

"struct,address:string,pets:int,"

+

+ "friends:array>," +

+

"relatives:map>>")

+checkAnswer(query,

+ Row("Jones", null, "Janet", null, null, null, null, null, null,

null, "567 Maple Drive") ::

+ Row("Jones", null, "Jim", null, null, null, null, null, null, null,

"6242 Ash

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205022895

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetSchemaPruning.scala

---

@@ -0,0 +1,153 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.datasources.parquet

+

+import org.apache.spark.sql.catalyst.expressions.{And, Attribute,

Expression, NamedExpression}

+import org.apache.spark.sql.catalyst.planning.{PhysicalOperation,

ProjectionOverSchema, SelectedField}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LogicalPlan,

Project}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.execution.datasources.{HadoopFsRelation,

LogicalRelation}

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.types.{ArrayType, DataType, MapType,

StructField, StructType}

+

+/**

+ * Prunes unnecessary Parquet columns given a [[PhysicalOperation]] over a

+ * [[ParquetRelation]]. By "Parquet column", we mean a column as defined

in the

+ * Parquet format. In Spark SQL, a root-level Parquet column corresponds

to a

+ * SQL column, and a nested Parquet column corresponds to a

[[StructField]].

+ */

+private[sql] object ParquetSchemaPruning extends Rule[LogicalPlan] {

+ override def apply(plan: LogicalPlan): LogicalPlan =

+if (SQLConf.get.nestedSchemaPruningEnabled) {

+ apply0(plan)

+} else {

+ plan

+}

+

+ private def apply0(plan: LogicalPlan): LogicalPlan =

+plan transformDown {

+ case op @ PhysicalOperation(projects, filters,

+ l @ LogicalRelation(hadoopFsRelation @ HadoopFsRelation(_, _,

+dataSchema, _, parquetFormat: ParquetFileFormat, _), _, _, _))

=>

+val projectionRootFields = projects.flatMap(getRootFields)

+val filterRootFields = filters.flatMap(getRootFields)

+val requestedRootFields = (projectionRootFields ++

filterRootFields).distinct

+

+// If [[requestedRootFields]] includes a nested field, continue.

Otherwise,

+// return [[op]]

+if (requestedRootFields.exists { case RootField(_, derivedFromAtt)

=> !derivedFromAtt }) {

+ val prunedSchema = requestedRootFields

+.map { case RootField(field, _) => StructType(Array(field)) }

+.reduceLeft(_ merge _)

+ val dataSchemaFieldNames = dataSchema.fieldNames.toSet

+ val prunedDataSchema =

+StructType(prunedSchema.filter(f =>

dataSchemaFieldNames.contains(f.name)))

+

+ // If the data schema is different from the pruned data schema,

continue. Otherwise,

+ // return [[op]]. We effect this comparison by counting the

number of "leaf" fields in

+ // each schemata, assuming the fields in [[prunedDataSchema]]

are a subset of the fields

+ // in [[dataSchema]].

+ if (countLeaves(dataSchema) > countLeaves(prunedDataSchema)) {

--- End diff --

No comment.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21850: [SPARK-24892] [SQL] Simplify `CaseWhen` to `If` w...

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21850#discussion_r205022884

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

---

@@ -414,6 +414,16 @@ object SimplifyConditionals extends Rule[LogicalPlan]

with PredicateHelper {

// these branches can be pruned away

val (h, t) = branches.span(_._1 != TrueLiteral)

CaseWhen( h :+ t.head, None)

+

+ case CaseWhen(branches, elseValue) if branches.length == 1 =>

+// Using pattern matching like `CaseWhen((cond, branchValue) ::

Nil, elseValue)` will not

+// work since the implementation of `branches` can be

`ArrayBuffer`. A full test is in

--- End diff --

How about:

```scala

case CaseWhen(Seq((cond, trueValue)), elseValue) =>

```

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21139: [SPARK-24066][SQL]Add a window exchange rule to eliminat...

Github user heary-cao commented on the issue: https://github.com/apache/spark/pull/21139 @hvanhovell, can you help review it again if you have some time, thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user mallman commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r205022974

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/planning/SelectedFieldSuite.scala

---

@@ -0,0 +1,388 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.planning

+

+import org.scalatest.BeforeAndAfterAll

+import org.scalatest.exceptions.TestFailedException

+

+import org.apache.spark.SparkFunSuite

+import org.apache.spark.sql.catalyst.dsl.plans._

+import org.apache.spark.sql.catalyst.expressions.NamedExpression

+import org.apache.spark.sql.catalyst.parser.CatalystSqlParser

+import org.apache.spark.sql.catalyst.plans.logical.LocalRelation

+import org.apache.spark.sql.types._

+

+// scalastyle:off line.size.limit

+class SelectedFieldSuite extends SparkFunSuite with BeforeAndAfterAll {

+ // The test schema as a tree string, i.e. `schema.treeString`

+ // root

+ // |-- col1: string (nullable = false)

+ // |-- col2: struct (nullable = true)

+ // ||-- field1: integer (nullable = true)

+ // ||-- field2: array (nullable = true)

+ // |||-- element: integer (containsNull = false)

+ // ||-- field3: array (nullable = false)

+ // |||-- element: struct (containsNull = true)

+ // ||||-- subfield1: integer (nullable = true)

+ // ||||-- subfield2: integer (nullable = true)

+ // ||||-- subfield3: array (nullable = true)

+ // |||||-- element: integer (containsNull = true)

+ // ||-- field4: map (nullable = true)

+ // |||-- key: string

+ // |||-- value: struct (valueContainsNull = false)

+ // ||||-- subfield1: integer (nullable = true)

+ // ||||-- subfield2: array (nullable = true)

+ // |||||-- element: integer (containsNull = false)

+ // ||-- field5: array (nullable = false)

+ // |||-- element: struct (containsNull = true)

+ // ||||-- subfield1: struct (nullable = false)

+ // |||||-- subsubfield1: integer (nullable = true)

+ // |||||-- subsubfield2: integer (nullable = true)

+ // ||||-- subfield2: struct (nullable = true)

+ // |||||-- subsubfield1: struct (nullable = true)

+ // ||||||-- subsubsubfield1: string (nullable =

true)

+ // |||||-- subsubfield2: integer (nullable = true)

+ // ||-- field6: struct (nullable = true)

+ // |||-- subfield1: string (nullable = false)

+ // |||-- subfield2: string (nullable = true)

+ // ||-- field7: struct (nullable = true)

+ // |||-- subfield1: struct (nullable = true)

+ // ||||-- subsubfield1: integer (nullable = true)

+ // ||||-- subsubfield2: integer (nullable = true)

+ // ||-- field8: map (nullable = true)

+ // |||-- key: string

+ // |||-- value: array (valueContainsNull = false)

+ // ||||-- element: struct (containsNull = true)

+ // |||||-- subfield1: integer (nullable = true)

+ // |||||-- subfield2: array (nullable = true)

+ // ||||||-- element: integer (containsNull = false)

+ // ||-- field9: map (nullable = true)

+ // |||-- key: string

+ // |||-- value: integer (valueContainsNull = false)

+ // |-- col3: array (nullable = false)

+ // ||-- element: struct (containsNull = false)

+ // |||-- field1: struct (nullable = true)

+ // ||||-- subfield1: integer (nullable = false)

+ // ||||-- subfield2: integer (nullable = true)

+ // |||-- field2: map (nullable = true)

+ // ||||-- key: string

+ // ||||-- value: integer (valueContainsN

[GitHub] spark issue #21320: [SPARK-4502][SQL] Parquet nested column pruning - founda...

Github user mallman commented on the issue: https://github.com/apache/spark/pull/21320 > Regarding #21320 (comment), can you at least set this enable by default and see if some existing tests are broken or not? I have no intention to at this point, no. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Pyt...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21650#discussion_r205025311

--- Diff: python/pyspark/sql/tests.py ---

@@ -5060,6 +5049,147 @@ def test_type_annotation(self):

df = self.spark.range(1).select(pandas_udf(f=_locals['noop'],

returnType='bigint')('id'))

self.assertEqual(df.first()[0], 0)

+def test_mixed_udf(self):

+import pandas as pd

+from pyspark.sql.functions import col, udf, pandas_udf

+

+df = self.spark.range(0, 1).toDF('v')

+

+# Test mixture of multiple UDFs and Pandas UDFs

+

+@udf('int')

+def f1(x):

+assert type(x) == int

+return x + 1

+

+@pandas_udf('int')

+def f2(x):

+assert type(x) == pd.Series

+return x + 10

+

+@udf('int')

+def f3(x):

+assert type(x) == int

+return x + 100

+

+@pandas_udf('int')

+def f4(x):

+assert type(x) == pd.Series

+return x + 1000

+

+# Test mixed udfs in a single projection

+df1 = df \

+.withColumn('f1', f1(col('v'))) \

+.withColumn('f2', f2(col('v'))) \

+.withColumn('f3', f3(col('v'))) \

+.withColumn('f4', f4(col('v'))) \

+.withColumn('f2_f1', f2(col('f1'))) \

+.withColumn('f3_f1', f3(col('f1'))) \

+.withColumn('f4_f1', f4(col('f1'))) \

+.withColumn('f3_f2', f3(col('f2'))) \

+.withColumn('f4_f2', f4(col('f2'))) \

+.withColumn('f4_f3', f4(col('f3'))) \

+.withColumn('f3_f2_f1', f3(col('f2_f1'))) \

+.withColumn('f4_f2_f1', f4(col('f2_f1'))) \

+.withColumn('f4_f3_f1', f4(col('f3_f1'))) \

+.withColumn('f4_f3_f2', f4(col('f3_f2'))) \

+.withColumn('f4_f3_f2_f1', f4(col('f3_f2_f1')))

+

+# Test mixed udfs in a single expression

+df2 = df \

+.withColumn('f1', f1(col('v'))) \

+.withColumn('f2', f2(col('v'))) \

+.withColumn('f3', f3(col('v'))) \

+.withColumn('f4', f4(col('v'))) \

+.withColumn('f2_f1', f2(f1(col('v' \

+.withColumn('f3_f1', f3(f1(col('v' \

+.withColumn('f4_f1', f4(f1(col('v' \

+.withColumn('f3_f2', f3(f2(col('v' \

+.withColumn('f4_f2', f4(f2(col('v' \

+.withColumn('f4_f3', f4(f3(col('v' \

+.withColumn('f3_f2_f1', f3(f2(f1(col('v') \

+.withColumn('f4_f2_f1', f4(f2(f1(col('v') \

+.withColumn('f4_f3_f1', f4(f3(f1(col('v') \

+.withColumn('f4_f3_f2', f4(f3(f2(col('v') \

+.withColumn('f4_f3_f2_f1', f4(f3(f2(f1(col('v'))

+

+# expected result

+df3 = df \

+.withColumn('f1', df['v'] + 1) \

+.withColumn('f2', df['v'] + 10) \

+.withColumn('f3', df['v'] + 100) \

+.withColumn('f4', df['v'] + 1000) \

+.withColumn('f2_f1', df['v'] + 11) \

+.withColumn('f3_f1', df['v'] + 101) \

+.withColumn('f4_f1', df['v'] + 1001) \

+.withColumn('f3_f2', df['v'] + 110) \

+.withColumn('f4_f2', df['v'] + 1010) \

+.withColumn('f4_f3', df['v'] + 1100) \

+.withColumn('f3_f2_f1', df['v'] + 111) \

+.withColumn('f4_f2_f1', df['v'] + 1011) \

+.withColumn('f4_f3_f1', df['v'] + 1101) \

+.withColumn('f4_f3_f2', df['v'] + 1110) \

+.withColumn('f4_f3_f2_f1', df['v'] + )

+

+self.assertEquals(df3.collect(), df1.collect())

+self.assertEquals(df3.collect(), df2.collect())

+

+def test_mixed_udf_and_sql(self):

+import pandas as pd

+from pyspark.sql.functions import udf, pandas_udf

+

+df = self.spark.range(0, 1).toDF('v')

+

+# Test mixture of UDFs, Pandas UDFs and SQL expression.

+

+@udf('int')

+def f1(x):

+assert type(x) == int

+return x + 1

+

+def f2(x):

+return x + 10

+

+@pandas_udf('int')

+def f3(x):

+assert type(x) == pd.Series

+return x + 100

+

+df1 = df.withColumn('f1', f1(df['v'])) \

+.withColumn('f2', f2(df['v'])) \

+.withColumn('f3', f3(df['v'])) \

+.withColumn('f1_f2', f1(f2(df['v']))) \

+.withColumn('f1_f3', f1(f3(df['v']))) \

+.w

[GitHub] spark pull request #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Pyt...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21650#discussion_r205024958

--- Diff: python/pyspark/sql/tests.py ---

@@ -5060,6 +5049,147 @@ def test_type_annotation(self):

df = self.spark.range(1).select(pandas_udf(f=_locals['noop'],

returnType='bigint')('id'))

self.assertEqual(df.first()[0], 0)

+def test_mixed_udf(self):

+import pandas as pd

+from pyspark.sql.functions import col, udf, pandas_udf

+

+df = self.spark.range(0, 1).toDF('v')

+

+# Test mixture of multiple UDFs and Pandas UDFs

+

+@udf('int')

+def f1(x):

+assert type(x) == int

+return x + 1

+

+@pandas_udf('int')

+def f2(x):

+assert type(x) == pd.Series

+return x + 10

+

+@udf('int')

+def f3(x):

+assert type(x) == int

+return x + 100

+

+@pandas_udf('int')

+def f4(x):

+assert type(x) == pd.Series

+return x + 1000

+

+# Test mixed udfs in a single projection

+df1 = df \

+.withColumn('f1', f1(col('v'))) \

+.withColumn('f2', f2(col('v'))) \

+.withColumn('f3', f3(col('v'))) \

+.withColumn('f4', f4(col('v'))) \

+.withColumn('f2_f1', f2(col('f1'))) \

+.withColumn('f3_f1', f3(col('f1'))) \

--- End diff --

This looks testing udf + udf

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Pyt...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21650#discussion_r205025755

--- Diff: python/pyspark/sql/tests.py ---

@@ -5487,6 +5617,22 @@ def dummy_pandas_udf(df):

F.col('temp0.key') ==

F.col('temp1.key'))

self.assertEquals(res.count(), 5)

+def test_mixed_scalar_udfs_followed_by_grouby_apply(self):

+# Test Pandas UDF and scalar Python UDF followed by groupby apply

+from pyspark.sql.functions import udf, pandas_udf, PandasUDFType

+import pandas as pd

--- End diff --

not a big deal at all really .. but I would swap the import order

(thridparty, pyspark)

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Pyt...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21650#discussion_r205030035

--- Diff: python/pyspark/sql/tests.py ---

@@ -5060,6 +5049,147 @@ def test_type_annotation(self):

df = self.spark.range(1).select(pandas_udf(f=_locals['noop'],

returnType='bigint')('id'))

self.assertEqual(df.first()[0], 0)

+def test_mixed_udf(self):

+import pandas as pd

+from pyspark.sql.functions import col, udf, pandas_udf

+

+df = self.spark.range(0, 1).toDF('v')

+

+# Test mixture of multiple UDFs and Pandas UDFs

+

+@udf('int')

+def f1(x):

+assert type(x) == int

+return x + 1

+

+@pandas_udf('int')

+def f2(x):

+assert type(x) == pd.Series

+return x + 10

+

+@udf('int')

+def f3(x):

+assert type(x) == int

+return x + 100

+

+@pandas_udf('int')

+def f4(x):

+assert type(x) == pd.Series

+return x + 1000

+

+# Test mixed udfs in a single projection

+df1 = df \

+.withColumn('f1', f1(col('v'))) \

+.withColumn('f2', f2(col('v'))) \

+.withColumn('f3', f3(col('v'))) \

+.withColumn('f4', f4(col('v'))) \

+.withColumn('f2_f1', f2(col('f1'))) \

+.withColumn('f3_f1', f3(col('f1'))) \

+.withColumn('f4_f1', f4(col('f1'))) \

+.withColumn('f3_f2', f3(col('f2'))) \

+.withColumn('f4_f2', f4(col('f2'))) \

+.withColumn('f4_f3', f4(col('f3'))) \

+.withColumn('f3_f2_f1', f3(col('f2_f1'))) \

+.withColumn('f4_f2_f1', f4(col('f2_f1'))) \

+.withColumn('f4_f3_f1', f4(col('f3_f1'))) \

+.withColumn('f4_f3_f2', f4(col('f3_f2'))) \

+.withColumn('f4_f3_f2_f1', f4(col('f3_f2_f1')))

+

+# Test mixed udfs in a single expression

+df2 = df \

+.withColumn('f1', f1(col('v'))) \

+.withColumn('f2', f2(col('v'))) \

+.withColumn('f3', f3(col('v'))) \

+.withColumn('f4', f4(col('v'))) \

+.withColumn('f2_f1', f2(f1(col('v' \

+.withColumn('f3_f1', f3(f1(col('v' \

+.withColumn('f4_f1', f4(f1(col('v' \

+.withColumn('f3_f2', f3(f2(col('v' \

+.withColumn('f4_f2', f4(f2(col('v' \

+.withColumn('f4_f3', f4(f3(col('v' \

+.withColumn('f3_f2_f1', f3(f2(f1(col('v') \

+.withColumn('f4_f2_f1', f4(f2(f1(col('v') \

+.withColumn('f4_f3_f1', f4(f3(f1(col('v') \

+.withColumn('f4_f3_f2', f4(f3(f2(col('v') \

+.withColumn('f4_f3_f2_f1', f4(f3(f2(f1(col('v'))

+

+# expected result

+df3 = df \

+.withColumn('f1', df['v'] + 1) \

+.withColumn('f2', df['v'] + 10) \

+.withColumn('f3', df['v'] + 100) \

+.withColumn('f4', df['v'] + 1000) \

+.withColumn('f2_f1', df['v'] + 11) \

+.withColumn('f3_f1', df['v'] + 101) \

+.withColumn('f4_f1', df['v'] + 1001) \

+.withColumn('f3_f2', df['v'] + 110) \

+.withColumn('f4_f2', df['v'] + 1010) \

+.withColumn('f4_f3', df['v'] + 1100) \

+.withColumn('f3_f2_f1', df['v'] + 111) \

+.withColumn('f4_f2_f1', df['v'] + 1011) \

+.withColumn('f4_f3_f1', df['v'] + 1101) \

+.withColumn('f4_f3_f2', df['v'] + 1110) \

+.withColumn('f4_f3_f2_f1', df['v'] + )

+

+self.assertEquals(df3.collect(), df1.collect())

+self.assertEquals(df3.collect(), df2.collect())

+

+def test_mixed_udf_and_sql(self):

+import pandas as pd

+from pyspark.sql.functions import udf, pandas_udf

+

+df = self.spark.range(0, 1).toDF('v')

+

+# Test mixture of UDFs, Pandas UDFs and SQL expression.

+

+@udf('int')

+def f1(x):

+assert type(x) == int

+return x + 1

+

+def f2(x):

--- End diff --

Ah, I see why it looks confusing. Can we add an assert here too (check if

it's a column)?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21320: [SPARK-4502][SQL] Parquet nested column pruning - founda...

Github user mallman commented on the issue: https://github.com/apache/spark/pull/21320 > gentle ping @mallman since the code freeze is close Outside of my primary occupation, my top priority on this PR right now is investigating https://github.com/apache/spark/pull/21320#issuecomment-396498487. I don't think I'm going to get a test file from the OP, so I'm going to try to reproduce the issue on my own. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20949: [SPARK-19018][SQL] Add support for custom encoding on cs...

Github user crafty-coder commented on the issue: https://github.com/apache/spark/pull/20949 @HyukjinKwon and @MaxGekk thanks for your help in this PR! My JIRA Id is also **crafty-coder** --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21863: [SPARK-18874][SQL][FOLLOW-UP] Improvement type mismatche...

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/21863 a late LGTM --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21871: [SPARK-24916][SQL] Fix type coercion for IN expre...

GitHub user wangyum opened a pull request: https://github.com/apache/spark/pull/21871 [SPARK-24916][SQL] Fix type coercion for IN expression with subquery ## What changes were proposed in this pull request? The below SQL will throw `AnalysisException`. but it can success on Spark 2.1.x. This pr fix this issue. ```sql CREATE TEMPORARY VIEW t4 AS SELECT * FROM VALUES (CAST(1 AS DOUBLE), CAST(2 AS STRING), CAST(3 AS STRING)) AS t1(t4a, t4b, t4c); CREATE TEMPORARY VIEW t5 AS SELECT * FROM VALUES (CAST(1 AS DECIMAL(18, 0)), CAST(2 AS STRING), CAST(3 AS BIGINT)) AS t1(t5a, t5b, t5c); SELECT * FROM t4 WHERE (t4a, t4b, t4c) IN (SELECT t5a, t5b, t5c FROM t5); ``` ## How was this patch tested? unit tests You can merge this pull request into a Git repository by running: $ git pull https://github.com/wangyum/spark SPARK-24916 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21871.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21871 commit d60185b159ffc1a0d74a8c5bfba0c11ceac4241b Author: Yuming Wang Date: 2018-07-25T07:33:45Z default: findCommonTypeForBinaryComparison(l.dataType, r.dataType, conf).orElse(findTightestCommonType(l.dataType, r.dataType)) commit 8a118b5bdf63a7f4b0f0033c0783aa220c9c1eb1 Author: Yuming Wang Date: 2018-07-25T07:37:03Z findCommonTypeForBinaryComparison(l.dataType, r.dataType, conf).orElse(findWiderTypeForTwo(l.dataType, r.dataType)) commit c306810f0a0e701e6b46434db75bbd9813c7337c Author: Yuming Wang Date: 2018-07-25T07:39:40Z findWiderTypeForTwo(l.dataType, r.dataType) commit daa120e15153c77c17a7966df7b727fcea4bb02b Author: Yuming Wang Date: 2018-07-25T07:42:46Z findCommonTypeForBinaryComparison(l.dataType, r.dataType, conf).orElse(findWiderTypeWithoutStringPromotionForTwo(l.dataType, r.dataType)) commit c84ba4d9823a50953f560e110638a9d4e094b17a Author: Yuming Wang Date: 2018-07-25T07:45:43Z findWiderTypeWithoutStringPromotionForTwo(l.dataType, r.dataType) commit bc41b99b7548a22db1ed278fda1c741fd08b78ef Author: Yuming Wang Date: 2018-07-25T08:03:26Z findCommonTypeForBinaryComparison(l.dataType, r.dataType, conf).orElse(findTightestCommonType(l.dataType, r.dataType)).orElse(findWiderTypeForDecimal(l.dataType, r.dataType)) commit 8ef142f78c22b980fe60d836c56d7d18d221a958 Author: Yuming Wang Date: 2018-07-25T09:27:51Z Fix type coercion for IN expression with subquery --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21871: [SPARK-24916][SQL] Fix type coercion for IN expression w...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21871 **[Test build #93538 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93538/testReport)** for PR 21871 at commit [`8ef142f`](https://github.com/apache/spark/commit/8ef142f78c22b980fe60d836c56d7d18d221a958). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21871: [SPARK-24916][SQL] Fix type coercion for IN expression w...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21871 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1305/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21871: [SPARK-24916][SQL] Fix type coercion for IN expression w...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21871 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21403: [SPARK-24341][SQL] Support only IN subqueries with the s...

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/21403 @maryannxue that is feasible too and indeed it was the original implementation I did, I switched to this approach according to [this discussion](https://github.com/apache/spark/pull/21403#discussion_r198826199): the goal was to avoid to change the `In` signature and the parsing logic in the many places. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19691: [SPARK-14922][SPARK-17732][SQL]ALTER TABLE DROP P...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/19691#discussion_r205047473

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/command/ddl.scala ---

@@ -510,40 +511,86 @@ case class AlterTableRenamePartitionCommand(

*

* The syntax of this command is:

* {{{

- * ALTER TABLE table DROP [IF EXISTS] PARTITION spec1[, PARTITION spec2,

...] [PURGE];

+ * ALTER TABLE table DROP [IF EXISTS] PARTITION (spec1, expr1)

+ * [, PARTITION (spec2, expr2), ...] [PURGE];

* }}}

*/

case class AlterTableDropPartitionCommand(

tableName: TableIdentifier,

-specs: Seq[TablePartitionSpec],

+partitions: Seq[(TablePartitionSpec, Seq[Expression])],

ifExists: Boolean,

purge: Boolean,

retainData: Boolean)

- extends RunnableCommand {

+ extends RunnableCommand with PredicateHelper {

override def run(sparkSession: SparkSession): Seq[Row] = {

val catalog = sparkSession.sessionState.catalog

val table = catalog.getTableMetadata(tableName)

+val resolver = sparkSession.sessionState.conf.resolver

DDLUtils.verifyAlterTableType(catalog, table, isView = false)

DDLUtils.verifyPartitionProviderIsHive(sparkSession, table, "ALTER

TABLE DROP PARTITION")

-val normalizedSpecs = specs.map { spec =>

- PartitioningUtils.normalizePartitionSpec(

-spec,

-table.partitionColumnNames,

-table.identifier.quotedString,

-sparkSession.sessionState.conf.resolver)

+val toDrop = partitions.flatMap { partition =>

+ if (partition._1.isEmpty && !partition._2.isEmpty) {

+// There are only expressions in this drop condition.

+extractFromPartitionFilter(partition._2, catalog, table, resolver)

+ } else if (!partition._1.isEmpty && partition._2.isEmpty) {

+// There are only partitionSpecs in this drop condition.

+extractFromPartitionSpec(partition._1, table, resolver)

+ } else if (!partition._1.isEmpty && !partition._2.isEmpty) {

+// This drop condition has both partitionSpecs and expressions.

+extractFromPartitionFilter(partition._2, catalog, table,

resolver).intersect(

--- End diff --

thank you @DazhuangSu

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21403: [SPARK-24341][SQL] Support only IN subqueries with the s...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21403 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1306/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21403: [SPARK-24341][SQL] Support only IN subqueries with the s...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21403 Build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21403: [SPARK-24341][SQL] Support only IN subqueries with the s...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21403 **[Test build #93539 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93539/testReport)** for PR 21403 at commit [`0412829`](https://github.com/apache/spark/commit/04128292e6d145ec608166b532c960cac72a500c). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21871: [SPARK-24916][SQL] Fix type coercion for IN expression w...

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/21871 I think this is basically the same of what I proposed in https://github.com/apache/spark/pull/19635. Unfortunately, that PR got a bit stuck... --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21858: [SPARK-24899][SQL][DOC] Add example of monotonica...

Github user jaceklaskowski commented on a diff in the pull request:

https://github.com/apache/spark/pull/21858#discussion_r205058875

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -1150,16 +1150,48 @@ object functions {

/**

* A column expression that generates monotonically increasing 64-bit

integers.

*

- * The generated ID is guaranteed to be monotonically increasing and

unique, but not consecutive.

+ * The generated IDs are guaranteed to be monotonically increasing and

unique, but not

+ * consecutive (unless all rows are in the same single partition which

you rarely want due to

+ * the volume of the data).

* The current implementation puts the partition ID in the upper 31

bits, and the record number

* within each partition in the lower 33 bits. The assumption is that

the data frame has

* less than 1 billion partitions, and each partition has less than 8

billion records.

*

- * As an example, consider a `DataFrame` with two partitions, each with

3 records.

- * This expression would return the following IDs:

- *

* {{{

- * 0, 1, 2, 8589934592 (1L << 33), 8589934593, 8589934594.

+ * // Create a dataset with four partitions, each with two rows.

+ * val q = spark.range(start = 0, end = 8, step = 1, numPartitions = 4)

+ *

+ * // Make sure that every partition has the same number of rows

+ * q.mapPartitions(rows => Iterator(rows.size)).foreachPartition(rows =>

assert(rows.next == 2))

+ * q.select(monotonically_increasing_id).show

--- End diff --

I thought about explaining the "internals" of the operator through a more

involved example and actually thought about removing the line 1166 (but

forgot). I think the following lines make for a very in-depth explanation and

use other operators in use.

In other words, I'm in favour of removing the line 1166 and leaving the

others with no changes. Possible?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21803: [SPARK-24849][SPARK-24911][SQL] Converting a value of St...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21803 **[Test build #93540 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93540/testReport)** for PR 21803 at commit [`60f663d`](https://github.com/apache/spark/commit/60f663d7b12fcb3141eff774a9120f049d837112). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21869 **[Test build #93535 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93535/testReport)** for PR 21869 at commit [`a45bf36`](https://github.com/apache/spark/commit/a45bf360d923e8b187f6579b4a73d24a9222198a). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21869: [SPARK-24891][FOLLOWUP][HOT-FIX][2.3] Fix the Compilatio...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21869 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93535/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Pyt...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21650#discussion_r205061160

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/python/ExtractPythonUDFs.scala

---

@@ -94,36 +95,59 @@ object ExtractPythonUDFFromAggregate extends

Rule[LogicalPlan] {

*/

object ExtractPythonUDFs extends Rule[SparkPlan] with PredicateHelper {

- private def hasPythonUDF(e: Expression): Boolean = {

+ private def hasScalarPythonUDF(e: Expression): Boolean = {

e.find(PythonUDF.isScalarPythonUDF).isDefined

}

- private def canEvaluateInPython(e: PythonUDF): Boolean = {

-e.children match {

- // single PythonUDF child could be chained and evaluated in Python

- case Seq(u: PythonUDF) => canEvaluateInPython(u)

- // Python UDF can't be evaluated directly in JVM

- case children => !children.exists(hasPythonUDF)

+ private def canEvaluateInPython(e: PythonUDF, evalType: Int): Boolean = {

+if (e.evalType != evalType) {

--- End diff --

Can we rename this function or write a comment since Scalar both Vectorized

UDF and normal UDF can be evaluated in Python each but it returns `false` in

this case?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21650: [SPARK-24624][SQL][PYTHON] Support mixture of Python UDF...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/21650 I'm okay with https://github.com/apache/spark/pull/21650#issuecomment-407506043's way too but should be really simplified. Either way LGTM. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21858: [SPARK-24899][SQL][DOC] Add example of monotonica...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21858#discussion_r205062686

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -1150,16 +1150,48 @@ object functions {

/**

* A column expression that generates monotonically increasing 64-bit

integers.

*

- * The generated ID is guaranteed to be monotonically increasing and

unique, but not consecutive.

+ * The generated IDs are guaranteed to be monotonically increasing and

unique, but not

+ * consecutive (unless all rows are in the same single partition which

you rarely want due to

+ * the volume of the data).

* The current implementation puts the partition ID in the upper 31

bits, and the record number

* within each partition in the lower 33 bits. The assumption is that

the data frame has

* less than 1 billion partitions, and each partition has less than 8

billion records.

*

- * As an example, consider a `DataFrame` with two partitions, each with

3 records.

- * This expression would return the following IDs:

- *

* {{{

- * 0, 1, 2, 8589934592 (1L << 33), 8589934593, 8589934594.

+ * // Create a dataset with four partitions, each with two rows.

+ * val q = spark.range(start = 0, end = 8, step = 1, numPartitions = 4)

+ *

+ * // Make sure that every partition has the same number of rows

+ * q.mapPartitions(rows => Iterator(rows.size)).foreachPartition(rows =>

assert(rows.next == 2))

+ * q.select(monotonically_increasing_id).show