[GitHub] [airflow] dhuang commented on issue #6794: [AIRFLOW-6231] Display DAG run conf in the list view

dhuang commented on issue #6794: [AIRFLOW-6231] Display DAG run conf in the list view URL: https://github.com/apache/airflow/pull/6794#issuecomment-615572227 Apologies for abandoning this; moved it over to the list view as suggested! PR description updated with screenshots. Made it searchable as well, as I think it can be useful, and it also makes it consistent with both the list and add views. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (AIRFLOW-5548) REST API: get DAGs

[ https://issues.apache.org/jira/browse/AIRFLOW-5548?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17086306#comment-17086306 ]

ASF GitHub Bot commented on AIRFLOW-5548:
-

stale[bot] commented on pull request #6652: [AIRFLOW-5548] [AIRFLOW-5550] REST API enhancement - dag info, task …
URL: https://github.com/apache/airflow/pull/6652

> REST API: get DAGs
> --
>
> Key: AIRFLOW-5548
> URL: https://issues.apache.org/jira/browse/AIRFLOW-5548
> Project: Apache Airflow
> Issue Type: New Feature
> Components: api
> Affects Versions: 2.0.0
> Reporter: Norbert Biczo
> Assignee: Matt Buell
> Priority: Minor
>
> Like the already implemented [get pools|https://airflow.apache.org/api.html#get--api-experimental-pools] endpoint, but for DAGs.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
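The issue asks for a DAG counterpart to the experimental "get pools" endpoint, which, as far as we know, answers with a JSON array of pool objects. A minimal sketch of what the response body for a "list DAGs" endpoint could look like, assuming a flat record per DAG; `DagSummary`, its fields, and `dags_to_json` are hypothetical and not Airflow's schema or code:

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class DagSummary:
    """One entry in a hypothetical "list DAGs" response (illustrative fields only)."""

    dag_id: str
    is_paused: bool
    fileloc: str


def dags_to_json(dags: List[DagSummary]) -> str:
    """Serialize DAGs as a JSON array of flat objects, ordered by dag_id for stable output."""
    return json.dumps([asdict(dag) for dag in sorted(dags, key=lambda dag: dag.dag_id)])


if __name__ == "__main__":
    print(dags_to_json([
        DagSummary("example_bash_operator", False, "/dags/example_bash_operator.py"),
        DagSummary("example_branch", True, "/dags/example_branch.py"),
    ]))
```

Sorting by `dag_id` is only a choice to keep responses deterministic; a real endpoint would also need pagination and authentication, which this sketch leaves out.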

[GitHub] [airflow] stale[bot] closed pull request #6652: [AIRFLOW-5548] [AIRFLOW-5550] REST API enhancement - dag info, task …

stale[bot] closed pull request #6652: [AIRFLOW-5548] [AIRFLOW-5550] REST API enhancement - dag info, task … URL: https://github.com/apache/airflow/pull/6652

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615567459 After thinking about it for longer, I see that we could create a `get_subprocess_context_manager` method in the hook and also use the `get_hook` method here. I'm afraid it might be overengineering. However, if you agree with me that we should use composition, I can try to do it.

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615567459 After thinking about it for longer, I see that we could create a `get_subprocess_context` method and also use the `get_hook` method here. I'm afraid it might be overengineering. However, if you agree with me that we should use composition, I can try to do it.

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615567459 After thinking about it for longer, I see that we could create a `get_subprocess_context` method and also use the `get_hook` method here. I'm afraid it might be overengineering. However, if you agree with me that we should use composition, I can try to do it.

[GitHub] [airflow] mik-laj commented on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj commented on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615567459 After thinking about it for longer, I see that we could create a `get_subprocess_context` method and also use the `get_hook` method here. I'm afraid it might be overengineering.
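A rough idea of what a `get_subprocess_context` helper might do: materialize the GCP key as a temporary file, hand a child process an environment in which `GOOGLE_APPLICATION_CREDENTIALS` points at it, and clean up afterwards. This is a minimal sketch written as a free function; the name comes from the comment, but the signature, the `keyfile_dict` argument, and the behaviour are assumptions, not an existing hook method:

```python
import contextlib
import json
import os
import tempfile
from typing import Dict, Iterator


@contextlib.contextmanager
def get_subprocess_context(keyfile_dict: Dict[str, str]) -> Iterator[Dict[str, str]]:
    """Hypothetical helper: expose a GCP key to child processes for the duration of the block.

    The key is written to a temporary file, and GOOGLE_APPLICATION_CREDENTIALS is set in a
    *copy* of the current environment, so the parent process environment is left untouched.
    """
    fd, path = tempfile.mkstemp(suffix=".json")
    try:
        with os.fdopen(fd, "w") as handle:
            json.dump(keyfile_dict, handle)
        env = dict(os.environ)
        env["GOOGLE_APPLICATION_CREDENTIALS"] = path
        yield env
    finally:
        # Always delete the key file, even if the subprocess failed.
        os.remove(path)
```

An operator would then only need something like `with get_subprocess_context(key) as env: subprocess.run(cmd, env=env, check=True)`, which keeps credential handling in one reusable piece instead of inside each operator class.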

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615560449 @potiuk This contradicts the whole idea and the need for this operator. BashOperator and PythonOperator are very useful because they are universal. Bash and Python are also built by composition: new applications are installed on the system and can then be used by any tool. If we inherit and make the customization GCP-specific, we will limit their functionality. It will no longer be a universal operator; you will only be able to use it with one provider. I think this is a similar problem to `Connection.get_hook`: https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L301 This method is useful because it is universal and can be used regardless of the provider. In the future, if we need to separate the core from the providers, we can extend this class with a plugin that adds new parameters to the class. Like the `get_hook` method, it should use the plugin mechanism. I hope that in the future new parameters will be added for other cloud providers, e.g. AWS.

```python
cross_platform_task = BashOperator(
    task_id='gcloud',
    bash_command=(
        'gsutil cp gs://bucket/a.txt a.txt && aws s3 cp test.txt s3://mybucket/test2.txt'
    ),
    gcp_conn_id=GCP_PROJECT_ID,
    aws_conn_id=AWS_PROJECT_ID,
)
```

Then it will still be a universal operator and we will not create vendor lock-in for a single provider. From an architectural point of view, using inheritance here would be bad; we should use composition. Inheritance will limit these operators too much. I invite you to read this article: https://en.wikipedia.org/wiki/Composition_over_inheritance I will cite only one fragment:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **VisibleAndSolid**, **VisibleAndMovable**, **VisibleAndSolidAndMovable**, etc. for every needed combination, though this leads to a large amount of repetitive code.

If we replace some words, we have our problem:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **GoogleAndAws**, **GoogleAndAzure**, **AwsAndAzureAndGoogle**, etc. for every needed combination, though this leads to a large amount of repetitive code.
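The composition argument can be made concrete with plain Python. In this minimal sketch, one generic runner accepts any number of credential "provider" objects, each contributing environment variables, so combining GCP and AWS needs no `GoogleAndAws`-style subclass. All class and function names are hypothetical; only the environment variable names `GOOGLE_APPLICATION_CREDENTIALS` and `AWS_PROFILE` are the standard ones read by Google and AWS tooling:

```python
import os
import subprocess
import sys
from typing import Dict, Iterable, Mapping, Protocol, Sequence


class CredentialProvider(Protocol):
    """Anything that can contribute environment variables to a child process."""

    def env(self) -> Dict[str, str]:
        ...


class GcpCredentials:
    """Hypothetical provider: points Google tools at a service-account key file."""

    def __init__(self, key_path: str) -> None:
        self.key_path = key_path

    def env(self) -> Dict[str, str]:
        return {"GOOGLE_APPLICATION_CREDENTIALS": self.key_path}


class AwsCredentials:
    """Hypothetical provider: selects a named AWS CLI profile."""

    def __init__(self, profile: str) -> None:
        self.profile = profile

    def env(self) -> Dict[str, str]:
        return {"AWS_PROFILE": self.profile}


def build_env(providers: Iterable[CredentialProvider], base: Mapping[str, str]) -> Dict[str, str]:
    """Copy the base environment and layer each provider's variables on top (later ones win)."""
    env = dict(base)
    for provider in providers:
        env.update(provider.env())
    return env


def run_command(argv: Sequence[str], providers: Iterable[CredentialProvider] = ()) -> str:
    """Run one generic command with whatever credentials were composed in; return stdout."""
    env = build_env(providers, os.environ)
    result = subprocess.run(list(argv), env=env, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # One runner, any mix of providers: no per-combination subclass is needed.
    print(run_command(
        [sys.executable, "-c", "import os; print(os.environ['AWS_PROFILE'])"],
        [GcpCredentials("/path/to/key.json"), AwsCredentials("analytics")],
    ))
```

Adding Azure support in this design would mean writing one more small provider class, not new operator subclasses for every combination.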

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615560449 @potiuk This contradicts the whole idea and the need for this operator. BashOperator and PythonOperator are very useful because they are universal. Bash and Python are also built by composition: new applications are installed on the system and can then be used by any tool. If we inherit and make the customization GCP-specific, we will limit their functionality. It will no longer be a universal operator; you will only be able to use it with one provider. I think this is a similar problem to `Connection.get_hook`: https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L301 This method is useful because it is universal and can be used regardless of the provider. In the future, if we need to separate the core from the providers, we can extend this class with a plugin that adds new parameters to the class. Like the `get_hook` method, it should use the plugin mechanism. I hope that in the future new parameters will be added for other cloud providers, e.g. AWS.

```python
cross_platform_task = BashOperator(
    task_id='gcloud',
    bash_command=(
        'gsutil cp gs://bucket/a.txt a.txt && aws s3 cp test.txt s3://mybucket/test2.txt'
    ),
    gcp_conn_id=GCP_PROJECT_ID,
    aws_conn_id=AWS_PROJECT_ID,
)
```

Then it will still be a universal operator and we will not create vendor lock-in for a single provider. From an architectural point of view, using inheritance here would be bad; we should use composition. Inheritance will limit these operators too much. I invite you to read this article: https://en.wikipedia.org/wiki/Composition_over_inheritance I will cite only one fragment:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **VisibleAndSolid**, **VisibleAndMovable**, **VisibleAndSolidAndMovable**, etc. for every needed combination, though this leads to a large amount of repetitive code.

If we replace some words, we have our problem:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **GoogleAndAws**, **GoogleAndAzure**, **AwsAndAzureAndGoogle**, etc. for every needed combination, though this leads to a large amount of repetitive code.

However, this is one of the parts that should be resolved by [AIP-8](https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=100827303). We do not have enough use cases yet, and it will be very difficult to build abstractions if we only support GCP.
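The comment asks for the same plugin mechanism that would let `Connection.get_hook` stay provider-neutral. One common way to get that is a registry keyed by connection type, which provider packages populate when they are imported. A minimal sketch under that assumption; `HookRegistry`, `FakeGcpHook`, and `FakeAwsHook` are hypothetical and this is not how Airflow's `get_hook` is implemented:

```python
from typing import Callable, Dict, Type


class HookRegistry:
    """Hypothetical registry mapping a connection type to the hook class that serves it."""

    def __init__(self) -> None:
        self._hooks: Dict[str, Type] = {}

    def register(self, conn_type: str) -> Callable[[Type], Type]:
        """Class decorator that a provider package would apply to its hook."""
        def decorator(cls: Type) -> Type:
            if conn_type in self._hooks:
                raise ValueError(f"conn_type {conn_type!r} is already registered")
            self._hooks[conn_type] = cls
            return cls
        return decorator

    def get_hook(self, conn_type: str, conn_id: str):
        """Provider-neutral lookup: core code never imports a provider module directly."""
        try:
            hook_cls = self._hooks[conn_type]
        except KeyError:
            raise ValueError(f"no hook registered for conn_type {conn_type!r}") from None
        return hook_cls(conn_id)


registry = HookRegistry()


@registry.register("google_cloud_platform")
class FakeGcpHook:
    """Stand-in for a provider's hook; a real one would load the connection by conn_id."""

    def __init__(self, conn_id: str) -> None:
        self.conn_id = conn_id


@registry.register("aws")
class FakeAwsHook:
    """Stand-in for a second provider, registered without touching core code."""

    def __init__(self, conn_id: str) -> None:
        self.conn_id = conn_id
```

With this shape, supporting another cloud means shipping one more registered class, which matches the comment's point that the abstraction is easier to judge once more than one provider uses it.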

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615560449 @potiuk This contradicts the whole idea and the need for this operator. BashOperator and PythonOperator are very useful because they are universal. Bash and Python are also built by composition: new applications are installed on the system and can then be used by any tool. If we inherit and make the customization GCP-specific, we will limit their functionality. It will no longer be a universal operator; you will only be able to use it with one provider. I think this is a similar problem to `Connection.get_hook`: https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L301 This method is useful because it is universal and can be used regardless of the provider. In the future, if we need to separate the core from the providers, we can extend this class with a plugin that adds new parameters to the class. Like the `get_hook` method, it should use the plugin mechanism. I hope that in the future new parameters will be added for other cloud providers, e.g. AWS.

```python
cross_platform_task = BashOperator(
    task_id='gcloud',
    bash_command=(
        'gsutil cp gs://bucket/a.txt a.txt && aws s3 cp test.txt s3://mybucket/test2.txt'
    ),
    gcp_conn_id=GCP_PROJECT_ID,
    aws_conn_id=AWS_PROJECT_ID,
)
```

Then it will still be a universal operator and we will not create vendor lock-in for a single provider. From an architectural point of view, using inheritance here would be bad; we should use composition. Inheritance will limit these operators too much. I invite you to read this article: https://en.wikipedia.org/wiki/Composition_over_inheritance I will cite only one fragment:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **VisibleAndSolid**, **VisibleAndMovable**, **VisibleAndSolidAndMovable**, etc. for every needed combination, though this leads to a large amount of repetitive code.

If we replace some words, we have our problem:

> Note that multiple inheritance is dangerous if not implemented carefully, as it can lead to the diamond problem. One solution to avoid this is to create classes such as **GoogleAndAws**, **GoogleAndAzure**, **AwsAndAzureAndGoogle**, etc. for every needed combination, though this leads to a large amount of repetitive code.

However, this is one of the parts that should be resolved by [AIP-8](https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=100827303).

[GitHub] [airflow] mik-laj commented on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj commented on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615560449 @potiuk This contradicts the whole idea and the need for this operator. BashOperator and PythonOperator are very useful because they are universal. Bash and Python are also built by composition: new applications are installed on the system and can then be used by any tool. If we inherit and make the customization GCP-specific, we will limit their functionality. It will no longer be a universal operator; you will only be able to use it with one provider. I think this is a similar problem to `Connection.get_hook`: https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L301 This method is useful because it is universal and can be used regardless of the provider. In the future, if we need to separate the core from the providers, we can extend this class with a plugin that adds new parameters to the class. Like the `get_hook` method, it should use the plugin mechanism. From an architectural point of view, using inheritance here would be bad; we should use composition. Inheritance will limit these operators too much. More information: https://en.wikipedia.org/wiki/Composition_over_inheritance I hope that in the future new parameters will be added for other cloud providers, e.g. AWS.

```
cross_platform_task = BashOperator(
    task_id='gcloud',
    bash_command=(
        'gsutil cp gs://bucket/a.txt a.txt && aws s3 cp test.txt s3://mybucket/test2.txt'
    ),
    gcp_conn_id=GCP_PROJECT_ID,
    aws_conn_id=AWS_PROJECT_ID,
)
```

Then it will still be a universal operator and we will not create vendor lock-in for a single provider. However, this is one of the parts that should be resolved by [AIP-8](https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=100827303).

[GitHub] [airflow] mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

mik-laj edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615560449 @potiuk This contradicts the whole idea and the need for this operator. BashOperator and PythonOperator are very useful because they are universal. Bash and Python are also built by composition: new applications are installed on the system and can then be used by any tool. If we inherit and make the customization GCP-specific, we will limit their functionality. It will no longer be a universal operator; you will only be able to use it with one provider. I think this is a similar problem to `Connection.get_hook`: https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L301 This method is useful because it is universal and can be used regardless of the provider. In the future, if we need to separate the core from the providers, we can extend this class with a plugin that adds new parameters to the class. Like the `get_hook` method, it should use the plugin mechanism. From an architectural point of view, using inheritance here would be bad; we should use composition. Inheritance will limit these operators too much. More information: https://en.wikipedia.org/wiki/Composition_over_inheritance I hope that in the future new parameters will be added for other cloud providers, e.g. AWS.

```python
cross_platform_task = BashOperator(
    task_id='gcloud',
    bash_command=(
        'gsutil cp gs://bucket/a.txt a.txt && aws s3 cp test.txt s3://mybucket/test2.txt'
    ),
    gcp_conn_id=GCP_PROJECT_ID,
    aws_conn_id=AWS_PROJECT_ID,
)
```

Then it will still be a universal operator and we will not create vendor lock-in for a single provider. However, this is one of the parts that should be resolved by [AIP-8](https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=100827303).

[GitHub] [airflow] potiuk commented on issue #8432: Provide GCP credentials in Bash/Python operators

potiuk commented on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615544056 I think it should be a gcp_bash_operator.py deriving from Bash operator and it should be in providers/google.
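For comparison with the composition proposal elsewhere in the thread, the inheritance route suggested here would look roughly like this: a GCP-specific subclass overrides an environment hook of a generic operator. Everything below is a hypothetical, heavily simplified stand-in rather than Airflow's `BashOperator`; it takes an argv list instead of a `bash_command` string so the sketch runs without bash:

```python
import os
import subprocess
import sys
from typing import Dict, List, Optional


class SimpleCommandOperator:
    """Hypothetical, heavily simplified stand-in for a generic command operator."""

    def __init__(self, task_id: str, argv: List[str], env: Optional[Dict[str, str]] = None) -> None:
        self.task_id = task_id
        self.argv = argv
        self.extra_env = env or {}

    def get_env(self) -> Dict[str, str]:
        """Extension point that subclasses override to inject variables."""
        merged = dict(os.environ)
        merged.update(self.extra_env)
        return merged

    def execute(self) -> str:
        result = subprocess.run(self.argv, env=self.get_env(), capture_output=True, text=True, check=True)
        return result.stdout


class GcpCommandOperator(SimpleCommandOperator):
    """Inheritance route: a GCP-specific subclass adds the key file location."""

    def __init__(self, task_id: str, argv: List[str], key_path: str, **kwargs) -> None:
        super().__init__(task_id, argv, **kwargs)
        self.key_path = key_path

    def get_env(self) -> Dict[str, str]:
        env = super().get_env()
        env["GOOGLE_APPLICATION_CREDENTIALS"] = self.key_path
        return env


if __name__ == "__main__":
    task = GcpCommandOperator(
        task_id="gcloud",
        argv=[sys.executable, "-c", "import os; print(os.environ['GOOGLE_APPLICATION_CREDENTIALS'])"],
        key_path="/path/to/key.json",
    )
    print(task.execute())
```

This keeps GCP code inside the provider package, which is the appeal of the suggestion; the trade-off raised in the thread is that a task needing both GCP and AWS credentials would require yet another subclass.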

[jira] [Commented] (AIRFLOW-5156) Add other authentication mechanisms to HttpHook

[ https://issues.apache.org/jira/browse/AIRFLOW-5156?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17086231#comment-17086231 ]

ASF GitHub Bot commented on AIRFLOW-5156:
-

potiuk commented on pull request #8429: [AIRFLOW-5156] Added auth type to HttpHook
URL: https://github.com/apache/airflow/pull/8429


> Add other authentication mechanisms to HttpHook
> ---
>
> Key: AIRFLOW-5156
> URL: https://issues.apache.org/jira/browse/AIRFLOW-5156
> Project: Apache Airflow
> Issue Type: Improvement
> Components: hooks
> Affects Versions: 1.10.4
> Reporter: Joshua Kornblum
> Assignee: Rohit S S
> Priority: Minor
>
> It looks like the only supported authentication for HttpHook is basic auth.
> The hook code shows
> {quote}_if conn.login:_
> _session.auth = (conn.login, conn.password)_
> {quote}
> The requests library supports any auth that inherits from AuthBase; in my scenario we
> need NTLM auth for an API on an IIS server.
> [https://2.python-requests.org/en/master/user/advanced/#custom-authentication]
> I would suggest an option to pass an auth object in the constructor, then add it to the if/else
> control flow like
> {quote}_if self.auth is not None:_
> _session.auth = self.auth_
> _elif conn.login:_
> _session.auth = (conn.login, conn.password)_
> {quote}
> One would have to fetch the connection themselves, build the auth object, and
> then pass that to the hook, which is flexible although a little awkward.
> {quote}api_conn = BaseHook().get_connection('my_api')
> auth = HttpNtlmAuth(api_conn.login, api_conn.password)
> HttpSensor(task_id='sensing', auth=auth, )
> {quote}

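The if/else proposed in the issue can be sketched with `requests` directly. This minimal, hypothetical hook (not Airflow's `HttpHook`; connection lookup is replaced by plain `login`/`password` arguments) prefers a ready-made auth object and otherwise builds one from the stored credentials using a configurable auth class, defaulting to basic auth. `SketchHttpHook`, `auth_type`, and `auth` are assumed names for illustration:

```python
from typing import Callable, Optional

import requests
from requests.auth import AuthBase, HTTPBasicAuth


class SketchHttpHook:
    """Hypothetical, trimmed-down hook showing the "pluggable auth" idea from the issue."""

    def __init__(self, login: Optional[str], password: Optional[str],
                 auth_type: Callable[..., AuthBase] = HTTPBasicAuth,
                 auth: Optional[AuthBase] = None) -> None:
        self.login = login
        self.password = password
        self.auth_type = auth_type
        self.auth = auth

    def get_conn(self) -> requests.Session:
        session = requests.Session()
        if self.auth is not None:
            # A ready-made auth object wins (the issue's suggested first branch).
            session.auth = self.auth
        elif self.login:
            # Otherwise build one from the stored credentials with the configured auth class.
            session.auth = self.auth_type(self.login, self.password)
        return session
```

Assuming the third-party `requests_ntlm` package is installed, its `HttpNtlmAuth` class, which also takes a username and password, could be passed as `auth_type` in the same way, so callers would no longer need to fetch the connection themselves.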

[jira] [Commented] (AIRFLOW-5156) Add other authentication mechanisms to HttpHook

[ https://issues.apache.org/jira/browse/AIRFLOW-5156?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17086232#comment-17086232 ]

ASF subversion and git services commented on AIRFLOW-5156:
--

Commit d61a476da3a649bf2c1d347b9cb3abc62eae3ce9 in airflow's branch refs/heads/master from S S Rohit
[ https://gitbox.apache.org/repos/asf?p=airflow.git;h=d61a476 ]

[AIRFLOW-5156] Added auth type to HttpHook (#8429)


[GitHub] [airflow] potiuk merged pull request #8429: [AIRFLOW-5156] Added auth type to HttpHook

potiuk merged pull request #8429: [AIRFLOW-5156] Added auth type to HttpHook URL: https://github.com/apache/airflow/pull/8429
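The issue rests on the fact that `requests` accepts any `AuthBase` subclass: it calls the object with the outgoing `PreparedRequest` and uses the request it returns, as described on the custom-authentication page linked in the issue. A minimal sketch of such a class; the bearer-token scheme and the name `BearerTokenAuth` are illustrative assumptions (NTLM itself needs a handshake and is better left to a dedicated package):

```python
import requests
from requests.auth import AuthBase


class BearerTokenAuth(AuthBase):
    """Hypothetical example auth: adds an "Authorization: Bearer <token>" header."""

    def __init__(self, token: str) -> None:
        self.token = token

    def __call__(self, request: requests.PreparedRequest) -> requests.PreparedRequest:
        # requests hands over the prepared request; modify it and return it.
        request.headers["Authorization"] = f"Bearer {self.token}"
        return request


if __name__ == "__main__":
    # Preparing a request applies the auth without sending anything over the network.
    prepared = requests.Request("GET", "https://example.com/api", auth=BearerTokenAuth("abc")).prepare()
    print(prepared.headers["Authorization"])
```

Because its constructor takes a single token rather than a login/password pair, an object like this fits a hook that accepts a ready-made auth object better than one that only accepts an auth class.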

[GitHub] [airflow] codecov-io edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators

codecov-io edited a comment on issue #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432#issuecomment-615537382

# [Codecov](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=h1) Report
> Merging [#8432](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/96df427e07601e331afd6990ce7613b2026acfe0=desc) will **decrease** coverage by `0.00%`.
> The diff coverage is `0.00%`.

```diff
@@           Coverage Diff            @@
##           master   #8432      +/-   ##
=========================================
- Coverage    6.23%   6.22%   -0.01%
=========================================
  Files         946     950       +4
  Lines       45661   45723      +62
=========================================
  Hits         2846    2846
- Misses      42815   42877      +62
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [...rflow/example\_dags/example\_google\_bash\_operator.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3MvZXhhbXBsZV9nb29nbGVfYmFzaF9vcGVyYXRvci5weQ==) | `0.00% <0.00%> (ø)` | |
| [...dags/example\_google\_bash\_operator\_custom\_script.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3MvZXhhbXBsZV9nb29nbGVfYmFzaF9vcGVyYXRvcl9jdXN0b21fc2NyaXB0LnB5) | `0.00% <0.00%> (ø)` | |
| [...low/example\_dags/example\_google\_python\_operator.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3MvZXhhbXBsZV9nb29nbGVfcHl0aG9uX29wZXJhdG9yLnB5) | `0.00% <0.00%> (ø)` | |
| [airflow/operators/bash.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvYmFzaC5weQ==) | `0.00% <0.00%> (ø)` | |
| [airflow/operators/python.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvcHl0aG9uLnB5) | `0.00% <0.00%> (ø)` | |
| [airflow/utils/documentation.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kb2N1bWVudGF0aW9uLnB5) | `0.00% <0.00%> (ø)` | |
| [airflow/www/app.py](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvYXBwLnB5) | `0.00% <0.00%> (ø)` | |
| ... and [1 more](https://codecov.io/gh/apache/airflow/pull/8432/diff?src=pr=tree-more) | |

-- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=continue).
> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=footer). Last update [96df427...235382f](https://codecov.io/gh/apache/airflow/pull/8432?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).
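The coverage drop follows directly from the Coverage Diff numbers: the PR adds 62 tracked lines and no new hits, so every new line counts as a miss and the ratio falls slightly. A small worked check, assuming line coverage is simply hits divided by tracked lines; it does not attempt to reproduce Codecov's own rounding, which may explain why the header says `0.00%` while the table shows `-0.01%`:

```python
def coverage_pct(hits: int, lines: int) -> float:
    """Line coverage in percent: covered (hit) lines divided by all tracked lines."""
    return 100.0 * hits / lines


# Figures copied from the Coverage Diff reported for #8432.
base = coverage_pct(hits=2846, lines=45661)  # master
head = coverage_pct(hits=2846, lines=45723)  # PR #8432

print(f"master {base:.2f}%  PR {head:.2f}%  delta {head - base:+.2f}")
print("new misses:", (45723 - 2846) - (45661 - 2846))
```

Running it prints `master 6.23%  PR 6.22%  delta -0.01` and `new misses: 62`, matching the reported coverage, misses, and line delta.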

[GitHub] [airflow] mik-laj opened a new pull request #8432: Provide GCP credentials in Bash/Python operators

mik-laj opened a new pull request #8432: Provide GCP credentials in Bash/Python operators URL: https://github.com/apache/airflow/pull/8432

---

Make sure to mark the boxes below before creating PR: [x]

- [X] Description above provides context of the change
- [X] Unit tests coverage for changes (not needed for documentation changes)
- [X] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [X] Relevant documentation is updated including usage instructions.
- [X] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

---

In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed. In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x). In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md). Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

[GitHub] [airflow] casassg commented on issue #8052: [AIP-31] Create XComArg model

casassg commented on issue #8052: [AIP-31] Create XComArg model URL: https://github.com/apache/airflow/issues/8052#issuecomment-615531173 I've been a bit overwhelmed with the work from home situation. Will try to get to this on the weekend or next week. Otherwise, please feel free to take it from me

[GitHub] [airflow] dedunumax commented on issue #8418: Update docker operator network documentation

dedunumax commented on issue #8418: Update docker operator network documentation URL: https://github.com/apache/airflow/issues/8418#issuecomment-615487650 I added a link to the Docker documentation. As I understand it, `network_mode` is used to define the Docker network driver. Correct me if I am wrong, @pablosjv. Thank you.

[GitHub] [airflow-site] dedunumax opened a new pull request #266: Improve documentation on DockerOperator

dedunumax opened a new pull request #266: Improve documentation on DockerOperator URL: https://github.com/apache/airflow-site/pull/266 https://github.com/apache/airflow/issues/8418

[GitHub] [airflow] alexsstock commented on a change in pull request #8256: updated _write_args on PythonVirtualenvOperator

alexsstock commented on a change in pull request #8256: updated _write_args on PythonVirtualenvOperator URL: https://github.com/apache/airflow/pull/8256#discussion_r410483125

## File path: airflow/operators/python_operator.py ##

```diff
@@ -330,13 +330,28 @@ def _write_string_args(self, filename):
     def _write_args(self, input_filename):
         # serialize args to file
+        if self.use_dill:
+            serializer = dill
+        else:
+            serializer = pickle
+        # some args from context can't be loaded in virtual env
+        invalid_args = set(['dag', 'task', 'ti'])
```

Review comment: This helped me, thanks @maganaluis
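
The filtering in this hunk can be shown in isolation. This is a minimal sketch, not the operator's actual code: `build_args_payload` and `write_args` are hypothetical names, and the `{"args": ..., "kwargs": ...}` payload shape is an assumption. Only the excluded keys (`dag`, `task`, `ti`) come from the diff.

```python
import pickle
from typing import Any, Dict, Iterable

# Keys taken from the diff: context entries that can't be loaded in the
# virtualenv, so they are dropped before serializing.
INVALID_CONTEXT_KEYS = frozenset({"dag", "task", "ti"})


def build_args_payload(op_args: Iterable[Any], op_kwargs: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: return args/kwargs without the invalid context keys."""
    kwargs = {key: value for key, value in op_kwargs.items() if key not in INVALID_CONTEXT_KEYS}
    return {"args": list(op_args), "kwargs": kwargs}


def write_args(path: str, op_args: Iterable[Any], op_kwargs: Dict[str, Any],
               serializer: Any = pickle) -> None:
    """Hypothetical helper: serialize the filtered payload with pickle (or dill)."""
    with open(path, "wb") as file:
        serializer.dump(build_args_payload(op_args, op_kwargs), file)
```

Passing `dill` as `serializer` mirrors the `use_dill` switch in the diff, since both modules expose `dump(obj, file)`.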

[jira] [Commented] (AIRFLOW-3347) Unable to configure Kubernetes secrets through environment

[ https://issues.apache.org/jira/browse/AIRFLOW-3347?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17086061#comment-17086061 ]

Kaxil Naik commented on AIRFLOW-3347:

-

Duplicate of https://issues.apache.org/jira/browse/AIRFLOW-5030. Solved by https://github.com/apache/airflow/pull/5650.

> Unable to configure Kubernetes secrets through environment
> --
>
> Key: AIRFLOW-3347
> URL: https://issues.apache.org/jira/browse/AIRFLOW-3347
> Project: Apache Airflow
> Issue Type: Bug
> Components: configuration, executors
> Affects Versions: 1.10.0
> Reporter: Chris Bandy
> Priority: Major
> Labels: kubernetes
>
> We configure Airflow through environment variables. While setting up the
> Kubernetes Executor, we wanted to pass the SQL Alchemy connection string to
> workers by including it in the {{kubernetes_secrets}} section of config.
> Unfortunately, even with
> {{AIRFLOW__KUBERNETES_SECRETS__AIRFLOW__CORE__SQL_ALCHEMY_CONN}} set in
> the scheduler environment, the worker gets no secret environment
> variables.
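
The variable name in the report packs a config section and a key into one string. A minimal sketch of how such names break down, assuming Airflow's `AIRFLOW__{SECTION}__{KEY}` convention and the `<secret_object>=<secret_key>` value format of the 1.10 `[kubernetes_secrets]` section. `parse_kubernetes_secrets` is a hypothetical helper, not Airflow's config parser, and `airflow-secrets`/`sql_alchemy_conn` are placeholder names; the sketch does not reproduce the bug itself.

```python
from typing import Dict, Mapping, Tuple

# Env vars for the [kubernetes_secrets] section start with this prefix; the
# remainder is the env var name to set in the worker pod (assumed convention).
PREFIX = "AIRFLOW__KUBERNETES_SECRETS__"


def parse_kubernetes_secrets(environ: Mapping[str, str]) -> Dict[str, Tuple[str, str]]:
    """Hypothetical helper: map worker env var -> (secret object, secret key)."""
    secrets: Dict[str, Tuple[str, str]] = {}
    for name, value in environ.items():
        if not name.startswith(PREFIX):
            continue
        # The worker variable name may itself contain "__", so strip the
        # fixed prefix instead of splitting the whole name on "__".
        worker_env_var = name[len(PREFIX):]
        secret_object, _, secret_key = value.partition("=")
        secrets[worker_env_var] = (secret_object, secret_key)
    return secrets
```

With the variable from the report, `AIRFLOW__CORE__SQL_ALCHEMY_CONN` becomes the key, and it is filled from the `sql_alchemy_conn` key of the `airflow-secrets` Kubernetes secret.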

[jira] [Closed] (AIRFLOW-3347) Unable to configure Kubernetes secrets through environment

[ https://issues.apache.org/jira/browse/AIRFLOW-3347?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Kaxil Naik closed AIRFLOW-3347.

---

Resolution: Duplicate

[GitHub] [airflow] khyurri commented on a change in pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator

khyurri commented on a change in pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator

URL: https://github.com/apache/airflow/pull/8430#discussion_r410444548

## File path: airflow/providers/google/cloud/hooks/cloud_storage_transfer_service.py ##

```diff
@@ -98,6 +105,22 @@ class GcpTransferOperationStatus:
 NEGATIVE_STATUSES = {GcpTransferOperationStatus.FAILED,
                      GcpTransferOperationStatus.ABORTED}
+def gen_job_name(job_name: str) -> str:
+    """
+    Adds unique suffix to job name. If suffix already exists, updates it.
+    Suffix — current timestamp
+    :param job_name:
+    :rtype job_name: str
+    :return:
+    """
+    split = job_name.split("_")
+    uniq = str(int(time.time()))
+    if len(split) > 1 and re.compile("^[0-9]{10}$").match(split[-1]):
+        split[-1] = uniq
+        return "_".join(split)
+    return "_".join([job_name, uniq])
```

Review comment:

Sure, we can. I coded it before your advice; I'll fix it.

[GitHub] [airflow] ephraimbuddy commented on issue #8272: Cloud Life Sciences operator and hook

ephraimbuddy commented on issue #8272: Cloud Life Sciences operator and hook URL: https://github.com/apache/airflow/issues/8272#issuecomment-615427650 Thank you so much. These are more than enough. I really appreciate it!

[GitHub] [airflow] mik-laj edited a comment on issue #8272: Cloud Life Sciences operator and hook

mik-laj edited a comment on issue #8272: Cloud Life Sciences operator and hook

URL: https://github.com/apache/airflow/issues/8272#issuecomment-615426469

@ephraimbuddy Google Cloud has two types of libraries.

* Native Python libraries - https://github.com/googleapis/google-cloud-python
  These exist for most, but not all, services and are the recommended libraries. Most often they use Protobuf for communication.
* Discovery-based libraries - https://github.com/googleapis/google-api-python-client
  These are generated automatically at runtime from the API specification (called the discovery document). They are available for every Google service and expose all of its options, so they are always up to date. They communicate over HTTP only.

There is no native library for this service, so we need to use [google-api-python-client](https://github.com/googleapis/google-api-python-client).

To initialize the client, use the following code:

```python
from googleapiclient.discovery import build

# The remaining arguments (for example, credentials) are omitted here.
service = build('lifesciences', 'v2beta', ...)
```

Unfortunately, the client library has no documentation for this API, but you can build a client and check which methods exist using ipdb.

Documentation for other services is available here: https://github.com/googleapis/google-api-python-client/blob/master/docs/dyn/index.md

Here is an example of how to check the documentation for Dataflow:

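```python
from googleapiclient.discovery import build

# Build a discovery-based client for the Dataflow API.
dataflow_service = build('dataflow', 'v1b3')

# Walk down the resource tree: projects -> locations -> flexTemplates.
projects_resource = dataflow_service.projects()
locations_resource = projects_resource.locations()
flex_templates_resource = locations_resource.flexTemplates()

# Each generated method carries a docstring describing its arguments.
print(flex_templates_resource.launch.__doc__)
```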

These APIs are generated automatically from the REST API, so you can check the general idea and the required arguments in the REST API documentation for the Life Sciences service: https://cloud.google.com/life-sciences/docs/reference/rest

If you are looking for an example hook, look at Cloud Build: https://github.com/apache/airflow/blob/master/airflow/providers/google/cloud/hooks/cloud_build.py

It still uses the discovery-based client.
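
Putting the pieces together, a hook method built on the discovery client could start a pipeline roughly as below. This is a sketch under assumptions: `run_life_sciences_pipeline` is a hypothetical name, `service` is assumed to be the object returned by `build('lifesciences', 'v2beta', ...)`, and the resource chain mirrors the REST method `projects.locations.pipelines.run`. The pipeline `body` format should be taken from the REST reference.

```python
from typing import Any, Dict


def run_life_sciences_pipeline(service: Any, project_id: str, location: str,
                               body: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: start a pipeline via a discovery-based client.

    The call corresponds to the REST method projects.locations.pipelines.run,
    which returns a long-running Operation resource as a dict.
    """
    parent = f"projects/{project_id}/locations/{location}"
    request = service.projects().locations().pipelines().run(parent=parent, body=body)
    # execute() sends the HTTP request and returns the decoded JSON response.
    return request.execute()
```

Taking `service` as a parameter keeps the sketch testable with a mock object instead of a live client.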

[GitHub] [airflow] ephraimbuddy commented on issue #8272: Cloud Life Sciences operator and hook

ephraimbuddy commented on issue #8272: Cloud Life Sciences operator and hook URL: https://github.com/apache/airflow/issues/8272#issuecomment-615416283 Hi @mik-laj, could you please point me to the Python library for Cloud Life Sciences? I have been looking for it and can't find it. Sorry for any inconvenience this may cause. Thanks

[GitHub] [airflow] dhuang commented on a change in pull request #8423: Fix Snowflake hook conn id

dhuang commented on a change in pull request #8423: Fix Snowflake hook conn id URL: https://github.com/apache/airflow/pull/8423#discussion_r410395250

## File path: tests/providers/snowflake/hooks/test_snowflake.py ##

```diff
@@ -102,6 +102,7 @@ def test_get_conn_params(self):
             'warehouse': 'af_wh',
             'region': 'af_region',
             'role': 'af_role'}
+        self.assertEqual(self.db_hook.snowflake_conn_id, 'snowflake_default')
```

Review comment: Hmm, yeah, that could've been the intent. However, the way it was implemented, I believe it would also have overwritten an explicit `snowflake_conn_id` passed into the hook/operator on initialization. But good call, I think it does make sense to note this in `UPDATING.md`; added.
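
The problem dhuang describes, where a default silently replaces a connection id passed by the caller, can be avoided by resolving the default only when none was given. A minimal sketch: `ExampleDbHook` is hypothetical, not the actual `SnowflakeHook`, though the `conn_name_attr`/`default_conn_name` attribute names follow the convention of Airflow's `DbApiHook`.

```python
from typing import Any


class ExampleDbHook:
    """Hypothetical hook: applies a default conn id without clobbering an explicit one."""

    conn_name_attr = "snowflake_conn_id"
    default_conn_name = "snowflake_default"

    def __init__(self, *args: Any, **kwargs: Any) -> None:
        if args:
            # A positional conn id is used as-is.
            conn_id = args[0]
        else:
            # Fall back to the default only when the caller passed nothing.
            conn_id = kwargs.get(self.conn_name_attr, self.default_conn_name)
        setattr(self, self.conn_name_attr, conn_id)
```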

[GitHub] [airflow] dhuang commented on issue #8422: Add Snowflake system test

dhuang commented on issue #8422: Add Snowflake system test URL: https://github.com/apache/airflow/pull/8422#issuecomment-615381527

> Ech. some static check failures :(

Sorry, fixed and all static checks passed locally.

[GitHub] [airflow] turbaszek commented on a change in pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator

turbaszek commented on a change in pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator

URL: https://github.com/apache/airflow/pull/8430#discussion_r410337665

## File path: airflow/providers/google/cloud/hooks/cloud_storage_transfer_service.py ##

```diff
@@ -98,6 +105,22 @@ class GcpTransferOperationStatus:
 NEGATIVE_STATUSES = {GcpTransferOperationStatus.FAILED,
                      GcpTransferOperationStatus.ABORTED}
+def gen_job_name(job_name: str) -> str:
+    """
+    Adds unique suffix to job name. If suffix already exists, updates it.
+    Suffix — current timestamp
+    :param job_name:
+    :rtype job_name: str
+    :return:
+    """
+    split = job_name.split("_")
+    uniq = str(int(time.time()))
+    if len(split) > 1 and re.compile("^[0-9]{10}$").match(split[-1]):
+        split[-1] = uniq
+        return "_".join(split)
+    return "_".join([job_name, uniq])
```

Review comment:

Can't we just `return f"{job_name}_{uniq}"`?
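
Taking the suggestion one step further, the whole helper can end in a single f-string. A sketch under assumptions: it keeps the PR's 10-digit Unix-timestamp suffix, replaces the split/join logic with a regular expression (an editorial alternative, not the code that was merged), and adds a hypothetical `now` parameter only so the result is deterministic in tests.

```python
import re
import time
from typing import Optional

# "<base>_<10-digit Unix timestamp>", the same suffix format the PR checks for.
_SUFFIXED_NAME = re.compile(r"(?P<base>.*)_[0-9]{10}")


def gen_job_name(job_name: str, now: Optional[float] = None) -> str:
    """Add a Unix-timestamp suffix to job_name, replacing an existing one.

    `now` is a hypothetical parameter for deterministic tests; when it is
    None the current time is used, as in the PR.
    """
    uniq = str(int(time.time() if now is None else now))
    match = _SUFFIXED_NAME.fullmatch(job_name)
    base = match.group("base") if match else job_name
    return f"{base}_{uniq}"
```

For example, `gen_job_name("my_job_1500000000", now=1587000000)` refreshes the suffix to give `my_job_1587000000`, while a name without a 10-digit suffix simply gets one appended.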

[GitHub] [airflow] potiuk commented on issue #8417: Increase max password length in Airflow Connections

potiuk commented on issue #8417: Increase max password length in Airflow Connections URL: https://github.com/apache/airflow/issues/8417#issuecomment-615328426 Indeed :). If you are looking for a password length change, you won't find it :)

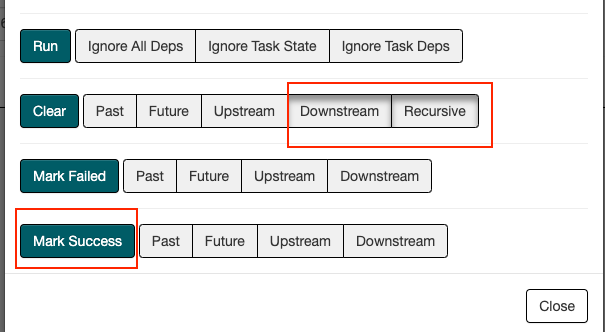

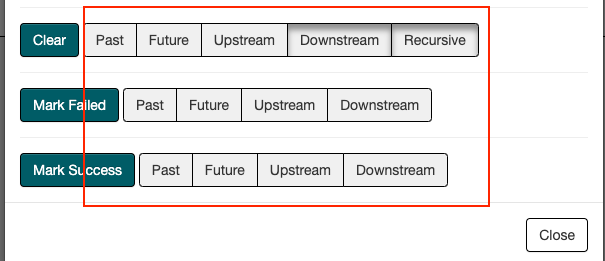

[GitHub] [airflow] KevinKobi opened a new issue #8431: Mark Success/Failure from the UI uses wrong settings

KevinKobi opened a new issue #8431: Mark Success/Failure from the UI uses wrong settings URL: https://github.com/apache/airflow/issues/8431

**What happened**:

In the UI, `Downstream` and `Recursive` are preset for `Clear` by default. These preset choices should affect only the `Clear` button, but they affect all the other actions as well: choosing `Mark success` or `Mark Failed` takes the `Downstream` and `Recursive` choices from `Clear` unless they are removed explicitly.

**What you expected to happen**:

Each row has its own settings. As the UI shows, `Mark success` has its own `Downstream` and `Upstream` buttons, so it should use its own settings, not those of other rows.

Alternatively, since the window ultimately performs only ONE action (Clear, Mark Success, Mark Failure), the block of 4 * 3 = 12 buttons is not needed. It could have just 4 buttons shared by all three actions (Clear, Mark Success, Mark Failed), with an extra `Recursive` setting for Clear. I'm not a UX expert, so there might be a better way to do this, but the buttons feel redundant (and that redundancy is also what causes this bug).

**How to reproduce it**:

1. Add any DAG.
2. In the UI, click on any task to open the menu.
3. Choose `Mark success` or `Mark Failure`. It shows a list of all tasks selected with `Recursive` and `Downstream`, even though you did not choose these settings.
4. Go back and remove the `Recursive` and `Downstream` presets from the `Clear` row. Click `Mark success` or `Mark Failure`: now it shows only the task you chose.

[GitHub] [airflow] mik-laj removed a comment on issue #8399: WIP: Improve idempodency in CloudDataTransferServiceCreateJobOperator

mik-laj removed a comment on issue #8399: WIP: Improve idempodency in CloudDataTransferServiceCreateJobOperator URL: https://github.com/apache/airflow/pull/8399#issuecomment-615319640 @turbaszek If the job has been completed, do not perform it again. This is a serious problem when a user runs a backfill. If users want to run the task multiple times, they should not provide any ID, and a new ID will be generated each time.

[GitHub] [airflow] potiuk commented on issue #8420: DockerSwarmOperator always pulls docker image

potiuk commented on issue #8420: DockerSwarmOperator always pulls docker image URL: https://github.com/apache/airflow/issues/8420#issuecomment-615317677 Feel free to make a PR :). The issue looks very VALID :)

[GitHub] [airflow] CodingJonas commented on issue #8420: DockerSwarmOperator always pulls docker image

CodingJonas commented on issue #8420: DockerSwarmOperator always pulls docker image URL: https://github.com/apache/airflow/issues/8420#issuecomment-615317105 The invalid tag was set automatically, and I didn't find a way to change it. I must have done something wrong when I created the issue.

[GitHub] [airflow] kaxil commented on issue #8417: Increase max password length in Airflow Connections

kaxil commented on issue #8417: Increase max password length in Airflow Connections URL: https://github.com/apache/airflow/issues/8417#issuecomment-615313567

> Thanks! I was working with 1.10.4 version.
>
> However, I couldn't find that issue in the [changelog for 1.10.7](https://airflow.apache.org/docs/stable/changelog.html#airflow-1-10-7-2019-12-24). Is the changelog updated? Or am I not looking at the correct place?

It is not nicely worded, but it is at https://airflow.apache.org/docs/stable/changelog.html#id9:

> [AIRFLOW-6185] SQLAlchemy Connection model schema not aligned with Alembic schema (#6754)

[GitHub] [airflow] mik-laj commented on issue #8414: Use repeated arguments in pytest

mik-laj commented on issue #8414: Use repeated arguments in pytest URL: https://github.com/apache/airflow/pull/8414#issuecomment-615312646 Go ahead and do it.

[GitHub] [airflow] mik-laj closed pull request #8414: Use repeated arguments in pytest

mik-laj closed pull request #8414: Use repeated arguments in pytest URL: https://github.com/apache/airflow/pull/8414

[GitHub] [airflow] pablosjv commented on issue #8417: Increase max password length in Airflow Connections

pablosjv commented on issue #8417: Increase max password length in Airflow Connections URL: https://github.com/apache/airflow/issues/8417#issuecomment-615312159 Thanks! I was working with 1.10.4 version. However, I couldn't find that issue in the [changelog for 1.10.7](https://airflow.apache.org/docs/stable/changelog.html#airflow-1-10-7-2019-12-24). Is the changelog updated? Or am I not looking at the correct place?

[GitHub] [airflow] ashb commented on a change in pull request #8423: Fix Snowflake hook conn id

ashb commented on a change in pull request #8423: Fix Snowflake hook conn id URL: https://github.com/apache/airflow/pull/8423#discussion_r410298189

## File path: tests/providers/snowflake/hooks/test_snowflake.py ##

```diff
@@ -102,6 +102,7 @@ def test_get_conn_params(self):
             'warehouse': 'af_wh',
             'region': 'af_region',
             'role': 'af_role'}
+        self.assertEqual(self.db_hook.snowflake_conn_id, 'snowflake_default')
```

Review comment: I _think_ that this is actually a change in the default, and `snowflake_conn_id` was the previous default. Do you think it's worth changing this default? If so, it needs a note in UPDATING.md.

[GitHub] [airflow] kaxil merged pull request #8413: Add back-compat modules from 1.10.10 for SecretsBackends

kaxil merged pull request #8413: Add back-compat modules from 1.10.10 for SecretsBackends URL: https://github.com/apache/airflow/pull/8413

[GitHub] [airflow] potiuk commented on issue #8418: Update docker operator network documentation

potiuk commented on issue #8418: Update docker operator network documentation URL: https://github.com/apache/airflow/issues/8418#issuecomment-615302186 How about you add it yourself, @pablosjv? Just create a PR!

[GitHub] [airflow] khyurri opened a new pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator

khyurri opened a new pull request #8430: Improve idempodency in CloudDataTransferServiceCreateJobOperator URL: https://github.com/apache/airflow/pull/8430

This PR resolves #8285.

1. If `body.name` is passed, `CloudDataTransferServiceCreateJobOperator` becomes idempotent.
2. If the transfer job named `body.name` has been soft-deleted, the operator is *not idempotent*: every run's job name gets a unique suffix (`name_{unix_time_stamp}`).

Draft PR: https://github.com/apache/airflow/pull/8399 (sorry, I deleted the branch by mistake :( )
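The two cases in the PR description can be modelled as follows. This is a minimal, hypothetical sketch and not the operator's actual code: `create_transfer_job` and the in-memory `jobs` dict (job name mapped to a job dict with a `"status"` field) are assumptions standing in for the Storage Transfer Service, and the name generated when no `body.name` is given is likewise invented for illustration.

```python
import time
import uuid
from typing import Dict, Optional


def create_transfer_job(body: dict, jobs: Dict[str, dict], now: Optional[int] = None) -> dict:
    """Hypothetical model of the create-job behaviour described in PR #8430.

    `jobs` is an in-memory stand-in for the transfer service (name -> job dict),
    not the real Google Cloud API.
    """
    name = body.get("name")
    if name is None:
        # No name passed: a fresh (hypothetical) name is generated,
        # so every run creates a new job.
        name = f"transferJobs/{uuid.uuid4().hex}"
    elif name in jobs:
        existing = jobs[name]
        if existing["status"] != "DELETED":
            # Case 1: a live job with this name already exists -> return it (idempotent).
            return existing
        # Case 2: the name is still held by a soft-deleted job -> append a Unix
        # timestamp suffix, so each such run creates a new job (not idempotent).
        stamp = int(time.time()) if now is None else now
        name = f"{name}_{stamp}"
    job = {**body, "name": name, "status": "ENABLED"}
    jobs[name] = job
    return job
```

The optional `now` argument exists only to make the timestamp suffix deterministic when experimenting with the sketch.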

---

Make sure to mark the boxes below before creating PR: [x]

- [x] Description above provides context of the change
- [x] Unit tests coverage for changes (not needed for documentation changes)
- [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [x] Relevant documentation is updated including usage instructions.
- [x] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

---

In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.

In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).

In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).

Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.


[GitHub] [airflow] potiuk merged pull request #8419: fixed typo in confirm script

potiuk merged pull request #8419: fixed typo in confirm script URL: https://github.com/apache/airflow/pull/8419

[GitHub] [airflow] potiuk commented on issue #8420: DockerSwarmOperator always pulls docker image

potiuk commented on issue #8420: DockerSwarmOperator always pulls docker image URL: https://github.com/apache/airflow/issues/8420#issuecomment-615301042 Why invalid? It looks like quite a valid point :). Did you change your mind?

[GitHub] [airflow] potiuk commented on a change in pull request #8393: Bring back CI optimisations

potiuk commented on a change in pull request #8393: Bring back CI optimisations URL: https://github.com/apache/airflow/pull/8393#discussion_r410288174 ## File path: .github/workflows/ci.yml ## @@ -80,134 +80,152 @@ jobs: name: Build docs runs-on: ubuntu-latest env: - TRAVIS_JOB_NAME: "Build documentation" PYTHON_VERSION: 3.6 + CI_JOB_TYPE: "Documentation" steps: - uses: actions/checkout@master - - name: "Build documentation" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Build docs" run: ./scripts/ci/ci_docs.sh tests-p36-postgres-integrations: -name: "Tests [Postgres9.6][Py3.6][integrations]" +name: "[Pg9.6][Py3.6][integrations]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [Postgres9.6][Py3.6][integrations]" BACKEND: postgres PYTHON_VERSION: 3.6 POSTGRES_VERSION: 9.6 ENABLED_INTEGRATIONS: "cassandra kerberos mongo openldap rabbitmq redis" RUN_INTEGRATION_TESTS: all + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [Postgres9.6][Py3.6][integrations]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh tests-p36-postgres-providers: -name: "Tests [Postgres10][Py3.6][providers]" +name: "[Pg10][Py3.6][prov]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [Postgres10][Py3.6][providers]" BACKEND: postgres POSTGRES_VERSION: 10 PYTHON_VERSION: 3.6 + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [Postgres10][Py3.6][providers]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh tests/providers tests-p36-postgres-core: -name: "Tests [Postgres9.6][Py3.6][core]" +name: "[Pg9.6][Py3.6][core]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [Postgres9.6][Py3.6][core]" BACKEND: postgres POSTGRES_VERSION: 9.6 
PYTHON_VERSION: 3.6 + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [Postgres9.6][Py3.6][core]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh --ignore=tests/providers tests-p37-sqlite-integrations: -name: "Tests [Sqlite][3.7][integrations]" +name: "[Sqlite][3.7][int]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [Sqlite][3.7][integrations]" BACKEND: sqlite PYTHON_VERSION: 3.7 ENABLED_INTEGRATIONS: "cassandra kerberos mongo openldap rabbitmq redis" RUN_INTEGRATION_TESTS: all + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [Sqlite][3.7][integrations]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh tests-p36-sqlite: -name: "Tests [Sqlite][Py3.6]" +name: "[Sqlite][Py3.6]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [Sqlite][Py3.6]" BACKEND: sqlite PYTHON_VERSION: 3.6 + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [Sqlite][Py3.6]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh tests-p36-mysql-integrations: -name: "Tests [MySQL][Py3.6][integrations]" +name: "[MySQL5.7][Py3.6][int]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [MySQL][Py3.6][integrations]" BACKEND: sqlite PYTHON_VERSION: 3.6 MYSQL_VERSION: 5.7 ENABLED_INTEGRATIONS: "cassandra kerberos mongo openldap rabbitmq redis" RUN_INTEGRATION_TESTS: all + CI_JOB_TYPE: "Tests" steps: - uses: actions/checkout@master - - name: "Tests [MySQL][Py3.6][integrations]" + - name: "Build CI image" +run: ./scripts/ci/ci_prepare_image_on_ci.sh + - name: "Tests" run: ./scripts/ci/ci_run_airflow_testing.sh tests-p36-mysql-providers: -name: "Tests 
[MySQL5.7][Py3.7][providers][kerberos]" +name: "[MySQL5.7][Py3.7][prov][kerb]" runs-on: ubuntu-latest needs: [statics, statics-tests] env: - TRAVIS_JOB_NAME: "Tests [MySQL5.7][Py3.7][providers][kerberos]" BACKEND: mysql

[GitHub] [airflow] potiuk commented on issue #8422: Add Snowflake system test

potiuk commented on issue #8422: Add Snowflake system test URL: https://github.com/apache/airflow/pull/8422#issuecomment-615299109 Ech. Some static check failures :(

[GitHub] [airflow] kaxil merged pull request #8412: Remove duplicate dependency ('curl') from Dockerfile

kaxil merged pull request #8412: Remove duplicate dependency ('curl') from Dockerfile URL: https://github.com/apache/airflow/pull/8412


[GitHub] [airflow] potiuk closed issue #8424: Exceptions inconsistent with UI and logfile

potiuk closed issue #8424: Exceptions inconsistent with UI and logfile URL: https://github.com/apache/airflow/issues/8424

[GitHub] [airflow] potiuk commented on issue #8424: Exceptions inconsistent with UI and logfile

potiuk commented on issue #8424: Exceptions inconsistent with UI and logfile URL: https://github.com/apache/airflow/issues/8424#issuecomment-615295670 I think this does not have enough details. I am not even sure whether those are related, and there is no information on how to reproduce it. Please open it again with all the details if you want anyone to take a look at it.

[GitHub] [airflow] khyurri closed pull request #8399: WIP: Improve idempodency in CloudDataTransferServiceCreateJobOperator

khyurri closed pull request #8399: WIP: Improve idempodency in CloudDataTransferServiceCreateJobOperator URL: https://github.com/apache/airflow/pull/8399

[GitHub] [airflow] potiuk commented on issue #8428: Create isacko

potiuk commented on issue #8428: Create isacko URL: https://github.com/apache/airflow/pull/8428#issuecomment-615291957 ?

[GitHub] [airflow] potiuk closed pull request #8428: Create isacko

potiuk closed pull request #8428: Create isacko URL: https://github.com/apache/airflow/pull/8428

[jira] [Commented] (AIRFLOW-5577) Dags Filter_by_owner is missing in RBAC