[GitHub] r39132 closed pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

r39132 closed pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879 This is a PR merged from a forked repository. As GitHub hides the original diff on merge, it is displayed below for the sake of provenance: As this is a foreign pull request (from a fork), the diff is supplied below (as it won't show otherwise due to GitHub magic): diff --git a/README.md b/README.md index 16fb2f4250..5b1b6b7d4e 100644 --- a/README.md +++ b/README.md @@ -116,6 +116,7 @@ Currently **officially** using Airflow: 1. [Bluecore](https://www.bluecore.com) [[@JLDLaughlin](https://github.com/JLDLaughlin)] 1. [Boda Telecom Suite - CE](https://github.com/bodastage/bts-ce) [[@erssebaggala](https://github.com/erssebaggala), [@bodastage](https://github.com/bodastage)] 1. [Bodastage Solutions](http://bodastage.com) [[@erssebaggala](https://github.com/erssebaggala), [@bodastage](https://github.com/bodastage)] +1. [Bombora Inc](https://bombora.com/) [[@jeffkpayne](https://github.com/jeffkpayne), [@TheOriginalAlex](https://github.com/TheOriginalAlex)] 1. [Bonnier Broadcasting](http://www.bonnierbroadcasting.com) [[@wileeam](https://github.com/wileeam)] 1. [BounceX](http://www.bouncex.com) [[@JoshFerge](https://github.com/JoshFerge), [@hudsonrio](https://github.com/hudsonrio), [@ronniekritou](https://github.com/ronniekritou)] 1. [Branch](https://branch.io) [[@sdebarshi](https://github.com/sdebarshi), [@dmitrig01](https://github.com/dmitrig01)] This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] codecov-io edited a comment on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

codecov-io edited a comment on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#issuecomment-420147353 # [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=h1) Report > Merging [#3879](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/2318cea74d4f71fba353eaca9bb3c4fd3cdb06c0?src=pr=desc) will **increase** coverage by `<.01%`. > The diff coverage is `n/a`. [](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=tree) ```diff @@Coverage Diff @@ ## master#3879 +/- ## == + Coverage 77.47% 77.48% +<.01% == Files 200 200 Lines 1585015850 == + Hits1228012281 +1 + Misses 3570 3569 -1 ``` | [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/models.py](https://codecov.io/gh/apache/incubator-airflow/pull/3879/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMucHk=) | `88.79% <0%> (+0.04%)` | :arrow_up: | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=footer). Last update [2318cea...5959b61](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] codecov-io commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

codecov-io commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#issuecomment-420147353 # [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=h1) Report > Merging [#3879](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=desc) into [master](https://codecov.io/gh/apache/incubator-airflow/commit/2318cea74d4f71fba353eaca9bb3c4fd3cdb06c0?src=pr=desc) will **increase** coverage by `<.01%`. > The diff coverage is `n/a`. [](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=tree) ```diff @@Coverage Diff @@ ## master#3879 +/- ## == + Coverage 77.47% 77.48% +<.01% == Files 200 200 Lines 1585015850 == + Hits1228012281 +1 + Misses 3570 3569 -1 ``` | [Impacted Files](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/models.py](https://codecov.io/gh/apache/incubator-airflow/pull/3879/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMucHk=) | `88.79% <0%> (+0.04%)` | :arrow_up: | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=footer). Last update [2318cea...5959b61](https://codecov.io/gh/apache/incubator-airflow/pull/3879?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] jeffkpayne commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

jeffkpayne commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#issuecomment-420142299 @r39132 Gah... Sorry about that... This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (AIRFLOW-3001) Accumulative tis slow allocation of new schedule

[

https://issues.apache.org/jira/browse/AIRFLOW-3001?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16610026#comment-16610026

]

ASF GitHub Bot commented on AIRFLOW-3001:

-

ubermen opened a new pull request #3874: [AIRFLOW-3001] Add task_instance table

index 'ti_dag_date'

URL: https://github.com/apache/incubator-airflow/pull/3874

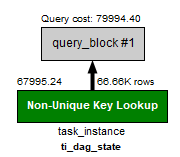

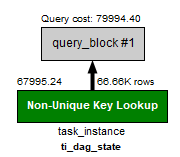

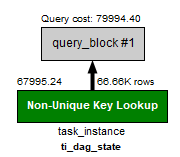

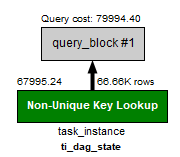

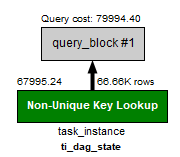

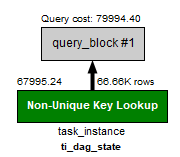

[ Description ]

There was no index composed of dag_id and execution_date. So, when scheduler

find all tis of dagrun like this "select * from task_instance where dag_id =

'some_id' and execution_date = '2018-09-01 ...'", this query will be using

ti_dag_state index (I was testing it in mysql workbench. I was expecting

'ti_state_lkp' but, it was not that case). Perhaps there's no problem when

range of execution_date is small (under 1000 dagrun), but I had experienced

slow allocation of tis when the dag had 1000+ accumulative dagrun. So, now I

was using airflow with adding new index ti_dag_date (dag_id, execution_date) on

task_instance table. I have attached result of my test :)

[ Test ]

models.py > DAG.run

jobs.py > BaseJob.run

jobs.py > BackfillJob._execute

jobs.py > BackfillJob._execute_for_run_dates

jobs.py > BackfillJob._task_instances_for_dag_run

models.py > DagRun.get_task_instances

tis = session.query(TI).filter(

TI.dag_id == self.dag_id,

TI.execution_date == self.execution_date,

)

### Jira

- [ ] My PR addresses the following [Airflow

Jira](https://issues.apache.org/jira/browse/AIRFLOW/) issues and references

them in the PR title. For example, "\[AIRFLOW-XXX\] My Airflow PR"

- https://issues.apache.org/jira/browse/AIRFLOW-XXX

- In case you are fixing a typo in the documentation you can prepend your

commit with \[AIRFLOW-XXX\], code changes always need a Jira issue.

### Description

- [ ] Here are some details about my PR, including screenshots of any UI

changes:

### Tests

- [ ] My PR adds the following unit tests __OR__ does not need testing for

this extremely good reason:

### Commits

- [ ] My commits all reference Jira issues in their subject lines, and I

have squashed multiple commits if they address the same issue. In addition, my

commits follow the guidelines from "[How to write a good git commit

message](http://chris.beams.io/posts/git-commit/)":

1. Subject is separated from body by a blank line

1. Subject is limited to 50 characters (not including Jira issue reference)

1. Subject does not end with a period

1. Subject uses the imperative mood ("add", not "adding")

1. Body wraps at 72 characters

1. Body explains "what" and "why", not "how"

### Documentation

- [ ] In case of new functionality, my PR adds documentation that describes

how to use it.

- When adding new operators/hooks/sensors, the autoclass documentation

generation needs to be added.

### Code Quality

- [ ] Passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Accumulative tis slow allocation of new schedule

>

>

> Key: AIRFLOW-3001

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3001

> Project: Apache Airflow

> Issue Type: Improvement

> Components: scheduler

>Affects Versions: 1.10.0

>Reporter: Jason Kim

>Assignee: Jason Kim

>Priority: Major

>

> I have created very long term schedule in short interval. (2~3 years as 10

> min interval)

> So, dag could be bigger and bigger as scheduling goes on.

> Finally, at critical point (I don't know exactly when it is), the allocation

> of new task_instances get slow and then almost stop.

> I found that in this point, many slow query logs had occurred. (I was using

> mysql as meta repository)

> queries like this

> "SELECT * FROM task_instance WHERE dag_id = 'some_dag_id' AND execution_date

> = ''2018-09-01 00:00:00"

> I could resolve this issue by adding new index consists of dag_id and

> execution_date.

> So, I wanted 1.10 branch to be modified to create task_instance table with

> the index.

> Thanks.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (AIRFLOW-3001) Accumulative tis slow allocation of new schedule

[

https://issues.apache.org/jira/browse/AIRFLOW-3001?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16610025#comment-16610025

]

ASF GitHub Bot commented on AIRFLOW-3001:

-

ubermen closed pull request #3874: [AIRFLOW-3001] Add task_instance table index

'ti_dag_date'

URL: https://github.com/apache/incubator-airflow/pull/3874

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

b/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

new file mode 100644

index 00..2f89181bb5

--- /dev/null

+++ b/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

@@ -0,0 +1,42 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+"""add index to taskinstance

+

+Revision ID: 82c452539eeb

+Revises: 9635ae0956e7

+Create Date: 2018-09-10 17:04:32.058103

+

+"""

+

+# revision identifiers, used by Alembic.

+revision = '82c452539eeb'

+down_revision = '9635ae0956e7'

+branch_labels = None

+depends_on = None

+

+from alembic import op

+import sqlalchemy as sa

+

+

+def upgrade():

+op.create_index('ti_dag_date', 'task_instance', ['dag_id',

'execution_date'], unique=False)

+

+

+def downgrade():

+op.drop_index('ti_dag_date', table_name='task_instance')

diff --git a/airflow/models.py b/airflow/models.py

index 2096785b41..c41f2a9dbe 100755

--- a/airflow/models.py

+++ b/airflow/models.py

@@ -880,6 +880,7 @@ class TaskInstance(Base, LoggingMixin):

__table_args__ = (

Index('ti_dag_state', dag_id, state),

+Index('ti_dag_date', dag_id, execution_date),

Index('ti_state', state),

Index('ti_state_lkp', dag_id, task_id, execution_date, state),

Index('ti_pool', pool, state, priority_weight),

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Accumulative tis slow allocation of new schedule

>

>

> Key: AIRFLOW-3001

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3001

> Project: Apache Airflow

> Issue Type: Improvement

> Components: scheduler

>Affects Versions: 1.10.0

>Reporter: Jason Kim

>Assignee: Jason Kim

>Priority: Major

>

> I have created very long term schedule in short interval. (2~3 years as 10

> min interval)

> So, dag could be bigger and bigger as scheduling goes on.

> Finally, at critical point (I don't know exactly when it is), the allocation

> of new task_instances get slow and then almost stop.

> I found that in this point, many slow query logs had occurred. (I was using

> mysql as meta repository)

> queries like this

> "SELECT * FROM task_instance WHERE dag_id = 'some_dag_id' AND execution_date

> = ''2018-09-01 00:00:00"

> I could resolve this issue by adding new index consists of dag_id and

> execution_date.

> So, I wanted 1.10 branch to be modified to create task_instance table with

> the index.

> Thanks.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] ubermen opened a new pull request #3874: [AIRFLOW-3001] Add task_instance table index 'ti_dag_date'

ubermen opened a new pull request #3874: [AIRFLOW-3001] Add task_instance table

index 'ti_dag_date'

URL: https://github.com/apache/incubator-airflow/pull/3874

[ Description ]

There was no index composed of dag_id and execution_date. So, when scheduler

find all tis of dagrun like this "select * from task_instance where dag_id =

'some_id' and execution_date = '2018-09-01 ...'", this query will be using

ti_dag_state index (I was testing it in mysql workbench. I was expecting

'ti_state_lkp' but, it was not that case). Perhaps there's no problem when

range of execution_date is small (under 1000 dagrun), but I had experienced

slow allocation of tis when the dag had 1000+ accumulative dagrun. So, now I

was using airflow with adding new index ti_dag_date (dag_id, execution_date) on

task_instance table. I have attached result of my test :)

[ Test ]

models.py > DAG.run

jobs.py > BaseJob.run

jobs.py > BackfillJob._execute

jobs.py > BackfillJob._execute_for_run_dates

jobs.py > BackfillJob._task_instances_for_dag_run

models.py > DagRun.get_task_instances

tis = session.query(TI).filter(

TI.dag_id == self.dag_id,

TI.execution_date == self.execution_date,

)

### Jira

- [ ] My PR addresses the following [Airflow

Jira](https://issues.apache.org/jira/browse/AIRFLOW/) issues and references

them in the PR title. For example, "\[AIRFLOW-XXX\] My Airflow PR"

- https://issues.apache.org/jira/browse/AIRFLOW-XXX

- In case you are fixing a typo in the documentation you can prepend your

commit with \[AIRFLOW-XXX\], code changes always need a Jira issue.

### Description

- [ ] Here are some details about my PR, including screenshots of any UI

changes:

### Tests

- [ ] My PR adds the following unit tests __OR__ does not need testing for

this extremely good reason:

### Commits

- [ ] My commits all reference Jira issues in their subject lines, and I

have squashed multiple commits if they address the same issue. In addition, my

commits follow the guidelines from "[How to write a good git commit

message](http://chris.beams.io/posts/git-commit/)":

1. Subject is separated from body by a blank line

1. Subject is limited to 50 characters (not including Jira issue reference)

1. Subject does not end with a period

1. Subject uses the imperative mood ("add", not "adding")

1. Body wraps at 72 characters

1. Body explains "what" and "why", not "how"

### Documentation

- [ ] In case of new functionality, my PR adds documentation that describes

how to use it.

- When adding new operators/hooks/sensors, the autoclass documentation

generation needs to be added.

### Code Quality

- [ ] Passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] ubermen closed pull request #3874: [AIRFLOW-3001] Add task_instance table index 'ti_dag_date'

ubermen closed pull request #3874: [AIRFLOW-3001] Add task_instance table index

'ti_dag_date'

URL: https://github.com/apache/incubator-airflow/pull/3874

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git

a/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

b/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

new file mode 100644

index 00..2f89181bb5

--- /dev/null

+++ b/airflow/migrations/versions/82c452539eeb_add_index_to_taskinstance.py

@@ -0,0 +1,42 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+"""add index to taskinstance

+

+Revision ID: 82c452539eeb

+Revises: 9635ae0956e7

+Create Date: 2018-09-10 17:04:32.058103

+

+"""

+

+# revision identifiers, used by Alembic.

+revision = '82c452539eeb'

+down_revision = '9635ae0956e7'

+branch_labels = None

+depends_on = None

+

+from alembic import op

+import sqlalchemy as sa

+

+

+def upgrade():

+op.create_index('ti_dag_date', 'task_instance', ['dag_id',

'execution_date'], unique=False)

+

+

+def downgrade():

+op.drop_index('ti_dag_date', table_name='task_instance')

diff --git a/airflow/models.py b/airflow/models.py

index 2096785b41..c41f2a9dbe 100755

--- a/airflow/models.py

+++ b/airflow/models.py

@@ -880,6 +880,7 @@ class TaskInstance(Base, LoggingMixin):

__table_args__ = (

Index('ti_dag_state', dag_id, state),

+Index('ti_dag_date', dag_id, execution_date),

Index('ti_state', state),

Index('ti_state_lkp', dag_id, task_id, execution_date, state),

Index('ti_pool', pool, state, priority_weight),

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] r39132 commented on a change in pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

r39132 commented on a change in pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#discussion_r216525697 ## File path: README.md ## @@ -117,6 +117,7 @@ Currently **officially** using Airflow: 1. [Boda Telecom Suite - CE](https://github.com/bodastage/bts-ce) [[@erssebaggala](https://github.com/erssebaggala), [@bodastage](https://github.com/bodastage)] 1. [Bodastage Solutions](http://bodastage.com) [[@erssebaggala](https://github.com/erssebaggala), [@bodastage](https://github.com/bodastage)] 1. [Bonnier Broadcasting](http://www.bonnierbroadcasting.com) [[@wileeam](https://github.com/wileeam)] +1. [Bombora Inc](https://bombora.com/) [[@jeffkpayne](https://github.com/jeffkpayne), [@TheOriginalAlex](https://github.com/TheOriginalAlex)] Review comment: @jeffkpayne Bombora should go above Bonnier per alphabetic ordering! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

r39132 commented on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#issuecomment-420119747 @jeffkpayne Bombara should go above Bonnier per alphabetic ordering! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 removed a comment on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

r39132 removed a comment on issue #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow URL: https://github.com/apache/incubator-airflow/pull/3879#issuecomment-420119747 @jeffkpayne Bombara should go above Bonnier per alphabetic ordering! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] XD-DENG edited a comment on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

XD-DENG edited a comment on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420113869 Sure! But I may only start to look into that later, since I got ideas for a few other potential PR to work on at this moment, which may be prioritized. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] XD-DENG commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

XD-DENG commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420113869 Sure! But I may only start to look into that later, since I got ideas for a few other potential PR to work on at this moment, which may prioritized. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (AIRFLOW-3036) Upgrading to Airflow 1.10 not possible using GCP Cloud SQL for MYSQL

[

https://issues.apache.org/jira/browse/AIRFLOW-3036?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Smith Mathieu updated AIRFLOW-3036:

---

Description:

The upgrade path to airflow 1.10 seems impossible for users of MySQL in

Google's Cloud SQL service given new mysql requirements for 1.10.

When executing "airflow upgradedb"

```

INFO [alembic.runtime.migration] Running upgrade d2ae31099d61 -> 0e2a74e0fc9f,

Add time zone awareness

Traceback (most recent call last):

File "/usr/local/bin/airflow", line 32, in

args.func(args)

File "/usr/local/lib/python3.6/site-packages/airflow/bin/cli.py", line 1002,

in initdb

db_utils.initdb(settings.RBAC)

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 92, in

initdb

upgradedb()

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 346,

in upgradedb

command.upgrade(config, 'heads')

File "/usr/local/lib/python3.6/site-packages/alembic/command.py", line 174, in

upgrade

script.run_env()

File "/usr/local/lib/python3.6/site-packages/alembic/script/base.py", line

416, in run_env

util.load_python_file(self.dir, 'env.py')

File "/usr/local/lib/python3.6/site-packages/alembic/util/pyfiles.py", line

93, in load_python_file

module = load_module_py(module_id, path)

File "/usr/local/lib/python3.6/site-packages/alembic/util/compat.py", line 68,

in load_module_py

module_id, path).load_module(module_id)

File "", line 399, in _check_name_wrapper

File "", line 823, in load_module

File "", line 682, in load_module

File "", line 265, in _load_module_shim

File "", line 684, in _load

File "", line 665, in _load_unlocked

File "", line 678, in exec_module

File "", line 219, in _call_with_frames_removed

File "/usr/local/lib/python3.6/site-packages/airflow/migrations/env.py", line

91, in

run_migrations_online()

File "/usr/local/lib/python3.6/site-packages/airflow/migrations/env.py", line

86, in run_migrations_online

context.run_migrations()

File "", line 8, in run_migrations

File "/usr/local/lib/python3.6/site-packages/alembic/runtime/environment.py",

line 807, in run_migrations

self.get_context().run_migrations(**kw)

File "/usr/local/lib/python3.6/site-packages/alembic/runtime/migration.py",

line 321, in run_migrations

step.migration_fn(**kw)

File

"/usr/local/lib/python3.6/site-packages/airflow/migrations/versions/0e2a74e0fc9f_add_time_zone_awareness.py",

line 46, in upgrade

raise Exception("Global variable explicit_defaults_for_timestamp needs to be

on (1) for mysql")

Exception: Global variable explicit_defaults_for_timestamp needs to be on (1)

for mysql

```

Reading documentation for upgrading to airflow 1.10, it seems the requirement

for explicit_defaults_for_timestamp=1 was intentional.

However, MySQL on Google Cloud SQL does not support configuring this variable

and it is off by default. Users of MySQL and Cloud SQL do not have an upgrade

path to 1.10. Alas, so close to the mythical Kubernetes Executor.

In GCP, Cloud SQL is _the_ hosted MySQL solution.

[https://cloud.google.com/sql/docs/mysql/flags]

was:

The upgrade path to airflow 1.10 seems impossible for users of MySQL in

Google's Cloud SQL service given new mysql requirements for 1.10.

When executing "airflow upgradedb"

```

INFO [alembic.runtime.migration] Running upgrade d2ae31099d61 -

0e2a74e0fc9f, Add time zone awareness

Traceback (most recent call last):

File "/usr/local/bin/airflow", line 32, in module

args.func(args)

File "/usr/local/lib/python3.6/site-packages/airflow/bin/cli.py", line 1002,

in initdb

db_utils.initdb(settings.RBAC)

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 92, in

initdb

upgradedb()

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 346,

in upgradedb

command.upgrade(config, 'heads')

File "/usr/local/lib/python3.6/site-packages/alembic/command.py", line 174, in

upgrade

script.run_env()

File "/usr/local/lib/python3.6/site-packages/alembic/script/base.py", line

416, in run_env

util.load_python_file(self.dir, 'env.py')

File "/usr/local/lib/python3.6/site-packages/alembic/util/pyfiles.py", line

93, in load_python_file

module = load_module_py(module_id, path)

File "/usr/local/lib/python3.6/site-packages/alembic/util/compat.py", line 68,

in load_module_py

module_id, path).load_module(module_id)

File "frozen importlib._bootstrap_external", line 399, in

_check_name_wrapper

File "frozen importlib._bootstrap_external", line 823, in load_module

File "frozen importlib._bootstrap_external", line 682, in load_module

File "frozen importlib._bootstrap", line 265, in _load_module_shim

File "frozen importlib._bootstrap", line 684, in _load

File "frozen importlib._bootstrap", line 665, in _load_unlocked

File "frozen importlib._bootstrap_external", line 678, in exec_module

File "frozen importlib._bootstrap", line 219, in

_call_with_frames_removed

File

[jira] [Created] (AIRFLOW-3036) Upgrading to Airflow 1.10 not possible using GCP Cloud SQL for MYSQL

Smith Mathieu created AIRFLOW-3036:

--

Summary: Upgrading to Airflow 1.10 not possible using GCP Cloud

SQL for MYSQL

Key: AIRFLOW-3036

URL: https://issues.apache.org/jira/browse/AIRFLOW-3036

Project: Apache Airflow

Issue Type: Bug

Components: core, db

Affects Versions: 1.10.0

Environment: Google Cloud Platform, Google Kubernetes Engine, Airflow

1.10 on Debian Stretch, Google Cloud SQL MySQL

Reporter: Smith Mathieu

The upgrade path to airflow 1.10 seems impossible for users of MySQL in

Google's Cloud SQL service given new mysql requirements for 1.10.

When executing "airflow upgradedb"

```

INFO [alembic.runtime.migration] Running upgrade d2ae31099d61 -

0e2a74e0fc9f, Add time zone awareness

Traceback (most recent call last):

File "/usr/local/bin/airflow", line 32, in module

args.func(args)

File "/usr/local/lib/python3.6/site-packages/airflow/bin/cli.py", line 1002,

in initdb

db_utils.initdb(settings.RBAC)

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 92, in

initdb

upgradedb()

File "/usr/local/lib/python3.6/site-packages/airflow/utils/db.py", line 346,

in upgradedb

command.upgrade(config, 'heads')

File "/usr/local/lib/python3.6/site-packages/alembic/command.py", line 174, in

upgrade

script.run_env()

File "/usr/local/lib/python3.6/site-packages/alembic/script/base.py", line

416, in run_env

util.load_python_file(self.dir, 'env.py')

File "/usr/local/lib/python3.6/site-packages/alembic/util/pyfiles.py", line

93, in load_python_file

module = load_module_py(module_id, path)

File "/usr/local/lib/python3.6/site-packages/alembic/util/compat.py", line 68,

in load_module_py

module_id, path).load_module(module_id)

File "frozen importlib._bootstrap_external", line 399, in

_check_name_wrapper

File "frozen importlib._bootstrap_external", line 823, in load_module

File "frozen importlib._bootstrap_external", line 682, in load_module

File "frozen importlib._bootstrap", line 265, in _load_module_shim

File "frozen importlib._bootstrap", line 684, in _load

File "frozen importlib._bootstrap", line 665, in _load_unlocked

File "frozen importlib._bootstrap_external", line 678, in exec_module

File "frozen importlib._bootstrap", line 219, in

_call_with_frames_removed

File "/usr/local/lib/python3.6/site-packages/airflow/migrations/env.py", line

91, in module

run_migrations_online()

File "/usr/local/lib/python3.6/site-packages/airflow/migrations/env.py", line

86, in run_migrations_online

context.run_migrations()

File "string", line 8, in run_migrations

File "/usr/local/lib/python3.6/site-packages/alembic/runtime/environment.py",

line 807, in run_migrations

self.get_context().run_migrations(**kw)

File "/usr/local/lib/python3.6/site-packages/alembic/runtime/migration.py",

line 321, in run_migrations

step.migration_fn(**kw)

File

"/usr/local/lib/python3.6/site-packages/airflow/migrations/versions/0e2a74e0fc9f_add_time_zone_awareness.py",

line 46, in upgrade

raise Exception("Global variable explicit_defaults_for_timestamp needs to be

on (1) for mysql")

Exception: Global variable explicit_defaults_for_timestamp needs to be on (1)

for mysql

```

Reading documentation for upgrading to airflow 1.10, it seems the requirement

for explicit_defaults_for_timestamp=1 was intentional.

However, MySQL on Google Cloud SQL does not support configuring this variable

and it is off by default. Users of MySQL and Cloud SQL do not have an upgrade

path to 1.10

In GCP, Cloud SQL is _the_ hosted MySQL solution.

https://cloud.google.com/sql/docs/mysql/flags

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jeffrey Payne updated AIRFLOW-3035:

---

Priority: Minor (was: Major)

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Bug

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Minor

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner:

> # The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

> {{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

> for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the

> job state is {{ERROR}}.

> # The end result from the perspective of the {{dataproc_operator.py}} for a

> cancelled job is that the job succeeded, which results in the success

> callback being called. This seems strange to me, as a "cancelled" job is

> rarely considered successful, in my experience.

> Simply changing {{raise_error()}} from:

> {code:python}

> if 'ERROR' == self.job['status']['state']:

> {code}

> to

> {code:python}

> if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

> {code}

> would fix both of these...

> Another, perhaps better, option would be to have the dataproc job operators

> accept a list of {{error_states}} that could be passed into

> {{raise_error()}}, allowing the caller to determine which states should

> result in "failure" of the task. I would lean towards that option.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jeffrey Payne updated AIRFLOW-3035:

---

Issue Type: Improvement (was: Bug)

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Improvement

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Minor

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner:

> # The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

> {{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

> for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the

> job state is {{ERROR}}.

> # The end result from the perspective of the {{dataproc_operator.py}} for a

> cancelled job is that the job succeeded, which results in the success

> callback being called. This seems strange to me, as a "cancelled" job is

> rarely considered successful, in my experience.

> Simply changing {{raise_error()}} from:

> {code:python}

> if 'ERROR' == self.job['status']['state']:

> {code}

> to

> {code:python}

> if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

> {code}

> would fix both of these...

> Another, perhaps better, option would be to have the dataproc job operators

> accept a list of {{error_states}} that could be passed into

> {{raise_error()}}, allowing the caller to determine which states should

> result in "failure" of the task. I would lean towards that option.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] jeffkpayne opened a new pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using Airflow

jeffkpayne opened a new pull request #3879: [AIRFLOW-XXX] Add Bombora Inc using

Airflow

URL: https://github.com/apache/incubator-airflow/pull/3879

Make sure you have checked _all_ steps below.

### Jira

- [ ] My PR addresses the following [Airflow

Jira](https://issues.apache.org/jira/browse/AIRFLOW/) issues and references

them in the PR title. For example, "\[AIRFLOW-XXX\] My Airflow PR"

- https://issues.apache.org/jira/browse/AIRFLOW-XXX

- In case you are fixing a typo in the documentation you can prepend your

commit with \[AIRFLOW-XXX\], code changes always need a Jira issue.

### Description

- [ ] Here are some details about my PR, including screenshots of any UI

changes:

### Tests

- [ ] My PR adds the following unit tests __OR__ does not need testing for

this extremely good reason:

### Commits

- [ ] My commits all reference Jira issues in their subject lines, and I

have squashed multiple commits if they address the same issue. In addition, my

commits follow the guidelines from "[How to write a good git commit

message](http://chris.beams.io/posts/git-commit/)":

1. Subject is separated from body by a blank line

1. Subject is limited to 50 characters (not including Jira issue reference)

1. Subject does not end with a period

1. Subject uses the imperative mood ("add", not "adding")

1. Body wraps at 72 characters

1. Body explains "what" and "why", not "how"

### Documentation

- [ ] In case of new functionality, my PR adds documentation that describes

how to use it.

- When adding new operators/hooks/sensors, the autoclass documentation

generation needs to be added.

### Code Quality

- [ ] Passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] r39132 commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

r39132 commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420104265 That's a fair point. If you can think of a treatment that makes good use of the real-estate (e.g. on hover, show the next run), that might work around this constraint. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] feng-tao commented on issue #3878: [AIRFLOW-3034]: Update Readme : Add slack link, remove Gitter

feng-tao commented on issue #3878: [AIRFLOW-3034]: Update Readme : Add slack link, remove Gitter URL: https://github.com/apache/incubator-airflow/pull/3878#issuecomment-420100410 hey @r39132 , there are some -1 on the vote thread you started. Have we decided the final decision of retiring gitter? If that's the case, the pr lgtm. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 commented on issue #1906: [AIRFLOW-536] Schedule all pending DAG runs in a single scheduler loop

r39132 commented on issue #1906: [AIRFLOW-536] Schedule all pending DAG runs in a single scheduler loop URL: https://github.com/apache/incubator-airflow/pull/1906#issuecomment-420099175 @vijaysbhat Please rebase and let me know if you still want a review! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 commented on issue #2055: [AIRFLOW-417] Show useful error message for missing DAG in URL

r39132 commented on issue #2055: [AIRFLOW-417] Show useful error message for missing DAG in URL URL: https://github.com/apache/incubator-airflow/pull/2055#issuecomment-420099102 @vijaysbhat Please rebase and let me know if you still want a review! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] XD-DENG edited a comment on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

XD-DENG edited a comment on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420097902 Thanks @r39132 . I’ll follow up on the documentation should necessary. Regarding adding this to UI->DAG List, I’m conservative about this idea since: - it may make the interface too crowded - it’s not really necessary as we already have “ last run” and “schedule” in the table. It is very straightforward for us to refer the next execution in UI scenario. “Next_execution” feature is only necessary in command line interface in my opinion, as information in command line is much less visual. May you let me know your thought? Thanks! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 commented on issue #2129: [AIRFLOW-952] Allow deleting extra field in connection UI

r39132 commented on issue #2129: [AIRFLOW-952] Allow deleting extra field in connection UI URL: https://github.com/apache/incubator-airflow/pull/2129#issuecomment-420099031 @vijaysbhat Please rebase and let me know if you still want a review! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] r39132 closed pull request #2140: WIP - Waiting endpoint

r39132 closed pull request #2140: WIP - Waiting endpoint

URL: https://github.com/apache/incubator-airflow/pull/2140

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/airflow/models.py b/airflow/models.py

index 37f88230a0..7eeaae6ee5 100755

--- a/airflow/models.py

+++ b/airflow/models.py

@@ -1019,7 +1019,7 @@ def key(self):

"""

return self.dag_id, self.task_id, self.execution_date

-def set_state(self, state, session):

+def set_state(self, state, session):

self.state = state

self.start_date = datetime.now()

self.end_date = datetime.now()

@@ -1393,13 +1393,17 @@ def signal_handler(signum, frame):

Stats.incr('operator_successes_{}'.format(

self.task.__class__.__name__), 1, 1)

self.state = State.SUCCESS

+if (task.on_success_set_state_to

+and task.on_success_set_state_to in State.hold()):

+self.state = task.on_success_set_state_to

+

except AirflowSkipException:

self.state = State.SKIPPED

except (Exception, KeyboardInterrupt) as e:

self.handle_failure(e, test_mode, context)

raise

-# Recording SUCCESS

+# Recording SUCCESS or on_success_set_state_to

self.end_date = datetime.now()

self.set_duration()

if not test_mode:

@@ -1883,6 +1887,12 @@ class derived from this one results in the creation of a

task object,

:type resources: dict

:param run_as_user: unix username to impersonate while running the task

:type run_as_user: str

+:param on_success_set_state_to: sets the exit state of the operator to this

+state. Can be used to allow an external event to trigger continuation

+:type on_success_set_state_to: str

+:param on_standby_timeout: the amount of time the scheduler waits on the

+external trigger. Will be converted to seconds.

+:type on_standby_timeout: timedelta

"""

# For derived classes to define which fields will get jinjaified

@@ -1925,6 +1935,8 @@ def __init__(

trigger_rule=TriggerRule.ALL_SUCCESS,

resources=None,

run_as_user=None,

+on_success_set_state_to=None,

+on_standby_timeout=timedelta(3600),

*args,

**kwargs):

@@ -1976,6 +1988,11 @@ def __init__(

self.execution_timeout = execution_timeout

self.on_failure_callback = on_failure_callback

self.on_success_callback = on_success_callback

+self.on_success_set_state_to = on_success_set_state_to

+if isinstance(on_standby_timeout, timedelta):

+self.on_standby_timeout = on_standby_timeout

+else:

+self.on_standby_timeout = timedelta(seconds=retry_delay)

self.on_retry_callback = on_retry_callback

if isinstance(retry_delay, timedelta):

self.retry_delay = retry_delay

@@ -2019,6 +2036,7 @@ def __init__(

'on_failure_callback',

'on_success_callback',

'on_retry_callback',

+'on_success_set_state',

}

def __eq__(self, other):

diff --git a/airflow/ti_deps/deps/onstandby_dep.py

b/airflow/ti_deps/deps/onstandby_dep.py

new file mode 100644

index 00..ad8c579d65

--- /dev/null

+++ b/airflow/ti_deps/deps/onstandby_dep.py

@@ -0,0 +1,57 @@

+# -*- coding: utf-8 -*-

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from datetime import datetime

+

+from airflow.ti_deps.deps.base_ti_dep import BaseTIDep

+from airflow.utils.db import provide_session

+from airflow.utils.state import State

+

+

+class OnStandByDep(BaseTIDep):

+"""

+Determines if a task's upstream tasks are in a state that allows a given

task instance

+to run.

+"""

+NAME = "On Standby"

+IGNOREABLE = True

+IS_TASK_DEP = True

+

+@provide_session

+def _get_dep_statuses(self, ti, session, dep_context):

+if dep_context.ignore_ti_state:

+yield self._passing_status(

+reason="Context specified that state should be ignored.")

+return

+

+if ti.state is not State.ON_STANDBY:

+yield self._passing_status(

+reason="The

[GitHub] r39132 commented on issue #2140: WIP - Waiting endpoint

r39132 commented on issue #2140: WIP - Waiting endpoint URL: https://github.com/apache/incubator-airflow/pull/2140#issuecomment-420098512 @bolkedebruin I'm closing this PR for now. Please reopen when you start actively working on it again. This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] XD-DENG commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

XD-DENG commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420097902 Thanks @r39132 . Regarding adding this to UI->DAG List, I’m conservative about this idea since: - it may make the interface too crowded - it’s not really necessary as we already have “ last run” and “schedule” in the table. It is very straightforward for us to refer the next execution in UI scenario. “Next_execution” feature is only necessary in command line interface in my opinion, as information in command line is much less visual. May you let me know your thought? Thanks! This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Closed] (AIRFLOW-2965) Add CLI command to find the next dag run.

[

https://issues.apache.org/jira/browse/AIRFLOW-2965?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Siddharth Anand closed AIRFLOW-2965.

Resolution: Fixed

> Add CLI command to find the next dag run.

> -

>

> Key: AIRFLOW-2965

> URL: https://issues.apache.org/jira/browse/AIRFLOW-2965

> Project: Apache Airflow

> Issue Type: Task

>Affects Versions: 1.10.0

>Reporter: jack

>Assignee: Xiaodong DENG

>Priority: Minor

>

> I have a dag with the following properties:

> {code:java}

> dag = DAG(

> dag_id='mydag',

> default_args=args,

> schedule_interval='0 1 * * *',

> max_active_runs=1,

> catchup=False){code}

>

>

> This runs great.

> Last run is: 2018-08-26 01:00 (start date is 2018-08-27 01:00)

>

> Now it's 2018-08-27 17:55 I decided to change my dag to:

>

> {code:java}

> dag = DAG(

> dag_id='mydag',

> default_args=args,

> schedule_interval='0 23 * * *',

> max_active_runs=1,

> catchup=False){code}

>

> Now, I have no idea when will be the next dag run.

> Will it be today at 23:00? I can't be sure when the cycle is complete. I'm

> not even sure that this change will do what I wish.

> I'm sure you guys are expert and you can answer this question but most of us

> wouldn't know.

>

> The scheduler has the knowledge when the dag is available for running. All

> I'm asking is to take that knowledge and create a CLI command that I will

> give the dag_id and it will tell me the next date/hour which my dag will be

> runnable.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (AIRFLOW-2965) Add CLI command to find the next dag run.

[

https://issues.apache.org/jira/browse/AIRFLOW-2965?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16609889#comment-16609889

]

ASF GitHub Bot commented on AIRFLOW-2965:

-

r39132 closed pull request #3834: [AIRFLOW-2965] CLI tool to show the next

execution datetime

URL: https://github.com/apache/incubator-airflow/pull/3834

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/airflow/bin/cli.py b/airflow/bin/cli.py

index e22427cf40..c38116e6c0 100644

--- a/airflow/bin/cli.py

+++ b/airflow/bin/cli.py

@@ -551,6 +551,31 @@ def dag_state(args):

print(dr[0].state if len(dr) > 0 else None)

+@cli_utils.action_logging

+def next_execution(args):

+"""

+Returns the next execution datetime of a DAG at the command line.

+>>> airflow next_execution tutorial

+2018-08-31 10:38:00

+"""

+dag = get_dag(args)

+

+if dag.is_paused:

+print("[INFO] Please be reminded this DAG is PAUSED now.")

+

+if dag.latest_execution_date:

+next_execution_dttm = dag.following_schedule(dag.latest_execution_date)

+

+if next_execution_dttm is None:

+print("[WARN] No following schedule can be found. " +

+ "This DAG may have schedule interval '@once' or `None`.")

+

+print(next_execution_dttm)

+else:

+print("[WARN] Only applicable when there is execution record found for

the DAG.")

+print(None)

+

+

@cli_utils.action_logging

def list_dags(args):

dagbag = DagBag(process_subdir(args.subdir))

@@ -1986,6 +2011,11 @@ class CLIFactory(object):

'func': sync_perm,

'help': "Update existing role's permissions.",

'args': tuple(),

+},

+{

+'func': next_execution,

+'help': "Get the next execution datetime of a DAG.",

+'args': ('dag_id', 'subdir')

}

)

subparsers_dict = {sp['func'].__name__: sp for sp in subparsers}

diff --git a/tests/cli/test_cli.py b/tests/cli/test_cli.py

index 616b9a0f16..93ec0576e6 100644

--- a/tests/cli/test_cli.py

+++ b/tests/cli/test_cli.py

@@ -20,9 +20,12 @@

import unittest

+from datetime import datetime, timedelta, time

from mock import patch, Mock, MagicMock

from time import sleep

import psutil

+import pytz

+import subprocess

from argparse import Namespace

from airflow import settings

from airflow.bin.cli import get_num_ready_workers_running, run, get_dag

@@ -165,3 +168,80 @@ def test_local_run(self):

ti.refresh_from_db()

state = ti.current_state()

self.assertEqual(state, State.SUCCESS)

+

+def test_next_execution(self):

+# A scaffolding function

+def reset_dr_db(dag_id):

+session = Session()

+dr = session.query(models.DagRun).filter_by(dag_id=dag_id)

+dr.delete()

+session.commit()

+session.close()

+

+EXAMPLE_DAGS_FOLDER = os.path.join(

+os.path.dirname(

+os.path.dirname(

+os.path.dirname(os.path.realpath(__file__))

+)

+),

+"airflow/example_dags"

+)

+

+dagbag = models.DagBag(dag_folder=EXAMPLE_DAGS_FOLDER,

+ include_examples=False)

+dag_ids = ['example_bash_operator', # schedule_interval is '0 0 * * *'

+ 'latest_only', # schedule_interval is timedelta(hours=4)

+ 'example_python_operator', # schedule_interval=None

+ 'example_xcom'] # schedule_interval="@once"

+

+# The details below is determined by the schedule_interval of example

DAGs

+now = timezone.utcnow()

+next_execution_time_for_dag1 = pytz.utc.localize(

+datetime.combine(

+now.date() + timedelta(days=1),

+time(0)

+)

+)

+next_execution_time_for_dag2 = now + timedelta(hours=4)

+expected_output = [str(next_execution_time_for_dag1),

+ str(next_execution_time_for_dag2),

+ "None",

+ "None"]

+

+for i in range(len(dag_ids)):

+dag_id = dag_ids[i]

+

+# Clear dag run so no execution history fo each DAG

+reset_dr_db(dag_id)

+

+p = subprocess.Popen(["airflow", "next_execution", dag_id,

+ "--subdir", EXAMPLE_DAGS_FOLDER],

+ stdout=subprocess.PIPE)

+p.wait()

+stdout = []

+for line in p.stdout:

+stdout.append(str(line.decode("utf-8").rstrip()))

+

+# `next_execution`

[GitHub] r39132 closed pull request #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

r39132 closed pull request #3834: [AIRFLOW-2965] CLI tool to show the next

execution datetime

URL: https://github.com/apache/incubator-airflow/pull/3834

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/airflow/bin/cli.py b/airflow/bin/cli.py

index e22427cf40..c38116e6c0 100644

--- a/airflow/bin/cli.py

+++ b/airflow/bin/cli.py

@@ -551,6 +551,31 @@ def dag_state(args):

print(dr[0].state if len(dr) > 0 else None)

+@cli_utils.action_logging

+def next_execution(args):

+"""

+Returns the next execution datetime of a DAG at the command line.

+>>> airflow next_execution tutorial

+2018-08-31 10:38:00

+"""

+dag = get_dag(args)

+

+if dag.is_paused:

+print("[INFO] Please be reminded this DAG is PAUSED now.")

+

+if dag.latest_execution_date:

+next_execution_dttm = dag.following_schedule(dag.latest_execution_date)

+

+if next_execution_dttm is None:

+print("[WARN] No following schedule can be found. " +

+ "This DAG may have schedule interval '@once' or `None`.")

+

+print(next_execution_dttm)

+else:

+print("[WARN] Only applicable when there is execution record found for

the DAG.")

+print(None)

+

+

@cli_utils.action_logging

def list_dags(args):

dagbag = DagBag(process_subdir(args.subdir))

@@ -1986,6 +2011,11 @@ class CLIFactory(object):

'func': sync_perm,

'help': "Update existing role's permissions.",

'args': tuple(),

+},

+{

+'func': next_execution,

+'help': "Get the next execution datetime of a DAG.",

+'args': ('dag_id', 'subdir')

}

)

subparsers_dict = {sp['func'].__name__: sp for sp in subparsers}

diff --git a/tests/cli/test_cli.py b/tests/cli/test_cli.py

index 616b9a0f16..93ec0576e6 100644

--- a/tests/cli/test_cli.py

+++ b/tests/cli/test_cli.py

@@ -20,9 +20,12 @@

import unittest

+from datetime import datetime, timedelta, time

from mock import patch, Mock, MagicMock

from time import sleep

import psutil

+import pytz

+import subprocess

from argparse import Namespace

from airflow import settings

from airflow.bin.cli import get_num_ready_workers_running, run, get_dag

@@ -165,3 +168,80 @@ def test_local_run(self):

ti.refresh_from_db()

state = ti.current_state()

self.assertEqual(state, State.SUCCESS)

+

+def test_next_execution(self):

+# A scaffolding function

+def reset_dr_db(dag_id):

+session = Session()

+dr = session.query(models.DagRun).filter_by(dag_id=dag_id)

+dr.delete()

+session.commit()

+session.close()

+

+EXAMPLE_DAGS_FOLDER = os.path.join(

+os.path.dirname(

+os.path.dirname(

+os.path.dirname(os.path.realpath(__file__))

+)

+),

+"airflow/example_dags"

+)

+

+dagbag = models.DagBag(dag_folder=EXAMPLE_DAGS_FOLDER,

+ include_examples=False)

+dag_ids = ['example_bash_operator', # schedule_interval is '0 0 * * *'

+ 'latest_only', # schedule_interval is timedelta(hours=4)

+ 'example_python_operator', # schedule_interval=None

+ 'example_xcom'] # schedule_interval="@once"

+

+# The details below is determined by the schedule_interval of example

DAGs

+now = timezone.utcnow()

+next_execution_time_for_dag1 = pytz.utc.localize(

+datetime.combine(

+now.date() + timedelta(days=1),

+time(0)

+)

+)

+next_execution_time_for_dag2 = now + timedelta(hours=4)

+expected_output = [str(next_execution_time_for_dag1),

+ str(next_execution_time_for_dag2),

+ "None",

+ "None"]

+

+for i in range(len(dag_ids)):

+dag_id = dag_ids[i]

+

+# Clear dag run so no execution history fo each DAG

+reset_dr_db(dag_id)

+

+p = subprocess.Popen(["airflow", "next_execution", dag_id,

+ "--subdir", EXAMPLE_DAGS_FOLDER],

+ stdout=subprocess.PIPE)

+p.wait()

+stdout = []

+for line in p.stdout:

+stdout.append(str(line.decode("utf-8").rstrip()))

+

+# `next_execution` function is inapplicable if no execution record

found

+# It prints `None` in such cases

+self.assertEqual(stdout[-1], "None")

+

+dag = dagbag.dags[dag_id]

+# Create a DagRun for each DAG, to prepare

[GitHub] r39132 commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime

r39132 commented on issue #3834: [AIRFLOW-2965] CLI tool to show the next execution datetime URL: https://github.com/apache/incubator-airflow/pull/3834#issuecomment-420089890 @XD-DENG This works great. It may be worth documenting that the next execution is based on the last execution which was started, but not necessarily completed or completed successfully. Happy to look at that documentation as a follow-on PR if folks agree it's not obvious. Also, you may want to update the UI (the dags list) with a next execution column! So, add a `Next Run` similar to the `Last Run` that exists today. https://user-images.githubusercontent.com/581734/45328997-2dab3080-b513-11e8-91fe-32d40c3b7757.png;> This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16609884#comment-16609884

]

Jeffrey Payne commented on AIRFLOW-3035:

One other nitpick, the message passed into {{raise_error()}} from {{submit()}}

on line references "DataProcTask", which is inconsistent with the rest of the

naming in the {{gcp_dataproc_hook.py}}.

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Bug

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Major

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner:

> # The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

> {{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

> for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the

> job state is {{ERROR}}.

> # The end result from the perspective of the {{dataproc_operator.py}} for a

> cancelled job is that the job succeeded, which results in the success

> callback being called. This seems strange to me, as a "cancelled" job is

> rarely considered successful, in my experience.

> Simply changing {{raise_error()}} from:

> {code:python}

> if 'ERROR' == self.job['status']['state']:

> {code}

> to

> {code:python}

> if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

> {code}

> would fix both of these...

> Another, perhaps better, option would be to have the dataproc job operators

> accept a list of {{error_states}} that could be passed into

> {{raise_error()}}, allowing the caller to determine which states should

> result in "failure" of the task. I would lean towards that option.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jeffrey Payne updated AIRFLOW-3035:

---

Description:

When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

{{CENCELLED}} state in a consistent and non-intuitive manner:

# The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

{{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the job

state is {{ERROR}}.

# The end result from the perspective of the {{dataproc_operator.py}} for a

cancelled job is that the job succeeded, which results in the success callback

being called. This seems strange to me, as a "cancelled" job is rarely

considered successful, in my experience.

Simply changing {{raise_error()}} from:

{code:python}

if 'ERROR' == self.job['status']['state']:

{code}

to

{code:python}

if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

{code}

would fix both of these...

Another, perhaps better, option would be to have the dataproc job operators

accept a list of {{error_states}} that could be passed into {{raise_error()}},

allowing the caller to determine which states should result in "failure" of the

task. I would lean towards that option.

was:

When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

{{CENCELLED}} state in a consistent and non-intuitive manner:

# The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

{{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the job

state is {{ERROR}}.

# The end result from the perspective of the {{dataproc_operator.py}} for a

cancelled job is that the job succeeded, which results in the success callback

being called. This seems strange to me, as a "cancelled" job is rarely

considered successful, in my experience.

Simply changing {{raise_error()}} from:

{code:python}

if 'ERROR' == self.job['status']['state']:

{code}

to

{code:python}

if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

{code}

would fix both of these...

Another, perhaps better, option would be to have the dataproc job operators

accept a list of {{error_states}} that would result in {{raise_error()}} being

called, allowing the caller to determine which states should result in

"failure" of the task. I would lean towards that option.

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Bug

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Major

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner:

> # The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

> {{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

> for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the

> job state is {{ERROR}}.

> # The end result from the perspective of the {{dataproc_operator.py}} for a

> cancelled job is that the job succeeded, which results in the success

> callback being called. This seems strange to me, as a "cancelled" job is

> rarely considered successful, in my experience.

> Simply changing {{raise_error()}} from:

> {code:python}

> if 'ERROR' == self.job['status']['state']:

> {code}

> to

> {code:python}

> if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

> {code}

> would fix both of these...

> Another, perhaps better, option would be to have the dataproc job operators

> accept a list of {{error_states}} that could be passed into

> {{raise_error()}}, allowing the caller to determine which states should

> result in "failure" of the task. I would lean towards that option.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jeffrey Payne updated AIRFLOW-3035:

---

Description:

When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

{{CENCELLED}} state in a consistent and non-intuitive manner:

# The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

{{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the job

state is {{ERROR}}.

# The end result from the perspective of the {{dataproc_operator.py}} for a

cancelled job is that the job succeeded, which results in the success callback

being called. This seems strange to me, as a "cancelled" job is rarely

considered successful, in my experience.

Simply changing {{raise_error()}} from:

{code:python}

if 'ERROR' == self.job['status']['state']:

{code}

to

{code:python}

if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

{code}

would fix both of these...

Another, perhaps better, option would be to have the dataproc job operators

accept a list of {{error_states}} that would result in {{raise_error()}} being

called, allowing the caller to determine which states should result in

"failure" of the task. I would lean towards that option.

was:

When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

{{CENCELLED}} state in a consistent and non-intuitive manner:

# The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

{{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the job

state is {{ERROR}}.

# The end result from the perspective of the {{dataproc_operator.py}} for a

cancelled job is that the job succeeded, which results in the success callback

being called. This seems strange to me, as a "cancelled" job is rarely

considered successful, in my experience.

Simply changing {{raise_error()}} from:

{code:python}

if 'ERROR' == self.job['status']['state']:

{code}

to

{code:python}

if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

{code}

would fix both of these...

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Bug

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Major

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner:

> # The API internal to {{gcp_dataproc_hook.py}} returns {{False}} from

> {{_DataProcJob.wait_for_done()}}, resulting in {{raise_error()}} being called

> for cancelled jobs, yet {{raise_error()}} only raises {{Exception}} if the

> job state is {{ERROR}}.

> # The end result from the perspective of the {{dataproc_operator.py}} for a

> cancelled job is that the job succeeded, which results in the success

> callback being called. This seems strange to me, as a "cancelled" job is

> rarely considered successful, in my experience.

> Simply changing {{raise_error()}} from:

> {code:python}

> if 'ERROR' == self.job['status']['state']:

> {code}

> to

> {code:python}

> if self.job['status']['state'] in ('ERROR', 'CANCELLED'):

> {code}

> would fix both of these...

> Another, perhaps better, option would be to have the dataproc job operators

> accept a list of {{error_states}} that would result in {{raise_error()}}

> being called, allowing the caller to determine which states should result in

> "failure" of the task. I would lean towards that option.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Assigned] (AIRFLOW-3035) gcp_dataproc_hook should treat CANCELLED job state consistently

[

https://issues.apache.org/jira/browse/AIRFLOW-3035?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jeffrey Payne reassigned AIRFLOW-3035:

--

Assignee: Jeffrey Payne

> gcp_dataproc_hook should treat CANCELLED job state consistently

> ---

>

> Key: AIRFLOW-3035

> URL: https://issues.apache.org/jira/browse/AIRFLOW-3035

> Project: Apache Airflow

> Issue Type: Bug

> Components: contrib

>Affects Versions: 1.10.0, 2.0.0, 1.10.1

>Reporter: Jeffrey Payne

>Assignee: Jeffrey Payne

>Priority: Major

> Labels: dataproc

>

> When a DP job is cancelled, {{gcp_dataproc_hook.py}} does not treat the

> {{CENCELLED}} state in a consistent and non-intuitive manner: