[GitHub] [incubator-doris] imay commented on issue #2242: Is that will be better create table with column default enable to be null?

imay commented on issue #2242: Is that will be better create table with column default enable to be null? URL: https://github.com/apache/incubator-doris/issues/2242#issuecomment-556961195 I think it is OK to change the default from NOT NULL to NULL. It will not cause any extra trouble, because nullable is a superset of not null. Most databases, such as MySQL and PostgreSQL, also default to NULL. A NULL default would also make loading easier for beginners. So I vote +1 for default NULL. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@doris.apache.org For additional commands, e-mail: commits-h...@doris.apache.org

[GitHub] [incubator-doris] caiconghui edited a comment on issue #2242: Is that will be better create table with column default enable to be null?

caiconghui edited a comment on issue #2242: Is that will be better create table with column default enable to be null? URL: https://github.com/apache/incubator-doris/issues/2242#issuecomment-556955387 @imay @morningman A default nullable column may confuse users other than Hadoop users. In many database systems, such as Kudu, Hive, and MySQL, nullable-by-default columns are the common case. We get data from HDFS and Kafka, which cannot guarantee that column data is not null; in fact, null values in many columns are very common in production environments. Kudu, Parquet, and ORC files also handle nullable columns well. It is also OK for us to mark every non-key column as nullable explicitly, if the not-nullable-by-default behavior is that important for Doris scan performance. But this special design should be noted in the documentation, because ordinary users may not think much about it when creating a table, and their loads may then fail because null data is not allowed by default.
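The load failure described in this comment can be pictured with a small sketch. The `Column` type and the `violations` helper are hypothetical names made up for illustration, not Doris code; the sketch only assumes that a load rejects a row when a null value lands in a column that does not allow nulls.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustrative sketch only; none of these names come from Doris. */
public class NullableLoadSketch {
    /** A column with a name and a nullability flag (hypothetical type). */
    static final class Column {
        final String name;
        final boolean nullable;

        Column(String name, boolean nullable) {
            this.name = name;
            this.nullable = nullable;
        }
    }

    /** Returns the names of columns that hold null but do not allow it. */
    static List<String> violations(List<Column> schema, List<Object> row) {
        List<String> bad = new ArrayList<>();
        for (int i = 0; i < schema.size(); i++) {
            if (row.get(i) == null && !schema.get(i).nullable) {
                bad.add(schema.get(i).name);
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        // Upstream data (for example from HDFS or Kafka) with a missing value for v1.
        List<Object> row = Arrays.<Object>asList(1, null);

        // NOT NULL by default: the value column rejects the row.
        List<Column> strict = Arrays.asList(new Column("k1", false), new Column("v1", false));
        // NULL by default: only the key column stays NOT NULL, the row is accepted.
        List<Column> relaxed = Arrays.asList(new Column("k1", false), new Column("v1", true));

        System.out.println("strict schema violations:  " + violations(strict, row));
        System.out.println("relaxed schema violations: " + violations(relaxed, row));
    }
}
```

With a nullable default, only columns that the table author deliberately marks NOT NULL can reject such rows, which is the beginner-friendliness argument made in this thread.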

[GitHub] [incubator-doris] caiconghui commented on issue #2242: Is that will be better create table with column default enable to be null?

caiconghui commented on issue #2242: Is that will be better create table with column default enable to be null? URL: https://github.com/apache/incubator-doris/issues/2242#issuecomment-556955387 A default nullable column may confuse users other than Hadoop users. In many database systems, such as Kudu, Hive, and MySQL, nullable-by-default columns are the common case. We get data from HDFS and Kafka, which cannot guarantee that column data is not null; in fact, null values in many columns are very common in production environments. Kudu, Parquet, and ORC files also handle nullable columns well. It is also OK for us to mark every non-key column as nullable explicitly, if the not-nullable-by-default behavior is that important for Doris scan performance. But this special design should be noted in the documentation, because ordinary users may not think much about it when creating a table, and their loads may then fail because null data is not allowed by default.

[GitHub] [incubator-doris] lingbin commented on issue #2249: add a mechanism for FE to collect the table's format statistics

lingbin commented on issue #2249: add a mechanism for FE to collect the table's format statistics URL: https://github.com/apache/incubator-doris/issues/2249#issuecomment-556953137 This is a one-time job: the tool is no longer needed once the feature is fully online, so we only need to add an external tool. The tool should be able to show the status of the segments along the following three dimensions: 1. cluster (i.e. total summary) 2. table 3. BE
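The three dimensions listed in this comment amount to grouping the same per-replica numbers by different keys. A minimal sketch follows, assuming each report row carries a table name, a backend id, and a segment count; the `Report` type, its fields, and `sumBy` are hypothetical and do not describe the actual tool.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

/** Illustrative sketch only; the report shape is an assumption. */
public class SegmentStatsSketch {
    /** One hypothetical report row: segments held by one BE for one table. */
    static final class Report {
        final String table;
        final long backendId;
        final long segmentCount;

        Report(String table, long backendId, long segmentCount) {
            this.table = table;
            this.backendId = backendId;
            this.segmentCount = segmentCount;
        }
    }

    /** Sums segment counts grouped by whatever key the caller extracts. */
    static <K> Map<K, Long> sumBy(List<Report> reports, Function<Report, K> key) {
        Map<K, Long> totals = new TreeMap<>();
        for (Report r : reports) {
            totals.merge(key.apply(r), r.segmentCount, Long::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        List<Report> reports = Arrays.asList(
                new Report("t1", 10001L, 4L),
                new Report("t1", 10002L, 6L),
                new Report("t2", 10001L, 5L));

        System.out.println("cluster: " + sumBy(reports, r -> "cluster"));
        System.out.println("table:   " + sumBy(reports, r -> r.table));
        System.out.println("be:      " + sumBy(reports, r -> r.backendId));
    }
}
```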

[GitHub] [incubator-doris] morningman commented on a change in pull request #2241: Fix some bugs about load label

morningman commented on a change in pull request #2241: Fix some bugs about load label URL: https://github.com/apache/incubator-doris/pull/2241#discussion_r348920708 ## File path: fe/src/main/java/org/apache/doris/load/loadv2/LoadManager.java ## @@ -106,7 +109,7 @@ public void createLoadJobFromStmt(LoadStmt stmt, String originStmt) throws DdlEx + "please retry later."); } loadJob = BrokerLoadJob.fromLoadStmt(stmt, originStmt); -createLoadJob(loadJob); +addLoadJob(loadJob); Review comment: Good catch, sharp eyes!

[GitHub] [incubator-doris] vagetablechicken commented on a change in pull request #2235: Support setting properties for storage_root_path

vagetablechicken commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348917646

##

File path: be/src/olap/options.h

##

@@ -26,14 +26,25 @@

namespace doris {

struct StorePath {

-StorePath() : capacity_bytes(-1) { }

-StorePath(const std::string& path_, int64_t capacity_bytes_)

-: path(path_), capacity_bytes(capacity_bytes_) { }

+StorePath() : capacity_bytes(-1), storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string _, int64_t capacity_bytes_)

Review comment:

OK. I happened to see the following line and mistakenly thought that Doris aligns pointers to the right:

https://github.com/apache/incubator-doris/blob/42a4fff562090fc40bcab48684b4a61614f8d8f8/be/src/tools/meta_tool.cpp#L105

I'll fix it.


[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348880244

##

File path: be/src/tools/meta_tool.cpp

##

@@ -139,8 +140,14 @@ int main(int argc, char **argv) {

std::cout << "invalid root path:" << FLAGS_root_path << ", error:" << st.to_string() << std::endl;

return -1;

}

+doris::StorePath path;

+auto res = parse_root_path(root_path.string(), );

+if (res != OLAP_SUCCESS){

+std::cout << "parse root path failed:" << root_path.string() << std::endl;

Review comment:

```suggestion

std::cout << "parse root path failed:" << root_path.string() << std::endl;

```


[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348880272

##

File path: be/src/tools/meta_tool.cpp

##

@@ -139,8 +140,14 @@ int main(int argc, char **argv) {

std::cout << "invalid root path:" << FLAGS_root_path << ", error:" << st.to_string() << std::endl;

return -1;

}

+doris::StorePath path;

+auto res = parse_root_path(root_path.string(), );

+if (res != OLAP_SUCCESS){

+std::cout << "parse root path failed:" << root_path.string() << std::endl;

+return -1;

Review comment:

```suggestion

return -1;

```


[GitHub] [incubator-doris] manannan2017 commented on issue #2259: cast decimal,query large precision or small precision, the result precision is same as creating table

manannan2017 commented on issue #2259: cast decimal,query large precision or small precision, the result precision is same as creating table URL: https://github.com/apache/incubator-doris/issues/2259#issuecomment-556923502 #2135

[GitHub] [incubator-doris] manannan2017 opened a new issue #2259: cast decimal,query large precision or small precision, the result precision is same as creating table

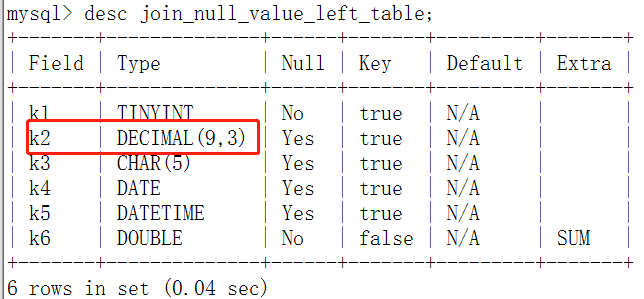

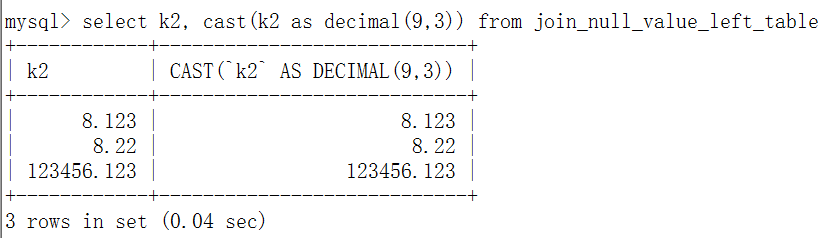

manannan2017 opened a new issue #2259: cast decimal,query large precision or small precision, the result precision is same as creating table URL: https://github.com/apache/incubator-doris/issues/2259 1. Table: k2 decimal(9,3) 2. SQL 3. Expected: select cast(k2 as decimal(9,3)) from table: 8.123 8.220 123456.123

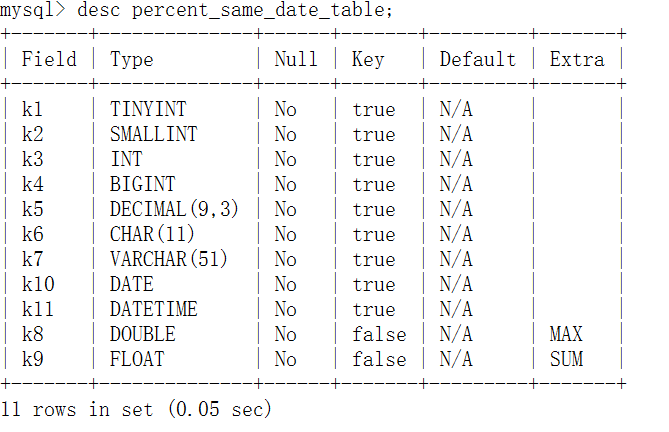

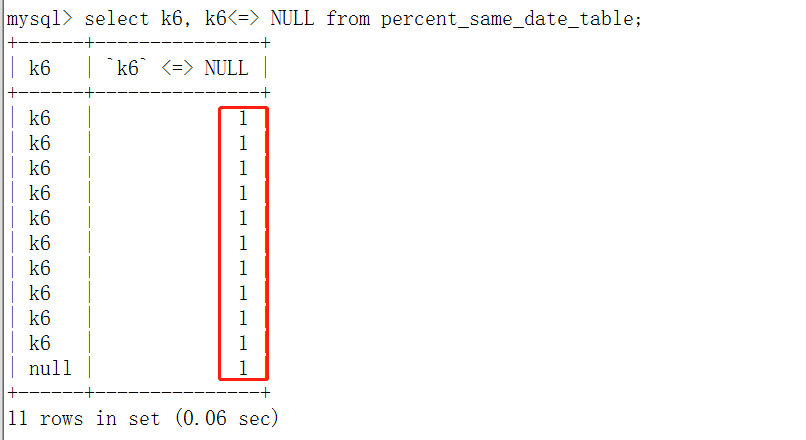

[GitHub] [incubator-doris] manannan2017 opened a new issue #2258: when using <=> operator,i meet some problems

manannan2017 opened a new issue #2258: when using <=> operator,i meet some problems

URL: https://github.com/apache/incubator-doris/issues/2258

1. SQL:

select cast('2019-09-09' as int) <=> NULL, cast('2019' as int) <=> NULL;

ERROR 1064 (HY000): Unexpected exception: null

Expected to be equivalent to:

select NULL <=> NULL, 2019 <=> NULL;

2. select 'a' <=> NULL, '2019-09-09' <=> NULL, 'true' <=> NULL, 78.367 <=> NULL;

ERROR 5012 (HY000): 'a' is not a number

Expected result:

0 0 0 0

3. Table description:

k6 is char

#2136
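For readers unfamiliar with `<=>`: in MySQL it is the null-safe equality operator, which returns 1 when both operands are NULL and 0 when exactly one is NULL, whereas plain `=` yields NULL in both cases. The sketch below only illustrates that semantics in Java; the class and method names are made up for this example, and it deliberately ignores SQL type coercion, which is where the errors reported above arise.

```java
import java.util.Objects;

/** Illustrative sketch of null-safe equality; not Doris code. */
public class NullSafeEqualSketch {
    /** SQL-style "=": the result is unknown (null) if either operand is null. */
    static Boolean sqlEquals(Object a, Object b) {
        if (a == null || b == null) {
            return null;
        }
        return a.equals(b);
    }

    /** SQL-style "<=>": always true or false, never unknown. */
    static boolean nullSafeEquals(Object a, Object b) {
        return Objects.equals(a, b);
    }

    public static void main(String[] args) {
        System.out.println("NULL <=> NULL : " + nullSafeEquals(null, null));
        System.out.println("2019 <=> NULL : " + nullSafeEquals(2019, null));
        System.out.println("'a'  <=> NULL : " + nullSafeEquals("a", null));
        System.out.println("2019 =   NULL : " + sqlEquals(2019, null));
    }
}
```

Under this semantics every comparison in the second query has exactly one NULL operand, which matches the expected result of `0 0 0 0` given in the report.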


[GitHub] [incubator-doris] EmmyMiao87 commented on a change in pull request #2241: Fix some bugs about load label

EmmyMiao87 commented on a change in pull request #2241: Fix some bugs about load label URL: https://github.com/apache/incubator-doris/pull/2241#discussion_r348896948 ## File path: fe/src/main/java/org/apache/doris/load/loadv2/LoadManager.java ## @@ -106,7 +109,7 @@ public void createLoadJobFromStmt(LoadStmt stmt, String originStmt) throws DdlEx + "please retry later."); } loadJob = BrokerLoadJob.fromLoadStmt(stmt, originStmt); -createLoadJob(loadJob); +addLoadJob(loadJob); Review comment: Where is the callback added?

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348875456

##

File path: be/src/olap/options.h

##

@@ -26,14 +26,25 @@

namespace doris {

struct StorePath {

-StorePath() : capacity_bytes(-1) { }

-StorePath(const std::string& path_, int64_t capacity_bytes_)

-: path(path_), capacity_bytes(capacity_bytes_) { }

+StorePath() : capacity_bytes(-1), storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string _, int64_t capacity_bytes_)

Review comment:

Doris aligns both references and pointers to the left.


[GitHub] [incubator-doris] vagetablechicken commented on a change in pull request #2235: Support setting properties for storage_root_path

vagetablechicken commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348874505

##

File path: be/src/olap/options.h

##

@@ -26,14 +26,25 @@

namespace doris {

struct StorePath {

-StorePath() : capacity_bytes(-1) { }

-StorePath(const std::string& path_, int64_t capacity_bytes_)

-: path(path_), capacity_bytes(capacity_bytes_) { }

+StorePath() : capacity_bytes(-1), storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string _, int64_t capacity_bytes_)

Review comment:

So Doris aligns pointers to the right and references to the left? I don't think that's a good idea.

In clang-format, the alignment style for pointers and references is a single option, "PointerAlignment".

http://clang.llvm.org/docs/ClangFormatStyleOptions.html


[GitHub] [incubator-doris] morningman closed pull request #2241: Fix some bugs about load label

morningman closed pull request #2241: Fix some bugs about load label URL: https://github.com/apache/incubator-doris/pull/2241

[GitHub] [incubator-doris] morningman opened a new pull request #2241: Fix some bugs about load label

morningman opened a new pull request #2241: Fix some bugs about load label URL: https://github.com/apache/incubator-doris/pull/2241 1. `dbIdToTxnLabels` in `GlobalTransactionMgr` should be consistent with `idToTransactionState`, rather than containing only the labels of running or finished transactions. 2. The callback id should be removed when replaying a transaction abort or visible edit log. 3. The `LabelAlreadyUsed` exception should be thrown before adding the load job. Otherwise, there will be lots of CANCELLED load jobs with the reason "label already used". 4. `LoadTimeoutChecker` should check the txn's status before deciding whether to cancel the job, instead of only checking the job's state. ISSUE #2240
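Point 3 of this description is an ordering argument: if the label check runs before the job is registered, a duplicate label never produces a job record that later has to be cancelled. The sketch below shows that ordering under simplifying assumptions; `LabelCheckSketch`, its fields, and the unchecked `LabelAlreadyUsedException` are stand-ins invented for illustration and do not mirror the real `LoadManager` or `GlobalTransactionMgr` code.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Illustrative sketch only; not the actual Doris load manager. */
public class LabelCheckSketch {
    /** Hypothetical stand-in for the LabelAlreadyUsed exception named in the PR. */
    static class LabelAlreadyUsedException extends RuntimeException {
        LabelAlreadyUsedException(String label) {
            super("label already used: " + label);
        }
    }

    private final Set<String> usedLabels = new HashSet<>();
    private final List<String> jobs = new ArrayList<>();

    /** Rejects a duplicate label first, and only then records the job. */
    void createLoadJob(String label) {
        if (usedLabels.contains(label)) {
            // Thrown before any job exists, so nothing has to be CANCELLED later.
            throw new LabelAlreadyUsedException(label);
        }
        usedLabels.add(label);
        jobs.add(label);
    }

    int jobCount() {
        return jobs.size();
    }

    public static void main(String[] args) {
        LabelCheckSketch manager = new LabelCheckSketch();
        manager.createLoadJob("label_a");
        try {
            manager.createLoadJob("label_a");
        } catch (LabelAlreadyUsedException e) {
            System.out.println("rejected: " + e.getMessage());
        }
        System.out.println("jobs recorded: " + manager.jobCount());
    }
}
```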

[GitHub] [incubator-doris] morningman commented on issue #2222: Publish version immediately after txt commited

morningman commented on issue #: Publish version immediately after txt commited URL: https://github.com/apache/incubator-doris/pull/#issuecomment-556859778 @yiguolei Please review this PR

[incubator-doris] branch master updated: Add schema hash to tablet proc info (#2257)

This is an automated email from the ASF dual-hosted git repository.

morningman pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-doris.git

View the commit online:

https://github.com/apache/incubator-doris/commit/9c85a04580bcadc3b5da91f380334a860c1fdc7e

The following commit(s) were added to refs/heads/master by this push:

new 9c85a04 Add schema hash to tablet proc info (#2257)

9c85a04 is described below

commit 9c85a04580bcadc3b5da91f380334a860c1fdc7e

Author: LingBin

AuthorDate: Wed Nov 20 20:06:30 2019 -0600

Add schema hash to tablet proc info (#2257)

---

fe/src/main/java/org/apache/doris/common/proc/TabletsProcDir.java | 6 --

1 file changed, 4 insertions(+), 2 deletions(-)

diff --git a/fe/src/main/java/org/apache/doris/common/proc/TabletsProcDir.java b/fe/src/main/java/org/apache/doris/common/proc/TabletsProcDir.java

index 3690dad..a19a498 100644

--- a/fe/src/main/java/org/apache/doris/common/proc/TabletsProcDir.java

+++ b/fe/src/main/java/org/apache/doris/common/proc/TabletsProcDir.java

@@ -40,7 +40,7 @@ import java.util.List;

*/

public class TabletsProcDir implements ProcDirInterface {

public static final ImmutableList TITLE_NAMES = new ImmutableList.Builder()

-.add("TabletId").add("ReplicaId").add("BackendId").add("Version")

+.add("TabletId").add("ReplicaId").add("BackendId").add("SchemaHash").add("Version")

.add("VersionHash").add("LstSuccessVersion").add("LstSuccessVersionHash")

.add("LstFailedVersion").add("LstFailedVersionHash").add("LstFailedTime")

.add("DataSize").add("RowCount").add("State")

@@ -50,7 +50,7 @@ public class TabletsProcDir implements ProcDirInterface {

private Database db;

private MaterializedIndex index;

-

+

public TabletsProcDir(Database db, MaterializedIndex index) {

this.db = db;

this.index = index;

@@ -71,6 +71,7 @@ public class TabletsProcDir implements ProcDirInterface {

tabletInfo.add(tabletId);

tabletInfo.add(-1); // replica id

tabletInfo.add(-1); // backend id

+tabletInfo.add(-1); // schema hash

tabletInfo.add("N/A"); // host name

tabletInfo.add(-1); // version

tabletInfo.add(-1); // version hash

@@ -101,6 +102,7 @@ public class TabletsProcDir implements ProcDirInterface {

tabletInfo.add(tabletId);

tabletInfo.add(replica.getId());

tabletInfo.add(replica.getBackendId());

+tabletInfo.add(replica.getSchemaHash());

tabletInfo.add(replica.getVersion());

tabletInfo.add(replica.getVersionHash());

tabletInfo.add(replica.getLastSuccessVersion());


[GitHub] [incubator-doris] morningman merged pull request #2257: Add schema hash to tablet proc info

morningman merged pull request #2257: Add schema hash to tablet proc info URL: https://github.com/apache/incubator-doris/pull/2257

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348853682

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/rest/RestService.java

##

@@ -0,0 +1,462 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.rest;

+

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FENODES;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FILTER_QUERY;

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_READ_FIELD;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_REQUEST_AUTH_PASSWORD;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_REQUEST_AUTH_USER;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE_DEFAULT;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE_MIN;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLE_IDENTIFIER;

+import static org.apache.doris.spark.util.ErrorMessages.CONNECT_FAILED_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.ILLEGAL_ARGUMENT_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.PARSE_NUMBER_FAILED_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.SHOULD_NOT_HAPPEN_MESSAGE;

+

+import java.io.IOException;

+import java.io.Serializable;

+import java.nio.charset.StandardCharsets;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.doris.spark.cfg.ConfigurationOptions;

+import org.apache.doris.spark.cfg.Settings;

+import org.apache.doris.spark.exception.ConnectedFailedException;

+import org.apache.doris.spark.exception.DorisException;

+import org.apache.doris.spark.exception.IllegalArgumentException;

+import org.apache.doris.spark.exception.ShouldNeverHappenException;

+import org.apache.doris.spark.rest.models.QueryPlan;

+import org.apache.doris.spark.rest.models.Schema;

+import org.apache.doris.spark.rest.models.Tablet;

+import org.apache.http.HttpStatus;

+import org.apache.http.auth.AuthScope;

+import org.apache.http.auth.UsernamePasswordCredentials;

+import org.apache.http.client.CredentialsProvider;

+import org.apache.http.client.config.RequestConfig;

+import org.apache.http.client.methods.CloseableHttpResponse;

+import org.apache.http.client.methods.HttpGet;

+import org.apache.http.client.methods.HttpPost;

+import org.apache.http.client.methods.HttpRequestBase;

+import org.apache.http.client.protocol.HttpClientContext;

+import org.apache.http.entity.StringEntity;

+import org.apache.http.impl.client.BasicCredentialsProvider;

+import org.apache.http.impl.client.CloseableHttpClient;

+import org.apache.http.impl.client.HttpClients;

+import org.apache.http.util.EntityUtils;

+import org.codehaus.jackson.JsonParseException;

+import org.codehaus.jackson.map.JsonMappingException;

+import org.codehaus.jackson.map.ObjectMapper;

+import org.slf4j.Logger;

+

+import com.google.common.annotations.VisibleForTesting;

+

+/**

+ * Service for communicate with Doris FE.

+ */

+public class RestService implements Serializable {

+public final static int REST_RESPONSE_STATUS_OK = 200;

+private static final String API_PREFIX = "/api";

+private static final String SCHEMA = "_schema";

+private static final String QUERY_PLAN = "_query_plan";

+

+/**

+ * send request to Doris FE and get response json string.

+ * @param cfg configuration of request

+ * @param request {@link HttpRequestBase} real request

+ * @param logger {@link Logger}

+ * @return Doris FE response in json string

+ * @throws ConnectedFailedException throw when cannot connect to Doris FE

+ */

+private static String send(Settings cfg, HttpRequestBase request, Logger

logger) throws

+ConnectedFailedException {

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348851661

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/cfg/ConfigurationOptions.java

##

@@ -0,0 +1,55 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.cfg;

+

+public interface ConfigurationOptions {

+// doris fe node address

+String DORIS_FENODES = "doris.fenodes";

+

+String DORIS_DEFAULT_CLUSTER = "default_cluster";

+

+String TABLE_IDENTIFIER = "table.identifier";

+String DORIS_TABLE_IDENTIFIER = "doris.table.identifier";

+String DORIS_READ_FIELD = "doris.read.field";

+String DORIS_FILTER_QUERY = "doris.filter.query";

+String DORIS_FILTER_QUERY_IN_VALUE_MAX = "doris.filter.query.in.value.max";

Review comment:

```suggestion

String DORIS_FILTER_QUERY_IN_VALUE_MAX = "doris.filter.query.in.max_count";

```


[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348851150

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/backend/BackendClient.java

##

@@ -0,0 +1,219 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.backend;

+

+import org.apache.doris.spark.cfg.ConfigurationOptions;

+import org.apache.doris.spark.exception.ConnectedFailedException;

+import org.apache.doris.spark.util.ErrorMessages;

+import org.apache.doris.spark.cfg.Settings;

+import org.apache.doris.spark.serialization.Routing;

+import org.apache.doris.thrift.TDorisExternalService;

+import org.apache.doris.thrift.TScanBatchResult;

+import org.apache.doris.thrift.TScanCloseParams;

+import org.apache.doris.thrift.TScanCloseResult;

+import org.apache.doris.thrift.TScanNextBatchParams;

+import org.apache.doris.thrift.TScanOpenParams;

+import org.apache.doris.thrift.TScanOpenResult;

+import org.apache.doris.thrift.TStatusCode;

+import org.apache.thrift.TException;

+import org.apache.thrift.protocol.TBinaryProtocol;

+import org.apache.thrift.protocol.TProtocol;

+import org.apache.thrift.transport.TSocket;

+import org.apache.thrift.transport.TTransport;

+import org.apache.thrift.transport.TTransportException;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+/**

+ * Client to request Doris BE

+ */

+public class BackendClient {

+private static Logger logger =

LoggerFactory.getLogger(BackendClient.class);

+

+private Routing routing;

+

+private TDorisExternalService.Client client;

+private TTransport transport;

+

+private boolean isConnected = false;

+private final int retries;

+private final int socketTimeout;

+private final int connectTimeout;

+

+public BackendClient(Routing routing, Settings settings) throws

ConnectedFailedException {

+this.routing = routing;

+this.connectTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_DEFAULT);

+this.socketTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_DEFAULT);

+this.retries =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_RETRIES,

+ConfigurationOptions.DORIS_REQUEST_RETRIES_DEFAULT);

+logger.trace("connect timeout set to '{}'.", this.connectTimeout);

+logger.trace("socket timeout set to '{}'.", this.socketTimeout);

+logger.trace("retries set to '{}'.", this.retries);

+open();

+}

+

+private void open() throws ConnectedFailedException {

+logger.trace("Open client to Doris BE '{}'.", routing);

+TException ex = null;

+for (int attempt = 0; !isConnected && attempt < retries; ++attempt) {

+logger.debug("Attempt {} to connect {}.", attempt, routing);

+TBinaryProtocol.Factory factory = new TBinaryProtocol.Factory();

+transport = new TSocket(routing.getHost(), routing.getPort(),

socketTimeout, connectTimeout);

+TProtocol protocol = factory.getProtocol(transport);

+client = new TDorisExternalService.Client(protocol);

+try {

+logger.trace("Connect status before open transport to {} is '{}'.", routing, isConnected);

+if (!transport.isOpen()) {

+transport.open();

+isConnected = true;

+}

+} catch (TTransportException e) {

+logger.warn(ErrorMessages.CONNECT_FAILED_MESSAGE, routing, e);

+ex = e;

+}

+if (isConnected) {

+logger.info("Success connect to {}.", routing);

+break;

+}

+}

+if (!isConnected) {

+logger.error(ErrorMessages.CONNECT_FAILED_MESSAGE, routing);

+throw new ConnectedFailedException(routing.toString(), ex);

+}

+}

+

+private void close() {

+

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add

spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348851898

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/cfg/ConfigurationOptions.java

##

@@ -0,0 +1,55 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.cfg;

+

+public interface ConfigurationOptions {

+// doris fe node address

+String DORIS_FENODES = "doris.fenodes";

+

+String DORIS_DEFAULT_CLUSTER = "default_cluster";

+

+String TABLE_IDENTIFIER = "table.identifier";

+String DORIS_TABLE_IDENTIFIER = "doris.table.identifier";

+String DORIS_READ_FIELD = "doris.read.field";

+String DORIS_FILTER_QUERY = "doris.filter.query";

+String DORIS_FILTER_QUERY_IN_VALUE_MAX = "doris.filter.query.in.value.max";

+int DORIS_FILTER_QUERY_IN_VALUE_UPPER_LIMIT = 1;

+

+String DORIS_USER = "doris.user";

+String DORIS_REQUEST_AUTH_USER = "doris.request.auth.user";

+// use password to save doris.request.auth.password

+// reuse credentials mask method in spark ExternalCatalogUtils#maskCredentials

+String DORIS_PASSWORD = "doris.password";

+String DORIS_REQUEST_AUTH_PASSWORD = "doris.request.auth.password";

+

+String DORIS_REQUEST_RETRIES = "doris.request.retries";

+String DORIS_REQUEST_CONNECT_TIMEOUT_MS =

"doris.request.connect.timeout.ms";

+String DORIS_REQUEST_READ_TIMEOUT_MS = "doris.request.read.timeout.ms";

+int DORIS_REQUEST_RETRIES_DEFAULT = 3;

+int DORIS_REQUEST_CONNECT_TIMEOUT_DEFAULT = 30 * 1000;

Review comment:

Can you add a comment stating the time unit for these timeout settings?
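
A minimal sketch of one way to address this, assuming both defaults are meant to be milliseconds (the option keys in the diff end in `.ms`). The interface name `TimeoutOptionsSketch` and the `_MS_DEFAULT` constant names are hypothetical and are not part of the pull request:

```java
// Hypothetical sketch, not the connector's actual ConfigurationOptions file.
// The option keys are copied from the diff; the interface name and the
// *_MS_DEFAULT constant names are illustrative assumptions.
interface TimeoutOptionsSketch {
    // Option keys; the ".ms" suffix indicates the values are in milliseconds.
    String DORIS_REQUEST_CONNECT_TIMEOUT_MS = "doris.request.connect.timeout.ms";
    String DORIS_REQUEST_READ_TIMEOUT_MS = "doris.request.read.timeout.ms";

    // Default connect timeout, in milliseconds (30 seconds).
    int DORIS_REQUEST_CONNECT_TIMEOUT_MS_DEFAULT = 30 * 1000;

    // Default read (socket) timeout, in milliseconds (30 seconds).
    int DORIS_REQUEST_READ_TIMEOUT_MS_DEFAULT = 30 * 1000;
}
```

Putting the unit in both the comment and the constant name keeps it visible at call sites such as the `BackendClient` constructor, where only the constant name appears.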

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add

spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348850375

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/backend/BackendClient.java

##

@@ -0,0 +1,219 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.backend;

+

+import org.apache.doris.spark.cfg.ConfigurationOptions;

+import org.apache.doris.spark.exception.ConnectedFailedException;

+import org.apache.doris.spark.util.ErrorMessages;

+import org.apache.doris.spark.cfg.Settings;

+import org.apache.doris.spark.serialization.Routing;

+import org.apache.doris.thrift.TDorisExternalService;

+import org.apache.doris.thrift.TScanBatchResult;

+import org.apache.doris.thrift.TScanCloseParams;

+import org.apache.doris.thrift.TScanCloseResult;

+import org.apache.doris.thrift.TScanNextBatchParams;

+import org.apache.doris.thrift.TScanOpenParams;

+import org.apache.doris.thrift.TScanOpenResult;

+import org.apache.doris.thrift.TStatusCode;

+import org.apache.thrift.TException;

+import org.apache.thrift.protocol.TBinaryProtocol;

+import org.apache.thrift.protocol.TProtocol;

+import org.apache.thrift.transport.TSocket;

+import org.apache.thrift.transport.TTransport;

+import org.apache.thrift.transport.TTransportException;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+/**

+ * Client to request Doris BE

+ */

+public class BackendClient {

+private static Logger logger =

LoggerFactory.getLogger(BackendClient.class);

+

+private Routing routing;

+

+private TDorisExternalService.Client client;

+private TTransport transport;

+

+private boolean isConnected = false;

+private final int retries;

+private final int socketTimeout;

+private final int connectTimeout;

+

+public BackendClient(Routing routing, Settings settings) throws

ConnectedFailedException {

+this.routing = routing;

+this.connectTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_DEFAULT);

+this.socketTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_DEFAULT);

+this.retries =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_RETRIES,

+ConfigurationOptions.DORIS_REQUEST_RETRIES_DEFAULT);

+logger.trace("connect timeout set to '{}'.", this.connectTimeout);

Review comment:

Merge these trace logs into one.
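
A rough sketch of what the merged message could look like. The class and method names here are hypothetical, and plain string concatenation is used only to keep the example free of dependencies. In the connector itself this would presumably be a single slf4j `logger.trace(...)` call with three `{}` placeholders:

```java
// Hypothetical sketch: builds the one message that would replace the three
// separate trace calls in the BackendClient constructor. The wording of each
// fragment is taken from the original log statements in the diff.
final class MergedTraceSketch {
    static String settingsSummary(int connectTimeout, int socketTimeout, int retries) {
        return "connect timeout set to '" + connectTimeout
                + "', socket timeout set to '" + socketTimeout
                + "', retries set to '" + retries + "'.";
    }
}
```

With slf4j the same text could be logged directly as `logger.trace("connect timeout set to '{}', socket timeout set to '{}', retries set to '{}'.", connectTimeout, socketTimeout, retries)`, which avoids building the string when trace logging is disabled.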

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add

spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348850825

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/backend/BackendClient.java

##

@@ -0,0 +1,219 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.backend;

+

+import org.apache.doris.spark.cfg.ConfigurationOptions;

+import org.apache.doris.spark.exception.ConnectedFailedException;

+import org.apache.doris.spark.util.ErrorMessages;

+import org.apache.doris.spark.cfg.Settings;

+import org.apache.doris.spark.serialization.Routing;

+import org.apache.doris.thrift.TDorisExternalService;

+import org.apache.doris.thrift.TScanBatchResult;

+import org.apache.doris.thrift.TScanCloseParams;

+import org.apache.doris.thrift.TScanCloseResult;

+import org.apache.doris.thrift.TScanNextBatchParams;

+import org.apache.doris.thrift.TScanOpenParams;

+import org.apache.doris.thrift.TScanOpenResult;

+import org.apache.doris.thrift.TStatusCode;

+import org.apache.thrift.TException;

+import org.apache.thrift.protocol.TBinaryProtocol;

+import org.apache.thrift.protocol.TProtocol;

+import org.apache.thrift.transport.TSocket;

+import org.apache.thrift.transport.TTransport;

+import org.apache.thrift.transport.TTransportException;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+/**

+ * Client to request Doris BE

+ */

+public class BackendClient {

+private static Logger logger =

LoggerFactory.getLogger(BackendClient.class);

+

+private Routing routing;

+

+private TDorisExternalService.Client client;

+private TTransport transport;

+

+private boolean isConnected = false;

+private final int retries;

+private final int socketTimeout;

+private final int connectTimeout;

+

+public BackendClient(Routing routing, Settings settings) throws

ConnectedFailedException {

+this.routing = routing;

+this.connectTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_CONNECT_TIMEOUT_DEFAULT);

+this.socketTimeout =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_MS,

+ConfigurationOptions.DORIS_REQUEST_READ_TIMEOUT_DEFAULT);

+this.retries =

settings.getIntegerProperty(ConfigurationOptions.DORIS_REQUEST_RETRIES,

+ConfigurationOptions.DORIS_REQUEST_RETRIES_DEFAULT);

+logger.trace("connect timeout set to '{}'.", this.connectTimeout);

+logger.trace("socket timeout set to '{}'.", this.socketTimeout);

+logger.trace("retries set to '{}'.", this.retries);

+open();

+}

+

+private void open() throws ConnectedFailedException {

+logger.trace("Open client to Doris BE '{}'.", routing);

+TException ex = null;

+for (int attempt = 0; !isConnected && attempt < retries; ++attempt) {

+logger.debug("Attempt {} to connect {}.", attempt, routing);

+TBinaryProtocol.Factory factory = new TBinaryProtocol.Factory();

+transport = new TSocket(routing.getHost(), routing.getPort(),

socketTimeout, connectTimeout);

+TProtocol protocol = factory.getProtocol(transport);

+client = new TDorisExternalService.Client(protocol);

+try {

+logger.trace("Connect status before open transport to {} is '{}'.", routing, isConnected);

+if (!transport.isOpen()) {

+transport.open();

+isConnected = true;

+}

+} catch (TTransportException e) {

+logger.warn(ErrorMessages.CONNECT_FAILED_MESSAGE, routing, e);

+ex = e;

+}

+if (isConnected) {

+logger.info("Success connect to {}.", routing);

+break;

+}

+}

+if (!isConnected) {

+logger.error(ErrorMessages.CONNECT_FAILED_MESSAGE, routing);

+throw new ConnectedFailedException(routing.toString(), ex);

+}

+}

+

+private void close() {

+

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add

spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348853199

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/rest/RestService.java

##

@@ -0,0 +1,462 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.rest;

+

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FENODES;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FILTER_QUERY;

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_READ_FIELD;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_REQUEST_AUTH_PASSWORD;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_REQUEST_AUTH_USER;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE_DEFAULT;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLET_SIZE_MIN;

+import static

org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_TABLE_IDENTIFIER;

+import static org.apache.doris.spark.util.ErrorMessages.CONNECT_FAILED_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.ILLEGAL_ARGUMENT_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.PARSE_NUMBER_FAILED_MESSAGE;

+import static

org.apache.doris.spark.util.ErrorMessages.SHOULD_NOT_HAPPEN_MESSAGE;

+

+import java.io.IOException;

+import java.io.Serializable;

+import java.nio.charset.StandardCharsets;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.doris.spark.cfg.ConfigurationOptions;

+import org.apache.doris.spark.cfg.Settings;

+import org.apache.doris.spark.exception.ConnectedFailedException;

+import org.apache.doris.spark.exception.DorisException;

+import org.apache.doris.spark.exception.IllegalArgumentException;

+import org.apache.doris.spark.exception.ShouldNeverHappenException;

+import org.apache.doris.spark.rest.models.QueryPlan;

+import org.apache.doris.spark.rest.models.Schema;

+import org.apache.doris.spark.rest.models.Tablet;

+import org.apache.http.HttpStatus;

+import org.apache.http.auth.AuthScope;

+import org.apache.http.auth.UsernamePasswordCredentials;

+import org.apache.http.client.CredentialsProvider;

+import org.apache.http.client.config.RequestConfig;

+import org.apache.http.client.methods.CloseableHttpResponse;

+import org.apache.http.client.methods.HttpGet;

+import org.apache.http.client.methods.HttpPost;

+import org.apache.http.client.methods.HttpRequestBase;

+import org.apache.http.client.protocol.HttpClientContext;

+import org.apache.http.entity.StringEntity;

+import org.apache.http.impl.client.BasicCredentialsProvider;

+import org.apache.http.impl.client.CloseableHttpClient;

+import org.apache.http.impl.client.HttpClients;

+import org.apache.http.util.EntityUtils;

+import org.codehaus.jackson.JsonParseException;

+import org.codehaus.jackson.map.JsonMappingException;

+import org.codehaus.jackson.map.ObjectMapper;

+import org.slf4j.Logger;

+

+import com.google.common.annotations.VisibleForTesting;

+

+/**

+ * Service for communicate with Doris FE.

+ */

+public class RestService implements Serializable {

+public final static int REST_RESPONSE_STATUS_OK = 200;

+private static final String API_PREFIX = "/api";

+private static final String SCHEMA = "_schema";

+private static final String QUERY_PLAN = "_query_plan";

+

+/**

+ * send request to Doris FE and get response json string.

+ * @param cfg configuration of request

+ * @param request {@link HttpRequestBase} real request

+ * @param logger {@link Logger}

+ * @return Doris FE response in json string

+ * @throws ConnectedFailedException throw when cannot connect to Doris FE

+ */

+private static String send(Settings cfg, HttpRequestBase request, Logger

logger) throws

+ConnectedFailedException {

[GitHub] [incubator-doris] wuyunfeng commented on a change in pull request #2228: add spark-doris-connector extension

wuyunfeng commented on a change in pull request #2228: add

spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348851944

##

File path:

extension/spark-doris-connector/src/main/java/org/apache/doris/spark/cfg/ConfigurationOptions.java

##

@@ -0,0 +1,55 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.cfg;

+

+public interface ConfigurationOptions {

+// doris fe node address

+String DORIS_FENODES = "doris.fenodes";

+

+String DORIS_DEFAULT_CLUSTER = "default_cluster";

+

+String TABLE_IDENTIFIER = "table.identifier";

+String DORIS_TABLE_IDENTIFIER = "doris.table.identifier";

+String DORIS_READ_FIELD = "doris.read.field";

+String DORIS_FILTER_QUERY = "doris.filter.query";

+String DORIS_FILTER_QUERY_IN_VALUE_MAX = "doris.filter.query.in.value.max";

+int DORIS_FILTER_QUERY_IN_VALUE_UPPER_LIMIT = 1;

+

+String DORIS_USER = "doris.user";

+String DORIS_REQUEST_AUTH_USER = "doris.request.auth.user";

+// use password to save doris.request.auth.password

+// reuse credentials mask method in spark ExternalCatalogUtils#maskCredentials

+String DORIS_PASSWORD = "doris.password";

+String DORIS_REQUEST_AUTH_PASSWORD = "doris.request.auth.password";

+

+String DORIS_REQUEST_RETRIES = "doris.request.retries";

+String DORIS_REQUEST_CONNECT_TIMEOUT_MS =

"doris.request.connect.timeout.ms";

+String DORIS_REQUEST_READ_TIMEOUT_MS = "doris.request.read.timeout.ms";

+int DORIS_REQUEST_RETRIES_DEFAULT = 3;

+int DORIS_REQUEST_CONNECT_TIMEOUT_DEFAULT = 30 * 1000;

+int DORIS_REQUEST_READ_TIMEOUT_DEFAULT = 30 * 1000;

Review comment:

Same as above.

[GitHub] [incubator-doris] lingbin opened a new pull request #2257: Add schema hash to tablet proc info

lingbin opened a new pull request #2257: Add schema hash to tablet proc info

URL: https://github.com/apache/incubator-doris/pull/2257

#2249

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties

for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348430812

##

File path: be/src/olap/options.cpp

##

@@ -29,36 +29,96 @@ namespace doris {

// compatible with old multi path configuration:

// /path1,2014;/path2,2048

-OLAPStatus parse_conf_store_paths(

-const std::string& config_path,

-std::vector<StorePath>* paths) {

+OLAPStatus parse_root_path(const std::string& root_path, StorePath* path) {

+std::vector<std::string> tmp_vec;

+boost::split(tmp_vec, root_path, boost::is_any_of(","),

+ boost::token_compress_on);

+

+// parse root path name

+boost::trim(tmp_vec[0]);

+tmp_vec[0].erase(tmp_vec[0].find_last_not_of("/") + 1);

+if (tmp_vec[0].empty() || tmp_vec[0][0] != '/') {

+LOG(WARNING) << "invalid store path. path=" << tmp_vec[0];

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+path->path = tmp_vec[0];

+

+// parse root path capacity and storage medium

+std::string capacity_str, medium_str;

+

+boost::filesystem::path boost_path = tmp_vec[0];

+std::string extension =

+boost::filesystem::canonical(boost_path).extension().string();

+if (!extension.empty()) {

+medium_str = extension.substr(1);

+}

+

+for (int i = 1; i < tmp_vec.size(); i++) {

+// <property>:<value> or <value>

+std::string property, value;

+std::size_t found = tmp_vec[i].find(':');

+if (found != std::string::npos) {

+property = boost::trim_copy(tmp_vec[i].substr(0, found));

+value = boost::trim_copy(tmp_vec[i].substr(found + 1));

+} else {

+// only supports setting capacity

+property = "capacity";

+value = boost::trim_copy(tmp_vec[i]);

+}

+if (property == "capacity") {

Review comment:

```suggestion

if (boost::iequals(property, "capacity")) {

```

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties

for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348428536

##

File path: be/src/olap/options.cpp

##

@@ -29,36 +29,96 @@ namespace doris {

// compatible with old multi path configuration:

// /path1,2014;/path2,2048

-OLAPStatus parse_conf_store_paths(

-const std::string& config_path,

-std::vector<StorePath>* paths) {

+OLAPStatus parse_root_path(const std::string& root_path, StorePath* path) {

+std::vector<std::string> tmp_vec;

+boost::split(tmp_vec, root_path, boost::is_any_of(","),

+ boost::token_compress_on);

+

+// parse root path name

+boost::trim(tmp_vec[0]);

+tmp_vec[0].erase(tmp_vec[0].find_last_not_of("/") + 1);

+if (tmp_vec[0].empty() || tmp_vec[0][0] != '/') {

+LOG(WARNING) << "invalid store path. path=" << tmp_vec[0];

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+path->path = tmp_vec[0];

+

+// parse root path capacity and storage medium

+std::string capacity_str, medium_str;

+

+boost::filesystem::path boost_path = tmp_vec[0];

+std::string extension =

+boost::filesystem::canonical(boost_path).extension().string();

+if (!extension.empty()) {

+medium_str = extension.substr(1);

+}

+

+for (int i = 1; i < tmp_vec.size(); i++) {

+// <property>:<value> or <value>

+std::string property, value;

+std::size_t found = tmp_vec[i].find(':');

+if (found != std::string::npos) {

+property = boost::trim_copy(tmp_vec[i].substr(0, found));

+value = boost::trim_copy(tmp_vec[i].substr(found + 1));

+} else {

+// only supports setting capacity

+property = "capacity";

+value = boost::trim_copy(tmp_vec[i]);

+}

+if (property == "capacity") {

+capacity_str = value;

+} else if (property == "medium") {

+// property 'medium' has a higher priority than the extension of

+// path, so it can override medium_str

+medium_str = value;

+} else {

+LOG(WARNING) << "invalid property of store path, " << property;

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+}

+

+path->capacity_bytes = -1;

+if (!capacity_str.empty()) {

+if (!valid_signed_number(capacity_str) ||

+strtol(capacity_str.c_str(), NULL, 10) < 0) {

+LOG(WARNING) << "invalid capacity of store path, capacity="

+ << capacity_str;

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+path->capacity_bytes =

+strtol(capacity_str.c_str(), NULL, 10) * GB_EXCHANGE_BYTE;

+}

+

+path->storage_medium = TStorageMedium::HDD;

+if (!medium_str.empty()) {

+if (boost::iequals(medium_str, "ssd")) {

+path->storage_medium = TStorageMedium::SSD;

+} else if (boost::iequals(medium_str, "hdd")) {

+path->storage_medium = TStorageMedium::HDD;

+} else {

+LOG(WARNING) << "invalid storage medium. medium=" << medium_str;

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+}

+return OLAP_SUCCESS;

+}

+

+OLAPStatus parse_conf_store_paths(const std::string& config_path,

 std::vector<StorePath>* paths) {

try {

std::vector<std::string> item_vec;

-boost::split(item_vec, config_path, boost::is_any_of(";"),

boost::token_compress_on);

+boost::split(item_vec, config_path, boost::is_any_of(";"),

+ boost::token_compress_on);

for (auto& item : item_vec) {

-std::vector<std::string> tmp_vec;

-boost::split(tmp_vec, item, boost::is_any_of(","),

boost::token_compress_on);

-

-// parse root path name

-boost::trim(tmp_vec[0]);

-tmp_vec[0].erase(tmp_vec[0].find_last_not_of("/") + 1);

-if (tmp_vec[0].empty() || tmp_vec[0][0] != '/') {

-LOG(WARNING) << "invalid store path. path=" << tmp_vec[0];

+StorePath path;

+auto res = parse_root_path(item, &path);

+if (res != OLAP_SUCCESS) {

+LOG(WARNING) << "get config store path failed. path="

+ << config_path;

return OLAP_ERR_INPUT_PARAMETER_ERROR;

}

-

-// parse root path capacity

-int64_t capacity_bytes = -1;

-if (tmp_vec.size() > 1) {

-if (!valid_signed_number(tmp_vec[1])

-|| strtol(tmp_vec[1].c_str(), NULL, 10) < 0) {

-LOG(WARNING) << "invalid capacity of store path, capacity=" << tmp_vec[1];

-return OLAP_ERR_INPUT_PARAMETER_ERROR;

-}

-capacity_bytes = strtol(tmp_vec[1].c_str(), NULL, 10) *

GB_EXCHANGE_BYTE;

-}

-

-paths->emplace_back(tmp_vec[0], capacity_bytes);

+

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties

for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348426225

##

File path: be/src/olap/options.h

##

@@ -26,14 +26,25 @@

namespace doris {

struct StorePath {

-StorePath() : capacity_bytes(-1) { }

-StorePath(const std::string& path_, int64_t capacity_bytes_)

-: path(path_), capacity_bytes(capacity_bytes_) { }

+StorePath() : capacity_bytes(-1), storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string &path_, int64_t capacity_bytes_)

Review comment:

```suggestion

StorePath(const std::string& path_, int64_t capacity_bytes_)

```

Doris prefers `const std::string& path` to `const std::string &path`.

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties

for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348430521

##

File path: be/src/olap/options.cpp

##

@@ -29,36 +29,96 @@ namespace doris {

// compatible with old multi path configuration:

// /path1,2014;/path2,2048

-OLAPStatus parse_conf_store_paths(

-const std::string& config_path,

-std::vector<StorePath>* paths) {

+OLAPStatus parse_root_path(const std::string& root_path, StorePath* path) {

+std::vector<std::string> tmp_vec;

+boost::split(tmp_vec, root_path, boost::is_any_of(","),

+ boost::token_compress_on);

+

+// parse root path name

+boost::trim(tmp_vec[0]);

+tmp_vec[0].erase(tmp_vec[0].find_last_not_of("/") + 1);

+if (tmp_vec[0].empty() || tmp_vec[0][0] != '/') {

+LOG(WARNING) << "invalid store path. path=" << tmp_vec[0];

+return OLAP_ERR_INPUT_PARAMETER_ERROR;

+}

+path->path = tmp_vec[0];

+

+// parse root path capacity and storage medium

+std::string capacity_str, medium_str;

+

+boost::filesystem::path boost_path = tmp_vec[0];

Review comment:

This operation should be done in a try-catch block to avoid an uncaught exception.

[GitHub] [incubator-doris] imay commented on a change in pull request #2235: Support setting properties for storage_root_path

imay commented on a change in pull request #2235: Support setting properties for storage_root_path

URL: https://github.com/apache/incubator-doris/pull/2235#discussion_r348429090

##

File path: be/src/olap/options.h

##

@@ -26,14 +26,25 @@

namespace doris {

struct StorePath {

-StorePath() : capacity_bytes(-1) { }

-StorePath(const std::string& path_, int64_t capacity_bytes_)

-: path(path_), capacity_bytes(capacity_bytes_) { }

+StorePath() : capacity_bytes(-1), storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string &path_, int64_t capacity_bytes_)

+: path(path_),

+ capacity_bytes(capacity_bytes_),

+ storage_medium(TStorageMedium::HDD) { }

+StorePath(const std::string &path_, int64_t capacity_bytes_,

+ TStorageMedium::type storage_medium_)

+: path(path_),

+ capacity_bytes(capacity_bytes_),

+ storage_medium(storage_medium_) { }

std::string path;

int64_t capacity_bytes;

+TStorageMedium::type storage_medium;

};

-OLAPStatus parse_conf_store_paths(const std::string& config_path,

std::vector<StorePath>* path);

+OLAPStatus parse_root_path(const std::string &root_path, StorePath *path);

Review comment:

It would be better to add a comment to this function so that others know what it does.

```suggestion

OLAPStatus parse_root_path(const std::string& root_path, StorePath* path);

```
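
As an illustration of the kind of comment requested, here is a simplified, documented sketch. It covers only the old `/path,capacity` entry form mentioned in the diff comment. `RootPathSketch` and `parse_root_path_sketch` are hypothetical names, the `bool` return replaces `OLAPStatus`, and the capacity is kept as the raw number because the quoted hunks do not state its unit.

```cpp
#include <cassert>
#include <cstdint>
#include <exception>
#include <string>

// Simplified stand-in for the StorePath struct in the diff (assumption).
struct RootPathSketch {
    std::string path;
    int64_t capacity = -1;  // -1 means "no capacity given"
};

// Parses one root path entry of the form "/path" or "/path,capacity".
//
// Surrounding blanks and trailing '/' characters are removed from the path.
// The entry is rejected if the resulting path is empty or not absolute, or
// if the capacity is not a non-negative integer.
// Returns true on success; on failure *out is left unchanged.
bool parse_root_path_sketch(const std::string& entry, RootPathSketch* out) {
    assert(out != nullptr);
    const std::size_t comma = entry.find(',');
    std::string path = entry.substr(0, comma);

    const char* blanks = " \t";
    path.erase(0, path.find_first_not_of(blanks));  // trim left
    path.erase(path.find_last_not_of(blanks) + 1);  // trim right
    path.erase(path.find_last_not_of('/') + 1);     // drop trailing slashes
    if (path.empty() || path[0] != '/') {
        return false;
    }

    int64_t capacity = -1;
    if (comma != std::string::npos) {
        const std::string capacity_str = entry.substr(comma + 1);
        try {
            std::size_t used = 0;
            capacity = std::stoll(capacity_str, &used);
            if (used != capacity_str.size() || capacity < 0) {
                return false;
            }
        } catch (const std::exception&) {
            return false;  // not a number, or out of range
        }
    }

    out->path = path;
    out->capacity = capacity;
    return true;
}
```

Splitting a multi-path configuration on `;` is left to the caller, which would invoke this function once per entry.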

[GitHub] [incubator-doris] morningman commented on issue #2242: Is that will be better create table with column default enable to be null?

morningman commented on issue #2242: Is that will be better create table with column default enable to be null? URL: https://github.com/apache/incubator-doris/issues/2242#issuecomment-555959059 I am not sure it is a good idea to change a system's default behavior back and forth. We changed the default column type to NOT NULL not long ago (which, I admit, was not a good change, but we did it for performance). Changing it back now will confuse all current users...

[GitHub] [incubator-doris] imay merged pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error

imay merged pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error URL: https://github.com/apache/incubator-doris/pull/2254

[incubator-doris] branch master updated (d72fbdf -> 88236de)

This is an automated email from the ASF dual-hosted git repository. zhaoc pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/incubator-doris.git. from d72fbdf Support bitmap index build (#2050) add 88236de Fix bug for showing columns from non exist table doesn't prompt error (#2254) No new revisions were added by this update. Summary of changes: fe/src/main/java/org/apache/doris/qe/ShowExecutor.java | 4 1 file changed, 4 insertions(+)

[GitHub] [incubator-doris] vinson0526 closed pull request #2228: add spark-doris-connector extension

vinson0526 closed pull request #2228: add spark-doris-connector extension URL: https://github.com/apache/incubator-doris/pull/2228

[GitHub] [incubator-doris] vinson0526 opened a new pull request #2228: add spark-doris-connector extension

vinson0526 opened a new pull request #2228: add spark-doris-connector extension URL: https://github.com/apache/incubator-doris/pull/2228 Spark-Doris-Connector for Spark to query data from Doris. More info in: https://github.com/apache/incubator-doris/issues/1525

[GitHub] [incubator-doris] imay closed pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error

imay closed pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error URL: https://github.com/apache/incubator-doris/pull/2254

[GitHub] [incubator-doris] caiconghui opened a new pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error

caiconghui opened a new pull request #2254: Fix bug for showing columns from non exist table doesn't prompt error URL: https://github.com/apache/incubator-doris/pull/2254 When we execute a SHOW COLUMNS statement for a nonexistent table, Doris returns nothing, which may confuse users.

[GitHub] [incubator-doris] vinson0526 commented on a change in pull request #2228: add spark-doris-connector extension

vinson0526 commented on a change in pull request #2228: add spark-doris-connector extension

URL: https://github.com/apache/incubator-doris/pull/2228#discussion_r348347413

##

File path: extension/spark-doris-connector/src/main/java/org/apache/doris/spark/rest/RestService.java

##

@@ -0,0 +1,464 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing,

+// software distributed under the License is distributed on an

+// "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+// KIND, either express or implied. See the License for the

+// specific language governing permissions and limitations

+// under the License.

+

+package org.apache.doris.spark.rest;

+

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FENODES;

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_FILTER_QUERY;

+import static org.apache.doris.spark.cfg.ConfigurationOptions.DORIS_READ_FIELD;

+import static