[GitHub] [hudi] prashantwason commented on a change in pull request #3836: [HUDI-2591] Bootstrap metadata table only if upgrade / downgrade is not required.

prashantwason commented on a change in pull request #3836:

URL: https://github.com/apache/hudi/pull/3836#discussion_r745363631

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java

##

@@ -217,8 +222,10 @@ public HoodieBackedTableMetadata metadata() {

* Initialize the metadata table if it does not exist.

*

* If the metadata table did not exist, then file and partition listing is

used to bootstrap the table.

+ * @param instantInProgressTimestamp Timestap of an instant in progress on

the dataset. This instant is ignored

+ * while deciding to bootstrap the

metadata table.

*/

- protected abstract void initialize(HoodieEngineContext engineContext);

+ protected abstract void initialize(HoodieEngineContext engineContext,

Option instantInProgressTimestamp);

Review comment:

sure. renamed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] prashantwason commented on a change in pull request #3836: [HUDI-2591] Bootstrap metadata table only if upgrade / downgrade is not required.

prashantwason commented on a change in pull request #3836:

URL: https://github.com/apache/hudi/pull/3836#discussion_r745360466

##

File path:

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

##

@@ -83,22 +83,31 @@ public SparkRDDWriteClient(HoodieEngineContext context,

HoodieWriteConfig client

@Deprecated

public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig

writeConfig, boolean rollbackPending) {

-super(context, writeConfig);

+this(context, writeConfig, Option.empty());

}

@Deprecated

public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig

writeConfig, boolean rollbackPending,

Option timelineService) {

-super(context, writeConfig, timelineService);

+this(context, writeConfig, timelineService);

}

public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig

writeConfig,

Option timelineService) {

super(context, writeConfig, timelineService);

+bootstrapMetadataTable(Option.empty());

+ }

+

+ private void bootstrapMetadataTable(Option

instantInProgressTimestamp) {

Review comment:

renamed to initializeMetadataTable


[GitHub] [hudi] hudi-bot commented on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot commented on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963890670

## CI report:

* eafa89d286b157d626d93704d8d549801b41ba68 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3239)

Bot commands

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot removed a comment on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot removed a comment on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963866868

## CI report:

* 2ceab6429c3f1fec97fe5af18ca2494f5dcc3e7e Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3224)
* eafa89d286b157d626d93704d8d549801b41ba68 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3239)

[jira] [Commented] (HUDI-2715) The BitCaskDiskMap iterator may cause memory leak

[ https://issues.apache.org/jira/browse/HUDI-2715?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17440948#comment-17440948 ]

Danny Chen commented on HUDI-2715:

--

Fixed via master branch: e057a10499729301ebe96d7cd54902b113af1811

> The BitCaskDiskMap iterator may cause memory leak
> -
>
> Key: HUDI-2715
> URL: https://issues.apache.org/jira/browse/HUDI-2715
> Project: Apache Hudi
> Issue Type: Task
> Components: Flink Integration
> Reporter: Danny Chen
> Assignee: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.10.0

--

This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Resolved] (HUDI-2715) The BitCaskDiskMap iterator may cause memory leak

[ https://issues.apache.org/jira/browse/HUDI-2715?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen resolved HUDI-2715.

--

> The BitCaskDiskMap iterator may cause memory leak
> -
>
> Key: HUDI-2715
> URL: https://issues.apache.org/jira/browse/HUDI-2715
> Project: Apache Hudi
> Issue Type: Task
> Components: Flink Integration
> Reporter: Danny Chen
> Assignee: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.10.0

[hudi] branch master updated: [HUDI-2715] The BitCaskDiskMap iterator may cause memory leak (#3951)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new e057a10 [HUDI-2715] The BitCaskDiskMap iterator may cause memory leak

(#3951)

e057a10 is described below

commit e057a10499729301ebe96d7cd54902b113af1811

Author: Danny Chan

AuthorDate: Tue Nov 9 15:40:00 2021 +0800

[HUDI-2715] The BitCaskDiskMap iterator may cause memory leak (#3951)

---

.../hudi/table/action/compact/HoodieCompactor.java | 3 ++-

.../apache/hudi/common/util/ClosableIterator.java | 31 ++

.../common/util/collection/BitCaskDiskMap.java | 10 ++-

.../common/util/collection/LazyFileIterable.java | 7 ++---

.../table/format/mor/MergeOnReadInputFormat.java | 7 +

5 files changed, 47 insertions(+), 11 deletions(-)

diff --git

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/compact/HoodieCompactor.java

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/compact/HoodieCompactor.java

index ad05876..419f88e 100644

---

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/compact/HoodieCompactor.java

+++

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/compact/HoodieCompactor.java

@@ -181,6 +181,7 @@ public abstract class HoodieCompactor im

.withBitCaskDiskMapCompressionEnabled(config.getCommonConfig().isBitCaskDiskMapCompressionEnabled())

.build();

if (!scanner.iterator().hasNext()) {

+ scanner.close();

return new ArrayList<>();

}

@@ -198,6 +199,7 @@ public abstract class HoodieCompactor im

result = compactionHandler.handleInsert(instantTime,

operation.getPartitionPath(), operation.getFileId(),

scanner.getRecords());

}

+scanner.close();

Iterable> resultIterable = () -> result;

return StreamSupport.stream(resultIterable.spliterator(),

false).flatMap(Collection::stream).peek(s -> {

s.getStat().setTotalUpdatedRecordsCompacted(scanner.getNumMergedRecordsInLog());

@@ -212,7 +214,6 @@ public abstract class HoodieCompactor im

RuntimeStats runtimeStats = new RuntimeStats();

runtimeStats.setTotalScanTime(scanner.getTotalTimeTakenToReadAndMergeBlocks());

s.getStat().setRuntimeStats(runtimeStats);

- scanner.close();

}).collect(toList());

}

diff --git

a/hudi-common/src/main/java/org/apache/hudi/common/util/ClosableIterator.java

b/hudi-common/src/main/java/org/apache/hudi/common/util/ClosableIterator.java

new file mode 100644

index 000..9e1d0c2

--- /dev/null

+++

b/hudi-common/src/main/java/org/apache/hudi/common/util/ClosableIterator.java

@@ -0,0 +1,31 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common.util;

+

+import java.util.Iterator;

+

+/**

+ * An iterator that give a chance to release resources.

+ *

+ * @param The return type

+ */

+public interface ClosableIterator extends Iterator, AutoCloseable {

+ @Override

+ void close(); // override to not throw exception

+}

diff --git

a/hudi-common/src/main/java/org/apache/hudi/common/util/collection/BitCaskDiskMap.java

b/hudi-common/src/main/java/org/apache/hudi/common/util/collection/BitCaskDiskMap.java

index 5f78fa3..289901d 100644

---

a/hudi-common/src/main/java/org/apache/hudi/common/util/collection/BitCaskDiskMap.java

+++

b/hudi-common/src/main/java/org/apache/hudi/common/util/collection/BitCaskDiskMap.java

@@ -20,6 +20,7 @@ package org.apache.hudi.common.util.collection;

import org.apache.hudi.common.fs.SizeAwareDataOutputStream;

import org.apache.hudi.common.util.BufferedRandomAccessFile;

+import org.apache.hudi.common.util.ClosableIterator;

import org.apache.hudi.common.util.SerializationUtils;

import org.apache.hudi.common.util.SpillableMapUtils;

import org.apache.hudi.exception.HoodieException;

@@ -38,9 +39,11 @@ import java.io.InputStream;

import java.io.RandomAccessFile;

import java.io.Serializable;

import java.util.AbstractMap;

+import java.util.ArrayList;

import java.util.Collection;

import java.util.HashSet;
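
The `ClosableIterator` interface added in this commit narrows `close()` so that it declares no checked exception, which lets callers use try-with-resources without a `catch` block. The following is only a minimal sketch of that usage pattern, not the code from the commit: the local `ClosableIterator<T>` stand-in (its type parameter is an assumption, since generics were stripped from the archived diff), `TrackingIterator`, and `ClosableIteratorDemo` are all hypothetical names introduced for illustration.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Local stand-in mirroring the interface in the diff above. The type parameter
// <T> is an assumption: generics were lost in the archived text.
interface ClosableIterator<T> extends Iterator<T>, AutoCloseable {
    @Override
    void close(); // narrowed so that callers need not handle a checked exception
}

// Hypothetical iterator that records whether its resource was released.
final class TrackingIterator implements ClosableIterator<String> {
    private final Iterator<String> delegate;
    boolean closed = false;

    TrackingIterator(List<String> values) {
        this.delegate = values.iterator();
    }

    @Override
    public boolean hasNext() {
        return delegate.hasNext();
    }

    @Override
    public String next() {
        return delegate.next();
    }

    @Override
    public void close() {
        closed = true; // a real implementation would release file handles here
    }
}

final class ClosableIteratorDemo {
    // Drains the iterator; try-with-resources calls close() even on early exit.
    static int countAndClose(TrackingIterator it) {
        int n = 0;
        try (TrackingIterator resource = it) {
            while (resource.hasNext()) {
                resource.next();
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        TrackingIterator it = new TrackingIterator(Arrays.asList("a", "b", "c"));
        System.out.println(countAndClose(it) + " records, closed=" + it.closed);
    }
}
```

The same idea appears to motivate the `HoodieCompactor` change above, where `scanner.close()` is moved out of the lazily evaluated stream so that it runs on every path.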

[GitHub] [hudi] danny0405 merged pull request #3951: [HUDI-2715] The BitCaskDiskMap iterator may cause memory leak

danny0405 merged pull request #3951:

URL: https://github.com/apache/hudi/pull/3951

[GitHub] [hudi] xiarixiaoyao commented on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

xiarixiaoyao commented on pull request #3952:

URL: https://github.com/apache/hudi/pull/3952#issuecomment-963871181

@vinothchandar The Hilbert curve has better data aggregation characteristics than the Z curve. However, the mapping process of the Hilbert curve is complex, while that of the Z curve is simple. High-order Hilbert computation is very complex and time-consuming, and Z-order is used more in spatio-temporal databases. I will paste perf test results later.
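
To make the "simple mapping" side of that comparison concrete, here is a minimal sketch of a two-dimensional Z-order (Morton) encoding by bit interleaving. It is an illustration only and not the implementation from the pull request; the class name `ZOrderSketch` and the 16-bit coordinate width are assumptions. A Hilbert mapping would additionally have to track a rotation or reflection state at every level, which is where its extra cost comes from.

```java
// Minimal sketch of a 2-D Z-order (Morton) encoding; not Hudi's implementation.
final class ZOrderSketch {
    // Interleaves the low 16 bits of x and y:
    // bit i of x lands on bit 2i, bit i of y lands on bit 2i + 1.
    static int interleave(int x, int y) {
        int z = 0;
        for (int i = 0; i < 16; i++) {
            z |= ((x >>> i) & 1) << (2 * i);
            z |= ((y >>> i) & 1) << (2 * i + 1);
        }
        return z;
    }

    public static void main(String[] args) {
        // x = 3 (binary 011), y = 5 (binary 101) -> binary 100111
        System.out.println(interleave(3, 5));
    }
}
```

Sorting records by the interleaved value keeps points that are close in both dimensions reasonably close in the sort order, at the cost of occasional large jumps that the Hilbert curve avoids.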

[GitHub] [hudi] hudi-bot removed a comment on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot removed a comment on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963865781

## CI report:

* 2ceab6429c3f1fec97fe5af18ca2494f5dcc3e7e Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3224)
* eafa89d286b157d626d93704d8d549801b41ba68 UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot commented on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963866868

## CI report:

* 2ceab6429c3f1fec97fe5af18ca2494f5dcc3e7e Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3224)
* eafa89d286b157d626d93704d8d549801b41ba68 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3239)

[GitHub] [hudi] hudi-bot removed a comment on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot removed a comment on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963514430

## CI report:

* 2ceab6429c3f1fec97fe5af18ca2494f5dcc3e7e Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3224)

[GitHub] [hudi] hudi-bot commented on pull request #3671: [HUDI-2418] add HiveSchemaProvider

hudi-bot commented on pull request #3671:

URL: https://github.com/apache/hudi/pull/3671#issuecomment-963865781

## CI report:

* 2ceab6429c3f1fec97fe5af18ca2494f5dcc3e7e Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3224)
* eafa89d286b157d626d93704d8d549801b41ba68 UNKNOWN

[GitHub] [hudi] YannByron commented on a change in pull request #3936: [WIP][HUDI-2706] refactor spark-sql to make consistent with DataFrame api

YannByron commented on a change in pull request #3936:

URL: https://github.com/apache/hudi/pull/3936#discussion_r745326932

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/CreateHoodieTableAsSelectCommand.scala

##

@@ -73,9 +75,10 @@ case class CreateHoodieTableAsSelectCommand(

// Execute the insert query

try {

+ val tblProperties = table.storage.properties ++ table.properties

Review comment:

good idea.


[GitHub] [hudi] hudi-bot commented on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot commented on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-963860479

## CI report:

* 7983f30cba280fd58848bd363bdf67a7f1467b26 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3238)

[GitHub] [hudi] hudi-bot removed a comment on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot removed a comment on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-963838745

## CI report:

* 4ed0cb6d22e74ef6185bedda83b6f547839de05b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3040)
* 7983f30cba280fd58848bd363bdf67a7f1467b26 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3238)

[GitHub] [hudi] YannByron commented on a change in pull request #3936: [WIP][HUDI-2706] refactor spark-sql to make consistent with DataFrame api

YannByron commented on a change in pull request #3936:

URL: https://github.com/apache/hudi/pull/3936#discussion_r745325819

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/AlterHoodieTableDropPartitionCommand.scala

##

@@ -104,10 +104,6 @@ extends RunnableCommand {

PARTITIONPATH_FIELD.key -> tableConfig.getPartitionFieldProp

)

}

-

-val parameters = HoodieWriterUtils.parametersWithWriteDefaults(optParams)

-val translatedOptions =

DataSourceWriteOptions.translateSqlOptions(parameters)

-translatedOptions

Review comment:

Appending the default parameters and translating the SQL options now
happen in `HoodieSparkSqlWriter.mergeParamsAndGetHoodieConfig`. In the SQL
commands, the raw parameters passed to `HoodieSparkSqlWriter.write` are
used to check for conflicts between parameters.
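
One plausible reading of this comment is that the conflict check should only see options the user actually supplied, so that a filled-in default cannot be mistaken for a conflicting user choice. The sketch below illustrates that idea under stated assumptions: `ConflictCheckSketch` and `findConflicts` are hypothetical names, the option values are made up, and nothing here reflects the actual logic in `HoodieSparkSqlWriter`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: compares raw (user-supplied) parameters with an
// existing table configuration. Not taken from Hudi's code base.
final class ConflictCheckSketch {
    // Returns one message per key that is set on both sides with different values.
    static List<String> findConflicts(Map<String, String> rawParams,
                                      Map<String, String> tableConfig) {
        List<String> conflicts = new ArrayList<>();
        // TreeMap gives a stable, sorted iteration order for the messages.
        for (Map.Entry<String, String> e : new TreeMap<>(rawParams).entrySet()) {
            String existing = tableConfig.get(e.getKey());
            if (existing != null && !existing.equals(e.getValue())) {
                conflicts.add(e.getKey() + ": '" + e.getValue()
                    + "' conflicts with table value '" + existing + "'");
            }
        }
        return conflicts;
    }

    public static void main(String[] args) {
        Map<String, String> tableConfig = new HashMap<>();
        tableConfig.put("hoodie.datasource.write.recordkey.field", "id");

        Map<String, String> rawParams = new HashMap<>();
        rawParams.put("hoodie.datasource.write.recordkey.field", "uuid"); // illustrative value

        System.out.println(findConflicts(rawParams, tableConfig));
    }
}
```

Under this reading, defaults would be merged in only after the check passes.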


[jira] [Commented] (HUDI-2500) Spark datasource delete not working on Spark SQL created table

[

https://issues.apache.org/jira/browse/HUDI-2500?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17440917#comment-17440917

]

Yann Byron commented on HUDI-2500:

--

[~xushiyan]

I reproduced this issue on the master branch.

Running `refresh table` before the last `select` makes it work.

The reason is that SQL executes `refresh` when a delete finishes.

So I think it doesn't need a fix.

> Spark datasource delete not working on Spark SQL created table

> --

>

> Key: HUDI-2500

> URL: https://issues.apache.org/jira/browse/HUDI-2500

> Project: Apache Hudi

> Issue Type: Sub-task

> Components: Spark Integration

>Reporter: Raymond Xu

>Assignee: Yann Byron

>Priority: Blocker

> Labels: sev:critical

> Fix For: 0.10.0

>

>

> Original issue [https://github.com/apache/hudi/issues/3670]

>

> Script to re-produce

> {code:java}

> val sparkSourceTablePath = s"${tmp.getCanonicalPath}/test_spark_table"

> val sparkSourceTableName = "test_spark_table"

> val hudiTablePath = s"${tmp.getCanonicalPath}/test_hudi_table"

> val hudiTableName = "test_hudi_table"

> println("0 - prepare source data")

> spark.createDataFrame(Seq(

> ("100", "2015-01-01", "2015-01-01T13:51:39.340396Z"),

> ("101", "2015-01-01", "2015-01-01T12:14:58.597216Z"),

> ("102", "2015-01-01", "2015-01-01T13:51:40.417052Z"),

> ("103", "2015-01-01", "2015-01-01T13:51:40.519832Z"),

> ("104", "2015-01-02", "2015-01-01T12:15:00.512679Z"),

> ("105", "2015-01-02", "2015-01-01T13:51:42.248818Z")

> )).toDF("id", "creation_date", "last_update_time")

> .withColumn("creation_date", expr("cast(creation_date as date)"))

> .withColumn("id", expr("cast(id as bigint)"))

> .write

> .option("path", sparkSourceTablePath)

> .mode("overwrite")

> .format("parquet")

> .saveAsTable(sparkSourceTableName)

> println("1 - CTAS to load data to Hudi")

> val hudiOptions = Map[String, String](

> HoodieWriteConfig.TBL_NAME.key() -> hudiTableName,

> DataSourceWriteOptions.TABLE_NAME.key() -> hudiTableName,

> DataSourceWriteOptions.TABLE_TYPE.key() -> "COPY_ON_WRITE",

> DataSourceWriteOptions.RECORDKEY_FIELD.key() -> "id",

> DataSourceWriteOptions.KEYGENERATOR_CLASS_NAME.key() ->

> classOf[ComplexKeyGenerator].getCanonicalName,

> DataSourceWriteOptions.PAYLOAD_CLASS_NAME.key() ->

> classOf[DefaultHoodieRecordPayload].getCanonicalName,

> DataSourceWriteOptions.PARTITIONPATH_FIELD.key() -> "creation_date",

> DataSourceWriteOptions.PRECOMBINE_FIELD.key() -> "last_update_time",

> HoodieWriteConfig.INSERT_PARALLELISM_VALUE.key() -> "1",

> HoodieWriteConfig.UPSERT_PARALLELISM_VALUE.key() -> "1",

> HoodieWriteConfig.BULKINSERT_PARALLELISM_VALUE.key() -> "1",

> HoodieWriteConfig.FINALIZE_WRITE_PARALLELISM_VALUE.key() -> "1",

> HoodieWriteConfig.DELETE_PARALLELISM_VALUE.key() -> "1"

> )

> spark.sql(

> s"""create table if not exists $hudiTableName using hudi

> | location '$hudiTablePath'

> | options (

> | type = 'cow',

> | primaryKey = 'id',

> | preCombineField = 'last_update_time'

> | )

> | partitioned by (creation_date)

> | AS

> | select id, last_update_time, creation_date from

> $sparkSourceTableName

> | """.stripMargin)

> println("2 - Hudi table has all records")

> spark.sql(s"select * from $hudiTableName").show(100)

> println("3 - pick 105 to delete")

> val rec105 = spark.sql(s"select * from $hudiTableName where id = 105")

> rec105.show()

> println("4 - issue delete (Spark SQL)")

> spark.sql(s"delete from $hudiTableName where id = 105")

> println("5 - 105 is deleted")

> spark.sql(s"select * from $hudiTableName").show(100)

> println("6 - pick 104 to delete")

> val rec104 = spark.sql(s"select * from $hudiTableName where id = 104")

> rec104.show()

> println("7 - issue delete (DataSource)")

> rec104.write

> .format("hudi")

> .options(hudiOptions)

> .option(DataSourceWriteOptions.OPERATION.key(), "delete")

> .option(DataSourceWriteOptions.PAYLOAD_CLASS_NAME.key(),

> classOf[EmptyHoodieRecordPayload].getCanonicalName)

> .mode(SaveMode.Append)

> .save(hudiTablePath)

> println("8 - 104 should be deleted")

> spark.sql(s"select * from $hudiTableName").show(100)

> {code}


[GitHub] [hudi] hudi-bot removed a comment on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot removed a comment on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-963837867

## CI report:

* 4ed0cb6d22e74ef6185bedda83b6f547839de05b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3040)
* 7983f30cba280fd58848bd363bdf67a7f1467b26 UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot commented on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-963838745

## CI report:

* 4ed0cb6d22e74ef6185bedda83b6f547839de05b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3040)
* 7983f30cba280fd58848bd363bdf67a7f1467b26 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3238)

[GitHub] [hudi] hudi-bot commented on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot commented on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-963837867

## CI report:

* 4ed0cb6d22e74ef6185bedda83b6f547839de05b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3040)
* 7983f30cba280fd58848bd363bdf67a7f1467b26 UNKNOWN

[GitHub] [hudi] hudi-bot removed a comment on pull request #3857: [WIP][HUDI-2332] Add clustering and compaction in Kafka Connect Sink

hudi-bot removed a comment on pull request #3857:

URL: https://github.com/apache/hudi/pull/3857#issuecomment-961588637

## CI report:

* 4ed0cb6d22e74ef6185bedda83b6f547839de05b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3040)

[jira] [Commented] (HUDI-2332) Implement scheduling of compaction/ clustering for Kafka Connect

[

https://issues.apache.org/jira/browse/HUDI-2332?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17440909#comment-17440909

]

Ethan Guo commented on HUDI-2332:

-

clusteringjob.properties:

{code:java}

hoodie.datasource.write.recordkey.field=volume

hoodie.datasource.write.partitionpath.field=date

hoodie.deltastreamer.schemaprovider.registry.url=http://localhost:8081/subjects/hudi-test-topic/versions/latest

hoodie.clustering.plan.strategy.target.file.max.bytes=1073741824

hoodie.clustering.plan.strategy.small.file.limit=629145600

hoodie.clustering.execution.strategy.class=org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy

hoodie.clustering.plan.strategy.sort.columns=volume

hoodie.write.concurrency.mode=single_writer

{code}
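
The two byte-valued settings above are easier to read in binary units: 1073741824 bytes is 1 GiB (1024 MiB) and 629145600 bytes is 600 MiB. The following small sketch parses the two lines with `java.util.Properties` and performs the conversion; the class and method names are hypothetical and exist only for this illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

// Hypothetical helper that converts byte-valued properties to MiB.
final class ClusteringSizesSketch {
    // Parses properties text; a StringReader should not fail, so any
    // IOException is rethrown as an unchecked exception.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return props;
    }

    // Reads a byte count from the given key and returns it in whole MiB.
    static long mebibytes(Properties props, String key) {
        return Long.parseLong(props.getProperty(key)) / (1024L * 1024L);
    }

    public static void main(String[] args) {
        Properties props = parse(
            "hoodie.clustering.plan.strategy.target.file.max.bytes=1073741824\n"
          + "hoodie.clustering.plan.strategy.small.file.limit=629145600\n");
        System.out.println("target file max: "
            + mebibytes(props, "hoodie.clustering.plan.strategy.target.file.max.bytes") + " MiB");
        System.out.println("small file limit: "
            + mebibytes(props, "hoodie.clustering.plan.strategy.small.file.limit") + " MiB");
    }
}
```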

> Implement scheduling of compaction/ clustering for Kafka Connect

>

>

> Key: HUDI-2332

> URL: https://issues.apache.org/jira/browse/HUDI-2332

> Project: Apache Hudi

> Issue Type: Sub-task

>Reporter: Rajesh Mahindra

>Assignee: Ethan Guo

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 0.10.0

>

>

> * Implement compaction/ clustering etc. from Java client

> * Schedule from Coordinator


[GitHub] [hudi] xushiyan commented on pull request #3936: [WIP][HUDI-2706] refactor spark-sql to make consistent with DataFrame api

xushiyan commented on pull request #3936:

URL: https://github.com/apache/hudi/pull/3936#issuecomment-963824070

@YannByron I just did a rough pass over the changes and will do another round on the details soon.

[jira] [Assigned] (HUDI-2717) Test and certify inline file system in S3 and hdfs

[ https://issues.apache.org/jira/browse/HUDI-2717?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sivabalan narayanan reassigned HUDI-2717:

-

Assignee: sivabalan narayanan

> Test and certify inline file system in S3 and hdfs
> --
>
> Key: HUDI-2717
> URL: https://issues.apache.org/jira/browse/HUDI-2717
> Project: Apache Hudi
> Issue Type: Bug
> Reporter: sivabalan narayanan
> Assignee: sivabalan narayanan
> Priority: Blocker
> Fix For: 0.10.0
>
>
> Test and certify inline file system in S3 and hdfs

[jira] [Created] (HUDI-2717) Test and certify inline file system in S3 and hdfs

sivabalan narayanan created HUDI-2717:

-

Summary: Test and certify inline file system in S3 and hdfs
Key: HUDI-2717
URL: https://issues.apache.org/jira/browse/HUDI-2717
Project: Apache Hudi
Issue Type: Bug
Reporter: sivabalan narayanan
Fix For: 0.10.0

Test and certify inline file system in S3 and hdfs

[GitHub] [hudi] xushiyan commented on a change in pull request #3936: [WIP][HUDI-2706] refactor spark-sql to make consistent with DataFrame api

xushiyan commented on a change in pull request #3936:

URL: https://github.com/apache/hudi/pull/3936#discussion_r745241470

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/HoodieOptionConfig.scala

##

@@ -102,6 +107,8 @@ object HoodieOptionConfig {

private lazy val reverseValueMapping = valueMapping.map(f => f._2 -> f._1)

+ def withDefaultSqlOption(options: Map[String, String]): Map[String, String]

= defaultSqlOption ++ options

Review comment:

```suggestion

def withDefaultSqlOptions(options: Map[String, String]): Map[String, String] = defaultSqlOptions ++ options

```
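For readers less familiar with Scala's `++` on maps, here is a minimal Java sketch of the merge this helper performs: defaults first, then the caller's options, so the caller's value wins on a key clash. The class name, method name, and the `type`/`primaryKey` entries are illustrative assumptions, not values read from `HoodieOptionConfig`.

```java
import java.util.HashMap;
import java.util.Map;

final class SqlOptionDefaultsSketch {
    // Merge defaults with caller options. On a key clash the caller's value wins,
    // which matches Scala's `defaults ++ options` for immutable Maps.
    static Map<String, String> withDefaults(Map<String, String> defaults,
                                            Map<String, String> options) {
        Map<String, String> merged = new HashMap<>(defaults); // copy, inputs stay untouched
        merged.putAll(options);
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> defaults = new HashMap<>();
        defaults.put("type", "cow");        // illustrative default only
        Map<String, String> options = new HashMap<>();
        options.put("type", "mor");         // overrides the default
        options.put("primaryKey", "id");    // passes through unchanged
        System.out.println(withDefaults(defaults, options));
    }
}
```

The operand order matters: swapping the two maps would let defaults silently overwrite what the user set.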

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/AlterHoodieTableDropPartitionCommand.scala

##

@@ -104,10 +104,6 @@ extends RunnableCommand {

PARTITIONPATH_FIELD.key -> tableConfig.getPartitionFieldProp

)

}

-

-val parameters = HoodieWriterUtils.parametersWithWriteDefaults(optParams)

-val translatedOptions = DataSourceWriteOptions.translateSqlOptions(parameters)

-translatedOptions

Review comment:

Can you clarify why these are no longer needed?

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/HoodieOptionConfig.scala

##

@@ -136,16 +142,19 @@ object HoodieOptionConfig {

options.map(kv => tableConfigKeyToSqlKey.getOrElse(kv._1, kv._1) -> reverseValueMapping.getOrElse(kv._2, kv._2))

}

- private lazy val defaultTableConfig: Map[String, String] = {

+ private lazy val defaultSqlOption: Map[String, String] = {

Review comment:

```suggestion

private lazy val defaultSqlOptions: Map[String, String] = {

```

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/hudi/HoodieWriterUtils.scala

##

@@ -102,4 +106,73 @@ object HoodieWriterUtils {

properties.putAll(mapAsJavaMap(parameters))

new HoodieConfig(properties)

}

+

+ def getRealKeyGenerator(hoodieConfig: HoodieConfig): String = {

+val kg = hoodieConfig.getString(KEYGENERATOR_CLASS_NAME.key())

+if (classOf[SqlKeyGenerator].getCanonicalName == kg) {

+ hoodieConfig.getString(SqlKeyGenerator.ORIGIN_KEYGEN_CLASS_NAME)

+} else {

+ kg

+}

+ }

+

+ // Detects conflicts between new parameters and existing table configurations

Review comment:

```suggestion

/**

* Detects conflicts between new parameters and existing table configurations

*/

```

##

File path:

hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/CreateHoodieTableAsSelectCommand.scala

##

@@ -73,9 +75,10 @@ case class CreateHoodieTableAsSelectCommand(

// Execute the insert query

try {

+ val tblProperties = table.storage.properties ++ table.properties

Review comment:

I have seen this needed repeatedly. Can we consider making a `HoodieCatalogTable` to encapsulate this and other Hudi-specific logic, e.g. validation, options transformation, etc.?

##

File path:

hudi-common/src/main/java/org/apache/hudi/common/model/DefaultHoodieRecordPayload.java

##

@@ -113,7 +113,7 @@ protected boolean needUpdatingPersistedRecord(IndexedRecord currentValue,

Object persistedOrderingVal = getNestedFieldVal((GenericRecord) currentValue,

properties.getProperty(HoodiePayloadProps.PAYLOAD_ORDERING_FIELD_PROP_KEY), true);

Comparable incomingOrderingVal = (Comparable) getNestedFieldVal((GenericRecord) incomingRecord,

- properties.getProperty(HoodiePayloadProps.PAYLOAD_ORDERING_FIELD_PROP_KEY), false);

+ properties.getProperty(HoodiePayloadProps.PAYLOAD_ORDERING_FIELD_PROP_KEY), true);

Review comment:

So we return null if the ordering/precombine key is not found. Shall we make the parent class behave in a similar way?
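To make the behavior under discussion concrete, here is a rough Java sketch of a lookup that returns null for a missing ordering field instead of throwing, and of a comparison that tolerates the null. It uses plain maps rather than Avro records; `OrderingSketch`, `fieldVal`, and the rule "accept the incoming record when either ordering value is missing" are assumptions made for illustration, not the payload class's actual logic.

```java
import java.util.Map;

final class OrderingSketch {
    // Hypothetical lookup: a missing field yields null when returnNullIfNotFound
    // is true, and an exception otherwise.
    static Object fieldVal(Map<String, ?> record, String field, boolean returnNullIfNotFound) {
        if (record.containsKey(field)) {
            return record.get(field);
        }
        if (returnNullIfNotFound) {
            return null;
        }
        throw new IllegalArgumentException("field not found: " + field);
    }

    // Assumed rule for this sketch: if either side lacks the ordering field there
    // is nothing to compare, so the incoming record is accepted.
    @SuppressWarnings({"rawtypes", "unchecked"})
    static boolean needUpdatingPersistedRecord(Map<String, ?> current,
                                               Map<String, ?> incoming,
                                               String orderingField) {
        Object persisted = fieldVal(current, orderingField, true);
        Comparable incomingVal = (Comparable) fieldVal(incoming, orderingField, true);
        return persisted == null || incomingVal == null
            || incomingVal.compareTo(persisted) >= 0;
    }
}
```

With the flag set to `false` on the incoming side, a record without the ordering field would fail the whole write rather than fall through to the null check.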

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Assigned] (HUDI-2716) Fix inline FileSystem work with any FileSystem

[ https://issues.apache.org/jira/browse/HUDI-2716?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan reassigned HUDI-2716: - Assignee: Manoj Govindassamy > Fix inline FileSystem work with any FileSystem > -- > > Key: HUDI-2716 > URL: https://issues.apache.org/jira/browse/HUDI-2716 > Project: Apache Hudi > Issue Type: Bug >Affects Versions: 0.10.0 >Reporter: sivabalan narayanan >Assignee: Manoj Govindassamy >Priority: Major > > with S3, path's format is "s3a://path" which has 2 slashes "//" after the > scheme. Inline file system couldn't handle it. resolved path after inline -> > unwrapping inline comes to "s3a:/path". -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2716) Fix inline FileSystem work with any FileSystem

[ https://issues.apache.org/jira/browse/HUDI-2716?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2716: -- Fix Version/s: 0.10.0 > Fix inline FileSystem work with any FileSystem > -- > > Key: HUDI-2716 > URL: https://issues.apache.org/jira/browse/HUDI-2716 > Project: Apache Hudi > Issue Type: Bug >Affects Versions: 0.10.0 >Reporter: sivabalan narayanan >Assignee: Manoj Govindassamy >Priority: Major > Fix For: 0.10.0 > > > with S3, path's format is "s3a://path" which has 2 slashes "//" after the > scheme. Inline file system couldn't handle it. resolved path after inline -> > unwrapping inline comes to "s3a:/path". -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Created] (HUDI-2716) Fix inline FileSystem work with any FileSystem

sivabalan narayanan created HUDI-2716: - Summary: Fix inline FileSystem work with any FileSystem Key: HUDI-2716 URL: https://issues.apache.org/jira/browse/HUDI-2716 Project: Apache Hudi Issue Type: Bug Affects Versions: 0.10.0 Reporter: sivabalan narayanan with S3, path's format is "s3a://path" which has 2 slashes "//" after the scheme. Inline file system couldn't handle it. resolved path after inline -> unwrapping inline comes to "s3a:/path". -- This message was sent by Atlassian Jira (v8.20.1#820001)
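A small Java sketch of how a wrap/unwrap round trip can lose the second slash, as the description reports. The `inlinefs` scheme string, the path layout, and both helper methods are hypothetical stand-ins written for this illustration; they are not the inline file system's real code.

```java
final class InlinePathSketch {
    static final String INLINE_SCHEME = "inlinefs"; // hypothetical scheme name

    // Naive wrap: moves the outer scheme to the end of the path and, in the
    // process, collapses repeated slashes. "s3a://bucket/key" becomes
    // "inlinefs:/bucket/key/s3a".
    static String naiveWrap(String outer) {
        int idx = outer.indexOf(':');
        String scheme = outer.substring(0, idx);
        String rest = outer.substring(idx + 1).replaceAll("/+", "/");
        return INLINE_SCHEME + ":" + rest + "/" + scheme;
    }

    // Naive unwrap: puts the scheme back in front, but the "//" is already gone.
    static String naiveUnwrap(String inline) {
        String body = inline.substring(INLINE_SCHEME.length() + 1);
        int last = body.lastIndexOf('/');
        String scheme = body.substring(last + 1);
        return scheme + ":" + body.substring(0, last);
    }

    public static void main(String[] args) {
        String outer = "s3a://bucket/key";
        System.out.println(naiveUnwrap(naiveWrap(outer))); // prints "s3a:/bucket/key"
    }
}
```

A local `file:/tmp/x` path survives this round trip unchanged, which may be why the problem only shows up on schemes that carry an authority such as a bucket name.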

[jira] [Updated] (HUDI-2716) Fix inline FileSystem work with any FileSystem

[ https://issues.apache.org/jira/browse/HUDI-2716?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2716: -- Priority: Blocker (was: Major) > Fix inline FileSystem work with any FileSystem > -- > > Key: HUDI-2716 > URL: https://issues.apache.org/jira/browse/HUDI-2716 > Project: Apache Hudi > Issue Type: Bug >Affects Versions: 0.10.0 >Reporter: sivabalan narayanan >Assignee: Manoj Govindassamy >Priority: Blocker > Fix For: 0.10.0 > > > with S3, path's format is "s3a://path" which has 2 slashes "//" after the > scheme. Inline file system couldn't handle it. resolved path after inline -> > unwrapping inline comes to "s3a:/path". -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2370) Supports data encryption

[ https://issues.apache.org/jira/browse/HUDI-2370?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2370: - Fix Version/s: (was: 0.10.0) > Supports data encryption > > > Key: HUDI-2370 > URL: https://issues.apache.org/jira/browse/HUDI-2370 > Project: Apache Hudi > Issue Type: New Feature >Reporter: liujinhui >Assignee: liujinhui >Priority: Blocker > Labels: pull-request-available > > Data security is becoming more and more important, if hudi can support > encryption, it is very welcome > 1. Specify column encryption > 2. Support footer encryption > 3. Custom encrypted client interface(Provide memory-based encryption client > by default) > 4. Specify the encryption key > > When querying, you need to pass the relevant key or obtain query permission > based on the client's encrypted interface. If it fails, the result cannot be > returned. > 1. When querying non-encrypted fields, the key is not passed, and the data > is returned normally > 2. When querying encrypted fields, the key is not passed and the data is not > returned > 3. When the encrypted field is queried, the key is passed, and the data is > returned normally > 4. When querying all fields, the key is not passed and no result is > returned. If passed, the data returns normally > > Start with COW first -- This message was sent by Atlassian Jira (v8.20.1#820001)
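The four query rules in the description reduce to one check: data comes back if a key is supplied, or if no queried column is encrypted. A minimal Java sketch of that decision follows, assuming the supplied key is valid; `EncryptedQuerySketch` and `mayReturnData` are hypothetical names and no such API exists in Hudi.

```java
import java.util.Set;

final class EncryptedQuerySketch {
    // Returns true when the query is allowed to return data under the rules
    // listed in the issue. Key validation is out of scope for this sketch.
    static boolean mayReturnData(Set<String> queriedColumns,
                                 Set<String> encryptedColumns,
                                 boolean keyProvided) {
        if (keyProvided) {
            return true; // rules 3 and 4 (key passed)
        }
        for (String column : queriedColumns) {
            if (encryptedColumns.contains(column)) {
                return false; // rules 2 and 4 (no key, encrypted column touched)
            }
        }
        return true; // rule 1 (only non-encrypted columns)
    }
}
```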

[GitHub] [hudi] jadireddi commented on pull request #3929: [HUDI-2697] Make multi table delta streamer to use thread pool for table sync asynchronously.

jadireddi commented on pull request #3929: URL: https://github.com/apache/hudi/pull/3929#issuecomment-963813120 Thank you Vinoth. I have done unit testing only. I will make changes to trigger based on data availability. It would be really helpful if we had a test environment. @pratyakshsharma can you please help me with the steps to set it up? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot removed a comment on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot removed a comment on pull request #3748: URL: https://github.com/apache/hudi/pull/3748#issuecomment-963787491 ## CI report: * d12fd12372ec2f6c07399863491ca0d6c5691536 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3222) * 4fbc75d8510edab1397afa5e84e7afa8d87e2f75 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3236) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot commented on pull request #3748: URL: https://github.com/apache/hudi/pull/3748#issuecomment-963812962 ## CI report: * 4fbc75d8510edab1397afa5e84e7afa8d87e2f75 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3236) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (HUDI-2325) Implement and test Hive Sync support for Kafka Connect

[ https://issues.apache.org/jira/browse/HUDI-2325?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Rajesh Mahindra reassigned HUDI-2325: - Assignee: Rajesh Mahindra (was: Ethan Guo) > Implement and test Hive Sync support for Kafka Connect > -- > > Key: HUDI-2325 > URL: https://issues.apache.org/jira/browse/HUDI-2325 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Rajesh Mahindra >Assignee: Rajesh Mahindra >Priority: Blocker > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [hudi] hudi-bot removed a comment on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

hudi-bot removed a comment on pull request #3952: URL: https://github.com/apache/hudi/pull/3952#issuecomment-963784924 ## CI report: * 4a3305e70773578729177c6ee863d52ecf31ee39 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3235) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

hudi-bot commented on pull request #3952: URL: https://github.com/apache/hudi/pull/3952#issuecomment-963806283 ## CI report: * 4a3305e70773578729177c6ee863d52ecf31ee39 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3235) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #3203: [HUDI-2086] Refactor hive mor_incremental_view

danny0405 commented on pull request #3203: URL: https://github.com/apache/hudi/pull/3203#issuecomment-963803515 I'm going to merge it soon ~ -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2319) Integrate hudi with dbt (data build tool)

[

https://issues.apache.org/jira/browse/HUDI-2319?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sivabalan narayanan updated HUDI-2319:

--

Priority: Critical (was: Blocker)

> Integrate hudi with dbt (data build tool)

> -

>

> Key: HUDI-2319

> URL: https://issues.apache.org/jira/browse/HUDI-2319

> Project: Apache Hudi

> Issue Type: New Feature

> Components: Usability

>Reporter: Vinoth Govindarajan

>Assignee: Vinoth Govindarajan

>Priority: Critical

> Labels: integration

> Fix For: 0.10.0

>

>

> dbt (data build tool) enables analytics engineers to transform data in their

> warehouses by simply writing select statements. dbt handles turning these

> select statements into tables and views.

> dbt does the {{T}} in {{ELT}} (Extract, Load, Transform) processes – it

> doesn’t extract or load data, but it’s extremely good at transforming data

> that’s already loaded into your warehouse.

>

> dbt currently supports only the Delta file format. A few folks in the dbt

> community are asking for Hudi integration; moreover, adding dbt integration will

> make it easier for any data engineer or analyst to create derived datasets.

>

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Resolved] (HUDI-2710) Update the document for flink hudi quick start

[ https://issues.apache.org/jira/browse/HUDI-2710?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen resolved HUDI-2710. -- > Update the document for flink hudi quick start > -- > > Key: HUDI-2710 > URL: https://issues.apache.org/jira/browse/HUDI-2710 > Project: Apache Hudi > Issue Type: Task > Components: Flink Integration >Reporter: Danny Chen >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Assigned] (HUDI-2710) Update the document for flink hudi quick start

[ https://issues.apache.org/jira/browse/HUDI-2710?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen reassigned HUDI-2710: Assignee: Danny Chen > Update the document for flink hudi quick start > -- > > Key: HUDI-2710 > URL: https://issues.apache.org/jira/browse/HUDI-2710 > Project: Apache Hudi > Issue Type: Task > Components: Flink Integration >Reporter: Danny Chen >Assignee: Danny Chen >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (HUDI-2710) Update the document for flink hudi quick start

[ https://issues.apache.org/jira/browse/HUDI-2710?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17440886#comment-17440886 ] Danny Chen commented on HUDI-2710: -- Fixed via master branch: 805a21b7b28dd0ebe0ddd350226cf100d8505aa7 > Update the document for flink hudi quick start > -- > > Key: HUDI-2710 > URL: https://issues.apache.org/jira/browse/HUDI-2710 > Project: Apache Hudi > Issue Type: Task > Components: Flink Integration >Reporter: Danny Chen >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

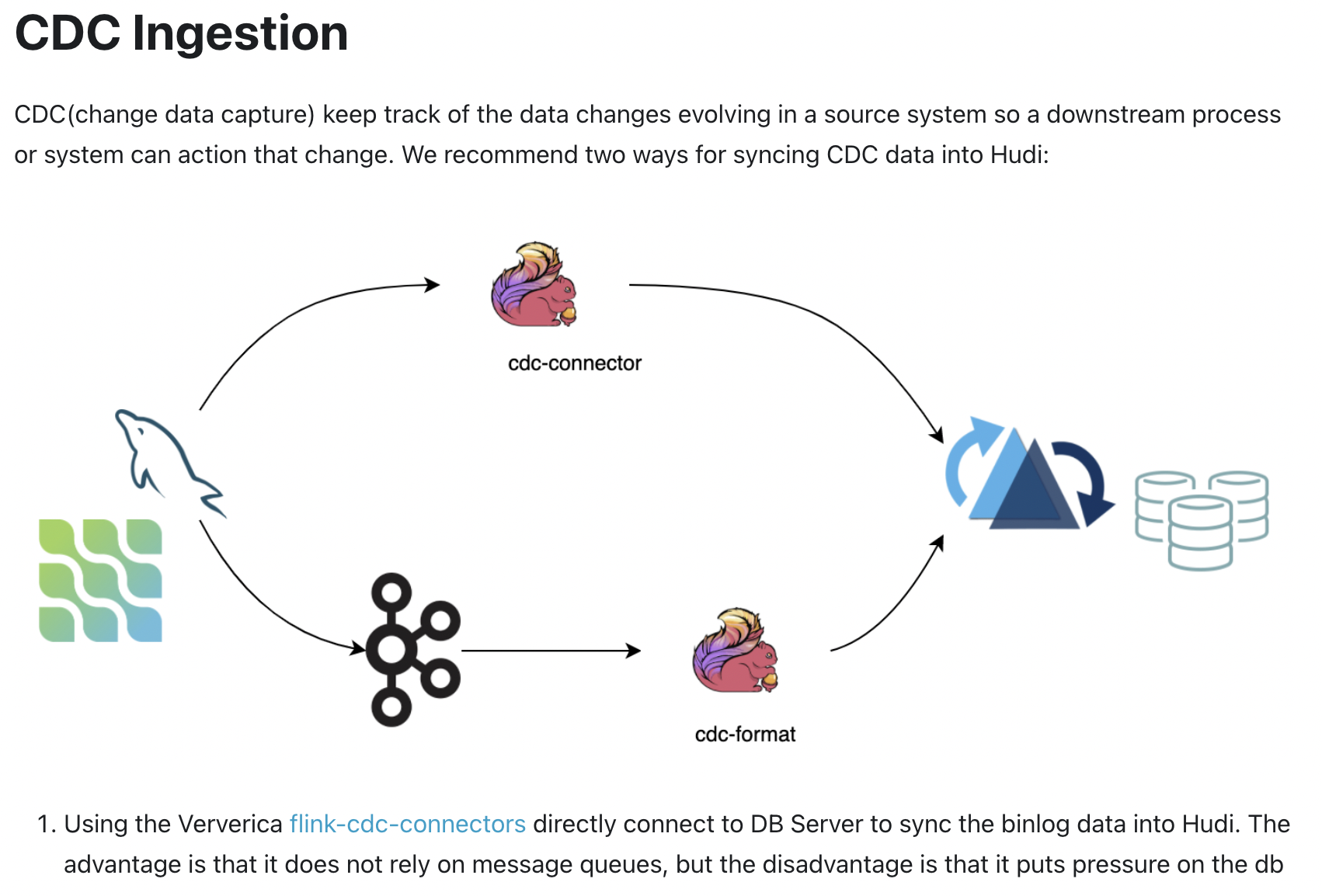

[hudi] branch asf-site updated: [HUDI-2710] Update the document for flink hudi quick start (#3943)

This is an automated email from the ASF dual-hosted git repository. danny0405 pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/hudi.git The following commit(s) were added to refs/heads/asf-site by this push: new 805a21b [HUDI-2710] Update the document for flink hudi quick start (#3943) 805a21b is described below commit 805a21b7b28dd0ebe0ddd350226cf100d8505aa7 Author: Danny Chan AuthorDate: Tue Nov 9 12:00:45 2021 +0800 [HUDI-2710] Update the document for flink hudi quick start (#3943) --- website/docs/flink-quick-start-guide.md | 53 +++- website/static/assets/images/cdc-2-hudi.png | Bin 0 -> 689096 bytes 2 files changed, 45 insertions(+), 8 deletions(-) diff --git a/website/docs/flink-quick-start-guide.md b/website/docs/flink-quick-start-guide.md index af6c2d6..3f4d4a9 100644 --- a/website/docs/flink-quick-start-guide.md +++ b/website/docs/flink-quick-start-guide.md @@ -223,7 +223,7 @@ allocated with enough memory, we can try to set these memory options. | `write.bucket_assign.tasks` | The parallelism of bucket assigner operators. No default value, using Flink `parallelism.default` | [`parallelism.default`](#parallelism) | Increases the parallelism also increases the number of buckets, thus the number of small files (small buckets) | | `write.index_boostrap.tasks` | The parallelism of index bootstrap. Increasing parallelism can speed up the efficiency of the bootstrap stage. The bootstrap stage will block checkpointing. Therefore, it is necessary to set more checkpoint failure tolerance times. Default using Flink `parallelism.default` | [`parallelism.default`](#parallelism) | It only take effect when `index.bootsrap.enabled` is `true` | | `read.tasks` | The parallelism of read operators (batch and stream). Default `4` | `4` | | -| `compaction.tasks` | The parallelism of online compaction. Default `10` | `10` | `Online compaction` will occupy the resources of the write task. 
It is recommended to use [`offline compaction`](#offline-compaction) | +| `compaction.tasks` | The parallelism of online compaction. Default `4` | `4` | `Online compaction` will occupy the resources of the write task. It is recommended to use [`offline compaction`](#offline-compaction) | ### Compaction @@ -243,7 +243,7 @@ Turn off online compaction by setting `compaction.async.enabled` = `false`, but | `compaction.delta_commits` | Max delta commits needed to trigger compaction, default `5` commits | `5` | -- | | `compaction.delta_seconds` | Max delta seconds time needed to trigger compaction, default `1` hour | `3600` | -- | | `compaction.max_memory` | Max memory in MB for compaction spillable map, default `100MB` | `100` | If your have sufficient resources, recommend to adjust to `1024MB` | -| `compaction.target_io` | Target IO per compaction (both read and write), default `5GB`| `5120` | The default value for `offline compaction` is `500GB` | +| `compaction.target_io` | Target IO per compaction (both read and write), default `500GB`| `512000` | -- | ## Memory Optimization @@ -265,6 +265,23 @@ logs. `compaction.tasks` controls the parallelism of compaction tasks. desired memory size `write.task.max.size`. For example, taskManager has `4GB` of memory running two write tasks, so each write task can be allocated with `2GB` memory. Please reserve some buffers because the network buffer and other types of tasks on taskManager (such as `BucketAssignFunction`) will also consume memory. +## CDC Ingestion +CDC(change data capture) keep track of the data changes evolving in a source system so a downstream process or system can action that change. +We recommend two ways for syncing CDC data into Hudi: + + + +1. Using the Ververica [flink-cdc-connectors](https://github.com/ververica/flink-cdc-connectors) directly connect to DB Server to sync the binlog data into Hudi. 
+The advantage is that it does not rely on message queues, but the disadvantage is that it puts pressure on the db server; +2. Consume data from a message queue (for e.g, the Kafka) using the flink cdc format, the advantage is that it is highly scalable, +but the disadvantage is that it relies on message queues. + +:::note +- If the upstream data cannot guarantee the order, you need to specify option `write.precombine.field` explicitly; +- The MOR table can not handle DELETEs in event time sequence now, thus causing data loss. You better switch on the changelog mode through +option `changelog.enabled`. +::: + ## Bulk Insert For the demand of snapshot data import. If the snapshot data comes from other data sources, use the `bulk_insert` mode to quickly @@ -352,18 +369,38 @@ the compaction options: [`compaction.delta_commits`](#compaction) and [`compacti ::: -## Insert Mode +## Append Mode + +If INSERT operation is used for ingestion, for COW table, there is no merging of

[GitHub] [hudi] danny0405 merged pull request #3943: [HUDI-2710] Update the document for flink hudi quick start

danny0405 merged pull request #3943: URL: https://github.com/apache/hudi/pull/3943 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (HUDI-2655) Non partitioned dataset with metadata fails

[

https://issues.apache.org/jira/browse/HUDI-2655?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17440885#comment-17440885

]

sivabalan narayanan commented on HUDI-2655:

---

Sure. I will try to reproduce it again. If I can't, I will close it out.

> Non partitioned dataset with metadata fails

> ---

>

> Key: HUDI-2655

> URL: https://issues.apache.org/jira/browse/HUDI-2655

> Project: Apache Hudi

> Issue Type: Sub-task

>Reporter: sivabalan narayanan

>Assignee: sivabalan narayanan

>Priority: Blocker

> Fix For: 0.10.0

>

> Attachments: image-2021-11-08-16-39-14-952.png

>

>

> Likely when compaction kicks in within the metadata table, the record key is empty.

> When I tried with a deltastreamer job, I hit the exception after 10+ commits on a

> non-partitioned data table.

>

> {code:java}

> Caused by: org.apache.hudi.exception.HoodieUpsertException: Failed to merge

> old record into new file for key from old file

> file:/tmp/hudi-deltastreamer-op/impressions_cow/.hoodie/metadata/files/files-_0-782-654_20211029073337001.hfile

> to new file

> file:/tmp/hudi-deltastreamer-op/impressions_cow/.hoodie/metadata/files/files-_0-1086-913_20211029073358001.hfile

> with writerSchema {

> "type" : "record",

> "name" : "HoodieMetadataRecord",

> "namespace" : "org.apache.hudi.avro.model",

> "doc" : "A record saved within the Metadata Table",

> "fields" : [ {

> "name" : "_hoodie_commit_time",

> "type" : [ "null", "string" ],

> "doc" : "",

> "default" : null

> }, {

> "name" : "_hoodie_commit_seqno",

> "type" : [ "null", "string" ],

> "doc" : "",

> "default" : null

> }, {

> "name" : "_hoodie_record_key",

> "type" : [ "null", "string" ],

> "doc" : "",

> "default" : null

> }, {

> "name" : "_hoodie_partition_path",

> "type" : [ "null", "string" ],

> "doc" : "",

> "default" : null

> }, {

> "name" : "_hoodie_file_name",

> "type" : [ "null", "string" ],

> "doc" : "",

> "default" : null

> }, {

> "name" : "key",

> "type" : {

> "type" : "string",

> "avro.java.string" : "String"

> }

> }, {

> "name" : "type",

> "type" : "int",

> "doc" : "Type of the metadata record"

> }, {

> "name" : "filesystemMetadata",

> "type" : [ "null", {

> "type" : "map",

> "values" : {

> "type" : "record",

> "name" : "HoodieMetadataFileInfo",

> "fields" : [ {

> "name" : "size",

> "type" : "long",

> "doc" : "Size of the file"

> }, {

> "name" : "isDeleted",

> "type" : "boolean",

> "doc" : "True if this file has been deleted"

> } ]

> },

> "avro.java.string" : "String"

> } ],

> "doc" : "Contains information about partitions and files within the

> dataset"

> } ]

> }

> at

> org.apache.hudi.io.HoodieMergeHandle.write(HoodieMergeHandle.java:349)

> at

> org.apache.hudi.io.HoodieSortedMergeHandle.write(HoodieSortedMergeHandle.java:104)

> at

> org.apache.hudi.table.action.commit.AbstractMergeHelper$UpdateHandler.consumeOneRecord(AbstractMergeHelper.java:122)

> at

> org.apache.hudi.table.action.commit.AbstractMergeHelper$UpdateHandler.consumeOneRecord(AbstractMergeHelper.java:112)

> at

> org.apache.hudi.common.util.queue.BoundedInMemoryQueueConsumer.consume(BoundedInMemoryQueueConsumer.java:37)

> at

> org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.lambda$null$2(BoundedInMemoryExecutor.java:121)

> at java.util.concurrent.FutureTask.run(FutureTask.java:266)

> ... 3 more

> Caused by: java.lang.IllegalArgumentException: key length must be > 0

> at org.apache.hadoop.util.bloom.HashFunction.hash(HashFunction.java:114)

> at org.apache.hadoop.util.bloom.BloomFilter.add(BloomFilter.java:122)

> at

> org.apache.hudi.common.bloom.InternalDynamicBloomFilter.add(InternalDynamicBloomFilter.java:94)

> at

> org.apache.hudi.common.bloom.HoodieDynamicBoundedBloomFilter.add(HoodieDynamicBoundedBloomFilter.java:81)

> at

> org.apache.hudi.io.storage.HoodieHFileWriter.writeAvro(HoodieHFileWriter.java:119)

> at

> org.apache.hudi.io.HoodieMergeHandle.write(HoodieMergeHandle.java:344)

> ... 9 more

> {code}
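The innermost cause in the trace is a bloom filter refusing a zero-length key. Here is a toy Java sketch of that failure mode, assuming (as the report suggests, unconfirmed) that an empty record key reaches the writer. `TinyBloomSketch` is a hypothetical one-hash filter written for this note and is unrelated to the Hadoop or Hudi implementations.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.BitSet;

final class TinyBloomSketch {
    private static final int SIZE = 1024;
    private final BitSet bits = new BitSet(SIZE);

    private static int index(byte[] key) {
        // Single hash position; real filters use several.
        return Math.floorMod(Arrays.hashCode(key), SIZE);
    }

    // Rejects empty keys with the same message seen in the stack trace.
    void add(String key) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        if (bytes.length == 0) {
            throw new IllegalArgumentException("key length must be > 0");
        }
        bits.set(index(bytes));
    }

    boolean mightContain(String key) {
        return bits.get(index(key.getBytes(StandardCharsets.UTF_8)));
    }
}
```

If the empty key does turn out to come from the non-partitioned case, the guard belongs upstream of the writer, since the filter itself cannot know what a sensible substitute key would be.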

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [hudi] danny0405 commented on pull request #3943: [HUDI-2710] Update the document for flink hudi quick start

danny0405 commented on pull request #3943: URL: https://github.com/apache/hudi/pull/3943#issuecomment-963794359  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3944: [HUDI-2495] resolve inconsistent key generation for timestamp types b…

hudi-bot commented on pull request #3944: URL: https://github.com/apache/hudi/pull/3944#issuecomment-963794113 ## CI report: * 75d2c6ca1cb274a810aff7fcf450dbd46183768a Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3234) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot removed a comment on pull request #3944: [HUDI-2495] resolve inconsistent key generation for timestamp types b…

hudi-bot removed a comment on pull request #3944: URL: https://github.com/apache/hudi/pull/3944#issuecomment-963778929 ## CI report: * d345dd70c71ea2aab383e19558d330ca3bfc1a75 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3214) * 75d2c6ca1cb274a810aff7fcf450dbd46183768a Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3234) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xiarixiaoyao commented on pull request #3203: [HUDI-2086] Refactor hive mor_incremental_view

xiarixiaoyao commented on pull request #3203: URL: https://github.com/apache/hudi/pull/3203#issuecomment-963790451 @codope Will #3630 fix this? This PR cannot solve that problem. Let's open a new Jira to track the issue and try to resolve it in another PR. @codope @danny0405 Since the above question has nothing to do with this PR, does this PR still need to be verified before merging? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3951: [HUDI-2715] The BitCaskDiskMap iterator may cause memory leak

hudi-bot commented on pull request #3951: URL: https://github.com/apache/hudi/pull/3951#issuecomment-963788502 ## CI report: * e101bdf961b4416e7f5e91a51adec17ac0b8c12b Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3233) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot removed a comment on pull request #3951: [HUDI-2715] The BitCaskDiskMap iterator may cause memory leak

hudi-bot removed a comment on pull request #3951: URL: https://github.com/apache/hudi/pull/3951#issuecomment-963762257 ## CI report: * e101bdf961b4416e7f5e91a51adec17ac0b8c12b Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3233) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot commented on pull request #3748: URL: https://github.com/apache/hudi/pull/3748#issuecomment-963787491 ## CI report: * d12fd12372ec2f6c07399863491ca0d6c5691536 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3222) * 4fbc75d8510edab1397afa5e84e7afa8d87e2f75 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3236) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot removed a comment on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot removed a comment on pull request #3748:
URL: https://github.com/apache/hudi/pull/3748#issuecomment-963786595

## CI report:

* d12fd12372ec2f6c07399863491ca0d6c5691536 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3222)
* 4fbc75d8510edab1397afa5e84e7afa8d87e2f75 UNKNOWN

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot commented on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot commented on pull request #3748:
URL: https://github.com/apache/hudi/pull/3748#issuecomment-963786595

## CI report:

* d12fd12372ec2f6c07399863491ca0d6c5691536 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3222)
* 4fbc75d8510edab1397afa5e84e7afa8d87e2f75 UNKNOWN

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot removed a comment on pull request #3748: [HUDI-2516] Upgrade JUnit to 5.8.1

hudi-bot removed a comment on pull request #3748:
URL: https://github.com/apache/hudi/pull/3748#issuecomment-963437381

## CI report:

* d12fd12372ec2f6c07399863491ca0d6c5691536 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3222)

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] nsivabalan commented on a change in pull request #3836: [HUDI-2591] Bootstrap metadata table only if upgrade / downgrade is not required.

nsivabalan commented on a change in pull request #3836:

URL: https://github.com/apache/hudi/pull/3836#discussion_r745263375

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java

##

@@ -217,8 +222,10 @@ public HoodieBackedTableMetadata metadata() {
    * Initialize the metadata table if it does not exist.
    *
    * If the metadata table did not exist, then file and partition listing is used to bootstrap the table.
+   * @param instantInProgressTimestamp Timestap of an instant in progress on the dataset. This instant is ignored
+   *                                   while deciding to bootstrap the metadata table.
    */
-  protected abstract void initialize(HoodieEngineContext engineContext);
+  protected abstract void initialize(HoodieEngineContext engineContext, Option instantInProgressTimestamp);

Review comment:

minor: maybe we can rename this to inflightInstantTimestamp.
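
To make the Javadoc in the hunk above concrete, here is a minimal sketch of how an in-flight instant might be excluded when deciding whether to bootstrap. It is an assumption for illustration, not the code in this PR: `BootstrapDecision`, `canBootstrap`, the plain string timestamps, and the use of `java.util.Optional` in place of Hudi's `Option` are all hypothetical stand-ins.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical stand-in for illustration; not a Hudi class.
class BootstrapDecision {

  // Returns true when no pending instant, other than the caller's own
  // in-flight instant, remains on the dataset timeline.
  static boolean canBootstrap(List<String> pendingInstants,
                              Optional<String> inflightInstantTimestamp) {
    for (String ts : pendingInstants) {
      boolean isOwnInstant = inflightInstantTimestamp.isPresent()
          && inflightInstantTimestamp.get().equals(ts);
      if (!isOwnInstant) {
        return false; // some other writer still has work in progress
      }
    }
    return true;
  }

  public static void main(String[] args) {
    // Only the caller's own instant is pending, so it is ignored.
    System.out.println(canBootstrap(
        Arrays.asList("20211108"), Optional.of("20211108")));
    // A second, unrelated instant is pending, so bootstrap is deferred.
    System.out.println(canBootstrap(
        Arrays.asList("20211107", "20211108"), Optional.of("20211108")));
  }
}
```

Under these assumptions the first call reports that bootstrap can proceed and the second reports that it cannot.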


[GitHub] [hudi] nsivabalan commented on a change in pull request #3836: [HUDI-2591] Bootstrap metadata table only if upgrade / downgrade is not required.

nsivabalan commented on a change in pull request #3836:

URL: https://github.com/apache/hudi/pull/3836#discussion_r745261515

##

File path:

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

##

@@ -83,22 +83,31 @@ public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig client
   @Deprecated
   public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig writeConfig, boolean rollbackPending) {
-    super(context, writeConfig);
+    this(context, writeConfig, Option.empty());
   }
   @Deprecated
   public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig writeConfig, boolean rollbackPending,
                              Option timelineService) {
-    super(context, writeConfig, timelineService);
+    this(context, writeConfig, timelineService);
   }
   public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig writeConfig,
                              Option timelineService) {
     super(context, writeConfig, timelineService);
+    bootstrapMetadataTable(Option.empty());
+  }
+
+  private void bootstrapMetadataTable(Option instantInProgressTimestamp) {

Review comment:

Not sure we should call this bootstrapMetadataTable. This is essentially initializing the metadata table; the internal implementation could do the bootstrap if applicable.

##

File path:

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

##

@@ -95,10 +95,19 @@ public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig writeC
   public SparkRDDWriteClient(HoodieEngineContext context, HoodieWriteConfig writeConfig,
                              Option timelineService) {
     super(context, writeConfig, timelineService);
+    bootstrapMetadataTable();
+  }
+
+  private void bootstrapMetadataTable() {
     if (config.isMetadataTableEnabled()) {
-      // If the metadata table does not exist, it should be bootstrapped here
-      // TODO: Check if we can remove this requirement - auto bootstrap on commit
-      SparkHoodieBackedTableMetadataWriter.create(context.getHadoopConf().get(), config, context);
+      // Defer bootstrap if upgrade / downgrade is pending
+      HoodieTableMetaClient metaClient = createMetaClient(true);
+      UpgradeDowngrade upgradeDowngrade = new UpgradeDowngrade(
+          metaClient, config, context, SparkUpgradeDowngradeHelper.getInstance());
+      if (!upgradeDowngrade.needsUpgradeOrDowngrade(HoodieTableVersion.current())) {

Review comment:

thanks!
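
The guard in the hunk above follows a simple pattern: initialize the metadata table from the constructor only when the feature is enabled and no upgrade or downgrade is pending. The sketch below isolates that control flow with hypothetical stand-in types (`VersionCheck`, `MetadataTable`, `initializeIfSafe`); none of them are Hudi classes, and the real `UpgradeDowngrade` API may differ from what is assumed here.

```java
// Hypothetical stand-ins for illustration; none of these are Hudi classes.
class DeferredInitSketch {

  // Stand-in for a check like needsUpgradeOrDowngrade(targetVersion).
  interface VersionCheck {
    boolean needsUpgradeOrDowngrade(int targetVersion);
  }

  // Stand-in for the metadata table writer; records whether it was initialized.
  static class MetadataTable {
    boolean initialized = false;

    void initialize() {
      initialized = true;
    }
  }

  // Initializes the table only when the feature is on and the table version
  // already matches; returns whether initialization actually ran.
  static boolean initializeIfSafe(boolean metadataEnabled, VersionCheck check,
                                  int currentVersion, MetadataTable table) {
    if (!metadataEnabled) {
      return false;
    }
    if (check.needsUpgradeOrDowngrade(currentVersion)) {
      return false; // defer: a later step is expected to initialize the table
    }
    table.initialize();
    return true;
  }

  public static void main(String[] args) {
    MetadataTable table = new MetadataTable();
    // Pretend the on-disk table is at version 2 and the code expects 3.
    VersionCheck upgradePending = target -> target != 2;
    System.out.println(initializeIfSafe(true, upgradePending, 3, table));
    System.out.println(table.initialized);
  }
}
```

In this sketch the caller that performs the upgrade would be responsible for initializing the table afterwards; that follow-up step is assumed and not shown.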


[GitHub] [hudi] hudi-bot commented on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

hudi-bot commented on pull request #3952:
URL: https://github.com/apache/hudi/pull/3952#issuecomment-963784924

## CI report:

* 4a3305e70773578729177c6ee863d52ecf31ee39 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=3235)

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot removed a comment on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

hudi-bot removed a comment on pull request #3952:
URL: https://github.com/apache/hudi/pull/3952#issuecomment-963783951

## CI report:

* 4a3305e70773578729177c6ee863d52ecf31ee39 UNKNOWN

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[jira] [Updated] (HUDI-2526) Make spark.sql.parquet.writeLegacyFormat configurable

[ https://issues.apache.org/jira/browse/HUDI-2526?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Sagar Sumit updated HUDI-2526:
------------------------------
Status: Closed (was: Patch Available)

> Make spark.sql.parquet.writeLegacyFormat configurable
> -----------------------------------------------------
>
> Key: HUDI-2526
> URL: https://issues.apache.org/jira/browse/HUDI-2526
> Project: Apache Hudi
> Issue Type: Sub-task
> Reporter: Sagar Sumit
> Assignee: Sagar Sumit
> Priority: Blocker
> Labels: pull-request-available
> Fix For: 0.10.0
>
>
> From the community,
> "I am observing that HUDI bulk insert in 0.9.0 version is not honoring
> spark.sql.parquet.writeLegacyFormat=true
> config. Can you suggest way to set this config.
> Reason to use this config:
> Current Bulk insert use spark dataframe writer and don't do avro conversion.
> The decimal columns in my DF are written as INT32 type in parquet.
> The upsert functionality which uses avro conversion is generating Fixed
> Length byte array for decimal types which is failing with datatype mismatch."
> The main reason is that the [config is
> hardcoded|https://github.com/apache/hudi/blob/46808dcb1fe22491326a9e831dd4dde4c70796fb/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/io/storage/row/HoodieRowParquetWriteSupport.java#L48].
> We can make it configurable.

--
This message was sent by Atlassian Jira
(v8.20.1#820001)
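
The change the issue asks for amounts to reading the flag from writer properties instead of hardcoding it. The following is a minimal sketch of that idea only, not the actual fix: `LegacyFormatConfigSketch`, `buildHadoopConf`, the `WRITER_KEY` name, and the `"false"` default are all assumptions made for this example, and a plain `Map` stands in for the Hadoop configuration object.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch; class, method, and writer-side key are invented here.
class LegacyFormatConfigSketch {

  // Spark SQL key named in the issue.
  static final String SPARK_KEY = "spark.sql.parquet.writeLegacyFormat";

  // Made-up writer-side property name, used only for this illustration.
  static final String WRITER_KEY = "example.parquet.writeLegacyFormat.enabled";

  // Copies the user-supplied flag into the configuration handed to the
  // Parquet write support, falling back to a default when it is unset.
  static Map<String, String> buildHadoopConf(Map<String, String> writerProps) {
    Map<String, String> conf = new HashMap<>();
    String value = writerProps.getOrDefault(WRITER_KEY, "false");
    conf.put(SPARK_KEY, value);
    return conf;
  }

  public static void main(String[] args) {
    Map<String, String> props = new HashMap<>();
    props.put(WRITER_KEY, "true");
    System.out.println(buildHadoopConf(props).get(SPARK_KEY));
  }
}
```

With the flag passed through this way, a user who needs decimals written in the legacy layout can opt in per writer rather than patching the code.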

[GitHub] [hudi] hudi-bot commented on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

hudi-bot commented on pull request #3952:
URL: https://github.com/apache/hudi/pull/3952#issuecomment-963783951

## CI report:

* 4a3305e70773578729177c6ee863d52ecf31ee39 UNKNOWN

Bot commands
@hudi-bot supports the following commands:
- `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] vinothchandar commented on pull request #3952: [HUDI-2102][WIP] support hilbert curve for hudi.

vinothchandar commented on pull request #3952:
URL: https://github.com/apache/hudi/pull/3952#issuecomment-963783688

IIUC, Hilbert curves are actually more prevalent in database systems. Curious to see how they compare to z-order curves!

[GitHub] [hudi] nsivabalan commented on pull request #3873: [HUDI-2634] Improved the metadata table bootstrap for very large tables.