[GitHub] [hudi] hughfdjackson commented on issue #2265: Arrays with nulls in them result in broken parquet files

hughfdjackson commented on issue #2265: URL: https://github.com/apache/hudi/issues/2265#issuecomment-754502750 @umehrot2 Thanks for looking into this - I'm taking a bit of hope from the error message in the code you linked ;) Have you had any further thoughts on this issue? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-913) Update docs about KeyGenerator

[ https://issues.apache.org/jira/browse/HUDI-913?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

vinoyang updated HUDI-913:
--
Status: Open (was: New)

> Update docs about KeyGenerator
> --
>
> Key: HUDI-913
> URL: https://issues.apache.org/jira/browse/HUDI-913
> Project: Apache Hudi
> Issue Type: Sub-task
> Reporter: wangxianghu
> Assignee: wangxianghu
> Priority: Major
> Labels: pull-request-available
>
> update default values about `KeyGenerator`

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Updated] (HUDI-913) Update docs about KeyGenerator

[ https://issues.apache.org/jira/browse/HUDI-913?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

vinoyang updated HUDI-913:
--
Fix Version/s: 0.7.0

> Update docs about KeyGenerator
> --
>
> Key: HUDI-913
> URL: https://issues.apache.org/jira/browse/HUDI-913
> Project: Apache Hudi
> Issue Type: Sub-task
> Reporter: wangxianghu
> Assignee: wangxianghu
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.7.0
>
> update default values about `KeyGenerator`

[jira] [Closed] (HUDI-913) Update docs about KeyGenerator

[ https://issues.apache.org/jira/browse/HUDI-913?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

vinoyang closed HUDI-913.
--
Resolution: Done

> Update docs about KeyGenerator
> --
>
> Key: HUDI-913
> URL: https://issues.apache.org/jira/browse/HUDI-913
> Project: Apache Hudi
> Issue Type: Sub-task
> Reporter: wangxianghu
> Assignee: wangxianghu
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.7.0
>
> update default values about `KeyGenerator`

[GitHub] [hudi] codecov-io edited a comment on pull request #2374: [HUDI-845] Added locking capability to allow multiple writers

codecov-io edited a comment on pull request #2374:
URL: https://github.com/apache/hudi/pull/2374#issuecomment-750782300

# [Codecov](https://codecov.io/gh/apache/hudi/pull/2374?src=pr=h1) Report
> Merging [#2374](https://codecov.io/gh/apache/hudi/pull/2374?src=pr=desc) (21792c6) into [master](https://codecov.io/gh/apache/hudi/commit/698694a1571cdcc9848fc79aa34c8cbbf9662bc4?el=desc) (698694a) will **decrease** coverage by `40.19%`.
> The diff coverage is `n/a`.

```diff
@@             Coverage Diff              @@
##             master    #2374      +/-   ##
============================================
- Coverage     50.23%   10.04%   -40.20%
+ Complexity     2985       48     -2937
============================================
  Files           410       52      -358
  Lines         18398     1852    -16546
  Branches       1884      223     -1661
============================================
- Hits           9242      186     -9056
+ Misses         8398     1653     -6745
+ Partials        758       13      -745
```

| Flag | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| hudicli | `?` | `?` | |
| hudiclient | `?` | `?` | |
| hudicommon | `?` | `?` | |
| hudiflink | `?` | `?` | |
| hudihadoopmr | `?` | `?` | |
| hudisparkdatasource | `?` | `?` | |
| hudisync | `?` | `?` | |
| huditimelineservice | `?` | `?` | |
| hudiutilities | `10.04% <ø> (-59.62%)` | `0.00 <ø> (ø)` | |

Flags with carried forward coverage won't be shown. [Click here](https://docs.codecov.io/docs/carryforward-flags#carryforward-flags-in-the-pull-request-comment) to find out more.

| [Impacted Files](https://codecov.io/gh/apache/hudi/pull/2374?src=pr=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [...va/org/apache/hudi/utilities/IdentitySplitter.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL0lkZW50aXR5U3BsaXR0ZXIuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-2.00%)` | | | [...va/org/apache/hudi/utilities/schema/SchemaSet.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFTZXQuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-3.00%)` | | | [...a/org/apache/hudi/utilities/sources/RowSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUm93U291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [.../org/apache/hudi/utilities/sources/AvroSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQXZyb1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | [.../org/apache/hudi/utilities/sources/JsonSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvblNvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | [...rg/apache/hudi/utilities/sources/CsvDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQ3N2REZTU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-10.00%)` | | | 
[...g/apache/hudi/utilities/sources/JsonDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkRGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [...apache/hudi/utilities/sources/JsonKafkaSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkthZmthU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-6.00%)` | | | [...pache/hudi/utilities/sources/ParquetDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUGFycXVldERGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-5.00%)` | | | [...lities/schema/SchemaProviderWithPostProcessor.java](https://codecov.io/gh/apache/hudi/pull/2374/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFQcm92aWRlcldpdGhQb3N0UHJvY2Vzc29yLmphdmE=) |

[GitHub] [hudi] wangxianghu opened a new pull request #2405: [HUDI-1506] Fix wrong exception thrown in HoodieAvroUtils

wangxianghu opened a new pull request #2405:
URL: https://github.com/apache/hudi/pull/2405

## *Tips*
- *Thank you very much for contributing to Apache Hudi.*
- *Please review https://hudi.apache.org/contributing.html before opening a pull request.*

## What is the purpose of the pull request

*(For example: This pull request adds quick-start document.)*

## Brief change log

*(for example:)*
- *Modify AnnotationLocation checkstyle rule in checkstyle.xml*

## Verify this pull request

*(Please pick either of the following options)*

This pull request is a trivial rework / code cleanup without any test coverage.

*(or)*

This pull request is already covered by existing tests, such as *(please describe tests)*.

(or)

This change added tests and can be verified as follows:

*(example:)*
- *Added integration tests for end-to-end.*
- *Added HoodieClientWriteTest to verify the change.*
- *Manually verified the change by running a job locally.*

## Committer checklist

- [ ] Has a corresponding JIRA in PR title & commit
- [ ] Commit message is descriptive of the change
- [ ] CI is green
- [ ] Necessary doc changes done or have another open PR
- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

[jira] [Updated] (HUDI-1506) Fix wrong exception thrown in HoodieAvroUtils

[ https://issues.apache.org/jira/browse/HUDI-1506?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

wangxianghu updated HUDI-1506:
--
Description:

{code:java}
//
Caused by: org.apache.spark.SparkException: Job aborted due to stage failure:
Task 4 in stage 4.0 failed 4 times, most recent failure: Lost task 4.3 in stage
4.0 (TID 24, al-prd-dtp-data-lake-10-0-88-26, executor 4):
org.apache.hudi.exception.HoodieException: etlDatetime(Part -etlDatetime) field
not found in record. Acceptable fields were :[vin, uuid, commercialType,
businessType, vehicleNo, plateColor, vehicleColor, engineId, nextFixDate,
feePrintId, transArea, createTime, updateTime, registerDate, curVehicleNo,
reportVehicleNo, model, checkDate, certifyDateA, certifyDateB, certificate,
transAgency, transAgencyNet, transDateStart, transDateStop, insurCom, insurNum,
insurType, insurCount, insurEff, insurExp, insurCreateTime, insurUpdateTime,
curVehicleCertno, reportVehicleCertno, seats, brand, vehicleType, fuelType,
engineDisplace, photo, enginePower, gpsBrand, gpsModel, gpsImei,
gpsInstallDate, curDriverUuid, reportDrivers, curTimeOn, curTimeOff, timeFrom,
timeTo, ownerName, fixState, checkState, photoId, photoIdUrl, fareType,
wheelBase, vehicleTec, vehicleSafe, lesseeName, lesseeCode, sdcOperationType,
hivePartition, etlDatetime]
{code}
We can see that `etlDatetime` does exist; the error is actually caused by a null value.

was: Caused by: org.apache.spark.SparkException: Job aborted due to stage
failure: Task 4 in stage 4.0 failed 4 times, most recent failure: Lost task 4.3
in stage 4.0 (TID 24, al-prd-dtp-data-lake-10-0-88-26, executor 4):
org.apache.hudi.exception.HoodieException: etlDatetime(Part -etlDatetime) field
not found in record. Acceptable fields were :[vin, uuid, commercialType,
businessType, vehicleNo, plateColor, vehicleColor, engineId, nextFixDate,
feePrintId, transArea, createTime, updateTime, registerDate, curVehicleNo,
reportVehicleNo, model, checkDate, certifyDateA, certifyDateB, certificate,
transAgency, transAgencyNet, transDateStart, transDateStop, insurCom, insurNum,
insurType, insurCount, insurEff, insurExp, insurCreateTime, insurUpdateTime,
curVehicleCertno, reportVehicleCertno, seats, brand, vehicleType, fuelType,
engineDisplace, photo, enginePower, gpsBrand, gpsModel, gpsImei,
gpsInstallDate, curDriverUuid, reportDrivers, curTimeOn, curTimeOff, timeFrom,
timeTo, ownerName, fixState, checkState, photoId, photoIdUrl, fareType,
wheelBase, vehicleTec, vehicleSafe, lesseeName, lesseeCode, sdcOperationType,
hivePartition, etlDatetime]

> Fix wrong exception thrown in HoodieAvroUtils
> --
>
> Key: HUDI-1506
> URL: https://issues.apache.org/jira/browse/HUDI-1506
> Project: Apache Hudi
> Issue Type: Bug
> Reporter: wangxianghu
> Assignee: wangxianghu
> Priority: Major
>
> {code:java}
> //
> Caused by: org.apache.spark.SparkException: Job aborted due to stage failure:
> Task 4 in stage 4.0 failed 4 times, most recent failure: Lost task 4.3 in
> stage 4.0 (TID 24, al-prd-dtp-data-lake-10-0-88-26, executor 4):
> org.apache.hudi.exception.HoodieException: etlDatetime(Part -etlDatetime)
> field not found in record. Acceptable fields were :[vin, uuid,
> commercialType, businessType, vehicleNo, plateColor, vehicleColor, engineId,
> nextFixDate, feePrintId, transArea, createTime, updateTime, registerDate,
> curVehicleNo, reportVehicleNo, model, checkDate, certifyDateA, certifyDateB,
> certificate, transAgency, transAgencyNet, transDateStart, transDateStop,
> insurCom, insurNum, insurType, insurCount, insurEff, insurExp,
> insurCreateTime, insurUpdateTime, curVehicleCertno, reportVehicleCertno,
> seats, brand, vehicleType, fuelType, engineDisplace, photo, enginePower,
> gpsBrand, gpsModel, gpsImei, gpsInstallDate, curDriverUuid, reportDrivers,
> curTimeOn, curTimeOff, timeFrom, timeTo, ownerName, fixState, checkState,
> photoId, photoIdUrl, fareType, wheelBase, vehicleTec, vehicleSafe,
> lesseeName, lesseeCode, sdcOperationType, hivePartition, etlDatetime]
> {code}
> We can see that `etlDatetime` does exist; the error is actually caused by a null value.

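The HUDI-1506 report above says the field is present in the schema but holds a null value, so a "field not found" message points at the wrong problem. A minimal Java sketch of that distinction follows. It uses a plain map in place of an Avro record, and the class `FieldLookupSketch`, its `Record` type, the `describeLookup` helper, and the wording of the null message are all hypothetical; none of this is Hudi's `HoodieAvroUtils` code.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration only; this is not Hudi's HoodieAvroUtils.
public class FieldLookupSketch {

    // Stand-in for a record: the declared field names plus the values that are set.
    static final class Record {
        final Set<String> schemaFields;
        final Map<String, Object> values = new HashMap<>();

        Record(String... fieldNames) {
            this.schemaFields = new LinkedHashSet<>(Arrays.asList(fieldNames));
        }
    }

    // Reports a missing field and a null value as two different problems.
    static String describeLookup(Record record, String field) {
        if (!record.schemaFields.contains(field)) {
            return field + " field not found in record. Acceptable fields were: "
                + record.schemaFields;
        }
        Object value = record.values.get(field);
        if (value == null) {
            return "The value of " + field + " can not be null";
        }
        return String.valueOf(value);
    }

    // Small sample: etlDatetime is declared in the schema but never given a value.
    static Record sample() {
        Record record = new Record("vin", "hivePartition", "etlDatetime");
        record.values.put("vin", "example-vin");
        record.values.put("hivePartition", "example-partition");
        return record;
    }

    public static void main(String[] args) {
        Record record = sample();
        // Declared but null: reported as a null value, not as a missing field.
        System.out.println(describeLookup(record, "etlDatetime"));
        // Not declared at all: reported as a missing field.
        System.out.println(describeLookup(record, "notDeclared"));
    }
}
```

Checking schema membership before reading the value keeps the two failure modes apart, which is the behaviour the issue title asks for.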

[GitHub] [hudi] wangxianghu commented on pull request #2405: [HUDI-1506] Fix wrong exception thrown in HoodieAvroUtils

wangxianghu commented on pull request #2405: URL: https://github.com/apache/hudi/pull/2405#issuecomment-754644106 @yanghua please take a look when free

[GitHub] [hudi] wangxianghu commented on pull request #2404: [MINOR] Add Jira URL and Mailing List

wangxianghu commented on pull request #2404: URL: https://github.com/apache/hudi/pull/2404#issuecomment-754643863 @yanghua please take a look when free

[GitHub] [hudi] SureshK-T2S opened a new issue #2406: [SUPPORT] Deltastreamer - Property hoodie.datasource.write.partitionpath.field not found

SureshK-T2S opened a new issue #2406:
URL: https://github.com/apache/hudi/issues/2406

I am attempting to create a hudi table using a parquet file on S3. The motivation for this approach is based on this Hudi blog:
https://cwiki.apache.org/confluence/display/HUDI/2020/01/20/Change+Capture+Using+AWS+Database+Migration+Service+and+Hudi

To first attempt usage of deltastreamer to ingest a full initial batch load, I attempted to use parquet files used in an aws blog at s3://athena-examples-us-west-2/elb/parquet/year=2015/month=1/day=1/
https://aws.amazon.com/blogs/aws/new-insert-update-delete-data-on-s3-with-amazon-emr-and-apache-hudi/

At first I used the spark shell on EMR to load the data into a dataframe and view it, this happens with no issues.

I then attempted to use Hudi Deltastreamer as per my understanding of the documentation, however I ran into a couple of issues.

Steps to reproduce the behavior:

1. Ran the following:
```
spark-submit --class org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer \
  --packages org.apache.hudi:hudi-spark-bundle_2.11:0.6.0,org.apache.spark:spark-avro_2.11:2.4.4\
  --master yarn --deploy-mode client \
  /usr/lib/hudi/hudi-utilities-bundle.jar --table-type MERGE_ON_READ \
  --source-ordering-field request_timestamp \
  --source-class org.apache.hudi.utilities.sources.ParquetDFSSource \
  --target-base-path s3://mysqlcdc-stream-prod/hudi_tryout/hudi_aws_test --target-table hudi_aws_test \
  --hoodie-conf hoodie.datasource.write.recordkey.field=request_timestamp,hoodie.deltastreamer.source.dfs.root=s3://athena-examples-us-west-2/elb/parquet/year=2015/month=1/day=1,hoodie.datasource.write.partitionpath.field=request_timestamp:TIMESTAMP
```

Stacktrace:
```
Exception in thread "main" java.io.IOException: Could not load key generator class org.apache.hudi.keygen.SimpleKeyGenerator
	at org.apache.hudi.DataSourceUtils.createKeyGenerator(DataSourceUtils.java:94)
	at org.apache.hudi.utilities.deltastreamer.DeltaSync.<init>(DeltaSync.java:190)
	at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer$DeltaSyncService.<init>(HoodieDeltaStreamer.java:552)
	at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.<init>(HoodieDeltaStreamer.java:129)
	at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.<init>(HoodieDeltaStreamer.java:99)
	at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.main(HoodieDeltaStreamer.java:464)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
	at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:853)
	at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)
	at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)
	at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)
	at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:928)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:937)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: org.apache.hudi.exception.HoodieException: Unable to instantiate class
	at org.apache.hudi.common.util.ReflectionUtils.loadClass(ReflectionUtils.java:89)
	at org.apache.hudi.common.util.ReflectionUtils.loadClass(ReflectionUtils.java:98)
	at org.apache.hudi.DataSourceUtils.createKeyGenerator(DataSourceUtils.java:92)
	... 17 more
Caused by: java.lang.reflect.InvocationTargetException
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
	at org.apache.hudi.common.util.ReflectionUtils.loadClass(ReflectionUtils.java:87)
	... 19 more
Caused by: java.lang.IllegalArgumentException: Property hoodie.datasource.write.partitionpath.field not found
	at org.apache.hudi.common.config.TypedProperties.checkKey(TypedProperties.java:42)
	at org.apache.hudi.common.config.TypedProperties.getString(TypedProperties.java:47)
	at org.apache.hudi.keygen.SimpleKeyGenerator.<init>(SimpleKeyGenerator.java:36)
	... 24 more
```
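The thread does not establish the cause of the error in issue #2406. One reading that fits the trace is sketched below in Java, under a stated assumption: each `--hoodie-conf` value is taken as a single key=value pair and is not split on commas. That assumption is not confirmed anywhere in this thread, and the class `HoodieConfSketch` and its `parse` method are hypothetical, not HoodieDeltaStreamer's actual argument handling. Under it, the comma-joined argument from the report defines only the record key property, and the partition path key is never set.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; this is not HoodieDeltaStreamer's argument handling.
public class HoodieConfSketch {

    // Assumption: each --hoodie-conf value is one key=value pair, split at the first '='.
    // Commas are not treated as separators.
    static Map<String, String> parse(List<String> confArgs) {
        Map<String, String> props = new LinkedHashMap<>();
        for (String arg : confArgs) {
            int eq = arg.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("Expected key=value but got: " + arg);
            }
            props.put(arg.substring(0, eq), arg.substring(eq + 1));
        }
        return props;
    }

    public static void main(String[] args) {
        // The single comma-joined value from the reported spark-submit command.
        String joined = "hoodie.datasource.write.recordkey.field=request_timestamp,"
            + "hoodie.deltastreamer.source.dfs.root="
            + "s3://athena-examples-us-west-2/elb/parquet/year=2015/month=1/day=1,"
            + "hoodie.datasource.write.partitionpath.field=request_timestamp:TIMESTAMP";
        Map<String, String> props = parse(Collections.singletonList(joined));
        // Only the first key is defined; the rest of the string becomes its value.
        System.out.println(props.keySet());
        System.out.println(props.containsKey("hoodie.datasource.write.partitionpath.field"));
    }
}
```

If the assumption holds, passing each property through its own `--hoodie-conf` flag would define all three keys. Whether that resolves the reported error is not confirmed here.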

[GitHub] [hudi] codecov-io commented on pull request #2404: [MINOR] Add Jira URL and Mailing List

codecov-io commented on pull request #2404:
URL: https://github.com/apache/hudi/pull/2404#issuecomment-754638146

# [Codecov](https://codecov.io/gh/apache/hudi/pull/2404?src=pr=h1) Report
> Merging [#2404](https://codecov.io/gh/apache/hudi/pull/2404?src=pr=desc) (fdeb851) into [master](https://codecov.io/gh/apache/hudi/commit/698694a1571cdcc9848fc79aa34c8cbbf9662bc4?el=desc) (698694a) will **decrease** coverage by `40.19%`.
> The diff coverage is `n/a`.

```diff
@@             Coverage Diff              @@
##             master    #2404      +/-   ##
============================================
- Coverage     50.23%   10.04%   -40.20%
+ Complexity     2985       48     -2937
============================================
  Files           410       52      -358
  Lines         18398     1852    -16546
  Branches       1884      223     -1661
============================================
- Hits           9242      186     -9056
+ Misses         8398     1653     -6745
+ Partials        758       13      -745
```

| Flag | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| hudicli | `?` | `?` | |
| hudiclient | `?` | `?` | |
| hudicommon | `?` | `?` | |
| hudiflink | `?` | `?` | |
| hudihadoopmr | `?` | `?` | |
| hudisparkdatasource | `?` | `?` | |
| hudisync | `?` | `?` | |
| huditimelineservice | `?` | `?` | |
| hudiutilities | `10.04% <ø> (-59.62%)` | `0.00 <ø> (ø)` | |

Flags with carried forward coverage won't be shown. [Click here](https://docs.codecov.io/docs/carryforward-flags#carryforward-flags-in-the-pull-request-comment) to find out more.

| [Impacted Files](https://codecov.io/gh/apache/hudi/pull/2404?src=pr=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [...va/org/apache/hudi/utilities/IdentitySplitter.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL0lkZW50aXR5U3BsaXR0ZXIuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-2.00%)` | | | [...va/org/apache/hudi/utilities/schema/SchemaSet.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFTZXQuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-3.00%)` | | | [...a/org/apache/hudi/utilities/sources/RowSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUm93U291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [.../org/apache/hudi/utilities/sources/AvroSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQXZyb1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | [.../org/apache/hudi/utilities/sources/JsonSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvblNvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | [...rg/apache/hudi/utilities/sources/CsvDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQ3N2REZTU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-10.00%)` | | | 
[...g/apache/hudi/utilities/sources/JsonDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkRGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [...apache/hudi/utilities/sources/JsonKafkaSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkthZmthU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-6.00%)` | | | [...pache/hudi/utilities/sources/ParquetDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUGFycXVldERGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-5.00%)` | | | [...lities/schema/SchemaProviderWithPostProcessor.java](https://codecov.io/gh/apache/hudi/pull/2404/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFQcm92aWRlcldpdGhQb3N0UHJvY2Vzc29yLmphdmE=) | `0.00%

[GitHub] [hudi] codecov-io edited a comment on pull request #2379: [HUDI-1399] support a independent clustering spark job to asynchronously clustering

codecov-io edited a comment on pull request #2379:
URL: https://github.com/apache/hudi/pull/2379#issuecomment-751244130

# [Codecov](https://codecov.io/gh/apache/hudi/pull/2379?src=pr=h1) Report
> Merging [#2379](https://codecov.io/gh/apache/hudi/pull/2379?src=pr=desc) (70ffbba) into [master](https://codecov.io/gh/apache/hudi/commit/6cdf59d92b1c260abae82bba7d30d8ac280bddbf?el=desc) (6cdf59d) will **decrease** coverage by `42.58%`.
> The diff coverage is `0.00%`.

```diff
@@             Coverage Diff              @@
##             master    #2379      +/-   ##
============================================
- Coverage     52.23%    9.65%   -42.59%
+ Complexity     2662       48     -2614
============================================
  Files           335       53      -282
  Lines         14981     1927    -13054
  Branches       1506      231     -1275
============================================
- Hits           7825      186     -7639
+ Misses         6533     1728     -4805
+ Partials        623       13      -610
```

| Flag | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| hudicli | `?` | `?` | |
| hudiclient | `?` | `?` | |
| hudicommon | `?` | `?` | |
| hudihadoopmr | `?` | `?` | |
| huditimelineservice | `?` | `?` | |
| hudiutilities | `9.65% <0.00%> (-60.01%)` | `0.00 <0.00> (ø)` | |

Flags with carried forward coverage won't be shown. [Click here](https://docs.codecov.io/docs/carryforward-flags#carryforward-flags-in-the-pull-request-comment) to find out more.

| [Impacted Files](https://codecov.io/gh/apache/hudi/pull/2379?src=pr=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [...org/apache/hudi/utilities/HoodieClusteringJob.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL0hvb2RpZUNsdXN0ZXJpbmdKb2IuamF2YQ==) | `0.00% <0.00%> (ø)` | `0.00 <0.00> (?)` | | | [...apache/hudi/utilities/deltastreamer/DeltaSync.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL2RlbHRhc3RyZWFtZXIvRGVsdGFTeW5jLmphdmE=) | `0.00% <0.00%> (-70.76%)` | `0.00 <0.00> (-49.00)` | | | [...va/org/apache/hudi/utilities/IdentitySplitter.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL0lkZW50aXR5U3BsaXR0ZXIuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-2.00%)` | | | [...va/org/apache/hudi/utilities/schema/SchemaSet.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFTZXQuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-3.00%)` | | | [...a/org/apache/hudi/utilities/sources/RowSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUm93U291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [.../org/apache/hudi/utilities/sources/AvroSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQXZyb1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | 
[.../org/apache/hudi/utilities/sources/JsonSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvblNvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | | | [...rg/apache/hudi/utilities/sources/CsvDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQ3N2REZTU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-10.00%)` | | | [...g/apache/hudi/utilities/sources/JsonDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkRGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | | | [...apache/hudi/utilities/sources/JsonKafkaSource.java](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkthZmthU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-6.00%)` | | | ... and [312 more](https://codecov.io/gh/apache/hudi/pull/2379/diff?src=pr=tree-more)

[jira] [Created] (HUDI-1506) Fix wrong exception thrown in HoodieAvroUtils

wangxianghu created HUDI-1506:
--
Summary: Fix wrong exception thrown in HoodieAvroUtils
Key: HUDI-1506
URL: https://issues.apache.org/jira/browse/HUDI-1506
Project: Apache Hudi
Issue Type: Bug
Reporter: wangxianghu
Assignee: wangxianghu

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 4 in stage 4.0 failed 4 times, most recent failure: Lost task 4.3 in stage 4.0 (TID 24, al-prd-dtp-data-lake-10-0-88-26, executor 4): org.apache.hudi.exception.HoodieException: etlDatetime(Part -etlDatetime) field not found in record. Acceptable fields were :[vin, uuid, commercialType, businessType, vehicleNo, plateColor, vehicleColor, engineId, nextFixDate, feePrintId, transArea, createTime, updateTime, registerDate, curVehicleNo, reportVehicleNo, model, checkDate, certifyDateA, certifyDateB, certificate, transAgency, transAgencyNet, transDateStart, transDateStop, insurCom, insurNum, insurType, insurCount, insurEff, insurExp, insurCreateTime, insurUpdateTime, curVehicleCertno, reportVehicleCertno, seats, brand, vehicleType, fuelType, engineDisplace, photo, enginePower, gpsBrand, gpsModel, gpsImei, gpsInstallDate, curDriverUuid, reportDrivers, curTimeOn, curTimeOff, timeFrom, timeTo, ownerName, fixState, checkState, photoId, photoIdUrl, fareType, wheelBase, vehicleTec, vehicleSafe, lesseeName, lesseeCode, sdcOperationType, hivePartition, etlDatetime]

[jira] [Updated] (HUDI-1506) Fix wrong exception thrown in HoodieAvroUtils

[ https://issues.apache.org/jira/browse/HUDI-1506?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HUDI-1506:
--
Labels: pull-request-available (was: )

> Fix wrong exception thrown in HoodieAvroUtils
> --
>
> Key: HUDI-1506
> URL: https://issues.apache.org/jira/browse/HUDI-1506
> Project: Apache Hudi
> Issue Type: Bug
> Reporter: wangxianghu
> Assignee: wangxianghu
> Priority: Major
> Labels: pull-request-available
>
> {code:java}
> //
> Caused by: org.apache.spark.SparkException: Job aborted due to stage failure:
> Task 4 in stage 4.0 failed 4 times, most recent failure: Lost task 4.3 in
> stage 4.0 (TID 24, al-prd-dtp-data-lake-10-0-88-26, executor 4):
> org.apache.hudi.exception.HoodieException: etlDatetime(Part -etlDatetime)
> field not found in record. Acceptable fields were :[vin, uuid,
> commercialType, businessType, vehicleNo, plateColor, vehicleColor, engineId,
> nextFixDate, feePrintId, transArea, createTime, updateTime, registerDate,
> curVehicleNo, reportVehicleNo, model, checkDate, certifyDateA, certifyDateB,
> certificate, transAgency, transAgencyNet, transDateStart, transDateStop,
> insurCom, insurNum, insurType, insurCount, insurEff, insurExp,
> insurCreateTime, insurUpdateTime, curVehicleCertno, reportVehicleCertno,
> seats, brand, vehicleType, fuelType, engineDisplace, photo, enginePower,
> gpsBrand, gpsModel, gpsImei, gpsInstallDate, curDriverUuid, reportDrivers,
> curTimeOn, curTimeOff, timeFrom, timeTo, ownerName, fixState, checkState,
> photoId, photoIdUrl, fareType, wheelBase, vehicleTec, vehicleSafe,
> lesseeName, lesseeCode, sdcOperationType, hivePartition, etlDatetime]
> {code}
> We can see that `etlDatetime` does exist; the error is actually caused by a null value.


[GitHub] [hudi] wangxianghu opened a new pull request #2404: [MINOR] Add Jira URL and Mailing List

wangxianghu opened a new pull request #2404:
URL: https://github.com/apache/hudi/pull/2404

## *Tips*
- *Thank you very much for contributing to Apache Hudi.*
- *Please review https://hudi.apache.org/contributing.html before opening a pull request.*

## What is the purpose of the pull request

*(For example: This pull request adds quick-start document.)*

## Brief change log

*(for example:)*
- *Modify AnnotationLocation checkstyle rule in checkstyle.xml*

## Verify this pull request

*(Please pick either of the following options)*

This pull request is a trivial rework / code cleanup without any test coverage.

*(or)*

This pull request is already covered by existing tests, such as *(please describe tests)*.

(or)

This change added tests and can be verified as follows:

*(example:)*
- *Added integration tests for end-to-end.*
- *Added HoodieClientWriteTest to verify the change.*
- *Manually verified the change by running a job locally.*

## Committer checklist

- [ ] Has a corresponding JIRA in PR title & commit
- [ ] Commit message is descriptive of the change
- [ ] CI is green
- [ ] Necessary doc changes done or have another open PR
- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

[GitHub] [hudi] yanghua merged pull request #2403: [HUDI-913] Update docs about KeyGenerator

yanghua merged pull request #2403: URL: https://github.com/apache/hudi/pull/2403

[hudi] branch asf-site updated: [HUDI-913] Update docs about KeyGenerator (#2403)

This is an automated email from the ASF dual-hosted git repository.

vinoyang pushed a commit to branch asf-site

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/asf-site by this push:

new ee00bd6 [HUDI-913] Update docs about KeyGenerator (#2403)

ee00bd6 is described below

commit ee00bd6689f151b7a6a1a197aced8699d373dd79

Author: wangxianghu

AuthorDate: Tue Jan 5 18:21:08 2021 +0800

[HUDI-913] Update docs about KeyGenerator (#2403)

---

docs/_docs/2_4_configurations.cn.md | 2 +-

docs/_docs/2_4_configurations.md| 2 +-

2 files changed, 2 insertions(+), 2 deletions(-)

diff --git a/docs/_docs/2_4_configurations.cn.md

b/docs/_docs/2_4_configurations.cn.md

index 9436971..07e8c02 100644

--- a/docs/_docs/2_4_configurations.cn.md

+++ b/docs/_docs/2_4_configurations.cn.md

@@ -83,7 +83,7 @@ inputDF.write()

如果设置为true,则生成基于Hive格式的partition目录:=

KEYGENERATOR_CLASS_OPT_KEY {#KEYGENERATOR_CLASS_OPT_KEY}

- 属性:`hoodie.datasource.write.keygenerator.class`,

默认值:`org.apache.hudi.SimpleKeyGenerator`

+ 属性:`hoodie.datasource.write.keygenerator.class`,

默认值:`org.apache.hudi.keygen.SimpleKeyGenerator`

键生成器类,实现从输入的`Row`对象中提取键

COMMIT_METADATA_KEYPREFIX_OPT_KEY {#COMMIT_METADATA_KEYPREFIX_OPT_KEY}

diff --git a/docs/_docs/2_4_configurations.md b/docs/_docs/2_4_configurations.md

index aa472dd..244688b 100644

--- a/docs/_docs/2_4_configurations.md

+++ b/docs/_docs/2_4_configurations.md

@@ -81,7 +81,7 @@ Actual value ontained by invoking .toString()

When set to true, partition folder names follow the

format of Hive partitions: =

KEYGENERATOR_CLASS_OPT_KEY {#KEYGENERATOR_CLASS_OPT_KEY}

- Property: `hoodie.datasource.write.keygenerator.class`, Default:

`org.apache.hudi.SimpleKeyGenerator`

+ Property: `hoodie.datasource.write.keygenerator.class`, Default:

`org.apache.hudi.keygen.SimpleKeyGenerator`

Key generator class, that implements will extract

the key out of incoming `Row` object

COMMIT_METADATA_KEYPREFIX_OPT_KEY {#COMMIT_METADATA_KEYPREFIX_OPT_KEY}

[GitHub] [hudi] liujinhui1994 closed pull request #2386: [HUDI-1160] Support update partial fields for CoW table

liujinhui1994 closed pull request #2386: URL: https://github.com/apache/hudi/pull/2386

[hudi] branch asf-site updated: Travis CI build asf-site

This is an automated email from the ASF dual-hosted git repository.

vinoth pushed a commit to branch asf-site

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/asf-site by this push:

new f7ca68a Travis CI build asf-site

f7ca68a is described below

commit f7ca68af86ff254adf064bd4becb4aca02a001cf

Author: CI

AuthorDate: Tue Jan 5 12:20:10 2021 +

Travis CI build asf-site

---

content/cn/docs/configurations.html | 2 +-

content/docs/configurations.html | 2 +-

2 files changed, 2 insertions(+), 2 deletions(-)

diff --git a/content/cn/docs/configurations.html b/content/cn/docs/configurations.html

index 1456a71..57aa993 100644

--- a/content/cn/docs/configurations.html

+++ b/content/cn/docs/configurations.html

@@ -450,7 +450,7 @@

如果设置为true,则生成基于Hive格式的partition目录:=/span

KEYGENERATOR_CLASS_OPT_KEY

-属性:hoodie.datasource.write.keygenerator.class, 默认值:org.apache.hudi.SimpleKeyGenerator

+属性:hoodie.datasource.write.keygenerator.class, 默认值:org.apache.hudi.keygen.SimpleKeyGenerator

键生成器类,实现从输入的Row对象中提取键

COMMIT_METADATA_KEYPREFIX_OPT_KEY

diff --git a/content/docs/configurations.html b/content/docs/configurations.html

index c274063..ef5f099 100644

--- a/content/docs/configurations.html

+++ b/content/docs/configurations.html

@@ -459,7 +459,7 @@ Actual value ontained by invoking .toString()

When set to true, partition folder names follow the format of Hive partitions: =/span

KEYGENERATOR_CLASS_OPT_KEY

-Property: hoodie.datasource.write.keygenerator.class, Default: org.apache.hudi.SimpleKeyGenerator

+Property: hoodie.datasource.write.keygenerator.class, Default: org.apache.hudi.keygen.SimpleKeyGenerator

Key generator class, that implements will extract the key out of incoming Row object

COMMIT_METADATA_KEYPREFIX_OPT_KEY

[GitHub] [hudi] codecov-io commented on pull request #2405: [HUDI-1506] Fix wrong exception thrown in HoodieAvroUtils

codecov-io commented on pull request #2405:

URL: https://github.com/apache/hudi/pull/2405#issuecomment-754665459

# [Codecov](https://codecov.io/gh/apache/hudi/pull/2405?src=pr=h1) Report

> Merging [#2405](https://codecov.io/gh/apache/hudi/pull/2405?src=pr=desc) (b51e61e) into [master](https://codecov.io/gh/apache/hudi/commit/698694a1571cdcc9848fc79aa34c8cbbf9662bc4?el=desc) (698694a) will **decrease** coverage by `40.19%`.
> The diff coverage is `n/a`.

[](https://codecov.io/gh/apache/hudi/pull/2405?src=pr=tree)

```diff
@@             Coverage Diff              @@
##             master    #2405       +/-   ##
=============================================
- Coverage     50.23%   10.04%   -40.20%
+ Complexity     2985       48     -2937
=============================================
  Files           410       52      -358
  Lines         18398     1852    -16546
  Branches       1884      223     -1661
=============================================
- Hits           9242      186     -9056
+ Misses         8398     1653     -6745
+ Partials        758       13      -745
```

| Flag | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| hudicli | `?` | `?` | |
| hudiclient | `?` | `?` | |
| hudicommon | `?` | `?` | |
| hudiflink | `?` | `?` | |
| hudihadoopmr | `?` | `?` | |
| hudisparkdatasource | `?` | `?` | |
| hudisync | `?` | `?` | |
| huditimelineservice | `?` | `?` | |
| hudiutilities | `10.04% <ø> (-59.62%)` | `0.00 <ø> (ø)` | |

Flags with carried forward coverage won't be shown. [Click here](https://docs.codecov.io/docs/carryforward-flags#carryforward-flags-in-the-pull-request-comment) to find out more.
| [Impacted Files](https://codecov.io/gh/apache/hudi/pull/2405?src=pr=tree) | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| [...va/org/apache/hudi/utilities/IdentitySplitter.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL0lkZW50aXR5U3BsaXR0ZXIuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-2.00%)` | |
| [...va/org/apache/hudi/utilities/schema/SchemaSet.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFTZXQuamF2YQ==) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-3.00%)` | |
| [...a/org/apache/hudi/utilities/sources/RowSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUm93U291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | |
| [.../org/apache/hudi/utilities/sources/AvroSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQXZyb1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | |
| [.../org/apache/hudi/utilities/sources/JsonSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvblNvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-1.00%)` | |
| [...rg/apache/hudi/utilities/sources/CsvDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvQ3N2REZTU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-10.00%)` | |
| [...g/apache/hudi/utilities/sources/JsonDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkRGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-4.00%)` | |
| [...apache/hudi/utilities/sources/JsonKafkaSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvSnNvbkthZmthU291cmNlLmphdmE=) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-6.00%)` | |
| [...pache/hudi/utilities/sources/ParquetDFSSource.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvUGFycXVldERGU1NvdXJjZS5qYXZh) | `0.00% <0.00%> (-100.00%)` | `0.00% <0.00%> (-5.00%)` | |
| [...lities/schema/SchemaProviderWithPostProcessor.java](https://codecov.io/gh/apache/hudi/pull/2405/diff?src=pr=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFQcm92aWRlcldpdGhQb3N0UHJvY2Vzc29yLmphdmE=) | `0.00%

[GitHub] [hudi] vinothchandar commented on a change in pull request #2359: [HUDI-1486] Remove inflight rollback in hoodie writer

vinothchandar commented on a change in pull request #2359:

URL: https://github.com/apache/hudi/pull/2359#discussion_r551618729

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##

@@ -232,17 +250,18 @@ void emitCommitMetrics(String instantTime,

HoodieCommitMetadata metadata, String

* Main API to run bootstrap to hudi.

*/

public void bootstrap(Option> extraMetadata) {

-if (rollbackPending) {

- rollBackInflightBootstrap();

-}

+// TODO : MULTIWRITER -> check if failed bootstrap files can be cleaned

later

HoodieTable table =

getTableAndInitCtx(WriteOperationType.UPSERT,

HoodieTimeline.METADATA_BOOTSTRAP_INSTANT_TS);

+if (isEager(config.getFailedWritesCleanPolicy())) {

Review comment:

`config` is a member variable, right? Why do we pass it in to the checks?

Can we just do `eagerCleanFailedWrites()`, which does the if block and the call

to `rollbackFailedBootstrap()`?
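The refactor suggested in this comment could look roughly like the sketch below. It is only an illustration under stated assumptions: `EagerCleanSketch`, the `CleanPolicy` enum, and the rollback counter are hypothetical stand-ins, not the actual write client or config classes in the PR. The idea shown is that the policy check reads the member directly and that the if block plus the rollback call live in one helper.

```java
/** Hypothetical sketch of the suggested refactor; not the actual Hudi write client. */
final class EagerCleanSketch {
  /** Stand-in (assumption) for the failed-writes clean policy read from the write config. */
  enum CleanPolicy { EAGER, LAZY }

  private final CleanPolicy policy; // plays the role of the `config` member variable
  private int rollbacks = 0;        // counts rollbacks so the behavior is observable

  EagerCleanSketch(CleanPolicy policy) {
    this.policy = policy;
  }

  /** Reads the member directly, so callers do not pass the config in. */
  private boolean isEager() {
    return policy == CleanPolicy.EAGER;
  }

  /** Wraps the if block and the rollback call in one place. */
  private void eagerCleanFailedWrites() {
    if (isEager()) {
      rollbackFailedBootstrap();
    }
  }

  private void rollbackFailedBootstrap() {
    rollbacks++; // placeholder for the real rollback work
  }

  void bootstrap() {
    eagerCleanFailedWrites();
    // ... the actual bootstrap work would run here ...
  }

  int rollbackCount() {
    return rollbacks;
  }

  public static void main(String[] args) {
    EagerCleanSketch eager = new EagerCleanSketch(CleanPolicy.EAGER);
    eager.bootstrap();
    System.out.println("rollbacks with EAGER policy: " + eager.rollbackCount());
  }
}
```

With this shape, `bootstrap()` contains a single call and the policy decision is not repeated at each call site.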

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieClient.java

##

@@ -70,6 +72,8 @@ protected AbstractHoodieClient(HoodieEngineContext context,

HoodieWriteConfig cl

this.config = clientConfig;

this.timelineServer = timelineServer;

shouldStopTimelineServer = !timelineServer.isPresent();

+this.heartbeatClient = new HoodieHeartbeatClient(this.fs, this.basePath,

+clientConfig.getHoodieClientHeartbeatIntervalInMs(),

clientConfig.getHoodieClientHeartbeatTolerableMisses());

Review comment:

rename to be shorter? drop the `HoodieClient` or `Hoodie` part in the

names?

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##

@@ -707,24 +739,51 @@ public void rollbackInflightCompaction(HoodieInstant

inflightInstant, HoodieTabl

}

/**

- * Cleanup all pending commits.

+ * Rollback all failed commits.

*/

- private void rollbackPendingCommits() {

+ public void rollbackFailedCommits() {

HoodieTable table = createTable(config, hadoopConf);

-HoodieTimeline inflightTimeline =

table.getMetaClient().getCommitsTimeline().filterPendingExcludingCompaction();

-List commits =

inflightTimeline.getReverseOrderedInstants().map(HoodieInstant::getTimestamp)

-.collect(Collectors.toList());

-for (String commit : commits) {

- if (HoodieTimeline.compareTimestamps(commit,

HoodieTimeline.LESSER_THAN_OR_EQUALS,

+List instantsToRollback = getInstantsToRollback(table);

+for (String instant : instantsToRollback) {

+ if (HoodieTimeline.compareTimestamps(instant,

HoodieTimeline.LESSER_THAN_OR_EQUALS,

HoodieTimeline.FULL_BOOTSTRAP_INSTANT_TS)) {

-rollBackInflightBootstrap();

+rollbackFailedBootstrap();

break;

} else {

-rollback(commit);

+rollback(instant);

+ }

+ // Delete the heartbeats from DFS

+ if (!isEager(config.getFailedWritesCleanPolicy())) {

+try {

+ this.heartbeatClient.delete(instant);

+} catch (IOException io) {

+ LOG.error(io);

+}

}

}

}

+ private List getInstantsToRollback(HoodieTable table) {

+if (isEager(config.getFailedWritesCleanPolicy())) {

+ HoodieTimeline inflightTimeline =

table.getMetaClient().getCommitsTimeline().filterPendingExcludingCompaction();

+ return

inflightTimeline.getReverseOrderedInstants().map(HoodieInstant::getTimestamp)

+ .collect(Collectors.toList());

+} else if (!isEager(config.getFailedWritesCleanPolicy())) {

+ return table.getMetaClient().getActiveTimeline()

+

.getCommitsTimeline().filterInflights().getReverseOrderedInstants().filter(instant

-> {

+try {

+ return

heartbeatClient.isHeartbeatExpired(instant.getTimestamp(),

System.currentTimeMillis());

Review comment:

drop the second argument to `isHeartbeatExpired()` and have it get the

current time epoch inside?
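A minimal sketch of this suggestion follows. It is not the PR's `HoodieHeartbeatClient`: the class `HeartbeatTracker`, the in-memory map, the injected clock, and the expiry rule (elapsed time greater than interval times tolerable misses) are all assumptions made for illustration. The single-argument `isHeartbeatExpired()` reads the current time itself, and the clock is injectable so the check stays testable.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

/** Hypothetical sketch; not the actual HoodieHeartbeatClient API. */
final class HeartbeatTracker {
  private final long intervalMs;
  private final int tolerableMisses;
  private final LongSupplier clock; // source of "now" in epoch milliseconds
  private final Map<String, Long> lastBeat = new HashMap<>();

  HeartbeatTracker(long intervalMs, int tolerableMisses, LongSupplier clock) {
    this.intervalMs = intervalMs;
    this.tolerableMisses = tolerableMisses;
    this.clock = clock;
  }

  /** Production-style constructor: uses the system clock. */
  HeartbeatTracker(long intervalMs, int tolerableMisses) {
    this(intervalMs, tolerableMisses, System::currentTimeMillis);
  }

  /** Records a heartbeat for the given instant at the current time. */
  void beat(String instant) {
    lastBeat.put(instant, clock.getAsLong());
  }

  /** Callers pass only the instant; the current time is read inside. */
  boolean isHeartbeatExpired(String instant) {
    Long last = lastBeat.get(instant);
    if (last == null) {
      return true; // assumption: an instant with no recorded heartbeat counts as expired
    }
    return clock.getAsLong() - last > intervalMs * tolerableMisses;
  }

  public static void main(String[] args) {
    HeartbeatTracker tracker = new HeartbeatTracker(1000L, 2);
    tracker.beat("20210105120000");
    System.out.println(tracker.isHeartbeatExpired("20210105120000"));
  }
}
```

Passing a fake clock in tests replaces the need to hand `System.currentTimeMillis()` in at every call site.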

##

File path:

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##

@@ -707,24 +739,51 @@ public void rollbackInflightCompaction(HoodieInstant

inflightInstant, HoodieTabl

}

/**

- * Cleanup all pending commits.

+ * Rollback all failed commits.

*/

- private void rollbackPendingCommits() {

+ public void rollbackFailedCommits() {

HoodieTable table = createTable(config, hadoopConf);

-HoodieTimeline inflightTimeline =

table.getMetaClient().getCommitsTimeline().filterPendingExcludingCompaction();

-List commits =

inflightTimeline.getReverseOrderedInstants().map(HoodieInstant::getTimestamp)

-.collect(Collectors.toList());

-for (String commit : commits) {

- if (HoodieTimeline.compareTimestamps(commit,

HoodieTimeline.LESSER_THAN_OR_EQUALS,

+List instantsToRollback = getInstantsToRollback(table);

+

[jira] [Updated] (HUDI-1479) Replace FSUtils.getAllPartitionPaths() with HoodieTableMetadata#getAllPartitionPaths()

[

https://issues.apache.org/jira/browse/HUDI-1479?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vinoth Chandar updated HUDI-1479:

-

Description:

*Change #1*

{code:java}

public static List getAllPartitionPaths(FileSystem fs, String

basePathStr, boolean useFileListingFromMetadata, boolean verifyListings,

boolean

assumeDatePartitioning) throws IOException {

if (assumeDatePartitioning) {

return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);

} else {

HoodieTableMetadata tableMetadata =

HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",

useFileListingFromMetadata,

verifyListings, false, false);

return tableMetadata.getAllPartitionPaths();

}

}

{code}

is the current implementation, where `HoodieTableMetadata.create()` always

creates `HoodieBackedTableMetadata`. Instead we should create

`FileSystemBackedTableMetadata` if useFileListingFromMetadata==false anyways
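The selection described in Change #1 can be sketched as a small factory. This is an illustration only: the `TableMetadata` interface and the two nested classes are hypothetical stand-ins for `HoodieTableMetadata`, `HoodieBackedTableMetadata`, and `FileSystemBackedTableMetadata`, and the returned partition paths are made-up sample values.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of the proposed factory behavior; not the Hudi API. */
final class TableMetadataFactorySketch {

  /** Stand-in for the table metadata abstraction. */
  interface TableMetadata {
    List<String> getAllPartitionPaths();
  }

  /** Stand-in for the implementation that lists the file system directly. */
  static final class FileSystemBacked implements TableMetadata {
    @Override
    public List<String> getAllPartitionPaths() {
      return Arrays.asList("2021/01/04", "2021/01/05"); // sample data only
    }
  }

  /** Stand-in for the implementation that reads the metadata table. */
  static final class MetadataTableBacked implements TableMetadata {
    @Override
    public List<String> getAllPartitionPaths() {
      return Arrays.asList("2021/01/04", "2021/01/05"); // sample data only
    }
  }

  /** Picks the implementation from the flag instead of always building the metadata-table one. */
  static TableMetadata create(boolean useFileListingFromMetadata) {
    return useFileListingFromMetadata ? new MetadataTableBacked() : new FileSystemBacked();
  }

  public static void main(String[] args) {
    System.out.println(create(false).getClass().getSimpleName());
    System.out.println(create(true).getClass().getSimpleName());
  }
}
```

Callers keep asking the factory for `getAllPartitionPaths()` and never need to know which implementation answered.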

*Change #2*

On master, we have the `HoodieEngineContext` abstraction, which allows for

parallel execution. We should consider moving it to `hudi-common` (it's doable)

and then have `FileSystemBackedTableMetadata` redone such that it can do

parallelized listings using the passed-in engine, either

HoodieSparkEngineContext or HoodieJavaEngineContext.

HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

code. We should take one pass and see if that can be redone a bit as well.

*Change #3*

There are places, where we call fs.listStatus() directly. We should make them

go through the HoodieTable.getMetadata()... route as well. Essentially, all

listing should be concentrated to `FileSystemBackedTableMetadata`

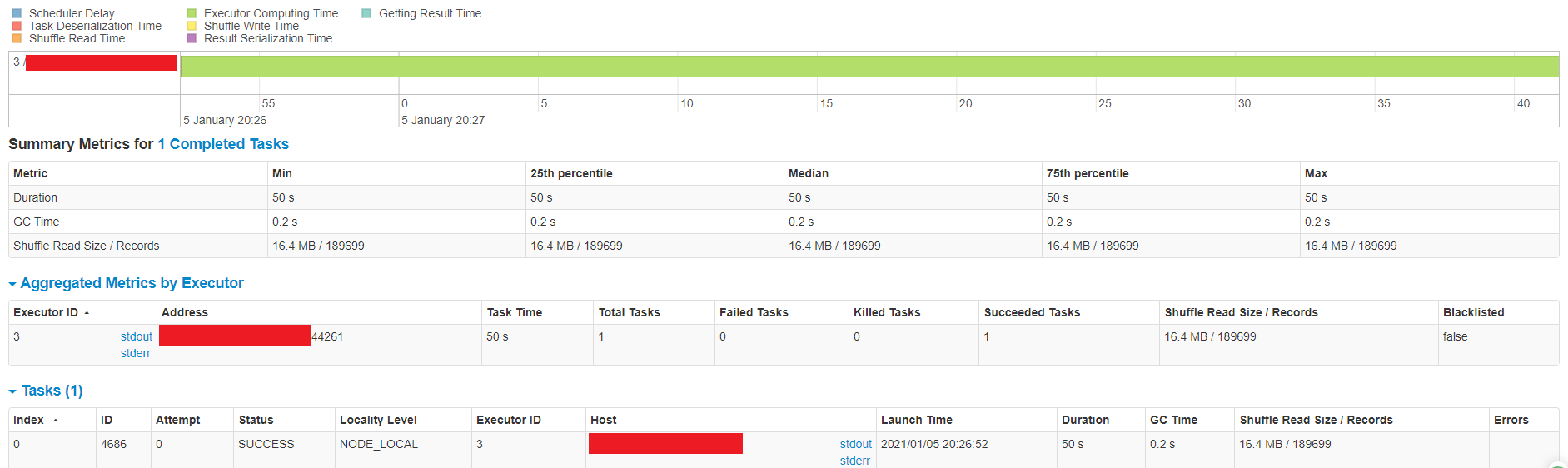

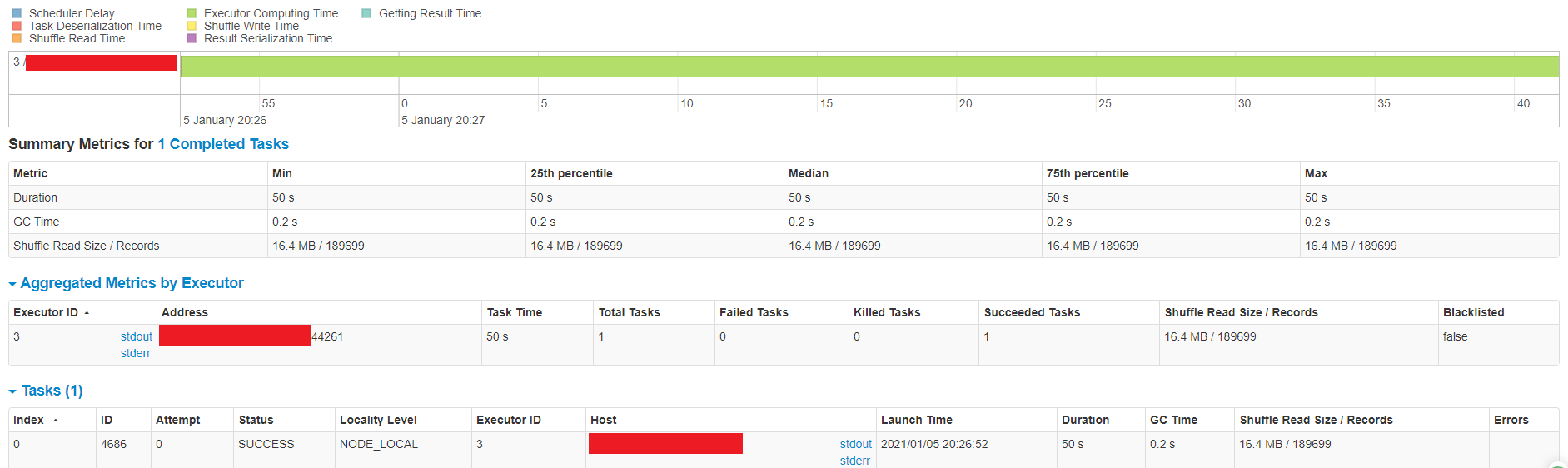

!image-2021-01-05-10-00-35-187.png!

was:

- Can be done once rfc-15 is merged into master.

- We will also use HoodieEngineContext and perform parallelized listing as

needed.

- HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

code

- Chase down direct usages of FileSystemBackedTableMetadata and parallelize

them as well

> Replace FSUtils.getAllPartitionPaths() with

> HoodieTableMetadata#getAllPartitionPaths()

> --

>

> Key: HUDI-1479

> URL: https://issues.apache.org/jira/browse/HUDI-1479

> Project: Apache Hudi

> Issue Type: Sub-task

> Components: Code Cleanup

>Reporter: Vinoth Chandar

>Assignee: Vinoth Chandar

>Priority: Blocker

> Fix For: 0.7.0

>

> Attachments: image-2021-01-05-10-00-35-187.png

>

>

> *Change #1*

> {code:java}

> public static List getAllPartitionPaths(FileSystem fs, String

> basePathStr, boolean useFileListingFromMetadata, boolean verifyListings,

> boolean

> assumeDatePartitioning) throws IOException {

> if (assumeDatePartitioning) {

> return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);

> } else {

> HoodieTableMetadata tableMetadata =

> HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",

> useFileListingFromMetadata,

> verifyListings, false, false);

> return tableMetadata.getAllPartitionPaths();

> }

> }

> {code}

> is the current implementation, where `HoodieTableMetadata.create()` always

> creates `HoodieBackedTableMetadata`. Instead we should create

> `FileSystemBackedTableMetadata` if useFileListingFromMetadata==false anyways

> *Change #2*

> On master, we have the `HoodieEngineContext` abstraction, which allows for

> parallel execution. We should consider moving it to `hudi-common` (it's

> doable) and then have `FileSystemBackedTableMetadata` redone such that it can

> do parallelized listings using the passed-in engine, either

> HoodieSparkEngineContext or HoodieJavaEngineContext.

> HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

> code. We should take one pass and see if that can be redone a bit as well.

>

> *Change #3*

> There are places, where we call fs.listStatus() directly. We should make them

> go through the HoodieTable.getMetadata()... route as well. Essentially, all

> listing should be concentrated to `FileSystemBackedTableMetadata`

> !image-2021-01-05-10-00-35-187.png!

[jira] [Updated] (HUDI-1479) Replace FSUtils.getAllPartitionPaths() with HoodieTableMetadata#getAllPartitionPaths()

[ https://issues.apache.org/jira/browse/HUDI-1479?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Vinoth Chandar updated HUDI-1479: - Attachment: image-2021-01-05-10-00-35-187.png > Replace FSUtils.getAllPartitionPaths() with > HoodieTableMetadata#getAllPartitionPaths() > -- > > Key: HUDI-1479 > URL: https://issues.apache.org/jira/browse/HUDI-1479 > Project: Apache Hudi > Issue Type: Sub-task > Components: Code Cleanup >Reporter: Vinoth Chandar >Assignee: Vinoth Chandar >Priority: Blocker > Fix For: 0.7.0 > > Attachments: image-2021-01-05-10-00-35-187.png > > > - Can be done once rfc-15 is merged into master. > - We will also use HoodieEngineContext and perform parallelized listing as > needed. > - HoodieBackedTableMetadata#getPartitionsToFilesMapping has some > parallelized code > - Chase down direct usages of FileSystemBackedTableMetadata and parallelize > them as well -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] nsivabalan commented on a change in pull request #2400: [WIP] Some fixes to test suite framework. Adding clustering node

nsivabalan commented on a change in pull request #2400:

URL: https://github.com/apache/hudi/pull/2400#discussion_r552072132

##

File path: docker/demo/config/test-suite/complex-dag-cow.yaml

##

@@ -14,41 +14,47 @@

# See the License for the specific language governing permissions and

# limitations under the License.

dag_name: cow-long-running-example.yaml

-dag_rounds: 2

+dag_rounds: 1

Review comment:

ignore reviewing these two files for now:

complex-dag-cow.yaml

and complex-dag-mor.yaml

I am yet to fix these.

##

File path:

hudi-integ-test/src/main/java/org/apache/hudi/integ/testsuite/dag/nodes/ValidateDatasetNode.java

##

@@ -111,17 +111,19 @@ public void execute(ExecutionContext context) throws

Exception {

throw new AssertionError("Hudi contents does not match contents input

data. ");

}

-String database =

context.getWriterContext().getProps().getString(DataSourceWriteOptions.HIVE_DATABASE_OPT_KEY());

-String tableName =

context.getWriterContext().getProps().getString(DataSourceWriteOptions.HIVE_TABLE_OPT_KEY());

-log.warn("Validating hive table with db : " + database + " and table : " +

tableName);

-Dataset cowDf = session.sql("SELECT * FROM " + database + "." +

tableName);

-Dataset trimmedCowDf =

cowDf.drop(HoodieRecord.COMMIT_TIME_METADATA_FIELD).drop(HoodieRecord.COMMIT_SEQNO_METADATA_FIELD).drop(HoodieRecord.RECORD_KEY_METADATA_FIELD)

-

.drop(HoodieRecord.PARTITION_PATH_METADATA_FIELD).drop(HoodieRecord.FILENAME_METADATA_FIELD);

-intersectionDf = inputSnapshotDf.intersect(trimmedDf);

-// the intersected df should be same as inputDf. if not, there is some

mismatch.

-if (inputSnapshotDf.except(intersectionDf).count() != 0) {

- log.error("Data set validation failed for COW hive table. Total count in

hudi " + trimmedCowDf.count() + ", input df count " + inputSnapshotDf.count());

- throw new AssertionError("Hudi hive table contents does not match

contents input data. ");

+if (config.isValidateHive()) {

Review comment:

no changes except adding this if condition

##

File path:

hudi-integ-test/src/main/java/org/apache/hudi/integ/testsuite/generator/FlexibleSchemaRecordGenerationIterator.java

##

@@ -41,21 +46,17 @@

private GenericRecord lastRecord;

// Partition path field name

private Set partitionPathFieldNames;

- private String firstPartitionPathField;

public FlexibleSchemaRecordGenerationIterator(long maxEntriesToProduce,

String schema) {

this(maxEntriesToProduce,

GenericRecordFullPayloadGenerator.DEFAULT_PAYLOAD_SIZE, schema, null, 0);

}

public FlexibleSchemaRecordGenerationIterator(long maxEntriesToProduce, int

minPayloadSize, String schemaStr,

- List partitionPathFieldNames, int numPartitions) {

+ List partitionPathFieldNames, int partitionIndex) {

Review comment:

all changes in this file are part of reverting

e33a8f733c4a9a94479c166ad13ae9d53142cd3f

##

File path:

hudi-integ-test/src/main/java/org/apache/hudi/integ/testsuite/generator/DeltaGenerator.java

##

@@ -127,9 +127,13 @@ public DeltaGenerator(DFSDeltaConfig deltaOutputConfig,

JavaSparkContext jsc, Sp

return ws;

}

+ public int getBatchId() {

+return this.batchId;

+ }

+

public JavaRDD generateInserts(Config operation) {

int numPartitions = operation.getNumInsertPartitions();

-long recordsPerPartition = operation.getNumRecordsInsert();

+long recordsPerPartition = operation.getNumRecordsInsert() / numPartitions;

Review comment:

these are part of reverting e33a8f733c4a9a94479c166ad13ae9d53142cd3f

##

File path:

hudi-integ-test/src/main/java/org/apache/hudi/integ/testsuite/generator/DeltaGenerator.java

##

@@ -140,7 +144,7 @@ public DeltaGenerator(DFSDeltaConfig deltaOutputConfig,

JavaSparkContext jsc, Sp

JavaRDD inputBatch = jsc.parallelize(partitionIndexes,

numPartitions)

.mapPartitionsWithIndex((index, p) -> {

return new LazyRecordGeneratorIterator(new

FlexibleSchemaRecordGenerationIterator(recordsPerPartition,

- minPayloadSize, schemaStr, partitionPathFieldNames,

numPartitions));

+minPayloadSize, schemaStr, partitionPathFieldNames,

(Integer)index));

Review comment:

these are part of reverting e33a8f733c4a9a94479c166ad13ae9d53142cd3f

##

File path:

hudi-integ-test/src/main/java/org/apache/hudi/integ/testsuite/generator/GenericRecordFullPayloadGenerator.java

##

@@ -46,9 +46,9 @@

*/

public class GenericRecordFullPayloadGenerator implements Serializable {

- private static final Logger LOG =

LoggerFactory.getLogger(GenericRecordFullPayloadGenerator.class);

+ private static Logger LOG =

LoggerFactory.getLogger(GenericRecordFullPayloadGenerator.class);

Review comment:

all changes in this file are part of reverting

e33a8f733c4a9a94479c166ad13ae9d53142cd3f

##

File path:

[GitHub] [hudi] nsivabalan commented on pull request #2400: Some fixes and enhancements to test suite framework

nsivabalan commented on pull request #2400: URL: https://github.com/apache/hudi/pull/2400#issuecomment-754812328 @n3nash : Patch is ready for review. @satishkotha : I have added clustering node. Do check it out.

[jira] [Commented] (HUDI-1459) Support for handling of REPLACE instants

[

https://issues.apache.org/jira/browse/HUDI-1459?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17259074#comment-17259074

]

Vinoth Chandar commented on HUDI-1459:

--

[~pwason] [~satishkotha]

Several users are reporting this when trying to use SaveMode.Overwrite on the Spark

data source with the metadata table turned on:

{code}

org.apache.hudi.exception.HoodieException: Unknown type of action replacecommit

at

org.apache.hudi.metadata.HoodieTableMetadataUtil.convertInstantToMetaRecords(HoodieTableMetadataUtil.java:96)

at

org.apache.hudi.metadata.HoodieBackedTableMetadataWriter.syncFromInstants(HoodieBackedTableMetadataWriter.java:372)

at

org.apache.hudi.metadata.HoodieBackedTableMetadataWriter.<init>(HoodieBackedTableMetadataWriter.java:120)

at

org.apache.hudi.metadata.SparkHoodieBackedTableMetadataWriter.<init>(SparkHoodieBackedTableMetadataWriter.java:62)

at

org.apache.hudi.metadata.SparkHoodieBackedTableMetadataWriter.create(SparkHoodieBackedTableMetadataWriter.java:58)

at

org.apache.hudi.client.SparkRDDWriteClient.syncTableMetadata(SparkRDDWriteClient.java:406)

at

org.apache.hudi.client.AbstractHoodieWriteClient.postCommit(AbstractHoodieWriteClient.java:417)

at

org.apache.hudi.client.AbstractHoodieWriteClient.commitStats(AbstractHoodieWriteClient.java:189)

at

org.apache.hudi.client.SparkRDDWriteClient.commit(SparkRDDWriteClient.java:108)

at

org.apache.hudi.HoodieSparkSqlWriter$.commitAndPerformPostOperations(HoodieSparkSqlWriter.scala:439)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:224)

at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:129)

at

org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at

org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at

org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at

org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

at

org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:173)

at

org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:169)

at

org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:197)

at

org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:194)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:169)

at

org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:114)

at

org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:112)

at

org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:696)

at

org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:696)

at

org.apache.spark.sql.execution.SQLExecution$.org$apache$spark$sql$execution$SQLExecution$$executeQuery$1(SQLExecution.scala:83)

at

org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1$$anonfun$apply$1.apply(SQLExecution.scala:94)

at

org.apache.spark.sql.execution.QueryExecutionMetrics$.withMetrics(QueryExecutionMetrics.scala:141)

at

org.apache.spark.sql.execution.SQLExecution$.org$apache$spark$sql$execution$SQLExecution$$withMetrics(SQLExecution.scala:178)

at

org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:93)

at

org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:200)

at

org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:92)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:696)

at

org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:305)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:291)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:249)

... 57 elided

{code}
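The trace shows the instant-to-metadata conversion rejecting an action type it does not recognize. The sketch below illustrates that failure mode and the kind of fix the ticket asks for, namely an explicit branch for `replacecommit`. It is not the code in `HoodieTableMetadataUtil`: the class `ActionDispatchSketch`, the method `convert`, the returned labels, and the use of `IllegalStateException` are all assumptions for illustration.

```java
/** Hypothetical sketch; not the actual HoodieTableMetadataUtil code. */
final class ActionDispatchSketch {

  /** Maps a timeline action name to a label for the metadata update it would trigger. */
  static String convert(String action) {
    switch (action) {
      case "commit":
      case "deltacommit":
        return "files-added";
      case "clean":
        return "files-removed";
      case "rollback":
        return "files-rolled-back";
      case "replacecommit":
        // Without this branch the action falls through to the default and fails,
        // which matches the message in the trace above.
        return "files-replaced";
      default:
        throw new IllegalStateException("Unknown type of action " + action);
    }
  }

  public static void main(String[] args) {
    System.out.println(convert("replacecommit"));
  }
}
```

Keeping the throwing default is deliberate in this sketch: an unhandled action fails loudly instead of silently leaving the metadata out of sync.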

> Support for handling of REPLACE instants

>

>

> Key: HUDI-1459

> URL: https://issues.apache.org/jira/browse/HUDI-1459

> Project: Apache Hudi

> Issue Type: Sub-task

> Components: Writer Core

>Reporter: Vinoth Chandar

>Assignee: Prashant Wason

>Priority: Blocker

> Fix For: 0.7.0

>

>

> Once we rebase to master, we need to handle replace instants as well, as they

> show up on the timeline.

>

>

[jira] [Updated] (HUDI-1479) Replace FSUtils.getAllPartitionPaths() with HoodieTableMetadata#getAllPartitionPaths()

[

https://issues.apache.org/jira/browse/HUDI-1479?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vinoth Chandar updated HUDI-1479:

-

Description:

*Change #1*

{code:java}

public static List getAllPartitionPaths(FileSystem fs, String

basePathStr, boolean useFileListingFromMetadata, boolean verifyListings,

boolean

assumeDatePartitioning) throws IOException {

if (assumeDatePartitioning) {

return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);

} else {

HoodieTableMetadata tableMetadata =

HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",

useFileListingFromMetadata,

verifyListings, false, false);

return tableMetadata.getAllPartitionPaths();

}

}

{code}

is the current implementation, where `HoodieTableMetadata.create()` always

creates `HoodieBackedTableMetadata`. Instead we should create

`FileSystemBackedTableMetadata` if useFileListingFromMetadata==false anyways.

This helps address https://github.com/apache/hudi/pull/2398/files#r550709687

*Change #2*

On master, we have the `HoodieEngineContext` abstraction, which allows for

parallel execution. We should consider moving it to `hudi-common` (it's doable)

and then have `FileSystemBackedTableMetadata` redone such that it can do

parallelized listings using the passed-in engine, either

HoodieSparkEngineContext or HoodieJavaEngineContext.

HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

code. We should take one pass and see if that can be redone a bit as well.

Food for thought: https://github.com/apache/hudi/pull/2398#discussion_r550711216

*Change #3*

There are places, where we call fs.listStatus() directly. We should make them

go through the HoodieTable.getMetadata()... route as well. Essentially, all

listing should be concentrated to `FileSystemBackedTableMetadata`

!image-2021-01-05-10-00-35-187.png!

was:

*Change #1*

{code:java}

public static List getAllPartitionPaths(FileSystem fs, String basePathStr,
    boolean useFileListingFromMetadata, boolean verifyListings,
    boolean assumeDatePartitioning) throws IOException {
  if (assumeDatePartitioning) {
    return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);
  } else {
    HoodieTableMetadata tableMetadata =
        HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",
            useFileListingFromMetadata, verifyListings, false, false);
    return tableMetadata.getAllPartitionPaths();
  }
}

{code}

is the current implementation, where `HoodieTableMetadata.create()` always

creates `HoodieBackedTableMetadata`. Instead, we should create

`FileSystemBackedTableMetadata` directly when useFileListingFromMetadata==false.

*Change #2*

On master, we have the `HoodieEngineContext` abstraction, which allows for

parallel execution. We should consider moving it to `hudi-common` (it's doable)

and then have `FileSystemBackedTableMetadata` redone such that it can do

parallelized listings using the passed-in engine context, either

HoodieSparkEngineContext or HoodieJavaEngineContext.

HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

code. We should take one pass and see if that can be redone a bit as well.

*Change #3*

There are places where we call fs.listStatus() directly. We should make them

go through the HoodieTable.getMetadata()... route as well. Essentially, all

listing should be concentrated in `FileSystemBackedTableMetadata`.

!image-2021-01-05-10-00-35-187.png!

> Replace FSUtils.getAllPartitionPaths() with

> HoodieTableMetadata#getAllPartitionPaths()

> --

>

> Key: HUDI-1479

> URL: https://issues.apache.org/jira/browse/HUDI-1479

> Project: Apache Hudi

> Issue Type: Sub-task

> Components: Code Cleanup

>Reporter: Vinoth Chandar

>Assignee: Vinoth Chandar

>Priority: Blocker

> Fix For: 0.7.0

>

> Attachments: image-2021-01-05-10-00-35-187.png

>

>

> *Change #1*

> {code:java}

> public static List getAllPartitionPaths(FileSystem fs, String basePathStr,
>     boolean useFileListingFromMetadata, boolean verifyListings,
>     boolean assumeDatePartitioning) throws IOException {
>   if (assumeDatePartitioning) {
>     return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);
>   } else {
>     HoodieTableMetadata tableMetadata =
>         HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",
>             useFileListingFromMetadata, verifyListings, false, false);
>     return tableMetadata.getAllPartitionPaths();
>   }
> }

> {code}

> is the current implementation, where `HoodieTableMetadata.create()` always

> creates `HoodieBackedTableMetadata`.

[jira] [Commented] (HUDI-1308) Issues found during testing RFC-15

[

https://issues.apache.org/jira/browse/HUDI-1308?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17259072#comment-17259072

]

Vinoth Chandar commented on HUDI-1308:

--

More testing on S3 from [~vbalaji]

{code}

Caused by: org.apache.hudi.exception.HoodieIOException: getFileStatus on

s3://robinhood-encrypted-hudi-data-cove/dummy/balaji/sickle/public/client_ledger_clientledgerbalance/test5_v4/.hoodie/.aux/.bootstrap/.partitions/-----0_1-0-1_01.hfile:

com.amazonaws.SdkClientException: Unable to execute HTTP request: Timeout

waiting for connection from pool

{code}

> Issues found during testing RFC-15

> --

>

> Key: HUDI-1308

> URL: https://issues.apache.org/jira/browse/HUDI-1308

> Project: Apache Hudi

> Issue Type: Improvement

> Components: Writer Core

>Reporter: Balaji Varadarajan

>Assignee: Balaji Varadarajan

>Priority: Blocker

> Fix For: 0.7.0

>

>

> This is an umbrella ticket containing all the issues found during testing

> RFC-15

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HUDI-1479) Replace FSUtils.getAllPartitionPaths() with HoodieTableMetadata#getAllPartitionPaths()

[

https://issues.apache.org/jira/browse/HUDI-1479?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17259090#comment-17259090

]

Vinoth Chandar commented on HUDI-1479:

--

[~uditme] I have updated the description with detailed steps. I think

this will be a super impactful change. Let me know if you are able to pick this

up.

> Replace FSUtils.getAllPartitionPaths() with

> HoodieTableMetadata#getAllPartitionPaths()

> --

>

> Key: HUDI-1479

> URL: https://issues.apache.org/jira/browse/HUDI-1479

> Project: Apache Hudi

> Issue Type: Sub-task

> Components: Code Cleanup

>Reporter: Vinoth Chandar

>Assignee: Vinoth Chandar

>Priority: Blocker

> Fix For: 0.7.0

>

> Attachments: image-2021-01-05-10-00-35-187.png

>

>

> *Change #1*

> {code:java}

> public static List getAllPartitionPaths(FileSystem fs, String basePathStr,
>     boolean useFileListingFromMetadata, boolean verifyListings,
>     boolean assumeDatePartitioning) throws IOException {
>   if (assumeDatePartitioning) {
>     return getAllPartitionFoldersThreeLevelsDown(fs, basePathStr);
>   } else {
>     HoodieTableMetadata tableMetadata =
>         HoodieTableMetadata.create(fs.getConf(), basePathStr, "/tmp/",
>             useFileListingFromMetadata, verifyListings, false, false);
>     return tableMetadata.getAllPartitionPaths();
>   }
> }

> {code}

> is the current implementation, where `HoodieTableMetadata.create()` always

> creates `HoodieBackedTableMetadata`. Instead, we should create

> `FileSystemBackedTableMetadata` directly when useFileListingFromMetadata==false.

> This helps address https://github.com/apache/hudi/pull/2398/files#r550709687

> *Change #2*

> On master, we have the `HoodieEngineContext` abstraction, which allows for

> parallel execution. We should consider moving it to `hudi-common` (it's

> doable) and then have `FileSystemBackedTableMetadata` redone such that it can

> do parallelized listings using the passed-in engine context, either

> HoodieSparkEngineContext or HoodieJavaEngineContext.

> HoodieBackedTableMetadata#getPartitionsToFilesMapping has some parallelized

> code. We should take one pass and see if that can be redone a bit as well.

> Food for thought:

> https://github.com/apache/hudi/pull/2398#discussion_r550711216

>

> *Change #3*

> There are places where we call fs.listStatus() directly. We should make them

> go through the HoodieTable.getMetadata()... route as well. Essentially, all

> listing should be concentrated in `FileSystemBackedTableMetadata`.

> !image-2021-01-05-10-00-35-187.png!


[GitHub] [hudi] codecov-io edited a comment on pull request #2379: [HUDI-1399] support a independent clustering spark job to asynchronously clustering

codecov-io edited a comment on pull request #2379: URL: https://github.com/apache/hudi/pull/2379#issuecomment-751244130 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] yanghua commented on a change in pull request #2405: [HUDI-1506] Fix wrong exception thrown in HoodieAvroUtils

yanghua commented on a change in pull request #2405:

URL: https://github.com/apache/hudi/pull/2405#discussion_r551988234

##

File path: hudi-common/src/main/java/org/apache/hudi/avro/HoodieAvroUtils.java

##

@@ -428,10 +429,14 @@ public static Object getNestedFieldVal(GenericRecord record, String fieldName, b
       if (returnNullIfNotFound) {
         return null;
-      } else {
+      }
+
+      if (recordSchema.getField(parts[i]) == null) {

Review comment:

Can it be `else if`?

##

File path: hudi-common/src/main/java/org/apache/hudi/avro/HoodieAvroUtils.java

##

@@ -428,10 +429,14 @@ public static Object getNestedFieldVal(GenericRecord record, String fieldName, b
       if (returnNullIfNotFound) {
         return null;
-      } else {
+      }
+
+      if (recordSchema.getField(parts[i]) == null) {
         throw new HoodieException(
             fieldName + "(Part -" + parts[i] + ") field not found in record. Acceptable fields were :"
                 + valueNode.getSchema().getFields().stream().map(Field::name).collect(Collectors.toList()));

Review comment:

Can we reuse `recordSchema` here?
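
For reference, the two suggestions above (chain the check as `else if`, and look the schema up once) could look roughly like the following. This is a hedged sketch, not the code in the pull request: a `Map` stands in for the Avro `GenericRecord` and its schema, the class and method names are hypothetical, the exception types are placeholders for `HoodieException`, and the final branch for a present-but-null field is an assumption.

```java
import java.util.Map;

// Illustrative only: a Map stands in for an Avro GenericRecord plus schema,
// and the exception types are placeholders rather than HoodieException.
final class NestedFieldLookupSketch {

  static Object fieldValue(Map<String, Object> record, String part, boolean returnNullIfNotFound) {
    Object value = record.get(part);
    if (value != null) {
      return value;
    }
    if (returnNullIfNotFound) {
      return null;
    } else if (!record.containsKey(part)) {
      // Field is not part of the "schema": report which fields are available.
      throw new IllegalArgumentException(
          part + " field not found in record. Acceptable fields were: " + record.keySet());
    } else {
      // Assumed branch: the field exists but holds null.
      throw new IllegalStateException("The value of " + part + " cannot be null");
    }
  }
}
```

Chaining the branches makes it explicit that exactly one outcome applies once the value is missing.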


[GitHub] [hudi] codecov-io edited a comment on pull request #2379: [HUDI-1399] support a independent clustering spark job to asynchronously clustering