[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

URL: https://github.com/apache/incubator-mxnet/pull/15625#discussion_r307594376

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -75,6 +75,38 @@ struct FullyConnectedParam : public dmlc::Parameter<FullyConnectedParam> {
}
};
+/**
+ * Flatten additional dimensions after the first
+ * @tparam xpu
+ * @tparam DType
+ * @param tblob
+ * @param ctx
+ * @return 2 Dimensional Tensor with upper shapes collapsed
+ */
+template<typename xpu, typename DType>
+Tensor<xpu, 2, DType> FlattenAs2DTail(const TBlob& tblob, const OpContext& ctx) {
+  const TShape& shape = tblob.shape_;
+  Stream<xpu> *stream = ctx.get_stream<xpu>();
+  return tblob.get_with_shape<xpu, 2, DType>(
+      Shape2(shape[0], shape.ProdShape(1, shape.ndim())), stream);
+}
+
+/**
+ * Flatten dimensions except last
+ * @tparam xpu
+ * @tparam DType
+ * @param tblob
+ * @param ctx
+ * @return 2 Dimensional tensor with front shapes collapsed
+ */
+template<typename xpu, typename DType>
+Tensor<xpu, 2, DType> FlattenAs2DHead(const TBlob& tblob, const OpContext& ctx) {

Review comment:

These two methods seem to be generic for all operators. Can we add them to a common place? Also, are the function names easier to understand than the original code?

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

With regards,
Apache Git Services
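The two helpers differ only in which axis survives flattening: FlattenAs2DTail keeps dimension 0 and collapses everything after it, while FlattenAs2DHead (per its doc comment) keeps the last dimension and collapses everything in front of it. A minimal standalone sketch of that shape arithmetic, assuming a shape is modelled as a plain `std::vector` of sizes; `ProdDims`, `FlattenTail2DShape` and `FlattenHead2DShape` are hypothetical names, not MXNet API:

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for mxnet::TShape: just the dimension sizes.
using Shape = std::vector<std::size_t>;

// Product of dims in [begin, end); an empty range yields 1,
// mirroring the ProdShape(1, ndim) call in the diff.
std::size_t ProdDims(const Shape& s, std::size_t begin, std::size_t end) {
  std::size_t p = 1;
  for (std::size_t i = begin; i < end; ++i) p *= s[i];
  return p;
}

// "Tail" flattening: keep dim 0, collapse every dimension after it.
std::pair<std::size_t, std::size_t> FlattenTail2DShape(const Shape& s) {
  assert(!s.empty());
  return {s[0], ProdDims(s, 1, s.size())};
}

// "Head" flattening: keep the last dim, collapse every dimension before it.
std::pair<std::size_t, std::size_t> FlattenHead2DShape(const Shape& s) {
  assert(!s.empty());
  return {ProdDims(s, 0, s.size() - 1), s.back()};
}
```

For a (2, 3, 4) input the tail form gives (2, 12) and the head form gives (6, 4); a 1-D shape (5) becomes (5, 1) and (1, 5) respectively.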

[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

URL: https://github.com/apache/incubator-mxnet/pull/15625#discussion_r307594482

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -134,59 +159,52 @@ void FCBackward(const OpContext &ctx, const FullyConnectedParam &param,
using namespace mshadow::expr;
// TODO(bing): check the BLAS Handle, be careful
// maybe need blas handle from context
- Stream<xpu> *s = ctx.get_stream<xpu>();
- const mxnet::TShape& ishape = in_data[fullc::kData].shape_;
- const mxnet::TShape& oshape = out_grad[fullc::kOut].shape_;
-
- Tensor<xpu, 2, DType> wmat = in_data[fullc::kWeight].get<xpu, 2, DType>(s);
- Tensor<xpu, 2, DType> data, grad, gdata;
+ Stream<xpu> *stream = ctx.get_stream<xpu>();
+ Tensor<xpu, 2, DType> wmat = in_data[fullc::kWeight].get<xpu, 2, DType>(stream);
+ Tensor<xpu, 2, DType> x, y_grad, x_grad;
if (!param.flatten) {
-    data = in_data[fullc::kData].get_with_shape<xpu, 2, DType>(

Review comment:

These variable names are used in many operators. Why rename them?


[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

apeforest commented on a change in pull request #15625: Minor refactor of fully connected.

URL: https://github.com/apache/incubator-mxnet/pull/15625#discussion_r307594376

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -75,6 +75,38 @@ struct FullyConnectedParam : public dmlc::Parameter<FullyConnectedParam> {
}
};
+/**
+ * Flatten additional dimensions after the first
+ * @tparam xpu
+ * @tparam DType
+ * @param tblob
+ * @param ctx
+ * @return 2 Dimensional Tensor with upper shapes collapsed
+ */
+template<typename xpu, typename DType>
+Tensor<xpu, 2, DType> FlattenAs2DTail(const TBlob& tblob, const OpContext& ctx) {
+  const TShape& shape = tblob.shape_;
+  Stream<xpu> *stream = ctx.get_stream<xpu>();
+  return tblob.get_with_shape<xpu, 2, DType>(
+      Shape2(shape[0], shape.ProdShape(1, shape.ndim())), stream);
+}
+
+/**
+ * Flatten dimensions except last
+ * @tparam xpu
+ * @tparam DType
+ * @param tblob
+ * @param ctx
+ * @return 2 Dimensional tensor with front shapes collapsed
+ */
+template<typename xpu, typename DType>
+Tensor<xpu, 2, DType> FlattenAs2DHead(const TBlob& tblob, const OpContext& ctx) {

Review comment:

These two methods seem to be generic for all operators. Can we add them to a common place?


[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #14779: Fully connected, higher order grad

apeforest commented on a change in pull request #14779: Fully connected, higher order grad

URL: https://github.com/apache/incubator-mxnet/pull/14779#discussion_r307593777

##

File path: tests/python/unittest/test_gluon.py

##

@@ -21,7 +21,7 @@
import mxnet as mx
from mxnet import gluon
from mxnet.gluon import nn
-from mxnet.test_utils import assert_almost_equal
+from mxnet.test_utils import assert_almost_equal, same

Review comment:

But there is no change in the test in this file.

[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #14779: Fully connected, higher order grad

apeforest commented on a change in pull request #14779: Fully connected, higher order grad

URL: https://github.com/apache/incubator-mxnet/pull/14779#discussion_r307593378

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -249,6 +285,114 @@ void FullyConnectedGradCompute(const nnvm::NodeAttrs& attrs,
}
}
+
+
+///
+// Inputs are:
+// o_x_grad : head gradient for x_grad
+// o_w_grad : head gradient for w_grad
+// o_b_grad : if param.no_bias is false
+// o_y : head gradient of y
+//
+// outputs are:
+// o_y_grad : gradient of o_y
+// x_grad_grad : o_y * o_w_grad
+// w_grad_grad : o_y.T * o_x_grad
+// b_grad_grad: if param.no_bias is false
+//
+// For implementation details see this PR: https://github.com/apache/incubator-mxnet/pull/14779
+
+/**
+ * Second order gradient for Fully Connected
+ * x_grad_grad = o_y * o_w_grad
+ * w_grad_grad = o_y.T * o_x_grad
+ *
+ * @tparam xpu
+ * @tparam DType
+ * @param attrs
+ * @param ctx
+ * @param inputs
+ * @param req
+ * @param outputs
+ */
+template<typename xpu, typename DType>
+void FullyConnectedGradGradCompute(const nnvm::NodeAttrs& attrs,
+                                   const OpContext& ctx,
+                                   const std::vector<TBlob>& inputs,
+                                   const std::vector<OpReqType>& req,
+                                   const std::vector<TBlob>& outputs) {
+  using namespace std;
+  using namespace fullc;
+  Stream<xpu> *stream = ctx.get_stream<xpu>();
+  const FullyConnectedParam& param = nnvm::get<FullyConnectedParam>(attrs.parsed);
+  const size_t num_inputs = param.no_bias ? 3U : 4U;
+  // outputs are: o_x_grad, o_w_grad, o_y || o_x_grad, o_w_grad, o_b_grad, o_y
+  const size_t num_outputs = 3U;
+  CHECK_EQ(inputs.size(), num_inputs);
+  CHECK_EQ(outputs.size(), num_outputs);
+  CHECK_EQ(req.size(), num_outputs);
+
+  // inputs
+  Tensor<xpu, 2, DType> o_x_grad;
+  Tensor<xpu, 2, DType> o_w_grad;
+  Tensor<xpu, 2, DType> o_y;
+  // unused
+  // Tensor o_b_grad;
+
+  // outputs
+  Tensor o_y_grad;
+  TBlob o_y_grad_blob = outputs[kOyGrad];
+  Tensor<xpu, 2, DType> x_grad_grad;
+  Tensor<xpu, 2, DType> w_grad_grad;
+  Tensor b_grad_grad;
+  size_t o_y_idx = std::numeric_limits<size_t>::max();
+  if (param.no_bias)
+    o_y_idx = kOy;
+  else
+    o_y_idx = kOyBias;
+  if (!param.flatten) {
+    o_x_grad = FlattenAs2DHead<xpu, DType>(inputs[kOxGrad], ctx);
+    o_w_grad = inputs[kOwGrad].get<xpu, 2, DType>(stream);
+    o_y = FlattenAs2DHead<xpu, DType>(inputs[o_y_idx], ctx);
+    x_grad_grad = FlattenAs2DHead<xpu, DType>(outputs[kXGradGrad], ctx);
+    w_grad_grad = FlattenAs2DHead<xpu, DType>(outputs[kWGradGrad], ctx);
+  } else {
+    o_x_grad = FlattenAs2DTail<xpu, DType>(inputs[kOxGrad], ctx);
+    o_w_grad = FlattenAs2DTail<xpu, DType>(inputs[kOwGrad], ctx);
+    o_y = inputs[o_y_idx].get<xpu, 2, DType>(stream);
+    x_grad_grad = FlattenAs2DTail<xpu, DType>(outputs[kXGradGrad], ctx);
+    w_grad_grad = FlattenAs2DTail<xpu, DType>(outputs[kWGradGrad], ctx);
+  }
+  linalg_gemm(o_y, o_w_grad, x_grad_grad, false, false, stream);
+  linalg_gemm(o_y, o_x_grad, w_grad_grad, true, false, stream);
+  // 3rd order not supported
+  Fill(stream, o_y_grad_blob, kWriteTo, static_cast<DType>(0));
+  /* TODO(larroy) bias is not supported yet as there's no bias input to backward. Bias grad grad is

Review comment:

@sxjscience Could you please review if this is correct?

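The two `linalg_gemm` calls can at least be checked by shape. Assuming the usual fully connected layout (x is (N, K), the weight w is (M, K), y = x * w^T is (N, M), so o_y is (N, M)) and assuming `linalg_gemm(A, B, C, tA, tB, s)` writes op(A) * op(B) into C, here is a small dense sketch of the two products from the doc comment. `Mat`, `Gemm`, `GradGrad` and `FullyConnectedGradGradSketch` are hypothetical names; the sketch only reproduces the formulas as written and does not settle the correctness question raised in the review.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical row-major dense matrix standing in for a 2-D mshadow Tensor.
struct Mat {
  std::size_t rows, cols;
  std::vector<double> v;
  Mat(std::size_t r, std::size_t c) : rows(r), cols(c), v(r * c, 0.0) {}
  double& at(std::size_t i, std::size_t j) { return v[i * cols + j]; }
  double at(std::size_t i, std::size_t j) const { return v[i * cols + j]; }
};

// Returns op(A) * B, where op(A) is A or its transpose (only A can be
// transposed here, which is all the two calls in the diff need).
Mat Gemm(const Mat& a, const Mat& b, bool transpose_a) {
  const std::size_t m = transpose_a ? a.cols : a.rows;
  const std::size_t k = transpose_a ? a.rows : a.cols;
  assert(k == b.rows);  // inner dimensions must agree
  Mat c(m, b.cols);
  for (std::size_t i = 0; i < m; ++i) {
    for (std::size_t j = 0; j < b.cols; ++j) {
      double sum = 0.0;
      for (std::size_t p = 0; p < k; ++p) {
        sum += (transpose_a ? a.at(p, i) : a.at(i, p)) * b.at(p, j);
      }
      c.at(i, j) = sum;
    }
  }
  return c;
}

struct GradGrad {
  Mat x_grad_grad;  // (N, K), same shape as x
  Mat w_grad_grad;  // (M, K), same shape as w
};

// o_y: (N, M), o_x_grad: (N, K), o_w_grad: (M, K).
GradGrad FullyConnectedGradGradSketch(const Mat& o_y, const Mat& o_x_grad,
                                      const Mat& o_w_grad) {
  // x_grad_grad = o_y * o_w_grad    : (N, M) x (M, K) -> (N, K)
  // w_grad_grad = o_y^T * o_x_grad  : (M, N) x (N, K) -> (M, K)
  return {Gemm(o_y, o_w_grad, false), Gemm(o_y, o_x_grad, true)};
}
```

Both results come out with the shapes of x and w respectively, which is what the `FlattenAs2D*` views of `outputs[kXGradGrad]` and `outputs[kWGradGrad]` need to receive.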

[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #14779: Fully connected, higher order grad

apeforest commented on a change in pull request #14779: Fully connected, higher order grad

URL: https://github.com/apache/incubator-mxnet/pull/14779#discussion_r307592893

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -47,7 +48,24 @@ namespace fullc {
enum FullyConnectedOpInputs {kData, kWeight, kBias};
enum FullyConnectedOpResource {kTempSpace};
enum FullyConnectedOpOutputs {kOut};
-} // fullc
+enum FullyConnectedGradGradOutputs {

Review comment:

nit: Can we keep the same code style as the previous enums?


[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #14779: Fully connected, higher order grad

apeforest commented on a change in pull request #14779: Fully connected, higher order grad

URL: https://github.com/apache/incubator-mxnet/pull/14779#discussion_r307592850

##

File path: src/operator/nn/fully_connected-inl.h

##

@@ -47,7 +48,24 @@ namespace fullc {
enum FullyConnectedOpInputs {kData, kWeight, kBias};
enum FullyConnectedOpResource {kTempSpace};
enum FullyConnectedOpOutputs {kOut};
-} // fullc
+enum FullyConnectedGradGradOutputs {
+  kOyGrad,
+  kXGradGrad,
+  kWGradGrad,
+  kBGradGrad
+};
+enum Inputs {

Review comment:

A more specific name?


[GitHub] [incubator-mxnet] iblis17 commented on issue #15609: update julia install doc

iblis17 commented on issue #15609: update julia install doc

URL: https://github.com/apache/incubator-mxnet/pull/15609#issuecomment-515312374

Looks fine. Could you rebase to re-trigger CI?

[GitHub] [incubator-mxnet] iblis17 commented on issue #15609: update julia install doc

iblis17 commented on issue #15609: update julia install doc

URL: https://github.com/apache/incubator-mxnet/pull/15609#issuecomment-515312328

http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-15609/4/install/index.html?platform=Linux=Julia=CPU

[GitHub] [incubator-mxnet] wuxun-zhang commented on issue #15659: Dropout produces wrong mask with MKL-DNN

wuxun-zhang commented on issue #15659: Dropout produces wrong mask with MKL-DNN

URL: https://github.com/apache/incubator-mxnet/issues/15659#issuecomment-515312297

OK. I'll look into this issue.

[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15285: Graph dumper

apeforest commented on a change in pull request #15285: Graph dumper

URL: https://github.com/apache/incubator-mxnet/pull/15285#discussion_r307590972

##

File path: src/imperative/imperative.cc

##

@@ -353,6 +356,11 @@ std::vector<NDArray*> Imperative::Backward(
<< "There are no inputs in computation graph that require gradients.";
}
+ if (backward_graph_dump_enabled_) {
+   std::cout << "Forward graph: " << std::endl;

Review comment:

Can we leave this to the developer? I think we can just have a function DebugGraph() instead of putting all the std::cout logic in a function block.


[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15285: Graph dumper

apeforest commented on a change in pull request #15285: Graph dumper

URL: https://github.com/apache/incubator-mxnet/pull/15285#discussion_r307590664

##

File path: include/mxnet/imperative.h

##

@@ -191,6 +191,7 @@ class Imperative {
std::atomic<uint64_t> variable_count_{0};
/*! \brief default backward bulk size */
int backward_bulk_size_{0};
+ bool backward_graph_dump_enabled_{};

Review comment:

not needed.


[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15285: Graph dumper

apeforest commented on a change in pull request #15285: Graph dumper

URL: https://github.com/apache/incubator-mxnet/pull/15285#discussion_r307590642

##

File path: include/mxnet/imperative.h

##

@@ -160,8 +160,8 @@ class Imperative {
private:
friend class NDArray;
- /*! \brief make constructor protected. */
- Imperative() {
+ Imperative() :
+     backward_graph_dump_enabled_(dmlc::GetEnv("MXNET_BACKWARD_GRAPH_DUMP", false)) {

Review comment:

Why do we need a class member for a debugging utility?

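One way to get a read-once debug switch without a class member is to read the environment variable lazily inside a free function. A minimal sketch, assuming plain `std::getenv` in place of `dmlc::GetEnv` and treating only "1" or "true" as enabled (an assumed parsing rule, not necessarily how `dmlc::GetEnv` parses booleans); `BackwardGraphDumpEnabled` is a hypothetical name:

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

// Hypothetical helper: reads MXNET_BACKWARD_GRAPH_DUMP once, on first use,
// and caches the result in a function-local static instead of a member.
// Assumed rule: only "1" or "true" enable the dump.
bool BackwardGraphDumpEnabled() {
  static const bool enabled = [] {
    const char* value = std::getenv("MXNET_BACKWARD_GRAPH_DUMP");
    return value != nullptr &&
           (std::strcmp(value, "1") == 0 || std::strcmp(value, "true") == 0);
  }();
  return enabled;
}
```

Since C++11 the initialization of a function-local static is thread-safe, and the value is fixed after the first call, which gives the same "read once" behaviour as initializing a member in the singleton's constructor.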

[GitHub] [incubator-mxnet] kshitij12345 commented on a change in pull request #15109: [DOC] refine autograd docs

kshitij12345 commented on a change in pull request #15109: [DOC] refine autograd docs

URL: https://github.com/apache/incubator-mxnet/pull/15109#discussion_r307589169

##

File path: docs/api/python/autograd/autograd.md

##

@@ -76,7 +82,63 @@
Detailed tutorials are available in Part 1 of [the MXNet gluon book](http://gluon.mxnet.io/).

+# Higher order gradient
+
+Some operators support higher order gradients. Meaning that you calculate the gradient of the
+gradient. For this the operator's backward must be as well differentiable. Some operators support
+differentiating multiple times, and others two, most just once.
+
+For calculating higher order gradients, we can use the `mx.autograd.grad` function while recording
+and then call backward, or call `mx.autograd.grad` two times. If we do the later is important that
+the first call uses `create_graph=True` and `retain_graph=True` and the second call uses
+`create_graph=False` and `retain_graph=True`. Otherwise we will not get the results that we want. If

Review comment:

So I guess `retain` keeps the intermediate gradient buffer but not the node and `create_graph` keeps the nodes as well? Thanks for the pointer. Will try to look into the imperative.cc.

[GitHub] [incubator-mxnet] zixuanweeei commented on a change in pull request #15621: [WIP] MKL-DNN LBR-GRU Inference Integration (FP32 LBR-GRU)

zixuanweeei commented on a change in pull request #15621: [WIP] MKL-DNN LBR-GRU Inference Integration (FP32 LBR-GRU)

URL: https://github.com/apache/incubator-mxnet/pull/15621#discussion_r307588514

##

File path: src/operator/nn/mkldnn/mkldnn_rnn_impl.h

##

@@ -118,7 +151,7 @@ static size_t GetMKLDNNRNNCacheMemorySize(int L,
break;
case rnn_enum::kGru:
size = 2 * (D * (I + H) * 3 * H + (L - 1) * D * (D * H + H) * 3 * H +
- L * D * 2 * N * H) + T * N * D * H + L * 2 * D * 3 * H + (L + 2) * D * 2 * N * H +
+ L * D * 2 * N * H) + T * N * D * H + L * 2 * D * 4 * H + (L + 2) * D * 2 * N * H +

Review comment:

@marcoabreu Sorry for the late update. I have renamed this part. Would you mind checking it again? Thanks.
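The only change in this hunk is the multiplier in the `L * 2 * D * 3 * H` term, which becomes `4`. Assuming that term sizes the bias storage (the factor 2 presumably covering two bias vectors per gate) and that LBR-GRU keeps four gate biases where plain GRU keeps three, the extra space is `L * 2 * D * H` elements. A small sketch of just that term; `GruBiasTerm` is a hypothetical helper, and the remaining terms of the expression (which continues past the shown line) are deliberately left out:

```cpp
#include <cassert>
#include <cstddef>

// Only the bias-related term touched by the diff: L * 2 * D * gates * H.
// gates = 3 reproduces the old line, gates = 4 the new LBR-GRU line.
std::size_t GruBiasTerm(std::size_t L, std::size_t D, std::size_t H,
                        std::size_t gates) {
  assert(gates == 3 || gates == 4);  // the two variants in this hunk
  return L * 2 * D * gates * H;
}
```

For example, with L = 2 layers, D = 1 direction and H = 8, the term grows from 96 to 128 elements, a difference of L * 2 * D * H = 32.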

[GitHub] [incubator-mxnet] zixuanweeei commented on issue #15631: [Flaky test] Skip test_operator_gpu.test_convolution_independent_gradients

zixuanweeei commented on issue #15631: [Flaky test] Skip test_operator_gpu.test_convolution_independent_gradients

URL: https://github.com/apache/incubator-mxnet/pull/15631#issuecomment-515308138

@TaoLv Please review this PR again. Thanks.

[GitHub] [incubator-mxnet] TaoLv commented on issue #15659: Dropout produces wrong mask with MKL-DNN

TaoLv commented on issue #15659: Dropout produces wrong mask with MKL-DNN

URL: https://github.com/apache/incubator-mxnet/issues/15659#issuecomment-515307535

Some background: MKL-DNN itself doesn't provide a dropout primitive. When MXNet is built with MKL-DNN, it uses functions from VSL to implement dropout. This is essentially legacy code from the MKL2017 integration, which is why it cannot be disabled by `MXNET_MKLDNN_ENABLED=0`.

[GitHub] [incubator-mxnet] pengzhao-intel commented on issue #15518: [MKLDNN] Enable subgraph backend mkldnn by default.

pengzhao-intel commented on issue #15518: [MKLDNN] Enable subgraph backend mkldnn by default.

URL: https://github.com/apache/incubator-mxnet/pull/15518#issuecomment-515297073

Merging now. @MXNet/community Feel free to ping me or @ZhennanQin if any issues come up after this PR is merged, since the default behavior has changed.

[incubator-mxnet] branch master updated (b00bb81 -> e98fea3)

This is an automated email from the ASF dual-hosted git repository.

patriczhao pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from b00bb81 [Opperf] Add array rearrange operators to opperf (#15606)
 add e98fea3 [MKLDNN] Enable subgraph backend mkldnn by default. (#15518)

No new revisions were added by this update.

Summary of changes:
 cpp-package/example/inference/README.md            |   3 -
 docs/faq/env_var.md                                |   3 +-
 docs/tutorials/c++/subgraphAPI.md                  |  11 +-
 docs/tutorials/mkldnn/MKLDNN_README.md             |  18 +-
 example/quantization/README.md                     |  29 +-
 example/ssd/README.md                              |   2 -
 .../scala/org/apache/mxnet/OperatorSuite.scala     |   8 +-
 src/c_api/c_api_symbolic.cc                        |   8 +-
 src/c_api/c_api_test.cc                            |   8 +-
 src/executor/graph_executor.cc                     | 399 +
 src/operator/subgraph/build_subgraph.cc            |  25 +-
 src/operator/subgraph/default_subgraph_property.cc |   1 +
 .../subgraph/default_subgraph_property_v2.cc       |   2 +
 .../subgraph/mkldnn/mkldnn_conv_property.h         |  14 +-
 .../subgraph/mkldnn/mkldnn_subgraph_property.cc    |   8 +
 src/operator/subgraph/subgraph_property.h          | 115 +-
 src/operator/subgraph/tensorrt/tensorrt.cc         |   2 +
 tests/python/mkl/test_quantization_mkldnn.py       |   4 +
 tests/python/unittest/test_operator.py             | 135 +++
 19 files changed, 504 insertions(+), 291 deletions(-)

[GitHub] [incubator-mxnet] pengzhao-intel commented on issue #15659: Dropout produces wrong mask with MKL-DNN

pengzhao-intel commented on issue #15659: Dropout produces wrong mask with MKL-DNN

URL: https://github.com/apache/incubator-mxnet/issues/15659#issuecomment-515297883

@wuxun-zhang please take a look at this issue.

[GitHub] [incubator-mxnet] larroy commented on issue #15285: Graph dumper

larroy commented on issue #15285: Graph dumper

URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515280608

@anirudh2290 @ptrendx @szha please review again, I removed the offending code.

[GitHub] [incubator-mxnet] larroy edited a comment on issue #15285: Graph dumper

larroy edited a comment on issue #15285: Graph dumper

URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515280608

@anirudh2290 @ptrendx @szha please review again, I removed the uber engineered code.

[GitHub] [incubator-mxnet] ngunauj commented on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

ngunauj commented on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

URL: https://github.com/apache/incubator-mxnet/issues/15656#issuecomment-515278914

> The documentation seems to be incomplete.
> https://mxnet.incubator.apache.org/api/python/ndarray/ndarray.html#mxnet.ndarray.UpSampling
>
> It doesn't mention about weight when using `bilinear`.
> Digging into the code,
> https://github.com/apache/incubator-mxnet/blob/8158ba4b0f1ebd696ec09a0b1aa6031bacb60740/src/operator/nn/upsampling.cc#L123-L173
>
> It mentions about requiring the weight and also gives an example.
> I have verified that the example works,
>
> ```
> from mxnet import nd
> x = nd.ones((1,3,3,3)) # NCHW
> w = nd.ones((3,1,4,4))
> upsampled = nd.UpSampling(x, w, scale=2, sample_type='bilinear', num_filter=3)
> ```

OK, I will try. Thanks.
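The quoted example pairs `scale=2` with a (3, 1, 4, 4) weight of all ones. A sketch of where a kernel size of 4 can come from, and of what bilinear (rather than all-ones) taps look like, assuming the kernel size rule `2 * scale - scale % 2` and the FCN-style bilinear filter formula that `mx.init.Bilinear` is believed to follow. Both are assumptions to verify against the MXNet source, and `BilinearKernelSize` / `BilinearTaps` are hypothetical names:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed kernel-size rule for bilinear upsampling by an integer factor.
int BilinearKernelSize(int scale) {
  assert(scale >= 1);
  return 2 * scale - scale % 2;
}

// 1-D bilinear taps in the FCN style:
// f = ceil(k / 2), c = (2f - 1 - f % 2) / (2f), w[x] = 1 - |x / f - c|.
// The 2-D kernel for one channel is the outer product of w with itself.
std::vector<double> BilinearTaps(int k) {
  const double f = std::ceil(k / 2.0);
  const double c = (2 * f - 1 - std::fmod(f, 2.0)) / (2 * f);
  std::vector<double> w(k);
  for (int x = 0; x < k; ++x) w[x] = 1.0 - std::fabs(x / f - c);
  return w;
}
```

With scale = 2 this gives k = 4 and taps 0.25, 0.75, 0.75, 0.25, so each of the 3 channels would get the same (1, 4, 4) slice built from their outer product.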

[GitHub] [incubator-mxnet] ngunauj commented on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

ngunauj commented on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

URL: https://github.com/apache/incubator-mxnet/issues/15656#issuecomment-515279181

> The documentation seems to be incomplete.
> https://mxnet.incubator.apache.org/api/python/ndarray/ndarray.html#mxnet.ndarray.UpSampling
>
> It doesn't mention about weight when using `bilinear`.
> Digging into the code,
> https://github.com/apache/incubator-mxnet/blob/8158ba4b0f1ebd696ec09a0b1aa6031bacb60740/src/operator/nn/upsampling.cc#L123-L173
>
> It mentions about requiring the weight and also gives an example.
> I have verified that the example works,
>
> ```
> from mxnet import nd
> x = nd.ones((1,3,3,3)) # NCHW
> w = nd.ones((3,1,4,4))
> upsampled = nd.UpSampling(x, w, scale=2, sample_type='bilinear', num_filter=3)
> ```

OK, I will try. Thanks @kshitij12345

[GitHub] [incubator-mxnet] ngunauj removed a comment on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

ngunauj removed a comment on issue #15656: Can you give me a UpSampling's doc? 'bilinear'!!

URL: https://github.com/apache/incubator-mxnet/issues/15656#issuecomment-515278914

> The documentation seems to be incomplete.
> https://mxnet.incubator.apache.org/api/python/ndarray/ndarray.html#mxnet.ndarray.UpSampling
>
> It doesn't mention about weight when using `bilinear`.
> Digging into the code,
> https://github.com/apache/incubator-mxnet/blob/8158ba4b0f1ebd696ec09a0b1aa6031bacb60740/src/operator/nn/upsampling.cc#L123-L173
>
> It mentions about requiring the weight and also gives an example.
> I have verified that the example works,
>
> ```
> from mxnet import nd
> x = nd.ones((1,3,3,3)) # NCHW
> w = nd.ones((3,1,4,4))
> upsampled = nd.UpSampling(x, w, scale=2, sample_type='bilinear', num_filter=3)
> ```

OK, I will try. Thanks.

[GitHub] [incubator-mxnet] larroy commented on a change in pull request #15109: [DOC] refine autograd docs

larroy commented on a change in pull request #15109: [DOC] refine autograd docs

URL: https://github.com/apache/incubator-mxnet/pull/15109#discussion_r307563953

##

File path: docs/api/python/autograd/autograd.md

##

@@ -76,7 +82,63 @@
Detailed tutorials are available in Part 1 of [the MXNet gluon book](http://gluon.mxnet.io/).

+# Higher order gradient
+
+Some operators support higher order gradients. Meaning that you calculate the gradient of the
+gradient. For this the operator's backward must be as well differentiable. Some operators support
+differentiating multiple times, and others two, most just once.
+
+For calculating higher order gradients, we can use the `mx.autograd.grad` function while recording
+and then call backward, or call `mx.autograd.grad` two times. If we do the later is important that
+the first call uses `create_graph=True` and `retain_graph=True` and the second call uses
+`create_graph=False` and `retain_graph=True`. Otherwise we will not get the results that we want. If

Review comment:

@apeforest @kshitij12345 I don't think pasting the graph here helps because it doesn't change; from my point of view it's only related to attaching autograd to arrays. There's a difference: you can retain and not create if you want to calculate gradients again. If you reuse the outputs in the graph, you should create. The details are in imperative.cc Backward, but they do in fact have slightly different behaviour. You can try it yourself: if you use heads=x_grad_grad in the third gradient, you need to have create=True in the previous call.

```
with autograd.record():
    y = op(x)
    x_grad = autograd.grad(heads=y, variables=x, head_grads=y_grad, create_graph=True, retain_graph=True)[0]
x_grad_grad = autograd.grad(heads=x_grad, variables=x, head_grads=head_grad_grads, create_graph=False, retain_graph=True)[0]
x_grad_grad_grad = autograd.grad(heads=x_grad, variables=x, head_grads=head_grad_grads, create_graph=False, retain_graph=True)[0]
#x_grad.backward(head_grad_grads)
```

In any case I don't see this as a reason to block the PR.

[GitHub] [incubator-mxnet] mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307560868

##

File path: tests/python/unittest/test_library_loading.py

##

@@ -0,0 +1,29 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+import mxnet as mx
+import os
+from mxnet.test_utils import download
+
+def test_library_loading():
+    if (os.name=='posix'):
+        lib = 'mylib.so'
+    elif (os.name=='nt'):
+        lib = 'mylib.dll'
+
+    fname = mx.test_utils.download('https://mxnet-demo-models.s3.amazonaws.com/lib_binary/'+lib)

Review comment:

Added.


[GitHub] [incubator-mxnet] mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307560982

##

File path: src/common/library.cc

##

@@ -0,0 +1,89 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+/*!

+ * Copyright (c) 2015 by Contributors

+ * \file library.cc

+ * \brief Dynamically loading accelerator library

+ * and accessing its functions

+ */

+

+#if defined(_WIN32) || defined(_WIN64) || defined(__WINDOWS__)

+#include <windows.h>

+#else

+#include <dlfcn.h>

+#endif

+

+#include "library.h"

+

+

+/*

+Loads the dynamic shared library file

+Parameter: Library file location

+Returns: handle for the loaded library, NULL if loading unsuccessful

+*/

+void* load_lib(const char* path) {

+  void *handle;
+#if defined(_WIN32) || defined(_WIN64) || defined(__WINDOWS__)
+  handle = LoadLibrary(path);
+  if (!handle) {
+    char *lpMsgBuf;
+    unsigned long dw = GetLastError();
+    FormatMessage(
+        FORMAT_MESSAGE_ALLOCATE_BUFFER |
+        FORMAT_MESSAGE_FROM_SYSTEM |
+        FORMAT_MESSAGE_IGNORE_INSERTS,
+        NULL,
+        dw,
+        MAKELANGID(LANG_NEUTRAL, SUBLANG_DEFAULT),
+        lpMsgBuf,

Review comment:

Done.


[GitHub] [incubator-mxnet] zachgk commented on issue #15662: cudnn Dropout reproducibility

zachgk commented on issue #15662: cudnn Dropout reproducibility

URL: https://github.com/apache/incubator-mxnet/issues/15662#issuecomment-515275803

I did a quick recursive grep and found several other usages of the rand function. Here is the full list I found (some of these may be correct):

- https://github.com/apache/incubator-mxnet/blob/master/src/operator/nn/dropout-inl.h#L95
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/nn/dropout-inl.h#L491
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/random/sampler.h#L110
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/rnn-inl.h#L1551
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/rnn_impl.h#L159
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/rnn_impl.h#L1004
- https://github.com/apache/incubator-mxnet/blob/master/src/operator/rnn_impl.h#L1891

[GitHub] [incubator-mxnet] mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

mseth10 commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307560428

##
File path: python/mxnet/library.py
##

@@ -0,0 +1,51 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+# coding: utf-8
+"""Library management API of mxnet."""
+from __future__ import absolute_import
+import ctypes
+import os
+from .base import _LIB
+from .base import check_call
+
+def load(path):
+    """Loads library dynamically.
+
+    Parameters
+    ----------
+    path : Path to library .so file
+
+    Returns
+    -------
+    void
+    """
+    #check if path exists
+    if not os.path.exists(path):

Review comment:

Added this check.

[GitHub] [incubator-mxnet] larroy removed a comment on issue #15285: Graph dumper

larroy removed a comment on issue #15285: Graph dumper URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515257152 It's ironic: given the amount of questionable code that has gone into the repo, having a single-header data structure with tests is a big issue. No worries, I will remove it.

[GitHub] [incubator-mxnet] larroy removed a comment on issue #15285: Graph dumper

larroy removed a comment on issue #15285: Graph dumper URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515269966 Just curious, am I the only one who sees the design problems of the indexed graph class?

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

This is an automated email from the ASF dual-hosted git repository.

marcoabreu pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
     new 11f1c0c  Bump the publish timestamp.

11f1c0c is described below

commit 11f1c0c009c14acc11f1de5560982b7eb5a34064
Author: mxnet-ci
AuthorDate: Fri Jul 26 01:17:40 2019 +

    Bump the publish timestamp.
---
 date.txt | 1 +
 1 file changed, 1 insertion(+)

diff --git a/date.txt b/date.txt
new file mode 100644
index 000..a7cd79a
--- /dev/null
+++ b/date.txt
@@ -0,0 +1 @@
+Fri Jul 26 01:17:40 UTC 2019

[GitHub] [incubator-mxnet] mxnet-label-bot commented on issue #15662: cudnn Dropout reproducibility

mxnet-label-bot commented on issue #15662: cudnn Dropout reproducibility URL: https://github.com/apache/incubator-mxnet/issues/15662#issuecomment-515271817 Hey, this is the MXNet Label Bot. Thank you for submitting the issue! I will try and suggest some labels so that the appropriate MXNet community members can help resolve it. Here are my recommended labels: Cuda, Bug

[GitHub] [incubator-mxnet] eric-haibin-lin opened a new issue #15662: cudnn Dropout reproducibility

eric-haibin-lin opened a new issue #15662: cudnn Dropout reproducibility

URL: https://github.com/apache/incubator-mxnet/issues/15662

https://github.com/apache/incubator-mxnet/blob/master/src/operator/nn/dropout-inl.h#L491-L493

The seed of the cuDNN dropout does not respect the random seed set in MXNet.
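The report is that the cuDNN dropout seed is not derived from MXNet's random seed. A minimal, framework-free sketch of the general pattern that makes such a seed reproducible, assuming a single user-seedable RNG: draw every backend seed from that RNG instead of from C `rand()`. `GlobalRNG` and `backend_dropout_seed` are hypothetical names for illustration, not MXNet APIs.

```python
import random


class GlobalRNG:
    """Hypothetical stand-in for a framework-wide RNG seeded by the user."""

    def __init__(self):
        self._rng = random.Random()

    def seed(self, value):
        self._rng.seed(value)

    def next_seed(self):
        # Draw a 64-bit seed for a backend component (e.g. a dropout descriptor).
        return self._rng.getrandbits(64)


def backend_dropout_seed(global_rng):
    """Derive the backend dropout seed from the global RNG, so that seeding
    the framework also fixes the seed handed to the backend."""
    return global_rng.next_seed()


rng = GlobalRNG()
rng.seed(42)
first = backend_dropout_seed(rng)
rng.seed(42)
assert backend_dropout_seed(rng) == first  # same user seed -> same backend seed
```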

[GitHub] [incubator-mxnet] larroy commented on issue #15285: Graph dumper

larroy commented on issue #15285: Graph dumper URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515269966 Just curious, am I the only one who sees the design problems of the indexed graph class?

[GitHub] [incubator-mxnet] larroy commented on a change in pull request #15331: [fix] missing input log higher order.

larroy commented on a change in pull request #15331: [fix] missing input log higher order.

URL: https://github.com/apache/incubator-mxnet/pull/15331#discussion_r307541582

##

File path: src/operator/tensor/elemwise_unary_op_basic.cc

##

@@ -1117,15 +1117,15 @@

MXNET_OPERATOR_REGISTER_BINARY_WITH_SPARSE_CPU_DR(_backward_log10,

unary_bwd)

.set_attr("FGradient",

[](const nnvm::NodePtr& n, const std::vector& ograds) {

-// ograds[0]: dL/dxgrad

+// ograds[0]: dL/dygrad

Review comment:

I think the notation is overcomplicating things, as it gets pretty hairy.

It's the head gradient of the previous (output) node, which has the shape of x and x_grad. So it has to be related to x, not y.

I think in Lagrange notation it would be $$F_{L_x}$$ (the derivative of some head function F with respect to L_x, the derivative of the first loss with respect to x, i.e. x_grad).
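A worked version of the distinction, assuming `_backward_log10` computes the first-order gradient elementwise as $L_x = L_y / (x \ln 10)$, with $L_y$ the head gradient of $y = \log_{10} x$ and $L_x$ the resulting x_grad. If $F$ is whatever consumes $L_x$, then `ograds[0]` is $F_{L_x} = \partial F / \partial L_x$, which has the shape of $x$, and the chain rule gives the two second-order terms:

```latex
L_x = \frac{L_y}{x \ln 10}
\qquad\Longrightarrow\qquad
\frac{\partial F}{\partial L_y} = \frac{F_{L_x}}{x \ln 10},
\qquad
\frac{\partial F}{\partial x} = -\,\frac{F_{L_x}\, L_y}{x^{2} \ln 10}
```

Both terms are scaled by $F_{L_x}$, which is why `ograds[0]` is naturally described in terms of x and x_grad rather than y.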


[GitHub] [incubator-mxnet] liav-teichner commented on issue #15486: mxnet profiler yields exception when multithreaded mxndarray IO operations occur in the background

liav-teichner commented on issue #15486: mxnet profiler yields exception when multithreaded mxndarray IO operations occur in the background URL: https://github.com/apache/incubator-mxnet/issues/15486#issuecomment-515259583 Hi, I am a co-worker of Aviv and would like to ask a further question: while we plan to move to the Gluon API and Gluon data loading, our current code uses the Module API and DataIter classes. To achieve acceptable data loading performance, we spawn child processes that load data from disk, augment it, and use NDArray.save to save it to shared memory. The main process, which executes the training loop, has a background thread that continuously calls a single MXNet function, NDArray.load, to load NDArrays from shared memory. Will this result in unexpected behaviour (i.e., having a single background thread that calls NDArray.load during training)? If it does, what's the easiest way to replace it with code that won't?
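A minimal sketch of the pipeline as described, with the MXNet calls replaced by stand-ins so it runs anywhere: a single background thread is the only caller of the load function and hands results to the training loop through a bounded `queue.Queue`. `load_from_shared_memory` is a hypothetical placeholder for `NDArray.load`; whether `NDArray.load` itself is safe to call off the main thread while the profiler is running is exactly the open question, and this sketch does not answer it.

```python
import queue
import threading

_SENTINEL = object()  # marks the end of the stream


def load_from_shared_memory(key):
    """Hypothetical placeholder for NDArray.load on a shared-memory file."""
    return {'key': key, 'data': [key] * 3}


def background_loader(keys, out_queue):
    # The only thread that calls the load function.
    for key in keys:
        out_queue.put(load_from_shared_memory(key))
    out_queue.put(_SENTINEL)


def training_loop(keys, max_prefetch=4):
    batches = queue.Queue(maxsize=max_prefetch)  # bounded: limits prefetched data
    loader = threading.Thread(target=background_loader, args=(keys, batches), daemon=True)
    loader.start()
    seen = []
    while True:
        batch = batches.get()
        if batch is _SENTINEL:
            break
        seen.append(batch['key'])  # a real loop would run forward/backward here
    loader.join()
    return seen


print(training_loop(range(5)))  # [0, 1, 2, 3, 4]
```

Keeping a single, well-defined caller makes it straightforward to move the load onto the main thread, or swap in another mechanism, if the background call turns out to be the problem.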

[GitHub] [incubator-mxnet] larroy commented on issue #15285: Graph dumper

larroy commented on issue #15285: Graph dumper URL: https://github.com/apache/incubator-mxnet/pull/15285#issuecomment-515257152 It's ironic: given the amount of questionable code that has gone into the repo, having a single-header data structure with tests is a big issue. No worries, I will remove it.

[GitHub] [incubator-mxnet] larroy commented on a change in pull request #15331: [fix] missing input log higher order.

larroy commented on a change in pull request #15331: [fix] missing input log higher order.

URL: https://github.com/apache/incubator-mxnet/pull/15331#discussion_r307541582

##

File path: src/operator/tensor/elemwise_unary_op_basic.cc

##

@@ -1117,15 +1117,15 @@

MXNET_OPERATOR_REGISTER_BINARY_WITH_SPARSE_CPU_DR(_backward_log10,

unary_bwd)

.set_attr("FGradient",

[](const nnvm::NodePtr& n, const std::vector& ograds) {

-// ograds[0]: dL/dxgrad

+// ograds[0]: dL/dygrad

Review comment:

I think the notation is overcomplicating things, as it gets pretty hairy.

It's the head gradient of the previous (output) node, which has the shape of x and x_grad. So it has to be related to x, not y.

I think in Lagrange notation it would be $$F_{L_x}$$


[GitHub] [incubator-mxnet] larroy commented on a change in pull request #15331: [fix] missing input log higher order.

larroy commented on a change in pull request #15331: [fix] missing input log higher order.

URL: https://github.com/apache/incubator-mxnet/pull/15331#discussion_r307541582

##

File path: src/operator/tensor/elemwise_unary_op_basic.cc

##

@@ -1117,15 +1117,15 @@

MXNET_OPERATOR_REGISTER_BINARY_WITH_SPARSE_CPU_DR(_backward_log10,

unary_bwd)

.set_attr("FGradient",

[](const nnvm::NodePtr& n, const std::vector& ograds) {

-// ograds[0]: dL/dxgrad

+// ograds[0]: dL/dygrad

Review comment:

I think the notation is overcomplicating things, as it gets pretty hairy.

It's the head gradient of the previous (output) node, which has the shape of x and x_grad. So it has to be related to x, not y.

I think in Lagrange notation it would be $F_{L_x}$


[GitHub] [incubator-mxnet] larroy commented on a change in pull request #15331: [fix] missing input log higher order.

larroy commented on a change in pull request #15331: [fix] missing input log higher order.

URL: https://github.com/apache/incubator-mxnet/pull/15331#discussion_r307541582

##

File path: src/operator/tensor/elemwise_unary_op_basic.cc

##

@@ -1117,15 +1117,15 @@

MXNET_OPERATOR_REGISTER_BINARY_WITH_SPARSE_CPU_DR(_backward_log10,

unary_bwd)

.set_attr("FGradient",

[](const nnvm::NodePtr& n, const std::vector& ograds) {

-// ograds[0]: dL/dxgrad

+// ograds[0]: dL/dygrad

Review comment:

I think the notation is overcomplicating things, as it gets pretty hairy.

It's the head gradient of the previous (output) node, which has the shape of x and x_grad. So it has to be related to x, not y.


[GitHub] [incubator-mxnet] samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307533906

##

File path: src/common/library.cc

##

@@ -0,0 +1,89 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+/*!

+ * Copyright (c) 2015 by Contributors

+ * \file library.cc

+ * \brief Dynamically loading accelerator library

+ * and accessing its functions

+ */

+

+#if defined(_WIN32) || defined(_WIN64) || defined(__WINDOWS__)

+#include <windows.h>

+#else

+#include <dlfcn.h>

+#endif

+

+#include "library.h"

+

+

+/*

+Loads the dynamic shared library file

+Parameter: Library file location

+Returns: handle for the loaded library, NULL if loading unsuccessful

+*/

+void* load_lib(const char* path) {

+  void *handle;
+#if defined(_WIN32) || defined(_WIN64) || defined(__WINDOWS__)
+  handle = LoadLibrary(path);
+  if (!handle) {
+    char *lpMsgBuf;
+    unsigned long dw = GetLastError();
+    FormatMessage(
+        FORMAT_MESSAGE_ALLOCATE_BUFFER |
+        FORMAT_MESSAGE_FROM_SYSTEM |
+        FORMAT_MESSAGE_IGNORE_INSERTS,
+        NULL,
+        dw,
+        MAKELANGID(LANG_NEUTRAL, SUBLANG_DEFAULT),
+        lpMsgBuf,

Review comment:

We should follow the Microsoft example and pass it cast to (LPSTR), i.e. (char*):

https://docs.microsoft.com/en-us/windows/win32/debug/retrieving-the-last-error-code
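On the Python side, `ctypes.CDLL` wraps the platform loader (`dlopen` on POSIX, `LoadLibrary` on Windows) and, as far as we understand CPython's implementation, turns a failed load into an `OSError` whose text is the loader's own message, which is the same information the `FormatMessage` call above is trying to recover. A minimal sketch, assuming the caller wants that message rather than a bare failure; `try_load` is a hypothetical helper, not part of the PR:

```python
import ctypes


def try_load(path):
    """Load a shared library with ctypes.

    Returns (handle, None) on success, or (None, message) where `message`
    is the text of the OSError raised by the platform loader.
    """
    try:
        return ctypes.CDLL(path), None
    except OSError as err:
        return None, str(err)


handle, message = try_load('/nonexistent/mylib.so')
print(handle is None, bool(message))  # True True
```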


[GitHub] [incubator-mxnet] samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307533543

##

File path: tests/python/unittest/test_library_loading.py

##

@@ -0,0 +1,29 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+import mxnet as mx

+import os

+from mxnet.test_utils import download

+

+def test_library_loading():
+    if (os.name=='posix'):
+        lib = 'mylib.so'
+    elif (os.name=='nt'):
+        lib = 'mylib.dll'
+
+    fname = mx.test_utils.download('https://mxnet-demo-models.s3.amazonaws.com/lib_binary/'+lib)

Review comment:

Let's add this code after line 28:

```

fname = os.path.abspath(fname)

```


[GitHub] [incubator-mxnet] samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

samskalicky commented on a change in pull request #15489: [WIP] Dynamic Library Loading Support

URL: https://github.com/apache/incubator-mxnet/pull/15489#discussion_r307533408

##

File path: python/mxnet/library.py

##

@@ -0,0 +1,51 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+# coding: utf-8

+"""Library management API of mxnet."""

+from __future__ import absolute_import

+import ctypes

+import os

+from .base import _LIB

+from .base import check_call

+

+def load(path):
+    """Loads library dynamically.
+
+    Parameters
+    ----------
+    path : Path to library .so file
+
+    Returns
+    -------
+    void
+    """
+    #check if path exists
+    if not os.path.exists(path):

Review comment:

Let's also require users to pass an absolute path, so that we don't have to worry about the case where a user gives a path that the dynamic loader cannot find. If we force users to provide an absolute path, it should always be found by the dynamic loader when we call dlopen.

We can check this by doing:

```
if not os.path.isabs(path):
    print('load path "%s" is not an absolute path' % path)
    return
```
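A minimal sketch combining the two checks discussed for `load()`, an absolute path and an existing file, assuming the function should raise rather than print and return (a design choice, not what the PR does). `check_library_path` is a hypothetical helper; the actual call into MXNet through `_LIB` and `check_call` is left out:

```python
import os


def check_library_path(path):
    """Validate a library path before handing it to the dynamic loader.

    Raises ValueError for relative paths, so the loader's own search rules
    never come into play, and OSError if the file does not exist.
    """
    if not os.path.isabs(path):
        raise ValueError('load path "%s" is not an absolute path' % path)
    if not os.path.exists(path):
        raise OSError('load path "%s" does not exist' % path)
    return path
```

Raising lets callers such as the unit test fail with a clear message instead of silently continuing. In the test, passing the downloaded file through `os.path.abspath` first, as suggested in the other review comment, yields a path that passes the absolute-path check.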


[GitHub] [incubator-mxnet] ChaiBapchya edited a comment on issue #15593: Large Index Support for Slice

ChaiBapchya edited a comment on issue #15593: Large Index Support for Slice

URL: https://github.com/apache/incubator-mxnet/pull/15593#issuecomment-515239154

How about using the `repeat` operator to convert an array of any dimension to 1D?

```
>>> mx.nd.repeat(mx.nd.array([[[1,2],[3,4]]]),repeats=1)
[1. 2. 3. 4.]
```

```
>>> mx.nd.repeat(mx.nd.array([[[1,2],[3,4]],[[5,6],[7,8]]]),repeats=1)
[1. 2. 3. 4. 5. 6. 7. 8.]
```
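The same flattening behaviour can be checked with NumPy, whose `repeat` flattens its input when no `axis` is given; `reshape(-1)` is the more direct way to get a 1-D result. This uses NumPy as a stand-in rather than `mx.nd`, on the assumption that `mx.nd.repeat` without `axis` mirrors NumPy here, as the output above suggests:

```python
import numpy as np

x = np.array([[[1, 2], [3, 4]], [[5, 6], [7, 8]]], dtype=np.float32)

flat_repeat = np.repeat(x, repeats=1)  # axis=None: the input is flattened first
flat_reshape = x.reshape(-1)           # -1 lets NumPy infer the length

print(flat_repeat)         # [1. 2. 3. 4. 5. 6. 7. 8.]
print(flat_reshape.shape)  # (8,)
```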

[GitHub] [incubator-mxnet] ChaiBapchya commented on issue #15593: Large Index Support for Slice

ChaiBapchya commented on issue #15593: Large Index Support for Slice

URL: https://github.com/apache/incubator-mxnet/pull/15593#issuecomment-515239154

How about using the `repeat` operator to convert an array of any dimension to 1D?

```
>>> mx.nd.repeat(mx.nd.array([[[1,2],[3,4]]]),repeats=1)
[1. 2. 3. 4.]
```

[GitHub] [incubator-mxnet] access2rohit commented on issue #15593: Large Index Support for Slice

access2rohit commented on issue #15593: Large Index Support for Slice URL: https://github.com/apache/incubator-mxnet/pull/15593#issuecomment-515236970 @apeforest: MXNet NDArray flatten still flattens to a 2D array instead of a 1D array. Can you suggest which operator to use to flatten to 1D?

[GitHub] [incubator-mxnet] ChaiBapchya opened a new pull request #15661: [OpPerf] PDF Random ops break the utility

ChaiBapchya opened a new pull request #15661: [OpPerf] PDF Random ops break the utility

URL: https://github.com/apache/incubator-mxnet/pull/15661

## Description ##
The newly added PDF random ops (https://github.com/apache/incubator-mxnet/pull/14617) caused opperf.py to error out due to parameter issues, so I added a new param `sample` to handle them.

## Checklist ##
### Essentials ###
Please feel free to remove inapplicable items for your PR.
- [x] Changes are complete (i.e. I finished coding on this PR)
- [x] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

### Changes ###
- [ ] added param

## Comments ##
Ran `python benchmark/opperf/opperf.py` successfully without error on CPU & GPU.

[incubator-mxnet] branch master updated: [Opperf] Add array rearrange operators to opperf (#15606)

This is an automated email from the ASF dual-hosted git repository.

skm pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:
     new b00bb81  [Opperf] Add array rearrange operators to opperf (#15606)

b00bb81 is described below

commit b00bb81fd4346196d7e059e3b051d1c74ba6e572
Author: Chaitanya Prakash Bapat
AuthorDate: Thu Jul 25 15:12:42 2019 -0700

    [Opperf] Add array rearrange operators to opperf (#15606)

    * add array rearrange operators to opperf
    * Trigger notification
    * 4d tensor, param support
    * new line
    * add alias logic
---
 benchmark/opperf/nd_operations/README.md           |  5 --
 benchmark/opperf/nd_operations/array_rearrange.py  | 57 ++
 benchmark/opperf/nd_operations/binary_operators.py |  4 +-
 benchmark/opperf/opperf.py                         |  4 ++
 benchmark/opperf/rules/default_params.py           | 15 +-
 benchmark/opperf/utils/op_registry_utils.py        | 22 +
 benchmark/opperf/utils/profiler_utils.py           | 20 ++--
 7 files changed, 116 insertions(+), 11 deletions(-)

diff --git a/benchmark/opperf/nd_operations/README.md b/benchmark/opperf/nd_operations/README.md
index b98a0d3..321158c 100644
--- a/benchmark/opperf/nd_operations/README.md
+++ b/benchmark/opperf/nd_operations/README.md
@@ -66,9 +66,7 @@
 46. linalg_extractdiag
 47. sgd_mom_update
 48. SequenceLast
-50. flip
 51. SequenceReverse
-52. swapaxes
 53. SVMOutput
 54. linalg_trsm
 55. where
@@ -82,7 +80,6 @@
 63. mp_sgd_mom_update
 64. choose_element_0index
 65. tile
-66. space_to_depth
 67. gather_nd
 69. SequenceMask
 70. reshape_like
@@ -110,14 +107,12 @@
 94. broadcast_like
 95. Embedding
 96. linalg_makediag
-97. transpose
 98. linalg_syrk
 99. squeeze
 101. ROIPooling
 102. ftrl_update
 103. SliceChannel
 104. slice_like
-105. depth_to_space
 106. linalg_maketrian
 108. pad
 109. LayerNorm
diff --git a/benchmark/opperf/nd_operations/array_rearrange.py b/benchmark/opperf/nd_operations/array_rearrange.py
new file mode 100644
index 000..151127c
--- /dev/null
+++ b/benchmark/opperf/nd_operations/array_rearrange.py
@@ -0,0 +1,57 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+import mxnet as mx
+from benchmark.opperf.utils.benchmark_utils import run_op_benchmarks
+from benchmark.opperf.utils.op_registry_utils import get_all_rearrange_operators
+
+"""Performance benchmark tests for MXNet NDArray Rearrange Operators.
+
+1. transpose
+2. swapaxes
+3. flip
+4. depth_to_space
+5. space_to_depth
+"""
+
+
+def run_rearrange_operators_benchmarks(ctx=mx.cpu(), dtype='float32', warmup=25, runs=100):
+    """Runs benchmarks with the given context and precision (dtype) for all the
+    rearrange operators in MXNet.
+
+    Parameters
+    ----------
+    ctx: mx.ctx
+        Context to run benchmarks
+    dtype: str, default 'float32'
+        Precision to use for benchmarks
+    warmup: int, default 25
+        Number of times to run for warmup
+    runs: int, default 100
+        Number of runs to capture benchmark results
+
+    Returns
+    -------
+    Dictionary of results. Key -> Name of the operator, Value -> Benchmark results.
+
+    """
+    # Fetch all optimizer operators
+    mx_rearrange_ops = get_all_rearrange_operators()
+
+    # Run benchmarks
+    mx_rearrange_op_results = run_op_benchmarks(mx_rearrange_ops, dtype, ctx, warmup, runs)
+    return mx_rearrange_op_results
diff --git a/benchmark/opperf/nd_operations/binary_operators.py b/benchmark/opperf/nd_operations/binary_operators.py
index cca8f9d..1898f5d 100644
--- a/benchmark/opperf/nd_operations/binary_operators.py
+++ b/benchmark/opperf/nd_operations/binary_operators.py
@@ -39,7 +39,7 @@ from benchmark.opperf.utils.op_registry_utils import get_all_broadcast_binary_op
 def run_mx_binary_broadcast_operators_benchmarks(ctx=mx.cpu(), dtype='float32', warmup=25, runs=100):
-    """Runs benchmarks with the given context and precision (dtype)for all the binary
+    """Runs benchmarks with the given context and precision (dtype) for all the binary
     broadcast operators in MXNet.
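Of the five rearrange operators benchmarked by this commit, `depth_to_space` and `space_to_depth` are the least obvious. A minimal NumPy sketch of both as a reshape, transpose, reshape sequence, assuming NCHW input and the transpose orders we understand MXNet's operator documentation to describe (`[0, 3, 4, 1, 5, 2]` and `[0, 3, 5, 1, 2, 4]`); treat it as an illustration, not a reference implementation:

```python
import numpy as np


def depth_to_space(x, block_size):
    """Move channel blocks into spatial blocks: (N, C, H, W) -> (N, C/b^2, H*b, W*b)."""
    n, c, h, w = x.shape
    b = block_size
    assert c % (b * b) == 0, "channels must be divisible by block_size**2"
    y = x.reshape(n, b, b, c // (b * b), h, w)
    y = y.transpose(0, 3, 4, 1, 5, 2)
    return y.reshape(n, c // (b * b), h * b, w * b)


def space_to_depth(x, block_size):
    """Inverse of depth_to_space: (N, C, H, W) -> (N, C*b^2, H/b, W/b)."""
    n, c, h, w = x.shape
    b = block_size
    assert h % b == 0 and w % b == 0, "H and W must be divisible by block_size"
    y = x.reshape(n, c, h // b, b, w // b, b)
    y = y.transpose(0, 3, 5, 1, 2, 4)
    return y.reshape(n, c * b * b, h // b, w // b)


x = np.arange(4).reshape(1, 4, 1, 1)
print(depth_to_space(x, 2)[0, 0].tolist())  # [[0, 1], [2, 3]]
```

Because the two functions are exact inverses, a round trip through both is a cheap sanity check when benchmarking them on random inputs.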

[GitHub] [incubator-mxnet] sandeep-krishnamurthy merged pull request #15606: [Opperf] Add array rearrange operators to opperf

sandeep-krishnamurthy merged pull request #15606: [Opperf] Add array rearrange operators to opperf URL: https://github.com/apache/incubator-mxnet/pull/15606

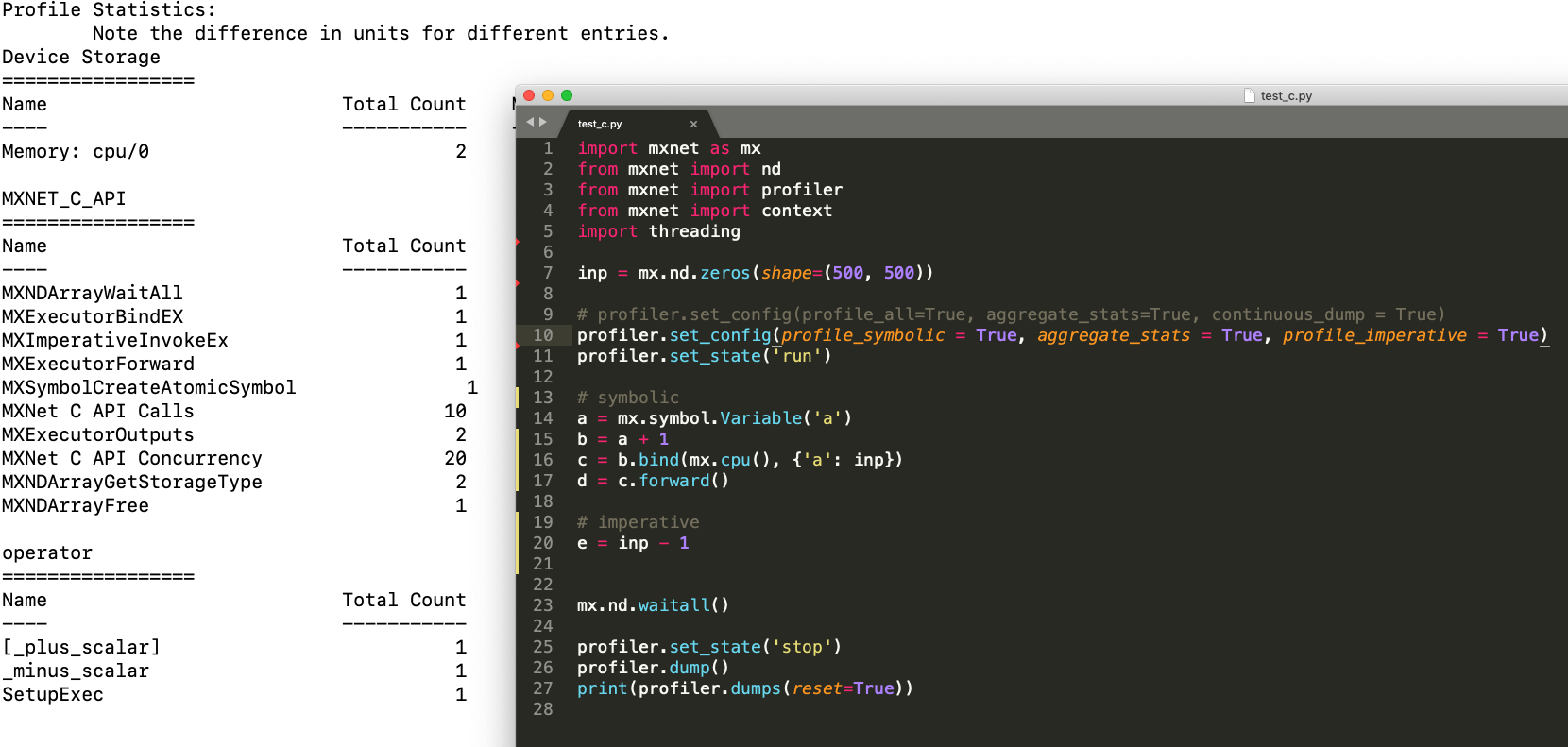

[GitHub] [incubator-mxnet] Zha0q1 edited a comment on issue #9686: [Discussion] MXNet 2.0 Roadmap (was: APIs that might be a good idea to break in 2.0)

Zha0q1 edited a comment on issue #9686: [Discussion] MXNet 2.0 Roadmap (was: APIs that might be a good idea to break in 2.0) URL: https://github.com/apache/incubator-mxnet/issues/9686#issuecomment-515231825 I would like to bring up one issue with the profiler: currently, there is a flag `kSimbolic` that is supposed to control whether operators called in symbolic mode are profiled. However, there is rarely a use case where users would want to set it to `False`; also, the backend does not use this flag at all, so even if it is set to `False`, the profiler still profiles symbolic operators. I have opened an issue about this: https://github.com/apache/incubator-mxnet/issues/15658. Maybe we should remove this flag in 2.0 to avoid confusion?
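To illustrate the concern, a minimal sketch of a bitmask profiler mode in which the symbolic bit is actually consulted at the point where an operator is recorded. `ProfilerMode`, its values, and `should_profile` are hypothetical and only loosely modelled on the flag named in the comment; they are not MXNet's implementation:

```python
from enum import IntFlag


class ProfilerMode(IntFlag):
    """Hypothetical profiler mode bits."""
    SYMBOLIC = 1
    IMPERATIVE = 2


def should_profile(mode, op_is_symbolic):
    """Record an operator only if the bit for its execution mode is set.

    The comment reports that the symbolic bit is never checked, which
    behaves as if this function always returned True for symbolic ops.
    """
    needed = ProfilerMode.SYMBOLIC if op_is_symbolic else ProfilerMode.IMPERATIVE
    return bool(mode & needed)


mode = ProfilerMode.IMPERATIVE  # the user turned the symbolic bit off
print(should_profile(mode, op_is_symbolic=True))   # False
print(should_profile(mode, op_is_symbolic=False))  # True
```

If the flag is removed instead, as proposed, the check disappears and symbolic operators are always recorded, which matches the behaviour described in the comment.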

[GitHub] [incubator-mxnet] Zha0q1 commented on issue #9686: [Discussion] MXNet 2.0 Roadmap (was: APIs that might be a good idea to break in 2.0)

Zha0q1 commented on issue #9686: [Discussion] MXNet 2.0 Roadmap (was: APIs that might be a good idea to break in 2.0) URL: https://github.com/apache/incubator-mxnet/issues/9686#issuecomment-515231825 I would like to bring up one issue with the profiler: currently, there is a flag `kSymbolic` that is supposed to control whether operators called in symbolic mode are profiled. However, there is rarely a use case where users would want to set it to `False`; also, the backend does not use this flag at all, so even if it is set to `False`, the profiler still profiles symbolic operators. I have opened an issue about this: https://github.com/apache/incubator-mxnet/issues/15658. Maybe we should remove this flag in 2.0?
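The comment above explains why a mode flag that the backend never checks is confusing. Here is a minimal sketch of that situation. It assumes the profiler mode is a bit mask in which one bit stands for symbolic operators. The enum name, bit values and `should_record` helper are hypothetical illustrations, not MXNet's source. Clearing the symbolic bit only has an effect if some code actually tests it:

```python
from enum import IntFlag


class ProfilerMode(IntFlag):
    """Hypothetical bit mask of what the profiler should record."""
    SYMBOLIC = 1
    IMPERATIVE = 2


def should_record(mode: ProfilerMode, is_symbolic_op: bool, honor_symbolic_bit: bool) -> bool:
    """Decide whether one operator call gets recorded.

    honor_symbolic_bit=False models the reported behavior: the symbolic bit is
    never tested, so symbolic operators are recorded whatever the mode says.
    """
    if not is_symbolic_op:
        return bool(mode & ProfilerMode.IMPERATIVE)
    if not honor_symbolic_bit:
        return True
    return bool(mode & ProfilerMode.SYMBOLIC)


# The user asks for imperative profiling only (symbolic bit cleared).
mode = ProfilerMode.IMPERATIVE
print(should_record(mode, is_symbolic_op=True, honor_symbolic_bit=False))  # True: flag ignored
print(should_record(mode, is_symbolic_op=True, honor_symbolic_bit=True))   # False: flag respected
```

Removing the flag, as proposed, would turn the first behavior into the documented one.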

[GitHub] [incubator-mxnet] larry77 commented on issue #15649: Installation Fails on Debian Stable Machine

larry77 commented on issue #15649: Installation Fails on Debian Stable Machine URL: https://github.com/apache/incubator-mxnet/issues/15649#issuecomment-515231136

Hi, and thanks!

The installation from source eventually fails for the same reasons. Look at this:

g++ -std=gnu++11 -shared -L/usr/lib/R/lib -Wl,-z,relro -o mxnet.so executor.o export.o im2rec.o io.o kvstore.o mxnet.o ndarray.o symbol.o -llapack -lblas -L/usr/lib/R/lib -lR
make[1]: Leaving directory '/home/lorenzo/incubator-mxnet/R-package/src'
installing to /usr/local/lib/R/site-library/00LOCK-R-package/00new/mxnet/libs
** R
** demo
** inst
** byte-compile and prepare package for lazy loading
Note: ... may be used in an incorrect context
** help
No man pages found in package ‘mxnet’
*** installing help indices
** building package indices
** installing vignettes
** testing if installed package can be loaded from temporary location
[1] "Loading local: inst/libs/libmxnet.so"
Error: package or namespace load failed for ‘mxnet’:
.onLoad failed in loadNamespace() for 'mxnet', details:
call: dyn.load("R-package/inst/libs/libmxnet.so", local = FALSE)
error: unable to load shared object '/home/lorenzo/incubator-mxnet/R-package/R-package/inst/libs/libmxnet.so':
/home/lorenzo/incubator-mxnet/R-package/R-package/inst/libs/libmxnet.so: cannot open shared object file: No such file or directory
Error: loading failed
Execution halted
ERROR: loading failed
* removing ‘/usr/local/lib/R/site-library/mxnet’
make: *** [Makefile:701: rpkg] Error 1

Banging my head against the wall
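The log shows a doubled `R-package/R-package` in the failing path. That is consistent with the relative path passed to `dyn.load()` being resolved against a working directory that is already `R-package`. Here is a minimal sketch of that resolution. It assumes the loader simply joins a relative path onto the current working directory. The working directory used below is inferred from the `make[1]: Leaving directory` line and is not confirmed. The `resolve` helper is a hypothetical illustration, not R's implementation:

```python
import posixpath


def resolve(cwd: str, path: str) -> str:
    """Resolve `path` as a loader would if it joins relative paths onto the
    current working directory; absolute paths pass through unchanged."""
    return posixpath.normpath(posixpath.join(cwd, path))


requested = "R-package/inst/libs/libmxnet.so"  # argument of dyn.load() in the log
# Assumed working directory during the load test (inferred, not confirmed).
print(resolve("/home/lorenzo/incubator-mxnet/R-package", requested))
# /home/lorenzo/incubator-mxnet/R-package/R-package/inst/libs/libmxnet.so
# The same relative path resolved from the repository root gives the non-nested location.
print(resolve("/home/lorenzo/incubator-mxnet", requested))
# /home/lorenzo/incubator-mxnet/R-package/inst/libs/libmxnet.so
```

If this is the cause, the fix lies in whatever sets the working directory or the relative path during the load test. This sketch does not show where that happens.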


[GitHub] [incubator-mxnet] Zha0q1 closed issue #15658: profile_symbolic flag not working in profiler

Zha0q1 closed issue #15658: profile_symbolic flag not working in profiler URL: https://github.com/apache/incubator-mxnet/issues/15658

[GitHub] [incubator-mxnet] zachgk commented on issue #15649: Installation Fails on Debian Stable Machine

zachgk commented on issue #15649: Installation Fails on Debian Stable Machine URL: https://github.com/apache/incubator-mxnet/issues/15649#issuecomment-515223474 Try running the following, which might fix the cuda.h problem. It adds your two lines to a copy of the default configuration, rather than creating a config.mk that contains only those two lines. The build does not load the default configuration if a config.mk file already exists.
```
cp make/config.mk config.mk
echo "USE_OPENCV = 1" >> ./config.mk
echo "USE_BLAS = openblas" >> ./config.mk
```
@aaronmarkham or @anirudhacharya, do you know what is wrong with the R installation?
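Here is a rough model of the rule zachgk describes. It assumes, as the comment says, that the build uses ./config.mk when it exists and otherwise falls back to make/config.mk. It treats each plain `KEY = VALUE` line as an assignment where the last one wins. The default values below are illustrative placeholders, not the contents of MXNet's make/config.mk. Real Make semantics (`?=`, `:=`, `+=`, conditionals) are ignored:

```python
import os
import shutil
import tempfile


def pick_config(build_dir):
    """Use ./config.mk if present, otherwise fall back to make/config.mk."""
    local = os.path.join(build_dir, "config.mk")
    return local if os.path.exists(local) else os.path.join(build_dir, "make", "config.mk")


def parse_config(path):
    """Read plain `KEY = VALUE` lines; a later assignment overrides an earlier one."""
    settings = {}
    with open(path) as f:
        for raw in f:
            line = raw.split("#", 1)[0].strip()  # drop comments
            if "=" in line:
                key, value = line.split("=", 1)
                settings[key.strip()] = value.strip()
    return settings


# Illustrative defaults only; the real make/config.mk has many more settings.
DEFAULTS = "USE_CUDA = 0\nUSE_OPENCV = 0\nUSE_BLAS = atlas\n"
EXTRA = "USE_OPENCV = 1\nUSE_BLAS = openblas\n"  # the two echoed lines


def demo(copy_defaults_first):
    with tempfile.TemporaryDirectory() as d:
        os.makedirs(os.path.join(d, "make"))
        with open(os.path.join(d, "make", "config.mk"), "w") as f:
            f.write(DEFAULTS)
        if copy_defaults_first:  # the `cp make/config.mk config.mk` step
            shutil.copy(os.path.join(d, "make", "config.mk"), os.path.join(d, "config.mk"))
        with open(os.path.join(d, "config.mk"), "a") as f:  # the `echo ... >>` steps
            f.write(EXTRA)
        return parse_config(pick_config(d))


print(demo(copy_defaults_first=False))  # only the two appended settings
print(demo(copy_defaults_first=True))   # defaults, with the two settings overridden
```

In the first case, none of the defaults are loaded at all. That is why copying make/config.mk before appending matters.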

[GitHub] [incubator-mxnet] zachgk opened a new pull request #15660: Bump Scala version to 1.6

zachgk opened a new pull request #15660: Bump Scala version to 1.6 URL: https://github.com/apache/incubator-mxnet/pull/15660 Fix API deprecation versions that were changed in #15072 @roywei @lanking520

[GitHub] [incubator-mxnet] Zha0q1 edited a comment on issue #15069: Profiler RFC: Introducing new APIs

Zha0q1 edited a comment on issue #15069: Profiler RFC: Introducing new APIs URL: https://github.com/apache/incubator-mxnet/issues/15069#issuecomment-515213970 > optionally adding json output seems reasonable. during development of this feature, the intel folks were asking for more aggregation of the output, such as convolution ops for different input shapes, for instance, which seems perfectly fair (there’s a discussion issue about it somewhere). Anyway, whatever y’all decide to do, try to keep open the extensibility to stuff like that (which would lend itself more to json output than text output). However, I still think it’s important to have simple text output available if desired, since it gives a nice, clean one-look overview. Thanks for the tips! We have added support for sorting and JSON output in this PR: https://github.com/apache/incubator-mxnet/pull/15132. Basically, we have added three new parameters to `dumps`, namely `sort_by`, `ascending`, and `format`, which control the aggregate stats output. We are keeping the old table view because it is clear to humans; the JSON format is intended for use cases where you want to parse the aggregate stats with a script.

[GitHub] [incubator-mxnet] Zha0q1 commented on issue #15069: Profiler RFC: Introducing new APIs

Zha0q1 commented on issue #15069: Profiler RFC: Introducing new APIs URL: https://github.com/apache/incubator-mxnet/issues/15069#issuecomment-515213970 > optionally adding json output seems reasonable. during development of this feature, the intel folks were asking for more aggregation of the output, such as convolution ops for different input shapes, for instance, which seems perfectly fair (there’s a discussion issue about it somewhere). Anyway, whatever y’all decide to do, try to keep open the extensibility to stuff like that (which would lend itself more to json output than text output). However, I still think it’s important to have simple text output available if desired, since it gives a nice, clean one-look overview. Thanks for the tips! We have added support for sorting and JSON output in this PR: https://github.com/apache/incubator-mxnet/pull/15132. Basically, we have added three new parameters to `dumps`, namely `sort_by`, `ascending`, and `format`, which control the aggregate stats output. We are keeping the old table view because it is clear to humans; the JSON format is intended for use cases where you want to parse the aggregate stats automatically.
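Here is a toy illustration of what the three `dumps` parameters named above control: a sort key, a sort direction, and a choice between a human-readable table and machine-readable JSON. The `dump_stats` function, the stats dictionary, the metric names and the millisecond unit are all hypothetical. This is not MXNet's `profiler.dumps` implementation or its output schema:

```python
import json

# Hypothetical aggregate stats: operator name -> metrics (times assumed in ms).
STATS = {
    "Convolution": {"count": 40, "total": 120.5},
    "FullyConnected": {"count": 20, "total": 30.0},
    "Activation": {"count": 60, "total": 12.25},
}


def dump_stats(stats, sort_by="total", ascending=False, format="table"):
    """Sort operators by one metric and render them as a table or as JSON."""
    rows = sorted(stats.items(), key=lambda kv: kv[1][sort_by], reverse=not ascending)
    if format == "json":
        # One object per operator, easy to consume from a script.
        return json.dumps([{"name": name, **metrics} for name, metrics in rows])
    if format != "table":
        raise ValueError(f"unknown format: {format}")
    lines = [f"{'Name':<16}{'Count':>8}{'Total (ms)':>12}"]
    for name, m in rows:
        lines.append(f"{name:<16}{m['count']:>8}{m['total']:>12.2f}")
    return "\n".join(lines)


print(dump_stats(STATS))  # table, largest total first
records = json.loads(dump_stats(STATS, sort_by="count", ascending=True, format="json"))
print([r["name"] for r in records])  # ['FullyConnected', 'Convolution', 'Activation']
```

Keeping the table as the default while offering JSON matches the trade-off described above: the table gives a quick overview, and the JSON form is easy to post-process.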

[GitHub] [incubator-mxnet] cjolivier01 commented on issue #15069: Profiler RFC: Introducing new APIs

cjolivier01 commented on issue #15069: Profiler RFC: Introducing new APIs URL: https://github.com/apache/incubator-mxnet/issues/15069#issuecomment-515211810 output was loosely based on pytorch, btw.

[GitHub] [incubator-mxnet] larry77 commented on issue #15649: Installation Fails on Debian Stable Machine

larry77 commented on issue #15649: Installation Fails on Debian Stable Machine URL: https://github.com/apache/incubator-mxnet/issues/15649#issuecomment-515211654 Hello, and thanks for helping out. I have the files:

~$ ls mxnet/lib/libmxnet.so
-rwxr-xr-x 1 lorenzo lorenzo 71740832 Jul 24 17:25 mxnet/lib/libmxnet.so
~$ ls mxnet/R-package/inst/libs/libmxnet.so
-rwxr-xr-x 1 root root 71740832 Jul 24 21:57 mxnet/R-package/inst/libs/libmxnet.so

What I do not have is /home/lorenzo/mxnet/R-package/R-package/inst/libs/libmxnet.so, because I do not have an R-package directory nested inside another R-package directory. This is the content of my R-package directory:

ls R-package/
total 40
drwxr-xr-x 2 lorenzo lorenzo 213 Jul 24 17:11 demo
-rw-r--r-- 1 lorenzo lorenzo 1036 Jul 24 17:11 DESCRIPTION
-rw-r--r-- 1 lorenzo lorenzo 507 Jul 24 17:11 dummy.NAMESPACE
drwxr-xr-x 4 root root 45 Jul 24 18:06 inst
-rw-r--r-- 1 lorenzo lorenzo 11350 Jul 24 17:11 LICENSE
-rw-r--r-- 1 root root 520 Jul 25 22:45 NAMESPACE
drwxr-xr-x 2 lorenzo lorenzo 4096 Jul 24 17:11 R
-rw-r--r-- 1 lorenzo lorenzo 1170 Jul 24 17:11 README.md
drwxr-xr-x 2 lorenzo lorenzo 4096 Jul 24 18:07 src
drwxr-xr-x 3 lorenzo lorenzo 30 Jul 24 17:11 tests
drwxr-xr-x 2 lorenzo lorenzo 4096 Jul 24 17:11 vignettes

And I did not alter anything by hand in the structure of the directory. All I did was:

git clone --recursive https://github.com/apache/incubator-mxnet.git mxnet
cd mxnet/docs/install
./install_mxnet_ubuntu_python.sh
./install_mxnet_ubuntu_r.sh

Could it be a trivial problem, meaning that everything is fine but for some reason R gets confused and looks for the library in the wrong place?

### As to building from source according to

$ git clone --recursive https://github.com/apache/incubator-mxnet
$ cd incubator-mxnet
$ echo "USE_OPENCV = 1" >> ./config.mk
$ echo "USE_BLAS = openblas" >> ./config.mk
$ make -j $(nproc)

I have a problem: the process fails due to the absence of cuda.h, but I would like to build for the CPU only.
Any suggestion is very welcome!

[GitHub] [incubator-mxnet] cjolivier01 commented on issue #15069: Profiler RFC: Introducing new APIs