[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

This is an automated email from the ASF dual-hosted git repository.

aaronmarkham pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
new 64477e4 Bump the publish timestamp.
64477e4 is described below

commit 64477e41768f9862a499426a2343fba1f5cea290
Author: mxnet-ci
AuthorDate: Sun Mar 1 06:42:27 2020 +
Bump the publish timestamp.
---
date.txt | 1 +
1 file changed, 1 insertion(+)

diff --git a/date.txt b/date.txt
new file mode 100644
index 000..cb4579e
--- /dev/null
+++ b/date.txt
@@ -0,0 +1 @@
+Sun Mar 1 06:42:27 UTC 2020

[GitHub] [incubator-mxnet] pengzhao-intel commented on issue #17702: Support projection feature for LSTM on CPU (Only Inference)

pengzhao-intel commented on issue #17702: Support projection feature for LSTM on CPU (Only Inference) URL: https://github.com/apache/incubator-mxnet/pull/17702#issuecomment-593059183 Thanks for all of your great work. I will merge the PR after it passes CI. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [incubator-mxnet] ChaiBapchya edited a comment on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing

ChaiBapchya edited a comment on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing URL: https://github.com/apache/incubator-mxnet/pull/17449#issuecomment-593053525

The full opperf suite was run initially (and is linked in the description), but was it run again after the subsequent commits, e.g. newly added ops and merges? Could you paste opperf results after commit 256ad70? Right now, with master (CUDA and cuDNN ON), the full opperf suite fails on lamb_update_phase1:

```
Traceback (most recent call last):
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 213, in
    sys.exit(main())
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 193, in main
    benchmark_results = run_all_mxnet_operator_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs)
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 111, in run_all_mxnet_operator_benchmarks
    mxnet_operator_benchmark_results.append(run_optimizer_operators_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs))
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/nd_operations/nn_optimizer_operators.py", line 142, in run_optimizer_operators_benchmarks
    mx_optimizer_op_results = run_op_benchmarks(mx_optimizer_ops, dtype, ctx, profiler, int64_tensor, warmup, runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 210, in run_op_benchmarks
    warmup=warmup, runs=runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 177, in run_performance_test
    benchmark_result = _run_nd_operator_performance_test(op, inputs, run_backward, warmup, runs, kwargs_list, profiler)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 114, in _run_nd_operator_performance_test
    _, _ = benchmark_helper_func(op, warmup, **kwargs_list[0])
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/profiler_utils.py", line 200, in cpp_profile_it
    res = func(*args, **kwargs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/ndarray_utils.py", line 97, in nd_forward_and_profile
    res = op(**kwargs_new)
  File "", line 113, in lamb_update_phase1
  File "/home/ubuntu/incubator-mxnet/python/mxnet/_ctypes/ndarray.py", line 91, in _imperative_invoke
    ctypes.byref(out_stypes)))
  File "/home/ubuntu/incubator-mxnet/python/mxnet/base.py", line 246, in check_call
    raise get_last_ffi_error()
mxnet.base.MXNetError: MXNetError: Required parameter wd of float is not presented, in operator lamb_update_phase1(name="", t="1", rescale_grad="0.4", epsilon="1e-08", beta2="0.1", beta1="0.1")
*** Error in `python': corrupted double-linked list: 0x55b58a93f6c0 ***
```

The PR that introduced lamb_update_phase1 to opperf (https://github.com/apache/incubator-mxnet/pull/17542) worked with CUDA and cuDNN ON, but now it doesn't.
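The error says that `lamb_update_phase1` was called without its required `wd` argument. The pure-Python sketch below shows where `wd` enters the phase-1 result. It assumes the element-wise update described in the MXNet operator docstring: rescale and clip the gradient, update the moments, optionally bias-correct, then add `wd * weight`. Check that formula against the docs before relying on it. The function name `lamb_phase1` and its list-based interface are illustrative only and are not MXNet's API. Making `wd` keyword-only with no default reproduces the "required parameter" failure.

```python
import math


def lamb_phase1(weight, grad, mean, var, *, beta1, beta2, epsilon, t, wd,
                bias_correction=True, rescale_grad=1.0, clip_gradient=-1.0):
    """Illustrative element-wise LAMB phase-1 step on Python lists.

    Returns (g, new_mean, new_var). `wd` has no default, so omitting it
    raises TypeError, analogous to the operator's required-parameter error.
    """
    g_out, new_mean, new_var = [], [], []
    for w, gr, m, v in zip(weight, grad, mean, var):
        gr = gr * rescale_grad
        if clip_gradient > 0:  # assumption: non-positive disables clipping
            gr = max(-clip_gradient, min(clip_gradient, gr))
        m = beta1 * m + (1.0 - beta1) * gr          # first moment
        v = beta2 * v + (1.0 - beta2) * gr * gr     # second moment
        if bias_correction:
            m_hat = m / (1.0 - beta1 ** t)
            v_hat = v / (1.0 - beta2 ** t)
        else:
            m_hat, v_hat = m, v
        # Weight decay is folded into the direction here, which is why wd is needed.
        g_out.append(m_hat / (math.sqrt(v_hat) + epsilon) + wd * w)
        new_mean.append(m)
        new_var.append(v)
    return g_out, new_mean, new_var


# The kwargs from the error message, without wd:
params = dict(t=1, rescale_grad=0.4, epsilon=1e-8, beta2=0.1, beta1=0.1)
try:
    lamb_phase1([1.0], [1.0], [0.0], [0.0], **params)
except TypeError as err:
    print("fails as in the traceback:", err)
g, m, v = lamb_phase1([1.0], [1.0], [0.0], [0.0], wd=0.01, **params)
```

If opperf builds the inputs for this operator from a defaults table, adding a `wd` entry there is one plausible fix. That is a guess based on the error text, not on the opperf source.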

[GitHub] [incubator-mxnet] ChaiBapchya edited a comment on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing

ChaiBapchya edited a comment on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing URL: https://github.com/apache/incubator-mxnet/pull/17449#issuecomment-593053525

The full opperf suite was run initially (and is linked in the description), but was it run again after the subsequent commits, e.g. newly added ops and merges? Could you paste opperf results after commit 56ad70? Right now, with master (CUDA and cuDNN ON), the full opperf suite fails on lamb_update_phase1:

```
Traceback (most recent call last):
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 213, in
    sys.exit(main())
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 193, in main
    benchmark_results = run_all_mxnet_operator_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs)
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 111, in run_all_mxnet_operator_benchmarks
    mxnet_operator_benchmark_results.append(run_optimizer_operators_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs))
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/nd_operations/nn_optimizer_operators.py", line 142, in run_optimizer_operators_benchmarks
    mx_optimizer_op_results = run_op_benchmarks(mx_optimizer_ops, dtype, ctx, profiler, int64_tensor, warmup, runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 210, in run_op_benchmarks
    warmup=warmup, runs=runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 177, in run_performance_test
    benchmark_result = _run_nd_operator_performance_test(op, inputs, run_backward, warmup, runs, kwargs_list, profiler)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 114, in _run_nd_operator_performance_test
    _, _ = benchmark_helper_func(op, warmup, **kwargs_list[0])
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/profiler_utils.py", line 200, in cpp_profile_it
    res = func(*args, **kwargs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/ndarray_utils.py", line 97, in nd_forward_and_profile
    res = op(**kwargs_new)
  File "", line 113, in lamb_update_phase1
  File "/home/ubuntu/incubator-mxnet/python/mxnet/_ctypes/ndarray.py", line 91, in _imperative_invoke
    ctypes.byref(out_stypes)))
  File "/home/ubuntu/incubator-mxnet/python/mxnet/base.py", line 246, in check_call
    raise get_last_ffi_error()
mxnet.base.MXNetError: MXNetError: Required parameter wd of float is not presented, in operator lamb_update_phase1(name="", t="1", rescale_grad="0.4", epsilon="1e-08", beta2="0.1", beta1="0.1")
*** Error in `python': corrupted double-linked list: 0x55b58a93f6c0 ***
```

The PR that introduced lamb_update_phase1 to opperf (https://github.com/apache/incubator-mxnet/pull/17542) worked with CUDA and cuDNN ON, but now it doesn't.

[GitHub] [incubator-mxnet] ChaiBapchya commented on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing

ChaiBapchya commented on issue #17449: [Large Tensor] Implemented LT flag for OpPerf testing URL: https://github.com/apache/incubator-mxnet/pull/17449#issuecomment-593053525

The full opperf suite was run initially (and is linked in the description), but was it run again after the subsequent commits, e.g. newly added ops and merges? Right now, with master (CUDA and cuDNN ON), the full opperf suite fails on lamb_update_phase1:

```
Traceback (most recent call last):
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 213, in
    sys.exit(main())
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 193, in main
    benchmark_results = run_all_mxnet_operator_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs)
  File "incubator-mxnet/benchmark/opperf/opperf.py", line 111, in run_all_mxnet_operator_benchmarks
    mxnet_operator_benchmark_results.append(run_optimizer_operators_benchmarks(ctx=ctx, dtype=dtype, profiler=profiler, int64_tensor=int64_tensor, warmup=warmup, runs=runs))
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/nd_operations/nn_optimizer_operators.py", line 142, in run_optimizer_operators_benchmarks
    mx_optimizer_op_results = run_op_benchmarks(mx_optimizer_ops, dtype, ctx, profiler, int64_tensor, warmup, runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 210, in run_op_benchmarks
    warmup=warmup, runs=runs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 177, in run_performance_test
    benchmark_result = _run_nd_operator_performance_test(op, inputs, run_backward, warmup, runs, kwargs_list, profiler)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/benchmark_utils.py", line 114, in _run_nd_operator_performance_test
    _, _ = benchmark_helper_func(op, warmup, **kwargs_list[0])
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/profiler_utils.py", line 200, in cpp_profile_it
    res = func(*args, **kwargs)
  File "/home/ubuntu/incubator-mxnet/benchmark/opperf/utils/ndarray_utils.py", line 97, in nd_forward_and_profile
    res = op(**kwargs_new)
  File "", line 113, in lamb_update_phase1
  File "/home/ubuntu/incubator-mxnet/python/mxnet/_ctypes/ndarray.py", line 91, in _imperative_invoke
    ctypes.byref(out_stypes)))
  File "/home/ubuntu/incubator-mxnet/python/mxnet/base.py", line 246, in check_call
    raise get_last_ffi_error()
mxnet.base.MXNetError: MXNetError: Required parameter wd of float is not presented, in operator lamb_update_phase1(name="", t="1", rescale_grad="0.4", epsilon="1e-08", beta2="0.1", beta1="0.1")
*** Error in `python': corrupted double-linked list: 0x55b58a93f6c0 ***
```

The PR that introduced lamb_update_phase1 to opperf (https://github.com/apache/incubator-mxnet/pull/17542) worked with CUDA and cuDNN ON, but now it doesn't.

[GitHub] [incubator-mxnet] leezu opened a new pull request #17732: Update 3rdparty/ps-lite

leezu opened a new pull request #17732: Update 3rdparty/ps-lite URL: https://github.com/apache/incubator-mxnet/pull/17732 Fixes #17299

[GitHub] [incubator-mxnet] leezu commented on issue #17658: Update website, README and NEWS with 1.6.0

leezu commented on issue #17658: Update website, README and NEWS with 1.6.0 URL: https://github.com/apache/incubator-mxnet/pull/17658#issuecomment-593048237 Retriggered the Windows GPU pipeline.

[GitHub] [incubator-mxnet] leezu commented on issue #17658: Update website, README and NEWS with 1.6.0

leezu commented on issue #17658: Update website, README and NEWS with 1.6.0 URL: https://github.com/apache/incubator-mxnet/pull/17658#issuecomment-593048160

> What is the timeline for getting Windows pip packages ready?

They need to be built manually. @yajiedesign was helping with that. @yajiedesign, do you have an ETA?

[GitHub] [incubator-mxnet] zixuanweeei edited a comment on issue #17632: [Large Tensor] Fixed RNN op

zixuanweeei edited a comment on issue #17632: [Large Tensor] Fixed RNN op URL: https://github.com/apache/incubator-mxnet/pull/17632#issuecomment-593045926 > @zixuanweeei Could you please take a look at the changes? Seems need coordinate with the changes in #17702. Let's wait for @connorgoggins updating type to `size_t` for some variables representing the size and his feedback. Overall looks good. We can get this merged first. Since `hidden_size`/`state_size` still remain `int`, I think there is not much to do for `projection_size` unless there are further concerns.

[GitHub] [incubator-mxnet] zixuanweeei commented on issue #17632: [Large Tensor] Fixed RNN op

zixuanweeei commented on issue #17632: [Large Tensor] Fixed RNN op URL: https://github.com/apache/incubator-mxnet/pull/17632#issuecomment-593045926 > @zixuanweeei Could you please take a look at the changes? Seems need coordinate with the changes in #17702. Let's wait for @connorgoggins updating type to `size_t` for some variables representing the size and his feedback. Overall looks good. We can get this merged first. Since `hidden_size`/`state_size` still remain `int`, I think there is not much to do for `projection_size` unless there are further considerations.

[GitHub] [incubator-mxnet] zixuanweeei commented on a change in pull request #17632: [Large Tensor] Fixed RNN op

zixuanweeei commented on a change in pull request #17632: [Large Tensor] Fixed RNN op URL: https://github.com/apache/incubator-mxnet/pull/17632#discussion_r386071456

##
File path: src/operator/rnn_impl.h
##
@@ -146,15 +146,15 @@ void LstmForwardTraining(DType* ws,
Tensor hx(hx_ptr, Shape3(total_layers, N, H));
Tensor cx(cx_ptr, Shape3(total_layers, N, H));
const int b_size = 2 * H * 4;

Review comment:
If I understand correctly, this is also why `hidden_size` remains `int`, right? If so, `b_size` here, which represents the total size of the i2h/h2h biases of the four gates, still carries some risk of overflow.
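To make the overflow concern concrete, the Python sketch below evaluates `2 * H * 4` as a 32-bit `int` would and compares it with a wide integer. Signed overflow is undefined behavior in C++. The two's-complement wraparound modeled by `as_int32` is only what mainstream compilers typically produce, so treat it as an illustration. The helper names are hypothetical.

```python
INT32_MIN, INT32_MAX = -(2 ** 31), 2 ** 31 - 1


def as_int32(value):
    """Wrap an exact integer to 32-bit two's complement (typical, not guaranteed, C++ behavior)."""
    value &= 0xFFFFFFFF
    return value - 2 ** 32 if value > INT32_MAX else value


def bias_size_int32(hidden_size):
    # Models `const int b_size = 2 * H * 4;` evaluated in 32-bit arithmetic.
    return as_int32(2 * hidden_size * 4)


def bias_size_wide(hidden_size):
    # What a 64-bit index type would hold instead.
    return 2 * hidden_size * 4


for H in (1024, 300_000_000):
    print(H, bias_size_int32(H), bias_size_wide(H))
# 1024 8192 8192
# 300000000 -1894967296 2400000000
```

The product first exceeds `INT32_MAX` at `H = 268_435_456` (that is, 2**28). A `hidden_size` that itself still fits comfortably in an `int` can therefore yield a `b_size` that does not.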

[GitHub] [incubator-mxnet] zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386069714

##

File path: src/operator/rnn_impl.h

##

@@ -219,7 +220,10 @@ void LstmForwardInferenceSingleLayer(DType* ws,

DType* cy_ptr) {

using namespace mshadow;

const Tensor wx(w_ptr, Shape2(H * 4, I));

- const Tensor wh(w_ptr + I * H * 4, Shape2(H * 4, H));

+ const Tensor wh(w_ptr + I * H * 4, Shape2(H * 4, (P ? P : H)));

+ Tensor whr(w_ptr, Shape2(1, 1));

+ if (P > 0)

+whr = Tensor(wh.dptr_ + P * 4 * H, Shape2(P, H));

Review comment:

Put them into the same line, as well as L236.
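The hunk above carves three views out of one flat weight buffer: `wx` at offset 0, `wh` at `I * H * 4`, and, when projection is on (`P > 0`), `whr` at `wh.dptr_ + P * 4 * H`. The sketch below computes those offsets and shapes in Python. It assumes a single unidirectional layer, it assumes `P == 0` means "no projection", and it omits the bias blocks. The function names are hypothetical. With projection, the recurrent matrix `wh` reads the `P`-wide projected state, and `whr` maps the `H`-wide cell output down to `P`.

```python
def lstm_layer_weight_layout(I, H, P=0):
    """Offsets and (rows, cols) of one LSTM layer's weight views, following the diff.

    Hypothetical helper; assumes one direction, P == 0 meaning "no projection",
    and no bias blocks.
    """
    r = P if P else H                   # width of the recurrent input h_{t-1}
    layout = {"wx": (0, (4 * H, I))}    # input-to-hidden, four gates stacked
    wh_off = I * H * 4
    layout["wh"] = (wh_off, (4 * H, r))  # hidden-to-hidden
    if P > 0:
        # Starts right after wh, i.e. wh.dptr_ + P * 4 * H in the diff.
        layout["whr"] = (wh_off + P * 4 * H, (P, H))
    return layout


def total_weight_count(layout):
    """Number of scalars the views span, assuming they are packed back to back."""
    return max(off + rows * cols for off, (rows, cols) in layout.values())


with_proj = lstm_layer_weight_layout(I=10, H=20, P=5)
no_proj = lstm_layer_weight_layout(I=10, H=20)
print(with_proj, total_weight_count(with_proj))
print(no_proj, total_weight_count(no_proj))
```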

[GitHub] [incubator-mxnet] zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386069647

##

File path: src/operator/rnn.cc

##

@@ -385,7 +399,9 @@ The definition of GRU here is slightly different from paper but compatible with

})

.set_attr("FInferShape", RNNShape)

.set_attr("FInferType", RNNType)

+#if MXNET_USE_MKLDNN == 1

Review comment:

Done. The default storage type inference function will be executed when FInferStorageType is not registered.
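The reply relies on a fallback rule: if an operator registers no `FInferStorageType`, a default inference function runs instead. The toy Python model below shows that lookup. It is not NNVM's or MXNet's registry. `ToyOpRegistry`, `default_infer_storage_type`, and `build_registry` are hypothetical, and the default rule here (every output dense) is a simplification. Registering the attribute only when a build flag is set mirrors the `#if MXNET_USE_MKLDNN == 1` guard in the diff.

```python
DEFAULT_STYPE = "default"


def default_infer_storage_type(in_stypes, num_outputs):
    """Toy default rule: every output uses dense ("default") storage."""
    return [DEFAULT_STYPE] * num_outputs


class ToyOpRegistry:
    """Hypothetical per-operator attribute table keyed by (op name, attr name)."""

    def __init__(self):
        self._attrs = {}

    def set_attr(self, op_name, attr_name, fn):
        self._attrs[(op_name, attr_name)] = fn
        return self  # allows chaining, loosely echoing .set_attr(...) style

    def infer_storage_type(self, op_name, in_stypes, num_outputs):
        # Prefer the operator's own FInferStorageType; otherwise use the default.
        fn = self._attrs.get((op_name, "FInferStorageType"),
                             default_infer_storage_type)
        return fn(in_stypes, num_outputs)


def build_registry(use_mkldnn):
    reg = ToyOpRegistry()
    if use_mkldnn:  # analogous to registering only under a compile-time flag
        reg.set_attr("RNN", "FInferStorageType",
                     lambda in_stypes, n: ["custom"] * n)  # placeholder rule
    return reg
```

Without the flag, `build_registry(False).infer_storage_type("RNN", ["default"], 3)` falls back to the default rule and returns three `"default"` entries.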

[GitHub] [incubator-mxnet] zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

zixuanweeei commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386069654

##

File path: src/operator/rnn.cc

##

@@ -427,7 +443,9 @@ NNVM_REGISTER_OP(_backward_RNN)

.set_attr_parser(ParamParser)

.set_attr("TIsLayerOpBackward", true)

.set_attr("TIsBackward", true)

+#if MXNET_USE_MKLDNN == 1

Review comment:

Done. Thanks.

[GitHub] [incubator-mxnet] ChaiBapchya commented on issue #17487: [OpPerf] Consolidate array manipulation related operators

ChaiBapchya commented on issue #17487: [OpPerf] Consolidate array manipulation related operators URL: https://github.com/apache/incubator-mxnet/pull/17487#issuecomment-593025725 CPU: entire OpPerf suite: https://gist.github.com/ChaiBapchya/5b5365563b12d5156515ebc62c3032c0

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

aaronmarkham pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
new f2263db Bump the publish timestamp.
f2263db is described below

commit f2263db0d6fa3bb7248d3c8bee2033960259350e
Author: mxnet-ci
AuthorDate: Sun Mar 1 00:42:40 2020 +
Bump the publish timestamp.
---
date.txt | 1 +
1 file changed, 1 insertion(+)

diff --git a/date.txt b/date.txt
new file mode 100644
index 000..eaabb32
--- /dev/null
+++ b/date.txt
@@ -0,0 +1 @@
+Sun Mar 1 00:42:40 UTC 2020

[GitHub] [incubator-mxnet] oleg-trott commented on issue #17472: Memory corruption issue with unravel_index

oleg-trott commented on issue #17472: Memory corruption issue with unravel_index URL: https://github.com/apache/incubator-mxnet/issues/17472#issuecomment-593006992

I can confirm it here too (mxnet 1.5.1 on Linux):

```
free(): invalid pointer
Aborted (core dumped)
```
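When narrowing down a crash like this, it can help to have a trusted reference for the inputs that do not crash, so the operator's results can be compared against it. The sketch below is a pure-Python, row-major `unravel_index`. It uses the same convention as NumPy's `numpy.unravel_index` with its default `order='C'`. It is not MXNet's implementation. Out-of-range indices raise an explicit error here rather than being indexed blindly.

```python
def unravel_index(flat_index, shape):
    """Row-major (C-order) unravel of a single flat index into a coordinate tuple."""
    size = 1
    for dim in shape:
        size *= dim
    if not 0 <= flat_index < size:
        raise IndexError(f"index {flat_index} is out of bounds for shape {tuple(shape)}")
    coords = []
    for dim in reversed(shape):  # peel off the fastest-varying axis first
        coords.append(flat_index % dim)
        flat_index //= dim
    return tuple(reversed(coords))


print(unravel_index(22, (7, 6)))    # (3, 4), since 3 * 6 + 4 == 22
print(unravel_index(5, (2, 3, 4)))  # (0, 1, 1), since 0 * 12 + 1 * 4 + 1 == 5
```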

[GitHub] [incubator-mxnet] ptrendx commented on issue #17658: Update website, README and NEWS with 1.6.0

ptrendx commented on issue #17658: Update website, README and NEWS with 1.6.0 URL: https://github.com/apache/incubator-mxnet/pull/17658#issuecomment-593000714

> Get Started for Windows is not covered in this PR. So users visiting https://mxnet.apache.org/get_started?version=v1.6.0=windows=python=gpu=pip; would see empty content.
>
> Could you include a paragraph about pip package for 1.6.0 not being available yet? That will be better than showing blank instructions.

What is the timeline for getting Windows pip packages ready?

[GitHub] [incubator-mxnet] oleg-trott edited a comment on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk

oleg-trott edited a comment on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk URL: https://github.com/apache/incubator-mxnet/issues/17684#issuecomment-592997242

@braindotai

> As given [here](https://mxnet.apache.org/api/python/docs/api/gluon/model_zoo/index.html) make sure that you are normalizing your image as below

I don't think the normalization is the culprit, if the previous layer outputs match. Keras probably uses the same network weights with all backends.

@Justobe I don't have the other frameworks installed, so I can't reproduce this, but my suggestion is: check the inputs to `relu`. Since it's a very simple function

```
x * (x > 0)
```

it should be easy to check that the output is what it's supposed to be. If it is not, use the input and output of `conv1_relu` to try to create a reproducible case that doesn't need other frameworks.

[GitHub] [incubator-mxnet] oleg-trott commented on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk

oleg-trott commented on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk URL: https://github.com/apache/incubator-mxnet/issues/17684#issuecomment-592997242

@braindotai

> As given [here](https://mxnet.apache.org/api/python/docs/api/gluon/model_zoo/index.html) make sure that you are normalizing your image as below

I don't think the normalization is the culprit, if the previous layer outputs match. Keras probably uses the same network weights with all backends.

@Justobe I don't have the other frameworks installed, so I can't reproduce this, but my suggestion is: check the inputs to `relu`. Since it's a very simple function

```
x * (x > 0)
```

it should be easy to check that the output is what it's supposed to be. If not, use the input and output to try to create a reproducible case that doesn't need other frameworks.
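The check suggested above can be done without any other framework. The sketch below assumes you have already dumped the flattened input and output of `conv1_relu` as Python floats, for example with `.asnumpy().ravel().tolist()` on the NDArrays. The helper names and the tolerance are illustrative choices. The function returns the positions where the output deviates from `x * (x > 0)`.

```python
def relu_reference(xs):
    # The definition quoted in the comment: x * (x > 0)
    return [x * (x > 0) for x in xs]


def relu_mismatches(inputs, outputs, atol=1e-6):
    """Indices where `outputs` differs from x * (x > 0) by more than `atol`."""
    if len(inputs) != len(outputs):
        raise ValueError("input and output must have the same number of elements")
    expected = relu_reference(inputs)
    return [i for i, (e, o) in enumerate(zip(expected, outputs)) if abs(e - o) > atol]


# A correct ReLU output yields no mismatches; a wrong element is reported by index.
print(relu_mismatches([-1.0, 0.0, 2.5], [0.0, 0.0, 2.5]))
print(relu_mismatches([-1.0, 2.0], [0.0, 1.9]))
```

If this reports mismatches, the saved input/output pair is itself a small, framework-independent reproducer.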

[incubator-mxnet] branch master updated: Fix get_started pip instructions (#17725)

lausen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 10a12d5 Fix get_started pip instructions (#17725)

10a12d5 is described below

commit 10a12d59f67ad21032a94b1721aaf9b96fddac85

Author: Leonard Lausen

AuthorDate: Sat Feb 29 13:09:12 2020 -0800

Fix get_started pip instructions (#17725)

The class attribute in the respective .md files should match one of the versions listed in the version dropdown specified in get_started.html

---

docs/static_site/src/_includes/get_started/linux/python/cpu/pip.md | 6 +++---

docs/static_site/src/_includes/get_started/linux/python/gpu/pip.md | 6 +++---

docs/static_site/src/_includes/get_started/macos/python/cpu/pip.md | 6 +++---

.../static_site/src/_includes/get_started/windows/python/cpu/pip.md | 6 +++---

.../static_site/src/_includes/get_started/windows/python/gpu/pip.md | 6 +++---

5 files changed, 15 insertions(+), 15 deletions(-)

diff --git a/docs/static_site/src/_includes/get_started/linux/python/cpu/pip.md b/docs/static_site/src/_includes/get_started/linux/python/cpu/pip.md

index 40eeb9c..08901ae 100644

--- a/docs/static_site/src/_includes/get_started/linux/python/cpu/pip.md

+++ b/docs/static_site/src/_includes/get_started/linux/python/cpu/pip.md

@@ -1,6 +1,6 @@

Run the following command:

-

+

{% highlight bash %}

$ pip install mxnet

{% endhighlight %}

@@ -13,7 +13,7 @@ in the https://mxnet.io/api/faq/perf#intel-cpu;>MXNet tuning guide.

$ pip install mxnet-mkl

{% endhighlight %}

-

+

@@ -118,4 +118,4 @@ $ pip install --pre mxnet-mkl -f https://dist.mxnet.io/python/all

-{% include /get_started/pip_snippet.md %}

\ No newline at end of file

+{% include /get_started/pip_snippet.md %}

diff --git a/docs/static_site/src/_includes/get_started/linux/python/gpu/pip.md b/docs/static_site/src/_includes/get_started/linux/python/gpu/pip.md

index f348621..8848edd 100644

--- a/docs/static_site/src/_includes/get_started/linux/python/gpu/pip.md

+++ b/docs/static_site/src/_includes/get_started/linux/python/gpu/pip.md

@@ -1,11 +1,11 @@

Run the following command:

-

+

{% highlight bash %}

$ pip install mxnet-cu101

{% endhighlight %}

-

+

{% highlight bash %}

@@ -71,4 +71,4 @@ $ pip install --pre mxnet-cu102 -f https://dist.mxnet.io/python/all

{% include /get_started/pip_snippet.md %}

-{% include /get_started/gpu_snippet.md %}

\ No newline at end of file

+{% include /get_started/gpu_snippet.md %}

diff --git a/docs/static_site/src/_includes/get_started/macos/python/cpu/pip.md b/docs/static_site/src/_includes/get_started/macos/python/cpu/pip.md

index a74910e..35c3b78 100644

--- a/docs/static_site/src/_includes/get_started/macos/python/cpu/pip.md

+++ b/docs/static_site/src/_includes/get_started/macos/python/cpu/pip.md

@@ -1,11 +1,11 @@

Run the following command:

-

+

{% highlight bash %}

$ pip install mxnet

{% endhighlight %}

-

+

{% highlight bash %}

@@ -70,4 +70,4 @@ $ pip install --pre mxnet -f https://dist.mxnet.io/python/all

-{% include /get_started/pip_snippet.md %}

\ No newline at end of file

+{% include /get_started/pip_snippet.md %}

diff --git a/docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md b/docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md

index 60a4b6a..7061794 100644

--- a/docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md

+++ b/docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md

@@ -1,12 +1,12 @@

Run the following command:

-

+

{% highlight bash %}

$ pip install mxnet

{% endhighlight %}

-

+

{% highlight bash %}

@@ -70,4 +70,4 @@ $ pip install --pre mxnet -f https://dist.mxnet.io/python/all

{% include /get_started/pip_snippet.md %}

-{% include /get_started/gpu_snippet.md %}

\ No newline at end of file

+{% include /get_started/gpu_snippet.md %}

diff --git a/docs/static_site/src/_includes/get_started/windows/python/gpu/pip.md b/docs/static_site/src/_includes/get_started/windows/python/gpu/pip.md

index cb57b13..194a5a3 100644

--- a/docs/static_site/src/_includes/get_started/windows/python/gpu/pip.md

+++ b/docs/static_site/src/_includes/get_started/windows/python/gpu/pip.md

@@ -1,12 +1,12 @@

Run the following command:

-

+

{% highlight bash %}

$ pip install mxnet-cu101

{% endhighlight %}

-

+

{% highlight bash %}

@@ -71,4 +71,4 @@ $ pip install --pre mxnet-cu102 -f https://dist.mxnet.io/python/all

{% include /get_started/pip_snippet.md %}

-{% include /get_started/gpu_snippet.md %}

\ No newline at end of file

+{% include /get_started/gpu_snippet.md %}
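Commit 10a12d5 states its rule as: every version class used in the `.md` includes must match a version in the `get_started.html` dropdown. The actual class values were lost from the diff lines in this archive, so the sketch below assumes a hypothetical class format like `class="v1-6-0"`. Adjust the `VERSION_LIKE` pattern to the real names. The function reports version-like classes that have no counterpart in the dropdown.

```python
import re

# Hypothetical format: version-specific blocks carry a CSS class such as "v1-6-0".
CLASS_ATTR = re.compile(r'class="([^"]*)"')
VERSION_LIKE = re.compile(r"^v\d+(?:[-.]\d+)*$")


def unmatched_version_classes(markdown_text, dropdown_versions):
    """Return version-like CSS classes in `markdown_text` absent from the dropdown."""
    known = set(dropdown_versions)
    unmatched = []
    for attr_value in CLASS_ATTR.findall(markdown_text):
        for cls in attr_value.split():
            if VERSION_LIKE.match(cls) and cls not in known and cls not in unmatched:
                unmatched.append(cls)
    return unmatched


doc = '<div class="v1-6-0 install">...</div>\n<div class="v1-6">...</div>'
print(unmatched_version_classes(doc, ["v1-6-0", "v1-5-1"]))  # ['v1-6']
```

Non-version classes such as `install` are ignored, so the check flags only blocks whose version label would never be selected by the dropdown.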

[GitHub] [incubator-mxnet] leezu merged pull request #17725: Fix get_started pip instructions

leezu merged pull request #17725: Fix get_started pip instructions URL: https://github.com/apache/incubator-mxnet/pull/17725

[GitHub] [incubator-mxnet] szha commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x

szha commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x URL: https://github.com/apache/incubator-mxnet/issues/17701#issuecomment-592984631 Contributors are encouraged to open PRs against the master branch. Since master is the development branch for 2.0, backward-incompatible changes are allowed there, while the 1.x branch only accepts backward-compatible changes.

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

aaronmarkham pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
new 5f128b7 Bump the publish timestamp.
5f128b7 is described below

commit 5f128b78adb43f9e7fe3fb040e72179ed93cf7ce
Author: mxnet-ci
AuthorDate: Sat Feb 29 18:40:07 2020 +
Bump the publish timestamp.
---
date.txt | 1 +
1 file changed, 1 insertion(+)

diff --git a/date.txt b/date.txt
new file mode 100644
index 000..5be2e7e
--- /dev/null
+++ b/date.txt
@@ -0,0 +1 @@
+Sat Feb 29 18:40:07 UTC 2020

[GitHub] [incubator-mxnet] leezu opened a new issue #17731: Large Tensor nightly test broken

leezu opened a new issue #17731: Large Tensor nightly test broken URL: https://github.com/apache/incubator-mxnet/issues/17731

`[2020-02-28T20:55:33.342Z] mxnet.base.MXNetError: MXNetError: Shape inconsistent, Provided = [43], inferred shape=[5032704]`

http://jenkins.mxnet-ci.amazon-ml.com/blue/organizations/jenkins/NightlyTestsForBinaries/detail/master/612/pipeline

The test ran on https://github.com/apache/incubator-mxnet/commit/b6002fd7e66651eae87026f076a1c42c1cb93db5, i.e. on the commit before the switch to the CMake build was merged.

[GitHub] [incubator-mxnet] leezu commented on a change in pull request #17725: Fix get_started pip instructions

leezu commented on a change in pull request #17725: Fix get_started pip instructions

URL: https://github.com/apache/incubator-mxnet/pull/17725#discussion_r386042558

##

File path: docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md

##

@@ -70,4 +70,4 @@ $ pip install --pre mxnet -f https://dist.mxnet.io/python/all

{% include /get_started/pip_snippet.md %}

-{% include /get_started/gpu_snippet.md %}

Review comment:

Yes. My editor ensures that each file ends with an EOL by default.
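
A minimal sketch of that editor behaviour, assuming "has EOL" simply means the file ends with a newline byte; `ensure_trailing_newline` is a hypothetical helper, not part of MXNet's docs tooling. Appending a missing final newline is the kind of change that makes git report the last line of a file as removed and re-added, as in the hunk above:

```python
def ensure_trailing_newline(path):
    """Append b"\\n" to a non-empty file that does not already end with one.

    Returns True if the file was modified, False otherwise.
    """
    with open(path, "rb") as f:
        data = f.read()
    if not data or data.endswith(b"\n"):
        return False
    with open(path, "ab") as f:
        f.write(b"\n")
    return True
```

Running it a second time on the same file is a no-op, so it is safe to apply to every `.md` include.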

[GitHub] [incubator-mxnet] sxjscience merged pull request #17400: [MXNET-#16167] Refactor Optimizer

sxjscience merged pull request #17400: [MXNET-#16167] Refactor Optimizer URL: https://github.com/apache/incubator-mxnet/pull/17400

[GitHub] [incubator-mxnet] ptrendx commented on a change in pull request #17725: Fix get_started pip instructions

ptrendx commented on a change in pull request #17725: Fix get_started pip instructions

URL: https://github.com/apache/incubator-mxnet/pull/17725#discussion_r386041095

##

File path: docs/static_site/src/_includes/get_started/windows/python/cpu/pip.md

##

@@ -70,4 +70,4 @@ $ pip install --pre mxnet -f https://dist.mxnet.io/python/all

{% include /get_started/pip_snippet.md %}

-{% include /get_started/gpu_snippet.md %}

Review comment:

I bet it is the EOL character: those .md files had no EOL before.

[GitHub] [incubator-mxnet] dorijanf commented on issue #17730: 1.6.0 installation error using pip on windows platform

dorijanf commented on issue #17730: 1.6.0 installation error using pip on windows platform URL: https://github.com/apache/incubator-mxnet/issues/17730#issuecomment-592965293 If I'm not mistaken, this is the same issue as https://github.com/apache/incubator-mxnet/issues/17719. Apparently it is being fixed.

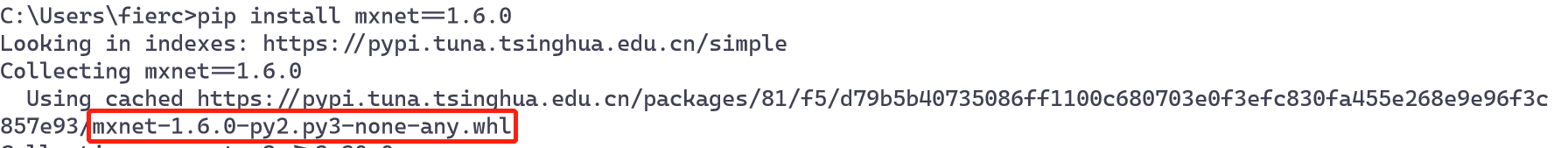

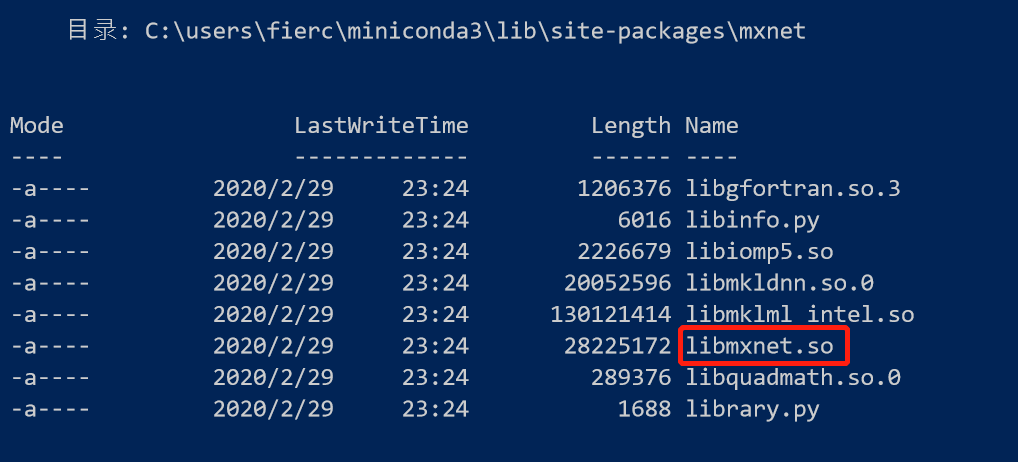

[GitHub] [incubator-mxnet] fierceX opened a new issue #17730: 1.6.0 installation error using pip on windows platform

fierceX opened a new issue #17730: 1.6.0 installation error using pip on windows platform URL: https://github.com/apache/incubator-mxnet/issues/17730

## Description

After installing with pip on the Windows platform, importing mxnet reports an error. It looks as if the installed package is not the Windows build.

```
RuntimeError: Cannot find the MXNet library. List of candidates:
c:\users\fierc\miniconda3\lib\site-packages\mxnet\libmxnet.dll
c:\users\fierc\miniconda3\lib\site-packages\mxnet\../../lib/libmxnet.dll
c:\users\fierc\miniconda3\lib\site-packages\mxnet\../../build/libmxnet.dll
c:\users\fierc\miniconda3\lib\site-packages\mxnet\../../build\libmxnet.dll
c:\users\fierc\miniconda3\lib\site-packages\mxnet\../../build\Release\libmxnet.dll
c:\users\fierc\miniconda3\lib\site-packages\mxnet\../../windows/x64\Release\libmxnet.dll
```
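
The candidate list suggests the loader probes a fixed set of paths relative to the installed `mxnet` package and gives up when none of them exists, which would be consistent with a wheel that ships no `libmxnet.dll`. A minimal sketch of that search pattern, assuming in-order probing; `windows_candidates`, `find_library`, and `CANDIDATE_SUBDIRS` are hypothetical names, not MXNet's actual loader code:

```python
import os

# Relative locations taken from the error message above (hypothetical reconstruction).
CANDIDATE_SUBDIRS = ["", "../../lib", "../../build", "../../build/Release",
                     "../../windows/x64/Release"]


def windows_candidates(package_dir, name="libmxnet.dll"):
    """Build the list of paths to probe, relative to the installed package directory."""
    return [os.path.join(package_dir, sub, name) for sub in CANDIDATE_SUBDIRS]


def find_library(candidates):
    """Return the first candidate that exists as a file, else raise RuntimeError."""
    for path in candidates:
        if os.path.isfile(path):
            return path
    raise RuntimeError("Cannot find the MXNet library. List of candidates:\n"
                       + "\n".join(candidates))
```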

[GitHub] [incubator-mxnet] Bumblebee269 opened a new issue #17729: ONNX import does not work

Bumblebee269 opened a new issue #17729: ONNX import does not work

URL: https://github.com/apache/incubator-mxnet/issues/17729

## Description

Opening an ONNX model fails with an error in load_model(...).

Looking into the code of mxnet/contrib/onnx/onnx2mx/import_model.py, it seems that load(...) should be invoked instead of load_model(...).

### Error Message

Exception message generated on line 58 in import_model.py:

module 'onnx' has no attribute 'load_model'

## To Reproduce

```python
import mxnet as mx
import mxnet.contrib.onnx as onnx_mxnet
import onnx

# Raw string so the Windows backslashes are not treated as escape sequences.
sym, arg, aux = onnx_mxnet.import_model(r"C:\Users\Fred\Downloads\dummy_model.onnx")
mx.viz.plot_network(sym, node_attrs={"shape": "oval", "fixedsize": "false"})
```

## Environment

Windows 10, 64-bit, VS2017, Anaconda-3 64 bit, mxnet 1.4.1
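
The message `module 'onnx' has no attribute 'load_model'` means the `onnx` object seen by `import_model.py` lacks that function. One possible cause, not confirmed in this report, is that a different module named `onnx` (for example a local `onnx.py`) or an unexpected onnx version is being imported. A quick stdlib-only check, using the hypothetical helper `describe_module`:

```python
import importlib


def describe_module(name, attr):
    """Return (file the module was loaded from, whether it defines `attr`)."""
    mod = importlib.import_module(name)
    return getattr(mod, "__file__", None), hasattr(mod, attr)


# On the affected machine one would run: describe_module("onnx", "load_model")
# A path outside site-packages (e.g. a local onnx.py) would point to shadowing.
```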

[GitHub] [incubator-mxnet] TaoLv commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x

TaoLv commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x URL: https://github.com/apache/incubator-mxnet/issues/17701#issuecomment-592947107 Nice. It's an essential step towards MXNet 2.0. I'm curious: do we still encourage contributors to create PRs against the master branch? What strategy should we follow to pick a commit into branch 1.x? Or do contributors need to create two PRs against the two branches simultaneously?

[GitHub] [incubator-mxnet] TaoLv commented on issue #17632: [Large Tensor] Fixed RNN op

TaoLv commented on issue #17632: [Large Tensor] Fixed RNN op URL: https://github.com/apache/incubator-mxnet/pull/17632#issuecomment-592945035 @zixuanweeei Could you please take a look at the changes? They seem to need coordination with the changes in #17702.

[incubator-mxnet] branch master updated: [MKLDNN] apply MKLDNNRun to quantized_act/transpose (#17689)

This is an automated email from the ASF dual-hosted git repository.

taolv pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 88b3051 [MKLDNN] apply MKLDNNRun to quantized_act/transpose (#17689)

88b3051 is described below

commit 88b3051f290c994daed5cac7c9724319b7b6aba0

Author: Wuxun Zhang

AuthorDate: Sat Feb 29 21:17:38 2020 +0800

[MKLDNN] apply MKLDNNRun to quantized_act/transpose (#17689)

* apply MKLDNNRun to quantized_act/transpose ops

* run CI

---

src/operator/quantization/mkldnn/mkldnn_quantized_act.cc | 2 +-

src/operator/tensor/matrix_op.cc | 2 +-

2 files changed, 2 insertions(+), 2 deletions(-)

diff --git a/src/operator/quantization/mkldnn/mkldnn_quantized_act.cc b/src/operator/quantization/mkldnn/mkldnn_quantized_act.cc

index bc69cb5..86acac8 100644

--- a/src/operator/quantization/mkldnn/mkldnn_quantized_act.cc

+++ b/src/operator/quantization/mkldnn/mkldnn_quantized_act.cc

@@ -40,7 +40,7 @@ static void MKLDNNQuantizedActForward(const nnvm::NodeAttrs& attrs,

<< "_contrib_quantized_act op only supports uint8 and int8 as input "

"type";

- MKLDNNActivationForward(attrs, ctx, in_data[0], req[0], out_data[0]);

+ MKLDNNRun(MKLDNNActivationForward, attrs, ctx, in_data[0], req[0], out_data[0]);

out_data[1].data().dptr()[0] = in_data[1].data().dptr()[0];

out_data[2].data().dptr()[0] = in_data[2].data().dptr()[0];

}

diff --git a/src/operator/tensor/matrix_op.cc b/src/operator/tensor/matrix_op.cc

index f00caf3..9e63730 100644

--- a/src/operator/tensor/matrix_op.cc

+++ b/src/operator/tensor/matrix_op.cc

@@ -289,7 +289,7 @@ static void TransposeComputeExCPU(const nnvm::NodeAttrs& attrs,

CHECK_EQ(outputs.size(), 1U);

if (SupportMKLDNNTranspose(param, inputs[0]) && req[0] == kWriteTo) {

-MKLDNNTransposeForward(attrs, ctx, inputs[0], req[0], outputs[0]);

+MKLDNNRun(MKLDNNTransposeForward, attrs, ctx, inputs[0], req[0], outputs[0]);

return;

}

FallBackCompute(Transpose, attrs, ctx, inputs, req, outputs);
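
In the quantized activation hunk only the call into the MKL-DNN kernel changes; the two lines after it still copy the input's min and max calibration values (`in_data[1]`, `in_data[2]`) to the outputs. A pure-Python sketch of those semantics, assuming the activation is ReLU and symmetric int8 quantization (so the integer 0 encodes 0.0 and ReLU can be applied to the integers directly); `quantized_relu` is hypothetical, not the MKL-DNN kernel:

```python
def quantized_relu(q_data, min_range, max_range):
    """ReLU on quantized int8 values; the calibration range passes through.

    Assumes symmetric int8 quantization, where the integer 0 encodes the
    real value 0.0, so clamping negatives to 0 is exact.
    """
    out = [q if q > 0 else 0 for q in q_data]
    # Mirrors out_data[1] = in_data[1] (min) and out_data[2] = in_data[2] (max).
    return out, min_range, max_range


print(quantized_relu([-5, 0, 7], -2.0, 3.0))  # ([0, 0, 7], -2.0, 3.0)
```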

[GitHub] [incubator-mxnet] TaoLv merged pull request #17689: [MKLDNN] apply MKLDNNRun to quantized_act/transpose

TaoLv merged pull request #17689: [MKLDNN] apply MKLDNNRun to quantized_act/transpose URL: https://github.com/apache/incubator-mxnet/pull/17689

[GitHub] [incubator-mxnet] szha commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x

szha commented on issue #17701: [RFC] New Branches for MXNet 1.x, 1.7.x, and 2.x URL: https://github.com/apache/incubator-mxnet/issues/17701#issuecomment-592944338 cc @apache/mxnet-committers

[GitHub] [incubator-mxnet] TaoLv commented on a change in pull request #17707: [MKLDNN] Remove overhead of sg_mkldnn_fullyconnected op

TaoLv commented on a change in pull request #17707: [MKLDNN] Remove overhead of sg_mkldnn_fullyconnected op

URL: https://github.com/apache/incubator-mxnet/pull/17707#discussion_r386027412

##

File path: src/operator/subgraph/mkldnn/mkldnn_fc.cc

##

@@ -143,20 +138,11 @@ void SgMKLDNNFCOp::Forward(const OpContext ,

}

min_data = in_data[base_num_inputs +

quantized_fullc::kDataMin].data().dptr()[0];

max_data = in_data[base_num_inputs +

quantized_fullc::kDataMax].data().dptr()[0];

-if (!mkldnn_param.enable_float_output) {

- total_num_outputs = base_num_outputs * 3;

-}

}

- CHECK_EQ(in_data.size(), total_num_inputs);

- CHECK_EQ(out_data.size(), total_num_outputs);

-

- NDArray data = in_data[fullc::kData];

- NDArray weight = in_data[fullc::kWeight];

- NDArray output = out_data[fullc::kOut];

- MKLDNNFCFlattenData(default_param, );

- if (initialized_ && mkldnn_param.quantized) {

-if (channel_wise) {

+ if (initialized_ && mkldnn_param.quantized &&

+ dmlc::GetEnv("MXNET_MKLDNN_QFC_DYNAMIC_PARAMS", 0)) {

Review comment:

Is this new?

[GitHub] [incubator-mxnet] TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386026804

##

File path: src/operator/rnn.cc

##

@@ -385,7 +399,9 @@ The definition of GRU here is slightly different from paper but compatible with

})

.set_attr("FInferShape", RNNShape)

.set_attr("FInferType", RNNType)

+#if MXNET_USE_MKLDNN == 1

Review comment:

Let's merge this check with the one at L407.

[GitHub] [incubator-mxnet] TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386027073

##

File path: src/operator/rnn_impl.h

##

@@ -219,7 +220,10 @@ void LstmForwardInferenceSingleLayer(DType* ws,

DType* cy_ptr) {

using namespace mshadow;

const Tensor wx(w_ptr, Shape2(H * 4, I));

- const Tensor wh(w_ptr + I * H * 4, Shape2(H * 4, H));

+ const Tensor wh(w_ptr + I * H * 4, Shape2(H * 4, (P ? P : H)));

+ Tensor whr(w_ptr, Shape2(1, 1));

+ if (P > 0)

+whr = Tensor(wh.dptr_ + P * 4 * H, Shape2(P, H));

Review comment:

Let's put this on the same line as `if (P > 0)`, or wrap it in `{ ... }`, as you do at L236.
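
For readers following the pointer arithmetic in this hunk: with a projection size `P > 0`, the recurrent weight `wh` takes the projected state (width `P`), and a projection matrix `whr` of shape `(P, H)` is stored right after `wh`. A pure-Python sketch of that layout and of one LSTM-with-projection step; the gate order (i, f, g, o), the absence of biases, and all function names are assumptions for illustration, not the MXNet kernel:

```python
import math


def lstmp_weight_layout(I, H, P):
    """Offset and shape of each weight matrix in one layer's flat buffer.

    Mirrors the pointer arithmetic in the hunk above: wx is (4H, I) at
    offset 0, wh is (4H, P or H) right after it, and, only when P > 0,
    whr is (P, H) right after wh.
    """
    R = P if P > 0 else H  # width of the recurrent input
    layout = {"wx": (0, (4 * H, I)), "wh": (4 * H * I, (4 * H, R))}
    if P > 0:
        layout["whr"] = (4 * H * I + 4 * H * P, (P, H))
    return layout


def matvec(W, x):
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstmp_step(x, r_prev, c_prev, wx, wh, whr, H):
    """One LSTM step with projection (no biases; gate order i, f, g, o assumed).

    r_prev is the previous projected state (length P); returns (r, c) where
    r = whr @ h has length P and c is the new cell state (length H).
    """
    z = [a + b for a, b in zip(matvec(wx, x), matvec(wh, r_prev))]
    i = [sigmoid(v) for v in z[0:H]]
    f = [sigmoid(v) for v in z[H:2 * H]]
    g = [math.tanh(v) for v in z[2 * H:3 * H]]
    o = [sigmoid(v) for v in z[3 * H:4 * H]]
    c = [fi * cp + ii * gi for fi, cp, ii, gi in zip(f, c_prev, i, g)]
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return matvec(whr, h), c  # project H -> P


print(lstmp_weight_layout(3, 4, 2))
# {'wx': (0, (16, 3)), 'wh': (48, (16, 2)), 'whr': (80, (2, 4))}
```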

[GitHub] [incubator-mxnet] TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386026951

##

File path: src/operator/rnn.cc

##

@@ -427,7 +443,9 @@ NNVM_REGISTER_OP(_backward_RNN)

.set_attr_parser(ParamParser)

.set_attr("TIsLayerOpBackward", true)

.set_attr("TIsBackward", true)

+#if MXNET_USE_MKLDNN == 1

Review comment:

Same here, merge this check with the one at L450.

[GitHub] [incubator-mxnet] TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

TaoLv commented on a change in pull request #17702: Support projection feature for LSTM on CPU (Only Inference)

URL: https://github.com/apache/incubator-mxnet/pull/17702#discussion_r386026898

##

File path: src/operator/rnn.cc

##

@@ -385,7 +399,9 @@ The definition of GRU here is slightly different from paper but compatible with

})

.set_attr("FInferShape", RNNShape)

.set_attr("FInferType", RNNType)

+#if MXNET_USE_MKLDNN == 1

Review comment:

Be aware that if MKL-DNN is not used, FInferStorageType will not be registered.

[incubator-mxnet-site] branch asf-site updated: Bump the publish timestamp.

This is an automated email from the ASF dual-hosted git repository. aaronmarkham pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-mxnet-site.git The following commit(s) were added to refs/heads/asf-site by this push: new 787dc7b Bump the publish timestamp. 787dc7b is described below commit 787dc7b228c62a247bb4f456423b4da9e6da92f5 Author: mxnet-ci AuthorDate: Sat Feb 29 12:40:22 2020 + Bump the publish timestamp. --- date.txt | 1 + 1 file changed, 1 insertion(+) diff --git a/date.txt b/date.txt new file mode 100644 index 000..301827f --- /dev/null +++ b/date.txt @@ -0,0 +1 @@ +Sat Feb 29 12:40:22 UTC 2020

[GitHub] [incubator-mxnet] pedrooct commented on issue #16379: Fail to run example code with Raspberry Pi

pedrooct commented on issue #16379: Fail to run example code with Raspberry Pi URL: https://github.com/apache/incubator-mxnet/issues/16379#issuecomment-592936643

> Hi, I'm getting the same error as you on my Raspberry Pi 3B. By Friday I will compile MXNet and try to create a wheel. I will do my best!

To compile without issues for the Raspberry Pi (at least in what I tested), add these parameters to the cmake command: **-DCMAKE_C_COMPILER=gcc-4.9 -DCMAKE_CXX_COMPILER=g++-4.9**. This forces the use of GCC 4.9. I tested it using the demo.py file and a yolo.py example.

[GitHub] [incubator-mxnet] braindotai commented on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk

braindotai commented on issue #17684: The output of the ReLU layer in MXNET is different from that in tensorflow and cntk URL: https://github.com/apache/incubator-mxnet/issues/17684#issuecomment-592934161 As described [here](https://mxnet.apache.org/api/python/docs/api/gluon/model_zoo/index.html), make sure that you normalize your image as follows:

```python
import mxnet as mx

# `image` is an HxWx3 NDArray loaded earlier (e.g. with mx.image.imread).
# Convert to float before scaling so the division is not done in an integer dtype.
image = image.astype('float32') / 255
normalized = mx.image.color_normalize(image,
                                      mean=mx.nd.array([0.485, 0.456, 0.406]),
                                      std=mx.nd.array([0.229, 0.224, 0.225]))
```
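
`color_normalize` subtracts `mean` and then divides by `std`, channel by channel. The same arithmetic on a single 8-bit RGB pixel, as a stdlib-only sketch (`normalize_pixel` is a hypothetical helper, not an MXNet function):

```python
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=MEAN, std=STD):
    """Scale 8-bit RGB to [0, 1] in floating point, then apply (x - mean) / std per channel."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, mean, std)]


print(normalize_pixel([255, 0, 128]))
```

The division is done in floating point here; doing it in an integer dtype would truncate almost every value to 0 before the mean and std are applied.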

[incubator-mxnet] branch master updated: [Large Tensor] Implemented LT flag for OpPerf testing (#17449)

This is an automated email from the ASF dual-hosted git repository.

apeforest pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 95c5189 [Large Tensor] Implemented LT flag for OpPerf testing (#17449)

95c5189 is described below

commit 95c5189cc7f1a6d8a45fde65f500d74b8fa02c53

Author: Connor Goggins

AuthorDate: Sat Feb 29 00:43:08 2020 -0800

[Large Tensor] Implemented LT flag for OpPerf testing (#17449)

* Passing large_tensor parameter down
* Adding large tensor testing functionality for convolutional operators
* Added large tensor test functionality for conv ops
* Fixing sizing for conv ops
* Added gemm large tensor, print on conv
* Updated input for gemm ops and print statements
* Fixed deconv large tensor test
* Added bias for deconv
* Added test functionality for nn_activation and nn_basic ops
* Fixed deconv bias, implemented large tensor test logic for general ops, added default data for large tensor test
* Dropped unnecessary print statements
* Fixed lint errors
* Added large_tensor parameter to existing function descriptions, added descriptions for functions missing descriptions
* Adding docs, changed large_tensor to int64_tensor for clarity
* Added warmup/runs to gemm ops, debugging process failure
* Resolved merge conflicts, added default params and input switching functionality
* Dynamic input handling for default inputs, additional custom data for int64
* Fixed RPD issue
* Everything through reduction ops working
* Random sampling & loss ops working
* Added indices, depth, ravel_data in default_params
* Added indexing ops - waiting for merge on ravel
* Added optimizer ops
* All misc ops working
* All NN Basic ops working
* Fixed LT input for ROIPooling
* Refactored NN Conv tests
* Added test for inline optimizer ops
* Dropping extra tests to decrease execution time
* Switching to inline tests for RNN to support additional modes
* Added state_cell as NDArray param, removed linalg testing for int64 tensor
* Cleaned up styling
* Fixed conv and deconv tests
* Retrigger CI for continuous build
* Cleaned up GEMM op inputs
* Dropped unused param from default_params

---

benchmark/opperf/nd_operations/array_rearrange.py | 8 +-
benchmark/opperf/nd_operations/binary_operators.py | 26 +-
benchmark/opperf/nd_operations/gemm_operators.py | 84 +++--
.../opperf/nd_operations/indexing_routines.py | 8 +-
benchmark/opperf/nd_operations/linalg_operators.py | 8 +-
benchmark/opperf/nd_operations/misc_operators.py | 73 ++--
.../nd_operations/nn_activation_operators.py | 10 +-
.../opperf/nd_operations/nn_basic_operators.py | 78 -
.../opperf/nd_operations/nn_conv_operators.py | 287 +++-
.../opperf/nd_operations/nn_loss_operators.py | 8 +-
.../opperf/nd_operations/nn_optimizer_operators.py | 66 ++--
.../nd_operations/random_sampling_operators.py | 8 +-
.../opperf/nd_operations/reduction_operators.py | 8 +-
.../nd_operations/sorting_searching_operators.py | 8 +-
benchmark/opperf/nd_operations/unary_operators.py | 26 +-
benchmark/opperf/opperf.py | 56 ++--
benchmark/opperf/rules/default_params.py | 371 -
benchmark/opperf/utils/benchmark_utils.py | 4 +-
benchmark/opperf/utils/op_registry_utils.py | 57 ++--

[GitHub] [incubator-mxnet] apeforest merged pull request #17449: [Large Tensor] Implemented LT flag for OpPerf testing

apeforest merged pull request #17449: [Large Tensor] Implemented LT flag for OpPerf testing URL: https://github.com/apache/incubator-mxnet/pull/17449

[incubator-mxnet] branch master updated: [Large Tensor] Fix cumsum op (#17677)

This is an automated email from the ASF dual-hosted git repository.

apeforest pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 2527553 [Large Tensor] Fix cumsum op (#17677)

2527553 is described below

commit 2527553e8c8bf34d919e21bb4f37e2e13b6b6834

Author: Connor Goggins

AuthorDate: Sat Feb 29 00:37:33 2020 -0800

[Large Tensor] Fix cumsum op (#17677)

* Implemented fix and nightly test for cumsum

* Changed IType to index_t

* Also changed in backward

* Reverting to IType

* Added type assertion on first element to force evaluation of output NDArray

* Reverted to IType in relevant places

* Last reversion

* Changed type assertion to value check

---

src/operator/numpy/np_cumsum-inl.h | 24

tests/nightly/test_large_array.py | 11 +++

2 files changed, 23 insertions(+), 12 deletions(-)

diff --git a/src/operator/numpy/np_cumsum-inl.h b/src/operator/numpy/np_cumsum-inl.h

index 375d83b..65e6581 100644

--- a/src/operator/numpy/np_cumsum-inl.h

+++ b/src/operator/numpy/np_cumsum-inl.h

@@ -60,17 +60,17 @@ struct CumsumParam : public dmlc::Parameter {

struct cumsum_forward {

template

- MSHADOW_XINLINE static void Map(int i,

+ MSHADOW_XINLINE static void Map(index_t i,

OType *out,

const IType *in,

- const int middle,

- const int trailing) {

-int left = i / trailing, right = i % trailing;

-int offset = left * middle * trailing + right;

+ const index_t middle,

+ const index_t trailing) {

+index_t left = i / trailing, right = i % trailing;

+index_t offset = left * middle * trailing + right;

const IType *lane_in = in + offset;

OType *lane_out = out + offset;

lane_out[0] = OType(lane_in[0]);

-for (int j = 1; j < middle; ++j) {

+for (index_t j = 1; j < middle; ++j) {

lane_out[j * trailing] = lane_out[(j - 1) * trailing] + OType(lane_in[j * trailing]);

}

}

@@ -125,17 +125,17 @@ void CumsumForward(const nnvm::NodeAttrs& attrs,

struct cumsum_backward {

template

- MSHADOW_XINLINE static void Map(int i,

+ MSHADOW_XINLINE static void Map(index_t i,

IType *igrad,

const OType *ograd,

- const int middle,

- const int trailing) {

-int left = i / trailing, right = i % trailing;

-int offset = left * middle * trailing + right;

+ const index_t middle,

+ const index_t trailing) {

+index_t left = i / trailing, right = i % trailing;

+index_t offset = left * middle * trailing + right;

const OType *lane_ograd = ograd + offset;

IType *lane_igrad = igrad + offset;

lane_igrad[(middle - 1) * trailing] = IType(lane_ograd[(middle - 1) * trailing]);

-for (int j = middle - 2; j >= 0; --j) {

+for (index_t j = middle - 2; j >= 0; --j) {

lane_igrad[j * trailing] = lane_igrad[(j + 1) * trailing] + IType(lane_ograd[j * trailing]);

}

}

diff --git a/tests/nightly/test_large_array.py b/tests/nightly/test_large_array.py

index ee57f17..222c452 100644

--- a/tests/nightly/test_large_array.py

+++ b/tests/nightly/test_large_array.py

@@ -504,6 +504,16 @@ def test_nn():

assert out.shape[0] == LARGE_TENSOR_SHAPE

+def check_cumsum():

+a = nd.ones((LARGE_X, SMALL_Y))

+axis = 1

+

+res = nd.cumsum(a=a, axis=axis)

+

+assert res.shape[0] == LARGE_X

+assert res.shape[1] == SMALL_Y

+assert res[0][SMALL_Y - 1] == 50.

+

check_gluon_embedding()

check_fully_connected()

check_dense()

@@ -527,6 +537,7 @@ def test_nn():

check_embedding()

check_spatial_transformer()

check_ravel()

+check_cumsum()

def test_tensor():
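
A pure-Python sketch of how the `cumsum_forward` kernel splits the work (hypothetical helper `cumsum_axis`, not MXNet code): each of the `leading * trailing` indices owns one lane of length `middle` with stride `trailing`, so it is the lane offset, not the loop index, that grows with the full tensor size. The last test line mirrors `check_cumsum`: a row of 50 ones sums to 50.

```python
def cumsum_axis(flat, shape, axis):
    """Cumulative sum along `axis` of a non-empty row-major array stored in `flat`.

    Hypothetical helper following the (leading, middle, trailing) split used
    by cumsum_forward: middle = shape[axis], trailing = product of the dims
    after `axis`, and each i in range(leading * trailing) scans one lane.
    """
    middle = shape[axis]
    trailing = 1
    for d in shape[axis + 1:]:
        trailing *= d
    leading = len(flat) // (middle * trailing)
    out = [0] * len(flat)
    for i in range(leading * trailing):
        left, right = divmod(i, trailing)
        offset = left * middle * trailing + right  # start of lane i
        out[offset] = flat[offset]
        for j in range(1, middle):
            k = offset + j * trailing
            out[k] = out[k - trailing] + flat[k]
    return out


print(cumsum_axis([1, 2, 3, 4, 5, 6], (2, 3), axis=1))  # [1, 3, 6, 4, 9, 15]
print(cumsum_axis([1, 2, 3, 4, 5, 6], (2, 3), axis=0))  # [1, 2, 3, 5, 7, 9]
```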

[GitHub] [incubator-mxnet] apeforest merged pull request #17677: [Large Tensor] Fix cumsum op

apeforest merged pull request #17677: [Large Tensor] Fix cumsum op URL: https://github.com/apache/incubator-mxnet/pull/17677
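
The `int` to `index_t` change matters because `offset = left * middle * trailing + right` is the flat index where a lane starts, and for large tensors it can exceed `2**31 - 1` even while `i` itself still fits in an `int`. A small Python model of the old 32-bit arithmetic versus a 64-bit `index_t` (assuming a build with large tensor support). The wraparound is only a model: signed overflow in C++ is undefined behavior. The shape used below is hypothetical.

```python
INT32_MAX = 2**31 - 1


def wrap_int32(x):
    """Model a signed 32-bit result with two's-complement wraparound."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x > INT32_MAX else x


def lane_offset(i, middle, trailing, bits=64):
    """Start offset of lane i, computed as in cumsum_forward.

    bits=32 models the old `int` arithmetic, bits=64 the new `index_t` one.
    """
    left, right = i // trailing, i % trailing
    offset = left * middle * trailing + right
    return wrap_int32(offset) if bits == 32 else offset


# Hypothetical large input: 50,000,001 rows of 50 columns, cumsum along axis 1,
# so middle = 50 and trailing = 1. Lane 50,000,000 starts at element 2.5e9.
print(lane_offset(50_000_000, 50, 1))           # 2500000000
print(lane_offset(50_000_000, 50, 1, bits=32))  # -1794967296
```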

[GitHub] [incubator-mxnet] huifengguo opened a new issue #17728: Poor performance when we use symbol.(.., .., stype="row-sparse") to define the variable

huifengguo opened a new issue #17728: Poor performance when we use symbol.(..,..,stype="row-sparse") to define the variable

URL: https://github.com/apache/incubator-mxnet/issues/17728

## Description

Task: CTR prediction

Model: DCN

Dataset: Criteo

Everything is the same except the definition of the embedding variable:

```python
import mxnet as mx

# Variant 1: default (dense) storage
embed_weight = mx.symbol.Variable('embed_weight', stype='default')
# Variant 2: row_sparse storage
embed_weight = mx.symbol.Variable('embed_weight', stype='row_sparse')

# `ids` and `hidden_units` are defined elsewhere in the reporter's model.
embed = mx.symbol.Embedding(data=ids, weight=embed_weight, input_dim=20,
                            output_dim=hidden_units[0], sparse_grad=True)
```

## Occurrences

With stype="default", the test AUC is 0.802, while with stype="row_sparse" it is 0.794.
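
The report does not establish a cause. One difference worth ruling out: in MXNet 1.x, optimizers such as SGD take a `lazy_update` option which, when both the weight and the gradient are `row_sparse`, updates only the rows present in the gradient. With `stype='default'` the weight is dense, so every row receives weight decay (and momentum) on every step. A pure-Python sketch of the two behaviours with plain SGD plus weight decay; the helper names are hypothetical, and real MXNet optimizers also handle momentum, `rescale_grad`, and clipping:

```python
def sgd_row(row, grad, lr, wd):
    # Plain SGD with weight decay: w <- w - lr * (g + wd * w)
    return [w - lr * (g + wd * w) for w, g in zip(row, grad)]


def sgd_dense(weight, grad_rows, lr, wd):
    """Update every row; rows missing from grad_rows get a zero gradient but still decay."""
    return [sgd_row(row, grad_rows.get(r, [0.0] * len(row)), lr, wd)
            for r, row in enumerate(weight)]


def sgd_lazy(weight, grad_rows, lr, wd):
    """Update only the rows present in the row_sparse gradient; others are left untouched."""
    new = [list(row) for row in weight]
    for r, g in grad_rows.items():
        new[r] = sgd_row(weight[r], g, lr, wd)
    return new


# Two embedding rows; only row 0 was looked up in this batch.
weight = [[1.0, 1.0], [1.0, 1.0]]
grad_rows = {0: [0.2, 0.2]}
dense = sgd_dense(weight, grad_rows, lr=0.1, wd=0.01)
lazy = sgd_lazy(weight, grad_rows, lr=0.1, wd=0.01)
# Row 0 matches in both; row 1 decays only in the dense update.
```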
