[jira] [Commented] (BAHIR-85) Redis Sink Connector should allow update of command without reinstatiation

[ https://issues.apache.org/jira/browse/BAHIR-85?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17077007#comment-17077007 ] ASF GitHub Bot commented on BAHIR-85: - eskabetxe commented on issue #60: BAHIR-85: make it possible to change additional key without restarting URL: https://github.com/apache/bahir-flink/pull/60#issuecomment-610225699 @lresende I help you This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Redis Sink Connector should allow update of command without reinstatiation > --- > > Key: BAHIR-85 > URL: https://issues.apache.org/jira/browse/BAHIR-85 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Atharva Inamdar >Priority: Major > > ref: FLINK-5478 > `getCommandDescription()` gets called when RedisSink is instantiated. This > happens only once and thus doesn't allow the command to be updated during run > time. > Use Case: > As a dev I want to store some data by day. So each key will have some date > specified. this will change over course of time. for example: > `counts_for_148426560` for 2017-01-13. This is not limited to any > particular command. > connector: > https://github.com/apache/bahir-flink/blob/master/flink-connector-redis/src/main/java/org/apache/flink/streaming/connectors/redis/RedisSink.java#L114 > I wish `getCommandDescription()` could be called in `invoke()` so that the > key can be updated without having to restart. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-85) Redis Sink Connector should allow update of command without reinstatiation

[ https://issues.apache.org/jira/browse/BAHIR-85?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17076861#comment-17076861 ] ASF GitHub Bot commented on BAHIR-85: - lresende commented on issue #60: BAHIR-85: make it possible to change additional key without restarting URL: https://github.com/apache/bahir-flink/pull/60#issuecomment-610154539 Any volunteers to help with a release ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Redis Sink Connector should allow update of command without reinstatiation > --- > > Key: BAHIR-85 > URL: https://issues.apache.org/jira/browse/BAHIR-85 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Atharva Inamdar >Priority: Major > > ref: FLINK-5478 > `getCommandDescription()` gets called when RedisSink is instantiated. This > happens only once and thus doesn't allow the command to be updated during run > time. > Use Case: > As a dev I want to store some data by day. So each key will have some date > specified. this will change over course of time. for example: > `counts_for_148426560` for 2017-01-13. This is not limited to any > particular command. > connector: > https://github.com/apache/bahir-flink/blob/master/flink-connector-redis/src/main/java/org/apache/flink/streaming/connectors/redis/RedisSink.java#L114 > I wish `getCommandDescription()` could be called in `invoke()` so that the > key can be updated without having to restart. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-85) Redis Sink Connector should allow update of command without reinstatiation

[ https://issues.apache.org/jira/browse/BAHIR-85?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17076852#comment-17076852 ] ASF GitHub Bot commented on BAHIR-85: - Zentopia commented on issue #60: BAHIR-85: make it possible to change additional key without restarting URL: https://github.com/apache/bahir-flink/pull/60#issuecomment-610145753 It has been merged. But the version in maven central is still 1.0. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Redis Sink Connector should allow update of command without reinstatiation > --- > > Key: BAHIR-85 > URL: https://issues.apache.org/jira/browse/BAHIR-85 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Atharva Inamdar >Priority: Major > > ref: FLINK-5478 > `getCommandDescription()` gets called when RedisSink is instantiated. This > happens only once and thus doesn't allow the command to be updated during run > time. > Use Case: > As a dev I want to store some data by day. So each key will have some date > specified. this will change over course of time. for example: > `counts_for_148426560` for 2017-01-13. This is not limited to any > particular command. > connector: > https://github.com/apache/bahir-flink/blob/master/flink-connector-redis/src/main/java/org/apache/flink/streaming/connectors/redis/RedisSink.java#L114 > I wish `getCommandDescription()` could be called in `invoke()` so that the > key can be updated without having to restart. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-85) Redis Sink Connector should allow update of command without reinstatiation

[ https://issues.apache.org/jira/browse/BAHIR-85?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17076847#comment-17076847 ] ASF GitHub Bot commented on BAHIR-85: - Zentopia commented on issue #60: BAHIR-85: make it possible to change additional key without restarting URL: https://github.com/apache/bahir-flink/pull/60#issuecomment-610145753 Any updates on this? We need it. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Redis Sink Connector should allow update of command without reinstatiation > --- > > Key: BAHIR-85 > URL: https://issues.apache.org/jira/browse/BAHIR-85 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Atharva Inamdar >Priority: Major > > ref: FLINK-5478 > `getCommandDescription()` gets called when RedisSink is instantiated. This > happens only once and thus doesn't allow the command to be updated during run > time. > Use Case: > As a dev I want to store some data by day. So each key will have some date > specified. this will change over course of time. for example: > `counts_for_148426560` for 2017-01-13. This is not limited to any > particular command. > connector: > https://github.com/apache/bahir-flink/blob/master/flink-connector-redis/src/main/java/org/apache/flink/streaming/connectors/redis/RedisSink.java#L114 > I wish `getCommandDescription()` could be called in `invoke()` so that the > key can be updated without having to restart. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-220) Add redis descriptor to make redis connection as a table

[

https://issues.apache.org/jira/browse/BAHIR-220?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17059135#comment-17059135

]

ASF GitHub Bot commented on BAHIR-220:

--

lresende commented on pull request #72: [BAHIR-220] Add redis descriptor to

make redis connection as a table

URL: https://github.com/apache/bahir-flink/pull/72

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add redis descriptor to make redis connection as a table

>

>

> Key: BAHIR-220

> URL: https://issues.apache.org/jira/browse/BAHIR-220

> Project: Bahir

> Issue Type: Improvement

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: yuemeng

>Priority: Major

>

> currently, for Flink-1.9.0, we can use the catalog to store our stream table

> source and sink

> for Redis connector, it should exist a Redis table sink so we can register it

> to catalog, and use Redis as a table in SQL environment

> {code}

> Redis redis = new Redis()

> .mode(RedisVadidator.REDIS_CLUSTER)

> .command(RedisCommand.INCRBY_EX.name())

> .ttl(10)

> .property(RedisVadidator.REDIS_NODES, REDIS_HOST+ ":" +

> REDIS_PORT);

> tableEnvironment

> .connect(redis).withSchema(new Schema()

> .field("k", TypeInformation.of(String.class))

> .field("v", TypeInformation.of(Long.class)))

> .registerTableSink("redis");

> tableEnvironment.sqlUpdate("insert into redis select k, v from t1");

> env.execute("Test Redis Table");

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-220) Add redis descriptor to make redis connection as a table

[

https://issues.apache.org/jira/browse/BAHIR-220?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17052388#comment-17052388

]

ASF GitHub Bot commented on BAHIR-220:

--

eskabetxe commented on issue #72: [BAHIR-220] Add redis descriptor to make

redis connection as a table

URL: https://github.com/apache/bahir-flink/pull/72#issuecomment-595364359

@lresende LGTM

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add redis descriptor to make redis connection as a table

>

>

> Key: BAHIR-220

> URL: https://issues.apache.org/jira/browse/BAHIR-220

> Project: Bahir

> Issue Type: Improvement

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: yuemeng

>Priority: Major

>

> currently, for Flink-1.9.0, we can use the catalog to store our stream table

> source and sink

> for Redis connector, it should exist a Redis table sink so we can register it

> to catalog, and use Redis as a table in SQL environment

> {code}

> Redis redis = new Redis()

> .mode(RedisVadidator.REDIS_CLUSTER)

> .command(RedisCommand.INCRBY_EX.name())

> .ttl(10)

> .property(RedisVadidator.REDIS_NODES, REDIS_HOST+ ":" +

> REDIS_PORT);

> tableEnvironment

> .connect(redis).withSchema(new Schema()

> .field("k", TypeInformation.of(String.class))

> .field("v", TypeInformation.of(Long.class)))

> .registerTableSink("redis");

> tableEnvironment.sqlUpdate("insert into redis select k, v from t1");

> env.execute("Test Redis Table");

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-222) Update Readme with details of SQL Streaming SQS connector

[ https://issues.apache.org/jira/browse/BAHIR-222?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17006589#comment-17006589 ] ASF GitHub Bot commented on BAHIR-222: -- abhishekd0907 commented on issue #96: [BAHIR-222] Update Readme with details of SQL Streaming SQS connector URL: https://github.com/apache/bahir/pull/96#issuecomment-570117872 sure will do @lresende. just wanted to confirm if the code changes required will be similar to these 2 commits: [Update doc script with newly added extensions](https://github.com/apache/bahir-website/commit/0995f1ef61f0ad7c10e44bf3fd5beb661c09f3af) [Add template for new pubnub spark extension](https://github.com/apache/bahir-website/commit/a4e3d3d356bba45faf8c120be999339470d40178) Is that all or am I missing out something? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Update Readme with details of SQL Streaming SQS connector > - > > Key: BAHIR-222 > URL: https://issues.apache.org/jira/browse/BAHIR-222 > Project: Bahir > Issue Type: Task > Components: Spark Structured Streaming Connectors >Affects Versions: Not Applicable >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Spark-2.4.0 > > > Adding link to SQL Streaming SQS connector in BAHIR Readme. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-222) Update Readme with details of SQL Streaming SQS connector

[ https://issues.apache.org/jira/browse/BAHIR-222?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17005817#comment-17005817 ] ASF GitHub Bot commented on BAHIR-222: -- lresende commented on pull request #96: [BAHIR-222] Update Readme with details of SQL Streaming SQS connector URL: https://github.com/apache/bahir/pull/96 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Update Readme with details of SQL Streaming SQS connector > - > > Key: BAHIR-222 > URL: https://issues.apache.org/jira/browse/BAHIR-222 > Project: Bahir > Issue Type: Task > Components: Spark Structured Streaming Connectors >Affects Versions: Not Applicable >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Not Applicable > > > Adding link to SQL Streaming SQS connector in BAHIR Readme. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-222) Update Readme with details of SQL Streaming SQS connector

[ https://issues.apache.org/jira/browse/BAHIR-222?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17005815#comment-17005815 ] ASF GitHub Bot commented on BAHIR-222: -- lresende commented on issue #96: [BAHIR-222] Update Readme with details of SQL Streaming SQS connector URL: https://github.com/apache/bahir/pull/96#issuecomment-569800815 Could you please also update the website (github.com/apache/bahir-website) and add correspondent links to the SQS connector. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Update Readme with details of SQL Streaming SQS connector > - > > Key: BAHIR-222 > URL: https://issues.apache.org/jira/browse/BAHIR-222 > Project: Bahir > Issue Type: Task > Components: Spark Structured Streaming Connectors >Affects Versions: Not Applicable >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Not Applicable > > > Adding link to SQL Streaming SQS connector in BAHIR Readme. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17005128#comment-17005128 ] ASF GitHub Bot commented on BAHIR-213: -- abhishekd0907 commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-569581591 Thanks @lresende This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Spark-2.4.0 > > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17005127#comment-17005127 ] ASF GitHub Bot commented on BAHIR-213: -- abhishekd0907 commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-569581591 Thank @lresende This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Spark-2.4.0 > > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-222) Update Readme with details of SQL Streaming SQS connector

[ https://issues.apache.org/jira/browse/BAHIR-222?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17004734#comment-17004734 ] ASF GitHub Bot commented on BAHIR-222: -- abhishekd0907 commented on pull request #96: [BAHIR-222] Update Readme with details of SQL Streaming SQS connector URL: https://github.com/apache/bahir/pull/96 Adding link to SQL Streaming SQS connector in BAHIR README.md This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Update Readme with details of SQL Streaming SQS connector > - > > Key: BAHIR-222 > URL: https://issues.apache.org/jira/browse/BAHIR-222 > Project: Bahir > Issue Type: Task > Components: Spark Structured Streaming Connectors >Affects Versions: Not Applicable >Reporter: Abhishek Dixit >Assignee: Abhishek Dixit >Priority: Major > Fix For: Not Applicable > > > Adding link to SQL Streaming SQS connector in BAHIR Readme. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17004573#comment-17004573 ] ASF GitHub Bot commented on BAHIR-213: -- lresende commented on pull request #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17004075#comment-17004075 ] ASF GitHub Bot commented on BAHIR-213: -- lresende commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-569241249 @abhishekd0907 Thanks, I will wait a day or so in case @steveloughran can say something, otherwise, I will go ahead and merge this and we can iterate on master when @steveloughran is better and available. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16987877#comment-16987877 ] ASF GitHub Bot commented on BAHIR-213: -- steveloughran commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-561650867 I'm not directly ignoring you, just some problems are stop me doing much coding right now. I had hoped to do a PoC what this would look like against hadoop-3.2.1 I'm so drive whatever changes needed to be done there to help this (e.g delegation tokens to support the SQS), plus some tests. But its not going to happen this year -sorry. Similarly, I'm cutting back on approximately all my reviews. Anything involving typing basically. I do think it's important -and I also think somebody needs to look at spark streaming checkpointing -against S3 put-with-overwrite works the way rename doesn't. Just not going to be me. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16986278#comment-16986278 ] ASF GitHub Bot commented on BAHIR-213: -- abhishekd0907 commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-560538377 > @abhishekd0907 could you please rebase to latest master to make sure we get a green build. Otherwise, looks ok to me. @lresende i see that master build is also failing https://travis-ci.org/apache/bahir/builds/584684002?utm_source=github_status_medium=notification probably due to this `Detected Maven Version: 3.5.2 is not in the allowed range 3.5.4. ` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16985188#comment-16985188 ] ASF GitHub Bot commented on BAHIR-213: -- lresende commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-559875628 @abhishekd0907 could you please rebase to latest master to make sure we get a green build. Otherwise, looks ok to me. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-220) Add redis descriptor to make redis connection as a table

[

https://issues.apache.org/jira/browse/BAHIR-220?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16977300#comment-16977300

]

ASF GitHub Bot commented on BAHIR-220:

--

hzyuemeng1 commented on pull request #72: [BAHIR-220] Add redis descriptor to

make redis connection as a table

URL: https://github.com/apache/bahir-flink/pull/72

currently, for Flink-1.9.0, we can use the catalog to store our stream table

source and sink meta.

for Redis connector, it should exist a Redis table sink so we can register

it to catalog, and use Redis as a table in SQL environment

```

Redis redis = new Redis()

.mode(RedisVadidator.REDIS_CLUSTER)

.command(RedisCommand.INCRBY_EX.name())

.ttl(10)

.property(RedisVadidator.REDIS_NODES, REDIS_HOST+ ":" +

REDIS_PORT);

tableEnvironment

.connect(redis).withSchema(new Schema()

.field("k", TypeInformation.of(String.class))

.field("v", TypeInformation.of(Long.class)))

.registerTableSink("redis");

tableEnvironment.sqlUpdate("insert into redis select k, v from t1");

env.execute("Test Redis Table");

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add redis descriptor to make redis connection as a table

>

>

> Key: BAHIR-220

> URL: https://issues.apache.org/jira/browse/BAHIR-220

> Project: Bahir

> Issue Type: Improvement

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: yuemeng

>Priority: Major

>

> currently, for Flink-1.9.0, we can use the catalog to store our stream table

> source and sink meta.

> for Redis connector, it should exist a Redis table sink so we can register it

> to catalog, and use Redis as a table in SQL environment

> {code}

> Redis redis = new Redis()

> .mode(RedisVadidator.REDIS_CLUSTER)

> .command(RedisCommand.INCRBY_EX.name())

> .ttl(10)

> .property(RedisVadidator.REDIS_NODES, REDIS_HOST+ ":" +

> REDIS_PORT);

> tableEnvironment

> .connect(redis).withSchema(new Schema()

> .field("k", TypeInformation.of(String.class))

> .field("v", TypeInformation.of(Long.class)))

> .registerTableSink("redis");

> tableEnvironment.sqlUpdate("insert into redis select k, v from t1");

> env.execute("Test Redis Table");

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16966315#comment-16966315 ] ASF GitHub Bot commented on BAHIR-213: -- lresende commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-549194053 ping @steveloughran This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16949646#comment-16949646

]

ASF GitHub Bot commented on BAHIR-155:

--

tutss commented on issue #66: [BAHIR-155] TTL to HSET and SETEX command

URL: https://github.com/apache/bahir-flink/pull/66#issuecomment-541148390

my apache jira username is **tutss**

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-Next

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16949520#comment-16949520

]

ASF GitHub Bot commented on BAHIR-155:

--

lresende commented on issue #66: [BAHIR-155] TTL to HSET and SETEX command

URL: https://github.com/apache/bahir-flink/pull/66#issuecomment-541097293

@tutss , please let me know your apache jira account so I can assign the

jira as resolved by you.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-1.0

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16949515#comment-16949515

]

ASF GitHub Bot commented on BAHIR-155:

--

lresende commented on pull request #66: [BAHIR-155] TTL to HSET and SETEX

command

URL: https://github.com/apache/bahir-flink/pull/66

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-1.0

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16949482#comment-16949482

]

ASF GitHub Bot commented on BAHIR-155:

--

eskabetxe commented on issue #66: [BAHIR-155] TTL to HSET and SETEX command

URL: https://github.com/apache/bahir-flink/pull/66#issuecomment-541078180

LGTM @lresende

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-1.0

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16949035#comment-16949035

]

ASF GitHub Bot commented on BAHIR-155:

--

lresende commented on issue #66: [BAHIR-155] TTL to HSET and SETEX command

URL: https://github.com/apache/bahir-flink/pull/66#issuecomment-540858385

Looks good, @eskabetxe do you want to take a quick look at this?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-1.0

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-155) Add expire to redis sink

[

https://issues.apache.org/jira/browse/BAHIR-155?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16948026#comment-16948026

]

ASF GitHub Bot commented on BAHIR-155:

--

tutss commented on pull request #66: [BAHIR-155] TTL to HSET and SETEX command

URL: https://github.com/apache/bahir-flink/pull/66

This PR introduces some new functionality, following [BAHIR-155 Jira

discussion](https://issues.apache.org/jira/projects/BAHIR/issues/BAHIR-155?filter=allopenissues):

- Possibility to include TTL to a HASH in HSET operation.

- SETEX command.

Tests for each respective change were included.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Add expire to redis sink

> -

>

> Key: BAHIR-155

> URL: https://issues.apache.org/jira/browse/BAHIR-155

> Project: Bahir

> Issue Type: Wish

> Components: Flink Streaming Connectors

>Affects Versions: Flink-1.0

>Reporter: miki haiat

>Priority: Major

> Labels: features

> Fix For: Flink-1.0

>

>

> I have a scenario that im collection some MD and aggregate the result by

> time .

> for example Each HSET of each window can create different values

> by adding expiry i can guarantee that the key is holding only the current

> window values

> im thinking to change the the interface signuter

>

> {code:java}

> void hset(String key, String hashField, String value);

> void set(String key, String value);

> //to this

> void hset(String key, String hashField, String value,int expire);

> void set(String key, String value,int expire);

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (BAHIR-107) Build and test Bahir against Scala 2.12

[ https://issues.apache.org/jira/browse/BAHIR-107?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16926406#comment-16926406 ] ASF GitHub Bot commented on BAHIR-107: -- mahtuog commented on issue #76: [BAHIR-107] Upgrade to Scala 2.12 and Spark 2.4.0 URL: https://github.com/apache/bahir/pull/76#issuecomment-529823908 Any info on when the sql-streaming-mqtt for Spark 2.4 will be released ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Build and test Bahir against Scala 2.12 > --- > > Key: BAHIR-107 > URL: https://issues.apache.org/jira/browse/BAHIR-107 > Project: Bahir > Issue Type: Improvement >Reporter: Ted Yu >Assignee: Lukasz Antoniak >Priority: Major > Fix For: Spark-2.4.0 > > > Spark has started effort for accommodating Scala 2.12 > See SPARK-14220 . > This JIRA is to track requirements for building Bahir on Scala 2.12.7 -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-214) Improve KuduConnector speed

[ https://issues.apache.org/jira/browse/BAHIR-214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16921088#comment-16921088 ] ASF GitHub Bot commented on BAHIR-214: -- lresende commented on pull request #64: [BAHIR-214]: improve speed and solve issues on eventual consistence URL: https://github.com/apache/bahir-flink/pull/64 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Improve KuduConnector speed > --- > > Key: BAHIR-214 > URL: https://issues.apache.org/jira/browse/BAHIR-214 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > > kudu connector has some issues on kudu sink with some flush modes that kill > sink over time > > this is a refactor to resolve that issues and improve speed on eventual > consistence -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-172) Avoid FileInputStream/FileOutputStream

[

https://issues.apache.org/jira/browse/BAHIR-172?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16921085#comment-16921085

]

ASF GitHub Bot commented on BAHIR-172:

--

lresende commented on pull request #92: [BAHIR-172 ] Create input stream and

output stream of file with Files

URL: https://github.com/apache/bahir/pull/92

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Avoid FileInputStream/FileOutputStream

> --

>

> Key: BAHIR-172

> URL: https://issues.apache.org/jira/browse/BAHIR-172

> Project: Bahir

> Issue Type: Bug

>Reporter: Ted Yu

>Priority: Minor

>

> They rely on finalizers (before Java 11), which create unnecessary GC load.

> The alternatives, {{Files.newInputStream}}, are as easy to use and don't have

> this issue.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16921083#comment-16921083 ] ASF GitHub Bot commented on BAHIR-213: -- lresende commented on issue #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91#issuecomment-527259701 @steveloughran could you please review this. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-217) Install of Oracle JDK 8 Failing in Travis CI

[

https://issues.apache.org/jira/browse/BAHIR-217?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16919823#comment-16919823

]

ASF GitHub Bot commented on BAHIR-217:

--

lresende commented on pull request #93: [BAHIR-217] Install of Oracle JDK 8

Failing in Travis CI

URL: https://github.com/apache/bahir/pull/93

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Install of Oracle JDK 8 Failing in Travis CI

>

>

> Key: BAHIR-217

> URL: https://issues.apache.org/jira/browse/BAHIR-217

> Project: Bahir

> Issue Type: Bug

> Components: Build

>Reporter: Abhishek Dixit

>Priority: Major

> Labels: build, easyfix

>

> Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

> for new pull requests.

> We need to make a small fix in _ __ .travis.yml_ file as mentioned in the

> issue here:

> https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

> We just need to add

> {code:java}

> dist: trusty{code}

> in the .travis.yml file as mentioned in the issue above.

> I can raise a PR for this fix if required.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-217) Install of Oracle JDK 8 Failing in Travis CI

[

https://issues.apache.org/jira/browse/BAHIR-217?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16919821#comment-16919821

]

ASF GitHub Bot commented on BAHIR-217:

--

lresende commented on issue #93: [BAHIR-217] Install of Oracle JDK 8 Failing in

Travis CI

URL: https://github.com/apache/bahir/pull/93#issuecomment-526706426

Thanks for the clarification. LGTM

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Install of Oracle JDK 8 Failing in Travis CI

>

>

> Key: BAHIR-217

> URL: https://issues.apache.org/jira/browse/BAHIR-217

> Project: Bahir

> Issue Type: Bug

> Components: Build

>Reporter: Abhishek Dixit

>Priority: Major

> Labels: build, easyfix

>

> Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

> for new pull requests.

> We need to make a small fix in _ __ .travis.yml_ file as mentioned in the

> issue here:

> https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

> We just need to add

> {code:java}

> dist: trusty{code}

> in the .travis.yml file as mentioned in the issue above.

> I can raise a PR for this fix if required.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-217) Install of Oracle JDK 8 Failing in Travis CI

[

https://issues.apache.org/jira/browse/BAHIR-217?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16919811#comment-16919811

]

ASF GitHub Bot commented on BAHIR-217:

--

abhishekd0907 commented on issue #93: [BAHIR-217] Install of Oracle JDK 8

Failing in Travis CI

URL: https://github.com/apache/bahir/pull/93#issuecomment-526698979

> Just out of curiosity, I [build master on

travis](https://travis-ci.org/apache/bahir/builds/544930279) without a problem.

How are you seeing the issue requiring trusty distro for travis builds.

@lresende I had raised another PR in BHAIR regarding sql-streaming SQS

connector #91

and its travis build is failing due to install jdk error. You can see the

details below:

[Travis Job log](https://travis-ci.org/apache/bahir/jobs/573750572)

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Install of Oracle JDK 8 Failing in Travis CI

>

>

> Key: BAHIR-217

> URL: https://issues.apache.org/jira/browse/BAHIR-217

> Project: Bahir

> Issue Type: Bug

> Components: Build

>Reporter: Abhishek Dixit

>Priority: Major

> Labels: build, easyfix

>

> Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

> for new pull requests.

> We need to make a small fix in _ __ .travis.yml_ file as mentioned in the

> issue here:

> https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

> We just need to add

> {code:java}

> dist: trusty{code}

> in the .travis.yml file as mentioned in the issue above.

> I can raise a PR for this fix if required.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-217) Install of Oracle JDK 8 Failing in Travis CI

[

https://issues.apache.org/jira/browse/BAHIR-217?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16919736#comment-16919736

]

ASF GitHub Bot commented on BAHIR-217:

--

lresende commented on issue #93: [BAHIR-217] Install of Oracle JDK 8 Failing in

Travis CI

URL: https://github.com/apache/bahir/pull/93#issuecomment-526678080

Just out of curiosity, I [build master on

travis](https://travis-ci.org/apache/bahir/builds/544930279) without a problem.

How are you seeing the issue requiring trusty distro for travis builds.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Install of Oracle JDK 8 Failing in Travis CI

>

>

> Key: BAHIR-217

> URL: https://issues.apache.org/jira/browse/BAHIR-217

> Project: Bahir

> Issue Type: Bug

> Components: Build

>Reporter: Abhishek Dixit

>Priority: Major

> Labels: build, easyfix

>

> Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

> for new pull requests.

> We need to make a small fix in _ __ .travis.yml_ file as mentioned in the

> issue here:

> https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

> We just need to add

> {code:java}

> dist: trusty{code}

> in the .travis.yml file as mentioned in the issue above.

> I can raise a PR for this fix if required.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-217) Install of Oracle JDK 8 Failing in Travis CI

[

https://issues.apache.org/jira/browse/BAHIR-217?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16919359#comment-16919359

]

ASF GitHub Bot commented on BAHIR-217:

--

abhishekd0907 commented on pull request #93: [BAHIR-217] Install of Oracle JDK

8 Failing in Travis CI

URL: https://github.com/apache/bahir/pull/93

## What changes were proposed in this pull request?

Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

for new pull requests.

We need to make a small fix in _.travis.yml_ file as mentioned in the

issue here:

https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

We just need to add

`dist: trusty`

in the _.travis.yml_ file as mentioned in the issue above.

## How will these changes be tested?

Travis Build should successfully pass for this PR, thus testing the changes.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Install of Oracle JDK 8 Failing in Travis CI

>

>

> Key: BAHIR-217

> URL: https://issues.apache.org/jira/browse/BAHIR-217

> Project: Bahir

> Issue Type: Bug

> Components: Build

>Reporter: Abhishek Dixit

>Priority: Major

> Labels: build, easyfix

>

> Install of Oracle JDK 8 Failing in Travis CI. As a result, build is failing

> for new pull requests.

> We need to make a small fix in _ __ .travis.yml_ file as mentioned in the

> issue here:

> https://travis-ci.community/t/install-of-oracle-jdk-8-failing/3038

> We just need to add

> {code:java}

> dist: trusty{code}

> in the .travis.yml file as mentioned in the issue above.

> I can raise a PR for this fix if required.

--

This message was sent by Atlassian Jira

(v8.3.2#803003)

[jira] [Commented] (BAHIR-215) bump flink to 1.9.0

[ https://issues.apache.org/jira/browse/BAHIR-215?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16915865#comment-16915865 ] ASF GitHub Bot commented on BAHIR-215: -- lresende commented on pull request #65: [BAHIR-215]: bump flink version to 1.9.0 URL: https://github.com/apache/bahir-flink/pull/65 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > bump flink to 1.9.0 > --- > > Key: BAHIR-215 > URL: https://issues.apache.org/jira/browse/BAHIR-215 > Project: Bahir > Issue Type: Improvement >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-215) bump flink to 1.9.0

[ https://issues.apache.org/jira/browse/BAHIR-215?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16915732#comment-16915732 ] ASF GitHub Bot commented on BAHIR-215: -- eskabetxe commented on issue #65: [BAHIR-215]: bump flink version to 1.9.0 URL: https://github.com/apache/bahir-flink/pull/65#issuecomment-524824774 @lresende could you check this This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > bump flink to 1.9.0 > --- > > Key: BAHIR-215 > URL: https://issues.apache.org/jira/browse/BAHIR-215 > Project: Bahir > Issue Type: Improvement >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-215) bump flink to 1.9.0

[ https://issues.apache.org/jira/browse/BAHIR-215?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16915701#comment-16915701 ] ASF GitHub Bot commented on BAHIR-215: -- eskabetxe commented on pull request #65: [BAHIR-215]: bump flink version to 1.9.0 URL: https://github.com/apache/bahir-flink/pull/65 bump flink version to 1.9.0 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > bump flink to 1.9.0 > --- > > Key: BAHIR-215 > URL: https://issues.apache.org/jira/browse/BAHIR-215 > Project: Bahir > Issue Type: Improvement >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-210) bump flink version to 1.8.1

[ https://issues.apache.org/jira/browse/BAHIR-210?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16911934#comment-16911934 ] ASF GitHub Bot commented on BAHIR-210: -- lresende commented on pull request #62: [BAHIR-210] upgrade flink to 1.8.1 URL: https://github.com/apache/bahir-flink/pull/62 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > bump flink version to 1.8.1 > --- > > Key: BAHIR-210 > URL: https://issues.apache.org/jira/browse/BAHIR-210 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.2#803003)

[jira] [Commented] (BAHIR-214) Improve KuduConnector speed

[ https://issues.apache.org/jira/browse/BAHIR-214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16906072#comment-16906072 ] ASF GitHub Bot commented on BAHIR-214: -- eskabetxe commented on issue #64: [BAHIR-214]: improve speed and solve issues on eventual consistence URL: https://github.com/apache/bahir-flink/pull/64#issuecomment-520786266 @lresende this is falling for the same reason BAHIR-210 fails.. this is the error "The job exceeded the maximum log length, and has been terminated." its resolved on that PR, could yo merge that first? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Improve KuduConnector speed > --- > > Key: BAHIR-214 > URL: https://issues.apache.org/jira/browse/BAHIR-214 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > > kudu connector has some issues on kudu sink with some flush modes that kill > sink over time > > this is a refactor to resolve that issues and improve speed on eventual > consistence -- This message was sent by Atlassian JIRA (v7.6.14#76016)

[jira] [Commented] (BAHIR-214) Improve KuduConnector speed

[ https://issues.apache.org/jira/browse/BAHIR-214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16906054#comment-16906054 ] ASF GitHub Bot commented on BAHIR-214: -- eskabetxe commented on issue #64: [BAHIR-214]: improve speed and solve issues on eventual consistence URL: https://github.com/apache/bahir-flink/pull/64#issuecomment-520779750 @lresende could you check This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Improve KuduConnector speed > --- > > Key: BAHIR-214 > URL: https://issues.apache.org/jira/browse/BAHIR-214 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > > kudu connector has some issues on kudu sink with some flush modes that kill > sink over time > > this is a refactor to resolve that issues and improve speed on eventual > consistence -- This message was sent by Atlassian JIRA (v7.6.14#76016)

[jira] [Commented] (BAHIR-214) Improve KuduConnector speed

[ https://issues.apache.org/jira/browse/BAHIR-214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16906053#comment-16906053 ] ASF GitHub Bot commented on BAHIR-214: -- eskabetxe commented on pull request #64: [BAHIR-214]: improve speed and solve issues on eventual consistence URL: https://github.com/apache/bahir-flink/pull/64 - resolve eventual consistence issues - improve speed special on eventual consistence stream - actualized Readme This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Improve KuduConnector speed > --- > > Key: BAHIR-214 > URL: https://issues.apache.org/jira/browse/BAHIR-214 > Project: Bahir > Issue Type: Improvement > Components: Flink Streaming Connectors >Reporter: Joao Boto >Assignee: Joao Boto >Priority: Major > > kudu connector has some issues on kudu sink with some flush modes that kill > sink over time > > this is a refactor to resolve that issues and improve speed on eventual > consistence -- This message was sent by Atlassian JIRA (v7.6.14#76016)

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[ https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16902384#comment-16902384 ] ASF GitHub Bot commented on BAHIR-213: -- abhishekd0907 commented on pull request #91: [BAHIR-213] Faster S3 file Source for Structured Streaming with SQS URL: https://github.com/apache/bahir/pull/91 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Faster S3 file Source for Structured Streaming with SQS > --- > > Key: BAHIR-213 > URL: https://issues.apache.org/jira/browse/BAHIR-213 > Project: Bahir > Issue Type: New Feature > Components: Spark Structured Streaming Connectors >Affects Versions: Spark-2.4.0 >Reporter: Abhishek Dixit >Priority: Major > > Using FileStreamSource to read files from a S3 bucket has problems both in > terms of costs and latency: > * *Latency:* Listing all the files in S3 buckets every microbatch can be > both slow and resource intensive. > * *Costs:* Making List API requests to S3 every microbatch can be costly. > The solution is to use Amazon Simple Queue Service (SQS) which lets you find > new files written to S3 bucket without the need to list all the files every > microbatch. > S3 buckets can be configured to send notification to an Amazon SQS Queue on > Object Create / Object Delete events. For details see AWS documentation here > [Configuring S3 Event > Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html] > > Spark can leverage this to find new files written to S3 bucket by reading > notifications from SQS queue instead of listing files every microbatch. > I hope to contribute changes proposed in [this pull > request|https://github.com/apache/spark/pull/24934] to Apache Bahir as > suggested by [gaborgsomogyi|https://github.com/gaborgsomogyi] > [here|https://github.com/apache/spark/pull/24934#issuecomment-511389130] -- This message was sent by Atlassian JIRA (v7.6.14#76016)

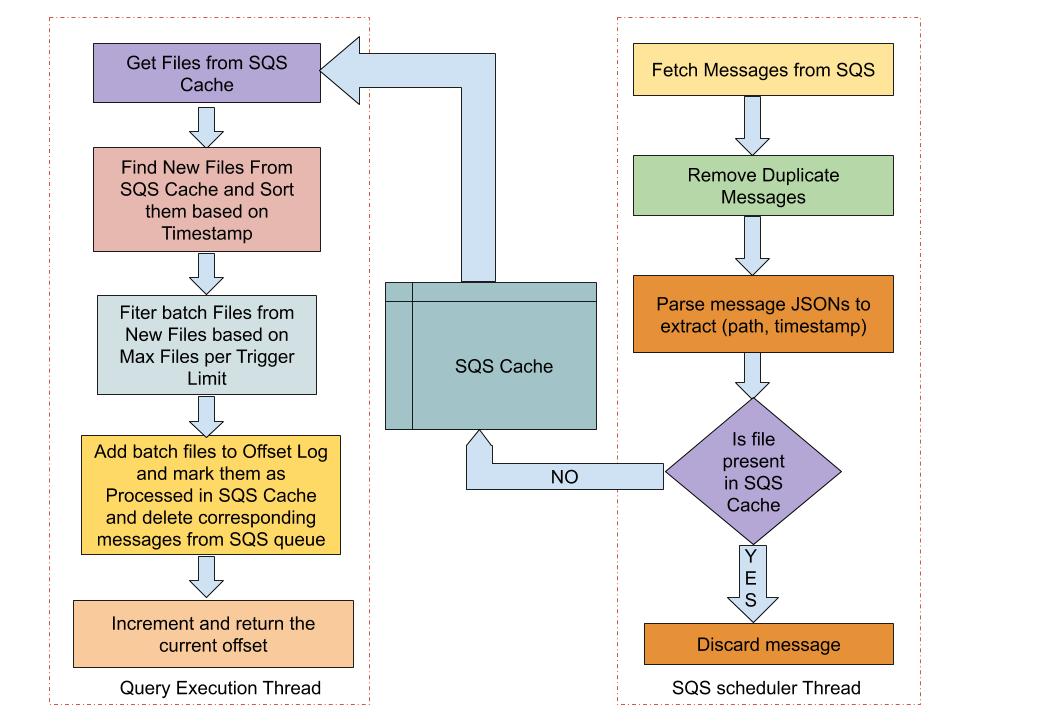

[jira] [Commented] (BAHIR-213) Faster S3 file Source for Structured Streaming with SQS

[

https://issues.apache.org/jira/browse/BAHIR-213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16902385#comment-16902385

]

ASF GitHub Bot commented on BAHIR-213:

--

abhishekd0907 commented on pull request #91: [BAHIR-213] Faster S3 file Source

for Structured Streaming with SQS

URL: https://github.com/apache/bahir/pull/91

## What changes were proposed in this pull request?

Using FileStreamSource to read files from a S3 bucket has problems both in

terms of costs and latency:

- **Latency**: Listing all the files in S3 buckets every microbatch can be

both slow and resource intensive.

- **Costs**: Making List API requests to S3 every microbatch can be costly.

The solution is to use Amazon Simple Queue Service (SQS) which lets you find

new files written to S3 bucket without the need to list all the files every

microbatch.

S3 buckets can be configured to send notification to an Amazon SQS Queue on

Object Create / Object Delete events. For details see AWS documentation here

[Configuring S3 Event

Notifications](https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html)

Spark can leverage this to find new files written to S3 bucket by reading

notifications from SQS queue instead of listing files every microbatch.

This PR adds a new SQSSource which uses Amazon SQS queue to find new files

every microbatch.

## Usage

`val inputDf = spark

.readStream

.format("s3-sqs")

.schema(schema)

.option("fileFormat", "json")

.option("sqsUrl", "https://QUEUE_URL;)

.option("region", "us-east-1")

.load()`

## Implementation Details

We create a scheduled thread which runs asynchronously with the streaming

query thread and periodically fetches messages from the SQS Queue. Key

information related to file path & timestamp is extracted from the SQS messages

and the new files are stored in a thread safe SQS file cache.

Streaming Query thread gets the files from SQS File Cache and filters out

the new files. Based on the maxFilesPerTrigger condition, all or a part of the

new files are added to the offset log and marked as processed in the SQS File

Cache. The corresponding SQS messages for the processed files are deleted from

the Amazon SQS Queue and the offset value is incremented and returned.

## How was this patch tested?

Added new unit tests in SqsSourceOptionsSuite which test various

SqsSourceOptions. Will add more tests after some initial feedback on design

approach and functionality.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Faster S3 file Source for Structured Streaming with SQS

> ---

>

> Key: BAHIR-213

> URL: https://issues.apache.org/jira/browse/BAHIR-213

> Project: Bahir

> Issue Type: New Feature

> Components: Spark Structured Streaming Connectors

>Affects Versions: Spark-2.4.0

>Reporter: Abhishek Dixit

>Priority: Major

>

> Using FileStreamSource to read files from a S3 bucket has problems both in

> terms of costs and latency:

> * *Latency:* Listing all the files in S3 buckets every microbatch can be

> both slow and resource intensive.

> * *Costs:* Making List API requests to S3 every microbatch can be costly.

> The solution is to use Amazon Simple Queue Service (SQS) which lets you find

> new files written to S3 bucket without the need to list all the files every

> microbatch.

> S3 buckets can be configured to send notification to an Amazon SQS Queue on

> Object Create / Object Delete events. For details see AWS documentation here

> [Configuring S3 Event

> Notifications|https://docs.aws.amazon.com/AmazonS3/latest/dev/NotificationHowTo.html]

>

> Spark can leverage this to find new files written to S3 bucket by reading