[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17362791#comment-17362791

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-859662157

@gszadovszky Thanks - just created Jira account `edw_vtxa`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Integer overflow in ParquetFileReader.ConsecutiveChunkList

> --

>

> Key: PARQUET-1633

> URL: https://issues.apache.org/jira/browse/PARQUET-1633

> Project: Parquet

> Issue Type: Bug

> Components: parquet-mr

> Affects Versions: 1.10.1

> Reporter: Ivan Sadikov

> Priority: Major

>

> When reading a large Parquet file (2.8 GB), I encounter the following exception:

> {code:java}

> Caused by: org.apache.parquet.io.ParquetDecodingException: Can not read value at 0 in block -1 in file dbfs:/user/hive/warehouse/demo.db/test_table/part-00014-tid-1888470069989036737-593c82a4-528b-4975-8de0-5bcbc5e9827d-10856-1-c000.snappy.parquet

> at org.apache.parquet.hadoop.InternalParquetRecordReader.nextKeyValue(InternalParquetRecordReader.java:251)

> at org.apache.parquet.hadoop.ParquetRecordReader.nextKeyValue(ParquetRecordReader.java:207)

> at org.apache.spark.sql.execution.datasources.RecordReaderIterator.hasNext(RecordReaderIterator.scala:40)

> at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1$$anon$2.getNext(FileScanRDD.scala:228)

> ... 14 more

> Caused by: java.lang.IllegalArgumentException: Illegal Capacity: -212

> at java.util.ArrayList.<init>(ArrayList.java:157)

> at org.apache.parquet.hadoop.ParquetFileReader$ConsecutiveChunkList.readAll(ParquetFileReader.java:1169){code}

>

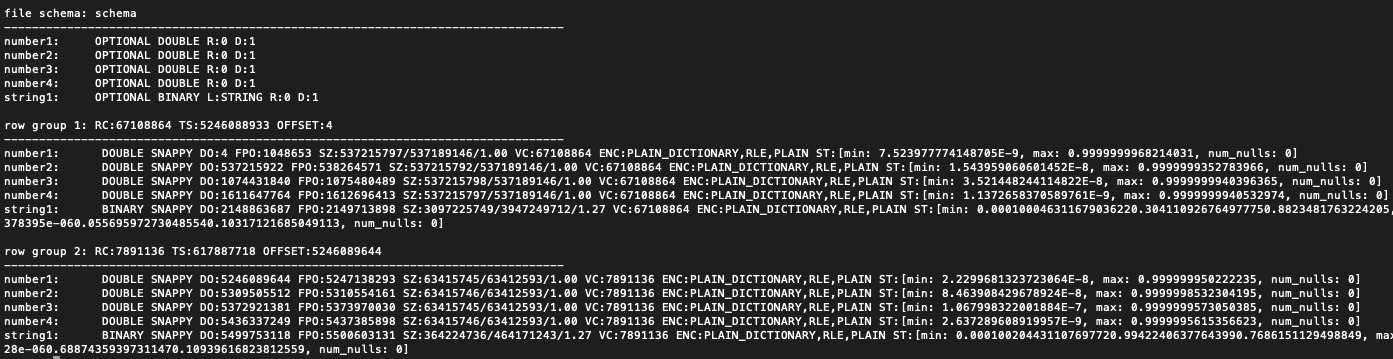

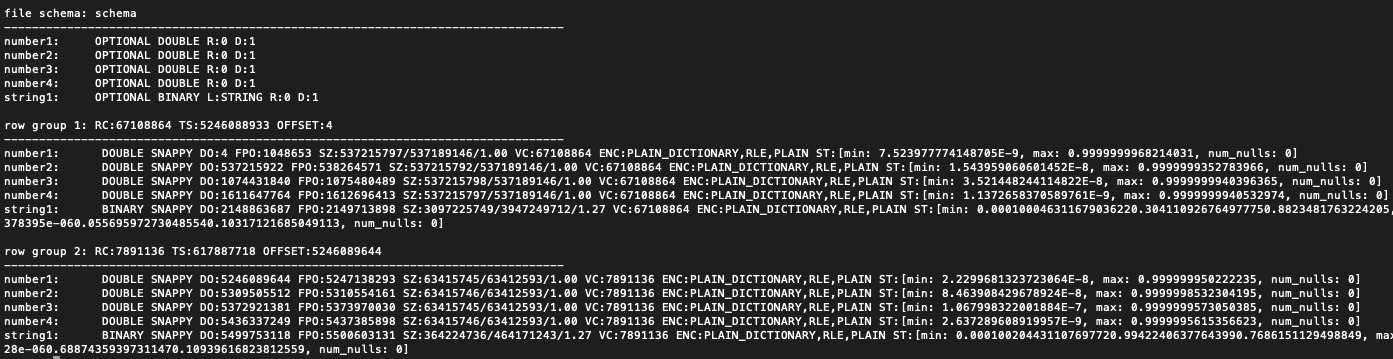

> The file metadata is:

> * block 1 (3 columns)

> ** rowCount: 110,100

> ** totalByteSize: 348,492,072

> ** compressedSize: 165,689,649

> * block 2 (3 columns)

> ** rowCount: 90,054

> ** totalByteSize: 3,243,165,541

> ** compressedSize: 2,509,579,966

> * block 3 (3 columns)

> ** rowCount: 105,119

> ** totalByteSize: 350,901,693

> ** compressedSize: 144,952,177

> * block 4 (3 columns)

> ** rowCount: 48,741

> ** totalByteSize: 1,275,995

> ** compressedSize: 914,205

> I don't have the code to reproduce the issue, unfortunately; however, I looked at the code and it seems that the int {{length}} field in ConsecutiveChunkList overflows, which results in a negative capacity for the array list in the {{readAll}} method:

> {code:java}

> int fullAllocations = length / options.getMaxAllocationSize();

> int lastAllocationSize = length % options.getMaxAllocationSize();

>

> int numAllocations = fullAllocations + (lastAllocationSize > 0 ? 1 : 0);

> List<ByteBuffer> buffers = new ArrayList<>(numAllocations);{code}

>
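The report's diagnosis can be sanity-checked by replaying the quoted arithmetic outside Parquet. This sketch assumes two things the report does not state: that all three column chunks of block 2 (2,509,579,966 compressed bytes) were read as one consecutive chunk list, and that the maximum allocation size was 8 MiB (8,388,608 bytes). Under those assumptions the int length wraps to -1,785,387,330. Java's integer division truncates toward zero, giving -212 full allocations, and the remainder is also negative, so the `> 0` guard adds no partial buffer. The result matches the "Illegal Capacity: -212" in the stack trace. The class name is hypothetical.

```java
import java.util.ArrayList;

// Replays the allocation-count formula quoted from ConsecutiveChunkList.readAll
// using plain int arithmetic, as described in the report.
final class NegativeCapacityDemo {

    // Same formula as the quoted snippet: full-size buffers plus one partial buffer.
    static int numAllocations(int length, int maxAllocationSize) {
        int fullAllocations = length / maxAllocationSize;    // truncates toward zero
        int lastAllocationSize = length % maxAllocationSize; // sign follows the dividend
        return fullAllocations + (lastAllocationSize > 0 ? 1 : 0);
    }

    public static void main(String[] args) {
        int maxAllocationSize = 8 * 1024 * 1024; // assumed 8 MiB allocation size
        int length = (int) 2_509_579_966L;       // block 2 compressedSize narrowed to int
        int n = numAllocations(length, maxAllocationSize);
        System.out.println("length=" + length + " numAllocations=" + n);
        // prints "length=-1785387330 numAllocations=-212"
        try {
            new ArrayList<Object>(n);            // the same failure as in readAll
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());  // prints "Illegal Capacity: -212"
        }
    }
}
```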

> This is caused by the cast to int in the {{readNextRowGroup}} method of ParquetFileReader:

> {code:java}

> currentChunks.addChunk(new ChunkDescriptor(columnDescriptor, mc, startingPos, (int)mc.getTotalSize()));

> {code}

> which overflows when the total size of the column chunk is larger than Integer.MAX_VALUE.
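The narrowing cast can also be examined on its own. This sketch compares three ways of handling a column-chunk size that exceeds Integer.MAX_VALUE. The first is the unchecked `(int)` cast quoted in the report. The second is `Math.toIntExact`, which throws `ArithmeticException` instead of wrapping. The third keeps the size as a `long` and narrows only the buffer count. The third variant is one possible approach, shown under an assumed 8 MiB allocation size, and is not necessarily what PR #902 implemented. The class and method names are hypothetical.

```java
// Contrasts three ways of narrowing a column-chunk size that exceeds Integer.MAX_VALUE.
final class ChunkSizeCastDemo {

    // Hypothetical long-based variant: the size stays 64-bit and only the
    // (much smaller) buffer count is narrowed, with an overflow check.
    static int numAllocations(long length, int maxAllocationSize) {
        long fullAllocations = length / maxAllocationSize;
        long lastAllocationSize = length % maxAllocationSize;
        return Math.toIntExact(fullAllocations + (lastAllocationSize > 0 ? 1 : 0));
    }

    public static void main(String[] args) {
        long totalSize = 2_509_579_966L; // block 2 compressedSize from the report

        // 1. Unchecked narrowing, as in the quoted readNextRowGroup line: wraps silently.
        System.out.println((int) totalSize); // prints -1785387330

        // 2. Checked narrowing: throws instead of producing a negative length.
        try {
            Math.toIntExact(totalSize);
        } catch (ArithmeticException e) {
            System.out.println("Math.toIntExact threw ArithmeticException");
        }

        // 3. Long arithmetic, assumed 8 MiB allocation size: 299 full buffers + 1 partial.
        System.out.println(numAllocations(totalSize, 8 * 1024 * 1024)); // prints 300
    }
}
```

Keeping sizes 64-bit moves the overflow risk from a multi-gigabyte byte count to a buffer count, which stays small unless the allocation size is tiny. `Math.toIntExact` still guards that remaining narrowing.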

> I would appreciate it if you could help address the issue. Thanks!

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17362767#comment-17362767

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-859381992


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17362758#comment-17362758

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky merged pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361096#comment-17361096

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858802880

Note that I don't have write access, so I guess someone needs to merge this at some point? I'm not sure of your workflow.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360984#comment-17360984

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright edited a comment on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858684740

Pleasure @gszadovszky - thanks for yours!


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360979#comment-17360979

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858684740

Pleasure @gszadovszky


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360938#comment-17360938

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858656793

@gszadovszky done


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360832#comment-17360832

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858568042

@eadwright, sorry, you're right. This is not tightly related to your PR. Please remove the try-catch blocks for OOE and put an `@Ignore` annotation on the test class for now. I'll open a separate Jira to solve the issue of such "not-to-be-tested-by-CI" tests.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360705#comment-17360705

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858483789

Interesting options, @gszadovszky; I have no strong opinion. I'd just like this fix merged once everyone is happy :)


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360695#comment-17360695

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858478591

@eadwright, I understand your concerns; I don't really like it either.

Meanwhile, I don't feel good about having a test that is not executed automatically. Without regular executions there is no guarantee that this test will ever be executed again, and even if someone does execute it, it might fail because of a lack of maintenance.

What do you think about the following options? @shangxinli, I'm also curious about your ideas.

* Execute this test separately with a Maven profile. I am not sure if the CI allows allocating that much memory, but with -Xmx options we might give it a try and create a separate check for this test only.

* Similar to the previous option, with the profile, but never executed in the CI. Instead, we add some notes to the release docs so this test is executed at least once per release.

* Configure the CI profile to skip this test but keep it in the normal build, meaning the devs will execute it locally. There are a couple of cons, though. There is no guarantee that devs execute all the tests, including this one. It can also cause issues if a dev doesn't have enough memory and doesn't know that the test failure is unrelated to the current change.
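The main drawback of the third option (a developer without enough memory seeing an unrelated failure) could also be addressed by checking the configured heap up front instead of catching an OutOfMemoryError. The sketch below is hypothetical: the class name, the `parquet.runLargeTests` system property, and the ~3 GB threshold (taken from the estimate mentioned in this thread) are illustrative assumptions, not part of parquet-mr or the PR.

```java
// Hypothetical helper; class, method, and property names are illustrative only.
final class LargeTestGate {

    // Assumption: ~3 GB, based on the estimate mentioned in this thread.
    static final long REQUIRED_HEAP_BYTES = 3L * 1024 * 1024 * 1024;

    // True when the given max heap is at least the required amount.
    static boolean hasEnoughHeap(long maxHeapBytes, long requiredBytes) {
        return maxHeapBytes >= requiredBytes;
    }

    // Opt-in flag plus a heap check; Runtime.maxMemory() reflects -Xmx
    // (or Long.MAX_VALUE if the JVM reports no inherent limit).
    static boolean shouldRunLargeTest() {
        boolean optedIn = Boolean.getBoolean("parquet.runLargeTests"); // hypothetical property
        return optedIn && hasEnoughHeap(Runtime.getRuntime().maxMemory(), REQUIRED_HEAP_BYTES);
    }

    public static void main(String[] args) {
        System.out.println("max heap bytes: " + Runtime.getRuntime().maxMemory());
        System.out.println("run large test: " + shouldRunLargeTest());
    }
}
```

In a JUnit 4 test this could be wired in with `org.junit.Assume.assumeTrue(LargeTestGate.shouldRunLargeTest())`, which reports the test as skipped rather than failed when the condition is false. Note that `Runtime.maxMemory()` only reflects the configured limit; it does not guarantee that this much memory is actually available on the machine.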


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360686#comment-17360686

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright edited a comment on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858467001

@gszadovszky Sorry for the delay on my part. I have merged your changes in. Even in a testing pipeline I am uncomfortable catching any kind of `java.lang.Error`, especially an OOM. Should we remove those catch clauses and mark this test as ignored by default, so that it is only run manually?

Love how the tests need ~3GB, not 10GB.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17360679#comment-17360679

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-858467001

@gszadovszky Sorry for the delay on my part. I have merged your changes in. Even in a testing pipeline I am uncomfortable catching any kind of `java.lang.Error`, especially an OOM. Should we remove those catch clauses and mark this test as ignored by default, so that it is only run manually?


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17355329#comment-17355329

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-852434740

@gszadovszky I had a look at your changes. I feel uncomfortable relying on

any behaviour at all after an OOM error. Are you sure this is the right

approach?


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17354286#comment-17354286

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-851298938

@eadwright, I've made some changes in the unit test (no more TODOs). See the update [here](https://github.com/gszadovszky/parquet-mr/commit/6dc3f418b537fd5cb7954018243399f39784d81b).

The idea is not to skip the tests in the "normal" case, but to catch the OoM and skip them. This way no tests should fail in any environment. Most modern laptops should have enough memory, so this test will be executed on them.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17352779#comment-17352779

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-849972541

@gszadovszky I raised a PR to bring your changes into my fork. Alas, I haven't had time yet to address the TODOs. I can say, though, that I believe reading that example file correctly with the fix requires 10GB of heap or so, probably similar for your test. Agreed that this test should be disabled by default; it is too heavy for CI.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17352329#comment-17352329

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-849434359

@eadwright, so the CI was [executed](https://github.com/gszadovszky/parquet-mr/actions/runs/879304911) somehow on my private repo and failed due to OoM. So we may either investigate whether we can tweak our configs/CI, or disable this test by default.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17351932#comment-17351932

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-848941568

@eadwright, I've implemented a [unit test](https://github.com/gszadovszky/parquet-mr/commit/fcaf41269470c03c088b7eb5598558d44013f59d) to reproduce the issue and test your solution. Feel free to use it in your PR.

I've left some TODOs for you :)


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17350959#comment-17350959

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright edited a comment on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-847752354

@gszadovszky Awesome, appreciated. Also note that the uploaded file isn't corrupt as such; it just goes beyond 32-bit limits.


> Integer overflow in ParquetFileReader.ConsecutiveChunkList

> --

>

> Key: PARQUET-1633

> URL: https://issues.apache.org/jira/browse/PARQUET-1633

> Project: Parquet

> Issue Type: Bug

> Components: parquet-mr

>Affects Versions: 1.10.1

>Reporter: Ivan Sadikov

>Priority: Major

>

> When reading a large Parquet file (2.8GB), I encounter the following

> exception:

> {code:java}

> Caused by: org.apache.parquet.io.ParquetDecodingException: Can not read value

> at 0 in block -1 in file

> dbfs:/user/hive/warehouse/demo.db/test_table/part-00014-tid-1888470069989036737-593c82a4-528b-4975-8de0-5bcbc5e9827d-10856-1-c000.snappy.parquet

> at

> org.apache.parquet.hadoop.InternalParquetRecordReader.nextKeyValue(InternalParquetRecordReader.java:251)

> at

> org.apache.parquet.hadoop.ParquetRecordReader.nextKeyValue(ParquetRecordReader.java:207)

> at

> org.apache.spark.sql.execution.datasources.RecordReaderIterator.hasNext(RecordReaderIterator.scala:40)

> at

> org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1$$anon$2.getNext(FileScanRDD.scala:228)

> ... 14 more

> Caused by: java.lang.IllegalArgumentException: Illegal Capacity: -212

> at java.util.ArrayList.(ArrayList.java:157)

> at

> org.apache.parquet.hadoop.ParquetFileReader$ConsecutiveChunkList.readAll(ParquetFileReader.java:1169){code}

>

> The file metadata is:

> * block 1 (3 columns)

> ** rowCount: 110,100

> ** totalByteSize: 348,492,072

> ** compressedSize: 165,689,649

> * block 2 (3 columns)

> ** rowCount: 90,054

> ** totalByteSize: 3,243,165,541

> ** compressedSize: 2,509,579,966

> * block 3 (3 columns)

> ** rowCount: 105,119

> ** totalByteSize: 350,901,693

> ** compressedSize: 144,952,177

> * block 4 (3 columns)

> ** rowCount: 48,741

> ** totalByteSize: 1,275,995

> ** compressedSize: 914,205

> I don't have the code to reproduce the issue, unfortunately; however, I

> looked at the code and it seems that integer {{length}} field in

> ConsecutiveChunkList overflows, which results in negative capacity for array

> list in {{readAll}} method:

> {code:java}

> int fullAllocations = length / options.getMaxAllocationSize();

> int lastAllocationSize = length % options.getMaxAllocationSize();

>

> int numAllocations = fullAllocations + (lastAllocationSize > 0 ? 1 : 0);

> List buffers = new ArrayList<>(numAllocations);{code}

>

> This is caused by cast to integer in {{readNextRowGroup}} method in

> ParquetFileReader:

> {code:java}

> currentChunks.addChunk(new ChunkDescriptor(columnDescriptor, mc, startingPos,

> (int)mc.getTotalSize()));

> {code}

> which overflows when total size of the column is larger than

> Integer.MAX_VALUE.

> I would appreciate if you could help addressing the issue. Thanks!

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17350958#comment-17350958

]

ASF GitHub Bot commented on PARQUET-1633:

-

eadwright commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-847752354

Awesome, appreciated. Also note that the uploaded file isn't corrupt as such;
it just goes beyond 32-bit limits.


[jira] [Commented] (PARQUET-1633) Integer overflow in ParquetFileReader.ConsecutiveChunkList

[

https://issues.apache.org/jira/browse/PARQUET-1633?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17350914#comment-17350914

]

ASF GitHub Bot commented on PARQUET-1633:

-

gszadovszky commented on pull request #902:

URL: https://github.com/apache/parquet-mr/pull/902#issuecomment-847669598