[GitHub] [flink] liyafan82 commented on issue #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

liyafan82 commented on issue #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink URL: https://github.com/apache/flink/pull/8682#issuecomment-501976712 > > Can you please provide the benchmark that produces the 10x performance improvement? > > You can find the case in `SortAggITCase.testBigDataSimpleArrayUDAF`. > If you want to test previous code, you can modify `PrimitiveLongArrayConverter.toInternalImpl` to use `return BinaryArray.fromPrimitiveArray(value);`. > The difference is so obvious, both in generated code and performance. Good job. Thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] sunjincheng121 edited a comment on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots

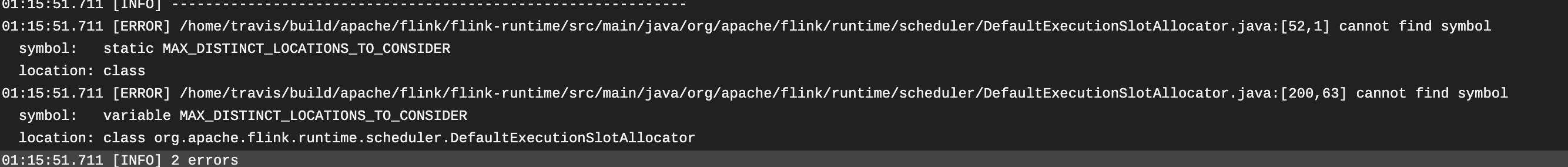

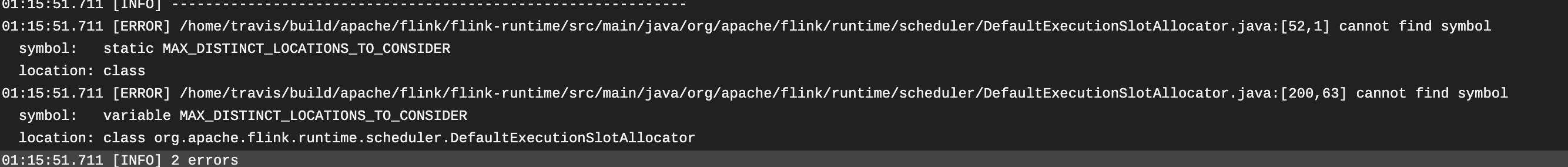

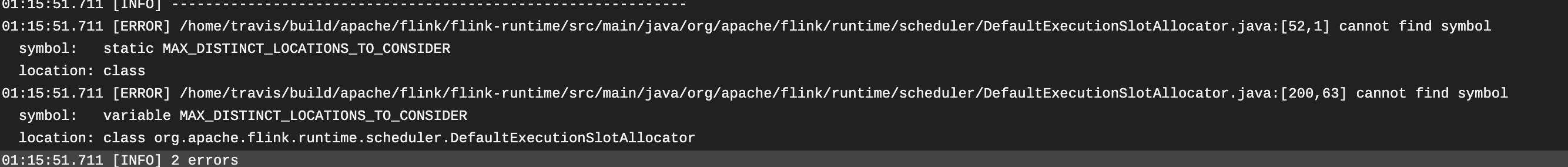

sunjincheng121 edited a comment on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots URL: https://github.com/apache/flink/pull/7227#issuecomment-501973070  I found that `DefaultExecutionSlotAllocator` was merged 9 hours ago (https://github.com/apache/flink/commit/8a39c84e29c9ad64febbd850c99b40d28b5359d1), so I think this may be a CI cache problem; I have restarted all CI stages. If CI still does not pass, I think we should rebase the code.

[GitHub] [flink] JingsongLi commented on issue #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

JingsongLi commented on issue #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink URL: https://github.com/apache/flink/pull/8682#issuecomment-501973780 > Can you please provide the benchmark that produces the 10x performance improvement? You can find the case in `SortAggITCase.testBigDataSimpleArrayUDAF`. If you want to test previous code, you can modify `PrimitiveLongArrayConverter.toInternalImpl` to use `return BinaryArray.fromPrimitiveArray(value);`. The difference is so obvious, both in generated code and performance.

[GitHub] [flink] sunjincheng121 edited a comment on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots

sunjincheng121 edited a comment on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots URL: https://github.com/apache/flink/pull/7227#issuecomment-501973070  I found that `DefaultExecutionSlotAllocator` was merged 9 hours ago (https://github.com/apache/flink/commit/8a39c84e29c9ad64febbd850c99b40d28b5359d1), so I think this may be a CI cache problem; I have restarted all CI stages. (Maybe there is a bug in the Travis script, but it is not related to this PR.)

[GitHub] [flink] sunjincheng121 commented on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots

sunjincheng121 commented on issue #7227: [FLINK-11059] [runtime] do not add releasing failed slot to free slots URL: https://github.com/apache/flink/pull/7227#issuecomment-501973070  I did not find `DefaultExecutionSlotAllocator` in our code base, so I think this may be a CI cache problem; I have restarted all CI stages. (Maybe there is a bug in the Travis script, but it is not related to this PR.)

[GitHub] [flink] docete commented on issue #8707: [FLINK-12815] [table-planner-blink] Supports CatalogManager in blink planner

docete commented on issue #8707: [FLINK-12815] [table-planner-blink] Supports CatalogManager in blink planner URL: https://github.com/apache/flink/pull/8707#issuecomment-501971743 +1 LGTM. I left a comment about CatalogView expansion.

[GitHub] [flink] docete commented on a change in pull request #8707: [FLINK-12815] [table-planner-blink] Supports CatalogManager in blink planner

docete commented on a change in pull request #8707: [FLINK-12815]

[table-planner-blink] Supports CatalogManager in blink planner

URL: https://github.com/apache/flink/pull/8707#discussion_r293660477

##

File path:

flink-table/flink-table-planner-blink/src/main/scala/org/apache/flink/table/plan/util/RelShuttles.scala

##

@@ -97,14 +97,15 @@ class ExpandTableScanShuttle extends RelShuttleImpl {

}

/**

-* Converts [[LogicalTableScan]] the result [[RelNode]] tree by calling

[[RelTable]]#toRel

+* Converts [[LogicalTableScan]] the result [[RelNode]] tree

+* by calling [[QueryOperationCatalogViewTable]]#toRel

*/

override def visit(scan: TableScan): RelNode = {

scan match {

case tableScan: LogicalTableScan =>

-val relTable = tableScan.getTable.unwrap(classOf[RelTable])

-if (relTable != null) {

- val rel =

relTable.toRel(RelOptUtil.getContext(tableScan.getCluster), tableScan.getTable)

+val viewTable =

tableScan.getTable.unwrap(classOf[QueryOperationCatalogViewTable])

Review comment:

AbstractCatalogView from Catalog has no QueryOperation. We must get the underlying QueryOperation and convert it to QueryOperationCatalogViewTable somewhere.


[GitHub] [flink] zhijiangW commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

zhijiangW commented on a change in pull request #8654: [FLINK-12647][network]

Add feature flag to disable release of consumed blocking partitions

URL: https://github.com/apache/flink/pull/8654#discussion_r293656023

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/io/network/partition/ReleaseOnConsumptionResultPartition.java

##

@@ -0,0 +1,102 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.io.network.partition;

+

+import org.apache.flink.runtime.io.network.buffer.BufferPool;

+import org.apache.flink.runtime.io.network.buffer.BufferPoolOwner;

+import org.apache.flink.util.function.FunctionWithException;

+

+import java.io.IOException;

+import java.util.concurrent.atomic.AtomicInteger;

+

+import static org.apache.flink.util.Preconditions.checkState;

+

+/**

+ * ResultPartition that releases itself once all subpartitions have been

consumed.

+ */

+public class ReleaseOnConsumptionResultPartition extends ResultPartition {

+

+ /**

+* The total number of references to subpartitions of this result. The

result partition can be

+* safely released, iff the reference count is zero. A reference count

of -1 denotes that the

+* result partition has been released.

+*/

+ private final AtomicInteger pendingReferences = new AtomicInteger();

+

+ ReleaseOnConsumptionResultPartition(

+ String owningTaskName,

+ ResultPartitionID partitionId,

+ ResultPartitionType partitionType,

+ ResultSubpartition[] subpartitions,

+ int numTargetKeyGroups,

+ ResultPartitionManager partitionManager,

+ FunctionWithException bufferPoolFactory) {

+ super(owningTaskName, partitionId, partitionType,

subpartitions, numTargetKeyGroups, partitionManager, bufferPoolFactory);

+ }

+

+ @Override

+ void pin() {

+ while (true) {

Review comment:

I created [FLINK-12842](https://issues.apache.org/jira/browse/FLINK-12842) and [FLINK-12843](https://issues.apache.org/jira/browse/FLINK-12843) for the ref-counter issues. They can be solved separately after this PR is merged, because they already existed before and are outside the scope of this PR.


[jira] [Created] (FLINK-12843) Refactor the pin logic in ResultPartition

zhijiang created FLINK-12843:

Summary: Refactor the pin logic in ResultPartition

Key: FLINK-12843

URL: https://issues.apache.org/jira/browse/FLINK-12843

Project: Flink

Issue Type: Sub-task

Components: Runtime / Network

Reporter: zhijiang

Assignee: zhijiang

The pin logic adds to the reference counter based on the number of subpartitions in {{ResultPartition}}. The current while loop seems unnecessary, because the atomic counter is not accessed by other threads during pin. If the {{ResultPartition}} has not been created yet, {{ResultPartition#createSubpartitionView}} is not called, and {{ResultPartitionManager}} responds with {{ResultPartitionNotFoundException}}.

So we could simply increase the reference counter directly in the {{ResultPartition}} constructor.
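The two approaches contrasted in this description can be sketched roughly as follows. This is a simplified illustration, not Flink's actual code: `LoopPinnedCounter` and `ConstructorPinnedCounter` are hypothetical stand-ins for the counter handling in {{ResultPartition}}, and the subpartition count is passed in as a plain `int`.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical stand-in: adds references with a compare-and-set retry loop. */
final class LoopPinnedCounter {
    private final AtomicInteger pendingReferences = new AtomicInteger();
    private final int numSubpartitions;

    LoopPinnedCounter(int numSubpartitions) {
        this.numSubpartitions = numSubpartitions;
    }

    /** Retries until the counter is bumped atomically; fails if already released. */
    void pin() {
        while (true) {
            int refCnt = pendingReferences.get();
            if (refCnt < 0) {
                throw new IllegalStateException("Released.");
            }
            if (pendingReferences.compareAndSet(refCnt, refCnt + numSubpartitions)) {
                return;
            }
        }
    }

    int pending() {
        return pendingReferences.get();
    }
}

/** Hypothetical stand-in: initializes the counter once, in the constructor. */
final class ConstructorPinnedCounter {
    private final AtomicInteger pendingReferences;

    ConstructorPinnedCounter(int numSubpartitions) {
        // No loop needed, assuming no other thread can see the object yet.
        this.pendingReferences = new AtomicInteger(numSubpartitions);
    }

    int pending() {
        return pendingReferences.get();
    }
}

final class PinDemo {
    public static void main(String[] args) {
        LoopPinnedCounter loop = new LoopPinnedCounter(4);
        loop.pin();
        ConstructorPinnedCounter direct = new ConstructorPinnedCounter(4);
        System.out.println(loop.pending() + " " + direct.pending());
    }
}
```

Both variants end with the same counter value; the second one relies on the assumption stated in the ticket that nothing else touches the counter before construction finishes.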

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] sunjincheng121 commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs

sunjincheng121 commented on a change in pull request #8732:

[FLINK-12720][python] Add the Python Table API Sphinx docs

URL: https://github.com/apache/flink/pull/8732#discussion_r293653787

##

File path: flink-python/docs/conf.py

##

@@ -0,0 +1,208 @@

+

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+

+import os

+import sys

+

+# -- Path setup --

+

+# If extensions (or modules to document with autodoc) are in another directory,

+# add these directories to sys.path here. If the directory is relative to the

+# documentation root, use os.path.abspath to make it absolute, like shown here.

+sys.path.insert(0, os.path.abspath('.'))

+sys.path.insert(0, os.path.abspath('..'))

+

+# -- Project information -

+

+# project = u'Flink Python Table API'

+project = u'PyFlink'

+copyright = u''

+author = u'Author'

+

+# The short X.Y version

+version = '1.0'

Review comment:

The version should be the same as in:
https://github.com/apache/flink/blob/master/flink-python/pyflink/version.py


[jira] [Created] (FLINK-12842) Fix invalid check released state during ResultPartition#createSubpartitionView

zhijiang created FLINK-12842:

Summary: Fix invalid check released state during

ResultPartition#createSubpartitionView

Key: FLINK-12842

URL: https://issues.apache.org/jira/browse/FLINK-12842

Project: Flink

Issue Type: Sub-task

Components: Runtime / Network

Reporter: zhijiang

Assignee: zhijiang

Currently, {{ResultPartition#createSubpartitionView}} checks whether the partition is released before creating a view. But this check is based on {{refCnt != -1}}, which seems invalid because the reference counter does not always reflect the released state.

In the case of {{ResultPartition#release/fail}}, the reference counter is not set to -1. Even in the case of {{ResultPartition#onConsumedSubpartition}}, the reference counter seems to have no chance of becoming -1.

So we could check the real {{isReleased}} state, instead of the reference counter, when creating the view.
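A minimal sketch of why the counter-based check can miss a release, under simplifying assumptions: `SketchPartition` and its methods are hypothetical and only mimic the two pieces of state named in this ticket (a reference counter and a released flag); they are not Flink's {{ResultPartition}} API.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical, simplified partition; not Flink's ResultPartition. */
final class SketchPartition {
    private final AtomicInteger pendingReferences;
    private final AtomicBoolean isReleased = new AtomicBoolean();

    SketchPartition(int numSubpartitions) {
        this.pendingReferences = new AtomicInteger(numSubpartitions);
    }

    /** Marks the partition released without touching the reference counter. */
    void release() {
        isReleased.set(true);
    }

    /** The check described as invalid: still passes after release(). */
    boolean canCreateViewByRefCount() {
        return pendingReferences.get() != -1;
    }

    /** The proposed check: consults the released flag directly. */
    boolean canCreateViewByReleasedFlag() {
        return !isReleased.get();
    }
}

final class ReleasedCheckDemo {
    public static void main(String[] args) {
        SketchPartition partition = new SketchPartition(2);
        partition.release();
        System.out.println("refCnt check allows view: " + partition.canCreateViewByRefCount());
        System.out.println("flag check allows view: " + partition.canCreateViewByReleasedFlag());
    }
}
```

In this sketch the counter never becomes -1, so only the flag-based check rejects view creation after a release.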


[GitHub] [flink] JingsongLi commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

JingsongLi commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293653903

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseMapSerializer.java

##

@@ -0,0 +1,273 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License.You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.typeutils;

+

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.api.common.typeutils.TypeSerializer;

+import org.apache.flink.api.common.typeutils.TypeSerializerSchemaCompatibility;

+import org.apache.flink.api.common.typeutils.TypeSerializerSnapshot;

+import org.apache.flink.api.java.typeutils.runtime.DataInputViewStream;

+import org.apache.flink.api.java.typeutils.runtime.DataOutputViewStream;

+import org.apache.flink.core.memory.DataInputView;

+import org.apache.flink.core.memory.DataOutputView;

+import org.apache.flink.core.memory.MemorySegmentFactory;

+import org.apache.flink.table.dataformat.BaseMap;

+import org.apache.flink.table.dataformat.BinaryArray;

+import org.apache.flink.table.dataformat.BinaryArrayWriter;

+import org.apache.flink.table.dataformat.BinaryMap;

+import org.apache.flink.table.dataformat.BinaryWriter;

+import org.apache.flink.table.dataformat.GenericMap;

+import org.apache.flink.table.types.InternalSerializers;

+import org.apache.flink.table.types.logical.LogicalType;

+import org.apache.flink.table.util.SegmentsUtil;

+import org.apache.flink.util.InstantiationUtil;

+

+import java.io.IOException;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Serializer for {@link BaseMap}.

+ */

+public class BaseMapSerializer extends TypeSerializer {

+

+ private final LogicalType keyType;

+ private final LogicalType valueType;

+

+ private final TypeSerializer keySerializer;

+ private final TypeSerializer valueSerializer;

+

+ private transient BinaryArray reuseKeyArray;

+ private transient BinaryArray reuseValueArray;

+ private transient BinaryArrayWriter reuseKeyWriter;

+ private transient BinaryArrayWriter reuseValueWriter;

+

+ public BaseMapSerializer(LogicalType keyType, LogicalType valueType) {

+ this.keyType = keyType;

+ this.valueType = valueType;

+

+ this.keySerializer = InternalSerializers.create(keyType, new

ExecutionConfig());

+ this.valueSerializer = InternalSerializers.create(valueType,

new ExecutionConfig());

+ }

+

+ @Override

+ public boolean isImmutableType() {

+ return false;

+ }

+

+ @Override

+ public TypeSerializer duplicate() {

+ return new BaseMapSerializer(keyType, valueType);

+ }

+

+ @Override

+ public BaseMap createInstance() {

+ return new BinaryMap();

+ }

+

+ @Override

+ public BaseMap copy(BaseMap from) {

+ if (from instanceof GenericMap) {

+ Map fromMap = ((GenericMap)

from).getMap();

+ HashMap toMap = new HashMap<>();

Review comment:

You are right. `DataFormatConverter` is not wrong, but `copy` may cause some trouble.
In fact, the runtime `MapSerializer` also has this problem.
I will add a comment.


[GitHub] [flink] dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs

dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs
URL: https://github.com/apache/flink/pull/8732#discussion_r293650042
##
File path: flink-python/docs/pyflink.rst
##
@@ -0,0 +1,33 @@
+..
+ Licensed to the Apache Software Foundation (ASF) under one
+ or more contributor license agreements. See the NOTICE file
+ distributed with this work for additional information
+ regarding copyright ownership. The ASF licenses this file
+ to you under the Apache License, Version 2.0 (the
+ "License"); you may not use this file except in compliance
+ with the License. You may obtain a copy of the License at
+
+ http://www.apache.org/licenses/LICENSE-2.0
+
+ Unless required by applicable law or agreed to in writing, software
+ distributed under the License is distributed on an "AS IS" BASIS,
+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ See the License for the specific language governing permissions and
+limitations under the License.
+
+
+pyflink package
+===
+
+Subpackages
+---
+
+.. toctree::
+:maxdepth: 1
+
+pyflink.table
+
Review comment:
Add the following text here to indicate that the following classes are the content of package pyflink:
```suggestion
Contents
```

[GitHub] [flink] dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs

dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs
URL: https://github.com/apache/flink/pull/8732#discussion_r293646408
##
File path: flink-python/docs/index.rst
##
@@ -0,0 +1,39 @@
+..
+ Licensed to the Apache Software Foundation (ASF) under one
+ or more contributor license agreements. See the NOTICE file
+ distributed with this work for additional information
+ regarding copyright ownership. The ASF licenses this file
+ to you under the Apache License, Version 2.0 (the
+ "License"); you may not use this file except in compliance
+ with the License. You may obtain a copy of the License at
+
+ http://www.apache.org/licenses/LICENSE-2.0
+
+ Unless required by applicable law or agreed to in writing, software
+ distributed under the License is distributed on an "AS IS" BASIS,
+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ See the License for the specific language governing permissions and
+limitations under the License.
+
+
+Welcome to Flink Python API Docs!
+==
+
+.. toctree::
+ :maxdepth: 2
+ :caption: Contents
+
+ pyflink
+ pyflink.table
+
+
+Core Classes:
+---
+
+:class:`pyflink.table.TableEnvironment`
+
+Main entry point for Flink functionality.
Review comment:
What about `Main entry point for Flink Table functionality.`

[GitHub] [flink] dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs

dianfu commented on a change in pull request #8732: [FLINK-12720][python] Add the Python Table API Sphinx docs
URL: https://github.com/apache/flink/pull/8732#discussion_r293652965
##
File path: flink-python/docs/pyflink.table.rst
##
@@ -0,0 +1,29 @@
+..
+ Licensed to the Apache Software Foundation (ASF) under one
+ or more contributor license agreements. See the NOTICE file
+ distributed with this work for additional information
+ regarding copyright ownership. The ASF licenses this file
+ to you under the Apache License, Version 2.0 (the
+ "License"); you may not use this file except in compliance
+ with the License. You may obtain a copy of the License at
+
+ http://www.apache.org/licenses/LICENSE-2.0
+
+ Unless required by applicable law or agreed to in writing, software
+ distributed under the License is distributed on an "AS IS" BASIS,
+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ See the License for the specific language governing permissions and
+limitations under the License.
+
+
+pyflink.table package
+=
+
+Module contents
+---
+
+.. automodule:: pyflink.table
+:members:
+:undoc-members:
+:show-inheritance:
Review comment:
One empty line at the end of the file

[GitHub] [flink] liyafan82 commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

liyafan82 commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293652318

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseMapSerializer.java

##

@@ -0,0 +1,273 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License.You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.typeutils;

+

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.api.common.typeutils.TypeSerializer;

+import org.apache.flink.api.common.typeutils.TypeSerializerSchemaCompatibility;

+import org.apache.flink.api.common.typeutils.TypeSerializerSnapshot;

+import org.apache.flink.api.java.typeutils.runtime.DataInputViewStream;

+import org.apache.flink.api.java.typeutils.runtime.DataOutputViewStream;

+import org.apache.flink.core.memory.DataInputView;

+import org.apache.flink.core.memory.DataOutputView;

+import org.apache.flink.core.memory.MemorySegmentFactory;

+import org.apache.flink.table.dataformat.BaseMap;

+import org.apache.flink.table.dataformat.BinaryArray;

+import org.apache.flink.table.dataformat.BinaryArrayWriter;

+import org.apache.flink.table.dataformat.BinaryMap;

+import org.apache.flink.table.dataformat.BinaryWriter;

+import org.apache.flink.table.dataformat.GenericMap;

+import org.apache.flink.table.types.InternalSerializers;

+import org.apache.flink.table.types.logical.LogicalType;

+import org.apache.flink.table.util.SegmentsUtil;

+import org.apache.flink.util.InstantiationUtil;

+

+import java.io.IOException;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Serializer for {@link BaseMap}.

+ */

+public class BaseMapSerializer extends TypeSerializer {

+

+ private final LogicalType keyType;

+ private final LogicalType valueType;

+

+ private final TypeSerializer keySerializer;

+ private final TypeSerializer valueSerializer;

+

+ private transient BinaryArray reuseKeyArray;

+ private transient BinaryArray reuseValueArray;

+ private transient BinaryArrayWriter reuseKeyWriter;

+ private transient BinaryArrayWriter reuseValueWriter;

+

+ public BaseMapSerializer(LogicalType keyType, LogicalType valueType) {

+ this.keyType = keyType;

+ this.valueType = valueType;

+

+ this.keySerializer = InternalSerializers.create(keyType, new

ExecutionConfig());

+ this.valueSerializer = InternalSerializers.create(valueType,

new ExecutionConfig());

+ }

+

+ @Override

+ public boolean isImmutableType() {

+ return false;

+ }

+

+ @Override

+ public TypeSerializer duplicate() {

+ return new BaseMapSerializer(keyType, valueType);

+ }

+

+ @Override

+ public BaseMap createInstance() {

+ return new BinaryMap();

+ }

+

+ @Override

+ public BaseMap copy(BaseMap from) {

+ if (from instanceof GenericMap) {

+ Map fromMap = ((GenericMap)

from).getMap();

+ HashMap toMap = new HashMap<>();

Review comment:

The user can provide a "correct" Map, but once its key/value pairs are copied into a HashMap, the result can be a "wrong" Map.

Let me illustrate with an example. Suppose the key type does not implement

"hashCode" and "equals" functions correctly (suppose for simplicity, hashCode

always returns 0, and equals always returns true).

The input GenericMap can be based on a TreeMap, with a proper comparator to

test key equality.

The input map is a "correct" map, because it implements get/put methods

correctly.

However, when we insert the key/value pairs of the TreeMap into a HashMap,

problems will occur. For this example, only one key/value pair will finally

exist in the HashMap, which is an unexpected behavior.
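The scenario described above can be reproduced with a small standalone sketch. `BadKey` and `MapCopyDemo` are hypothetical names made up for this illustration, and the copy loop only approximates what a `copy` method that fills a fresh `HashMap` would do; it is not the code from this PR.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical key type whose hashCode/equals are deliberately degenerate. */
final class BadKey {
    final int id;

    BadKey(int id) {
        this.id = id;
    }

    @Override
    public int hashCode() {
        return 0; // every key lands in the same bucket
    }

    @Override
    public boolean equals(Object other) {
        return true; // every key claims to equal everything
    }
}

final class MapCopyDemo {

    /** Builds a TreeMap that tells keys apart by comparator, not by equals/hashCode. */
    static Map<BadKey, String> buildTreeMap() {
        Map<BadKey, String> source = new TreeMap<>(Comparator.comparingInt((BadKey k) -> k.id));
        source.put(new BadKey(1), "a");
        source.put(new BadKey(2), "b");
        source.put(new BadKey(3), "c");
        return source;
    }

    /** Copies all entries into a new HashMap, which relies on hashCode/equals. */
    static Map<BadKey, String> copyToHashMap(Map<BadKey, String> from) {
        Map<BadKey, String> to = new HashMap<>();
        for (Map.Entry<BadKey, String> entry : from.entrySet()) {
            to.put(entry.getKey(), entry.getValue());
        }
        return to;
    }

    public static void main(String[] args) {
        Map<BadKey, String> source = buildTreeMap();
        Map<BadKey, String> copy = copyToHashMap(source);
        System.out.println("TreeMap size: " + source.size()); // 3
        System.out.println("HashMap size: " + copy.size());   // 1
    }
}
```

The TreeMap keeps three entries because its comparator distinguishes the keys, while the HashMap treats every later `put` as an overwrite of the first key.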


[GitHub] [flink] eaglewatcherwb commented on a change in pull request #8688: [FLINK-12760] [runtime] Implement ExecutionGraph to InputsLocationsRetriever Adapter

eaglewatcherwb commented on a change in pull request #8688: [FLINK-12760]

[runtime] Implement ExecutionGraph to InputsLocationsRetriever Adapter

URL: https://github.com/apache/flink/pull/8688#discussion_r293652038

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/scheduler/EGBasedInputsLocationsRetrieverTest.java

##

@@ -0,0 +1,134 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.scheduler;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.runtime.execution.ExecutionState;

+import org.apache.flink.runtime.executiongraph.ExecutionGraph;

+import org.apache.flink.runtime.executiongraph.ExecutionGraphTestUtils;

+import org.apache.flink.runtime.executiongraph.TestingSlotProvider;

+import org.apache.flink.runtime.executiongraph.restart.NoRestartStrategy;

+import org.apache.flink.runtime.io.network.partition.ResultPartitionType;

+import org.apache.flink.runtime.jobgraph.DistributionPattern;

+import org.apache.flink.runtime.jobgraph.JobVertex;

+import org.apache.flink.runtime.jobmaster.TestingLogicalSlot;

+import org.apache.flink.runtime.jobmaster.slotpool.SlotProvider;

+import org.apache.flink.runtime.scheduler.strategy.ExecutionVertexID;

+import org.apache.flink.runtime.taskmanager.TaskManagerLocation;

+import org.apache.flink.util.TestLogger;

+

+import org.junit.Test;

+

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Optional;

+import java.util.concurrent.CompletableFuture;

+

+import static org.hamcrest.CoreMatchers.is;

+import static org.hamcrest.Matchers.contains;

+import static org.hamcrest.Matchers.empty;

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertFalse;

+import static org.junit.Assert.assertThat;

+

+/**

+ * Tests for {@link EGBasedInputsLocationsRetriever}.

+ */

+public class EGBasedInputsLocationsRetrieverTest extends TestLogger {

+

+ /**

+* Tests that can get the producers of consumed result partitions.

+*/

+ @Test

+ public void testGetConsumedResultPartitionsProducers() throws Exception

{

+ final JobVertex producer1 =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ final JobVertex producer2 =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ final JobVertex consumer =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ consumer.connectNewDataSetAsInput(producer1,

DistributionPattern.ALL_TO_ALL, ResultPartitionType.PIPELINED);

+ consumer.connectNewDataSetAsInput(producer2,

DistributionPattern.ALL_TO_ALL, ResultPartitionType.PIPELINED);

+

+ final ExecutionGraph eg =

ExecutionGraphTestUtils.createSimpleTestGraph(new JobID(), producer1,

producer2, consumer);

+ final EGBasedInputsLocationsRetriever inputsLocationsRetriever

= new EGBasedInputsLocationsRetriever(eg);

+

+ ExecutionVertexID evIdOfProducer1 = new

ExecutionVertexID(producer1.getID(), 0);

+ ExecutionVertexID evIdOfProducer2 = new

ExecutionVertexID(producer2.getID(), 0);

+ ExecutionVertexID evIdOfConsumer = new

ExecutionVertexID(consumer.getID(), 0);

+

+ Collection<Collection<ExecutionVertexID>> producersOfProducer1 =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfProducer1);

+ Collection<Collection<ExecutionVertexID>> producersOfProducer2 =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfProducer2);

+ Collection<Collection<ExecutionVertexID>> producersOfConsumer =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfConsumer);

+

+ assertThat(producersOfProducer1, is(empty()));

+ assertThat(producersOfProducer2, is(empty()));

+ assertThat(producersOfConsumer,

contains(Arrays.asList(evIdOfProducer1), Arrays.asList(evIdOfProducer2)));

Review comment:

`Arrays.asList` with only one argument could be replaced with

`Collections.singletonList`
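A minimal sketch of the suggested replacement, assuming plain `String` elements in place of `ExecutionVertexID` so that it compiles on its own; the class and method names are hypothetical and not part of the pull request:

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper class, used only to illustrate the review suggestion.
class SingletonListSketch {

    /** Builds a one-element list the way the test currently does. */
    static List<String> viaArraysAsList(String element) {
        return Arrays.asList(element);
    }

    /** Builds the same one-element list with the suggested call. */
    static List<String> viaSingletonList(String element) {
        return Collections.singletonList(element);
    }

    public static void main(String[] args) {
        List<String> current = viaArraysAsList("producer1");
        List<String> suggested = viaSingletonList("producer1");
        // List.equals compares elements in order, so equality-based matchers
        // see no difference between the two lists.
        System.out.println(current.equals(suggested));
    }
}
```

Both lists compare equal element by element, so an assertion built on `equals` should behave the same. The difference is that `Collections.singletonList` returns an immutable list, whereas the list from `Arrays.asList` still permits `set`.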

[GitHub] [flink] eaglewatcherwb commented on a change in pull request #8688: [FLINK-12760] [runtime] Implement ExecutionGraph to InputsLocationsRetriever Adapter

eaglewatcherwb commented on a change in pull request #8688: [FLINK-12760]

[runtime] Implement ExecutionGraph to InputsLocationsRetriever Adapter

URL: https://github.com/apache/flink/pull/8688#discussion_r293651290

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/scheduler/EGBasedInputsLocationsRetrieverTest.java

##

@@ -0,0 +1,134 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.scheduler;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.runtime.execution.ExecutionState;

+import org.apache.flink.runtime.executiongraph.ExecutionGraph;

+import org.apache.flink.runtime.executiongraph.ExecutionGraphTestUtils;

+import org.apache.flink.runtime.executiongraph.TestingSlotProvider;

+import org.apache.flink.runtime.executiongraph.restart.NoRestartStrategy;

+import org.apache.flink.runtime.io.network.partition.ResultPartitionType;

+import org.apache.flink.runtime.jobgraph.DistributionPattern;

+import org.apache.flink.runtime.jobgraph.JobVertex;

+import org.apache.flink.runtime.jobmaster.TestingLogicalSlot;

+import org.apache.flink.runtime.jobmaster.slotpool.SlotProvider;

+import org.apache.flink.runtime.scheduler.strategy.ExecutionVertexID;

+import org.apache.flink.runtime.taskmanager.TaskManagerLocation;

+import org.apache.flink.util.TestLogger;

+

+import org.junit.Test;

+

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Optional;

+import java.util.concurrent.CompletableFuture;

+

+import static org.hamcrest.CoreMatchers.is;

+import static org.hamcrest.Matchers.contains;

+import static org.hamcrest.Matchers.empty;

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertFalse;

+import static org.junit.Assert.assertThat;

+

+/**

+ * Tests for {@link EGBasedInputsLocationsRetriever}.

+ */

+public class EGBasedInputsLocationsRetrieverTest extends TestLogger {

+

+ /**

+* Tests that can get the producers of consumed result partitions.

+*/

+ @Test

+ public void testGetConsumedResultPartitionsProducers() throws Exception

{

+ final JobVertex producer1 =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ final JobVertex producer2 =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ final JobVertex consumer =

ExecutionGraphTestUtils.createNoOpVertex(1);

+ consumer.connectNewDataSetAsInput(producer1,

DistributionPattern.ALL_TO_ALL, ResultPartitionType.PIPELINED);

+ consumer.connectNewDataSetAsInput(producer2,

DistributionPattern.ALL_TO_ALL, ResultPartitionType.PIPELINED);

+

+ final ExecutionGraph eg =

ExecutionGraphTestUtils.createSimpleTestGraph(new JobID(), producer1,

producer2, consumer);

+ final EGBasedInputsLocationsRetriever inputsLocationsRetriever

= new EGBasedInputsLocationsRetriever(eg);

+

+ ExecutionVertexID evIdOfProducer1 = new

ExecutionVertexID(producer1.getID(), 0);

+ ExecutionVertexID evIdOfProducer2 = new

ExecutionVertexID(producer2.getID(), 0);

+ ExecutionVertexID evIdOfConsumer = new

ExecutionVertexID(consumer.getID(), 0);

+

+ Collection<Collection<ExecutionVertexID>> producersOfProducer1 =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfProducer1);

+ Collection<Collection<ExecutionVertexID>> producersOfProducer2 =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfProducer2);

+ Collection<Collection<ExecutionVertexID>> producersOfConsumer =

+ inputsLocationsRetriever.getConsumedResultPartitionsProducers(evIdOfConsumer);

+

+ assertThat(producersOfProducer1, is(empty()));

+ assertThat(producersOfProducer2, is(empty()));

+ assertThat(producersOfConsumer,

contains(Arrays.asList(evIdOfProducer1), Arrays.asList(evIdOfProducer2)));

+ }

+

+ /**

+* Tests that when execution is not scheduled, getting task manager

location will return null.

+*/

+ @Test

+ public void testGetNullTaskManagerLocationIfNotScheduled() throws

Exception {

+ final JobVertex

[GitHub] [flink] zjuwangg commented on issue #8636: [FLINK-12237][hive]Support Hive table stats related operations in HiveCatalog

zjuwangg commented on issue #8636: [FLINK-12237][hive]Support Hive table stats related operations in HiveCatalog URL: https://github.com/apache/flink/pull/8636#issuecomment-501958031 cc @xuefuz again to review.

[GitHub] [flink] zhijiangW commented on a change in pull request #8687: [FLINK-12612][coordination] Track stored partition on the TaskExecutor

zhijiangW commented on a change in pull request #8687:

[FLINK-12612][coordination] Track stored partition on the TaskExecutor

URL: https://github.com/apache/flink/pull/8687#discussion_r293651140

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/taskexecutor/partition/JobAwareShuffleEnvironmentImpl.java

##

@@ -0,0 +1,194 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.taskexecutor.partition;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.metrics.MetricGroup;

+import org.apache.flink.runtime.deployment.InputGateDeploymentDescriptor;

+import org.apache.flink.runtime.deployment.ResultPartitionDeploymentDescriptor;

+import org.apache.flink.runtime.executiongraph.ExecutionAttemptID;

+import org.apache.flink.runtime.executiongraph.PartitionInfo;

+import org.apache.flink.runtime.io.network.api.writer.ResultPartitionWriter;

+import

org.apache.flink.runtime.io.network.partition.PartitionProducerStateProvider;

+import org.apache.flink.runtime.io.network.partition.ResultPartitionID;

+import org.apache.flink.runtime.io.network.partition.consumer.InputGate;

+import org.apache.flink.runtime.shuffle.ShuffleEnvironment;

+import org.apache.flink.util.Preconditions;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.Set;

+import java.util.concurrent.ConcurrentHashMap;

+import java.util.function.Consumer;

+import java.util.stream.Collectors;

+

+/**

+ * Wraps a {@link ShuffleEnvironment} to allow tracking of partitions per job.

+ */

+public class JobAwareShuffleEnvironmentImpl implements

JobAwareShuffleEnvironment {

+

+ private static final Consumer NO_OP_NOTIFIER =

partitionId -> {};

+

+ private final ShuffleEnvironment backingShuffleEnvironment;

+ private final PartitionTable inProgressPartitionTable = new

PartitionTable();

+ private final PartitionTable finishedPartitionTable = new

PartitionTable();

+

+ /** Tracks which jobs are still being monitored, to ensure cleanup

in cases where tasks are finishing while

+* the jobmanager connection is being terminated. This is a concurrent

map since it is modified by both the

+* Task (via {@link #notifyPartitionFinished(JobID,

ResultPartitionID)}} and

+* TaskExecutor (via {@link

#releaseAllFinishedPartitionsForJobAndMarkJobInactive(JobID)}) thread. */

+ private final Set activeJobs = ConcurrentHashMap.newKeySet();

+

+ public JobAwareShuffleEnvironmentImpl(ShuffleEnvironment

backingShuffleEnvironment) {

+ this.backingShuffleEnvironment =

Preconditions.checkNotNull(backingShuffleEnvironment);

+ }

+

+ @Override

+ public boolean hasPartitionsOccupyingLocalResources(JobID jobId) {

+ return inProgressPartitionTable.hasTrackedPartitions(jobId) ||

finishedPartitionTable.hasTrackedPartitions(jobId);

+ }

+

+ @Override

+ public void markJobActive(JobID jobId) {

+ activeJobs.add(jobId);

+ }

+

+ @Override

+ public void releaseFinishedPartitions(JobID jobId,

Collection resultPartitionIds) {

+ finishedPartitionTable.stopTrackingPartitions(jobId,

resultPartitionIds);

+ backingShuffleEnvironment.releasePartitions(resultPartitionIds);

+ }

+

+ @Override

+ public void releaseAllFinishedPartitionsForJobAndMarkJobInactive(JobID

jobId) {

+ activeJobs.remove(jobId);

+ Collection finishedPartitionsForJob =

finishedPartitionTable.stopTrackingPartitions(jobId);

+

backingShuffleEnvironment.releasePartitions(finishedPartitionsForJob);

+ }

+

+ /**

+* This method wraps partition writers for externally managed

partitions and introduces callbacks into the lifecycle

+* methods of the {@link ResultPartitionWriter}.

+*/

+ @Override

+ public Collection

createResultPartitionWriters(

+ JobID jobId,

+ String taskName,

+ ExecutionAttemptID executionAttemptID,

+ Collection

[GitHub] [flink] gaoyunhaii commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

gaoyunhaii commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions
URL: https://github.com/apache/flink/pull/8654#discussion_r293650869
##
File path:
flink-runtime/src/test/java/org/apache/flink/runtime/io/network/partition/ResultPartitionFactoryTest.java
##
@@ -0,0 +1,86 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one or more
+ * contributor license agreements. See the NOTICE file distributed with
+ * this work for additional information regarding copyright ownership.
+ * The ASF licenses this file to You under the Apache License, Version 2.0
+ * (the "License"); you may not use this file except in compliance with
+ * the License. You may obtain a copy of the License at
+ *
+ *http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.flink.runtime.io.network.partition;
+
+import org.apache.flink.runtime.deployment.ResultPartitionDeploymentDescriptor;
+import org.apache.flink.runtime.executiongraph.ExecutionAttemptID;
+import org.apache.flink.runtime.io.disk.iomanager.NoOpIOManager;
+import org.apache.flink.runtime.io.network.buffer.BufferPool;
+import org.apache.flink.runtime.io.network.buffer.BufferPoolFactory;
+import org.apache.flink.runtime.io.network.buffer.BufferPoolOwner;
Review comment:
It seems there are unused imports here.

[GitHub] [flink] JingsongLi commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

JingsongLi commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293650003

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseMapSerializer.java

##

@@ -0,0 +1,273 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License.You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.typeutils;

+

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.api.common.typeutils.TypeSerializer;

+import org.apache.flink.api.common.typeutils.TypeSerializerSchemaCompatibility;

+import org.apache.flink.api.common.typeutils.TypeSerializerSnapshot;

+import org.apache.flink.api.java.typeutils.runtime.DataInputViewStream;

+import org.apache.flink.api.java.typeutils.runtime.DataOutputViewStream;

+import org.apache.flink.core.memory.DataInputView;

+import org.apache.flink.core.memory.DataOutputView;

+import org.apache.flink.core.memory.MemorySegmentFactory;

+import org.apache.flink.table.dataformat.BaseMap;

+import org.apache.flink.table.dataformat.BinaryArray;

+import org.apache.flink.table.dataformat.BinaryArrayWriter;

+import org.apache.flink.table.dataformat.BinaryMap;

+import org.apache.flink.table.dataformat.BinaryWriter;

+import org.apache.flink.table.dataformat.GenericMap;

+import org.apache.flink.table.types.InternalSerializers;

+import org.apache.flink.table.types.logical.LogicalType;

+import org.apache.flink.table.util.SegmentsUtil;

+import org.apache.flink.util.InstantiationUtil;

+

+import java.io.IOException;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Serializer for {@link BaseMap}.

+ */

+public class BaseMapSerializer extends TypeSerializer {

+

+ private final LogicalType keyType;

+ private final LogicalType valueType;

+

+ private final TypeSerializer keySerializer;

+ private final TypeSerializer valueSerializer;

+

+ private transient BinaryArray reuseKeyArray;

+ private transient BinaryArray reuseValueArray;

+ private transient BinaryArrayWriter reuseKeyWriter;

+ private transient BinaryArrayWriter reuseValueWriter;

+

+ public BaseMapSerializer(LogicalType keyType, LogicalType valueType) {

+ this.keyType = keyType;

+ this.valueType = valueType;

+

+ this.keySerializer = InternalSerializers.create(keyType, new

ExecutionConfig());

+ this.valueSerializer = InternalSerializers.create(valueType,

new ExecutionConfig());

+ }

+

+ @Override

+ public boolean isImmutableType() {

+ return false;

+ }

+

+ @Override

+ public TypeSerializer duplicate() {

+ return new BaseMapSerializer(keyType, valueType);

+ }

+

+ @Override

+ public BaseMap createInstance() {

+ return new BinaryMap();

+ }

+

+ @Override

+ public BaseMap copy(BaseMap from) {

+ if (from instanceof GenericMap) {

+ Map fromMap = ((GenericMap)

from).getMap();

+ HashMap toMap = new HashMap<>();

Review comment:

This has nothing to do with `GenericMap`. If the user gives a wrong `Map`,

it's wrong to turn it into a `BinaryMap` too. It is totally wrong...

[GitHub] [flink] JingsongLi commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

JingsongLi commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293649615

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseRowSerializer.java

##

@@ -165,49 +168,33 @@ public int getArity() {

/**

* Convert base row to binary row.

-* TODO modify it to code gen, and reuse BinaryRow

+* TODO modify it to code gen.

Review comment:

This is a method comment. It means moving this method's implementation to code generation instead of using `BinaryWriter.write` and `TypeGetterSetters.get`.

[GitHub] [flink] gaoyunhaii commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

gaoyunhaii commented on a change in pull request #8654: [FLINK-12647][network]

Add feature flag to disable release of consumed blocking partitions

URL: https://github.com/apache/flink/pull/8654#discussion_r293649558

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/io/network/partition/ReleaseOnConsumptionResultPartition.java

##

@@ -0,0 +1,102 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.io.network.partition;

+

+import org.apache.flink.runtime.io.network.buffer.BufferPool;

+import org.apache.flink.runtime.io.network.buffer.BufferPoolOwner;

+import org.apache.flink.util.function.FunctionWithException;

+

+import java.io.IOException;

+import java.util.concurrent.atomic.AtomicInteger;

+

+import static org.apache.flink.util.Preconditions.checkState;

+

+/**

+ * ResultPartition that releases itself once all subpartitions have been

consumed.

+ */

+public class ReleaseOnConsumptionResultPartition extends ResultPartition {

+

+ /**

+* The total number of references to subpartitions of this result. The

result partition can be

+* safely released, iff the reference count is zero. A reference count

of -1 denotes that the

+* result partition has been released.

+*/

+ private final AtomicInteger pendingReferences = new AtomicInteger();

+

+ ReleaseOnConsumptionResultPartition(

+ String owningTaskName,

+ ResultPartitionID partitionId,

+ ResultPartitionType partitionType,

+ ResultSubpartition[] subpartitions,

+ int numTargetKeyGroups,

+ ResultPartitionManager partitionManager,

+ FunctionWithException bufferPoolFactory) {

+ super(owningTaskName, partitionId, partitionType,

subpartitions, numTargetKeyGroups, partitionManager, bufferPoolFactory);

+ }

+

+ @Override

+ void pin() {

+ while (true) {

Review comment:

Following Zhijiang's thought, I think we can now remove the pin method from ResultPartition, since it is only an implementation detail of ReleaseOnConsumptionResultPartition. We can then move the initialization of the pending references into the constructor, which ensures there is no concurrent access to the variable, and use pendingReferences.set(subpartitions.length) to initialize it.
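The suggestion above can be sketched as follows. This is a simplified, hypothetical stand-in rather than the actual ReleaseOnConsumptionResultPartition: the class name, the `onSubpartitionConsumed` and `isReleased` methods, and the `released` flag are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, stripped-down partition that tracks pending subpartition references.
class RefCountedPartitionSketch {

    private final AtomicInteger pendingReferences = new AtomicInteger();
    private volatile boolean released;

    RefCountedPartitionSketch(int numberOfSubpartitions) {
        // The instance has not been published to other threads yet, so a plain
        // set() suffices here and no compare-and-set retry loop is needed.
        pendingReferences.set(numberOfSubpartitions);
    }

    /** Called once per fully consumed subpartition; releases when the count hits zero. */
    void onSubpartitionConsumed() {
        int remaining = pendingReferences.decrementAndGet();
        if (remaining == 0) {
            released = true;
        } else if (remaining < 0) {
            throw new IllegalStateException("All references were released already.");
        }
    }

    boolean isReleased() {
        return released;
    }
}
```

Under these assumptions the counter is fully initialized before any consumer can observe the object, which is the property the comment is after.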

[GitHub] [flink] liyafan82 commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

liyafan82 commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293648291

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseRowSerializer.java

##

@@ -165,49 +168,33 @@ public int getArity() {

/**

* Convert base row to binary row.

-* TODO modify it to code gen, and reuse BinaryRow

+* TODO modify it to code gen.

Review comment:

I see the code gen related logic has already been modified. Do you mean more changes are needed?

[GitHub] [flink] stevenzwu opened a new pull request #8665: [FLINK-12781] [Runtime/REST] include the whole stack trace in response payload

stevenzwu opened a new pull request #8665: [FLINK-12781] [Runtime/REST] include the whole stack trace in response payload URL: https://github.com/apache/flink/pull/8665

[GitHub] [flink] stevenzwu closed pull request #8665: [FLINK-12781] [Runtime/REST] include the whole stack trace in response payload

stevenzwu closed pull request #8665: [FLINK-12781] [Runtime/REST] include the whole stack trace in response payload URL: https://github.com/apache/flink/pull/8665

[GitHub] [flink] liyafan82 commented on a change in pull request #8682: [FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce conversion overhead to blink

liyafan82 commented on a change in pull request #8682:

[FLINK-12796][table-planner-blink] Introduce BaseArray and BaseMap to reduce

conversion overhead to blink

URL: https://github.com/apache/flink/pull/8682#discussion_r293648012

##

File path:

flink-table/flink-table-runtime-blink/src/main/java/org/apache/flink/table/typeutils/BaseMapSerializer.java

##

@@ -0,0 +1,273 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License.You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.typeutils;

+

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.api.common.typeutils.TypeSerializer;

+import org.apache.flink.api.common.typeutils.TypeSerializerSchemaCompatibility;

+import org.apache.flink.api.common.typeutils.TypeSerializerSnapshot;

+import org.apache.flink.api.java.typeutils.runtime.DataInputViewStream;

+import org.apache.flink.api.java.typeutils.runtime.DataOutputViewStream;

+import org.apache.flink.core.memory.DataInputView;

+import org.apache.flink.core.memory.DataOutputView;

+import org.apache.flink.core.memory.MemorySegmentFactory;

+import org.apache.flink.table.dataformat.BaseMap;

+import org.apache.flink.table.dataformat.BinaryArray;

+import org.apache.flink.table.dataformat.BinaryArrayWriter;

+import org.apache.flink.table.dataformat.BinaryMap;

+import org.apache.flink.table.dataformat.BinaryWriter;

+import org.apache.flink.table.dataformat.GenericMap;

+import org.apache.flink.table.types.InternalSerializers;

+import org.apache.flink.table.types.logical.LogicalType;

+import org.apache.flink.table.util.SegmentsUtil;

+import org.apache.flink.util.InstantiationUtil;

+

+import java.io.IOException;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Serializer for {@link BaseMap}.

+ */

+public class BaseMapSerializer extends TypeSerializer {

+

+ private final LogicalType keyType;

+ private final LogicalType valueType;

+

+ private final TypeSerializer keySerializer;

+ private final TypeSerializer valueSerializer;

+

+ private transient BinaryArray reuseKeyArray;

+ private transient BinaryArray reuseValueArray;

+ private transient BinaryArrayWriter reuseKeyWriter;

+ private transient BinaryArrayWriter reuseValueWriter;

+

+ public BaseMapSerializer(LogicalType keyType, LogicalType valueType) {

+ this.keyType = keyType;

+ this.valueType = valueType;

+

+ this.keySerializer = InternalSerializers.create(keyType, new

ExecutionConfig());

+ this.valueSerializer = InternalSerializers.create(valueType,

new ExecutionConfig());

+ }

+

+ @Override

+ public boolean isImmutableType() {

+ return false;

+ }

+

+ @Override

+ public TypeSerializer duplicate() {

+ return new BaseMapSerializer(keyType, valueType);

+ }

+

+ @Override

+ public BaseMap createInstance() {

+ return new BinaryMap();

+ }

+

+ @Override

+ public BaseMap copy(BaseMap from) {

+ if (from instanceof GenericMap) {

+ Map fromMap = ((GenericMap)

from).getMap();

+ HashMap toMap = new HashMap<>();

Review comment:

"Just wrap user Map, users need to guarantee their logic."

-> the users just guarantee that their Map works correctly according to the

Map interface, but that does not mean their Map would still work correctly when wrapped in a HashMap. Right?

When that is the case, it may cause weird problems that are hard to locate and debug, so this is not a reliable solution.

At the very least, we should state this explicitly in the JavaDoc.
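One possible illustration of this concern, not necessarily the case the reviewer had in mind: a user-supplied `TreeMap` with a case-insensitive comparator answers lookups that a `HashMap` copy of the same entries does not. The class and method names below are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical demo: a user Map whose lookup semantics change once copied into a HashMap.
class MapCopySketch {

    /** A user map that treats keys case-insensitively via its comparator. */
    static Map<String, Integer> userMap() {
        Map<String, Integer> map = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);
        map.put("Key", 1);
        return map;
    }

    /** Copies the entries into a HashMap, which relies on hashCode/equals instead. */
    static Map<String, Integer> copyIntoHashMap(Map<String, Integer> from) {
        return new HashMap<>(from);
    }

    public static void main(String[] args) {
        Map<String, Integer> original = userMap();
        Map<String, Integer> copy = copyIntoHashMap(original);
        System.out.println(original.get("KEY")); // found through the comparator
        System.out.println(copy.get("KEY"));     // not found: "KEY" does not equal "Key"
    }
}
```

The entries are identical in both maps, yet the same lookup succeeds on the original and fails on the copy, which is the kind of hard-to-trace difference the comment warns about.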

[GitHub] [flink] yanghua commented on issue #7435: [FLINK-11226] Lack of getKeySelector in Scala KeyedStream API unlike Java KeyedStream

yanghua commented on issue #7435: [FLINK-11226] Lack of getKeySelector in Scala KeyedStream API unlike Java KeyedStream URL: https://github.com/apache/flink/pull/7435#issuecomment-501953286 @sunjincheng121 I have a small PR that has been pending for a long time; could you take a look?

[jira] [Commented] (FLINK-12836) Allow retained checkpoints to be persisted on success

[

https://issues.apache.org/jira/browse/FLINK-12836?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16863647#comment-16863647

]

vinoyang commented on FLINK-12836:

--

Hi [~klion26], I think {{CHECKPOINT_RETAINED_ON_CANCELLATION}} has a flag, {{discardSubsumed}}, whose value is {{true}}. A checkpoint is subsumed when the maximum number of retained checkpoints is reached and a more recent checkpoint completes. Maybe this is what [~andreweduffy] has in mind.
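The subsumption rule described above can be sketched roughly as follows. This is an illustrative model only: the class, its fields, and its method names are hypothetical and do not mirror Flink's checkpoint store implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical model of a bounded store of retained checkpoints.
class SubsumptionSketch {

    private final int maxRetained;
    private final boolean discardSubsumed;
    private final Deque<Long> retained = new ArrayDeque<>();
    private final List<Long> discarded = new ArrayList<>();

    SubsumptionSketch(int maxRetained, boolean discardSubsumed) {
        this.maxRetained = maxRetained;
        this.discardSubsumed = discardSubsumed;
    }

    /** Registers a newly completed checkpoint and subsumes the oldest ones if over the limit. */
    void onCheckpointCompleted(long checkpointId) {
        retained.addLast(checkpointId);
        while (retained.size() > maxRetained) {
            long subsumed = retained.removeFirst();
            if (discardSubsumed) {
                // With the flag set, a subsumed checkpoint is discarded rather than kept.
                discarded.add(subsumed);
            }
        }
    }

    List<Long> retainedIds() {
        return new ArrayList<>(retained);
    }

    List<Long> discardedIds() {
        return new ArrayList<>(discarded);
    }
}
```

With a limit of two retained checkpoints, completing checkpoints 1, 2, and 3 in order leaves 2 and 3 retained and, when the flag is `true`, marks 1 as discarded.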

> Allow retained checkpoints to be persisted on success

> -

>

> Key: FLINK-12836

> URL: https://issues.apache.org/jira/browse/FLINK-12836

> Project: Flink

> Issue Type: Improvement

> Components: Runtime / Checkpointing

>Reporter: Andrew Duffy

>Assignee: vinoyang

>Priority: Major

>

> Currently, retained checkpoints are persisted with one of 3 strategies:

> * CHECKPOINT_NEVER_RETAINED: Retained checkpoints are

> never persisted

> * CHECKPOINT_RETAINED_ON_FAILURE: Latest retained checkpoint

> is persisted in the face of job failures

> * CHECKPOINT_RETAINED_ON_CANCELLATION: Latest retained

> checkpoint is persisted when job is canceled externally (e.g. via the REST

> API)

>

> I'm proposing a third persistence mode: _CHECKPOINT_RETAINED_ALWAYS_. This

> mode would ensure that retained checkpoints are retained on successful

> completion of the job, and can be resumed from later.
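The retention modes listed in the issue can be pictured as a mapping from the way a job ends to whether its latest checkpoint is kept. The following is only a sketch of that idea, not Flink's implementation: `RetentionSketch`, `JobOutcome`, `RetentionMode` and `retainsOn` are hypothetical names, and treating `CHECKPOINT_RETAINED_ON_CANCELLATION` as also covering failures is an assumption.

```java
import java.util.EnumSet;
import java.util.Set;

public class RetentionSketch {

    /** Hypothetical: the ways a job can terminate. */
    public enum JobOutcome { FINISHED, FAILED, CANCELED }

    /** Hypothetical model of the three existing modes plus the proposed one. */
    public enum RetentionMode {
        CHECKPOINT_NEVER_RETAINED(EnumSet.noneOf(JobOutcome.class)),
        CHECKPOINT_RETAINED_ON_FAILURE(EnumSet.of(JobOutcome.FAILED)),
        // Assumption: this mode keeps the checkpoint on failure as well as on cancellation.
        CHECKPOINT_RETAINED_ON_CANCELLATION(EnumSet.of(JobOutcome.FAILED, JobOutcome.CANCELED)),
        // Proposed mode: keep the checkpoint however the job ends, including success.
        CHECKPOINT_RETAINED_ALWAYS(EnumSet.allOf(JobOutcome.class));

        private final Set<JobOutcome> retainedOutcomes;

        RetentionMode(Set<JobOutcome> retainedOutcomes) {
            this.retainedOutcomes = retainedOutcomes;
        }

        /** Returns true if the latest checkpoint survives a job ending with the given outcome. */
        public boolean retainsOn(JobOutcome outcome) {
            return retainedOutcomes.contains(outcome);
        }
    }

    public static void main(String[] args) {
        for (RetentionMode mode : RetentionMode.values()) {
            System.out.println(mode + " retains on FINISHED: " + mode.retainsOn(JobOutcome.FINISHED));
        }
    }
}
```

In this model only the proposed mode retains a checkpoint after a successful run, which is the gap the issue describes.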

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] zjuwangg commented on a change in pull request #8703: [FLINK-12807][hive]Support Hive table columnstats related operations in HiveCatalog

zjuwangg commented on a change in pull request #8703:

[FLINK-12807][hive]Support Hive table columnstats related operations in

HiveCatalog

URL: https://github.com/apache/flink/pull/8703#discussion_r293647266

##

File path:

flink-connectors/flink-connector-hive/src/main/java/org/apache/flink/table/catalog/hive/HiveCatalog.java

##

@@ -1079,7 +1100,27 @@ public void alterPartitionStatistics(ObjectPath tablePath, CatalogPartitionSpec
 	@Override
 	public void alterPartitionColumnStatistics(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, CatalogColumnStatistics columnStatistics, boolean ignoreIfNotExists) throws PartitionNotExistException, CatalogException {
+		try {
+			Partition hivePartition = getHivePartition(tablePath, partitionSpec);
+			Table hiveTable = getHiveTable(tablePath);
+			String partName = getPartitionName(tablePath, partitionSpec, hiveTable);

Review comment:

Yes, we cannot obtain the partition keys from the Hive Partition alone.
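To illustrate the point of the comment above: if a partition object carries only its values, the key names have to come from the table before a name of the form `key=value/key=value` can be built. The sketch below assumes exactly that split. `PartitionNameSketch` and `partitionName` are hypothetical and are not the `getPartitionName` helper from the PR, and the escaping of special characters that a real implementation would need is omitted.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

public class PartitionNameSketch {

    /**
     * Builds a partition name from key names (assumed to come from the table)
     * and values (assumed to come from the partition), paired by position.
     */
    public static String partitionName(List<String> partitionKeys, List<String> partitionValues) {
        if (partitionKeys.size() != partitionValues.size()) {
            throw new IllegalArgumentException("Number of partition keys and values must match");
        }
        StringJoiner name = new StringJoiner("/");
        for (int i = 0; i < partitionKeys.size(); i++) {
            name.add(partitionKeys.get(i) + "=" + partitionValues.get(i));
        }
        return name.toString();
    }

    public static void main(String[] args) {
        // prints "dt=2019-06-14/region=eu"
        System.out.println(partitionName(
            Arrays.asList("dt", "region"),
            Arrays.asList("2019-06-14", "eu")));
    }
}
```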


[GitHub] [flink] zhijiangW commented on issue #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

zhijiangW commented on issue #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions URL: https://github.com/apache/flink/pull/8654#issuecomment-501951296 Thanks for the updates @zentol and the reviews @tillrohrmann. I like the current approach and it looks pretty good now. I only left a few nit comments.

[GitHub] [flink] zhijiangW commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

zhijiangW commented on a change in pull request #8654: [FLINK-12647][network]

Add feature flag to disable release of consumed blocking partitions

URL: https://github.com/apache/flink/pull/8654#discussion_r293644729

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/network/partition/ResultPartitionBuilder.java

##

@@ -112,13 +114,19 @@ public ResultPartitionBuilder setBufferPoolFactory(
 		return this;
 	}
 
+	public ResultPartitionBuilder isReleasedOnConsumption(boolean releasedOnConsumption) {
+		this.releasedOnConsumption = releasedOnConsumption;
+		return this;
+	}
+
 	public ResultPartition build() {
 		ResultPartitionFactory resultPartitionFactory = new ResultPartitionFactory(
 			partitionManager,
 			ioManager,
 			networkBufferPool,
 			networkBuffersPerChannel,
-			floatingNetworkBuffersPerGate);
+			floatingNetworkBuffersPerGate,
+			true);

Review comment:

Once this value is hard-coded to true, there seems to be no way atm to create a plain `ResultPartition` via the builder, no matter whether `releasedOnConsumption` is set to true or false.

Another option is to make this value false and let `releasedOnConsumption` default to true; then the final flag could still be changed via `ResultPartitionBuilder#isReleasedOnConsumption(false)`.

I am not sure whether to adjust this atm or to fix it when required in the future.
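The concern can be reproduced with a small stand-alone model. Everything below is hypothetical and heavily simplified: `Factory`, `Builder`, `Partition` and `ReleasingPartition` only mimic the roles of the classes in the diff, and the rule that the factory-level flag overrides the per-partition setting is an assumption about how the two flags interact.

```java
public class BuilderFlagSketch {

    /** Stand-in for a plain partition that is not released on consumption. */
    public static class Partition {
        public boolean releasesOnConsumption() { return false; }
    }

    /** Stand-in for a partition that releases itself once consumed. */
    public static class ReleasingPartition extends Partition {
        @Override
        public boolean releasesOnConsumption() { return true; }
    }

    /** Hypothetical factory: a forced flag wins over the per-partition setting. */
    public static class Factory {
        private final boolean forceRelease;

        public Factory(boolean forceRelease) { this.forceRelease = forceRelease; }

        public Partition create(boolean releasedOnConsumption) {
            return (forceRelease || releasedOnConsumption) ? new ReleasingPartition() : new Partition();
        }
    }

    /** Hypothetical builder with the default suggested in the review comment. */
    public static class Builder {
        private boolean releasedOnConsumption = true;

        public Builder isReleasedOnConsumption(boolean releasedOnConsumption) {
            this.releasedOnConsumption = releasedOnConsumption;
            return this;
        }

        public Partition build(boolean forceRelease) {
            return new Factory(forceRelease).create(releasedOnConsumption);
        }
    }

    public static void main(String[] args) {
        // Forced flag hard-coded to true: the builder setting has no effect.
        System.out.println(new Builder().isReleasedOnConsumption(false).build(true).releasesOnConsumption());
        // Forced flag false: the builder setting decides.
        System.out.println(new Builder().isReleasedOnConsumption(false).build(false).releasesOnConsumption());
    }
}
```

With the forced flag at `false` and the builder default at `true`, existing tests would keep their behaviour while individual tests could still opt out.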


[GitHub] [flink] zhijiangW commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

zhijiangW commented on a change in pull request #8654: [FLINK-12647][network]

Add feature flag to disable release of consumed blocking partitions

URL: https://github.com/apache/flink/pull/8654#discussion_r293645376

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/io/network/partition/ReleaseOnConsumptionResultPartition.java

##

@@ -0,0 +1,102 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *     http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.io.network.partition;

+

+import org.apache.flink.runtime.io.network.buffer.BufferPool;

+import org.apache.flink.runtime.io.network.buffer.BufferPoolOwner;

+import org.apache.flink.util.function.FunctionWithException;

+

+import java.io.IOException;

+import java.util.concurrent.atomic.AtomicInteger;

+

+import static org.apache.flink.util.Preconditions.checkState;

+

+/**

+ * ResultPartition that releases itself once all subpartitions have been consumed.

+ */

+public class ReleaseOnConsumptionResultPartition extends ResultPartition {

+

+ /**

+* The total number of references to subpartitions of this result. The result partition can be
+* safely released, iff the reference count is zero. A reference count of -1 denotes that the
+* result partition has been released.

+*/

+ private final AtomicInteger pendingReferences = new AtomicInteger();

+

+ ReleaseOnConsumptionResultPartition(

+ String owningTaskName,

+ ResultPartitionID partitionId,

+ ResultPartitionType partitionType,

+ ResultSubpartition[] subpartitions,

+ int numTargetKeyGroups,

+ ResultPartitionManager partitionManager,

+ FunctionWithException<BufferPoolOwner, BufferPool, IOException> bufferPoolFactory) {

+ super(owningTaskName, partitionId, partitionType, subpartitions, numTargetKeyGroups, partitionManager, bufferPoolFactory);

+ }

+

+ @Override

+ void pin() {

+ while (true) {

Review comment:

Actually, I am not sure why a `while` loop was needed here in the first place.
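The quoted diff stops at `while (true) {`, so the body of `pin()` is not visible here. One common reason for such a loop around an `AtomicInteger` is a compare-and-set retry: read the counter, validate it, and attempt the update, repeating if another thread changed the value in between. The sketch below shows that general pattern under this assumption. `PinSketch`, `numSubpartitions` and `release()` are hypothetical and are not taken from the PR.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class PinSketch {

    /** Reference count; -1 marks the released state, as described in the Javadoc of the diff. */
    private final AtomicInteger pendingReferences = new AtomicInteger();

    /** Hypothetical: number of references added per pin. */
    private final int numSubpartitions;

    public PinSketch(int numSubpartitions) {
        this.numSubpartitions = numSubpartitions;
    }

    /** Adds references atomically, but only if the counter has not been released. */
    public void pin() {
        while (true) {
            int refCnt = pendingReferences.get();
            if (refCnt < 0) {
                throw new IllegalStateException("Released.");
            }
            // compareAndSet fails if another thread modified the counter after the read above;
            // in that case the loop re-reads the value and re-checks the released state.
            if (pendingReferences.compareAndSet(refCnt, refCnt + numSubpartitions)) {
                return;
            }
        }
    }

    /** Hypothetical helper that marks the counter as released. */
    public void release() {
        pendingReferences.set(-1);
    }

    public int pendingReferences() {
        return pendingReferences.get();
    }
}
```

A plain `addAndGet` would not be equivalent in this model, because the check for the released state and the increment have to happen as one atomic step.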


[jira] [Reopened] (FLINK-12541) Add deploy a Python Flink job and session cluster on Kubernetes support.

[ https://issues.apache.org/jira/browse/FLINK-12541?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sunjincheng reopened FLINK-12541: - Hi [~till.rohrmann], thanks for helping review and merge #8609. Since #8609 relates to FLINK-12788, I'll close FLINK-12788 and reopen this JIRA. If I missed something, please correct me. :) > Add deploy a Python Flink job and session cluster on Kubernetes support. > > > Key: FLINK-12541 > URL: https://issues.apache.org/jira/browse/FLINK-12541 > Project: Flink > Issue Type: Sub-task > Components: API / Python, Runtime / REST >Affects Versions: 1.9.0 >Reporter: sunjincheng >Assignee: Dian Fu >Priority: Major > Labels: pull-request-available > Fix For: 1.9.0 > > Time Spent: 0.5h > Remaining Estimate: 0h > > Add deploy a Python Flink job and session cluster on Kubernetes support. > We need to have the same deployment step as the Java job. Please see: > [https://ci.apache.org/projects/flink/flink-docs-stable/ops/deployment/kubernetes.html] > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] [flink] zhijiangW commented on a change in pull request #8654: [FLINK-12647][network] Add feature flag to disable release of consumed blocking partitions

zhijiangW commented on a change in pull request #8654: [FLINK-12647][network]

Add feature flag to disable release of consumed blocking partitions