[GitHub] [flink] godfreyhe commented on a change in pull request #13742: [FLINK-19626][table-planner-blink] Introduce multi-input operator construction algorithm

godfreyhe commented on a change in pull request #13742:

URL: https://github.com/apache/flink/pull/13742#discussion_r512431935

##

File path:

flink-table/flink-table-planner-blink/src/main/java/org/apache/flink/table/planner/plan/processor/utils/TopologyGraph.java

##

@@ -0,0 +1,206 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.planner.plan.processor.utils;

+

+import org.apache.flink.annotation.Internal;

+import org.apache.flink.annotation.VisibleForTesting;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import

org.apache.flink.table.planner.plan.nodes.exec.AbstractExecNodeExactlyOnceVisitor;

+import org.apache.flink.table.planner.plan.nodes.exec.ExecNode;

+import

org.apache.flink.table.planner.plan.nodes.physical.batch.BatchExecBoundedStreamScan;

+import org.apache.flink.util.Preconditions;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.LinkedList;

+import java.util.List;

+import java.util.Map;

+import java.util.Queue;

+import java.util.Set;

+

+/**

+ * A data structure storing the topological and input priority information of

an {@link ExecNode} graph.

+ */

+@Internal

+class TopologyGraph {

+

+ private final Map, TopologyNode> nodes;

+

+ TopologyGraph(List> roots) {

+ this(roots, Collections.emptySet());

+ }

+

+ TopologyGraph(List> roots, Set>

boundaries) {

+ this.nodes = new HashMap<>();

+

+ // we first link all edges in the original exec node graph

+ AbstractExecNodeExactlyOnceVisitor visitor = new

AbstractExecNodeExactlyOnceVisitor() {

+ @Override

+ protected void visitNode(ExecNode node) {

+ if (boundaries.contains(node)) {

+ return;

+ }

+ for (ExecNode input :

node.getInputNodes()) {

+ link(input, node);

+ }

+ visitInputs(node);

+ }

+ };

+ roots.forEach(n -> n.accept(visitor));

+ }

+

+ /**

+* Link an edge from `from` node to `to` node if no loop will occur

after adding this edge.

+* Returns if this edge is successfully added.

+*/

+ boolean link(ExecNode from, ExecNode to) {

+ TopologyNode fromNode = getTopologyNode(from);

+ TopologyNode toNode = getTopologyNode(to);

+

+ if (canReach(toNode, fromNode)) {

+ // invalid edge, as `to` is the predecessor of `from`

+ return false;

+ } else {

+ // link `from` and `to`

+ fromNode.outputs.add(toNode);

+ toNode.inputs.add(fromNode);

+ return true;

+ }

+ }

+

+ /**

+* Remove the edge from `from` node to `to` node. If there is no edge

between them then do nothing.

+*/

+ void unlink(ExecNode from, ExecNode to) {

+ TopologyNode fromNode = getTopologyNode(from);

+ TopologyNode toNode = getTopologyNode(to);

+

+ fromNode.outputs.remove(toNode);

+ toNode.inputs.remove(fromNode);

+ }

+

+ /**

+* Calculate the maximum distance of the currently added nodes from the

nodes without inputs.

+* The smallest distance is 0 (which are exactly the nodes without

inputs) and the distances of

+* other nodes are the largest distances in their inputs plus 1.

+*/

+ Map, Integer> calculateDistance() {

Review comment:

give `distance` a definition ?

##

File path:

flink-table/flink-table-planner-blink/src/main/java/org/apache/flink/table/planner/plan/processor/MultipleInputNodeCreationProcessor.java

##

@@ -0,0 +1,484 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional

[GitHub] [flink] wuchong commented on a change in pull request #13669: [FLINK-19684][Connector][jdbc] Fix the Jdbc-connector's 'lookup.max-retries' option implementation

wuchong commented on a change in pull request #13669:

URL: https://github.com/apache/flink/pull/13669#discussion_r512405731

##

File path:

flink-connectors/flink-connector-jdbc/src/test/java/org/apache/flink/connector/jdbc/table/JdbcDynamicTableSourceITCase.java

##

@@ -125,6 +125,45 @@ public void testJdbcSource() throws Exception {

assertEquals(expected, result);

}

+ @Test

+ public void testJdbcSourceWithLookupMaxRetries() throws Exception {

+ StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

+ EnvironmentSettings envSettings =

EnvironmentSettings.newInstance()

+ .useBlinkPlanner()

+ .inStreamingMode()

+ .build();

+ StreamTableEnvironment tEnv =

StreamTableEnvironment.create(env, envSettings);

+

+ tEnv.executeSql(

+ "CREATE TABLE " + INPUT_TABLE + "(" +

+ "id BIGINT," +

+ "timestamp6_col TIMESTAMP(6)," +

+ "timestamp9_col TIMESTAMP(9)," +

+ "time_col TIME," +

+ "real_col FLOAT," +

+ "double_col DOUBLE," +

+ "decimal_col DECIMAL(10, 4)" +

+ ") WITH (" +

+ " 'connector'='jdbc'," +

+ " 'url'='" + DB_URL + "'," +

+ " 'lookup.max-retries'='0'," +

+ " 'table-name'='" + INPUT_TABLE + "'" +

+ ")"

+ );

+

+ Iterator collected = tEnv.executeSql("SELECT id FROM " +

INPUT_TABLE).collect();

Review comment:

`SELECT *` can't test lookup ability.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (FLINK-19820) TableEnvironment init fails with JDK9

[

https://issues.apache.org/jira/browse/FLINK-19820?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Timo Walther updated FLINK-19820:

-

Affects Version/s: 1.11.2

> TableEnvironment init fails with JDK9

> -

>

> Key: FLINK-19820

> URL: https://issues.apache.org/jira/browse/FLINK-19820

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / API

>Affects Versions: 1.11.2

>Reporter: Timo Walther

>Priority: Major

>

> I haven't verified the issue myself. But it seems that TableEnvironment

> cannot be properly initialized when using JDK9:

> Stack trace:

> {code}

> Exception in thread "main" java.lang.ExceptionInInitializerError

> at

> org.apache.flink.table.planner.calcite.FlinkRelFactories$.(FlinkRelFactories.scala:51)

> at

> org.apache.flink.table.planner.calcite.FlinkRelFactories$.(FlinkRelFactories.scala)

> at

> org.apache.flink.table.planner.calcite.FlinkRelFactories.FLINK_REL_BUILDER(FlinkRelFactories.scala)

> at

> org.apache.flink.table.planner.delegation.PlannerContext.lambda$getSqlToRelConverterConfig$2(PlannerContext.java:279)

> at

> java.util.Optional.orElseGet(java.base@9-internal/Optional.java:344)

> at

> org.apache.flink.table.planner.delegation.PlannerContext.getSqlToRelConverterConfig(PlannerContext.java:273)

> at

> org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:137)

> at

> org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:113)

> at

> org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:112)

> at

> org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:48)

> at

> org.apache.flink.table.planner.delegation.BlinkPlannerFactory.create(BlinkPlannerFactory.java:50)

> at

> org.apache.flink.table.api.bridge.java.internal.StreamTableEnvironmentImpl.create(StreamTableEnvironmentImpl.java:130)

> at

> org.apache.flink.table.api.bridge.java.StreamTableEnvironment.create(StreamTableEnvironment.java:111)

> at

> org.apache.flink.table.api.bridge.java.StreamTableEnvironment.create(StreamTableEnvironment.java:82)

> at com.teavaro.cep.modules.ml.CEPMLInit.runUseCase(CEPMLInit.java:57)

> at com.teavaro.cep.modules.ml.CEPMLInit.start(CEPMLInit.java:43)

> at

> com.teavaro.cep.modules.ml.CEPMLInit.prepareUseCase(CEPMLInit.java:35)

> at com.teavaro.cep.pipelines.CEPInit.start(CEPInit.java:47)

> at com.teavaro.cep.StreamingJob.runCEP(StreamingJob.java:121)

> at com.teavaro.cep.StreamingJob.prepareJob(StreamingJob.java:106)

> at com.teavaro.cep.StreamingJob.main(StreamingJob.java:64)

> Caused by: java.lang.RuntimeException: while binding method public default

> org.apache.calcite.tools.RelBuilder$ConfigBuilder

> org.apache.calcite.tools.RelBuilder$Config.toBuilder()

> at

> org.apache.calcite.util.ImmutableBeans.create(ImmutableBeans.java:215)

> at

> org.apache.calcite.tools.RelBuilder$Config.(RelBuilder.java:3074)

> ... 21 more

> Caused by: java.lang.IllegalAccessException: access to public member failed:

> org.apache.calcite.tools.RelBuilder$Config.toBuilder()ConfigBuilder/invokeSpecial,

> from org.apache.calcite.tools.RelBuilder$Config/2 (unnamed module @2cc03cd1)

> at

> java.lang.invoke.MemberName.makeAccessException(java.base@9-internal/MemberName.java:908)

> at

> java.lang.invoke.MethodHandles$Lookup.checkAccess(java.base@9-internal/MethodHandles.java:1839)

> at

> java.lang.invoke.MethodHandles$Lookup.checkMethod(java.base@9-internal/MethodHandles.java:1779)

> at

> java.lang.invoke.MethodHandles$Lookup.getDirectMethodCommon(java.base@9-internal/MethodHandles.java:1928)

> at

> java.lang.invoke.MethodHandles$Lookup.getDirectMethodNoSecurityManager(java.base@9-internal/MethodHandles.java:1922)

> at

> java.lang.invoke.MethodHandles$Lookup.unreflectSpecial(java.base@9-internal/MethodHandles.java:1480)

> at

> org.apache.calcite.util.ImmutableBeans.create(ImmutableBeans.java:213)

> {code}

> This might be fixed in later JDK versions but we should track the issue

> nevertheless. The full discussion can be found here:

> https://stackoverflow.com/questions/64544422/illegal-access-to-create-streamtableenvironment-with-jdk-9-in-debian

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (FLINK-19820) TableEnvironment init fails with JDK9

Timo Walther created FLINK-19820:

Summary: TableEnvironment init fails with JDK9

Key: FLINK-19820

URL: https://issues.apache.org/jira/browse/FLINK-19820

Project: Flink

Issue Type: Bug

Components: Table SQL / API

Reporter: Timo Walther

I haven't verified the issue myself. But it seems that TableEnvironment cannot

be properly initialized when using JDK9:

Stack trace:

{code}

Exception in thread "main" java.lang.ExceptionInInitializerError

at

org.apache.flink.table.planner.calcite.FlinkRelFactories$.(FlinkRelFactories.scala:51)

at

org.apache.flink.table.planner.calcite.FlinkRelFactories$.(FlinkRelFactories.scala)

at

org.apache.flink.table.planner.calcite.FlinkRelFactories.FLINK_REL_BUILDER(FlinkRelFactories.scala)

at

org.apache.flink.table.planner.delegation.PlannerContext.lambda$getSqlToRelConverterConfig$2(PlannerContext.java:279)

at java.util.Optional.orElseGet(java.base@9-internal/Optional.java:344)

at

org.apache.flink.table.planner.delegation.PlannerContext.getSqlToRelConverterConfig(PlannerContext.java:273)

at

org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:137)

at

org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:113)

at

org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:112)

at

org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:48)

at

org.apache.flink.table.planner.delegation.BlinkPlannerFactory.create(BlinkPlannerFactory.java:50)

at

org.apache.flink.table.api.bridge.java.internal.StreamTableEnvironmentImpl.create(StreamTableEnvironmentImpl.java:130)

at

org.apache.flink.table.api.bridge.java.StreamTableEnvironment.create(StreamTableEnvironment.java:111)

at

org.apache.flink.table.api.bridge.java.StreamTableEnvironment.create(StreamTableEnvironment.java:82)

at com.teavaro.cep.modules.ml.CEPMLInit.runUseCase(CEPMLInit.java:57)

at com.teavaro.cep.modules.ml.CEPMLInit.start(CEPMLInit.java:43)

at

com.teavaro.cep.modules.ml.CEPMLInit.prepareUseCase(CEPMLInit.java:35)

at com.teavaro.cep.pipelines.CEPInit.start(CEPInit.java:47)

at com.teavaro.cep.StreamingJob.runCEP(StreamingJob.java:121)

at com.teavaro.cep.StreamingJob.prepareJob(StreamingJob.java:106)

at com.teavaro.cep.StreamingJob.main(StreamingJob.java:64)

Caused by: java.lang.RuntimeException: while binding method public default

org.apache.calcite.tools.RelBuilder$ConfigBuilder

org.apache.calcite.tools.RelBuilder$Config.toBuilder()

at

org.apache.calcite.util.ImmutableBeans.create(ImmutableBeans.java:215)

at

org.apache.calcite.tools.RelBuilder$Config.(RelBuilder.java:3074)

... 21 more

Caused by: java.lang.IllegalAccessException: access to public member failed:

org.apache.calcite.tools.RelBuilder$Config.toBuilder()ConfigBuilder/invokeSpecial,

from org.apache.calcite.tools.RelBuilder$Config/2 (unnamed module @2cc03cd1)

at

java.lang.invoke.MemberName.makeAccessException(java.base@9-internal/MemberName.java:908)

at

java.lang.invoke.MethodHandles$Lookup.checkAccess(java.base@9-internal/MethodHandles.java:1839)

at

java.lang.invoke.MethodHandles$Lookup.checkMethod(java.base@9-internal/MethodHandles.java:1779)

at

java.lang.invoke.MethodHandles$Lookup.getDirectMethodCommon(java.base@9-internal/MethodHandles.java:1928)

at

java.lang.invoke.MethodHandles$Lookup.getDirectMethodNoSecurityManager(java.base@9-internal/MethodHandles.java:1922)

at

java.lang.invoke.MethodHandles$Lookup.unreflectSpecial(java.base@9-internal/MethodHandles.java:1480)

at

org.apache.calcite.util.ImmutableBeans.create(ImmutableBeans.java:213)

{code}

This might be fixed in later JDK versions but we should track the issue

nevertheless. The full discussion can be found here:

https://stackoverflow.com/questions/64544422/illegal-access-to-create-streamtableenvironment-with-jdk-9-in-debian

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] xintongsong closed pull request #13584: [hotfix][typo] Fix typo in MiniCluster

xintongsong closed pull request #13584: URL: https://github.com/apache/flink/pull/13584 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-19654) Improve the execution time of PyFlink end-to-end tests

[ https://issues.apache.org/jira/browse/FLINK-19654?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17221148#comment-17221148 ] Dian Fu commented on FLINK-19654: - - Set the parallelism to 2 to reduce the execution time: merged to master via 16ed892245fa0ccd0319597f26f0ec193d5021c8 > Improve the execution time of PyFlink end-to-end tests > -- > > Key: FLINK-19654 > URL: https://issues.apache.org/jira/browse/FLINK-19654 > Project: Flink > Issue Type: Bug > Components: API / Python, Tests >Affects Versions: 1.12.0 >Reporter: Dian Fu >Assignee: Huang Xingbo >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > Attachments: image (7).png > > > Thanks for the sharing from [~rmetzger], currently the test duration for > PyFlink end-to-end test is as following: > ||test case||average execution-time||maximum execution-time|| > |PyFlink Table end-to-end test|1340s|1877s| > |PyFlink DataStream end-to-end test|387s|575s| > |Kubernetes PyFlink application test|606s|694s| > We need to investigate how to improve them to reduce the execution time. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-19768) The shell "./yarn-session.sh " not use log4j-session.properties , it use log4j.properties

[

https://issues.apache.org/jira/browse/FLINK-19768?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17221147#comment-17221147

]

Xintong Song commented on FLINK-19768:

--

[~YUJIANBO],

I don't think you can easily separate logs for different jobs in the same Flink

session cluster. Since the jobs share the same Flink cluster, some of the

framework activities cannot be separated.

You can only separated the logs generated by user codes. To achieve that, you

would need to set the environment variable {{FLINK_CONF_DIR}}, pointing to

different directories containing different {{log4j.properties}} when submitting

the jobs

> The shell "./yarn-session.sh " not use log4j-session.properties , it use

> log4j.properties

> --

>

> Key: FLINK-19768

> URL: https://issues.apache.org/jira/browse/FLINK-19768

> Project: Flink

> Issue Type: Bug

> Components: Client / Job Submission, Deployment / YARN

>Affects Versions: 1.11.2

>Reporter: YUJIANBO

>Priority: Major

>

> The shell "./yarn-session.sh " not use log4j-session.properties , it use

> log4j.properties

> My Flink Job UI shows the $internal.yarn.log-config-file is

> "/usr/local/flink-1.11.2/conf/log4j.properties",is it a bug?

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] dianfu closed pull request #13736: [FLINK-19654][python][e2e] Reduce pyflink e2e test parallelism

dianfu closed pull request #13736: URL: https://github.com/apache/flink/pull/13736 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu commented on a change in pull request #13736: [FLINK-19654][python][e2e] Reduce pyflink e2e test parallelism

dianfu commented on a change in pull request #13736:

URL: https://github.com/apache/flink/pull/13736#discussion_r512419927

##

File path: flink-end-to-end-tests/test-scripts/test_pyflink.sh

##

@@ -110,6 +110,7 @@ echo "pytest==4.4.1" > "${REQUIREMENTS_PATH}"

echo "Test submitting python job with 'pipeline.jars':\n"

PYFLINK_CLIENT_EXECUTABLE=${PYTHON_EXEC} "${FLINK_DIR}/bin/flink" run \

+ -p 2 \

Review comment:

format is incorrect.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] curcur edited a comment on pull request #13648: [FLINK-19632] Introduce a new ResultPartitionType for Approximate Local Recovery

curcur edited a comment on pull request #13648: URL: https://github.com/apache/flink/pull/13648#issuecomment-716973831 Hey @rkhachatryan and @pnowojski , thanks for the response. > We realized that if we check view reference in subpartition (or null out view.parent) then the downstream which view was overwritten by an older thread, will sooner or later be fenced by the upstream (or checkpoint will time out). > FLINK-19774 then becomes only an optimization. 1. Do you have preferences on `check view reference` vs `null out view.parent`? I would slightly prefer to set view.parent -> null; Conceptually, it breaks the connection between the old view and its parent; Implementation wise, it can limit the (parent == null) handling mostly within `PipelinedApproximateSubpartitionView`. Notice that not just `pollNext()` needs the check, I think everything that touches view.parent needs a check. 2. How this can solve the problem of a new view is replaced by an old one? Let's say downstream reconnects, asking for a new view; the new view is created; replaced by an old view that is triggered by an old handler event. The new view's parent is null-out. Then what will happen? I do not think "fenced by the upstream (or checkpoint will time out)" can **solve** this problem, it just ends with more failures caused by this problem. > So we can prevent most of the issues without touching deployment descriptors. > Therefore, I think it makes sense to implement it in this PR. Sorry for changing the decision. I am overall fine to put the check within this PR. However, may I ask how it is different from we have this PR as it is, and I do a follow-up one to null-out the parent? In this PR, my main purpose is to introduce a different result partition type, and scheduler changes are based upon this new type. That's the main reason I prefer to do it in a follow-up PR otherwise the scheduler part is blocked. It is easier to review as well. > Another concern is a potential resource leak if downstream continuously fail without notifying the upstream (instances of CreditBasedSequenceNumberingViewReader will accumulate). Can you create a follow-up ticket for that? > This can be addressed by firing user event (see PartitionRequestQueue.userEventTriggered). Yes, that's a good point. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13626: [FLINK-19594][web] Make subtask index start from zero

flinkbot edited a comment on pull request #13626: URL: https://github.com/apache/flink/pull/13626#issuecomment-708171532 ## CI report: * 1f6974a2251daef826687b981fd5a5fb428fe66c Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7857) Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7571) * bcea64faf14e9261ae2cb6b996701b844bf876d3 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=8342) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] curcur edited a comment on pull request #13648: [FLINK-19632] Introduce a new ResultPartitionType for Approximate Local Recovery

curcur edited a comment on pull request #13648: URL: https://github.com/apache/flink/pull/13648#issuecomment-716973831 Hey @rkhachatryan and @pnowojski , thanks for the response. > We realized that if we check view reference in subpartition (or null out view.parent) then the downstream which view was overwritten by an older thread, will sooner or later be fenced by the upstream (or checkpoint will time out). > FLINK-19774 then becomes only an optimization. 1. Do you have preferences on `check view reference` vs `null out view.parent`? I have evaluated these two methods before, and I feel set view.parent -> null may be a cleaner way (that's why I proposed null out parent). Conceptually, it breaks the connection between the old view and its parent; Implementation wise, it can limit the (parent == null) handling mostly within `PipelinedApproximateSubpartition` or probably a little bit in `PipelinedSubpartition`, while in the reference check way, we have to change the interface and touch all the subpartitions that implements `PipelinedSubpartition`. Notice that not just `pollNext()` needs the check, everything that touches parent needs a check. 2. How this can solve the problem of a new view is replaced by an old one? Let's say downstream reconnects, asking for a new view; the new view is created; replaced by an old view that is triggered by an old handler event. The new view's parent is null-out. Then what will happen? I do not think "fenced by the upstream (or checkpoint will time out)" can **solve** this problem, it just ends with more failures caused by this problem. > So we can prevent most of the issues without touching deployment descriptors. > Therefore, I think it makes sense to implement it in this PR. Sorry for changing the decision. I am overall fine to put the check within this PR. However, may I ask how it is different from we have this PR as it is, and I do a follow-up one to null-out the parent? In this PR, my main purpose is to introduce a different result partition type, and scheduler changes are based upon this new type. That's the main reason I prefer to do it in a follow-up PR otherwise the scheduler part is blocked. And also, it is easier to review, and for me to focus on tests after null-out parent as well. > Another concern is a potential resource leak if downstream continuously fail without notifying the upstream (instances of CreditBasedSequenceNumberingViewReader will accumulate). Can you create a follow-up ticket for that? > This can be addressed by firing user event (see PartitionRequestQueue.userEventTriggered). Yes, that's a good point. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] curcur edited a comment on pull request #13648: [FLINK-19632] Introduce a new ResultPartitionType for Approximate Local Recovery

curcur edited a comment on pull request #13648: URL: https://github.com/apache/flink/pull/13648#issuecomment-716973831 Hey @rkhachatryan and @pnowojski , thanks for the response. > We realized that if we check view reference in subpartition (or null out view.parent) then the downstream which view was overwritten by an older thread, will sooner or later be fenced by the upstream (or checkpoint will time out). > FLINK-19774 then becomes only an optimization. 1. Do you have preferences on `check view reference` vs `null out view.parent`? I have evaluated these two methods before, and I feel set view.parent -> null may be a cleaner way (that's why I proposed null out parent). Conceptually, it breaks the connection between the old view and the new view; Implementation wise, it can limit the (parent == null) handling mostly within `PipelinedApproximateSubpartition` or probably a little bit in `PipelinedSubpartition`, while in the reference check way, we have to change the interface and touch all the subpartitions that implements `PipelinedSubpartition`. Notice that not just `pollNext()` needs the check, everything that touches parent needs a check. 2. How this can solve the problem of a new view is replaced by an old one? Let's say downstream reconnects, asking for a new view; the new view is created; replaced by an old view that is triggered by an old handler event. The new view's parent is null-out. Then what will happen? I do not think "fenced by the upstream (or checkpoint will time out)" can **solve** this problem, it just ends with more failures caused by this problem. > So we can prevent most of the issues without touching deployment descriptors. > Therefore, I think it makes sense to implement it in this PR. Sorry for changing the decision. I am overall fine to put the check within this PR. However, may I ask how it is different from we have this PR as it is, and I do a follow-up one to null-out the parent? In this PR, my main purpose is to introduce a different result partition type, and scheduler changes are based upon this new type. That's the main reason I prefer to do it in a follow-up PR otherwise the scheduler part is blocked. And also, it is easier to review, and for me to focus on tests after null-out parent as well. > Another concern is a potential resource leak if downstream continuously fail without notifying the upstream (instances of CreditBasedSequenceNumberingViewReader will accumulate). Can you create a follow-up ticket for that? > This can be addressed by firing user event (see PartitionRequestQueue.userEventTriggered). Yes, that's a good point. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] curcur edited a comment on pull request #13648: [FLINK-19632] Introduce a new ResultPartitionType for Approximate Local Recovery

curcur edited a comment on pull request #13648: URL: https://github.com/apache/flink/pull/13648#issuecomment-716973831 Hey @rkhachatryan and @pnowojski , thanks for the response. > We realized that if we check view reference in subpartition (or null out view.parent) then the downstream which view was overwritten by an older thread, will sooner or later be fenced by the upstream (or checkpoint will time out). > FLINK-19774 then becomes only an optimization. 1. Do you have preferences on `check view reference` vs `null out view.parent`? I have evaluated these two methods before, and I feel set view.parent -> null may be a cleaner way (that's why I proposed null out parent). Conceptually, it breaks the cut between the old view and the new view; Implementation wise, it can limit the (parent == null) handling mostly within `PipelinedApproximateSubpartition` or probably a little bit in `PipelinedSubpartition`, while in the reference check way, we have to change the interface and touch all the subpartitions that implements `PipelinedSubpartition`. Notice that not just `pollNext()` needs the check, everything that touches parent needs a check. 2. How this can solve the problem of a new view is replaced by an old one? Let's say downstream reconnects, asking for a new view; the new view is created; replaced by an old view that is triggered by an old handler event. The new view's parent is null-out. Then what will happen? I do not think "fenced by the upstream (or checkpoint will time out)" can **solve** this problem, it just ends with more failures caused by this problem. > So we can prevent most of the issues without touching deployment descriptors. > Therefore, I think it makes sense to implement it in this PR. Sorry for changing the decision. I am overall fine to put the check within this PR. However, may I ask how it is different from we have this PR as it is, and I do a follow-up one to null-out the parent? In this PR, my main purpose is to introduce a different result partition type, and scheduler changes are based upon this new type. That's the main reason I prefer to do it in a follow-up PR otherwise the scheduler part is blocked. And also, it is easier to review, and for me to focus on tests after null-out parent as well. > Another concern is a potential resource leak if downstream continuously fail without notifying the upstream (instances of CreditBasedSequenceNumberingViewReader will accumulate). Can you create a follow-up ticket for that? > This can be addressed by firing user event (see PartitionRequestQueue.userEventTriggered). Yes, that's a good point. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] shizhengchao commented on pull request #13717: [FLINK-19723][Connector/JDBC] Solve the problem of repeated data submission in the failure retry

shizhengchao commented on pull request #13717: URL: https://github.com/apache/flink/pull/13717#issuecomment-716976670 > Could you add an unit test in `JdbcDynamicOutputFormatTest` to verify this bug fix? Thanks for review, i will add unit tests soon This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wuchong commented on a change in pull request #13612: [FLINK-19587][table-planner-blink] Fix error result when casting binary as varchar

wuchong commented on a change in pull request #13612:

URL: https://github.com/apache/flink/pull/13612#discussion_r512412637

##

File path:

flink-table/flink-table-planner-blink/src/test/scala/org/apache/flink/table/planner/expressions/ScalarOperatorsTest.scala

##

@@ -62,6 +62,26 @@ class ScalarOperatorsTest extends ScalarOperatorsTestBase {

)

}

+ @Test

+ def testCast(): Unit = {

+

+// binary -> varchar

+testSqlApi(

+ "CAST (f18 as varchar)",

+ "hello world")

+

+// varbinary -> varchar

+testSqlApi(

+ "CAST (f19 as varchar)",

+ "hello flink")

+

+// null case

+testSqlApi("CAST (NULL AS INT)", "null")

+testSqlApi(

+ "CAST (NULL AS VARCHAR) = ''",

+ "null")

Review comment:

Would be better to add a test for cast binary literal to varchar.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] curcur commented on pull request #13648: [FLINK-19632] Introduce a new ResultPartitionType for Approximate Local Recovery

curcur commented on pull request #13648: URL: https://github.com/apache/flink/pull/13648#issuecomment-716973831 Hey @rkhachatryan and @pnowojski , thanks for the response. > We realized that if we check view reference in subpartition (or null out view.parent) then the downstream which view was overwritten by an older thread, will sooner or later be fenced by the upstream (or checkpoint will time out). > FLINK-19774 then becomes only an optimization. 1. Do you have preferences on `check view reference` vs `null out view.parent`? I have evaluated these two methods before, and I feel set view.parent -> null may be a cleaner way (that's why I proposed null out parent). Conceptually, it breaks the cut between the old view and the new view; Implementation wise, it can limit the (parent == null) handling mostly within `PipelinedApproximateSubpartition` or probably a little bit in `PipelinedSubpartition`, while in the reference check way, we have to change the interface and touch all the subpartitions that implements `PipelinedSubpartition`. Notice that not just `pollNext()` needs the check, everything that touches parent needs a check. 2. How this can solve the problem of a new view is replaced by an old one? Let's say downstream reconnects, asking for a new view; the new view is created; replaced by an old view that is triggered by an old handler event. The new view's parent is null-out. Then what will happen? I do not think "fenced by the upstream (or checkpoint will time out)" can **solve** this problem, it just ends with more failures caused by this problem. > So we can prevent most of the issues without touching deployment descriptors. > Therefore, I think it makes sense to implement it in this PR. Sorry for changing the decision. I am overall fine to put the check within this PR. However, may I ask how it is different from we have this PR as it is, and I fire a follow-up one to null-out the parent? In this PR, my main purpose is to introduce a different result partition type, and scheduler changes are based upon this new type. That's the main reason I prefer to do it in a follow-up PR otherwise the scheduler part is blocked. And also, it is easier to review, and for me to focus on tests after null-out parent as well. > Another concern is a potential resource leak if downstream continuously fail without notifying the upstream (instances of CreditBasedSequenceNumberingViewReader will accumulate). Can you create a follow-up ticket for that? > This can be addressed by firing user event (see PartitionRequestQueue.userEventTriggered). Yes, that's a good point. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #13802: [FLINK-19793][connector-kafka] Harden KafkaTableITCase.testKafkaSourceSinkWithMetadata

flinkbot commented on pull request #13802: URL: https://github.com/apache/flink/pull/13802#issuecomment-716973857 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 25256d07a44b5e60b2f699a73220d6ea4a5d44f1 (Tue Oct 27 04:32:20 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-19793) KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

[

https://issues.apache.org/jira/browse/FLINK-19793?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-19793:

---

Labels: pull-request-available test-stability (was: test-stability)

> KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

> -

>

> Key: FLINK-19793

> URL: https://issues.apache.org/jira/browse/FLINK-19793

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Table SQL / Ecosystem

>Affects Versions: 1.12.0

>Reporter: Till Rohrmann

>Assignee: Timo Walther

>Priority: Blocker

> Labels: pull-request-available, test-stability

> Fix For: 1.12.0

>

>

> The {{KafkaTableITCase.testKafkaSourceSinkWithMetadata}} seems to fail on AZP:

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=8197=logs=c5f0071e-1851-543e-9a45-9ac140befc32=1fb1a56f-e8b5-5a82-00a0-a2db7757b4f5

> {code}

> Expected: k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> but: was 1,1,CreateTime,2020-03-08T13:12:11.123,0,0,{k1=[B@4ea4e0f3,

> k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] twalthr opened a new pull request #13802: [FLINK-19793][connector-kafka] Harden KafkaTableITCase.testKafkaSourceSinkWithMetadata

twalthr opened a new pull request #13802: URL: https://github.com/apache/flink/pull/13802 ## What is the purpose of the change Hardens the KafkaTableITCase by further improving the new test utilities and ignoring non-deterministic metadata columns. ## Brief change log - Offer an unordered list of row deep equals with matcher - Remove offset from test ## Verifying this change This change added tests and can be verified as follows: `RowTest` ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: yes - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? not applicable This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-19684) The Jdbc-connector's 'lookup.max-retries' option implementation is different from the meaning

[

https://issues.apache.org/jira/browse/FLINK-19684?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jark Wu reassigned FLINK-19684:

---

Assignee: CaoZhen

> The Jdbc-connector's 'lookup.max-retries' option implementation is different

> from the meaning

> --

>

> Key: FLINK-19684

> URL: https://issues.apache.org/jira/browse/FLINK-19684

> Project: Flink

> Issue Type: Bug

> Components: Connectors / JDBC

>Reporter: CaoZhen

>Assignee: CaoZhen

>Priority: Minor

> Labels: pull-request-available

>

>

> The code of 'lookup.max-retries' option :

> {code:java}

> for (int retry = 1; retry <= maxRetryTimes; retry++) {

> statement.clearParameters();

> .

> }

> {code}

> From the code, If this option is set to 0, the JDBC query will not be

> executed.

>

> From documents, the max retry times if lookup database failed. [1]

> When set to 0, there is a query, but no retry.

>

> So,the code of 'lookup.max-retries' option should be:

> {code:java}

> for (int retry = 0; retry <= maxRetryTimes; retry++) {

> statement.clearParameters();

> .

> }

> {code}

>

>

> [1]

> https://ci.apache.org/projects/flink/flink-docs-release-1.11/dev/table/connectors/jdbc.html#lookup-max-retries

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (FLINK-19819) SourceReaderBase supports limit push down

Jingsong Lee created FLINK-19819: Summary: SourceReaderBase supports limit push down Key: FLINK-19819 URL: https://issues.apache.org/jira/browse/FLINK-19819 Project: Flink Issue Type: Improvement Components: Connectors / Common Reporter: Jingsong Lee Fix For: 1.12.0 User requirement: Users need to look at a few random pieces of data in a table to see what the data looks like. So users often use the SQL: "select * from table limit 10" For a large table, expect to end soon because only a few pieces of data are queried. For DataStream or BoundedStream, they are push based execution models, so the downstream cannot control the end of source operator. We need push down limit to source operator, so that source operator can end early. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] godfreyhe commented on pull request #13793: [FLINK-19811][table-planner-blink] Simplify SEARCHes in conjunctions in FlinkRexUtil#simplify

godfreyhe commented on pull request #13793: URL: https://github.com/apache/flink/pull/13793#issuecomment-716967458 update the title as "Simplify SEARCHes in FlinkRexUtil#simplify" This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

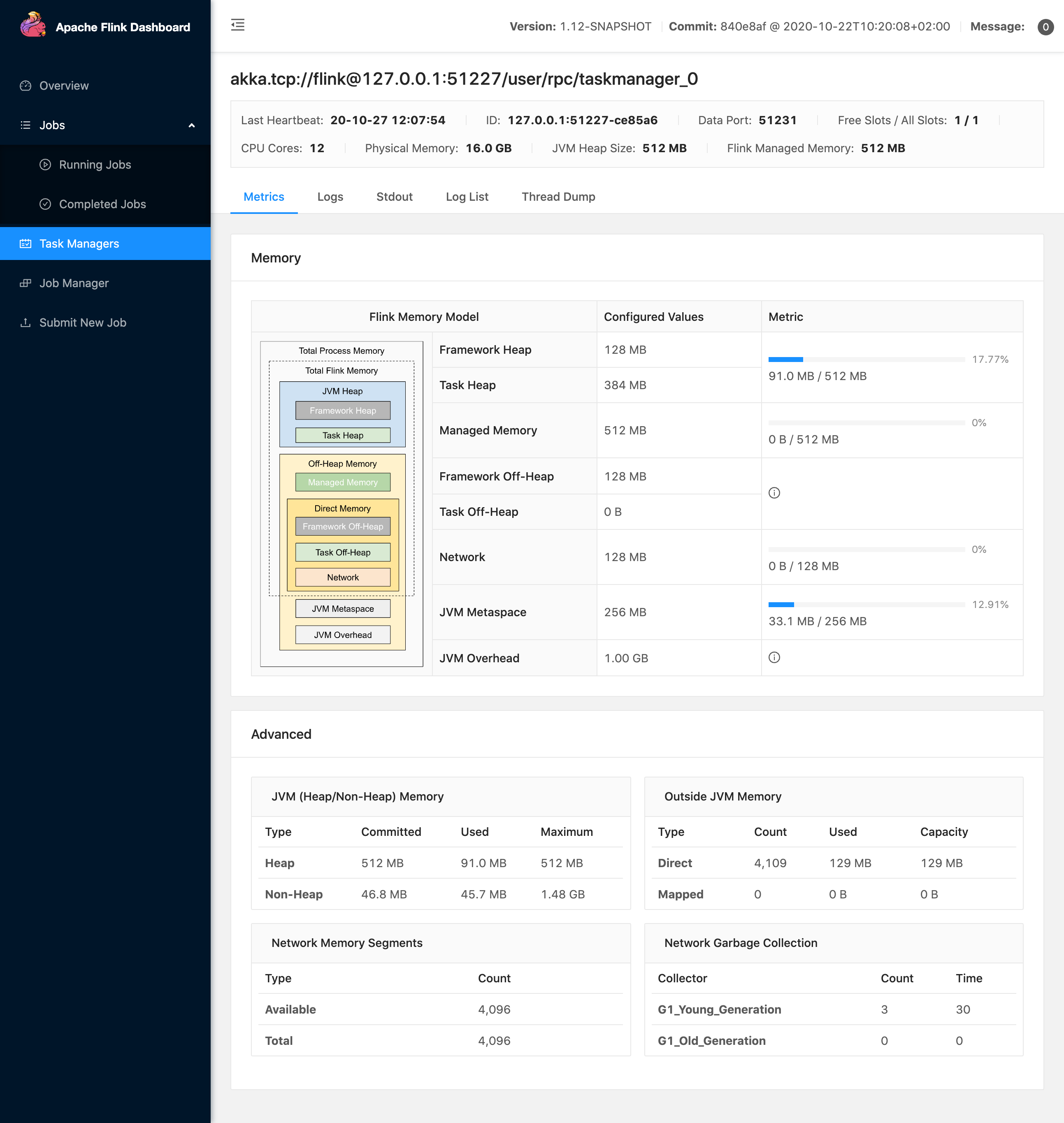

[GitHub] [flink] vthinkxie edited a comment on pull request #13786: [FLINK-19764] Add More Metrics to TaskManager in Web UI

vthinkxie edited a comment on pull request #13786: URL: https://github.com/apache/flink/pull/13786#issuecomment-716966483 Hi @XComp fixed, thanks for your comments new screenshot  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] vthinkxie commented on pull request #13786: [FLINK-19764] Add More Metrics to TaskManager in Web UI

vthinkxie commented on pull request #13786: URL: https://github.com/apache/flink/pull/13786#issuecomment-716966483 Hi @XComp fixed, thanks for your comments This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wuchong commented on pull request #13717: [FLINK-19723][Connector/JDBC] Solve the problem of repeated data submission in the failure retry

wuchong commented on pull request #13717: URL: https://github.com/apache/flink/pull/13717#issuecomment-716965007 Could you add an unit test in `JdbcDynamicOutputFormatTest` to verify this bug fix? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wuchong commented on pull request #13721: [FLINK-19694][table] Support Upsert ChangelogMode for ScanTableSource

wuchong commented on pull request #13721: URL: https://github.com/apache/flink/pull/13721#issuecomment-716944858 Hi @godfreyhe , I have added the plan test as we discussed offline, and I did find a bug. Appreciate if you can have another look . This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-19793) KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

[

https://issues.apache.org/jira/browse/FLINK-19793?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17221093#comment-17221093

]

Timo Walther commented on FLINK-19793:

--

Will take care of this.

> KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

> -

>

> Key: FLINK-19793

> URL: https://issues.apache.org/jira/browse/FLINK-19793

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Table SQL / Ecosystem

>Affects Versions: 1.12.0

>Reporter: Till Rohrmann

>Assignee: Timo Walther

>Priority: Blocker

> Labels: test-stability

> Fix For: 1.12.0

>

>

> The {{KafkaTableITCase.testKafkaSourceSinkWithMetadata}} seems to fail on AZP:

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=8197=logs=c5f0071e-1851-543e-9a45-9ac140befc32=1fb1a56f-e8b5-5a82-00a0-a2db7757b4f5

> {code}

> Expected: k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> but: was 1,1,CreateTime,2020-03-08T13:12:11.123,0,0,{k1=[B@4ea4e0f3,

> k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Assigned] (FLINK-19793) KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

[

https://issues.apache.org/jira/browse/FLINK-19793?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Timo Walther reassigned FLINK-19793:

Assignee: Timo Walther

> KafkaTableITCase.testKafkaSourceSinkWithMetadata fails on AZP

> -

>

> Key: FLINK-19793

> URL: https://issues.apache.org/jira/browse/FLINK-19793

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Table SQL / Ecosystem

>Affects Versions: 1.12.0

>Reporter: Till Rohrmann

>Assignee: Timo Walther

>Priority: Blocker

> Labels: test-stability

> Fix For: 1.12.0

>

>

> The {{KafkaTableITCase.testKafkaSourceSinkWithMetadata}} seems to fail on AZP:

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=8197=logs=c5f0071e-1851-543e-9a45-9ac140befc32=1fb1a56f-e8b5-5a82-00a0-a2db7757b4f5

> {code}

> Expected: k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> but: was 1,1,CreateTime,2020-03-08T13:12:11.123,0,0,{k1=[B@4ea4e0f3,

> k2=[B@7c9ecd9e},0,metadata_topic_avro,true>

> 2,2,CreateTime,2020-03-09T13:12:11.123,1,0,{},0,metadata_topic_avro,false>

> k2=[B@4af44e42},0,metadata_topic_avro,true>

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-19818) ArrayIndexOutOfBoundsException occus when the source table have nest json

[

https://issues.apache.org/jira/browse/FLINK-19818?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

shizhengchao updated FLINK-19818:

-

Description:

I get an *ArrayIndexOutOfBoundsException* , when my table source have nest

json. as the follows is my test:

{code:sql}

CREATE TABLE Orders (

nest ROW<

idBIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

>,

proctime AS PROCTIME()

) WITH (

'connector' = 'kafka-0.11',

'topic' = 'Orders',

'properties.bootstrap.servers' = 'localhost:9092',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'latest-offset',

'format' = 'json'

);

CREATE TABLE print (

orderId BIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

) WITH (

'connector' = 'print'

);

CREATE VIEW testView AS

SELECT

id,

consumerName,

price,

productName

FROM (

SELECT * FROM Orders

);

INSERT INTO print

SELECT

*

FROM testView;

{code}

The following is the exception of flink:

{code}

Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: -1

at java.util.ArrayList.elementData(ArrayList.java:422)

at java.util.ArrayList.get(ArrayList.java:435)

at

org.apache.calcite.sql.validate.SelectNamespace.getMonotonicity(SelectNamespace.java:73)

at

org.apache.calcite.sql.SqlIdentifier.getMonotonicity(SqlIdentifier.java:375)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectList(SqlToRelConverter.java:4132)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectImpl(SqlToRelConverter.java:685)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelect(SqlToRelConverter.java:642)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertQueryRecursive(SqlToRelConverter.java:3345)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertQuery(SqlToRelConverter.java:568)

at

org.apache.flink.table.planner.calcite.FlinkPlannerImpl.org$apache$flink$table$planner$calcite$FlinkPlannerImpl$$rel(FlinkPlannerImpl.scala:164)

at

org.apache.flink.table.planner.calcite.FlinkPlannerImpl.rel(FlinkPlannerImpl.scala:151)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.toQueryOperation(SqlToOperationConverter.java:789)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convertViewQuery(SqlToOperationConverter.java:696)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convertCreateView(SqlToOperationConverter.java:665)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convert(SqlToOperationConverter.java:228)

at

org.apache.flink.table.planner.delegation.ParserImpl.parse(ParserImpl.java:78)

at

org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:684)

at

com.fcbox.streaming.sql.submit.StreamingJob.callExecuteSql(StreamingJob.java:239)

at

com.fcbox.streaming.sql.submit.StreamingJob.callCommand(StreamingJob.java:207)

at

com.fcbox.streaming.sql.submit.StreamingJob.run(StreamingJob.java:133)

at

com.fcbox.streaming.sql.submit.StreamingJob.main(StreamingJob.java:77)

{code}

was:

I get an *ArrayIndexOutOfBoundsException* , when my table source have nest

json. as the follows is my test:

{code:sql}

CREATE TABLE Orders (

nest ROW<

idBIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

>,

proctime AS PROCTIME()

) WITH (

'connector' = 'kafka-0.11',

'topic' = 'Orders',

'properties.bootstrap.servers' = 'localhost:9092',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'latest-offset',

'format' = 'json'

);

CREATE TABLE print (

orderId BIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

) WITH (

'connector' = 'print'

);

CREATE VIEW testView AS

SELECT

id,

consumerName,

price,

productName

FROM (

SELECT * FROM Orders

);

INSERT INTO print

SELECT

*

FROM testView;

{code}

The following is the exception of flink:

{code}

Unable to find source-code formatter for language: log. Available languages

are: actionscript, ada, applescript, bash, c, c#, c++, cpp, css, erlang, go,

groovy, haskell, html, java, javascript, js, json, lua, none, nyan, objc, perl,

php, python, r, rainbow, ruby, scala, sh, sql, swift, visualbasic, xml,

yamlException in thread "main" java.lang.ArrayIndexOutOfBoundsException: -1

at java.util.ArrayList.elementData(ArrayList.java:422)

at java.util.ArrayList.get(ArrayList.java:435)

at

org.apache.calcite.sql.validate.SelectNamespace.getMonotonicity(SelectNamespace.java:73)

at

org.apache.calcite.sql.SqlIdentifier.getMonotonicity(SqlIdentifier.java:375)

at

[jira] [Updated] (FLINK-19818) ArrayIndexOutOfBoundsException occus when the source table have nest json

[

https://issues.apache.org/jira/browse/FLINK-19818?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

shizhengchao updated FLINK-19818:

-

Description:

I get an *ArrayIndexOutOfBoundsException* , when my table source have nest

json. as the follows is my test:

{code:sql}

CREATE TABLE Orders (

nest ROW<

idBIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

>,

proctime AS PROCTIME()

) WITH (

'connector' = 'kafka-0.11',

'topic' = 'Orders',

'properties.bootstrap.servers' = 'localhost:9092',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'latest-offset',

'format' = 'json'

);

CREATE TABLE print (

orderId BIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

) WITH (

'connector' = 'print'

);

CREATE VIEW testView AS

SELECT

id,

consumerName,

price,

productName

FROM (

SELECT * FROM Orders

);

INSERT INTO print

SELECT

*

FROM testView;

{code}

The following is the exception of flink:

{code}

Unable to find source-code formatter for language: log. Available languages

are: actionscript, ada, applescript, bash, c, c#, c++, cpp, css, erlang, go,

groovy, haskell, html, java, javascript, js, json, lua, none, nyan, objc, perl,

php, python, r, rainbow, ruby, scala, sh, sql, swift, visualbasic, xml,

yamlException in thread "main" java.lang.ArrayIndexOutOfBoundsException: -1

at java.util.ArrayList.elementData(ArrayList.java:422)

at java.util.ArrayList.get(ArrayList.java:435)

at

org.apache.calcite.sql.validate.SelectNamespace.getMonotonicity(SelectNamespace.java:73)

at

org.apache.calcite.sql.SqlIdentifier.getMonotonicity(SqlIdentifier.java:375)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectList(SqlToRelConverter.java:4132)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectImpl(SqlToRelConverter.java:685)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertSelect(SqlToRelConverter.java:642)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertQueryRecursive(SqlToRelConverter.java:3345)

at

org.apache.calcite.sql2rel.SqlToRelConverter.convertQuery(SqlToRelConverter.java:568)

at

org.apache.flink.table.planner.calcite.FlinkPlannerImpl.org$apache$flink$table$planner$calcite$FlinkPlannerImpl$$rel(FlinkPlannerImpl.scala:164)

at

org.apache.flink.table.planner.calcite.FlinkPlannerImpl.rel(FlinkPlannerImpl.scala:151)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.toQueryOperation(SqlToOperationConverter.java:789)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convertViewQuery(SqlToOperationConverter.java:696)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convertCreateView(SqlToOperationConverter.java:665)

at

org.apache.flink.table.planner.operations.SqlToOperationConverter.convert(SqlToOperationConverter.java:228)

at

org.apache.flink.table.planner.delegation.ParserImpl.parse(ParserImpl.java:78)

at

org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:684)

at

com.fcbox.streaming.sql.submit.StreamingJob.callExecuteSql(StreamingJob.java:239)

at

com.fcbox.streaming.sql.submit.StreamingJob.callCommand(StreamingJob.java:207)

at

com.fcbox.streaming.sql.submit.StreamingJob.run(StreamingJob.java:133)

at

com.fcbox.streaming.sql.submit.StreamingJob.main(StreamingJob.java:77)

{code}

was:

I get an *ArrayIndexOutOfBoundsException* in *Interval Joins*, when my table

source have nest json. as the follows is my test:

{code:sql}

CREATE TABLE Orders (

nest ROW<

idBIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING

>,

proctime AS PROCTIME()

) WITH (

'connector' = 'kafka-0.11',

'topic' = 'Orders',

'properties.bootstrap.servers' = 'localhost:9092',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'latest-offset',

'format' = 'json'

);

DROP TABLE IF EXISTS Shipments;

CREATE TABLE Shipments (

idBIGINT,

orderId BIGINT,

originSTRING,

destnationSTRING,

isArrived BOOLEAN,

proctime AS PROCTIME()

) WITH (

'connector' = 'kafka-0.11',

'topic' = 'Shipments',

'properties.bootstrap.servers' = 'localhost:9092',

'properties.group.id' = 'testGroup',

'scan.startup.mode' = 'latest-offset',

'format' = 'json'

);

DROP TABLE IF EXISTS print;

CREATE TABLE print (

orderId BIGINT,

consumerName STRING,

price DECIMAL(10, 5),

productName STRING,

originSTRING,

destnationSTRING,

isArrived BOOLEAN

) WITH (

'connector' = 'print'

);

DROP VIEW IF EXISTS IntervalJoinView;

CREATE VIEW IntervalJoinView AS

SELECT

o.id,

o.consumerName,

o.price,

o.productName,

[jira] [Commented] (FLINK-19768) The shell "./yarn-session.sh " not use log4j-session.properties , it use log4j.properties

[ https://issues.apache.org/jira/browse/FLINK-19768?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17221121#comment-17221121 ] YUJIANBO commented on FLINK-19768: -- Thank you for your reply! I want to ask a question. Our company plans to use yarn-session model, but we don't know how to Distinguish between different task logs on JobManager Log. I'm looking forward to your reply。 Thank you! > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > -- > > Key: FLINK-19768 > URL: https://issues.apache.org/jira/browse/FLINK-19768 > Project: Flink > Issue Type: Bug > Components: Client / Job Submission, Deployment / YARN >Affects Versions: 1.11.2 >Reporter: YUJIANBO >Priority: Major > > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > My Flink Job UI shows the $internal.yarn.log-config-file is > "/usr/local/flink-1.11.2/conf/log4j.properties",is it a bug? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Issue Comment Deleted] (FLINK-19768) The shell "./yarn-session.sh " not use log4j-session.properties , it use log4j.properties

[ https://issues.apache.org/jira/browse/FLINK-19768?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] YUJIANBO updated FLINK-19768: - Comment: was deleted (was: Thank you for your reply! I want to ask a question. Our company plans to use yarn-session model, but we don't know how to Distinguish between different task logs on JobManager Log. I'm looking forward to your reply。 Thank you!) > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > -- > > Key: FLINK-19768 > URL: https://issues.apache.org/jira/browse/FLINK-19768 > Project: Flink > Issue Type: Bug > Components: Client / Job Submission, Deployment / YARN >Affects Versions: 1.11.2 >Reporter: YUJIANBO >Priority: Major > > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > My Flink Job UI shows the $internal.yarn.log-config-file is > "/usr/local/flink-1.11.2/conf/log4j.properties",is it a bug? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-19818) ArrayIndexOutOfBoundsException occus when the source table have nest json

[

https://issues.apache.org/jira/browse/FLINK-19818?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

shizhengchao updated FLINK-19818:

-

Summary: ArrayIndexOutOfBoundsException occus when the source table have

nest json (was: ArrayIndexOutOfBoundsException occus in 'Interval Joins' when

the source table have nest json)

> ArrayIndexOutOfBoundsException occus when the source table have nest json

> --

>

> Key: FLINK-19818

> URL: https://issues.apache.org/jira/browse/FLINK-19818

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.11.2

>Reporter: shizhengchao

>Priority: Major

>

> I get an *ArrayIndexOutOfBoundsException* in *Interval Joins*, when my table

> source have nest json. as the follows is my test:

> {code:sql}

> CREATE TABLE Orders (

> nest ROW<

> idBIGINT,

> consumerName STRING,

> price DECIMAL(10, 5),

> productName STRING

> >,

> proctime AS PROCTIME()

> ) WITH (

> 'connector' = 'kafka-0.11',

> 'topic' = 'Orders',

> 'properties.bootstrap.servers' = 'localhost:9092',

> 'properties.group.id' = 'testGroup',

> 'scan.startup.mode' = 'latest-offset',

> 'format' = 'json'

> );

> DROP TABLE IF EXISTS Shipments;

> CREATE TABLE Shipments (

> idBIGINT,

> orderId BIGINT,

> originSTRING,

> destnationSTRING,

> isArrived BOOLEAN,

> proctime AS PROCTIME()

> ) WITH (

> 'connector' = 'kafka-0.11',

> 'topic' = 'Shipments',

> 'properties.bootstrap.servers' = 'localhost:9092',

> 'properties.group.id' = 'testGroup',

> 'scan.startup.mode' = 'latest-offset',

> 'format' = 'json'

> );

> DROP TABLE IF EXISTS print;

> CREATE TABLE print (

> orderId BIGINT,

> consumerName STRING,

> price DECIMAL(10, 5),

> productName STRING,

> originSTRING,

> destnationSTRING,

> isArrived BOOLEAN

> ) WITH (

> 'connector' = 'print'

> );

> DROP VIEW IF EXISTS IntervalJoinView;

> CREATE VIEW IntervalJoinView AS

> SELECT

> o.id,

> o.consumerName,

> o.price,

> o.productName,

> s.origin,

> s.destnation,

> s.isArrived

> FROM

> (SELECT * FROM Orders) o,

> (SELECT * FROM Shipments) s

> WHERE s.orderId = o.id AND o.proctime BETWEEN s.proctime - INTERVAL '4' HOUR

> AND s.proctime;

> INSERT INTO print

> SELECT

> id,

> consumerName,

> price,

> productName,

> origin,

> destnation,

> isArrived

> FROM IntervalJoinView;

> {code}

> The following is the exception of flink:

> {code:log}

> Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: -1

> at java.util.ArrayList.elementData(ArrayList.java:422)

> at java.util.ArrayList.get(ArrayList.java:435)

> at

> org.apache.calcite.sql.validate.SelectNamespace.getMonotonicity(SelectNamespace.java:73)

> at

> org.apache.calcite.sql.SqlIdentifier.getMonotonicity(SqlIdentifier.java:375)

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectList(SqlToRelConverter.java:4132)

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertSelectImpl(SqlToRelConverter.java:685)

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertSelect(SqlToRelConverter.java:642)

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertQueryRecursive(SqlToRelConverter.java:3345)

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertQuery(SqlToRelConverter.java:568)

> at

> org.apache.flink.table.planner.calcite.FlinkPlannerImpl.org$apache$flink$table$planner$calcite$FlinkPlannerImpl$$rel(FlinkPlannerImpl.scala:164)

> at

> org.apache.flink.table.planner.calcite.FlinkPlannerImpl.rel(FlinkPlannerImpl.scala:151)

> at

> org.apache.flink.table.planner.operations.SqlToOperationConverter.toQueryOperation(SqlToOperationConverter.java:789)

> at

> org.apache.flink.table.planner.operations.SqlToOperationConverter.convertViewQuery(SqlToOperationConverter.java:696)

> at

> org.apache.flink.table.planner.operations.SqlToOperationConverter.convertCreateView(SqlToOperationConverter.java:665)

> at

> org.apache.flink.table.planner.operations.SqlToOperationConverter.convert(SqlToOperationConverter.java:228)

> at

> org.apache.flink.table.planner.delegation.ParserImpl.parse(ParserImpl.java:78)

> at

> org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:684)

> at

> com.fcbox.streaming.sql.submit.StreamingJob.callExecuteSql(StreamingJob.java:239)

> at

> com.fcbox.streaming.sql.submit.StreamingJob.callCommand(StreamingJob.java:207)

> at

> com.fcbox.streaming.sql.submit.StreamingJob.run(StreamingJob.java:133)

> at

> com.fcbox.streaming.sql.submit.StreamingJob.main(StreamingJob.java:77)

> {code}

[jira] [Commented] (FLINK-19768) The shell "./yarn-session.sh " not use log4j-session.properties , it use log4j.properties

[ https://issues.apache.org/jira/browse/FLINK-19768?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17221120#comment-17221120 ] YUJIANBO commented on FLINK-19768: -- Thank you for your reply! I want to ask a question. Our company plans to use yarn-session model, but we don't know how to Distinguish between different task logs on JobManager Log. I'm looking forward to your reply。 Thank you! > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > -- > > Key: FLINK-19768 > URL: https://issues.apache.org/jira/browse/FLINK-19768 > Project: Flink > Issue Type: Bug > Components: Client / Job Submission, Deployment / YARN >Affects Versions: 1.11.2 >Reporter: YUJIANBO >Priority: Major > > The shell "./yarn-session.sh " not use log4j-session.properties , it use > log4j.properties > My Flink Job UI shows the $internal.yarn.log-config-file is > "/usr/local/flink-1.11.2/conf/log4j.properties",is it a bug? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot commented on pull request #13801: [FLINK-19213][docs-zh] Update the Chinese documentation

flinkbot commented on pull request #13801: URL: https://github.com/apache/flink/pull/13801#issuecomment-716960915 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 08c397aefda24ff450869e60acfadff81859e669 (Tue Oct 27 03:46:54 UTC 2020) ✅no warnings Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-19213) Update the Chinese documentation

[ https://issues.apache.org/jira/browse/FLINK-19213?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-19213: --- Labels: pull-request-available (was: ) > Update the Chinese documentation > > > Key: FLINK-19213 > URL: https://issues.apache.org/jira/browse/FLINK-19213 > Project: Flink > Issue Type: Sub-task > Components: chinese-translation, Documentation >Reporter: Dawid Wysakowicz >Assignee: jiawen xiao >Priority: Trivial > Labels: pull-request-available > Time Spent: 168h > > We should update the Chinese documentation with the changes introduced in > FLINK-18802 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] xiaoHoly opened a new pull request #13801: [FLINK-19213][docs-zh] Update the Chinese documentation

xiaoHoly opened a new pull request #13801: URL: https://github.com/apache/flink/pull/13801 ## What is the purpose of the change I will translate document avro-confluent.md under formats into document avro-confluent.zh.md. We should update the Chinese documentation with the changes introduced in FLINK-18802 ## Brief change log -translate flink/docs/dev/table/connectors/formats/avro-confluent.zh.md ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (no) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wuchong commented on a change in pull request #13081: [FLINK-18590][json] Support json array explode to multi messages

wuchong commented on a change in pull request #13081:

URL: https://github.com/apache/flink/pull/13081#discussion_r512399078

##

File path:

flink-formats/flink-json/src/main/java/org/apache/flink/formats/json/JsonRowDataDeserializationSchema.java

##

@@ -130,6 +133,39 @@ public RowData deserialize(byte[] message) throws

IOException {

}

}

+ @Override

Review comment:

I still prefer to only keep one implementation, otherwise it's hard to

maintain in the future. We should update the json tests to use collector

methods to have full test coverage.

The Kinesis should migrate to collector method ASAP, rather than hacking

JSON format for Kinesis. What do you think?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #13800: [FLINK-19650][connectors jdbc]Support the limit push down for the Jdb…