[jira] [Updated] (HDDS-3951) Rename the num.write.chunk.thread key

[ https://issues.apache.org/jira/browse/HDDS-3951?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-3951: - Labels: pull-request-available (was: ) > Rename the num.write.chunk.thread key > - > > Key: HDDS-3951 > URL: https://issues.apache.org/jira/browse/HDDS-3951 > Project: Hadoop Distributed Data Store > Issue Type: Improvement > Components: Ozone Datanode >Affects Versions: 0.5.0 >Reporter: maobaolong >Assignee: maobaolong >Priority: Major > Labels: pull-request-available > > dfs.container.ratis.num.write.chunk.thread -> > dfs.container.ratis.num.write.chunk.thread.per.disk -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] maobaolong opened a new pull request #1187: HDDS-3951. Rename the num.write.chunk.thread key.

maobaolong opened a new pull request #1187: URL: https://github.com/apache/hadoop-ozone/pull/1187 ## What changes were proposed in this pull request? Reanme `dfs.container.ratis.num.write.chunk.thread` to `dfs.container.ratis.num.write.chunk.thread.per.disk ` to clearly express the meaning of this key, if it configured to 10, then the thread num would be 10 * numOfDisk. ## What is the link to the Apache JIRA HDDS-3951 ## How was this patch tested? NO Need, just rename config key. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3951) Rename the num.write.chunk.thread key

[ https://issues.apache.org/jira/browse/HDDS-3951?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3951: - Description: dfs.container.ratis.num.write.chunk.thread -> dfs.container.ratis.num.write.chunk.thread.per.disk (was: dfs.container.ratis.num.write.chunk.thread -> dfs.container.ratis.num.write.chunk.thread.per.volume ) > Rename the num.write.chunk.thread key > - > > Key: HDDS-3951 > URL: https://issues.apache.org/jira/browse/HDDS-3951 > Project: Hadoop Distributed Data Store > Issue Type: Improvement > Components: Ozone Datanode >Affects Versions: 0.5.0 >Reporter: maobaolong >Assignee: maobaolong >Priority: Major > > dfs.container.ratis.num.write.chunk.thread -> > dfs.container.ratis.num.write.chunk.thread.per.disk -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] captainzmc commented on a change in pull request #1150: HDDS-3903. OzoneRpcClient support batch rename keys.

captainzmc commented on a change in pull request #1150:

URL: https://github.com/apache/hadoop-ozone/pull/1150#discussion_r452634999

##

File path:

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/response/key/OMKeysRenameResponse.java

##

@@ -0,0 +1,135 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.ozone.om.response.key;

+

+import com.google.common.annotations.VisibleForTesting;

+import com.google.common.base.Optional;

+import org.apache.hadoop.hdds.utils.db.BatchOperation;

+import org.apache.hadoop.hdds.utils.db.Table;

+import org.apache.hadoop.hdds.utils.db.cache.CacheKey;

+import org.apache.hadoop.hdds.utils.db.cache.CacheValue;

+import org.apache.hadoop.ozone.om.OMMetadataManager;

+import org.apache.hadoop.ozone.om.OmRenameKeyInfo;

+import org.apache.hadoop.ozone.om.helpers.OmKeyInfo;

+import org.apache.hadoop.ozone.om.response.CleanupTableInfo;

+import org.apache.hadoop.ozone.om.response.OMClientResponse;

+import

org.apache.hadoop.ozone.protocol.proto.OzoneManagerProtocolProtos.OMResponse;

+

+import javax.annotation.Nonnull;

+import java.io.IOException;

+import java.util.List;

+

+import static org.apache.hadoop.ozone.om.OmMetadataManagerImpl.KEY_TABLE;

+import static

org.apache.hadoop.ozone.om.lock.OzoneManagerLock.Resource.BUCKET_LOCK;

+

+/**

+ * Response for RenameKeys request.

+ */

+@CleanupTableInfo(cleanupTables = {KEY_TABLE})

+public class OMKeysRenameResponse extends OMClientResponse {

+

+ private List renameKeyInfoList;

+ private long trxnLogIndex;

+ private String fromKeyName = null;

+ private String toKeyName = null;

+

+ public OMKeysRenameResponse(@Nonnull OMResponse omResponse,

+ List renameKeyInfoList,

+ long trxnLogIndex) {

+super(omResponse);

+this.renameKeyInfoList = renameKeyInfoList;

+this.trxnLogIndex = trxnLogIndex;

+ }

+

+

+ /**

+ * For when the request is not successful or it is a replay transaction.

+ * For a successful request, the other constructor should be used.

+ */

+ public OMKeysRenameResponse(@Nonnull OMResponse omResponse) {

+super(omResponse);

+checkStatusNotOK();

+ }

+

+ @Override

+ public void addToDBBatch(OMMetadataManager omMetadataManager,

+ BatchOperation batchOperation) throws IOException {

+boolean acquiredLock = false;

+for (OmRenameKeyInfo omRenameKeyInfo : renameKeyInfoList) {

+ String volumeName = omRenameKeyInfo.getNewKeyInfo().getVolumeName();

+ String bucketName = omRenameKeyInfo.getNewKeyInfo().getBucketName();

+ fromKeyName = omRenameKeyInfo.getFromKeyName();

+ OmKeyInfo newKeyInfo = omRenameKeyInfo.getNewKeyInfo();

+ toKeyName = newKeyInfo.getKeyName();

+ Table keyTable = omMetadataManager

+ .getKeyTable();

+ try {

+acquiredLock =

+omMetadataManager.getLock().acquireWriteLock(BUCKET_LOCK,

+volumeName, bucketName);

+// If toKeyName is null, then we need to only delete the fromKeyName

+// from KeyTable. This is the case of replay where toKey exists but

+// fromKey has not been deleted.

+if (deleteFromKeyOnly()) {

Review comment:

Thanks Bharat for the suggestion, I have taken a close look at the

implementation of #1169 with some very nice changes. In this PR I will

synchronize the #1169 changes here to make sure they are implemented the same.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-3951) Rename the num.write.chunk.thread key

maobaolong created HDDS-3951: Summary: Rename the num.write.chunk.thread key Key: HDDS-3951 URL: https://issues.apache.org/jira/browse/HDDS-3951 Project: Hadoop Distributed Data Store Issue Type: Improvement Components: Ozone Datanode Affects Versions: 0.5.0 Reporter: maobaolong Assignee: maobaolong dfs.container.ratis.num.write.chunk.thread -> dfs.container.ratis.num.write.chunk.thread.per.volume -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3813) Integrate with ratis 1.0.0 release binaries for ozone 0.6.0 release

[ https://issues.apache.org/jira/browse/HDDS-3813?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiaoyu Yao updated HDDS-3813: - Summary: Integrate with ratis 1.0.0 release binaries for ozone 0.6.0 release (was: Integrate with ratis 0.6.0 release binaries for ozone 0.6.0 release) > Integrate with ratis 1.0.0 release binaries for ozone 0.6.0 release > --- > > Key: HDDS-3813 > URL: https://issues.apache.org/jira/browse/HDDS-3813 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: Sammi Chen >Assignee: Lokesh Jain >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3833) Use Pipeline choose policy to choose pipeline from exist pipeline list

[ https://issues.apache.org/jira/browse/HDDS-3833?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3833: - Target Version/s: 0.7.0 > Use Pipeline choose policy to choose pipeline from exist pipeline list > -- > > Key: HDDS-3833 > URL: https://issues.apache.org/jira/browse/HDDS-3833 > Project: Hadoop Distributed Data Store > Issue Type: New Feature >Reporter: maobaolong >Assignee: maobaolong >Priority: Major > Labels: pull-request-available > > With this policy driven mode, we can develop various pipeline choosing policy > to satisfy complex production environment. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3885) Create Datanode home page

[ https://issues.apache.org/jira/browse/HDDS-3885?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiaoyu Yao updated HDDS-3885: - Target Version/s: 0.6.0 Affects Version/s: (was: 0.6.0) 0.5.0 > Create Datanode home page > - > > Key: HDDS-3885 > URL: https://issues.apache.org/jira/browse/HDDS-3885 > Project: Hadoop Distributed Data Store > Issue Type: Improvement > Components: Ozone Datanode >Affects Versions: 0.5.0 >Reporter: maobaolong >Assignee: maobaolong >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3941) Enable core dump when crash in C++

[ https://issues.apache.org/jira/browse/HDDS-3941?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-3941: - Labels: pull-request-available (was: ) > Enable core dump when crash in C++ > -- > > Key: HDDS-3941 > URL: https://issues.apache.org/jira/browse/HDDS-3941 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] runzhiwang opened a new pull request #1186: HDDS-3941. Enable core dump when crash in C++

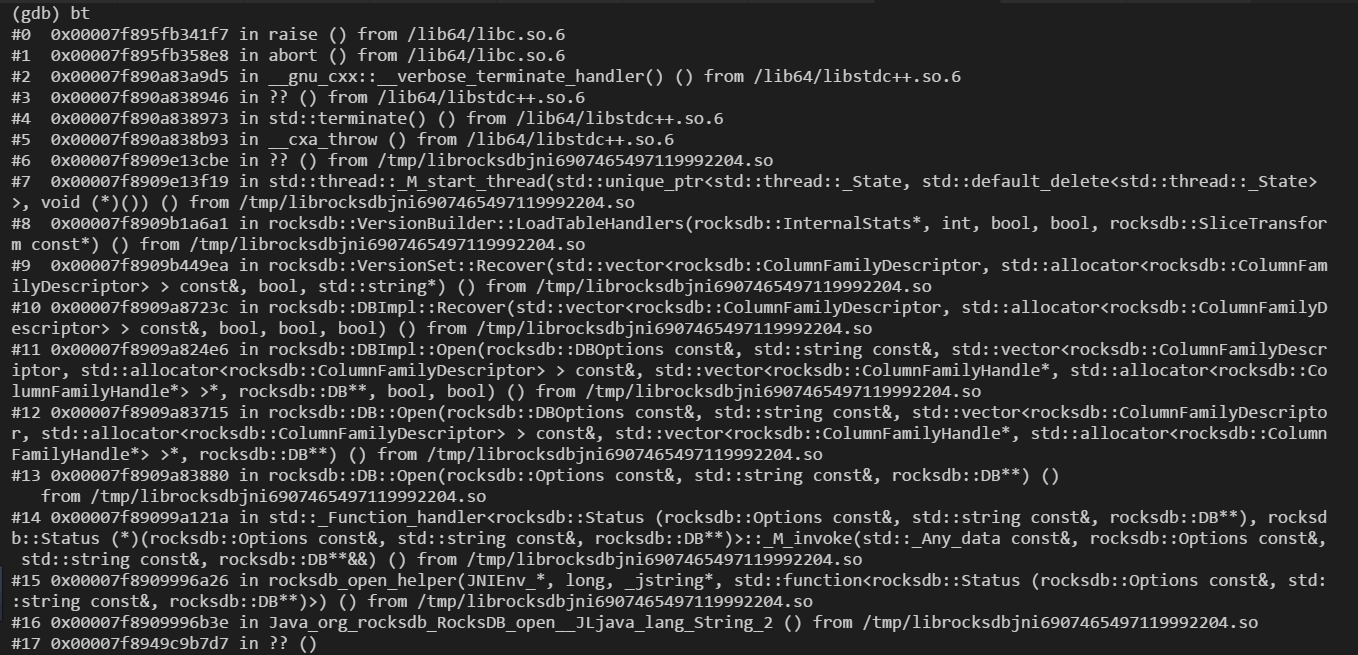

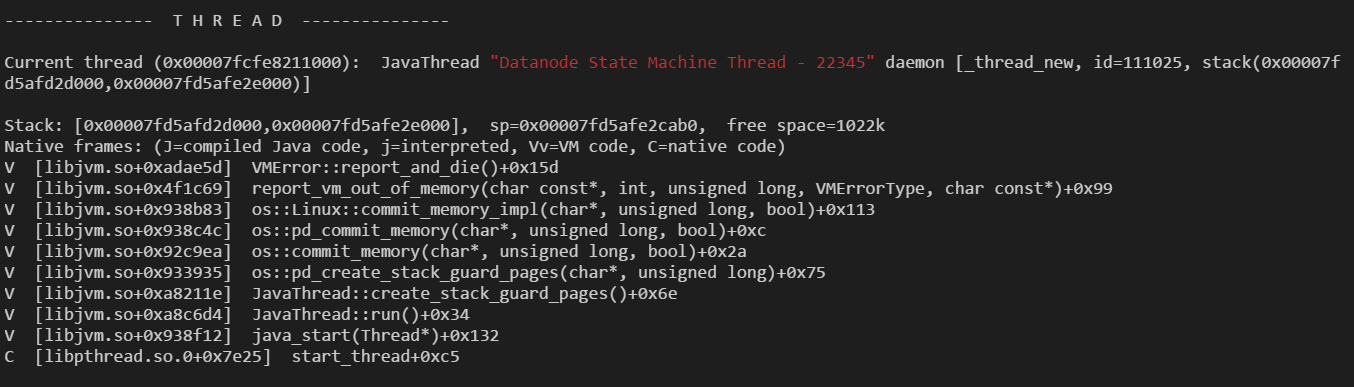

runzhiwang opened a new pull request #1186: URL: https://github.com/apache/hadoop-ozone/pull/1186 ## What changes were proposed in this pull request? **What's the problem ?** This PR is related to HDDS-3933. Fix memory leak because of too many Datanode State Machine Thread. When memory leak, Datanode most time generates core.pid because it crash in Rocksdb when create new thread, as the image shows, and generates crash log rarely.  But because the default value of `core file size` if zero, so core.pid can not be generated. So when Datanode crash in Rocksdb, we can not get any information about why it crashed. **How to fix ?** Set `ulimit -c unlimited` to enable core dump when crash in RocksDB. ## What is the link to the Apache JIRA https://issues.apache.org/jira/browse/HDDS-3941 ## How was this patch tested? Existed UT. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

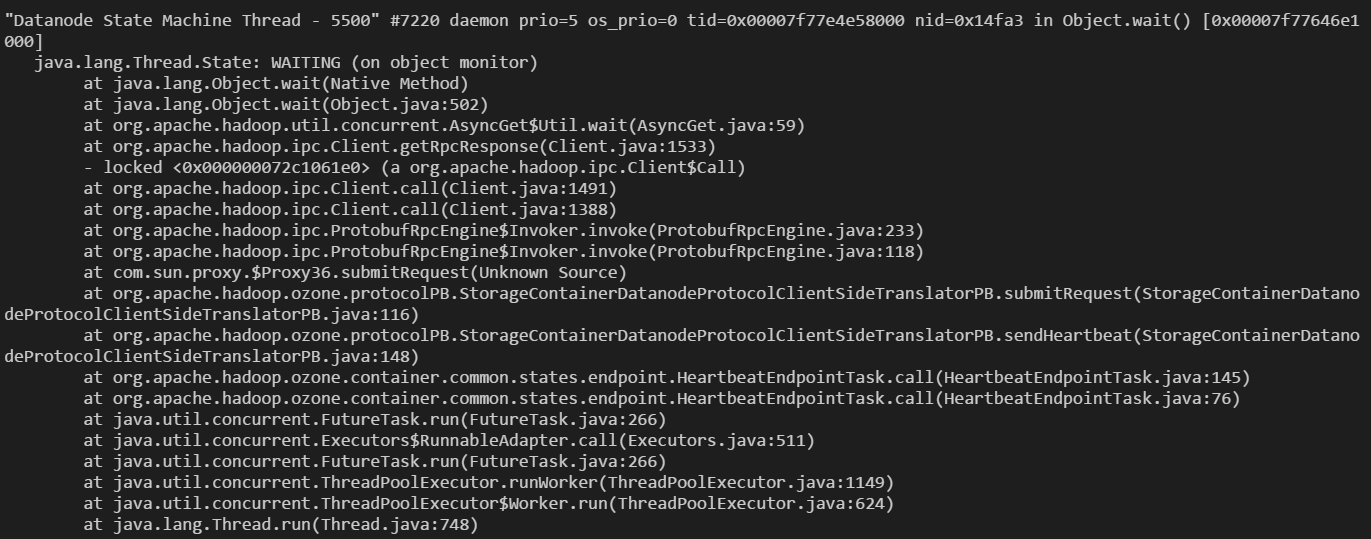

[jira] [Updated] (HDDS-3933) Fix memory leak because of too many Datanode State Machine Thread

[ https://issues.apache.org/jira/browse/HDDS-3933?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-3933: - Labels: pull-request-available (was: ) > Fix memory leak because of too many Datanode State Machine Thread > - > > Key: HDDS-3933 > URL: https://issues.apache.org/jira/browse/HDDS-3933 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > Attachments: jstack.txt, screenshot-1.png, screenshot-2.png, > screenshot-3.png > > > When create 22345th Datanode State Machine Thread, OOM happened. > !screenshot-1.png! > !screenshot-2.png! > !screenshot-3.png! -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] runzhiwang opened a new pull request #1185: HDDS-3933. Fix memory leak because of too many Datanode State Machine Thread

runzhiwang opened a new pull request #1185: URL: https://github.com/apache/hadoop-ozone/pull/1185 ## What changes were proposed in this pull request? **What's problem ?** Datanode creates more than 20K Datanode State Machine Thread, then OOM happened.  **What's the reason ?** 20K Datanode State Machine Thread were created by newCachedThreadPool  Almost all of them were wait lock.  Only one Datanode State Machine Thread got the lock, and block when submitRequest. Because this thread was blocked and can not free the lock, newCachedThreadPool will create new thread infinitely.  **How to fix ?** 1. Avoid use newCachedThreadPool, because it will create new thread infinitely, if no thread available in pool. 2. Cancel future when task time out. ## What is the link to the Apache JIRA https://issues.apache.org/jira/browse/HDDS-3933 ## How was this patch tested? Existed UT. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #1169: HDDS-3930. Fix OMKeyDeletesRequest.

bharatviswa504 commented on a change in pull request #1169:

URL: https://github.com/apache/hadoop-ozone/pull/1169#discussion_r452613394

##

File path: hadoop-ozone/interface-client/src/main/proto/OmClientProtocol.proto

##

@@ -848,7 +850,18 @@ message DeleteKeyRequest {

}

message DeleteKeysRequest {

-repeated KeyArgs keyArgs = 1;

+optional DeleteKeyArgs deleteKeys = 1;

Review comment:

From proto 3 onwards all fields are optional.

So, followed that approach and declared optional. (In future if something is

changed, we can still be backward compatible)

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] bharatviswa504 commented on a change in pull request #1169: HDDS-3930. Fix OMKeyDeletesRequest.

bharatviswa504 commented on a change in pull request #1169:

URL: https://github.com/apache/hadoop-ozone/pull/1169#discussion_r452613394

##

File path: hadoop-ozone/interface-client/src/main/proto/OmClientProtocol.proto

##

@@ -848,7 +850,18 @@ message DeleteKeyRequest {

}

message DeleteKeysRequest {

-repeated KeyArgs keyArgs = 1;

+optional DeleteKeyArgs deleteKeys = 1;

Review comment:

In proto 3 onwards all fields are optional.

So, followed that approach and declared optional. (In future if something is

changed, we can still be backward compatible)

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Issue Comment Deleted] (HDDS-3841) FLAKY-UT: TestSecureOzoneRpcClient timeout

[ https://issues.apache.org/jira/browse/HDDS-3841?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3841: - Comment: was deleted (was: [WARNING] Tests run: 68, Failures: 0, Errors: 0, Skipped: 3, Time elapsed: 58.105 s - in org.apache.hadoop.ozone.client.rpc.TestSecureOzoneRpcClient [INFO] [INFO] Results: [INFO] [ERROR] Failures: [ERROR] TestDeleteWithSlowFollower.testDeleteKeyWithSlowFollower:225) > FLAKY-UT: TestSecureOzoneRpcClient timeout > -- > > Key: HDDS-3841 > URL: https://issues.apache.org/jira/browse/HDDS-3841 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: test >Affects Versions: 0.7.0 >Reporter: maobaolong >Priority: Major > > [WARNING] Tests run: 68, Failures: 0, Errors: 0, Skipped: 3, Time elapsed: > 58.105 s - in org.apache.hadoop.ozone.client.rpc.TestSecureOzoneRpcClient > [INFO] > [INFO] Results: > [INFO] > [ERROR] Failures: > [ERROR] TestDeleteWithSlowFollower.testDeleteKeyWithSlowFollower:225 -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3841) FLAKY-UT: TestSecureOzoneRpcClient timeout

[ https://issues.apache.org/jira/browse/HDDS-3841?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3841: - Description: [WARNING] Tests run: 68, Failures: 0, Errors: 0, Skipped: 3, Time elapsed: 58.105 s - in org.apache.hadoop.ozone.client.rpc.TestSecureOzoneRpcClient [INFO] [INFO] Results: [INFO] [ERROR] Failures: [ERROR] TestDeleteWithSlowFollower.testDeleteKeyWithSlowFollower:225 was:If a failure test appeared in your CI checks, and you are sure it is not relation with your PR, so, paste the stale test log here. > FLAKY-UT: TestSecureOzoneRpcClient timeout > -- > > Key: HDDS-3841 > URL: https://issues.apache.org/jira/browse/HDDS-3841 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: test >Affects Versions: 0.7.0 >Reporter: maobaolong >Priority: Major > > [WARNING] Tests run: 68, Failures: 0, Errors: 0, Skipped: 3, Time elapsed: > 58.105 s - in org.apache.hadoop.ozone.client.rpc.TestSecureOzoneRpcClient > [INFO] > [INFO] Results: > [INFO] > [ERROR] Failures: > [ERROR] TestDeleteWithSlowFollower.testDeleteKeyWithSlowFollower:225 -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3841) FLAKY-UT: TestSecureOzoneRpcClient timeout

[ https://issues.apache.org/jira/browse/HDDS-3841?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-3841: - Summary: FLAKY-UT: TestSecureOzoneRpcClient timeout (was: Stale tests(timeout or other reason)) > FLAKY-UT: TestSecureOzoneRpcClient timeout > -- > > Key: HDDS-3841 > URL: https://issues.apache.org/jira/browse/HDDS-3841 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: test >Affects Versions: 0.7.0 >Reporter: maobaolong >Priority: Major > > If a failure test appeared in your CI checks, and you are sure it is not > relation with your PR, so, paste the stale test log here. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Issue Comment Deleted] (HDDS-3841) FLAKY-UT: TestSecureOzoneRpcClient timeout

[

https://issues.apache.org/jira/browse/HDDS-3841?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

maobaolong updated HDDS-3841:

-

Comment: was deleted

(was: {code:}

[INFO] Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[INFO]

Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

Tests run: 1, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 94.969 s <<<

FAILURE! - in

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

testPipelineExclusionWithPipelineFailure(org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay)

Time elapsed: 94.881 s <<< FAILURE!java.lang.AssertionError at

org.junit.Assert.fail(Assert.java:86) at

org.junit.Assert.assertTrue(Assert.java:41) at

org.junit.Assert.assertTrue(Assert.java:52) at

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay.testPipelineExclusionWithPipelineFailure(TestFailureHandlingByClientFlushDelay.java:200)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at

sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498) at

org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

at

org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at

org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

at

org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at

org.junit.internal.runners.statements.FailOnTimeout$StatementThread.run(FailOnTimeout.java:74)

{code}

)

> FLAKY-UT: TestSecureOzoneRpcClient timeout

> --

>

> Key: HDDS-3841

> URL: https://issues.apache.org/jira/browse/HDDS-3841

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Affects Versions: 0.7.0

>Reporter: maobaolong

>Priority: Major

>

> If a failure test appeared in your CI checks, and you are sure it is not

> relation with your PR, so, paste the stale test log here.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HDDS-3841) FLAKY-UT: TestSecureOzoneRpcClient timeout

[

https://issues.apache.org/jira/browse/HDDS-3841?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17141235#comment-17141235

]

maobaolong edited comment on HDDS-3841 at 7/10/20, 4:13 AM:

{code:}

[INFO] Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[INFO]

Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

Tests run: 1, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 94.969 s <<<

FAILURE! - in

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

testPipelineExclusionWithPipelineFailure(org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay)

Time elapsed: 94.881 s <<< FAILURE!java.lang.AssertionError at

org.junit.Assert.fail(Assert.java:86) at

org.junit.Assert.assertTrue(Assert.java:41) at

org.junit.Assert.assertTrue(Assert.java:52) at

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay.testPipelineExclusionWithPipelineFailure(TestFailureHandlingByClientFlushDelay.java:200)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at

sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498) at

org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

at

org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at

org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

at

org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at

org.junit.internal.runners.statements.FailOnTimeout$StatementThread.run(FailOnTimeout.java:74)

{code}

was (Author: maobaolong):

{code:}

[INFO] Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[INFO]

Running

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

Tests run: 1, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 94.969 s <<<

FAILURE! - in

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay[ERROR]

testPipelineExclusionWithPipelineFailure(org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay)

Time elapsed: 94.881 s <<< FAILURE!java.lang.AssertionError at

org.junit.Assert.fail(Assert.java:86) at

org.junit.Assert.assertTrue(Assert.java:41) at

org.junit.Assert.assertTrue(Assert.java:52) at

org.apache.hadoop.ozone.client.rpc.TestFailureHandlingByClientFlushDelay.testPipelineExclusionWithPipelineFailure(TestFailureHandlingByClientFlushDelay.java:200)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at

sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498) at

org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

at

org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at

org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

at

org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at

org.junit.internal.runners.statements.FailOnTimeout$StatementThread.run(FailOnTimeout.java:74)

{code}

> FLAKY-UT: TestSecureOzoneRpcClient timeout

> --

>

> Key: HDDS-3841

> URL: https://issues.apache.org/jira/browse/HDDS-3841

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Affects Versions: 0.7.0

>Reporter: maobaolong

>Priority: Major

>

> If a failure test appeared in your CI checks, and you are sure it is not

> relation with your PR, so, paste the stale test log here.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on a change in pull request #1169: HDDS-3930. Fix OMKeyDeletesRequest.

xiaoyuyao commented on a change in pull request #1169:

URL: https://github.com/apache/hadoop-ozone/pull/1169#discussion_r452612030

##

File path: hadoop-ozone/interface-client/src/main/proto/OmClientProtocol.proto

##

@@ -867,10 +867,10 @@ message DeletedKeys {

}

message DeleteKeysResponse {

-repeated KeyInfo deletedKeys = 1;

-repeated KeyInfo unDeletedKeys = 2;

Review comment:

Looks good to me.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on a change in pull request #1169: HDDS-3930. Fix OMKeyDeletesRequest.

xiaoyuyao commented on a change in pull request #1169:

URL: https://github.com/apache/hadoop-ozone/pull/1169#discussion_r452611714

##

File path: hadoop-ozone/interface-client/src/main/proto/OmClientProtocol.proto

##

@@ -848,7 +850,18 @@ message DeleteKeyRequest {

}

message DeleteKeysRequest {

-repeated KeyArgs keyArgs = 1;

+optional DeleteKeyArgs deleteKeys = 1;

Review comment:

Should this be required?

##

File path: hadoop-ozone/interface-client/src/main/proto/OmClientProtocol.proto

##

@@ -848,7 +850,18 @@ message DeleteKeyRequest {

}

message DeleteKeysRequest {

-repeated KeyArgs keyArgs = 1;

+optional DeleteKeyArgs deleteKeys = 1;

Review comment:

Should this be required instead of optional?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on pull request #1163: HDDS-3920. Too many redudant replications due to fail to get node's a…

xiaoyuyao commented on pull request #1163: URL: https://github.com/apache/hadoop-ozone/pull/1163#issuecomment-656470926 LGTM, +1 pending CI. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-1444) Allocate block fails in MiniOzoneChaosCluster because of InsufficientDatanodesException

[

https://issues.apache.org/jira/browse/HDDS-1444?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1444:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> Allocate block fails in MiniOzoneChaosCluster because of

> InsufficientDatanodesException

> ---

>

> Key: HDDS-1444

> URL: https://issues.apache.org/jira/browse/HDDS-1444

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: SCM

>Affects Versions: 0.3.0

>Reporter: Mukul Kumar Singh

>Priority: Major

> Labels: TriagePending

>

> MiniOzoneChaosCluster is failing with InsufficientDatanodesException while

> writing keys to the Ozone Cluster

> {code}

> org.apache.hadoop.hdds.scm.pipeline.InsufficientDatanodesException: Cannot

> create pipeline of factor 3 using 2 nodes.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on a change in pull request #1163: HDDS-3920. Too many redudant replications due to fail to get node's a…

xiaoyuyao commented on a change in pull request #1163:

URL: https://github.com/apache/hadoop-ozone/pull/1163#discussion_r452609872

##

File path:

hadoop-hdds/server-scm/src/main/java/org/apache/hadoop/hdds/scm/container/ContainerReportHandler.java

##

@@ -102,8 +102,15 @@ public ContainerReportHandler(final NodeManager

nodeManager,

public void onMessage(final ContainerReportFromDatanode reportFromDatanode,

final EventPublisher publisher) {

-final DatanodeDetails datanodeDetails =

+final DatanodeDetails dnFromReport =

reportFromDatanode.getDatanodeDetails();

+DatanodeDetails datanodeDetails =

Review comment:

That's a good catch. Thanks for the details.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-2039) Some ozone unit test takes too long to finish.

[

https://issues.apache.org/jira/browse/HDDS-2039?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-2039:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Test)

> Some ozone unit test takes too long to finish.

> --

>

> Key: HDDS-2039

> URL: https://issues.apache.org/jira/browse/HDDS-2039

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Xiaoyu Yao

>Priority: Major

>

> Here are a few

> {code}

> [INFO] Running org.apache.hadoop.ozone.om.TestOzoneManagerHA

> [INFO] Tests run: 15, Failures: 0, Errors: 0, Skipped: 0, Time elapsed:

> 436.08 s - in org.apache.hadoop.ozone.om.TestOzoneManagerHA

> [INFO] Running org.apache.hadoop.ozone.om.TestOzoneManager

> [INFO] Tests run: 26, Failures: 0, Errors: 0, Skipped: 0, Time elapsed:

> 259.566 s - in org.apache.hadoop.ozone.om.TestOzoneManager

> [INFO] Running org.apache.hadoop.ozone.om.TestScmSafeMode

> [INFO] Tests run: 5, Failures: 0, Errors: 0, Skipped: 0, Time elapsed:

> 129.653 s - in org.apache.hadoop.ozone.om.TestScmSafeMode

> [INFO] Running org.apache.hadoop.ozone.om.TestOzoneManagerRestart

> [INFO] Tests run: 3, Failures: 0, Errors: 0, Skipped: 0, Time elapsed:

> 843.129 s - in org.apache.hadoop.ozone.om.TestOzoneManagerRestart

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-2643) TestOzoneDelegationTokenSecretManager#testRenewTokenFailureRenewalTime fails intermittently

[

https://issues.apache.org/jira/browse/HDDS-2643?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-2643:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Test)

> TestOzoneDelegationTokenSecretManager#testRenewTokenFailureRenewalTime fails

> intermittently

> ---

>

> Key: HDDS-2643

> URL: https://issues.apache.org/jira/browse/HDDS-2643

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Lokesh Jain

>Priority: Major

> Labels: TriagePending

>

> TestOzoneDelegationTokenSecretManager.testRenewTokenFailureRenewalTime fails

> intermittently with the following error.

> {code:java}

> [ERROR] Failures:

> [ERROR]

> TestOzoneDelegationTokenSecretManager.testRenewTokenFailureRenewalTime:253

> Expecting java.io.IOException with text is expired but got : Expected to

> find 'is expired' but got unexpected exception:

> org.apache.hadoop.security.token.SecretManager$InvalidToken: token

> (OzoneToken owner=testUser, renewer=testUser, realUser=testUser,

> issueDate=1574938955794, maxDate=1574938965794, sequenceNumber=1,

> masterKeyId=1, strToSign=null, signature=null, awsAccessKeyId=null) can't be

> found in cache

> at

> org.apache.hadoop.ozone.security.OzoneDelegationTokenSecretManager.validateToken(OzoneDelegationTokenSecretManager.java:362)

> at

> org.apache.hadoop.ozone.security.OzoneDelegationTokenSecretManager.renewToken(OzoneDelegationTokenSecretManager.java:244)

> at

> org.apache.hadoop.ozone.security.TestOzoneDelegationTokenSecretManager.lambda$testRenewTokenFailureRenewalTime$2(TestOzoneDelegationTokenSecretManager.java:254)

> at

> org.apache.hadoop.test.LambdaTestUtils.lambda$intercept$0(LambdaTestUtils.java:527)

> at

> org.apache.hadoop.test.LambdaTestUtils.intercept(LambdaTestUtils.java:491)

> at

> org.apache.hadoop.test.LambdaTestUtils.intercept(LambdaTestUtils.java:522)

> at

> org.apache.hadoop.ozone.security.TestOzoneDelegationTokenSecretManager.testRenewTokenFailureRenewalTime(TestOzoneDelegationTokenSecretManager.java:253)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail:

[jira] [Updated] (HDDS-2644) TestTableCacheImpl#testPartialTableCacheWithOverrideAndDelete fails intermittently

[

https://issues.apache.org/jira/browse/HDDS-2644?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-2644:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Test)

> TestTableCacheImpl#testPartialTableCacheWithOverrideAndDelete fails

> intermittently

> --

>

> Key: HDDS-2644

> URL: https://issues.apache.org/jira/browse/HDDS-2644

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Lokesh Jain

>Priority: Major

> Labels: TriagePending

>

> {code:java}

> [ERROR] Tests run: 10, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 2.87

> s <<< FAILURE! - in org.apache.hadoop.hdds.utils.db.cache.TestTableCacheImpl

> [ERROR]

> testPartialTableCacheWithOverrideAndDelete[0](org.apache.hadoop.hdds.utils.db.cache.TestTableCacheImpl)

> Time elapsed: 0.044 s <<< FAILURE!

> java.lang.AssertionError: expected:<2> but was:<6>

> at org.junit.Assert.fail(Assert.java:88)

> at org.junit.Assert.failNotEquals(Assert.java:743)

> at org.junit.Assert.assertEquals(Assert.java:118)

> at org.junit.Assert.assertEquals(Assert.java:555)

> at org.junit.Assert.assertEquals(Assert.java:542)

> at

> org.apache.hadoop.hdds.utils.db.cache.TestTableCacheImpl.testPartialTableCacheWithOverrideAndDelete(TestTableCacheImpl.java:308)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at org.junit.runners.Suite.runChild(Suite.java:127)

> at org.junit.runners.Suite.runChild(Suite.java:26)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-2649) TestOzoneManagerHttpServer#testHttpPolicy fails intermittently

[

https://issues.apache.org/jira/browse/HDDS-2649?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-2649:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Test)

> TestOzoneManagerHttpServer#testHttpPolicy fails intermittently

> --

>

> Key: HDDS-2649

> URL: https://issues.apache.org/jira/browse/HDDS-2649

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Lokesh Jain

>Priority: Major

> Labels: TriagePending

>

> TestOzoneManagerHttpServer#testHttpPolicy fails with the following exception.

> {code:java}

> [ERROR] Tests run: 3, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 3.42

> s <<< FAILURE! - in org.apache.hadoop.ozone.om.TestOzoneManagerHttpServer

> [ERROR]

> testHttpPolicy[1](org.apache.hadoop.ozone.om.TestOzoneManagerHttpServer)

> Time elapsed: 0.343 s <<< FAILURE!

> java.lang.AssertionError

> at org.junit.Assert.fail(Assert.java:86)

> at org.junit.Assert.assertTrue(Assert.java:41)

> at org.junit.Assert.assertTrue(Assert.java:52)

> at

> org.apache.hadoop.ozone.om.TestOzoneManagerHttpServer.testHttpPolicy(TestOzoneManagerHttpServer.java:110)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at org.junit.runners.Suite.runChild(Suite.java:127)

> at org.junit.runners.Suite.runChild(Suite.java:26)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-1249) Fix TestOzoneManagerHttpServer & TestStorageContainerManagerHttpServer

[

https://issues.apache.org/jira/browse/HDDS-1249?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1249:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> Fix TestOzoneManagerHttpServer & TestStorageContainerManagerHttpServer

> --

>

> Key: HDDS-1249

> URL: https://issues.apache.org/jira/browse/HDDS-1249

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: Ozone Manager, SCM

>Affects Versions: 0.4.0

>Reporter: Mukul Kumar Singh

>Assignee: Nanda kumar

>Priority: Major

> Labels: TriagePending

>

> Fix the following unit test failures

> {code}

> java.lang.AssertionError

> at org.junit.Assert.fail(Assert.java:86)

> at org.junit.Assert.assertTrue(Assert.java:41)

> at org.junit.Assert.assertTrue(Assert.java:52)

> at

> org.apache.hadoop.hdds.scm.TestStorageContainerManagerHttpServer.testHttpPolicy(TestStorageContainerManagerHttpServer.java:114)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at org.junit.runners.Suite.runChild(Suite.java:127)

> at org.junit.runners.Suite.runChild(Suite.java:26)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}

> and

> {code}

> java.lang.AssertionError

> at org.junit.Assert.fail(Assert.java:86)

> at org.junit.Assert.assertTrue(Assert.java:41)

> at org.junit.Assert.assertTrue(Assert.java:52)

> at

> org.apache.hadoop.hdds.scm.TestStorageContainerManagerHttpServer.testHttpPolicy(TestStorageContainerManagerHttpServer.java:109)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

>

[jira] [Updated] (HDDS-1537) TestContainerPersistence#testDeleteBlockTwice is failing

[

https://issues.apache.org/jira/browse/HDDS-1537?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1537:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Improvement)

> TestContainerPersistence#testDeleteBlockTwice is failing

> -

>

> Key: HDDS-1537

> URL: https://issues.apache.org/jira/browse/HDDS-1537

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: Ozone Datanode

>Reporter: Mukul Kumar Singh

>Priority: Major

> Labels: TriagePending

>

> The test is failing with the following exception.

> {code}

> [ERROR] Tests run: 18, Failures: 1, Errors: 0, Skipped: 0, Time elapsed:

> 4.132 s <<< FAILURE! - in

> org.apache.hadoop.ozone.container.common.impl.TestContainerPersistence

> [ERROR]

> testDeleteBlockTwice(org.apache.hadoop.ozone.container.common.impl.TestContainerPersistence)

> Time elapsed: 0.058 s <<< FAILURE!

> java.lang.AssertionError: Expected test to throw (an instance of

> org.apache.hadoop.hdds.scm.container.common.helpers.StorageContainerException

> and exception with message a string containing "Unable to find the block.")

> at org.junit.Assert.fail(Assert.java:88)

> at

> org.junit.rules.ExpectedException.failDueToMissingException(ExpectedException.java:184)

> at

> org.junit.rules.ExpectedException.access$100(ExpectedException.java:85)

> at

> org.junit.rules.ExpectedException$ExpectedExceptionStatement.evaluate(ExpectedException.java:170)

> at

> org.junit.internal.runners.statements.FailOnTimeout$StatementThread.run(FailOnTimeout.java:74)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-1342) TestOzoneManagerHA#testOMProxyProviderFailoverOnConnectionFailure fails intermittently

[

https://issues.apache.org/jira/browse/HDDS-1342?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1342:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> TestOzoneManagerHA#testOMProxyProviderFailoverOnConnectionFailure fails

> intermittently

> --

>

> Key: HDDS-1342

> URL: https://issues.apache.org/jira/browse/HDDS-1342

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Lokesh Jain

>Assignee: Hanisha Koneru

>Priority: Major

> Labels: TriagePending

>

> The test fails intermittently. The link to the test report can be found below.

> [https://builds.apache.org/job/PreCommit-HDDS-Build/2582/testReport/]

> {code:java}

> java.net.ConnectException: Call From ea902c1cb730/172.17.0.3 to

> localhost:10174 failed on connection exception: java.net.ConnectException:

> Connection refused; For more details see:

> http://wiki.apache.org/hadoop/ConnectionRefused

> at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

> at

> sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

> at

> sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

> at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

> at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

> at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:755)

> at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1515)

> at org.apache.hadoop.ipc.Client.call(Client.java:1457)

> at org.apache.hadoop.ipc.Client.call(Client.java:1367)

> at

> org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

> at

> org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

> at com.sun.proxy.$Proxy34.submitRequest(Unknown Source)

> at sun.reflect.GeneratedMethodAccessor30.invoke(Unknown Source)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

> at

> org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

> at

> org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

> at

> org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

> at

> org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

> at com.sun.proxy.$Proxy34.submitRequest(Unknown Source)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.apache.hadoop.hdds.tracing.TraceAllMethod.invoke(TraceAllMethod.java:66)

> at com.sun.proxy.$Proxy34.submitRequest(Unknown Source)

> at

> org.apache.hadoop.ozone.om.protocolPB.OzoneManagerProtocolClientSideTranslatorPB.submitRequest(OzoneManagerProtocolClientSideTranslatorPB.java:310)

> at

> org.apache.hadoop.ozone.om.protocolPB.OzoneManagerProtocolClientSideTranslatorPB.createVolume(OzoneManagerProtocolClientSideTranslatorPB.java:343)

> at

> org.apache.hadoop.ozone.client.rpc.RpcClient.createVolume(RpcClient.java:275)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.apache.hadoop.ozone.client.OzoneClientInvocationHandler.invoke(OzoneClientInvocationHandler.java:54)

> at com.sun.proxy.$Proxy86.createVolume(Unknown Source)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.apache.hadoop.hdds.tracing.TraceAllMethod.invoke(TraceAllMethod.java:66)

> at com.sun.proxy.$Proxy86.createVolume(Unknown Source)

> at

>

[jira] [Updated] (HDDS-1316) TestContainerStateManagerIntegration#testReplicaMap fails with ChillModePrecheck

[

https://issues.apache.org/jira/browse/HDDS-1316?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1316:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> TestContainerStateManagerIntegration#testReplicaMap fails with

> ChillModePrecheck

>

>

> Key: HDDS-1316

> URL: https://issues.apache.org/jira/browse/HDDS-1316

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Affects Versions: 0.4.0

>Reporter: Mukul Kumar Singh

>Priority: Major

> Labels: Triaged

>

> TestContainerStateManagerIntegration#testReplicaMap fails with

> ChillModePrecheck

> {code}

> [ERROR] Tests run: 8, Failures: 0, Errors: 1, Skipped: 1, Time elapsed:

> 41.475 s <<< FAILURE! - in

> org.apache.hadoop.hdds.scm.container.TestContainerStateManagerIntegration

> [ERROR]

> testReplicaMap(org.apache.hadoop.hdds.scm.container.TestContainerStateManagerIntegration)

> Time elapsed: 4.589 s <<< ERROR!

> org.apache.hadoop.hdds.scm.exceptions.SCMException: ChillModePrecheck failed

> for allocateContainer

> at

> org.apache.hadoop.hdds.scm.chillmode.ChillModePrecheck.check(ChillModePrecheck.java:51)

> at

> org.apache.hadoop.hdds.scm.chillmode.ChillModePrecheck.check(ChillModePrecheck.java:31)

> at org.apache.hadoop.hdds.scm.ScmUtils.preCheck(ScmUtils.java:53)

> at

> org.apache.hadoop.hdds.scm.server.SCMClientProtocolServer.allocateContainer(SCMClientProtocolServer.java:180)

> at

> org.apache.hadoop.hdds.scm.container.TestContainerStateManagerIntegration.testReplicaMap(TestContainerStateManagerIntegration.java:386)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-2934) OM HA S3 test failure

[

https://issues.apache.org/jira/browse/HDDS-2934?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-2934:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> OM HA S3 test failure

> -

>

> Key: HDDS-2934

> URL: https://issues.apache.org/jira/browse/HDDS-2934

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: Ozone Manager

>Reporter: Attila Doroszlai

>Priority: Major

> Labels: Triaged, intermittent

> Attachments: docker-ozone-om-ha-s3-ozone-om-ha-s3-s3-scm.log,

> robot-ozone-om-ha-s3-ozone-om-ha-s3-s3-scm.xml

>

>

> OM HA S3 test ({{ozone-om-ha-s3}}) failed in one CI run at the following test

> case, then most subsequent test cases failed, too:

> {code}

> 2020-01-22T06:33:16.7322540Z Test Multipart Upload Put With Copy and range

> | FAIL |

> 2020-01-22T06:33:16.7323058Z 255 != 0

> {code}

> Docker log has several of the following exception starting around above time:

> {code}

> OMNotLeaderException: OM:om1 is not the leader. Suggested leader is OM:om3.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on pull request #1174: HDDS-3918. ConcurrentModificationException in ContainerReportHandler.…

xiaoyuyao commented on pull request #1174: URL: https://github.com/apache/hadoop-ozone/pull/1174#issuecomment-656465017 Thanks @adoroszlai for the review and @ChenSammi for reporting the issue. PR has been merged. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDDS-3918) ConcurrentModificationException in ContainerReportHandler.onMessage

[ https://issues.apache.org/jira/browse/HDDS-3918?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiaoyu Yao resolved HDDS-3918. -- Fix Version/s: 0.6.0 Resolution: Fixed > ConcurrentModificationException in ContainerReportHandler.onMessage > --- > > Key: HDDS-3918 > URL: https://issues.apache.org/jira/browse/HDDS-3918 > Project: Hadoop Distributed Data Store > Issue Type: Bug >Reporter: Sammi Chen >Assignee: Xiaoyu Yao >Priority: Major > Labels: pull-request-available > Fix For: 0.6.0 > > Attachments: TestCME.java > > > 2020-07-03 14:51:45,489 [EventQueue-ContainerReportForContainerReportHandler] > ERROR org.apache.hadoop.hdds.server.events.SingleThreadExecutor: Error on > execution message > org.apache.hadoop.hdds.scm.server.SCMDatanodeHeartbeatDispatcher$ContainerReportFromDatanode@8f6e7cb > java.util.ConcurrentModificationException > at java.util.HashMap$HashIterator.nextNode(HashMap.java:1445) > at java.util.HashMap$KeyIterator.next(HashMap.java:1469) > at > java.util.Collections$UnmodifiableCollection$1.next(Collections.java:1044) > at java.util.AbstractCollection.addAll(AbstractCollection.java:343) > at java.util.HashSet.(HashSet.java:120) > at > org.apache.hadoop.hdds.scm.container.ContainerReportHandler.onMessage(ContainerReportHandler.java:127) > at > org.apache.hadoop.hdds.scm.container.ContainerReportHandler.onMessage(ContainerReportHandler.java:50) > at > org.apache.hadoop.hdds.server.events.SingleThreadExecutor.lambda$onMessage$1(SingleThreadExecutor.java:81) > at > java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) > at > java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) > at java.lang.Thread.run(Thread.java:748) > 2020-07-03 14:51:45,648 [EventQueue-ContainerReportForContainerReportHandler] > ERROR org.apache.hadoop.hdds.server.events.SingleThreadExecutor: Error on > execution message > org.apache.hadoop.hdds.scm.server.SCMDatanodeHeartbeatDispatcher$ContainerReportFromDatanode@49d2b84b > java.util.ConcurrentModificationException > at java.util.HashMap$HashIterator.nextNode(HashMap.java:1445) > at java.util.HashMap$KeyIterator.next(HashMap.java:1469) > at > java.util.Collections$UnmodifiableCollection$1.next(Collections.java:1044) > at java.util.AbstractCollection.addAll(AbstractCollection.java:343) > at java.util.HashSet.(HashSet.java:120) > at > org.apache.hadoop.hdds.scm.container.ContainerReportHandler.onMessage(ContainerReportHandler.java:127) > at > org.apache.hadoop.hdds.scm.container.ContainerReportHandler.onMessage(ContainerReportHandler.java:50) > at > org.apache.hadoop.hdds.server.events.SingleThreadExecutor.lambda$onMessage$1(SingleThreadExecutor.java:81) > at > java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) > at > java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) > at java.lang.Thread.run(Thread.java:748) -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao merged pull request #1174: HDDS-3918. ConcurrentModificationException in ContainerReportHandler.…

xiaoyuyao merged pull request #1174: URL: https://github.com/apache/hadoop-ozone/pull/1174 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] xiaoyuyao commented on a change in pull request #1166: HDDS-3914. Remove LevelDB configuration option for DN Metastore

xiaoyuyao commented on a change in pull request #1166:

URL: https://github.com/apache/hadoop-ozone/pull/1166#discussion_r452605910

##

File path:

hadoop-hdds/container-service/src/main/java/org/apache/hadoop/ozone/container/keyvalue/KeyValueContainerCheck.java

##

@@ -186,8 +186,8 @@ private void checkContainerFile() throws IOException {

}

dbType = onDiskContainerData.getContainerDBType();

-if (!dbType.equals(OZONE_METADATA_STORE_IMPL_ROCKSDB) &&

-!dbType.equals(OZONE_METADATA_STORE_IMPL_LEVELDB)) {

+if (!dbType.equals(CONTAINER_DB_TYPE_ROCKSDB) &&

Review comment:

That makes sense to me.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-1681) TestNodeReportHandler failing because of NPE

[

https://issues.apache.org/jira/browse/HDDS-1681?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sammi Chen updated HDDS-1681:

-

Parent: HDDS-1127

Issue Type: Sub-task (was: Bug)

> TestNodeReportHandler failing because of NPE

>

>

> Key: HDDS-1681

> URL: https://issues.apache.org/jira/browse/HDDS-1681

> Project: Hadoop Distributed Data Store

> Issue Type: Sub-task

> Components: test

>Reporter: Mukul Kumar Singh

>Assignee: Nanda kumar

>Priority: Major

> Labels: TriagePending

>

> {code}

> [INFO] Running org.apache.hadoop.hdds.scm.node.TestNodeReportHandler

> [ERROR] Tests run: 1, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 0.469

> s <<< FAILURE! - in org.apache.hadoop.hdds.scm.node.TestNodeReportHandler

> [ERROR] testNodeReport(org.apache.hadoop.hdds.scm.node.TestNodeReportHandler)

> Time elapsed: 0.31 s <<< ERROR!

> java.lang.NullPointerException

> at

> org.apache.hadoop.hdds.scm.node.SCMNodeManager.(SCMNodeManager.java:122)

> at

> org.apache.hadoop.hdds.scm.node.TestNodeReportHandler.resetEventCollector(TestNodeReportHandler.java:53)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:24)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}