[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836216634 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836192784 **[Test build #138321 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138321/testReport)** for PR 32399 at commit [`a1724ab`](https://github.com/apache/spark/commit/a1724ab3c4bb852dcb227bced236fdcbd3f3b93f).

[GitHub] [spark] SparkQA removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue

SparkQA removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836190123 **[Test build #138320 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138320/testReport)** for PR 32399 at commit [`a6874e5`](https://github.com/apache/spark/commit/a6874e5fc05c1f418500670c59c36bc799977761).

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still co

AmplabJenkins removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836190600 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138320/

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836190579 **[Test build #138320 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138320/testReport)** for PR 32399 at commit [`a6874e5`](https://github.com/apache/spark/commit/a6874e5fc05c1f418500670c59c36bc799977761). * This patch **fails RAT tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] AmplabJenkins commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue r

AmplabJenkins commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836190600 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138320/

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836190123 **[Test build #138320 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138320/testReport)** for PR 32399 at commit [`a6874e5`](https://github.com/apache/spark/commit/a6874e5fc05c1f418500670c59c36bc799977761).

[GitHub] [spark] SparkQA removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue

SparkQA removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836187589 **[Test build #138319 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138319/testReport)** for PR 32399 at commit [`45c64ea`](https://github.com/apache/spark/commit/45c64ead77bfd897b53e383efa67e4ba35c2).

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still co

AmplabJenkins removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836188026 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138319/

[GitHub] [spark] AmplabJenkins commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue r

AmplabJenkins commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836188026 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138319/

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836188006 **[Test build #138319 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138319/testReport)** for PR 32399 at commit [`45c64ea`](https://github.com/apache/spark/commit/45c64ead77bfd897b53e383efa67e4ba35c2). * This patch **fails RAT tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836187589 **[Test build #138319 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138319/testReport)** for PR 32399 at commit [`45c64ea`](https://github.com/apache/spark/commit/45c64ead77bfd897b53e383efa67e4ba35c2).

[GitHub] [spark] SparkQA commented on pull request #32031: [WIP] Initial work of Remote Shuffle Service on Kubernetes

SparkQA commented on pull request #32031: URL: https://github.com/apache/spark/pull/32031#issuecomment-836185216 **[Test build #138318 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138318/testReport)** for PR 32031 at commit [`506149f`](https://github.com/apache/spark/commit/506149f3fa92b27bdf09da6748e91516b6dd5aea).

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32475: [SPARK-34775][SQL] Push down limit through window when partitionSpec is not empty

AmplabJenkins removed a comment on pull request #32475: URL: https://github.com/apache/spark/pull/32475#issuecomment-836183250 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42837/

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

AmplabJenkins removed a comment on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836183252 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42836/

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

AmplabJenkins removed a comment on pull request #32482: URL: https://github.com/apache/spark/pull/32482#issuecomment-836183249 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42838/

[GitHub] [spark] AmplabJenkins commented on pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

AmplabJenkins commented on pull request #32482: URL: https://github.com/apache/spark/pull/32482#issuecomment-836183249 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42838/

[GitHub] [spark] AmplabJenkins commented on pull request #32475: [SPARK-34775][SQL] Push down limit through window when partitionSpec is not empty

AmplabJenkins commented on pull request #32475: URL: https://github.com/apache/spark/pull/32475#issuecomment-836183250 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42837/

[GitHub] [spark] AmplabJenkins commented on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

AmplabJenkins commented on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836183252 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42836/

[GitHub] [spark] SparkQA commented on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

SparkQA commented on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836177985

[GitHub] [spark] SparkQA commented on pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

SparkQA commented on pull request #32482: URL: https://github.com/apache/spark/pull/32482#issuecomment-836176561

[GitHub] [spark] SparkQA commented on pull request #32475: [SPARK-34775][SQL] Push down limit through window when partitionSpec is not empty

SparkQA commented on pull request #32475: URL: https://github.com/apache/spark/pull/32475#issuecomment-836174973 Kubernetes integration test status failure URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/42837/

[GitHub] [spark] SparkQA commented on pull request #32475: [SPARK-34775][SQL] Push down limit through window when partitionSpec is not empty

SparkQA commented on pull request #32475: URL: https://github.com/apache/spark/pull/32475#issuecomment-836171886 Kubernetes integration test starting URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/42837/

[GitHub] [spark] AmplabJenkins commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

AmplabJenkins commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836153120 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138312/

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836152449 **[Test build #138317 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138317/testReport)** for PR 32399 at commit [`e8c86db`](https://github.com/apache/spark/commit/e8c86db1753a097e7ed442fd26d064693e0803e8).

[GitHub] [spark] SparkQA removed a comment on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

SparkQA removed a comment on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-835964738 **[Test build #138312 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138312/testReport)** for PR 32473 at commit [`21cc2ac`](https://github.com/apache/spark/commit/21cc2ac907ffe9256942d818663ce225d1a1b992).

[GitHub] [spark] SparkQA commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

SparkQA commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836151733 **[Test build #138312 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138312/testReport)** for PR 32473 at commit [`21cc2ac`](https://github.com/apache/spark/commit/21cc2ac907ffe9256942d818663ce225d1a1b992). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] srowen commented on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

srowen commented on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836150808 Getting pretty big! but OK if needed.

[GitHub] [spark] ulysses-you commented on a change in pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

ulysses-you commented on a change in pull request #32482:

URL: https://github.com/apache/spark/pull/32482#discussion_r629039347

##

File path: sql/core/src/test/scala/org/apache/spark/sql/CachedTableSuite.scala

##

@@ -1175,7 +1175,7 @@ class CachedTableSuite extends QueryTest with SQLTestUtils

}

test("cache supports for intervals") {

-withTable("interval_cache") {

+withTable("interval_cache", "t1") {

Review comment:

Not related to this PR, but it affected the newly added test that uses `t1`.
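For readers unfamiliar with the helper in the diff above, here is a minimal sketch of the loan pattern that a `withTable`-style test helper follows. It is not the code of Spark's `SQLTestUtils`; `WithTableSketch` and its in-memory `catalog` are hypothetical stand-ins used only to show why a table that is created in the test body but not listed can leak into later tests.

```scala
import scala.collection.mutable

object WithTableSketch {
  // Hypothetical stand-in for a session catalog; purely illustrative.
  val catalog: mutable.Set[String] = mutable.Set.empty

  // Loan pattern: run `body`, then drop every listed table, even if the
  // body throws. Tables created in `body` but not listed here survive.
  def withTable(names: String*)(body: => Unit): Unit =
    try body
    finally names.foreach(n => catalog.remove(n))
}
```

Under this sketch, a body that creates both `interval_cache` and `t1` while listing only `interval_cache` leaves `t1` behind, which is the kind of leftover that listing `"t1"` as well avoids.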


[GitHub] [spark] SparkQA commented on pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

SparkQA commented on pull request #32482: URL: https://github.com/apache/spark/pull/32482#issuecomment-836147815 **[Test build #138316 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138316/testReport)** for PR 32482 at commit [`7625677`](https://github.com/apache/spark/commit/76256774c52b78b9f6011f82063004bf18734f01).

[GitHub] [spark] ulysses-you commented on pull request #32482: [SPARK-35332][SQL] Make cache plan disable configs configurable

ulysses-you commented on pull request #32482: URL: https://github.com/apache/spark/pull/32482#issuecomment-836147309 Thank you @maropu @c21 @dongjoon-hyun. Agreed, the current config seems like overkill for users; it's better to just make it an `enabled` flag. I refactored this PR to: * simplify the new config and improve its doc. * improve the tests in two ways: 1) more patterns in the AQE test, 2) a bucketed test.

[GitHub] [spark] SparkQA commented on pull request #32475: [SPARK-34775][SQL] Push down limit through window when partitionSpec is not empty

SparkQA commented on pull request #32475: URL: https://github.com/apache/spark/pull/32475#issuecomment-83614 **[Test build #138315 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138315/testReport)** for PR 32475 at commit [`bf9d041`](https://github.com/apache/spark/commit/bf9d04140d596ba9d4cfe33b0f497a5a9045ba37).

[GitHub] [spark] SparkQA commented on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

SparkQA commented on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836145492 **[Test build #138314 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138314/testReport)** for PR 32487 at commit [`4098407`](https://github.com/apache/spark/commit/4098407bf6b74f2045ca27c3851da249a2a6ec7e).

[GitHub] [spark] AmplabJenkins commented on pull request #32488: [SPARK-35316][SQL] UnwrapCastInBinaryComparison support In/InSet predicate

AmplabJenkins commented on pull request #32488: URL: https://github.com/apache/spark/pull/32488#issuecomment-836144135 Can one of the admins verify this patch?

[GitHub] [spark] cfmcgrady opened a new pull request #32488: [SPARK-35316][SQL] UnwrapCastInBinaryComparison support In/InSet predicate

cfmcgrady opened a new pull request #32488:

URL: https://github.com/apache/spark/pull/32488

### What changes were proposed in this pull request?

This PR adds `In`/`InSet` predicate support to `UnwrapCastInBinaryComparison`.

The current implementation doesn't push down filters for `In`/`InSet` predicates that contain a `Cast`.

For instance:

```scala

spark.range(50).selectExpr("cast(id as int) as id").write.mode("overwrite").parquet("/tmp/parquet/t1")

spark.read.parquet("/tmp/parquet/t1").where("id in (1L, 2L, 4L)").explain

```

before this pr:

```

== Physical Plan ==
*(1) Filter cast(id#5 as bigint) IN (1,2,4)
+- *(1) ColumnarToRow
   +- FileScan parquet [id#5] Batched: true, DataFilters: [cast(id#5 as bigint) IN (1,2,4)], Format: Parquet, Location: InMemoryFileIndex(1 paths)[file:/tmp/parquet/t1], PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int>

```

after this pr:

```

== Physical Plan ==
*(1) Filter id#95 IN (1,2,4)
+- *(1) ColumnarToRow
   +- FileScan parquet [id#95] Batched: true, DataFilters: [id#95 IN (1,2,4)], Format: Parquet, Location: InMemoryFileIndex(1 paths)[file:/tmp/parquet/t1], PartitionFilters: [], PushedFilters: [In(id, [1,2,4])], ReadSchema: struct<id:int>

```
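The idea behind the plan change can be sketched without Spark. The snippet below is only an illustration under stated assumptions, not the rule's implementation: `UnwrapCastInSketch` and `unwrapInList` are hypothetical names, the column is assumed to be a non-null `int` widened to `bigint`, and out-of-range literals are assumed to be droppable because no `int` value can equal them after widening.

```scala
object UnwrapCastInSketch {
  // Hypothetical helper, not Spark's API. Given the literals of
  // `cast(intCol as bigint) IN (values)`, return the Int literals of an
  // equivalent `intCol IN (...)` predicate. A Long outside the Int range
  // can never equal a widened Int, so it is dropped from the list.
  def unwrapInList(values: Seq[Long]): Seq[Int] =
    values.collect {
      case v if v >= Int.MinValue && v <= Int.MaxValue => v.toInt
    }
}
```

For the example above, `UnwrapCastInSketch.unwrapInList(Seq(1L, 2L, 4L))` yields `Seq(1, 2, 4)`, which is the form a data source can accept as a pushed `In(id, [1,2,4])` filter. The actual rule has to deal with nulls and other type pairs, which this sketch ignores.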

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New test.


[GitHub] [spark] c21 commented on a change in pull request #32476: [SPARK-35349][SQL] Add code-gen for left/right outer sort merge join

c21 commented on a change in pull request #32476:

URL: https://github.com/apache/spark/pull/32476#discussion_r629027318

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/joins/SortMergeJoinExec.scala

##

@@ -418,115 +443,140 @@ case class SortMergeJoinExec(

// Inline mutable state since not many join operations in a task

val matches = ctx.addMutableState(clsName, "matches",

v => s"$v = new $clsName($inMemoryThreshold, $spillThreshold);",

forceInline = true)

-// Copy the left keys as class members so they could be used in next function call.

-val matchedKeyVars = copyKeys(ctx, leftKeyVars)

+// Copy the streamed keys as class members so they could be used in next function call.

+val matchedKeyVars = copyKeys(ctx, streamedKeyVars)

+

+// Handle the case when streamed rows has any NULL keys.

+val handleStreamedAnyNull = joinType match {

+ case _: InnerLike =>

+// Skip streamed row.

+s"""

+ |$streamedRow = null;

+ |continue;

+ """.stripMargin

+ case LeftOuter | RightOuter =>

+// Eagerly return streamed row.

+s"""

+ |if (!$matches.isEmpty()) {

+ | $matches.clear();

+ |}

+ |return false;

+ """.stripMargin

+ case x =>

+throw new IllegalArgumentException(

+ s"SortMergeJoin.genScanner should not take $x as the JoinType")

+}

-ctx.addNewFunction("findNextInnerJoinRows",

+// Handle the case when streamed keys less than buffered keys.

+val handleStreamedLessThanBuffered = joinType match {

+ case _: InnerLike =>

+// Skip streamed row.

+s"$streamedRow = null;"

+ case LeftOuter | RightOuter =>

+// Eagerly return with streamed row.

+"return false;"

+ case x =>

+throw new IllegalArgumentException(

+ s"SortMergeJoin.genScanner should not take $x as the JoinType")

+}

+

+ctx.addNewFunction("findNextJoinRows",

s"""

- |private boolean findNextInnerJoinRows(

- |scala.collection.Iterator leftIter,

- |scala.collection.Iterator rightIter) {

- | $leftRow = null;

+ |private boolean findNextJoinRows(

Review comment:

@maropu - No, I think we need the buffer anyway. The buffered rows have the same join keys as the current streamed row, but there can be multiple subsequent streamed rows with those same join keys. Even if the buffered rows do not satisfy the join condition with the current streamed row, they may satisfy it with the following streamed rows. I think this is how the current sort merge join (code-gen & iterator) is designed.
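To illustrate the point about keeping the buffer, here is a minimal sketch of a left outer sort merge join over plain in-memory lists. It is not Spark's implementation: the class name `SortMergeBufferSketch`, the `int[]{key, value}` row layout and the string output format are all assumptions made for this example, and NULL keys are not modelled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;

// Illustrative only: rows are int[]{key, value}; both inputs are sorted by key.
final class SortMergeBufferSketch {

    static List<String> leftOuterJoin(
            List<int[]> streamed, List<int[]> buffered, BiPredicate<int[], int[]> condition) {
        List<String> out = new ArrayList<>();
        List<int[]> matches = new ArrayList<>(); // buffered rows sharing the current key
        boolean hasMatchesKey = false;
        int matchesKey = 0;
        int next = 0; // next unread position on the buffered side

        for (int[] s : streamed) {
            if (!hasMatchesKey || s[0] != matchesKey) {
                // New key: drop the old buffer and refill it from the buffered side.
                matches.clear();
                while (next < buffered.size() && buffered.get(next)[0] < s[0]) {
                    next++;
                }
                while (next < buffered.size() && buffered.get(next)[0] == s[0]) {
                    matches.add(buffered.get(next++));
                }
                matchesKey = s[0];
                hasMatchesKey = true;
            }
            // Same key as the previous streamed row: the buffer is reused as-is,
            // even if the previous row failed the condition against every entry.
            boolean matched = false;
            for (int[] b : matches) {
                if (condition.test(s, b)) {
                    out.add(s[0] + ":" + s[1] + "|" + b[0] + ":" + b[1]);
                    matched = true;
                }
            }
            if (!matched) {
                out.add(s[0] + ":" + s[1] + "|null"); // outer join keeps the streamed row
            }
        }
        return out;
    }
}
```

With streamed rows `(1,10), (1,3)`, one buffered row `(1,5)` and the condition `streamed.value < buffered.value`, the first streamed row finds no match, but the second one does. Clearing the buffer after the first miss would lose that second result.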


[GitHub] [spark] SparkQA removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue

SparkQA removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836035623 **[Test build #138313 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138313/testReport)** for PR 32399 at commit [`c6aa4c4`](https://github.com/apache/spark/commit/c6aa4c4ccc8b9103314d5efea148b71e19a560d4).

[GitHub] [spark] viirya commented on a change in pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

viirya commented on a change in pull request #32487:
URL: https://github.com/apache/spark/pull/32487#discussion_r629025675

##
File path: dev/create-release/release-build.sh
##
@@ -210,6 +210,8 @@ if [[ "$1" == "package" ]]; then
   PYSPARK_VERSION=`echo "$SPARK_VERSION" | sed -e "s/-/./" -e "s/SNAPSHOT/dev0/" -e "s/preview/dev/"`
   echo "__version__='$PYSPARK_VERSION'" > python/pyspark/version.py
+  export MAVEN_OPTS="-Xmx12000m"

Review comment:
ok.

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still co

AmplabJenkins removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836109653 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138313/

[GitHub] [spark] AmplabJenkins commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue r

AmplabJenkins commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836109653 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138313/

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836108663

**[Test build #138313 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138313/testReport)** for PR 32399 at commit [`c6aa4c4`](https://github.com/apache/spark/commit/c6aa4c4ccc8b9103314d5efea148b71e19a560d4).

* This patch **fails PySpark unit tests**.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

AmplabJenkins removed a comment on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836106608 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138311/

[GitHub] [spark] AmplabJenkins commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

AmplabJenkins commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836106608 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138311/

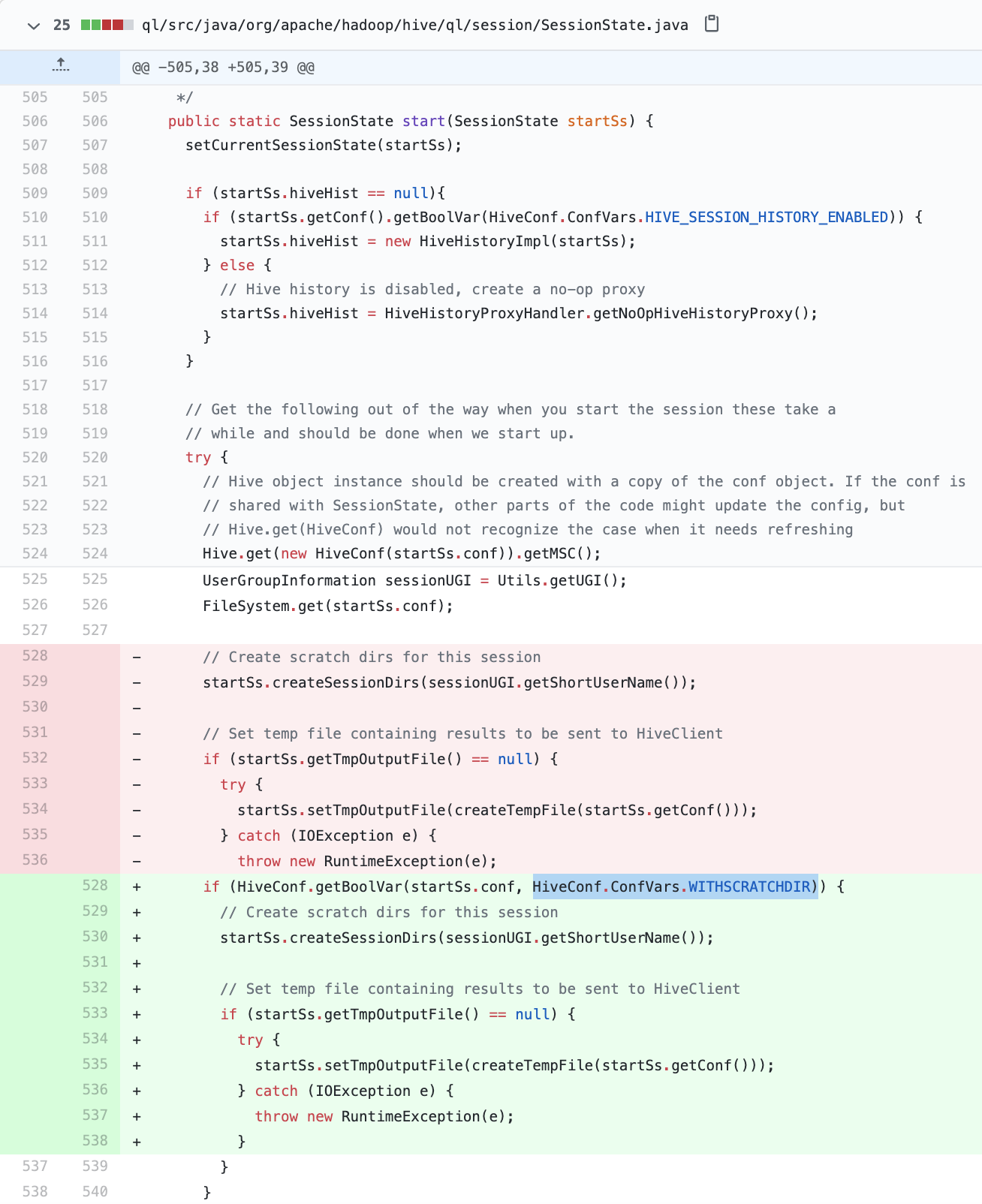

[GitHub] [spark] wangyum commented on a change in pull request #32410: [SPARK-35286][SQL] Replace SessionState.start with SessionState.setCurrentSessionState

wangyum commented on a change in pull request #32410:

URL: https://github.com/apache/spark/pull/32410#discussion_r629020979

##

File path:

sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/HiveSessionImpl.java

##

@@ -141,7 +141,7 @@ public void open(Map<String, String> sessionConfMap) throws HiveSQLException {
     sessionState = new SessionState(hiveConf, username);
     sessionState.setUserIpAddress(ipAddress);
     sessionState.setIsHiveServerQuery(true);
-    SessionState.start(sessionState);
+    SessionState.setCurrentSessionState(sessionState);

Review comment:

Yes. It is safe when using `ADD JARS`. We have disabled the creation of these directories for more than a year with the following change (`HiveConf.ConfVars.WITHSCRATCHDIR=false`):


[GitHub] [spark] SparkQA removed a comment on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

SparkQA removed a comment on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-835906957 **[Test build #138311 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138311/testReport)** for PR 32473 at commit [`34d0511`](https://github.com/apache/spark/commit/34d05113d307395bd1c1449651e09a8285fd0c6e).

[GitHub] [spark] HeartSaVioR commented on pull request #25911: [SPARK-29223][SQL][SS] Enable global timestamp per topic while specifying offset by timestamp in Kafka source

HeartSaVioR commented on pull request #25911:

URL: https://github.com/apache/spark/pull/25911#issuecomment-836089685

I see actual customer demand for this: a topic has 100+ partitions, and it's awkward to make users craft JSON that lists 100+ partitions, all with the same timestamp.
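To make the burden concrete, here is a rough sketch of what a user has to generate today when every partition should start from the same timestamp. It assumes the per-partition JSON shape accepted by the Kafka source's `startingOffsetsByTimestamp` option (`{"topic":{"0":ts,"1":ts,...}}`); the class and method names are hypothetical, and the topic name is not JSON-escaped.

```java
// Illustrative helper, not part of Spark: repeats one timestamp for every partition.
final class PerPartitionTimestampJson {

    // Builds {"topic":{"0":ts,"1":ts,...}} for partitions 0 until numPartitions.
    static String build(String topic, int numPartitions, long timestampMs) {
        StringBuilder sb = new StringBuilder();
        sb.append("{\"").append(topic).append("\":{");
        for (int p = 0; p < numPartitions; p++) {
            if (p > 0) {
                sb.append(',');
            }
            sb.append('"').append(p).append("\":").append(timestampMs);
        }
        return sb.append("}}").toString();
    }
}
```

The caller also needs to know the partition count up front, which is exactly the bookkeeping a single global timestamp per topic would avoid.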

Flink already does this: it uses a global value across partitions for earliest/latest/timestamp, while still allowing an exact offset to be set per partition.

https://ci.apache.org/projects/flink/flink-docs-release-1.13/docs/connectors/datastream/kafka/#kafka-consumers-start-position-configuration

```

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

FlinkKafkaConsumer<String> myConsumer = new FlinkKafkaConsumer<>(...);
myConsumer.setStartFromEarliest();     // start from the earliest record possible
myConsumer.setStartFromLatest();       // start from the latest record
myConsumer.setStartFromTimestamp(...); // start from specified epoch timestamp (milliseconds)
myConsumer.setStartFromGroupOffsets(); // the default behaviour

```

```

Map<KafkaTopicPartition, Long> specificStartOffsets = new HashMap<>();
specificStartOffsets.put(new KafkaTopicPartition("myTopic", 0), 23L);
specificStartOffsets.put(new KafkaTopicPartition("myTopic", 1), 31L);
specificStartOffsets.put(new KafkaTopicPartition("myTopic", 2), 43L);

myConsumer.setStartFromSpecificOffsets(specificStartOffsets);

```

Given this PR is stale, I'll rebase this with master and raise the PR again.


[GitHub] [spark] SparkQA commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

SparkQA commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836088555

**[Test build #138311 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138311/testReport)** for PR 32473 at commit [`34d0511`](https://github.com/apache/spark/commit/34d05113d307395bd1c1449651e09a8285fd0c6e).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] c21 commented on pull request #32480: [SPARK-35354][SQL] Replace BaseJoinExec with ShuffledJoin in CoalesceBucketsInJoin

c21 commented on pull request #32480: URL: https://github.com/apache/spark/pull/32480#issuecomment-836086921 Thank you @maropu for review!

[GitHub] [spark] beliefer commented on a change in pull request #32442: [SPARK-35283][SQL] Support query some DDL with CTES

beliefer commented on a change in pull request #32442:

URL: https://github.com/apache/spark/pull/32442#discussion_r629016341

##

File path: sql/core/src/test/resources/sql-tests/inputs/cte-ddl.sql

##

@@ -0,0 +1,65 @@
+-- Test data.
+CREATE NAMESPACE IF NOT EXISTS query_ddl_namespace;
+USE NAMESPACE query_ddl_namespace;
+CREATE TABLE test_show_tables(a INT, b STRING, c INT) using parquet;
+CREATE TABLE test_show_table_properties (a INT, b STRING, c INT) USING parquet TBLPROPERTIES('p1'='v1', 'p2'='v2');
+CREATE TABLE test_show_partitions(a String, b Int, c String, d String) USING parquet PARTITIONED BY (c, d);
+ALTER TABLE test_show_partitions ADD PARTITION (c='Us', d=1);
+ALTER TABLE test_show_partitions ADD PARTITION (c='Us', d=2);
+ALTER TABLE test_show_partitions ADD PARTITION (c='Cn', d=1);
+CREATE VIEW view_1 AS SELECT * FROM test_show_tables;
+CREATE VIEW view_2 AS SELECT * FROM test_show_tables WHERE c=1;
+CREATE TEMPORARY VIEW test_show_views(e int) USING parquet;
+CREATE GLOBAL TEMP VIEW test_global_show_views AS SELECT 1 as col1;
+
+-- SHOW NAMESPACES
+SHOW NAMESPACES;
+WITH s AS (SHOW NAMESPACES) SELECT * FROM s;
+WITH s AS (SHOW NAMESPACES) SELECT * FROM s WHERE namespace = 'query_ddl_namespace';
+WITH s(n) AS (SHOW NAMESPACES) SELECT * FROM s WHERE n = 'query_ddl_namespace';
+
+-- SHOW TABLES
+SHOW TABLES;
+WITH s AS (SHOW TABLES) SELECT * FROM s;
+WITH s AS (SHOW TABLES) SELECT * FROM s WHERE tableName = 'test_show_tables';
+WITH s(ns, tn, t) AS (SHOW TABLES) SELECT * FROM s WHERE tn = 'test_show_tables';

Review comment:

OK


[GitHub] [spark] LuciferYang closed pull request #32374: [WIP][SPARK-35253][BUILD][SQL] Upgrade Janino from 3.0.16 to 3.1.3

LuciferYang closed pull request #32374: URL: https://github.com/apache/spark/pull/32374

[GitHub] [spark] LuciferYang commented on pull request #32374: [WIP][SPARK-35253][BUILD][SQL] Upgrade Janino from 3.0.16 to 3.1.3

LuciferYang commented on pull request #32374: URL: https://github.com/apache/spark/pull/32374#issuecomment-836082137 close this because SPARK-35253

[GitHub] [spark] LuciferYang commented on a change in pull request #32455: [SPARK-35253][SQL][BUILD] Bump up the janino version to v3.1.4

LuciferYang commented on a change in pull request #32455:

URL: https://github.com/apache/spark/pull/32455#discussion_r629014929

##

File path:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/CodeGenerator.scala

##

@@ -1434,9 +1435,10 @@ object CodeGenerator extends Logging {
   private def updateAndGetCompilationStats(evaluator: ClassBodyEvaluator): ByteCodeStats = {
     // First retrieve the generated classes.
     val classes = {
-      val resultField = classOf[SimpleCompiler].getDeclaredField("result")
-      resultField.setAccessible(true)
-      val loader = resultField.get(evaluator).asInstanceOf[ByteArrayClassLoader]
+      val scField = classOf[ClassBodyEvaluator].getDeclaredField("sc")

Review comment:

@maropu Can we directly use `evaluator.getBytecodes.asScala` instead of lines 1438 to 1445?


[GitHub] [spark] AmplabJenkins removed a comment on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still co

AmplabJenkins removed a comment on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836069987 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42835/

[GitHub] [spark] AmplabJenkins commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue r

AmplabJenkins commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836069987 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42835/

[GitHub] [spark] zhengruifeng commented on pull request #32350: [SPARK-35231][SQL] logical.Range override maxRowsPerPartition

zhengruifeng commented on pull request #32350: URL: https://github.com/apache/spark/pull/32350#issuecomment-836067509 Thank you so much!

[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836058502 Kubernetes integration test unable to build dist. exiting with code: 1 URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/42835/

[GitHub] [spark] maropu commented on a change in pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

maropu commented on a change in pull request #32487:
URL: https://github.com/apache/spark/pull/32487#discussion_r629004607

##
File path: dev/create-release/release-build.sh
##
@@ -210,6 +210,8 @@ if [[ "$1" == "package" ]]; then
   PYSPARK_VERSION=`echo "$SPARK_VERSION" | sed -e "s/-/./" -e "s/SNAPSHOT/dev0/" -e "s/preview/dev/"`
   echo "__version__='$PYSPARK_VERSION'" > python/pyspark/version.py
+  export MAVEN_OPTS="-Xmx12000m"

Review comment:
nit: we can say `-Xmx12g`?
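A small note on the two spellings: the JVM reads the `m` and `g` suffixes of `-Xmx` as 1024-based multiples, so `-Xmx12g` is slightly larger than `-Xmx12000m` rather than identical to it. A minimal sketch of the arithmetic follows; `HeapSizeSuffix` and `toBytes` are hypothetical names for illustration, not a JVM API.

```java
// Illustrative helper: converts a size such as "12g" or "12000m" to bytes,
// using 1024-based units as the JVM does for -Xmx.
final class HeapSizeSuffix {

    static long toBytes(String size) {
        char unit = Character.toLowerCase(size.charAt(size.length() - 1));
        long n = Long.parseLong(size.substring(0, size.length() - 1));
        switch (unit) {
            case 'k': return n << 10; // KiB
            case 'm': return n << 20; // MiB
            case 'g': return n << 30; // GiB
            default:
                throw new IllegalArgumentException("unsupported unit in: " + size);
        }
    }
}
```

Here `toBytes("12g")` is 12,884,901,888 bytes and `toBytes("12000m")` is 12,582,912,000 bytes, a difference of 288 MiB. Either value is likely fine for avoiding the OOM; the exact equivalent of `-Xmx12g` would be `-Xmx12288m`.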

[GitHub] [spark] huaxingao commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

huaxingao commented on pull request #32473:
URL: https://github.com/apache/spark/pull/32473#issuecomment-836051980

@dongjoon-hyun
> Shall we change the grouping in order to see the trend according to the block size?

Sorry, I just saw your comment. I guess it might be a little better to pair up the results of `Without bloom filter` and `With bloom filter`, so it's easier to see the improvement from the bloom filter?

[GitHub] [spark] huaxingao commented on a change in pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

huaxingao commented on a change in pull request #32473:

URL: https://github.com/apache/spark/pull/32473#discussion_r629004056

##

File path:

sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/BloomFilterBenchmark.scala

##

@@ -81,8 +80,57 @@ object BloomFilterBenchmark extends SqlBasedBenchmark {
     }
   }
+  private def writeParquetBenchmark(): Unit = {
+    withTempPath { dir =>
+      val path = dir.getCanonicalPath
+
+      runBenchmark(s"Parquet Write") {
+        val benchmark = new Benchmark(s"Write ${scaleFactor}M rows", N, output = output)
+        benchmark.addCase("Without bloom filter") { _ =>
+          df.write.mode("overwrite").parquet(path + "/withoutBF")
+        }
+        benchmark.addCase("With bloom filter") { _ =>
+          df.write.mode("overwrite")
+            .option(ParquetOutputFormat.BLOOM_FILTER_ENABLED + "#value", true)
+            .parquet(path + "/withBF")
+        }
+        benchmark.run()
+      }
+    }
+  }
+
+  private def readParquetBenchmark(): Unit = {
+    val blockSizes = Seq(512 * 1024, 1024 * 1024, 2 * 1024 * 1024, 3 * 1024 * 1024,
+      4 * 1024 * 1024, 5 * 1024 * 1024, 6 * 1024 * 1024, 7 * 1024 * 1024,
+      8 * 1024 * 1024, 9 * 1024 * 1024, 10 * 1024 * 1024)
+    for (blocksize <- blockSizes) {
+      withTempPath { dir =>
+        val path = dir.getCanonicalPath
+
+        df.write.option("parquet.block.size", blocksize).parquet(path + "/withoutBF")

Review comment:

@wangyum Sorry, I am new to Parquet. I couldn't find a compression size setting for Parquet; it seems only ORC has `orc.compress.size`?


[GitHub] [spark] SparkQA commented on pull request #32399: [SPARK-35271][ML][PYSPARK] Fix: After CrossValidator/TrainValidationSplit fit raised error, some backgroud threads may still continue run or

SparkQA commented on pull request #32399: URL: https://github.com/apache/spark/pull/32399#issuecomment-836035623 **[Test build #138313 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138313/testReport)** for PR 32399 at commit [`c6aa4c4`](https://github.com/apache/spark/commit/c6aa4c4ccc8b9103314d5efea148b71e19a560d4).

[GitHub] [spark] AmplabJenkins commented on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

AmplabJenkins commented on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836035119 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138310/

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

AmplabJenkins removed a comment on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836035114

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32487: [SPARK-35358][BUILD] Increase maximum Java heap used for release build to avoid OOM

AmplabJenkins removed a comment on pull request #32487: URL: https://github.com/apache/spark/pull/32487#issuecomment-836035119 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138310/

[GitHub] [spark] AmplabJenkins commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

AmplabJenkins commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-836035114

[GitHub] [spark] maropu commented on pull request #32480: [SPARK-35354][SQL] Replace BaseJoinExec with ShuffledJoin in CoalesceBucketsInJoin

maropu commented on pull request #32480: URL: https://github.com/apache/spark/pull/32480#issuecomment-836019661 Thank you, @c21. Merged to master.

[GitHub] [spark] maropu closed pull request #32480: [SPARK-35354][SQL] Replace BaseJoinExec with ShuffledJoin in CoalesceBucketsInJoin

maropu closed pull request #32480: URL: https://github.com/apache/spark/pull/32480

[GitHub] [spark] SparkQA commented on pull request #32473: [SPARK-35345][SQL] Add Parquet tests to BloomFilterBenchmark

SparkQA commented on pull request #32473: URL: https://github.com/apache/spark/pull/32473#issuecomment-835996955

[GitHub] [spark] wangyum commented on a change in pull request #32442: [SPARK-35283][SQL] Support query some DDL with CTES

wangyum commented on a change in pull request #32442:

URL: https://github.com/apache/spark/pull/32442#discussion_r628987144

##

File path: sql/core/src/test/resources/sql-tests/inputs/cte-ddl.sql

##

@@ -0,0 +1,65 @@

+-- Test data.

+CREATE NAMESPACE IF NOT EXISTS query_ddl_namespace;

+USE NAMESPACE query_ddl_namespace;

+CREATE TABLE test_show_tables(a INT, b STRING, c INT) using parquet;

+CREATE TABLE test_show_table_properties (a INT, b STRING, c INT) USING parquet TBLPROPERTIES('p1'='v1', 'p2'='v2');

+CREATE TABLE test_show_partitions(a String, b Int, c String, d String) USING parquet PARTITIONED BY (c, d);

+ALTER TABLE test_show_partitions ADD PARTITION (c='Us', d=1);

+ALTER TABLE test_show_partitions ADD PARTITION (c='Us', d=2);

+ALTER TABLE test_show_partitions ADD PARTITION (c='Cn', d=1);

+CREATE VIEW view_1 AS SELECT * FROM test_show_tables;

+CREATE VIEW view_2 AS SELECT * FROM test_show_tables WHERE c=1;

+CREATE TEMPORARY VIEW test_show_views(e int) USING parquet;

+CREATE GLOBAL TEMP VIEW test_global_show_views AS SELECT 1 as col1;

+

+-- SHOW NAMESPACES

+SHOW NAMESPACES;

+WITH s AS (SHOW NAMESPACES) SELECT * FROM s;

+WITH s AS (SHOW NAMESPACES) SELECT * FROM s WHERE namespace = 'query_ddl_namespace';

+WITH s(n) AS (SHOW NAMESPACES) SELECT * FROM s WHERE n = 'query_ddl_namespace';

+

+-- SHOW TABLES

+SHOW TABLES;

+WITH s AS (SHOW TABLES) SELECT * FROM s;

+WITH s AS (SHOW TABLES) SELECT * FROM s WHERE tableName = 'test_show_tables';

+WITH s(ns, tn, t) AS (SHOW TABLES) SELECT * FROM s WHERE tn = 'test_show_tables';

Review comment:

Could we add more tests? For example:

```sql

WITH s(ns, tn, t) AS (SHOW TABLES) SELECT tn FROM s;

WITH s(ns, tn, t) AS (SHOW TABLES) SELECT tn FROM s ORDER BY tn;

```

[GitHub] [spark] maropu commented on a change in pull request #32476: [SPARK-35349][SQL] Add code-gen for left/right outer sort merge join

maropu commented on a change in pull request #32476:

URL: https://github.com/apache/spark/pull/32476#discussion_r628986405

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/joins/SortMergeJoinExec.scala

##

@@ -418,115 +443,140 @@ case class SortMergeJoinExec(

// Inline mutable state since not many join operations in a task

val matches = ctx.addMutableState(clsName, "matches",

v => s"$v = new $clsName($inMemoryThreshold, $spillThreshold);",

forceInline = true)

-// Copy the left keys as class members so they could be used in next function call.

-val matchedKeyVars = copyKeys(ctx, leftKeyVars)

+// Copy the streamed keys as class members so they could be used in next function call.

+val matchedKeyVars = copyKeys(ctx, streamedKeyVars)

+

+// Handle the case when streamed rows has any NULL keys.

+val handleStreamedAnyNull = joinType match {

+ case _: InnerLike =>

+// Skip streamed row.

+s"""

+ |$streamedRow = null;

+ |continue;

+ """.stripMargin

+ case LeftOuter | RightOuter =>

+// Eagerly return streamed row.

+s"""

+ |if (!$matches.isEmpty()) {

+ | $matches.clear();

+ |}

+ |return false;

+ """.stripMargin

+ case x =>

+throw new IllegalArgumentException(

+ s"SortMergeJoin.genScanner should not take $x as the JoinType")

+}

-ctx.addNewFunction("findNextInnerJoinRows",

+// Handle the case when streamed keys less than buffered keys.

+val handleStreamedLessThanBuffered = joinType match {

+ case _: InnerLike =>

+// Skip streamed row.

+s"$streamedRow = null;"

+ case LeftOuter | RightOuter =>

+// Eagerly return with streamed row.

+"return false;"

+ case x =>

+throw new IllegalArgumentException(

+ s"SortMergeJoin.genScanner should not take $x as the JoinType")

+}

+

+ctx.addNewFunction("findNextJoinRows",

s"""

- |private boolean findNextInnerJoinRows(

- |scala.collection.Iterator leftIter,

- |scala.collection.Iterator rightIter) {

- | $leftRow = null;

+ |private boolean findNextJoinRows(

Review comment:

By the way, in the current generated code, it seems `conditionCheck` is evaluated outside `findNextJoinRows`. Can't we evaluate it inside `findNextJoinRows` to avoid putting unmatched rows in `matches`?
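To make the question concrete, here is a minimal Python sketch of a left outer sort-merge join that applies the join condition while collecting same-key rows, so rows failing the condition never enter `matches`. It is not Spark's generated code: the function name, the `(key, payload)` row shape, and the `condition` callback are all assumptions chosen for illustration.

```python
from typing import Callable, Iterator, List, Optional, Tuple

Row = Tuple[int, str]  # hypothetical row shape: (join key, payload)


def left_outer_sort_merge_join(
    streamed: List[Row],
    buffered: List[Row],
    condition: Callable[[Row, Row], bool],
) -> Iterator[Tuple[Row, Optional[Row]]]:
    """Left outer sort-merge join over inputs already sorted by key."""
    j = 0
    for s_row in streamed:
        # Advance the buffered side past keys smaller than the streamed key.
        while j < len(buffered) and buffered[j][0] < s_row[0]:
            j += 1
        # Collect same-key rows, checking the condition while buffering so
        # that rows failing it are never stored in `matches`.
        matches = []
        k = j
        while k < len(buffered) and buffered[k][0] == s_row[0]:
            if condition(s_row, buffered[k]):
                matches.append(buffered[k])
            k += 1
        if matches:
            for b_row in matches:
                yield (s_row, b_row)
        else:
            # Outer join: emit the streamed row with a null buffered side.
            yield (s_row, None)
```

One trade-off visible in this sketch: because the condition depends on the streamed row, `matches` is rebuilt for every streamed row, including consecutive streamed rows that share a key. Whether that cost is acceptable in the real operator is a separate question.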

[GitHub] [spark] wangyum commented on a change in pull request #32442: [SPARK-35283][SQL] Support query some DDL with CTES

wangyum commented on a change in pull request #32442:

URL: https://github.com/apache/spark/pull/32442#discussion_r628980328

##

File path: sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

##

@@ -375,8 +363,18 @@ ctes

: WITH namedQuery (',' namedQuery)*

;

+informationQuery

+: SHOW (DATABASES | NAMESPACES) ((FROM | IN) multipartIdentifier)? (LIKE? pattern=STRING)? #showNamespaces

+| SHOW TABLES ((FROM | IN) multipartIdentifier)? (LIKE? pattern=STRING)? #showTables

Review comment:

Why not support `SHOW TABLE EXTENDED`?

[GitHub] [spark] c21 commented on a change in pull request #32476: [SPARK-35349][SQL] Add code-gen for left/right outer sort merge join

c21 commented on a change in pull request #32476:

URL: https://github.com/apache/spark/pull/32476#discussion_r628977186

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/joins/SortMergeJoinExec.scala

##

@@ -418,115 +443,140 @@ case class SortMergeJoinExec(

// Inline mutable state since not many join operations in a task

val matches = ctx.addMutableState(clsName, "matches",

v => s"$v = new $clsName($inMemoryThreshold, $spillThreshold);",

forceInline = true)

-// Copy the left keys as class members so they could be used in next function call.

-val matchedKeyVars = copyKeys(ctx, leftKeyVars)

+// Copy the streamed keys as class members so they could be used in next function call.

+val matchedKeyVars = copyKeys(ctx, streamedKeyVars)

+

+// Handle the case when streamed rows has any NULL keys.

+val handleStreamedAnyNull = joinType match {

+ case _: InnerLike =>

+// Skip streamed row.

+s"""

+ |$streamedRow = null;

+ |continue;

+ """.stripMargin

+ case LeftOuter | RightOuter =>

+// Eagerly return streamed row.

+s"""

+ |if (!$matches.isEmpty()) {

+ | $matches.clear();

+ |}