[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r975571738

##

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##

@@ -3911,6 +3911,15 @@ object SQLConf {

.checkValues(ErrorMessageFormat.values.map(_.toString))

.createWithDefault(ErrorMessageFormat.PRETTY.toString)

+ val INCLUDE_PLANS_IN_ERRORS = buildConf("spark.sql.error.includePlans")

Review Comment:

@gengliangwang apologies for missing that. It is reverted now.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r974575955

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/CheckAnalysis.scala:

##

@@ -730,6 +729,13 @@ trait CheckAnalysis extends PredicateHelper with

LookupCatalog {

}

}

+ private def canonicalizeForError(expr: LogicalPlan): String =

+if (SQLConf.get.getConf(SQLConf.CANONICALIZE_PLANS_IN_ERRORS)) {

Review Comment:

@gengliangwang thanks for the suggestion! This is done, it is simpler now.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r973305583

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/package.scala:

##

@@ -45,18 +51,101 @@ package object analysis {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition)

}

-/** Fails the analysis at the point where a specific tree node was parsed.

*/

+/** Fails the analysis at the point where a specific tree node was parsed

with a given cause. */

def failAnalysis(msg: String, cause: Throwable): Nothing = {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition,

cause = Some(cause))

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and message parameters.

+ */

def failAnalysis(errorClass: String, messageParameters: Map[String,

String]): Nothing = {

throw new AnalysisException(

errorClass = errorClass,

messageParameters = messageParameters,

origin = t.origin)

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and message parameters.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+messageParameters: Map[String, String] = Map.empty[String, String]):

Nothing = {

+ throw new AnalysisException(

+errorClass = errorClass,

+errorSubClass = errorSubClass,

+messageParameters = messageParameters,

+origin = t.origin)

+}

+

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and one message parameter comprising a plan

string. The plan string

+ * will be printed in the error message if and only if the corresponding

Spark configuration is

+ * enabled.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+treeNodes: Seq[TreeNode[_]]): Nothing = {

+ // Normalize expression IDs in the query plan to keep tests

deterministic.

Review Comment:

Hi @gengliangwang I looked at this at length some more, and I was able to

reuse the existing plan `canonicalize` method after all using

`AnalysisHelper.allowInvokingTransformsInAnalyzer` in one specific place

instead. Now there is no need to introduce new code for this purpose. Please

take another look.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r972488615

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/package.scala:

##

@@ -45,18 +51,101 @@ package object analysis {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition)

}

-/** Fails the analysis at the point where a specific tree node was parsed.

*/

+/** Fails the analysis at the point where a specific tree node was parsed

with a given cause. */

def failAnalysis(msg: String, cause: Throwable): Nothing = {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition,

cause = Some(cause))

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and message parameters.

+ */

def failAnalysis(errorClass: String, messageParameters: Map[String,

String]): Nothing = {

throw new AnalysisException(

errorClass = errorClass,

messageParameters = messageParameters,

origin = t.origin)

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and message parameters.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+messageParameters: Map[String, String] = Map.empty[String, String]):

Nothing = {

+ throw new AnalysisException(

+errorClass = errorClass,

+errorSubClass = errorSubClass,

+messageParameters = messageParameters,

+origin = t.origin)

+}

+

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and one message parameter comprising a plan

string. The plan string

+ * will be printed in the error message if and only if the corresponding

Spark configuration is

+ * enabled.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+treeNodes: Seq[TreeNode[_]]): Nothing = {

+ // Normalize expression IDs in the query plan to keep tests

deterministic.

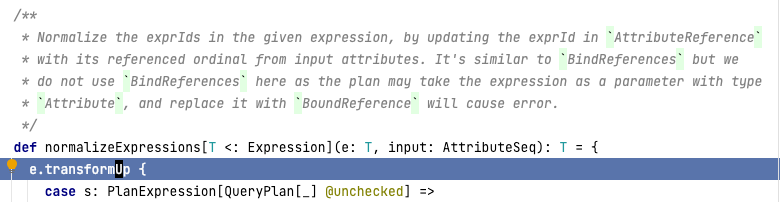

Review Comment:

I tried this, but this `canonicalized` method is not available during

analysis since it uses `transformUp`, triggering this error:

```

def methodCalledInAnalyzerNotAllowedError(): Throwable = {

new RuntimeException("This method should not be called in the analyzer")

}

```

Therefore a separate implementation is needed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r972488849

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/package.scala:

##

@@ -45,18 +51,101 @@ package object analysis {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition)

}

-/** Fails the analysis at the point where a specific tree node was parsed.

*/

+/** Fails the analysis at the point where a specific tree node was parsed

with a given cause. */

def failAnalysis(msg: String, cause: Throwable): Nothing = {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition,

cause = Some(cause))

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and message parameters.

+ */

def failAnalysis(errorClass: String, messageParameters: Map[String,

String]): Nothing = {

throw new AnalysisException(

errorClass = errorClass,

messageParameters = messageParameters,

origin = t.origin)

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and message parameters.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+messageParameters: Map[String, String] = Map.empty[String, String]):

Nothing = {

+ throw new AnalysisException(

+errorClass = errorClass,

+errorSubClass = errorSubClass,

+messageParameters = messageParameters,

+origin = t.origin)

+}

+

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and one message parameter comprising a plan

string. The plan string

+ * will be printed in the error message if and only if the corresponding

Spark configuration is

+ * enabled.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+treeNodes: Seq[TreeNode[_]]): Nothing = {

+ // Normalize expression IDs in the query plan to keep tests

deterministic.

Review Comment:

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r972488615

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/package.scala:

##

@@ -45,18 +51,101 @@ package object analysis {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition)

}

-/** Fails the analysis at the point where a specific tree node was parsed.

*/

+/** Fails the analysis at the point where a specific tree node was parsed

with a given cause. */

def failAnalysis(msg: String, cause: Throwable): Nothing = {

throw new AnalysisException(msg, t.origin.line, t.origin.startPosition,

cause = Some(cause))

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and message parameters.

+ */

def failAnalysis(errorClass: String, messageParameters: Map[String,

String]): Nothing = {

throw new AnalysisException(

errorClass = errorClass,

messageParameters = messageParameters,

origin = t.origin)

}

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and message parameters.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+messageParameters: Map[String, String] = Map.empty[String, String]):

Nothing = {

+ throw new AnalysisException(

+errorClass = errorClass,

+errorSubClass = errorSubClass,

+messageParameters = messageParameters,

+origin = t.origin)

+}

+

+/**

+ * Fails the analysis at the point where a specific tree node was parsed

using a provided

+ * error class and subclass and one message parameter comprising a plan

string. The plan string

+ * will be printed in the error message if and only if the corresponding

Spark configuration is

+ * enabled.

+ */

+def failAnalysis(

+errorClass: String,

+errorSubClass: String,

+treeNodes: Seq[TreeNode[_]]): Nothing = {

+ // Normalize expression IDs in the query plan to keep tests

deterministic.

Review Comment:

I tried this, but this `canonicalized` method is not available during

analysis since it uses `transformUp`. Therefore a separate implementation is

needed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971397869

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/CheckAnalysis.scala:

##

@@ -823,12 +834,16 @@ trait CheckAnalysis extends PredicateHelper with

LookupCatalog {

// it must also be in the aggregate expressions to be rewritten

in the optimization

// phase.

if (containsExpr(a.groupingExpressions) &&

!containsExpr(a.aggregateExpressions)) {

-failAnalysis("Correlated scalar subqueries in the group by

clause " +

- s"must also be in the aggregate expressions:\n$a")

+a.failAnalysis(

+ errorClass = "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ errorSubClass = "MUST_AGGREGATE_CORRELATED_SCALAR_SUBQUERY",

+ treeNodes = Seq(a))

Review Comment:

Good Q. The way I implemented it, `a` is a `TreeNode`. This overload of

`failAnalysis` I added takes a `Seq[TreeNode[_]]` and then assigns their

`toString`s to the `treeNode` parameter. I do this in the `failAnalysis`

overload itself in order to normalize the expression IDs in the string to keep

tests deterministic (the LogicalPlan `canonicalized` method aims to support

this as well, but is not available during analysis since it uses `transformUp`).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971345715

##

sql/core/src/test/resources/sql-tests/results/join-lateral.sql.out:

##

@@ -317,11 +317,20 @@ SELECT * FROM t1, LATERAL (SELECT c1 + c2 + rand(0) AS c3)

struct<>

-- !query output

org.apache.spark.sql.AnalysisException

-Non-deterministic lateral subqueries are not supported when joining with outer

relations that produce more than one row

-SubqueryAlias __auto_generated_subquery_name

-+- Project [(cast((outer(c1#x) + outer(c2#x)) as double) + rand(0)) AS c3#x]

- +- OneRowRelation

-; line 1 pos 9

+{

+ "errorClass" : "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ "errorSubClass" : "NON_DETERMINISTIC_LATERAL_SUBQUERIES",

+ "messageParameters" : {

+"treeNode" : ": !LateralJoin lateral-subquery#5 [c1#6 && c2#7], Inner\n:

+- SubqueryAlias __auto_generated_subquery_name\n: +- Project

[(cast((outer(c1#2) + outer(c2#3)) as double) + rand(0)) AS c3#4]\n:+-

OneRowRelation\n+- SubqueryAlias spark_catalog.default.t1\n +- View

(`spark_catalog`.`default`.`t1`, [c1#2,c2#3])\n +- Project [cast(col1#0 as

int) AS c1#2, cast(col2#1 as int) AS c2#3]\n +- LocalRelation [col1#0,

col2#1]\n"

Review Comment:

Yes we did discuss this already in the PR threads :) I suggested eliding the

plan strings given that we have the query context now, but some folks opined

that the strings are helpful for debugging. In the end I struck a compromise by

including the strings but covered by a new SQLConf to toggle their presence.

This new config can remain enabled by default for now in Apache Spark so folks

who are used to the strings for debugging may continue to use them.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971345715

##

sql/core/src/test/resources/sql-tests/results/join-lateral.sql.out:

##

@@ -317,11 +317,20 @@ SELECT * FROM t1, LATERAL (SELECT c1 + c2 + rand(0) AS c3)

struct<>

-- !query output

org.apache.spark.sql.AnalysisException

-Non-deterministic lateral subqueries are not supported when joining with outer

relations that produce more than one row

-SubqueryAlias __auto_generated_subquery_name

-+- Project [(cast((outer(c1#x) + outer(c2#x)) as double) + rand(0)) AS c3#x]

- +- OneRowRelation

-; line 1 pos 9

+{

+ "errorClass" : "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ "errorSubClass" : "NON_DETERMINISTIC_LATERAL_SUBQUERIES",

+ "messageParameters" : {

+"treeNode" : ": !LateralJoin lateral-subquery#5 [c1#6 && c2#7], Inner\n:

+- SubqueryAlias __auto_generated_subquery_name\n: +- Project

[(cast((outer(c1#2) + outer(c2#3)) as double) + rand(0)) AS c3#4]\n:+-

OneRowRelation\n+- SubqueryAlias spark_catalog.default.t1\n +- View

(`spark_catalog`.`default`.`t1`, [c1#2,c2#3])\n +- Project [cast(col1#0 as

int) AS c1#2, cast(col2#1 as int) AS c2#3]\n +- LocalRelation [col1#0,

col2#1]\n"

Review Comment:

Yes we did discuss this already in the PR threads :) I suggested eliding the

plan strings given that we have the query context now, but some folks opined

that the strings are helpful for debugging. In the end I stuck a compromise by

including the strings but covered by a new SQLConf to toggle their presence.

This new config can remain enabled by default for now in Apache Spark so folks

who are used to the strings for debugging may continue to use them.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971116242

##

sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/invalid-correlation.sql.out:

##

@@ -105,14 +131,20 @@ WHERE t1a IN (SELECT t2a

struct<>

-- !query output

org.apache.spark.sql.AnalysisException

-Expressions referencing the outer query are not supported outside of

WHERE/HAVING clauses:

-Aggregate [min(outer(t2a#x)) AS min(outer(t2.t2a))#x]

-+- SubqueryAlias t3

- +- View (`t3`, [t3a#x,t3b#x,t3c#x])

- +- Project [cast(t3a#x as int) AS t3a#x, cast(t3b#x as int) AS t3b#x,

cast(t3c#x as int) AS t3c#x]

- +- Project [t3a#x, t3b#x, t3c#x]

-+- SubqueryAlias t3

- +- LocalRelation [t3a#x, t3b#x, t3c#x]

+{

+ "errorClass" : "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ "errorSubClass" : "UNSUPPORTED_CORRELATED_REFERENCE",

+ "messageParameters" : {

+"planString" : ": Aggregate [min(outer(t2a#6)) AS

min(outer(t2.t2a))#7]\n+- SubqueryAlias t3\n +- View (`t3`,

[t3a#3,t3b#4,t3c#5])\n +- Project [cast(t3a#0 as int) AS t3a#3, cast(t3b#1

as int) AS t3b#4, cast(t3c#2 as int) AS t3c#5]\n +- Project [t3a#0,

t3b#1, t3c#2]\n+- SubqueryAlias t3\n +- LocalRelation

[t3a#0, t3b#1, t3c#2]\n"

Review Comment:

Good Q. I found they are not stable when running the tests outside of my

local machine, therefore to prevent the tests from becoming flaky, I found it

necessary to normalize the expression IDs. I added code in the `failAnalysis`

overload that takes `TreeNode`s to do that, iterating through the trees to

reset the expression IDs. This should be more robust than regex-replacing the

string. We do have the existing `canonicalized` method on logical plans and

expressions, but that uses `transformUp` which is not allowed in the analyzer,

so I ended up doing it separately here for this purpose.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971113842

##

sql/catalyst/src/main/scala/org/apache/spark/sql/errors/QueryCompilationErrors.scala:

##

@@ -1563,10 +1563,12 @@ private[sql] object QueryCompilationErrors extends

QueryErrorsBase {

new AnalysisException(s"'$operation' does not support partitioning")

}

- def mixedRefsInAggFunc(funcStr: String): Throwable = {

-val msg = "Found an aggregate function in a correlated predicate that has

both " +

- "outer and local references, which is not supported: " + funcStr

-new AnalysisException(msg)

+ def mixedRefsInAggFunc(funcStr: String, origin: Origin): Throwable = {

+new AnalysisException(

+ errorClass = "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ errorSubClass = "AGGREGATE_FUNCTION_MIXED_OUTER_LOCAL_REFERENCES",

+ origin = origin,

+ messageParameters = Map("function" -> funcStr))

Review Comment:

Good Q,

Looks like this is a `sql` string generated from a predicate which may

contain multiple functions. It renders from the error-classes.json file like

this:

```

Found an aggregate function in a correlated predicate that has both outer

and local references, which is not supported:

```

It might be better to leave it unquoted since some parts of the predicate

may contain their own double-quotes, e.g. literal string values. Will leave it

alone for now, Serge please comment if you want it to work differently and I

can update it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840:

URL: https://github.com/apache/spark/pull/37840#discussion_r971108777

##

sql/core/src/test/resources/sql-tests/results/udf/udf-except.sql.out:

##

@@ -100,12 +100,17 @@ WHERE udf(t1.v) >= (SELECT min(udf(t2.v))

struct<>

-- !query output

org.apache.spark.sql.AnalysisException

-Correlated column is not allowed in predicate (CAST(udf(cast(k as string)) AS

STRING) = CAST(udf(cast(outer(k#x) as string)) AS STRING)):

-Aggregate [cast(udf(cast(max(cast(udf(cast(v#x as string)) as int)) as

string)) as int) AS udf(max(udf(v)))#x]

-+- Filter (cast(udf(cast(k#x as string)) as string) = cast(udf(cast(outer(k#x)

as string)) as string))

- +- SubqueryAlias t2

- +- View (`t2`, [k#x,v#x])

- +- Project [cast(k#x as string) AS k#x, cast(v#x as int) AS v#x]

-+- Project [k#x, v#x]

- +- SubqueryAlias t2

- +- LocalRelation [k#x, v#x]

+{

+ "errorClass" : "UNSUPPORTED_SUBQUERY_EXPRESSION_CATEGORY",

+ "errorSubClass" : "CORRELATED_COLUMN_IS_NOT_ALLOWED_IN_PREDICATE",

+ "messageParameters" : {

+"planString" : ": (cast(udf(cast(k#0 as string)) as string) =

cast(udf(cast(outer(k#1) as string)) as string))"

Review Comment:

Good point, this comes from expressions rather than plans. Renamed all to

`treeNode` instead of `planString`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #37840: [SPARK-40416][SQL] Move subquery expression CheckAnalysis error messages to use the new error framework

dtenedor commented on code in PR #37840: URL: https://github.com/apache/spark/pull/37840#discussion_r970102088 ## sql/core/src/test/resources/sql-tests/results/join-lateral.sql.out: ## @@ -317,11 +317,18 @@ SELECT * FROM t1, LATERAL (SELECT c1 + c2 + rand(0) AS c3) struct<> -- !query output org.apache.spark.sql.AnalysisException -Non-deterministic lateral subqueries are not supported when joining with outer relations that produce more than one row Review Comment: Hi @allisonwang-db I looked at this and the existing `plan.canonicalized` uses `transformUp` which unfortunately is not allowed during analysis. However I was able to implement this by manually canonicalizing just the expression IDs for purposes of these strings and now the tests should be deterministic. Thanks for the suggestion. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org