[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user LantaoJin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r203584220

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

@wangyum spark.yarn.access.hadoopFileSystems could be set with HA.

For example:

` --conf spark.yarn.access.namenodes hdfs://cluster1-ha,hdfs://cluster2-ha`

in hdfs-site.xml

``

`dfs.nameservices`

`cluster1-ha,cluster2-ha`

``

``

`dfs.ha.namenodes.cluster1-ha`

`nn1,nn2`

``

``

`dfs.ha.namenodes.cluster2-ha`

`nn1,nn2`

``

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r203427395

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

cc @LantaoJin @suxingfate You should be familiar with this.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r203425909

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

Then `spark.yarn.access.hadoopFileSystems` only can be used in filesystems

that without HA.

Because HA filesystem must configure 2 namenodes info in `hdfs-site.xml`.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user jerryshao commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201322643

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

`spark.yarn.access.hadoopFileSystems` is not used as what you think. I

don't think changing the semantics of `spark.yarn.access.hadoopFileSystems` is

a correct way.

Basically your problem is that not all the nameservices are accessible in

federated HDFS, currently the Hadoop token provider will throw an exception and

ignore the following FSs. I think it would be better to try-catch and ignore

bad cluster, that would be more meaningful compared to this fix.

If you don't want to get all tokens from all the nameservices, I think you

should change the hdfs configuration for Spark. Spark assumes that all the

nameservices is accessible. Also token acquisition is happened in application

submission, it is not a big problem whether the fetch is slow or not.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201298533

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

`spark.yarn.access.hadoopFileSystems` is not invalid, it is just needed to

access external cluster, which is what it was created for. Moreover, if you use

viewfs, the same operations are performed under the hood by Hadoop code. So

this seems to be a more general performance/scalability issue on the number of

namespaces we support.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201275550

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

Users should be able to configure their needed FileSystem for better

performance at least.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201271876

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

Now the `spark.yarn.access.hadoopFileSystems` configuration is invalid,

always get all tokens.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user wangyum commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201271407

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

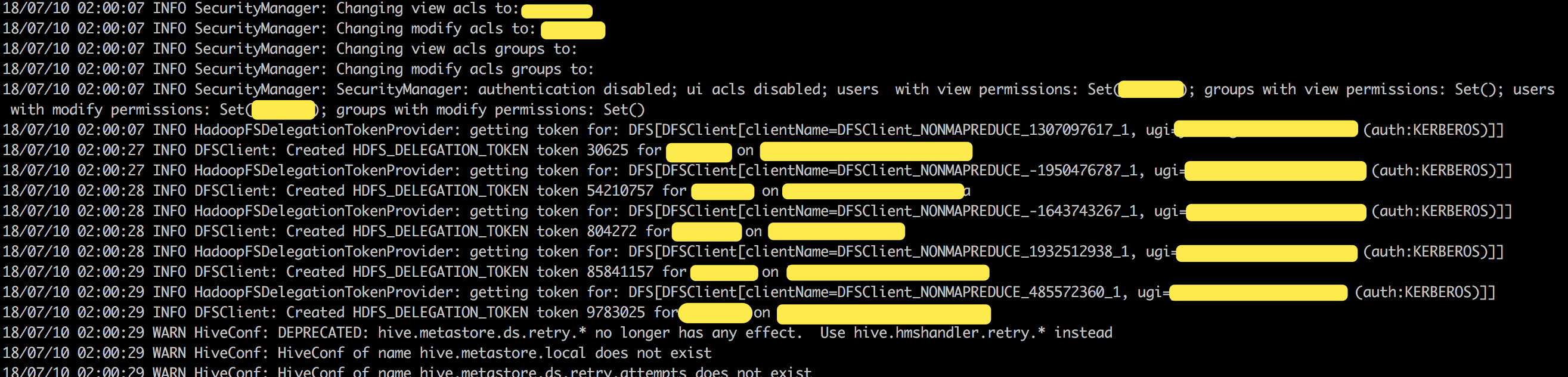

The fetch delegation token is inherently heavy. It took `22 seconds` to get

5 tokens:

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21734#discussion_r201258595

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala

---

@@ -193,8 +193,7 @@ object YarnSparkHadoopUtil {

sparkConf: SparkConf,

hadoopConf: Configuration): Set[FileSystem] = {

val filesystemsToAccess = sparkConf.get(FILESYSTEMS_TO_ACCESS)

- .map(new Path(_).getFileSystem(hadoopConf))

- .toSet

+val isRequestAllDelegationTokens = filesystemsToAccess.isEmpty

--- End diff --

this would mean that if you have your running application accessing

different namespaces and you want to add a new namespace to connect to, if you

just add the namespace you need the application can break as we are not getting

anymore the tokens for the other namespaces.

I'd rather follow @jerryshao's comment about avoiding to crash if the

renewal fails, this seems to fix your problem and it doesn't hurt other

solutions.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21734: [SPARK-24149][YARN][FOLLOW-UP] Add a config to co...

GitHub user wangyum opened a pull request: https://github.com/apache/spark/pull/21734 [SPARK-24149][YARN][FOLLOW-UP] Add a config to control automatic namespaces discovery ## What changes were proposed in this pull request? Our HDFS cluster configured 5 nameservices: `nameservices1`, `nameservices2`, `nameservices3`, `nameservices-dev1` and `nameservices4`, but `nameservices-dev1` unstable. So sometimes an error occurred and causing the entire job failed since [SPARK-24149](https://issues.apache.org/jira/browse/SPARK-24149):  I think it's best to add a switch here. ## How was this patch tested? manual tests You can merge this pull request into a Git repository by running: $ git pull https://github.com/wangyum/spark SPARK-24149 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21734.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21734 commit 888503efe1bbc2afa86b24f15c0413d2c05d Author: Yuming Wang Date: 2018-07-09T06:24:50Z Add spark.yarn.access.all.hadoopFileSystems --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org