[GitHub] [druid] mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service

mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service URL: https://github.com/apache/druid/issues/9343#issuecomment-586257689 Hi @jihoonson * What semantics is guaranteed by the proposed indexing service? I don't think the exactly-once ingestion is possible. And how does the proposed indexing service guarantee it? We are proposing a 2 step approach: - Make a naive pubsub indexing service which provides all the guarantees that a regular pubsub consumer would do - that is, at-least once message semantics. This would in in-line with any normal pubsub consumer would work. - Do some basic research into how systems such as dataflow achieve exactly once processing with pubsub. It is clearly possible to achieve this, since dataflow does it with pubsub (although the details of precisely how are not yet clear to us). This will be more of an exploratory work. * Description on the overall algorithm including what the supervisor and its tasks do, respectively. - The Supervisor looks pretty similar to the KafkaStreamSupervisor's basic functions - creation and management of tasks - If more tasks are required to maintain active task count, it submits new tasks. - A single task would be doing the following basic things: - Connects to a pubsub subscription - Pull in a batch from pubsub (relevant tuning parameters should be available in config) - Packets are handed off for persistence. - On successfully persisting, send back an ACK message to pubsub for the batch. * Does the proposed indexing service provide linear scalability? If so, how does it provide? The service can keep launching new tasks to process data from subscriptions, as needed. The supervisor can do periodic checks for pubsub metrics, and if the rate of message consumption is falling behind compared to the production rate, it can launch new tasks across the cluster. * How does it handle transient failures such as task failures? - If a task fails before a successful ACK has been sent out, it should be reprocessed. - Data successfully persisted, but ACK delivery fails. In this case, we would want to introduce a retry policy. - In case of permanent failure, pubsub would redeliver the message, which is in line with the at-least once guarantee of the indexing service. * Exactly Once case: I think it's fair to say we currently don't have an extremely clear understanding of making exactly once work, but we know other systems do claim to provide those guarantees. I'm interested in trying to see if we can achieve the same with Druid, but for that to happen, the foundation as described above needs to be built first, IMHO. There are unanswered questions here that we haven't fleshed out yet. Would be happy to brainstorm. :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service

mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service URL: https://github.com/apache/druid/issues/9343#issuecomment-586257689 Hi @jihoonson * What semantics is guaranteed by the proposed indexing service? I don't think the exactly-once ingestion is possible. And how does the proposed indexing service guarantee it? We are proposing a 2 step approach: - Make a naive pubsub indexing service which provides all the guarantees that a regular pubsub consumer would do - that is, at-least once message semantics. This would in in-line with any normal pubsub consumer would work. - Do some basic research into how systems such as dataflow achieve exactly once processing with pubsub. It is clearly possible to achieve this, since dataflow does it with pubsub (although the details of precisely how are not yet clear to us). This will be more of an exploratory work. * Description on the overall algorithm including what the supervisor and its tasks do, respectively. - The Supervisor looks pretty similar to the KafkaStreamSupervisor's basic functions - creation and management of tasks - If more tasks are required to maintain active task count, it submits new tasks. - A single task would be doing the following basic things: - Connects to a pubsub subscription - Pull in a batch from pubsub (relevant tuning parameters should be available in config) - Packets are handed off for persistence. - On successfully persisting, send back an ACK message to pubsub for the batch. * Does the proposed indexing service provide linear scalability? If so, how does it provide? The service can keep launching new tasks to process data from subscriptions, as needed. The supervisor can do periodic checks for pubsub metrics, and if the rate of message consumption is falling behind compared to the production rate, it can launch new tasks across the cluster. * How does it handle transient failures such as task failures? - If a task fails before a successful ACK has been sent out, it should be reprocessed. - Data successfully persisted, but ACK delivery fails. In this case, we would want to introduce a retry policy. - In case of permanent failure, pubsub would redeliver the message, which is in line with the at-least once guarantee of the indexing service. * Exactly Once case: I think it's fair to say we currently don't have an extremely clear understanding of making exactly once work, but we know other systems do claim to provide those guarantees. I'm interested in trying to see if we can achieve the same with Druid, but for that to happen, the foundation as described above needs to be built first, IMHO. There are unanswered questions here that we haven't fleshed out yet. Would be happy to brainstorm. :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service

mgill25 edited a comment on issue #9343: [Proposal] Pubsub Indexing Service URL: https://github.com/apache/druid/issues/9343#issuecomment-586257689 Hi @jihoonson * What semantics is guaranteed by the proposed indexing service? I don't think the exactly-once ingestion is possible. And how does the proposed indexing service guarantee it? We are proposing a 2 step approach: * Make a naive pubsub indexing service which provides all the guarantees that a regular pubsub consumer would do - that is, at-least once message semantics. This would in in-line with any normal pubsub consumer would work. * Do some basic research into how systems such as dataflow achieve exactly once processing with pubsub. It is clearly possible to achieve this, since dataflow does it with pubsub (although the details of precisely how are not yet clear to us). This will be more of an exploratory work. * Description on the overall algorithm including what the supervisor and its tasks do, respectively. - The Supervisor looks pretty similar to the KafkaStreamSupervisor's basic functions - creation and management of tasks - If more tasks are required to maintain active task count, it submits new tasks. - A single task would be doing the following basic things: - Connects to a pubsub subscription - Pull in a batch from pubsub (relevant tuning parameters should be available in config) - Packets are handed off for persistence. - On successfully persisting, send back an ACK message to pubsub for the batch. * Does the proposed indexing service provide linear scalability? If so, how does it provide? The service can keep launching new tasks to process data from subscriptions, as needed. The supervisor can do periodic checks for pubsub metrics, and if the rate of message consumption is falling behind compared to the production rate, it can launch new tasks across the cluster. * How does it handle transient failures such as task failures? - If a task fails before a successful ACK has been sent out, it should be reprocessed. - Data successfully persisted, but ACK delivery fails. In this case, we would want to introduce a retry policy. - In case of permanent failure, pubsub would redeliver the message, which is in line with the at-least once guarantee of the indexing service. * Exactly Once case: I think it's fair to say we currently don't have an extremely clear understanding of making exactly once work, but we know other systems do claim to provide those guarantees. I'm interested in trying to see if we can achieve the same with Druid, but for that to happen, the foundation as described above needs to be built first, IMHO. There are unanswered questions here that we haven't fleshed out yet. Would be happy to brainstorm. :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] himanshug commented on issue #8728: Initial join support

himanshug commented on issue #8728: Initial join support URL: https://github.com/apache/druid/issues/8728#issuecomment-586353610 @gianm This sounds great and thanks for writing https://gist.github.com/gianm/39548daef74f0373b3c87056e3db4627 . While I am reading through everything trying to understand details, I am realizing it would be useful to have a doc describing current limitations of this work (e.g. Druid table joins requiring shuffle not supported yet) and examples of SQL and Druid Native queries that showcase use cases which were not possible before but are supported now, or older way was not efficient/user-friendly and new way of writing that query makes things efficient/user-friendly . This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] mgill25 commented on issue #9343: [Proposal] Pubsub Indexing Service

mgill25 commented on issue #9343: [Proposal] Pubsub Indexing Service URL: https://github.com/apache/druid/issues/9343#issuecomment-586257689 Hi @jihoonson * What semantics is guaranteed by the proposed indexing service? I don't think the exactly-once ingestion is possible. And how does the proposed indexing service guarantee it? We are proposing a 2 step approach: 1. Make a naive pubsub indexing service which provides all the guarantees that a regular pubsub consumer would do - that is, at-least once message semantics. This would in in-line with any normal pubsub consumer would work. 2. Do some basic research into how systems such as dataflow achieve exactly once processing with pubsub. It is clearly possible to achieve this, since dataflow does it with pubsub (although the details of precisely how are not yet clear to us). This will be more of an exploratory work. * Description on the overall algorithm including what the supervisor and its tasks do, respectively. - The Supervisor looks pretty similar to the KafkaStreamSupervisor's basic functions - creation and management of tasks - If more tasks are required to maintain active task count, it submits new tasks. - A single task would be doing the following basic things: - Connects to a pubsub subscription - Pull in a batch from pubsub (relevant tuning parameters should be available in config) - Packets are handed off for persistence. - On successfully persisting, send back an ACK message to pubsub for the batch. * Does the proposed indexing service provide linear scalability? If so, how does it provide? The service can keep launching new tasks to process data from subscriptions, as needed. The supervisor can do periodic checks for pubsub metrics, and if the rate of message consumption is falling behind compared to the production rate, it can launch new tasks across the cluster. * How does it handle transient failures such as task failures? - If a task fails before a successful ACK has been sent out, it should be reprocessed. - Data successfully persisted, but ACK delivery fails. In this case, we would want to introduce a retry policy. - In case of permanent failure, pubsub would redeliver the message, which is in line with the at-least once guarantee of the indexing service. * Exactly Once case: I think it's fair to say we currently don't have an extremely clear understanding of making exactly once work, but we know other systems do claim to provide those guarantees. I'm interested in trying to see if we can achieve the same with Druid, but for that to happen, the foundation as described above needs to be built first, IMHO. There are unanswered questions here that we haven't fleshed out yet. Would be happy to brainstorm. :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] himanshug commented on issue #9351: Adding CredentialsProvider, deprecating PasswordProvider

himanshug commented on issue #9351: Adding CredentialsProvider, deprecating

PasswordProvider

URL: https://github.com/apache/druid/issues/9351#issuecomment-58634

> Hmm, should one CredentialsProvider be able to handle multiple secrets?

Would you tell me some examples?

For example,

https://github.com/apache/druid/blob/master/extensions-core/simple-client-sslcontext/src/main/java/org/apache/druid/https/SSLClientConfig.java

has three secrets which could be logically related

Also, now that I remember, even the interface `String getPassword(String

key);` would have problems, only way to prevent the problem mentioned in #7400

would be to have an interface like below ( as suggested in

https://github.com/apache/druid/issues/#issuecomment-486398615 )

```

interface CredentialsProvider {

Map getCredentials();

}

```

as you wanna get a snapshot of secrets in single call , if the code needs to

make two/multiple calls to `CredentialsProvider` then you would continue to

have the race mentioned in #7400

hopefully it made sense or I need to be more descriptive :)

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] a2l007 opened a new pull request #9365: Fix compatibility issues with SqlFirehose

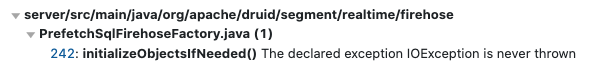

a2l007 opened a new pull request #9365: Fix compatibility issues with SqlFirehose URL: https://github.com/apache/druid/pull/9365 Fixes #9359. ### Description `SqlFirehoseFactory` is now fixed to be compatible with the deprecated `FiniteFirehoseFactory` . Will look to add `InputSource` and `InputFormat` support soon. This PR has: - [x] been self-reviewed. - [x] been tested in a test Druid cluster. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] himanshug commented on issue #8989: Overlord can support many autoscalers of different categories

himanshug commented on issue #8989: Overlord can support many autoscalers of different categories URL: https://github.com/apache/druid/pull/8989#issuecomment-586381063 I will try and review #9350 over the weekend or next week. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] himanshug commented on issue #6493: Support for request logging in kafka emitter.

himanshug commented on issue #6493: Support for request logging in kafka emitter. URL: https://github.com/apache/druid/pull/6493#issuecomment-586370967 this looks like very close indeed, @mkuthan there is at least one more person ( @Raboo ) needing this , if you wanna revive this PR , I can help review and get in. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] sthetland opened a new pull request #9366: Remove references to Docker Machine

sthetland opened a new pull request #9366: Remove references to Docker Machine

URL: https://github.com/apache/druid/pull/9366

Removing a broken link to an obsolete repo.

While at it, removing references to Docker Machine, which was obsolete as of

Docker v1.12 (avail. 2016). This version introduced Docker as native MacOS and

Windows apps.

Fixes #.

### Description

Fixed the bug ...

Renamed the class ...

Added a forbidden-apis entry ...

This PR has:

- [ ] been self-reviewed.

- [ ] using the [concurrency

checklist](https://github.com/apache/druid/blob/master/dev/code-review/concurrency.md)

(Remove this item if the PR doesn't have any relation to concurrency.)

- [ ] added documentation for new or modified features or behaviors.

- [ ] added Javadocs for most classes and all non-trivial methods. Linked

related entities via Javadoc links.

- [ ] added or updated version, license, or notice information in

[licenses.yaml](https://github.com/apache/druid/blob/master/licenses.yaml)

- [ ] added comments explaining the "why" and the intent of the code

wherever would not be obvious for an unfamiliar reader.

- [ ] added unit tests or modified existing tests to cover new code paths.

- [ ] added integration tests.

- [ ] been tested in a test Druid cluster.

# Key changed/added classes in this PR

* `MyFoo`

* `OurBar`

* `TheirBaz`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] himanshug commented on issue #8992: druid extension for OpenID Connect auth using pac4j lib

himanshug commented on issue #8992: druid extension for OpenID Connect auth using pac4j lib URL: https://github.com/apache/druid/pull/8992#issuecomment-586509109 this has been successfully running in our prod for a while now. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] jihoonson commented on issue #9365: Fix compatibility issues with SqlFirehose

jihoonson commented on issue #9365: Fix compatibility issues with SqlFirehose URL: https://github.com/apache/druid/pull/9365#issuecomment-586484839 Would you fix the TC failure?  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic

parameters

URL: https://github.com/apache/druid/pull/6974#discussion_r379706535

##

File path: sql/src/main/java/org/apache/druid/sql/calcite/planner/Calcites.java

##

@@ -397,4 +402,44 @@ public static int collapseFetch(int innerFetch, int

outerFetch, int outerOffset)

}

return fetch;

}

+

+ public static Class sqlTypeNameJdbcToJavaClass(SqlTypeName typeName)

+ {

+JDBCType jdbcType = JDBCType.valueOf(typeName.getJdbcOrdinal());

+switch (jdbcType) {

+ case CHAR:

+ case VARCHAR:

+ case LONGVARCHAR:

+return String.class;

+ case NUMERIC:

+ case DECIMAL:

+return BigDecimal.class;

+ case BIT:

+return Boolean.class;

+ case TINYINT:

+return Byte.class;

+ case SMALLINT:

+return Short.class;

+ case INTEGER:

+return Integer.class;

+ case BIGINT:

+return Long.class;

+ case REAL:

+return Float.class;

+ case FLOAT:

Review comment:

I think a comment for why this is not `Float.class` would be helpful

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic

parameters

URL: https://github.com/apache/druid/pull/6974#discussion_r379704623

##

File path: docs/querying/sql.md

##

@@ -607,7 +641,7 @@ Properties connectionProperties = new Properties();

try (Connection connection = DriverManager.getConnection(url,

connectionProperties)) {

try (

final Statement statement = connection.createStatement();

- final ResultSet resultSet = statement.executeQuery(query)

+ final ResultSet resultSet = statement.executeQuery(query);

Review comment:

I think the original example is better as the semicolon is not needed

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic

parameters

URL: https://github.com/apache/druid/pull/6974#discussion_r379705718

##

File path: sql/src/main/java/org/apache/druid/sql/avatica/DruidStatement.java

##

@@ -152,27 +162,36 @@ public DruidStatement prepare(

try {

ensure(State.NEW);

sqlLifecycle.initialize(query, queryContext);

-sqlLifecycle.planAndAuthorize(authenticationResult);

+

+this.authenticationResult = authenticationResult;

+PrepareResult prepareResult =

sqlLifecycle.prepare(authenticationResult);

this.maxRowCount = maxRowCount;

this.query = query;

+ArrayList params = new ArrayList<>();

Review comment:

Suggestion: Declare as `List` instead of `ArrayList` to separate behavior

from implementation

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic parameters URL: https://github.com/apache/druid/pull/6974#discussion_r379704878 ## File path: extensions-core/druid-bloom-filter/src/test/java/org/apache/druid/query/filter/sql/BloomDimFilterSqlTest.java ## @@ -273,10 +275,67 @@ public void testBloomFilters() throws Exception ); } + @Test Review comment: Per https://github.com/apache/druid/pull/6974#issuecomment-459578848, it'd be good to ignore this slow test This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic parameters URL: https://github.com/apache/druid/pull/6974#discussion_r379706863 ## File path: sql/src/main/java/org/apache/druid/sql/http/SqlQuery.java ## @@ -85,13 +110,14 @@ public boolean equals(final Object o) return header == sqlQuery.header && Objects.equals(query, sqlQuery.query) && resultFormat == sqlQuery.resultFormat && - Objects.equals(context, sqlQuery.context); + Objects.equals(context, sqlQuery.context) && Review comment: Suggest adding an `EqualsVerifier` unit test for this This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic

parameters

URL: https://github.com/apache/druid/pull/6974#discussion_r379706847

##

File path: sql/src/main/java/org/apache/druid/sql/http/SqlParameter.java

##

@@ -0,0 +1,126 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.druid.sql.http;

+

+import com.fasterxml.jackson.annotation.JsonCreator;

+import com.fasterxml.jackson.annotation.JsonIgnore;

+import com.fasterxml.jackson.annotation.JsonProperty;

+import com.google.common.base.Preconditions;

+import org.apache.calcite.avatica.ColumnMetaData;

+import org.apache.calcite.avatica.SqlType;

+import org.apache.calcite.avatica.remote.TypedValue;

+import org.apache.calcite.runtime.SqlFunctions;

+import org.apache.calcite.util.TimestampString;

+import org.apache.druid.java.util.common.DateTimes;

+

+import java.sql.Date;

+import java.util.Objects;

+

+public class SqlParameter

+{

+ private final SqlType type;

+ private final Object value;

+

+ @JsonCreator

+ public SqlParameter(

+ @JsonProperty("type") SqlType type,

+ @JsonProperty("value") Object value

+ )

+ {

+this.type = Preconditions.checkNotNull(type);

+this.value = Preconditions.checkNotNull(value);

+ }

+

+ @JsonProperty

+ public Object getValue()

+ {

+return value;

+ }

+

+ @JsonProperty

+ public SqlType getType()

+ {

+return type;

+ }

+

+ @JsonIgnore

+ public TypedValue getTypedValue()

+ {

+

+Object adjustedValue = value;

+

+// perhaps there is a better way to do this?

+if (type == SqlType.TIMESTAMP) {

+ // TypedValue.create for TIMESTAMP expects a long...

+ // but be lenient try to accept iso format and sql 'timestamp' format\

+ if (value instanceof String) {

+try {

+ adjustedValue = DateTimes.of((String) value).getMillis();

+}

+catch (IllegalArgumentException ignore) {

+}

+try {

+ adjustedValue = new TimestampString((String)

value).getMillisSinceEpoch();

+}

+catch (IllegalArgumentException ignore) {

+}

+ }

+} else if (type == SqlType.DATE) {

+ // TypedValue.create for DATE expects calcites internal int

representation of sql dates

+ // but be lenient try to accept sql date '-MM-dd' format and convert

to internal calcite int representation

+ if (value instanceof String) {

+try {

+ adjustedValue = SqlFunctions.toInt(Date.valueOf((String) value));

+}

+catch (IllegalArgumentException ignore) {

+}

+ }

+}

+return

TypedValue.create(ColumnMetaData.Rep.nonPrimitiveRepOf(type).name(),

adjustedValue);

+ }

+

+ @Override

+ public String toString()

+ {

+return "SqlParameter{" +

+ ", value={" + type.name() + ',' + value + '}' +

+ '}';

+ }

+

+ @Override

+ public boolean equals(Object o)

Review comment:

Suggest adding an `EqualsVerifier` unit test for this

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[druid-website] branch download-footer-fix created (now b433ace)

This is an automated email from the ASF dual-hosted git repository. cwylie pushed a change to branch download-footer-fix in repository https://gitbox.apache.org/repos/asf/druid-website.git. at b433ace remove direct download link from footer, cleanup stray incubating references This branch includes the following new commits: new b433ace remove direct download link from footer, cleanup stray incubating references The 1 revisions listed above as "new" are entirely new to this repository and will be described in separate emails. The revisions listed as "add" were already present in the repository and have only been added to this reference. - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #6974: sql support for dynamic parameters

ccaominh commented on a change in pull request #6974: sql support for dynamic

parameters

URL: https://github.com/apache/druid/pull/6974#discussion_r379707606

##

File path:

sql/src/test/java/org/apache/druid/sql/calcite/CalciteParameterQueryTest.java

##

@@ -0,0 +1,616 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.druid.sql.calcite;

+

+import com.google.common.collect.ImmutableList;

+import org.apache.calcite.avatica.SqlType;

+import org.apache.druid.common.config.NullHandling;

+import org.apache.druid.java.util.common.DateTimes;

+import org.apache.druid.java.util.common.Intervals;

+import org.apache.druid.java.util.common.granularity.Granularities;

+import org.apache.druid.query.Druids;

+import org.apache.druid.query.aggregation.CountAggregatorFactory;

+import org.apache.druid.query.aggregation.DoubleSumAggregatorFactory;

+import org.apache.druid.query.aggregation.FilteredAggregatorFactory;

+import org.apache.druid.query.dimension.DefaultDimensionSpec;

+import org.apache.druid.query.groupby.GroupByQuery;

+import org.apache.druid.query.ordering.StringComparators;

+import org.apache.druid.query.scan.ScanQuery;

+import org.apache.druid.segment.column.ValueType;

+import org.apache.druid.sql.calcite.filtration.Filtration;

+import org.apache.druid.sql.calcite.util.CalciteTests;

+import org.apache.druid.sql.http.SqlParameter;

+import org.junit.Test;

+

+/**

+ * This class has copied a subset of the tests in {@link CalciteQueryTest} and

replaced various parts of queries with

+ * dynamic parameters. It is NOT important that this file remains in sync with

{@link CalciteQueryTest}, the tests

+ * were merely chosen to produce a selection of parameter types and positions

within query expressions and have been

+ * renamed to reflect this

+ */

+public class CalciteParameterQueryTest extends BaseCalciteQueryTest

+{

+ private final boolean useDefault = NullHandling.replaceWithDefault();

Review comment:

Would it be beneficial to test binding a null?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[druid-website-src] 01/01: remove direct download from footer, incubating cleanup

This is an automated email from the ASF dual-hosted git repository.

cwylie pushed a commit to branch download-footer-fix

in repository https://gitbox.apache.org/repos/asf/druid-website-src.git

commit ccca5bcb3819b94be35f54b0bdfbeb7dbfa37d41

Author: Clint Wylie

AuthorDate: Fri Feb 14 17:34:09 2020 -0800

remove direct download from footer, incubating cleanup

---

_includes/news-list.html | 2 +-

_includes/page_footer.html | 3 +--

2 files changed, 2 insertions(+), 3 deletions(-)

diff --git a/_includes/news-list.html b/_includes/news-list.html

index 72a8bc3..0338bbc 100644

--- a/_includes/news-list.html

+++ b/_includes/news-list.html

@@ -12,7 +12,7 @@

{% if ctr < max %}

{% assign ctr = ctr | plus:1 %}

-https://github.com/apache/incubator-druid/releases/tag/druid-{{

release.version }}">

+https://github.com/apache/druid/releases/tag/druid-{{

release.version }}">

Apache Druid {{ release.version | remove:

"-incubating"}} Released

{{ release.date | date: "%b %e %Y" }}

diff --git a/_includes/page_footer.html b/_includes/page_footer.html

index 0ccbef1..e46436b 100644

--- a/_includes/page_footer.html

+++ b/_includes/page_footer.html

@@ -15,8 +15,7 @@

https://groups.google.com/forum/#!forum/druid-user; target="_blank">·

https://twitter.com/druidio;

target="_blank">·

-https://www.apache.org/dyn/closer.cgi?path=/incubator/druid/{{

site.druid_versions[0].versions[0].version }}/apache-druid-{{

site.druid_versions[0].versions[0].version }}-bin.tar.gz" target="_blank">·

-https://github.com/apache/incubator-druid;

target="_blank">

+https://github.com/apache/druid;

target="_blank">

Copyright © 2020 https://www.apache.org/; target="_blank">Apache

Software Foundation.

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[druid-website-src] branch download-footer-fix created (now ccca5bc)

This is an automated email from the ASF dual-hosted git repository. cwylie pushed a change to branch download-footer-fix in repository https://gitbox.apache.org/repos/asf/druid-website-src.git. at ccca5bc remove direct download from footer, incubating cleanup This branch includes the following new commits: new ccca5bc remove direct download from footer, incubating cleanup The 1 revisions listed above as "new" are entirely new to this repository and will be described in separate emails. The revisions listed as "add" were already present in the repository and have only been added to this reference. - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid-website] clintropolis opened a new pull request #71: remove direct download link from footer, cleanup stray incubating references

clintropolis opened a new pull request #71: remove direct download link from footer, cleanup stray incubating references URL: https://github.com/apache/druid-website/pull/71 https://github.com/apache/druid-website-src/pull/108 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid-website-src] clintropolis opened a new pull request #108: remove direct download from footer, incubating cleanup

clintropolis opened a new pull request #108: remove direct download from footer, incubating cleanup URL: https://github.com/apache/druid-website-src/pull/108 Fixes https://github.com/apache/druid/issues/9264 by removing the link from the footer This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] jihoonson closed issue #9359: SQL firehose error

jihoonson closed issue #9359: SQL firehose error URL: https://github.com/apache/druid/issues/9359 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] jihoonson merged pull request #9365: Fix compatibility issues with SqlFirehose

jihoonson merged pull request #9365: Fix compatibility issues with SqlFirehose URL: https://github.com/apache/druid/pull/9365 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[druid] branch master updated (2e54755 -> 043abd5)

This is an automated email from the ASF dual-hosted git repository. jihoonson pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/druid.git. from 2e54755 add docker tutorial, friendlier docker-compose.yml, experimental java 11 dockerfile (#9262) add 043abd5 Fix compatibility issues with SqlFirehose (#9365) No new revisions were added by this update. Summary of changes: .../firehose/PrefetchSqlFirehoseFactory.java | 33 -- .../realtime/firehose/SqlFirehoseFactory.java | 19 + 2 files changed, 50 insertions(+), 2 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #9353: Inject things instead of subclassing everything for parallel task testing

ccaominh commented on a change in pull request #9353: Inject things instead of

subclassing everything for parallel task testing

URL: https://github.com/apache/druid/pull/9353#discussion_r379695817

##

File path:

indexing-service/src/main/java/org/apache/druid/indexing/common/task/batch/parallel/HttpShuffleClient.java

##

@@ -0,0 +1,78 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.druid.indexing.common.task.batch.parallel;

+

+import com.google.inject.Inject;

+import org.apache.druid.guice.annotations.EscalatedClient;

+import org.apache.druid.java.util.common.FileUtils;

+import org.apache.druid.java.util.common.StringUtils;

+import org.apache.druid.java.util.http.client.HttpClient;

+import org.apache.druid.java.util.http.client.Request;

+import

org.apache.druid.java.util.http.client.response.InputStreamResponseHandler;

+import org.jboss.netty.handler.codec.http.HttpMethod;

+

+import java.io.File;

+import java.io.IOException;

+import java.net.URI;

+import java.util.concurrent.ExecutionException;

+

+public class HttpShuffleClient implements ShuffleClient

+{

+ private static final int BUFFER_SIZE = 1024 * 4;

+ private static final int NUM_FETCH_RETRIES = 3;

+

+ private final HttpClient httpClient;

+

+ @Inject

+ public HttpShuffleClient(@EscalatedClient HttpClient httpClient)

+ {

+this.httpClient = httpClient;

+ }

+

+ @Override

+ public > File fetchSegmentFile(

+ File partitionDir,

+ String supervisorTaskId,

+ P location

+ ) throws IOException

+ {

+final byte[] buffer = new byte[BUFFER_SIZE];

Review comment:

This was previously a class member variable, so it was only allocated once.

Is there a reason that's changed here?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #9353: Inject things instead of subclassing everything for parallel task testing

ccaominh commented on a change in pull request #9353: Inject things instead of

subclassing everything for parallel task testing

URL: https://github.com/apache/druid/pull/9353#discussion_r379695930

##

File path:

indexing-service/src/main/java/org/apache/druid/indexing/common/task/batch/parallel/HttpShuffleClient.java

##

@@ -0,0 +1,78 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.druid.indexing.common.task.batch.parallel;

+

+import com.google.inject.Inject;

+import org.apache.druid.guice.annotations.EscalatedClient;

+import org.apache.druid.java.util.common.FileUtils;

+import org.apache.druid.java.util.common.StringUtils;

+import org.apache.druid.java.util.http.client.HttpClient;

+import org.apache.druid.java.util.http.client.Request;

+import

org.apache.druid.java.util.http.client.response.InputStreamResponseHandler;

+import org.jboss.netty.handler.codec.http.HttpMethod;

+

+import java.io.File;

+import java.io.IOException;

+import java.net.URI;

+import java.util.concurrent.ExecutionException;

+

+public class HttpShuffleClient implements ShuffleClient

+{

+ private static final int BUFFER_SIZE = 1024 * 4;

+ private static final int NUM_FETCH_RETRIES = 3;

+

+ private final HttpClient httpClient;

+

+ @Inject

+ public HttpShuffleClient(@EscalatedClient HttpClient httpClient)

+ {

+this.httpClient = httpClient;

+ }

+

+ @Override

+ public > File fetchSegmentFile(

Review comment:

Is it worth adding unit tests for this method?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #9353: Inject things instead of subclassing everything for parallel task testing

ccaominh commented on a change in pull request #9353: Inject things instead of

subclassing everything for parallel task testing

URL: https://github.com/apache/druid/pull/9353#discussion_r379703293

##

File path:

indexing-service/src/test/java/org/apache/druid/indexing/common/task/batch/parallel/SinglePhaseParallelIndexingTest.java

##

@@ -197,62 +182,52 @@ private void testRunAndOverwrite(@Nullable Interval

inputInterval, Granularity s

}

@Test

- public void testWithoutInterval() throws Exception

+ public void testWithoutInterval()

{

testRunAndOverwrite(null, Granularities.DAY);

}

@Test()

- public void testRunInParallel() throws Exception

+ public void testRunInParallel()

{

// Ingest all data.

-testRunAndOverwrite(Intervals.of("2017/2018"), Granularities.DAY);

+testRunAndOverwrite(Intervals.of("2017-12/P1M"), Granularities.DAY);

Review comment:

If the interval had been a named constant for the class, then updating it

for all of the tests would have been easier. Perhaps it's worth creating the

named constant now.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #9353: Inject things instead of subclassing everything for parallel task testing

ccaominh commented on a change in pull request #9353: Inject things instead of

subclassing everything for parallel task testing

URL: https://github.com/apache/druid/pull/9353#discussion_r379702277

##

File path:

indexing-service/src/test/java/org/apache/druid/indexing/common/task/batch/parallel/AbstractParallelIndexSupervisorTaskTest.java

##

@@ -35,53 +43,80 @@

import org.apache.druid.data.input.impl.TimestampSpec;

import org.apache.druid.indexer.RunnerTaskState;

import org.apache.druid.indexer.TaskLocation;

-import org.apache.druid.indexer.TaskState;

import org.apache.druid.indexer.TaskStatus;

import org.apache.druid.indexer.TaskStatusPlus;

+import org.apache.druid.indexing.common.RetryPolicyConfig;

+import org.apache.druid.indexing.common.RetryPolicyFactory;

+import org.apache.druid.indexing.common.SegmentLoaderFactory;

import org.apache.druid.indexing.common.TaskInfoProvider;

import org.apache.druid.indexing.common.TaskToolbox;

+import org.apache.druid.indexing.common.TestUtils;

+import org.apache.druid.indexing.common.actions.TaskActionClient;

import org.apache.druid.indexing.common.config.TaskConfig;

import

org.apache.druid.indexing.common.stats.DropwizardRowIngestionMetersFactory;

+import org.apache.druid.indexing.common.stats.RowIngestionMetersFactory;

+import org.apache.druid.indexing.common.task.CompactionTask;

import org.apache.druid.indexing.common.task.IndexTaskClientFactory;

import org.apache.druid.indexing.common.task.IngestionTestBase;

import org.apache.druid.indexing.common.task.NoopTestTaskReportFileWriter;

import org.apache.druid.indexing.common.task.Task;

import org.apache.druid.indexing.common.task.TaskResource;

import org.apache.druid.indexing.common.task.TestAppenderatorsManager;

+import org.apache.druid.indexing.overlord.Segments;

import org.apache.druid.indexing.worker.IntermediaryDataManager;

import org.apache.druid.indexing.worker.config.WorkerConfig;

import org.apache.druid.java.util.common.DateTimes;

+import org.apache.druid.java.util.common.IAE;

import org.apache.druid.java.util.common.ISE;

import org.apache.druid.java.util.common.concurrent.Execs;

+import org.apache.druid.java.util.common.logger.Logger;

+import org.apache.druid.math.expr.ExprMacroTable;

import org.apache.druid.metadata.EntryExistsException;

+import org.apache.druid.query.expression.LookupEnabledTestExprMacroTable;

+import org.apache.druid.segment.IndexIO;

import org.apache.druid.segment.join.NoopJoinableFactory;

+import org.apache.druid.segment.loading.LocalDataSegmentPuller;

import org.apache.druid.segment.loading.LocalDataSegmentPusher;

import org.apache.druid.segment.loading.LocalDataSegmentPusherConfig;

import org.apache.druid.segment.loading.NoopDataSegmentKiller;

import org.apache.druid.segment.loading.StorageLocationConfig;

+import org.apache.druid.segment.realtime.appenderator.AppenderatorsManager;

import org.apache.druid.segment.realtime.appenderator.SegmentIdWithShardSpec;

+import org.apache.druid.segment.realtime.firehose.ChatHandlerProvider;

import org.apache.druid.segment.realtime.firehose.NoopChatHandlerProvider;

import org.apache.druid.server.DruidNode;

import org.apache.druid.server.security.AllowAllAuthorizer;

+import org.apache.druid.server.security.AuthConfig;

import org.apache.druid.server.security.Authorizer;

import org.apache.druid.server.security.AuthorizerMapper;

+import org.apache.druid.timeline.DataSegment;

+import org.apache.druid.timeline.SegmentId;

+import org.checkerframework.checker.nullness.qual.MonotonicNonNull;

import org.joda.time.DateTime;

import org.joda.time.Duration;

+import org.joda.time.Interval;

+import org.junit.After;

+import org.junit.Before;

import org.junit.Rule;

import org.junit.rules.TemporaryFolder;

import javax.annotation.Nullable;

import java.io.File;

import java.io.IOException;

import java.util.Arrays;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.List;

import java.util.Map;

+import java.util.Set;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ConcurrentMap;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.Future;

public class AbstractParallelIndexSupervisorTaskTest extends IngestionTestBase

{

+ static final String DISABLE_INJECT_CONTEXT_KEY = "disableInject";

Review comment:

Suggestion: Rename to something like `DISABLE_TASK_INJECT_CONTEXT_KEY`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org

For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] ccaominh commented on a change in pull request #9353: Inject things instead of subclassing everything for parallel task testing

ccaominh commented on a change in pull request #9353: Inject things instead of

subclassing everything for parallel task testing

URL: https://github.com/apache/druid/pull/9353#discussion_r379702969

##

File path:

indexing-service/src/test/java/org/apache/druid/indexing/common/task/batch/parallel/AbstractParallelIndexSupervisorTaskTest.java

##

@@ -151,127 +195,196 @@ protected void initializeIntermediaryDataManager()

throws IOException

),

null

);

+LocalShuffleClient shuffleClient = new

LocalShuffleClient(intermediaryDataManager);

+coordinatorClient = new LocalCoordinatorClient();

+prepareObjectMapper(

+objectMapper,

+getIndexIO(),

+indexingServiceClient,

+indexTaskClientFactory,

+shuffleClient,

+coordinatorClient

+);

}

- public class LocalIndexingServiceClient extends NoopIndexingServiceClient

+ @After

+ public void tearDownAbstractParallelIndexSupervisorTaskTest()

+ {

+taskRunner.shutdown();

+temporaryFolder.delete();

+ }

+

+ protected LocalIndexingServiceClient getIndexingServiceClient()

+ {

+return indexingServiceClient;

+ }

+

+ protected IndexTaskClientFactory

getParallelIndexTaskClientFactory()

+ {

+return indexTaskClientFactory;

+ }

+

+ protected CoordinatorClient getCoordinatorClient()

{

-private final ConcurrentMap> tasks = new

ConcurrentHashMap<>();

+return coordinatorClient;

+ }

+

+ private static class TaskContainer

+ {

+private final Task task;

+@MonotonicNonNull

+private volatile Future statusFuture;

+@MonotonicNonNull

+private volatile TestLocalTaskActionClient actionClient;

+

+private TaskContainer(Task task)

+{

+ this.task = task;

+}

+

+private void setStatusFuture(Future statusFuture)

+{

+ this.statusFuture = statusFuture;

+}

+

+private void setActionClient(TestLocalTaskActionClient actionClient)

+{

+ this.actionClient = actionClient;

+}

+ }

+

+ public class SimpleThreadingTaskRunner

+ {

+private final ConcurrentMap tasks = new

ConcurrentHashMap<>();

private final ListeningExecutorService service =

MoreExecutors.listeningDecorator(

-Execs.multiThreaded(5, "parallel-index-supervisor-task-test-%d")

+Execs.multiThreaded(5, "simple-threading-task-runner-%d")

);

-@Override

-public String runTask(Object taskObject)

+public String run(Task task)

+{

+ runTask(task);

+ return task.getId();

+}

+

+private TaskStatus runAndWait(Task task)

{

- final Task subTask = (Task) taskObject;

try {

-getTaskStorage().insert(subTask, TaskStatus.running(subTask.getId()));

+return runTask(task).get();

}

- catch (EntryExistsException e) {

+ catch (InterruptedException e) {

+Thread.currentThread().interrupt();

+throw new RuntimeException(e);

+ }

+ catch (ExecutionException e) {

throw new RuntimeException(e);

}

+}

- // WARNING: In production, subtasks are created via HTTP calls and

instantiated by Jackson, which means they

- // cannot share objects like they can here. For example, if the indexing

task uses JsonParseSpec, the same

- // JSONFlattenerMaker instance is shared among subtasks, which is bad

since JSONFlattenerMaker is not thread-safe.

- tasks.put(subTask.getId(), service.submit(() -> {

-try {

- final TaskToolbox toolbox = createTaskToolbox(subTask);

- if (subTask.isReady(toolbox.getTaskActionClient())) {

-return subTask.run(toolbox);

- } else {

-getTaskStorage().setStatus(TaskStatus.failure(subTask.getId()));

-throw new ISE("task[%s] is not ready", subTask.getId());

- }

-}

-catch (Exception e) {

- getTaskStorage().setStatus(TaskStatus.failure(subTask.getId(),

e.getMessage()));

- throw new RuntimeException(e);

+private TaskStatus waitToFinish(Task task)

+{

+ final TaskContainer taskContainer = tasks.get(task.getId());

+ if (taskContainer == null) {

+throw new IAE("Unknown task[%s]", task.getId());

+ }

+ try {

+while (taskContainer.statusFuture == null &&

!Thread.currentThread().isInterrupted()) {

+ Thread.sleep(10);

}

- }));

- return subTask.getId();

+return taskContainer.statusFuture.get();

Review comment:

Previously, tests would be able to specify a timeout, which is useful for

failing tests sooner than the travis timeout.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [druid] mrsrinivas commented on issue #9163: Improved the readability and fixed few java warnings

mrsrinivas commented on issue #9163: Improved the readability and fixed few java warnings URL: https://github.com/apache/druid/pull/9163#issuecomment-586563485 @gianm thank you for the review. I fixed it. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] clintropolis opened a new pull request #9363: fix druid-sql Expressions.toQueryGranularity to be more correct

clintropolis opened a new pull request #9363: fix druid-sql Expressions.toQueryGranularity to be more correct URL: https://github.com/apache/druid/pull/9363 Also improves javadocs of `Expr.getIdentifierIfIdentifier` and `Expr.getBindingIfIdentifier`. This isn't an actual bug because the expressions that `Expressions.toQueryGranularity` can recognize and transform are limited to cases where the time floor expressions argument `Identifier.identifier` will always match `IdentifierExpr.binding`, but changed anyway to be more consistent with intention of the method. Related #9362 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] BigRantLing opened a new issue #9364: DirectDruidClient openConnections may be samller than the real connctions between the servers

BigRantLing opened a new issue #9364: DirectDruidClient openConnections may be samller than the real connctions between the servers URL: https://github.com/apache/druid/issues/9364 Hi guys, Our Druid version is 0.9.2. When I was going to make some changs to code of DirectDruidClient , I found some original code confuse me. The **openConnections** is a variable means the connctions ammount of queryable servers. And it will increase after broker requesting a server.But it only represent the connection ammount between this broker and the server requested. When we use **ConnectionCountServerSelectorStrategy** to decide server should be requested,the broker will choose the server which openConnections is small. But, the real active connection ammount between the sever which has been selected and all brokers may be bigger than **openConnections**. Is that right? Thanks for reply. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] Raboo commented on issue #6493: Support for request logging in kafka emitter.

Raboo commented on issue #6493: Support for request logging in kafka emitter. URL: https://github.com/apache/druid/pull/6493#issuecomment-586205200 This is sad that this pr never was merged. It would be awesome if someone would pick this up. I can contribute with lots of emojis if that happens! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] stale[bot] closed pull request #8893: Break up docker environment config file

stale[bot] closed pull request #8893: Break up docker environment config file URL: https://github.com/apache/druid/pull/8893 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org

[GitHub] [druid] stale[bot] commented on issue #8893: Break up docker environment config file

stale[bot] commented on issue #8893: Break up docker environment config file URL: https://github.com/apache/druid/pull/8893#issuecomment-586206540 This pull request/issue has been closed due to lack of activity. If you think that is incorrect, or the pull request requires review, you can revive the PR at any time. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@druid.apache.org For additional commands, e-mail: commits-h...@druid.apache.org