[jira] [Commented] (HBASE-25651) NORMALIZER_TARGET_REGION_SIZE needs a unit in its name

[

https://issues.apache.org/jira/browse/HBASE-25651?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361379#comment-17361379

]

Rahul Kumar commented on HBASE-25651:

-

Sure [~ndimiduk], will do.

> NORMALIZER_TARGET_REGION_SIZE needs a unit in its name

> --

>

> Key: HBASE-25651

> URL: https://issues.apache.org/jira/browse/HBASE-25651

> Project: HBase

> Issue Type: Bug

> Components: Normalizer

>Affects Versions: 2.3.0

>Reporter: Nick Dimiduk

>Assignee: Rahul Kumar

>Priority: Major

> Fix For: 3.0.0-alpha-1

>

>

> On a per-table basis, the normalizer can be configured with a specific region

> size target via {{NORMALIZER_TARGET_REGION_SIZE}}. This value is used in a

> context where size values are in the megabyte scale. However, this

> configuration name does not mention "megabyte" or "mb" anywhere, so it's very

> easy for an operator to get the configured value wrong by many orders of

> magnitude.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HBASE-25596) Fix NPE in ReplicationSourceManager as well as avoid permanently unreplicated data due to EOFException from WAL

[

https://issues.apache.org/jira/browse/HBASE-25596?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Duo Zhang updated HBASE-25596:

--

Component/s: Replication

> Fix NPE in ReplicationSourceManager as well as avoid permanently unreplicated

> data due to EOFException from WAL

> ---

>

> Key: HBASE-25596

> URL: https://issues.apache.org/jira/browse/HBASE-25596

> Project: HBase

> Issue Type: Bug

> Components: Replication

>Reporter: Sandeep Pal

>Assignee: Sandeep Pal

>Priority: Critical

> Fix For: 3.0.0-alpha-1, 1.7.0, 2.5.0, 2.4.2

>

>

> There seems to be a major issue with how we handle the EOF exception from

> WALEntryStream.

> Problem:

> When we see EOFException, we try to handle it and remove it from the log

> queue, but we never try to ship the existing batch of entries. *This is a

> permanent data loss in replication.*

>

> Secondly, we do not stop the reader on encountering the EOFException and thus

> if EOFException was on the last WAL, we still try to process the WALEntry

> stream and ship the empty batch with lastWALPath set to null. This is the

> reason of NPE as below which *crash* the region server.

> {code:java}

> 2021-02-16 15:33:21,293 ERROR [,60020,1613262147968]

> regionserver.ReplicationSource - Unexpected exception in

> ReplicationSourceWorkerThread,

> currentPath=nulljava.lang.NullPointerExceptionat

> org.apache.hadoop.hbase.replication.regionserver.ReplicationSourceManager.logPositionAndCleanOldLogs(ReplicationSourceManager.java:193)at

>

> org.apache.hadoop.hbase.replication.regionserver.ReplicationSource$ReplicationSourceShipperThread.updateLogPosition(ReplicationSource.java:831)at

>

> org.apache.hadoop.hbase.replication.regionserver.ReplicationSource$ReplicationSourceShipperThread.shipEdits(ReplicationSource.java:746)at

>

> org.apache.hadoop.hbase.replication.regionserver.ReplicationSource$ReplicationSourceShipperThread.run(ReplicationSource.java:650)2021-02-16

> 15:33:21,294 INFO [,60020,1613262147968] regionserver.HRegionServer -

> STOPPED: Unexpected exception in ReplicationSourceWorkerThread

> {code}

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HBASE-25967) The readRequestsCount does not calculate when the outResults is empty

[

https://issues.apache.org/jira/browse/HBASE-25967?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361344#comment-17361344

]

Hudson commented on HBASE-25967:

Results for branch branch-2.4

[build #140 on

builds.a.o|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.4/140/]:

(/) *{color:green}+1 overall{color}*

details (if available):

(/) {color:green}+1 general checks{color}

-- For more information [see general

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.4/140/General_20Nightly_20Build_20Report/]

(/) {color:green}+1 jdk8 hadoop2 checks{color}

-- For more information [see jdk8 (hadoop2)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.4/140/JDK8_20Nightly_20Build_20Report_20_28Hadoop2_29/]

(/) {color:green}+1 jdk8 hadoop3 checks{color}

-- For more information [see jdk8 (hadoop3)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.4/140/JDK8_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 jdk11 hadoop3 checks{color}

-- For more information [see jdk11

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.4/140/JDK11_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 source release artifact{color}

-- See build output for details.

(/) {color:green}+1 client integration test{color}

> The readRequestsCount does not calculate when the outResults is empty

> -

>

> Key: HBASE-25967

> URL: https://issues.apache.org/jira/browse/HBASE-25967

> Project: HBase

> Issue Type: Bug

> Components: metrics

>Reporter: Zheng Wang

>Assignee: Zheng Wang

>Priority: Major

> Fix For: 2.5.0, 2.3.6, 3.0.0-alpha-2, 2.4.5

>

>

> This metric is about request, so should not depend on the result.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HBASE-25649) Complete the work on moving all the balancer related classes to hbase-balancer module

[ https://issues.apache.org/jira/browse/HBASE-25649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361339#comment-17361339 ] Clara Xiong commented on HBASE-25649: - I see. I do have more pr in the pipeline and other observability pr that I want to back port, backporting every single one seems a lot of overhead. But I see your point there was already another refactoring than this one, may be I will just do a refactoring to align. > Complete the work on moving all the balancer related classes to > hbase-balancer module > - > > Key: HBASE-25649 > URL: https://issues.apache.org/jira/browse/HBASE-25649 > Project: HBase > Issue Type: Umbrella > Components: Balancer >Reporter: Duo Zhang >Assignee: Duo Zhang >Priority: Major > Fix For: 3.0.0-alpha-1 > > > This is the follow up issue of HBASE-23933, where we set up the new > hbase-balancer module and moved several classes into it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-25924) Seeing a spike in uncleanlyClosedWALs metric.

[

https://issues.apache.org/jira/browse/HBASE-25924?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361335#comment-17361335

]

Hudson commented on HBASE-25924:

Results for branch branch-2.3

[build #235 on

builds.a.o|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/]:

(/) *{color:green}+1 overall{color}*

details (if available):

(/) {color:green}+1 general checks{color}

-- For more information [see general

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/General_20Nightly_20Build_20Report/]

(/) {color:green}+1 jdk8 hadoop2 checks{color}

-- For more information [see jdk8 (hadoop2)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK8_20Nightly_20Build_20Report_20_28Hadoop2_29/]

(/) {color:green}+1 jdk8 hadoop3 checks{color}

-- For more information [see jdk8 (hadoop3)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK8_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 jdk11 hadoop3 checks{color}

-- For more information [see jdk11

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK11_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 source release artifact{color}

-- See build output for details.

(/) {color:green}+1 client integration test{color}

> Seeing a spike in uncleanlyClosedWALs metric.

> -

>

> Key: HBASE-25924

> URL: https://issues.apache.org/jira/browse/HBASE-25924

> Project: HBase

> Issue Type: Bug

> Components: Replication, wal

>Affects Versions: 3.0.0-alpha-1, 1.7.0, 2.5.0, 2.4.4

>Reporter: Rushabh Shah

>Assignee: Rushabh Shah

>Priority: Major

> Fix For: 3.0.0-alpha-1, 2.5.0, 2.4.4, 1.7.1

>

>

> Getting the following log line in all of our production clusters when

> WALEntryStream is dequeuing WAL file.

> {noformat}

> 2021-05-02 04:01:30,437 DEBUG [04901996] regionserver.WALEntryStream -

> Reached the end of WAL file hdfs://. It was not closed

> cleanly, so we did not parse 8 bytes of data. This is normally ok.

> {noformat}

> The 8 bytes are usually the trailer serialized size (SIZE_OF_INT (4bytes) +

> "LAWP" (4 bytes) = 8 bytes)

> While dequeue'ing the WAL file from WALEntryStream, we reset the reader here.

> [WALEntryStream|https://github.com/apache/hbase/blob/branch-1/hbase-server/src/main/java/org/apache/hadoop/hbase/replication/regionserver/WALEntryStream.java#L199-L221]

> {code:java}

> private void tryAdvanceEntry() throws IOException {

> if (checkReader()) {

> readNextEntryAndSetPosition();

> if (currentEntry == null) { // no more entries in this log file - see

> if log was rolled

> if (logQueue.getQueue(walGroupId).size() > 1) { // log was rolled

> // Before dequeueing, we should always get one more attempt at

> reading.

> // This is in case more entries came in after we opened the reader,

> // and a new log was enqueued while we were reading. See HBASE-6758

> resetReader(); ---> HERE

> readNextEntryAndSetPosition();

> if (currentEntry == null) {

> if (checkAllBytesParsed()) { // now we're certain we're done with

> this log file

> dequeueCurrentLog();

> if (openNextLog()) {

> readNextEntryAndSetPosition();

> }

> }

> }

> } // no other logs, we've simply hit the end of the current open log.

> Do nothing

> }

> }

> // do nothing if we don't have a WAL Reader (e.g. if there's no logs in

> queue)

> }

> {code}

> In resetReader, we call the following methods, WALEntryStream#resetReader

> > ProtobufLogReader#reset ---> ProtobufLogReader#initInternal.

> In ProtobufLogReader#initInternal, we try to create the whole reader object

> from scratch to see if any new data has been written.

> We reset all the fields of ProtobufLogReader except for ReaderBase#fileLength.

> We calculate whether trailer is present or not depending on fileLength.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HBASE-25993) Make excluded SSL cipher suites configurable for all Web UIs

[

https://issues.apache.org/jira/browse/HBASE-25993?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361336#comment-17361336

]

Hudson commented on HBASE-25993:

Results for branch branch-2.3

[build #235 on

builds.a.o|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/]:

(/) *{color:green}+1 overall{color}*

details (if available):

(/) {color:green}+1 general checks{color}

-- For more information [see general

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/General_20Nightly_20Build_20Report/]

(/) {color:green}+1 jdk8 hadoop2 checks{color}

-- For more information [see jdk8 (hadoop2)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK8_20Nightly_20Build_20Report_20_28Hadoop2_29/]

(/) {color:green}+1 jdk8 hadoop3 checks{color}

-- For more information [see jdk8 (hadoop3)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK8_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 jdk11 hadoop3 checks{color}

-- For more information [see jdk11

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2.3/235/JDK11_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 source release artifact{color}

-- See build output for details.

(/) {color:green}+1 client integration test{color}

> Make excluded SSL cipher suites configurable for all Web UIs

>

>

> Key: HBASE-25993

> URL: https://issues.apache.org/jira/browse/HBASE-25993

> Project: HBase

> Issue Type: Improvement

>Affects Versions: 3.0.0-alpha-1, 2.2.7, 2.5.0, 2.3.5, 2.4.4

>Reporter: Mate Szalay-Beko

>Assignee: Mate Szalay-Beko

>Priority: Major

> Fix For: 3.0.0-alpha-1, 2.5.0, 2.3.6, 2.4.5

>

>

> When starting a jetty http server, one can explicitly exclude certain

> (unsecure) SSL cipher suites. This can be especially important, when the

> HBase cluster needs to be compliant with security regulations (e.g. FIPS).

> Currently it is possible to set the excluded ciphers for the ThriftServer

> ("hbase.thrift.ssl.exclude.cipher.suites") or for the RestServer

> ("hbase.rest.ssl.exclude.cipher.suites"), but one can not configure it for

> the regular InfoServer started by e.g. the master or region servers.

> In this commit I want to introduce a new configuration

> "ssl.server.exclude.cipher.list" to configure the excluded cipher suites for

> the http server started by the InfoServer. This parameter has the same name

> and will work in the same way, as it was already implemented in hadoop (e.g.

> for hdfs/yarn). See: HADOOP-12668, HADOOP-14341

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Resolved] (HBASE-25947) Backport 'HBASE-25894 Improve the performance for region load and region count related cost functions' to branch-2.4 and branch-2.3

[ https://issues.apache.org/jira/browse/HBASE-25947?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Duo Zhang resolved HBASE-25947. --- Hadoop Flags: Reviewed Resolution: Fixed Pushed to branch-2.4 and branch-2.3. Thanks all for reviewing. > Backport 'HBASE-25894 Improve the performance for region load and region > count related cost functions' to branch-2.4 and branch-2.3 > --- > > Key: HBASE-25947 > URL: https://issues.apache.org/jira/browse/HBASE-25947 > Project: HBase > Issue Type: Sub-task > Components: Balancer, Performance >Reporter: Duo Zhang >Assignee: Duo Zhang >Priority: Major > Fix For: 2.3.6, 2.4.5 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HBASE-24984) WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used with multi operation

[ https://issues.apache.org/jira/browse/HBASE-24984?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Duo Zhang updated HBASE-24984: -- Fix Version/s: 2.4.5 2.3.6 2.5.0 3.0.0-alpha-1 > WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used > with multi operation > -- > > Key: HBASE-24984 > URL: https://issues.apache.org/jira/browse/HBASE-24984 > Project: HBase > Issue Type: Bug > Components: rpc, wal >Affects Versions: 2.1.6 >Reporter: Liu Junhong >Priority: Critical > Fix For: 3.0.0-alpha-1, 2.5.0, 2.3.6, 2.4.5 > > > After bugfix HBASE-22539, When client use BufferedMutator or multiple > mutation , there will be one RpcCall and mutliple FSWALEntry . At the time > RpcCall finish and one FSWALEntry call release() , the remain FSWALEntries > may trigger RuntimeException or segmentation fault . > We should use RefCnt instead of AtomicInteger for > org.apache.hadoop.hbase.ipc.ServerCall.reference? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-24984) WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used with multi operation

[ https://issues.apache.org/jira/browse/HBASE-24984?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361322#comment-17361322 ] Duo Zhang commented on HBASE-24984: --- I think first we could introduce a UT to reproduce this bug, and then let's find a proper way to fix. > WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used > with multi operation > -- > > Key: HBASE-24984 > URL: https://issues.apache.org/jira/browse/HBASE-24984 > Project: HBase > Issue Type: Bug > Components: rpc, wal >Affects Versions: 2.1.6 >Reporter: Liu Junhong >Priority: Critical > > After bugfix HBASE-22539, When client use BufferedMutator or multiple > mutation , there will be one RpcCall and mutliple FSWALEntry . At the time > RpcCall finish and one FSWALEntry call release() , the remain FSWALEntries > may trigger RuntimeException or segmentation fault . > We should use RefCnt instead of AtomicInteger for > org.apache.hadoop.hbase.ipc.ServerCall.reference? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HBASE-24984) WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used with multi operation

[ https://issues.apache.org/jira/browse/HBASE-24984?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Duo Zhang updated HBASE-24984: -- Priority: Critical (was: Major) > WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used > with multi operation > -- > > Key: HBASE-24984 > URL: https://issues.apache.org/jira/browse/HBASE-24984 > Project: HBase > Issue Type: Bug > Components: rpc, wal >Affects Versions: 2.1.6 >Reporter: Liu Junhong >Priority: Critical > > After bugfix HBASE-22539, When client use BufferedMutator or multiple > mutation , there will be one RpcCall and mutliple FSWALEntry . At the time > RpcCall finish and one FSWALEntry call release() , the remain FSWALEntries > may trigger RuntimeException or segmentation fault . > We should use RefCnt instead of AtomicInteger for > org.apache.hadoop.hbase.ipc.ServerCall.reference? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-25649) Complete the work on moving all the balancer related classes to hbase-balancer module

[ https://issues.apache.org/jira/browse/HBASE-25649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361318#comment-17361318 ] Duo Zhang commented on HBASE-25649: --- [~claraxiong] See HBASE-25947, I've backported the most important performance improvements to branch-2.4 and branch-2.3. And it is already one branch-2. For me, as the main work is based on the previous refactoring of moving rs group related code to hbase-server, which is only done on master branch, so I'm not sure what is the best way to land the refactoring in this issue to branch-2. If you have clear requirements, just go ahead take the backport work. Thanks. > Complete the work on moving all the balancer related classes to > hbase-balancer module > - > > Key: HBASE-25649 > URL: https://issues.apache.org/jira/browse/HBASE-25649 > Project: HBase > Issue Type: Umbrella > Components: Balancer >Reporter: Duo Zhang >Assignee: Duo Zhang >Priority: Major > Fix For: 3.0.0-alpha-1 > > > This is the follow up issue of HBASE-23933, where we set up the new > hbase-balancer module and moved several classes into it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (HBASE-25995) Change the method name for DoubleArrayCost.setCosts

Duo Zhang created HBASE-25995: - Summary: Change the method name for DoubleArrayCost.setCosts Key: HBASE-25995 URL: https://issues.apache.org/jira/browse/HBASE-25995 Project: HBase Issue Type: Improvement Components: Balancer Environment: Reporter: Duo Zhang Assignee: Duo Zhang See this discussion. https://github.com/apache/hbase/pull/3276#discussion_r643448466 It is a little strange to have setter to pass in a function. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (HBASE-25649) Complete the work on moving all the balancer related classes to hbase-balancer module

[ https://issues.apache.org/jira/browse/HBASE-25649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361303#comment-17361303 ] Clara Xiong edited comment on HBASE-25649 at 6/10/21, 11:48 PM: Hi [~zhangduo] The refactoring was great. The module in a much better state. May I ask this to be back ported to branch 2? There have been/is to be a few balancer improvements after this work that projects on release 2, like our production on a large hbase cluster, will benefit. Without back porting this work, it will become very laborious and risky. If you don't have the time, I will take it. was (Author: claraxiong): Hi [~zhangduo] The refactoring was great. The module in a much better state. May I ask this to be back ported to branch 2? There have been/is to be a few balancer improvements after this work that projects on release 2 will benefit. Without back porting this work, it will become very laborious and risky. If you don't have the time, I will take it. > Complete the work on moving all the balancer related classes to > hbase-balancer module > - > > Key: HBASE-25649 > URL: https://issues.apache.org/jira/browse/HBASE-25649 > Project: HBase > Issue Type: Umbrella > Components: Balancer >Reporter: Duo Zhang >Assignee: Duo Zhang >Priority: Major > Fix For: 3.0.0-alpha-1 > > > This is the follow up issue of HBASE-23933, where we set up the new > hbase-balancer module and moved several classes into it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-25649) Complete the work on moving all the balancer related classes to hbase-balancer module

[ https://issues.apache.org/jira/browse/HBASE-25649?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361303#comment-17361303 ] Clara Xiong commented on HBASE-25649: - Hi [~zhangduo] The refactoring was great. The module in a much better state. May I ask this to be back ported to branch 2? There have been/is to be a few balancer improvements after this work that projects on release 2 will benefit. Without back porting this work, it will become very laborious and risky. If you don't have the time, I will take it. > Complete the work on moving all the balancer related classes to > hbase-balancer module > - > > Key: HBASE-25649 > URL: https://issues.apache.org/jira/browse/HBASE-25649 > Project: HBase > Issue Type: Umbrella > Components: Balancer >Reporter: Duo Zhang >Assignee: Duo Zhang >Priority: Major > Fix For: 3.0.0-alpha-1 > > > This is the follow up issue of HBASE-23933, where we set up the new > hbase-balancer module and moved several classes into it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hbase] Apache-HBase commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

Apache-HBase commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-859138246 :confetti_ball: **+1 overall** | Vote | Subsystem | Runtime | Comment | |::|--:|:|:| | +0 :ok: | reexec | 1m 5s | Docker mode activated. | | -0 :warning: | yetus | 0m 3s | Unprocessed flag(s): --brief-report-file --spotbugs-strict-precheck --whitespace-eol-ignore-list --whitespace-tabs-ignore-list --quick-hadoopcheck | ||| _ Prechecks _ | ||| _ master Compile Tests _ | | +1 :green_heart: | mvninstall | 3m 55s | master passed | | +1 :green_heart: | compile | 1m 1s | master passed | | +1 :green_heart: | shadedjars | 8m 14s | branch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 38s | master passed | ||| _ Patch Compile Tests _ | | +1 :green_heart: | mvninstall | 3m 44s | the patch passed | | +1 :green_heart: | compile | 1m 0s | the patch passed | | +1 :green_heart: | javac | 1m 0s | the patch passed | | +1 :green_heart: | shadedjars | 8m 12s | patch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 37s | the patch passed | ||| _ Other Tests _ | | +1 :green_heart: | unit | 151m 45s | hbase-server in the patch passed. | | | | 182m 31s | | | Subsystem | Report/Notes | |--:|:-| | Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/artifact/yetus-jdk8-hadoop3-check/output/Dockerfile | | GITHUB PR | https://github.com/apache/hbase/pull/3377 | | Optional Tests | javac javadoc unit shadedjars compile | | uname | Linux c84a74b15cab 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux | | Build tool | maven | | Personality | dev-support/hbase-personality.sh | | git revision | master / 6b81ff94a5 | | Default Java | AdoptOpenJDK-1.8.0_282-b08 | | Test Results | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/testReport/ | | Max. process+thread count | 4157 (vs. ulimit of 3) | | modules | C: hbase-server U: hbase-server | | Console output | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/console | | versions | git=2.17.1 maven=3.6.3 | | Powered by | Apache Yetus 0.12.0 https://yetus.apache.org | This message was automatically generated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] Apache-HBase commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

Apache-HBase commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-859132011 :confetti_ball: **+1 overall** | Vote | Subsystem | Runtime | Comment | |::|--:|:|:| | +0 :ok: | reexec | 0m 29s | Docker mode activated. | | -0 :warning: | yetus | 0m 4s | Unprocessed flag(s): --brief-report-file --spotbugs-strict-precheck --whitespace-eol-ignore-list --whitespace-tabs-ignore-list --quick-hadoopcheck | ||| _ Prechecks _ | ||| _ master Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 34s | master passed | | +1 :green_heart: | compile | 1m 15s | master passed | | +1 :green_heart: | shadedjars | 8m 15s | branch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 41s | master passed | ||| _ Patch Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 15s | the patch passed | | +1 :green_heart: | compile | 1m 13s | the patch passed | | +1 :green_heart: | javac | 1m 13s | the patch passed | | +1 :green_heart: | shadedjars | 8m 14s | patch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 40s | the patch passed | ||| _ Other Tests _ | | +1 :green_heart: | unit | 137m 49s | hbase-server in the patch passed. | | | | 169m 46s | | | Subsystem | Report/Notes | |--:|:-| | Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/artifact/yetus-jdk11-hadoop3-check/output/Dockerfile | | GITHUB PR | https://github.com/apache/hbase/pull/3377 | | Optional Tests | javac javadoc unit shadedjars compile | | uname | Linux 9b7e823c972c 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux | | Build tool | maven | | Personality | dev-support/hbase-personality.sh | | git revision | master / 6b81ff94a5 | | Default Java | AdoptOpenJDK-11.0.10+9 | | Test Results | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/testReport/ | | Max. process+thread count | 4587 (vs. ulimit of 3) | | modules | C: hbase-server U: hbase-server | | Console output | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/console | | versions | git=2.17.1 maven=3.6.3 | | Powered by | Apache Yetus 0.12.0 https://yetus.apache.org | This message was automatically generated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] apurtell commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-859055987 Checkstyle nit already addressed (c2a3a90). Whitespace nit noted, will fix if there is a round of review or at commit time. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] Apache-HBase commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

Apache-HBase commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-859054066 :confetti_ball: **+1 overall** | Vote | Subsystem | Runtime | Comment | |::|--:|:|:| | +0 :ok: | reexec | 1m 17s | Docker mode activated. | ||| _ Prechecks _ | | +1 :green_heart: | dupname | 0m 0s | No case conflicting files found. | | +1 :green_heart: | hbaseanti | 0m 0s | Patch does not have any anti-patterns. | | +1 :green_heart: | @author | 0m 0s | The patch does not contain any @author tags. | ||| _ master Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 32s | master passed | | +1 :green_heart: | compile | 3m 28s | master passed | | +1 :green_heart: | checkstyle | 1m 13s | master passed | | +1 :green_heart: | spotbugs | 2m 18s | master passed | ||| _ Patch Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 7s | the patch passed | | +1 :green_heart: | compile | 3m 30s | the patch passed | | +1 :green_heart: | javac | 3m 30s | the patch passed | | -0 :warning: | checkstyle | 1m 12s | hbase-server: The patch generated 1 new + 5 unchanged - 0 fixed = 6 total (was 5) | | -0 :warning: | whitespace | 0m 0s | The patch has 1 line(s) that end in whitespace. Use git apply --whitespace=fix <>. Refer https://git-scm.com/docs/git-apply | | +1 :green_heart: | hadoopcheck | 21m 8s | Patch does not cause any errors with Hadoop 3.1.2 3.2.1 3.3.0. | | +1 :green_heart: | spotbugs | 2m 31s | the patch passed | ||| _ Other Tests _ | | +1 :green_heart: | asflicense | 0m 14s | The patch does not generate ASF License warnings. | | | | 55m 18s | | | Subsystem | Report/Notes | |--:|:-| | Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/artifact/yetus-general-check/output/Dockerfile | | GITHUB PR | https://github.com/apache/hbase/pull/3377 | | Optional Tests | dupname asflicense javac spotbugs hadoopcheck hbaseanti checkstyle compile | | uname | Linux 67912efbd034 4.15.0-136-generic #140-Ubuntu SMP Thu Jan 28 05:20:47 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux | | Build tool | maven | | Personality | dev-support/hbase-personality.sh | | git revision | master / 6b81ff94a5 | | Default Java | AdoptOpenJDK-1.8.0_282-b08 | | checkstyle | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/artifact/yetus-general-check/output/diff-checkstyle-hbase-server.txt | | whitespace | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/artifact/yetus-general-check/output/whitespace-eol.txt | | Max. process+thread count | 85 (vs. ulimit of 3) | | modules | C: hbase-server U: hbase-server | | Console output | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3377/1/console | | versions | git=2.17.1 maven=3.6.3 spotbugs=4.2.2 | | Powered by | Apache Yetus 0.12.0 https://yetus.apache.org | This message was automatically generated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HBASE-25984) FSHLog WAL lockup with sync future reuse [RS deadlock]

[

https://issues.apache.org/jira/browse/HBASE-25984?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Andrew Kyle Purtell updated HBASE-25984:

Status: Patch Available (was: Open)

> FSHLog WAL lockup with sync future reuse [RS deadlock]

> --

>

> Key: HBASE-25984

> URL: https://issues.apache.org/jira/browse/HBASE-25984

> Project: HBase

> Issue Type: Bug

> Components: regionserver, wal

>Affects Versions: 3.0.0-alpha-1, 1.7.0, 2.5.0, 2.4.5

>Reporter: Bharath Vissapragada

>Assignee: Bharath Vissapragada

>Priority: Critical

> Labels: deadlock, hang

> Attachments: HBASE-25984-unit-test.patch

>

>

> We use FSHLog as the WAL implementation (branch-1 based) and under heavy load

> we noticed the WAL system gets locked up due to a subtle bug involving racy

> code with sync future reuse. This bug applies to all FSHLog implementations

> across branches.

> Symptoms:

> On heavily loaded clusters with large write load we noticed that the region

> servers are hanging abruptly with filled up handler queues and stuck MVCC

> indicating appends/syncs not making any progress.

> {noformat}

> WARN [8,queue=9,port=60020] regionserver.MultiVersionConcurrencyControl -

> STUCK for : 296000 millis.

> MultiVersionConcurrencyControl{readPoint=172383686, writePoint=172383690,

> regionName=1ce4003ab60120057734ffe367667dca}

> WARN [6,queue=2,port=60020] regionserver.MultiVersionConcurrencyControl -

> STUCK for : 296000 millis.

> MultiVersionConcurrencyControl{readPoint=171504376, writePoint=171504381,

> regionName=7c441d7243f9f504194dae6bf2622631}

> {noformat}

> All the handlers are stuck waiting for the sync futures and timing out.

> {noformat}

> java.lang.Object.wait(Native Method)

>

> org.apache.hadoop.hbase.regionserver.wal.SyncFuture.get(SyncFuture.java:183)

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog.blockOnSync(FSHLog.java:1509)

> .

> {noformat}

> Log rolling is stuck because it was unable to attain a safe point

> {noformat}

>java.util.concurrent.CountDownLatch.await(CountDownLatch.java:277)

> org.apache.hadoop.hbase.regionserver.wal.FSHLog$SafePointZigZagLatch.waitSafePoint(FSHLog.java:1799)

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog.replaceWriter(FSHLog.java:900)

> {noformat}

> and the Ring buffer consumer thinks that there are some outstanding syncs

> that need to finish..

> {noformat}

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog$RingBufferEventHandler.attainSafePoint(FSHLog.java:2031)

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog$RingBufferEventHandler.onEvent(FSHLog.java:1999)

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog$RingBufferEventHandler.onEvent(FSHLog.java:1857)

> {noformat}

> On the other hand, SyncRunner threads are idle and just waiting for work

> implying that there are no pending SyncFutures that need to be run

> {noformat}

>sun.misc.Unsafe.park(Native Method)

> java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

>

> java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2039)

>

> java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:442)

>

> org.apache.hadoop.hbase.regionserver.wal.FSHLog$SyncRunner.run(FSHLog.java:1297)

> java.lang.Thread.run(Thread.java:748)

> {noformat}

> Overall the WAL system is dead locked and could make no progress until it was

> aborted. I got to the bottom of this issue and have a patch that can fix it

> (more details in the comments due to word limit in the description).

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HBASE-25994) Active WAL tailing fails when WAL value compression is enabled

[ https://issues.apache.org/jira/browse/HBASE-25994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Andrew Kyle Purtell updated HBASE-25994: Affects Version/s: 3.0.0-alpha-1 > Active WAL tailing fails when WAL value compression is enabled > -- > > Key: HBASE-25994 > URL: https://issues.apache.org/jira/browse/HBASE-25994 > Project: HBase > Issue Type: Bug >Affects Versions: 3.0.0-alpha-1, 2.5.0 >Reporter: Andrew Kyle Purtell >Assignee: Andrew Kyle Purtell >Priority: Major > Fix For: 3.0.0-alpha-1, 2.5.0 > > > Depending on which compression codec is used, a short read of the compressed > bytes can cause catastrophic errors that confuse the WAL reader. This problem > can manifest when the reader is actively tailing the WAL for replication. The > input stream's available() method sometimes lies so cannot be relied upon. To > avoid these issues, when WAL value compression is enabled, ensure all bytes > of the compressed value are read before submitting the payload to the > decompressor. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-25957) Back port HBASE-25924 and its related patches to branch-2.3

[ https://issues.apache.org/jira/browse/HBASE-25957?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361206#comment-17361206 ] Rushabh Shah commented on HBASE-25957: -- Just noticed this Jira today. The branches have diverged a lot. It is not trivial work to backport them easily. Unfortunately don't have cycles in few days. Will get back to it later. [~bharathv] [|https://issues.apache.org/jira/secure/AddComment!default.jspa?id=13380557] > Back port HBASE-25924 and its related patches to branch-2.3 > --- > > Key: HBASE-25957 > URL: https://issues.apache.org/jira/browse/HBASE-25957 > Project: HBase > Issue Type: Sub-task > Components: metrics, Replication, wal >Affects Versions: 2.3.6 >Reporter: Bharath Vissapragada >Priority: Major > > The branch has diverged a bit and the patches are not applying clean. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-25924) Seeing a spike in uncleanlyClosedWALs metric.

[

https://issues.apache.org/jira/browse/HBASE-25924?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361205#comment-17361205

]

Rushabh Shah commented on HBASE-25924:

--

The branches have diverged a lot. It is not trivial work to backport them

easily. Unfortunately don't have cycles in few days. Will get back to it later.

> Seeing a spike in uncleanlyClosedWALs metric.

> -

>

> Key: HBASE-25924

> URL: https://issues.apache.org/jira/browse/HBASE-25924

> Project: HBase

> Issue Type: Bug

> Components: Replication, wal

>Affects Versions: 3.0.0-alpha-1, 1.7.0, 2.5.0, 2.4.4

>Reporter: Rushabh Shah

>Assignee: Rushabh Shah

>Priority: Major

> Fix For: 3.0.0-alpha-1, 2.5.0, 2.4.4, 1.7.1

>

>

> Getting the following log line in all of our production clusters when

> WALEntryStream is dequeuing WAL file.

> {noformat}

> 2021-05-02 04:01:30,437 DEBUG [04901996] regionserver.WALEntryStream -

> Reached the end of WAL file hdfs://. It was not closed

> cleanly, so we did not parse 8 bytes of data. This is normally ok.

> {noformat}

> The 8 bytes are usually the trailer serialized size (SIZE_OF_INT (4bytes) +

> "LAWP" (4 bytes) = 8 bytes)

> While dequeue'ing the WAL file from WALEntryStream, we reset the reader here.

> [WALEntryStream|https://github.com/apache/hbase/blob/branch-1/hbase-server/src/main/java/org/apache/hadoop/hbase/replication/regionserver/WALEntryStream.java#L199-L221]

> {code:java}

> private void tryAdvanceEntry() throws IOException {

> if (checkReader()) {

> readNextEntryAndSetPosition();

> if (currentEntry == null) { // no more entries in this log file - see

> if log was rolled

> if (logQueue.getQueue(walGroupId).size() > 1) { // log was rolled

> // Before dequeueing, we should always get one more attempt at

> reading.

> // This is in case more entries came in after we opened the reader,

> // and a new log was enqueued while we were reading. See HBASE-6758

> resetReader(); ---> HERE

> readNextEntryAndSetPosition();

> if (currentEntry == null) {

> if (checkAllBytesParsed()) { // now we're certain we're done with

> this log file

> dequeueCurrentLog();

> if (openNextLog()) {

> readNextEntryAndSetPosition();

> }

> }

> }

> } // no other logs, we've simply hit the end of the current open log.

> Do nothing

> }

> }

> // do nothing if we don't have a WAL Reader (e.g. if there's no logs in

> queue)

> }

> {code}

> In resetReader, we call the following methods, WALEntryStream#resetReader

> > ProtobufLogReader#reset ---> ProtobufLogReader#initInternal.

> In ProtobufLogReader#initInternal, we try to create the whole reader object

> from scratch to see if any new data has been written.

> We reset all the fields of ProtobufLogReader except for ReaderBase#fileLength.

> We calculate whether trailer is present or not depending on fileLength.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HBASE-15041) Clean up javadoc errors and reenable jdk8 linter

[ https://issues.apache.org/jira/browse/HBASE-15041?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361201#comment-17361201 ] Nick Dimiduk commented on HBASE-15041: -- No, it appears the change from HBASE-15011 is still present. > Clean up javadoc errors and reenable jdk8 linter > > > Key: HBASE-15041 > URL: https://issues.apache.org/jira/browse/HBASE-15041 > Project: HBase > Issue Type: Umbrella > Components: build, documentation >Affects Versions: 1.2.0, 1.3.0, 2.0.0 >Reporter: Sean Busbey >Assignee: Chia-Ping Tsai >Priority: Major > Fix For: 3.0.0-alpha-1 > > > umbrella to clean up our various errors according to the jdk8 javadoc linter. > plan is a sub-task per module. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hbase] ndimiduk commented on a change in pull request #3276: HBASE-25894 Improve the performance for region load and region count related cost functions

ndimiduk commented on a change in pull request #3276:

URL: https://github.com/apache/hbase/pull/3276#discussion_r649487211

##

File path:

hbase-server/src/main/java/org/apache/hadoop/hbase/master/balancer/DoubleArrayCost.java

##

@@ -0,0 +1,100 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hbase.master.balancer;

+

+import java.util.function.Consumer;

+import org.apache.yetus.audience.InterfaceAudience;

+

+/**

+ * A helper class to compute a scaled cost using

+ * {@link

org.apache.commons.math3.stat.descriptive.DescriptiveStatistics#DescriptiveStatistics()}.

+ * It assumes that this is a zero sum set of costs. It assumes that the worst

case possible is all

+ * of the elements in one region server and the rest having 0.

+ */

+@InterfaceAudience.Private

+final class DoubleArrayCost {

+

+ private double[] costs;

+

+ // computeCost call is expensive so we use this flag to indicate whether we

need to recalculate

+ // the cost by calling computeCost

+ private boolean costsChanged;

+

+ private double cost;

+

+ void prepare(int length) {

+if (costs == null || costs.length != length) {

+ costs = new double[length];

+}

+ }

+

+ void setCosts(Consumer consumer) {

Review comment:

Not off the top of my head, no. This interface for mutability by an

external actor is a little strange. Maybe `applyCostsConsumer` ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hbase] apurtell edited a comment on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell edited a comment on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-858994276 @bharathv Had to undo that read side optimization we discussed on #3244. It's fine for readers that operate on closed and completed WAL files. We were missing coverage of the other case, when the WAL is actively tailed. Added that coverage. Determined the optimization is not a good idea for that case via unit test failure (and confirmed fix). -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (HBASE-25651) NORMALIZER_TARGET_REGION_SIZE needs a unit in its name

[

https://issues.apache.org/jira/browse/HBASE-25651?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361199#comment-17361199

]

Nick Dimiduk commented on HBASE-25651:

--

I started the backport to branch-2, but I haven't gotten around to

investigating the test failures. [~rkrahul324], do you mind picking up the pack

port effort? We just need a PR against branch-2. Thanks.

> NORMALIZER_TARGET_REGION_SIZE needs a unit in its name

> --

>

> Key: HBASE-25651

> URL: https://issues.apache.org/jira/browse/HBASE-25651

> Project: HBase

> Issue Type: Bug

> Components: Normalizer

>Affects Versions: 2.3.0

>Reporter: Nick Dimiduk

>Assignee: Rahul Kumar

>Priority: Major

> Fix For: 3.0.0-alpha-1

>

>

> On a per-table basis, the normalizer can be configured with a specific region

> size target via {{NORMALIZER_TARGET_REGION_SIZE}}. This value is used in a

> context where size values are in the megabyte scale. However, this

> configuration name does not mention "megabyte" or "mb" anywhere, so it's very

> easy for an operator to get the configured value wrong by many orders of

> magnitude.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [hbase] apurtell edited a comment on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell edited a comment on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-858994276 @bharathv Had to undo that read side optimization we discussed on #3244. It's fine for readers that operate on closed and completed WAL files. We were missing coverage of the other case, when the WAL is actively tailed. Added that coverage. Determined the optimization is not a good idea for that case. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] apurtell commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-858994276 @bharathv Had to undo that read side optimization we discussed. It's fine for readers that operate on closed and completed WAL files. We were missing coverage of the other case, when the WAL is actively tailed. Added that coverage. Determined the optimization is not a good idea for that case. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HBASE-25994) Active WAL tailing fails when WAL value compression is enabled

[ https://issues.apache.org/jira/browse/HBASE-25994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Andrew Kyle Purtell updated HBASE-25994: Status: Patch Available (was: Open) > Active WAL tailing fails when WAL value compression is enabled > -- > > Key: HBASE-25994 > URL: https://issues.apache.org/jira/browse/HBASE-25994 > Project: HBase > Issue Type: Bug >Affects Versions: 2.5.0 >Reporter: Andrew Kyle Purtell >Assignee: Andrew Kyle Purtell >Priority: Major > Fix For: 3.0.0-alpha-1, 2.5.0 > > > Depending on which compression codec is used, a short read of the compressed > bytes can cause catastrophic errors that confuse the WAL reader. This problem > can manifest when the reader is actively tailing the WAL for replication. The > input stream's available() method sometimes lies so cannot be relied upon. To > avoid these issues, when WAL value compression is enabled, ensure all bytes > of the compressed value are read before submitting the payload to the > decompressor. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hbase] apurtell commented on pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell commented on pull request #3377: URL: https://github.com/apache/hbase/pull/3377#issuecomment-858991850 Added all reviewers of original change https://github.com/apache/hbase/pull/3244 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] apurtell opened a new pull request #3377: HBASE-25994 Active WAL tailing fails when WAL value compression is enabled

apurtell opened a new pull request #3377: URL: https://github.com/apache/hbase/pull/3377 Depending on which compression codec is used, a short read of the compressed bytes can cause catastrophic errors that confuse the WAL reader. This problem can manifest when the reader is actively tailing the WAL for replication. The input stream's available() method sometimes lies so cannot be relied upon. To avoid these issues when WAL value compression is enabled ensure all bytes of the compressed value are read in and thus available before submitting the payload to the decompressor. Adds new unit tests TestReplicationCompressedWAL and TestReplicationValueCompressedWAL. Without the WALCellCodec change TestReplicationValueCompressedWAL will fail. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (HBASE-25994) Active WAL tailing fails when WAL value compression is enabled

Andrew Kyle Purtell created HBASE-25994: --- Summary: Active WAL tailing fails when WAL value compression is enabled Key: HBASE-25994 URL: https://issues.apache.org/jira/browse/HBASE-25994 Project: HBase Issue Type: Bug Affects Versions: 2.5.0 Reporter: Andrew Kyle Purtell Assignee: Andrew Kyle Purtell Fix For: 3.0.0-alpha-1, 2.5.0 Depending on which compression codec is used, a short read of the compressed bytes can cause catastrophic errors that confuse the WAL reader. This problem can manifest when the reader is actively tailing the WAL for replication. The input stream's available() method sometimes lies so cannot be relied upon. To avoid these issues, when WAL value compression is enabled, ensure all bytes of the compressed value are read before submitting the payload to the decompressor. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HBASE-24984) WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used with multi operation

[ https://issues.apache.org/jira/browse/HBASE-24984?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361187#comment-17361187 ] Gaurav Kanade commented on HBASE-24984: --- [~mopishv0] any sample call stack, err file log for when this kind of issue happens? > WAL corruption due to early DBBs re-use when Durability.ASYNC_WAL is used > with multi operation > -- > > Key: HBASE-24984 > URL: https://issues.apache.org/jira/browse/HBASE-24984 > Project: HBase > Issue Type: Bug > Components: rpc, wal >Affects Versions: 2.1.6 >Reporter: Liu Junhong >Priority: Major > > After bugfix HBASE-22539, When client use BufferedMutator or multiple > mutation , there will be one RpcCall and mutliple FSWALEntry . At the time > RpcCall finish and one FSWALEntry call release() , the remain FSWALEntries > may trigger RuntimeException or segmentation fault . > We should use RefCnt instead of AtomicInteger for > org.apache.hadoop.hbase.ipc.ServerCall.reference? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hbase] bharathv commented on pull request #3371: HBASE-25984: Avoid premature reuse of sync futures in FSHLog [DRAFT]

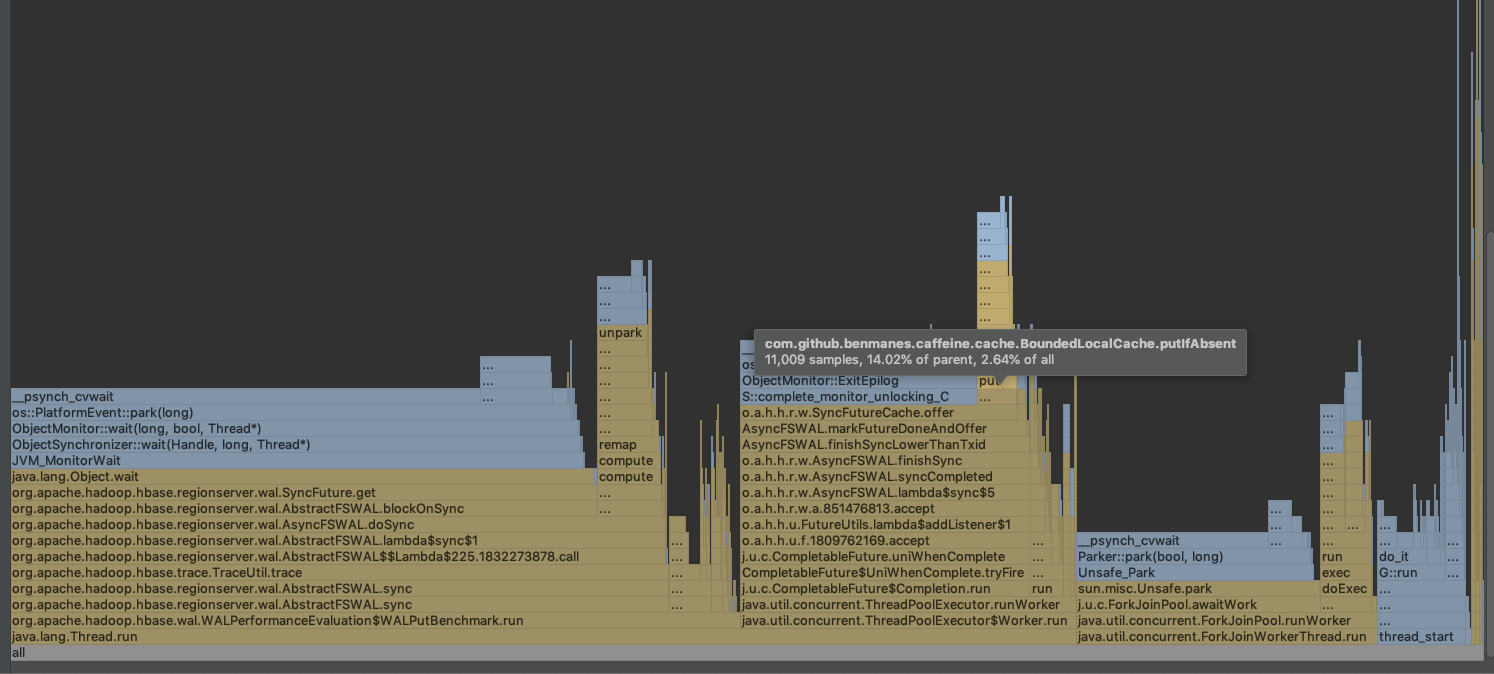

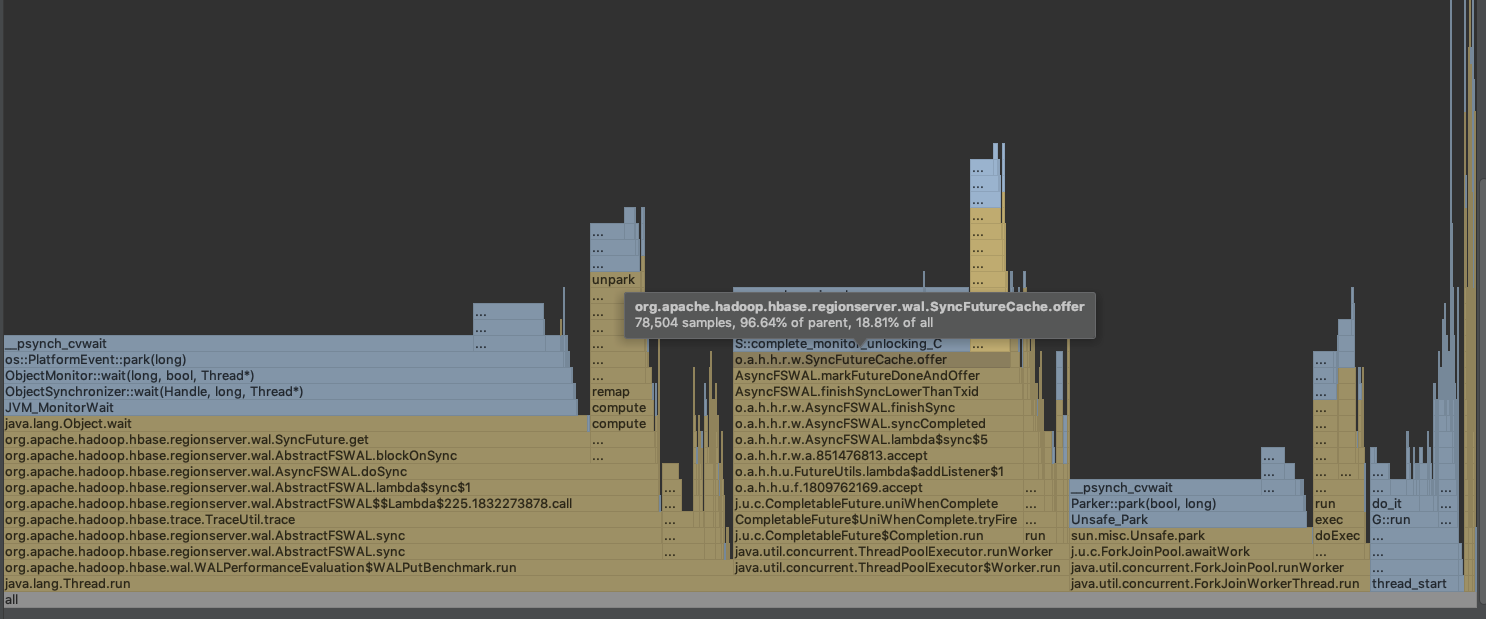

bharathv commented on pull request #3371: URL: https://github.com/apache/hbase/pull/3371#issuecomment-858901557 Flame graph without any changes.. overall it seems like we have 1.xx% overhead due to the cache and guava seems slightly better than Caffeine. cc: @saintstack / @apurtell **Without Changes**  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] sandeepvinayak commented on a change in pull request #3376: HBASE-25992 Polish the ReplicationSourceWALReader code for 2.x after …

sandeepvinayak commented on a change in pull request #3376:

URL: https://github.com/apache/hbase/pull/3376#discussion_r649435799

##

File path:

hbase-server/src/main/java/org/apache/hadoop/hbase/replication/regionserver/ReplicationSourceWALReader.java

##

@@ -270,43 +236,63 @@ private void handleEmptyWALEntryBatch() throws

InterruptedException {

}

}

+ private WALEntryBatch tryAdvanceStreamAndCreateWALBatch(WALEntryStream

entryStream)

+throws IOException {

+Path currentPath = entryStream.getCurrentPath();

+if (!entryStream.hasNext()) {

+ // check whether we have switched a file

+ if (currentPath != null && switched(entryStream, currentPath)) {

+return WALEntryBatch.endOfFile(currentPath);

+ } else {

+return null;

+ }

+}

+if (currentPath != null) {

+ if (switched(entryStream, currentPath)) {

+return WALEntryBatch.endOfFile(currentPath);

+ }

+}

+return createBatch(entryStream);

+ }

+

/**

* This is to handle the EOFException from the WAL entry stream.

EOFException should

* be handled carefully because there are chances of data loss because of

never replicating

* the data. Thus we should always try to ship existing batch of entries

here.

* If there was only one log in the queue before EOF, we ship the empty

batch here

* and since reader is still active, in the next iteration of reader we will

* stop the reader.

+ *

* If there was more than one log in the queue before EOF, we ship the

existing batch

* and reset the wal patch and position to the log with EOF, so shipper can

remove

* logs from replication queue

* @return true only the IOE can be handled

*/

- private boolean handleEofException(Exception e, WALEntryBatch batch)

- throws InterruptedException {

+ private boolean handleEofException(Exception e, WALEntryBatch batch) {

PriorityBlockingQueue queue = logQueue.getQueue(walGroupId);

// Dump the log even if logQueue size is 1 if the source is from recovered

Source

// since we don't add current log to recovered source queue so it is safe

to remove.

-if ((e instanceof EOFException || e.getCause() instanceof EOFException)

- && (source.isRecovered() || queue.size() > 1)

- && this.eofAutoRecovery) {

+if ((e instanceof EOFException || e.getCause() instanceof EOFException) &&

+ (source.isRecovered() || queue.size() > 1) && this.eofAutoRecovery) {

Path head = queue.peek();

try {

if (fs.getFileStatus(head).getLen() == 0) {

// head of the queue is an empty log file

LOG.warn("Forcing removal of 0 length log in queue: {}", head);

logQueue.remove(walGroupId);

currentPosition = 0;

- // After we removed the WAL from the queue, we should

- // try shipping the existing batch of entries and set the wal

position

- // and path to the wal just dequeued to correctly remove logs from

the zk

- batch.setLastWalPath(head);

- batch.setLastWalPosition(currentPosition);

- addBatchToShippingQueue(batch);

+ if (batch != null) {

+// After we removed the WAL from the queue, we should try shipping

the existing batch of

+// entries

+addBatchToShippingQueue(batch);

Review comment:

I don't think we need this now if we are sure that batch is empty since

the batch can only have one WAL's data.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hbase] sandeepvinayak commented on a change in pull request #3376: HBASE-25992 Polish the ReplicationSourceWALReader code for 2.x after …

sandeepvinayak commented on a change in pull request #3376:

URL: https://github.com/apache/hbase/pull/3376#discussion_r649431795

##

File path:

hbase-server/src/main/java/org/apache/hadoop/hbase/replication/regionserver/ReplicationSourceWALReader.java

##

@@ -122,65 +122,51 @@ public ReplicationSourceWALReader(FileSystem fs,

Configuration conf,

@Override

public void run() {

int sleepMultiplier = 1;

-WALEntryBatch batch = null;

-WALEntryStream entryStream = null;

-try {

- // we only loop back here if something fatal happened to our stream

- while (isReaderRunning()) {

-try {

- entryStream =

-new WALEntryStream(logQueue, conf, currentPosition,

source.getWALFileLengthProvider(),

- source.getServerWALsBelongTo(), source.getSourceMetrics(),

walGroupId);

- while (isReaderRunning()) { // loop here to keep reusing stream

while we can

-if (!source.isPeerEnabled()) {

- Threads.sleep(sleepForRetries);

- continue;

-}

-if (!checkQuota()) {

- continue;

-}

-

-batch = createBatch(entryStream);

-batch = readWALEntries(entryStream, batch);

+while (isReaderRunning()) { // we only loop back here if something fatal

happened to our stream

+ WALEntryBatch batch = null;

+ try (WALEntryStream entryStream =

+ new WALEntryStream(logQueue, conf, currentPosition,

+ source.getWALFileLengthProvider(),

source.getServerWALsBelongTo(),

+ source.getSourceMetrics(), walGroupId)) {

+while (isReaderRunning()) { // loop here to keep reusing stream while

we can

+ batch = null;

+ if (!source.isPeerEnabled()) {

+Threads.sleep(sleepForRetries);

+continue;

+ }

+ if (!checkQuota()) {

+continue;

+ }

+ batch = tryAdvanceStreamAndCreateWALBatch(entryStream);

+ if (batch == null) {

+// got no entries and didn't advance position in WAL

+handleEmptyWALEntryBatch();

+entryStream.reset(); // reuse stream

+continue;

+ }

+ // if we have already switched a file, skip reading and put it

directly to the ship queue

+ if (!batch.isEndOfFile()) {

+readWALEntries(entryStream, batch);

currentPosition = entryStream.getPosition();

-if (batch == null) {

- // either the queue have no WAL to read

- // or got no new entries (didn't advance position in WAL)

- handleEmptyWALEntryBatch();

- entryStream.reset(); // reuse stream

-} else {

- addBatchToShippingQueue(batch);

-}

}

-} catch (WALEntryFilterRetryableException | IOException e) { // stream

related

- if (handleEofException(e, batch)) {

-sleepMultiplier = 1;

- } else {

-LOG.warn("Failed to read stream of replication entries "

- + "or replication filter is recovering", e);

-if (sleepMultiplier < maxRetriesMultiplier) {

- sleepMultiplier++;

-}

-Threads.sleep(sleepForRetries * sleepMultiplier);

+ // need to propagate the batch even it has no entries since it may

carry the last

+ // sequence id information for serial replication.

+ LOG.debug("Read {} WAL entries eligible for replication",

batch.getNbEntries());

+ entryBatchQueue.put(batch);

+ sleepMultiplier = 1;

+}

+ } catch (IOException e) { // stream related

+if (!handleEofException(e, batch)) {

Review comment:

I think we need to reset the sleepMultiplier to 1 in the else part of

this.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hbase] bharathv commented on pull request #3371: HBASE-25984: Avoid premature reuse of sync futures in FSHLog [DRAFT]

bharathv commented on pull request #3371: URL: https://github.com/apache/hbase/pull/3371#issuecomment-858883352 Caffeine seems to perform even worse overall (even though flame graph % is slightly lower), may be its some noise on my machine too. **With patch using Caffeine**   ``` -- Histograms -- org.apache.hadoop.hbase.wal.WALPerformanceEvaluation.latencyHistogram.nanos count = 15726724 min = 3199736 max = 54760561 mean = 4271968.63 stddev = 3613748.22 median = 3942103.00 75% <= 4057762.00 95% <= 4403199.00 98% <= 4815190.00 99% <= 5449043.00 99.9% <= 49375530.00 org.apache.hadoop.hbase.wal.WALPerformanceEvaluation.syncCountHistogram.countPerSync count = 154233 min = 101 max = 103 mean = 102.00 stddev = 0.15 median = 102.00 75% <= 102.00 95% <= 102.00 98% <= 102.00 99% <= 103.00 99.9% <= 103.00 org.apache.hadoop.hbase.wal.WALPerformanceEvaluation.syncHistogram.nanos-between-syncs count = 154233 min = 120917 max = 47121051 mean = 1786963.74 stddev = 2953364.50 median = 1573054.00 75% <= 1624446.00 95% <= 1782991.00 98% <= 1933771.00 99% <= 2221343.00 99.9% <= 44809603.00 -- Meters -- org.apache.hadoop.hbase.wal.WALPerformanceEvaluation.appendMeter.bytes count = 8759954596 mean rate = 32330356.51 events/second 1-minute rate = 32550176.33 events/second 5-minute rate = 30821417.63 events/second 15-minute rate = 29564873.50 events/second org.apache.hadoop.hbase.wal.WALPerformanceEvaluation.syncMeter.syncs count = 154234 mean rate = 569.23 events/second 1-minute rate = 573.07 events/second 5-minute rate = 542.31 events/second 15-minute rate = 519.90 events/second ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] Apache-HBase commented on pull request #3376: HBASE-25992 Polish the ReplicationSourceWALReader code for 2.x after …

Apache-HBase commented on pull request #3376: URL: https://github.com/apache/hbase/pull/3376#issuecomment-858879576 :confetti_ball: **+1 overall** | Vote | Subsystem | Runtime | Comment | |::|--:|:|:| | +0 :ok: | reexec | 0m 28s | Docker mode activated. | | -0 :warning: | yetus | 0m 3s | Unprocessed flag(s): --brief-report-file --spotbugs-strict-precheck --whitespace-eol-ignore-list --whitespace-tabs-ignore-list --quick-hadoopcheck | ||| _ Prechecks _ | ||| _ master Compile Tests _ | | +1 :green_heart: | mvninstall | 3m 57s | master passed | | +1 :green_heart: | compile | 0m 59s | master passed | | +1 :green_heart: | shadedjars | 8m 19s | branch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 39s | master passed | ||| _ Patch Compile Tests _ | | +1 :green_heart: | mvninstall | 3m 39s | the patch passed | | +1 :green_heart: | compile | 0m 59s | the patch passed | | +1 :green_heart: | javac | 0m 59s | the patch passed | | +1 :green_heart: | shadedjars | 8m 20s | patch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 37s | the patch passed | ||| _ Other Tests _ | | +1 :green_heart: | unit | 145m 50s | hbase-server in the patch passed. | | | | 176m 5s | | | Subsystem | Report/Notes | |--:|:-| | Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/artifact/yetus-jdk8-hadoop3-check/output/Dockerfile | | GITHUB PR | https://github.com/apache/hbase/pull/3376 | | Optional Tests | javac javadoc unit shadedjars compile | | uname | Linux 3d99cb084f83 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux | | Build tool | maven | | Personality | dev-support/hbase-personality.sh | | git revision | master / 6b81ff94a5 | | Default Java | AdoptOpenJDK-1.8.0_282-b08 | | Test Results | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/testReport/ | | Max. process+thread count | 4772 (vs. ulimit of 3) | | modules | C: hbase-server U: hbase-server | | Console output | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/console | | versions | git=2.17.1 maven=3.6.3 | | Powered by | Apache Yetus 0.12.0 https://yetus.apache.org | This message was automatically generated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hbase] Apache-HBase commented on pull request #3376: HBASE-25992 Polish the ReplicationSourceWALReader code for 2.x after …

Apache-HBase commented on pull request #3376: URL: https://github.com/apache/hbase/pull/3376#issuecomment-858879067 :confetti_ball: **+1 overall** | Vote | Subsystem | Runtime | Comment | |::|--:|:|:| | +0 :ok: | reexec | 1m 4s | Docker mode activated. | | -0 :warning: | yetus | 0m 3s | Unprocessed flag(s): --brief-report-file --spotbugs-strict-precheck --whitespace-eol-ignore-list --whitespace-tabs-ignore-list --quick-hadoopcheck | ||| _ Prechecks _ | ||| _ master Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 39s | master passed | | +1 :green_heart: | compile | 1m 13s | master passed | | +1 :green_heart: | shadedjars | 8m 23s | branch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 43s | master passed | ||| _ Patch Compile Tests _ | | +1 :green_heart: | mvninstall | 4m 17s | the patch passed | | +1 :green_heart: | compile | 1m 13s | the patch passed | | +1 :green_heart: | javac | 1m 13s | the patch passed | | +1 :green_heart: | shadedjars | 8m 9s | patch has no errors when building our shaded downstream artifacts. | | +1 :green_heart: | javadoc | 0m 41s | the patch passed | ||| _ Other Tests _ | | +1 :green_heart: | unit | 143m 3s | hbase-server in the patch passed. | | | | 175m 44s | | | Subsystem | Report/Notes | |--:|:-| | Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/artifact/yetus-jdk11-hadoop3-check/output/Dockerfile | | GITHUB PR | https://github.com/apache/hbase/pull/3376 | | Optional Tests | javac javadoc unit shadedjars compile | | uname | Linux 21dde46d2702 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux | | Build tool | maven | | Personality | dev-support/hbase-personality.sh | | git revision | master / 6b81ff94a5 | | Default Java | AdoptOpenJDK-11.0.10+9 | | Test Results | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/testReport/ | | Max. process+thread count | 3711 (vs. ulimit of 3) | | modules | C: hbase-server U: hbase-server | | Console output | https://ci-hadoop.apache.org/job/HBase/job/HBase-PreCommit-GitHub-PR/job/PR-3376/1/console | | versions | git=2.17.1 maven=3.6.3 | | Powered by | Apache Yetus 0.12.0 https://yetus.apache.org | This message was automatically generated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (HBASE-25924) Seeing a spike in uncleanlyClosedWALs metric.

[

https://issues.apache.org/jira/browse/HBASE-25924?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361144#comment-17361144

]

Bharath Vissapragada commented on HBASE-25924:

--

[~shahrs87] I think it was committed and reverted from 2.3, still would be nice

to have a working patch in that branch (if you have spare cycles) :-).

> Seeing a spike in uncleanlyClosedWALs metric.

> -

>

> Key: HBASE-25924

> URL: https://issues.apache.org/jira/browse/HBASE-25924

> Project: HBase

> Issue Type: Bug

> Components: Replication, wal

>Affects Versions: 3.0.0-alpha-1, 1.7.0, 2.5.0, 2.4.4

>Reporter: Rushabh Shah

>Assignee: Rushabh Shah

>Priority: Major

> Fix For: 3.0.0-alpha-1, 2.5.0, 2.4.4, 1.7.1

>

>

> Getting the following log line in all of our production clusters when

> WALEntryStream is dequeuing WAL file.

> {noformat}

> 2021-05-02 04:01:30,437 DEBUG [04901996] regionserver.WALEntryStream -

> Reached the end of WAL file hdfs://. It was not closed

> cleanly, so we did not parse 8 bytes of data. This is normally ok.

> {noformat}

> The 8 bytes are usually the trailer serialized size (SIZE_OF_INT (4bytes) +

> "LAWP" (4 bytes) = 8 bytes)

> While dequeue'ing the WAL file from WALEntryStream, we reset the reader here.

> [WALEntryStream|https://github.com/apache/hbase/blob/branch-1/hbase-server/src/main/java/org/apache/hadoop/hbase/replication/regionserver/WALEntryStream.java#L199-L221]

> {code:java}

> private void tryAdvanceEntry() throws IOException {

> if (checkReader()) {

> readNextEntryAndSetPosition();

> if (currentEntry == null) { // no more entries in this log file - see

> if log was rolled

> if (logQueue.getQueue(walGroupId).size() > 1) { // log was rolled

> // Before dequeueing, we should always get one more attempt at

> reading.

> // This is in case more entries came in after we opened the reader,

> // and a new log was enqueued while we were reading. See HBASE-6758

> resetReader(); ---> HERE

> readNextEntryAndSetPosition();

> if (currentEntry == null) {

> if (checkAllBytesParsed()) { // now we're certain we're done with

> this log file

> dequeueCurrentLog();

> if (openNextLog()) {

> readNextEntryAndSetPosition();

> }

> }

> }

> } // no other logs, we've simply hit the end of the current open log.

> Do nothing

> }

> }

> // do nothing if we don't have a WAL Reader (e.g. if there's no logs in

> queue)

> }

> {code}

> In resetReader, we call the following methods, WALEntryStream#resetReader

> > ProtobufLogReader#reset ---> ProtobufLogReader#initInternal.

> In ProtobufLogReader#initInternal, we try to create the whole reader object

> from scratch to see if any new data has been written.

> We reset all the fields of ProtobufLogReader except for ReaderBase#fileLength.

> We calculate whether trailer is present or not depending on fileLength.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HBASE-25967) The readRequestsCount does not calculate when the outResults is empty

[

https://issues.apache.org/jira/browse/HBASE-25967?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361141#comment-17361141

]

Hudson commented on HBASE-25967:

Results for branch branch-2

[build #274 on

builds.a.o|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2/274/]:

(/) *{color:green}+1 overall{color}*

details (if available):

(/) {color:green}+1 general checks{color}

-- For more information [see general

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2/274/General_20Nightly_20Build_20Report/]

(/) {color:green}+1 jdk8 hadoop2 checks{color}

-- For more information [see jdk8 (hadoop2)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2/274/JDK8_20Nightly_20Build_20Report_20_28Hadoop2_29/]

(/) {color:green}+1 jdk8 hadoop3 checks{color}

-- For more information [see jdk8 (hadoop3)

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2/274/JDK8_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 jdk11 hadoop3 checks{color}

-- For more information [see jdk11

report|https://ci-hadoop.apache.org/job/HBase/job/HBase%20Nightly/job/branch-2/274/JDK11_20Nightly_20Build_20Report_20_28Hadoop3_29/]

(/) {color:green}+1 source release artifact{color}

-- See build output for details.

(/) {color:green}+1 client integration test{color}

> The readRequestsCount does not calculate when the outResults is empty

> -

>

> Key: HBASE-25967

> URL: https://issues.apache.org/jira/browse/HBASE-25967

> Project: HBase

> Issue Type: Bug

> Components: metrics

>Reporter: Zheng Wang

>Assignee: Zheng Wang

>Priority: Major

> Fix For: 2.5.0, 2.3.6, 3.0.0-alpha-2, 2.4.5

>

>

> This metric is about request, so should not depend on the result.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [hbase] bharathv commented on pull request #3371: HBASE-25984: Avoid premature reuse of sync futures in FSHLog [DRAFT]

bharathv commented on pull request #3371: URL: https://github.com/apache/hbase/pull/3371#issuecomment-858844253 Let me try Caffeine and see what it looks like. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (HBASE-20503) [AsyncFSWAL] Failed to get sync result after 300000 ms for txid=160912, WAL system stuck?

[

https://issues.apache.org/jira/browse/HBASE-20503?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17361122#comment-17361122

]

Pankaj Kumar commented on HBASE-20503:

--

We also met this issue in one of our production env, where slow sync cost is

even more than 5 min and so flush and compaction are getting timedout. We are

using HBase-2.2.3 version + some JIRA backport.

> [AsyncFSWAL] Failed to get sync result after 30 ms for txid=160912, WAL

> system stuck?

> -

>

> Key: HBASE-20503

> URL: https://issues.apache.org/jira/browse/HBASE-20503

> Project: HBase

> Issue Type: Bug

> Components: wal

>Reporter: Michael Stack

>Priority: Major

> Attachments:

> 0001-HBASE-20503-AsyncFSWAL-Failed-to-get-sync-result-aft.patch,

> 0001-HBASE-20503-AsyncFSWAL-Failed-to-get-sync-result-aft.patch

>

>

> Scale test. Startup w/ 30k regions over ~250nodes. This RS is trying to

> furiously open regions assigned by Master. It is importantly carrying

> hbase:meta. Twenty minutes in, meta goes dead after an exception up out

> AsyncFSWAL. Process had been restarted so I couldn't get a thread dump.

> Suspicious is we archive a WAL and we get a FNFE because we got to access WAL

> in old location. [~Apache9] mind taking a look? Does this FNFE rolling kill

> the WAL sub-system? Thanks.

> DFS complaining on file open for a few files getting blocks from remote dead

> DNs: e.g. {{2018-04-25 10:05:21,506 WARN

> org.apache.hadoop.hdfs.client.impl.BlockReaderFactory: I/O error constructing

> remote block reader.

> java.net.ConnectException: Connection refused}}