[GitHub] [kafka] dajac merged pull request #9602: MINOR: Use string interpolation in FinalizedFeatureCache

dajac merged pull request #9602: URL: https://github.com/apache/kafka/pull/9602 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #9602: MINOR: Use string interpolation in FinalizedFeatureCache

dajac commented on pull request #9602: URL: https://github.com/apache/kafka/pull/9602#issuecomment-728753741 Failed test is unrelated. Merging to trunk. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (KAFKA-10730) KafkaApis#handleProduceRequest should use auto-generated protocol

[ https://issues.apache.org/jira/browse/KAFKA-10730?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Chia-Ping Tsai updated KAFKA-10730: --- Description: This is follow-up of KAFKA-9628 the construction of ProduceResponse is able to accept auto-generated protocol data so KafkaApis#handleProduceRequest should apply auto-generated protocol to avoid extra conversion. was:the construction of ProduceResponse is able to accept auto-generated protocol data so KafkaApis#handleProduceRequest should apply auto-generated protocol to avoid extra conversion. > KafkaApis#handleProduceRequest should use auto-generated protocol > - > > Key: KAFKA-10730 > URL: https://issues.apache.org/jira/browse/KAFKA-10730 > Project: Kafka > Issue Type: Improvement >Reporter: Chia-Ping Tsai >Assignee: Chia-Ping Tsai >Priority: Major > > This is follow-up of KAFKA-9628 > the construction of ProduceResponse is able to accept auto-generated protocol > data so KafkaApis#handleProduceRequest should apply auto-generated protocol > to avoid extra conversion. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (KAFKA-10730) KafkaApis#handleProduceRequest should use auto-generated protocol

Chia-Ping Tsai created KAFKA-10730: -- Summary: KafkaApis#handleProduceRequest should use auto-generated protocol Key: KAFKA-10730 URL: https://issues.apache.org/jira/browse/KAFKA-10730 Project: Kafka Issue Type: Improvement Reporter: Chia-Ping Tsai Assignee: Chia-Ping Tsai the construction of ProduceResponse is able to accept auto-generated protocol data so KafkaApis#handleProduceRequest should apply auto-generated protocol to avoid extra conversion. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10726) How to detect heartbeat failure between broker/zookeeper leader

[ https://issues.apache.org/jira/browse/KAFKA-10726?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233304#comment-17233304 ] Keiichiro Wakasa commented on KAFKA-10726: -- [~Jack-Lee] Hello Jack, thank you so much for your comment and so sorry for the confusion. The issue of heartbeat timeout has already been solved. (it's actually due to heavy logrotate on zk nodes.) *So we are just looking for the way to detect the timeout issue for the future occurance* > How to detect heartbeat failure between broker/zookeeper leader > --- > > Key: KAFKA-10726 > URL: https://issues.apache.org/jira/browse/KAFKA-10726 > Project: Kafka > Issue Type: Bug > Components: controller, logging >Affects Versions: 2.1.1 >Reporter: Keiichiro Wakasa >Priority: Critical > > Hello experts, > I'm not sure this is proper place to ask but I'd appreciate if you could help > us with the following question... > > We've continuously suffered from broker exclusion caused by heartbeat timeout > between broker and zookeeper leader. > This issue can be easily detected by checking ephemeral nodes via zkcli.sh > but we'd like to detect this with logs like server.log/controller.log since > we have an existing system to forward these logs to our system. > Looking at server.log/controller.log, we couldn't find any logs that > indicates the heartbeat timeout. Is there any other logs to check for > heartbeat health? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10726) How to detect heartbeat failure between broker/zookeeper leader

[ https://issues.apache.org/jira/browse/KAFKA-10726?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233300#comment-17233300 ] lqjacklee commented on KAFKA-10726: --- If you are seeing excessive pauses during garbage collection, you can consider upgrading your JDK version or garbage collector (or extend your timeout value for zookeeper.session.timeout.ms). Additionally, you can tune your Java runtime to minimize garbage collection. The engineers at LinkedIn have written about optimizing JVM garbage collection in depth. Of course, you can also check the Kafka documentation for some recommendations. some metrics which provide more information can help you : ||Name|| Description || Metric type|| Availability|| |outstanding_requests |Number of requests queued| Resource: Saturation | Four-letter words, AdminServer, JMX| |avg_latency|Amount of time it takes to respond to a client request (in ms)|Work: Throughput|Four-letter words, AdminServer, JMX| |num_alive_connections|Number of clients connected to ZooKeeper|Resource: Availability|Four-letter words, AdminServer, JMX| |followers|Number of active followers|Resource: Availability|Four-letter words, AdminServer |pending_syncs|Number of pending syncs from followers|Other|Four-letter words, AdminServer, JMX| |open_file_descriptor_count|Number of file descriptors in use|Resource: Utilization|Four-letter words, AdminServer| > How to detect heartbeat failure between broker/zookeeper leader > --- > > Key: KAFKA-10726 > URL: https://issues.apache.org/jira/browse/KAFKA-10726 > Project: Kafka > Issue Type: Bug > Components: controller, logging >Affects Versions: 2.1.1 >Reporter: Keiichiro Wakasa >Priority: Critical > > Hello experts, > I'm not sure this is proper place to ask but I'd appreciate if you could help > us with the following question... > > We've continuously suffered from broker exclusion caused by heartbeat timeout > between broker and zookeeper leader. > This issue can be easily detected by checking ephemeral nodes via zkcli.sh > but we'd like to detect this with logs like server.log/controller.log since > we have an existing system to forward these logs to our system. > Looking at server.log/controller.log, we couldn't find any logs that > indicates the heartbeat timeout. Is there any other logs to check for > heartbeat health? -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (KAFKA-10709) Sender#sendProduceRequest should use auto-generated protocol directly

[ https://issues.apache.org/jira/browse/KAFKA-10709?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Chia-Ping Tsai resolved KAFKA-10709. Resolution: Won't Fix This fix will be included by https://github.com/apache/kafka/pull/9401 > Sender#sendProduceRequest should use auto-generated protocol directly > - > > Key: KAFKA-10709 > URL: https://issues.apache.org/jira/browse/KAFKA-10709 > Project: Kafka > Issue Type: Improvement >Reporter: Chia-Ping Tsai >Assignee: Chia-Ping Tsai >Priority: Minor > > That can avoid extra conversion to improve the performance. > related discussion: > https://github.com/apache/kafka/pull/9401#discussion_r521902936 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] lqjack commented on a change in pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

lqjack commented on a change in pull request #9596:

URL: https://github.com/apache/kafka/pull/9596#discussion_r524907470

##

File path: core/src/test/scala/unit/kafka/log/LogManagerTest.scala

##

@@ -83,6 +87,51 @@ class LogManagerTest {

log.appendAsLeader(TestUtils.singletonRecords("test".getBytes()),

leaderEpoch = 0)

}

+ /**

+ * Tests that all internal futures are completed before

LogManager.shutdown() returns to the

+ * caller during error situations.

+ */

+ @Test

+ def testHandlingExceptionsDuringShutdown(): Unit = {

+logManager.shutdown()

+

+// We create two directories logDir1 and logDir2 to help effectively test

error handling

+// during LogManager.shutdown().

+val logDir1 = TestUtils.tempDir()

+val logDir2 = TestUtils.tempDir()

+logManager = createLogManager(Seq(logDir1, logDir2))

+assertEquals(2, logManager.liveLogDirs.size)

+logManager.startup()

+

+val log1 = logManager.getOrCreateLog(new TopicPartition(name, 0), () =>

logConfig)

+val log2 = logManager.getOrCreateLog(new TopicPartition(name, 1), () =>

logConfig)

+

+val logFile1 = new File(logDir1, name + "-0")

+assertTrue(logFile1.exists)

+val logFile2 = new File(logDir2, name + "-1")

+assertTrue(logFile2.exists)

+

+log1.appendAsLeader(TestUtils.singletonRecords("test1".getBytes()),

leaderEpoch = 0)

+log1.takeProducerSnapshot()

+log1.appendAsLeader(TestUtils.singletonRecords("test1".getBytes()),

leaderEpoch = 0)

+

+log2.appendAsLeader(TestUtils.singletonRecords("test2".getBytes()),

leaderEpoch = 0)

+log2.takeProducerSnapshot()

+log2.appendAsLeader(TestUtils.singletonRecords("test2".getBytes()),

leaderEpoch = 0)

+

+// This should cause log1.close() to fail during LogManger shutdown

sequence.

+FileUtils.deleteDirectory(logFile1)

Review comment:

What if error occur during the shutdown of the broker ? should we log

the error info to the log or just throw the exception ?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-10666) Kafka doesn't use keystore / key / truststore passwords for named SSL connections

[ https://issues.apache.org/jira/browse/KAFKA-10666?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233277#comment-17233277 ] lqjacklee commented on KAFKA-10666: --- [~pfjason] Does https://issues.apache.org/jira/browse/KAFKA-10700 can resolve the issue you provided ? > Kafka doesn't use keystore / key / truststore passwords for named SSL > connections > - > > Key: KAFKA-10666 > URL: https://issues.apache.org/jira/browse/KAFKA-10666 > Project: Kafka > Issue Type: Bug > Components: admin >Affects Versions: 2.5.0, 2.6.0 > Environment: kafka in an openjdk-11 docker container, the client java > application is in an alpine container. zookeeper in a separate container. >Reporter: Jason >Priority: Minor > > When configuring named listener SSL connections with ssl key and keystore > with passwords including listener.name.ourname.ssl.key.password, > listener.name.ourname.ssl.keystore.password, and > listener.name.ourname.ssl.truststore.password via via the AdminClient the > settings are not used and the setting is not accepted if the default > ssl.key.password or ssl.keystore.password are not set. We configure all > keystore and truststore values for the named listener in a single batch using > incrementalAlterConfigs. Additionally, when ssl.keystore.password is set to > the value of our keystore password the keystore is loaded for SSL > communication without issue, however if ssl.keystore.password is incorrect > and listener.name.ourname.keystore.password is correct, we are unable to load > the keystore with bad password errors. It appears that only the default > ssl.xxx.password settings are used. This setting is immutable as when we > attempt to set it we get an error indicating that the listener.name. setting > can be set. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] quanuw commented on pull request #9598: KAFKA-10701 : First line of detailed stats from consumer-perf-test.sh incorrect

quanuw commented on pull request #9598: URL: https://github.com/apache/kafka/pull/9598#issuecomment-728698903 Hi @lijubjohn, can you explain how having joinStart initialized to 0L led to a negative fetchTimeMs? I'm new to the project and not sure how having joinStart initialized as 0L could have been the problem. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kowshik opened a new pull request #9602: MINOR: Use string interpolation in FinalizedFeatureCache

kowshik opened a new pull request #9602: URL: https://github.com/apache/kafka/pull/9602 This is a small change. In this PR, I'm using string interpolation in `FinalizedFeatureCache` at places where string format was otherwise used. This just ensures uniformity, with this change we ensure that throughout the file we just use string interpolation. **Test plan:** Rely on existing tests since this PR is not changing behavior. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728669194 @chia7712 It might be worth checking the fancy new `toSend` implementation. I did a quick test and found that gc overhead actually increased with this change even though the new implementation seemed much better for cpu. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

chia7712 commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728664200 @hachikuji @ijuma Thanks for all feedback. I'm going to do more tests :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kowshik commented on pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

kowshik commented on pull request #9596: URL: https://github.com/apache/kafka/pull/9596#issuecomment-728654950 Thanks for the review @junrao! I have addressed the comments in f917f0c24cebbb0fb5eb7029ccb6676734b60b3e. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kowshik commented on a change in pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

kowshik commented on a change in pull request #9596:

URL: https://github.com/apache/kafka/pull/9596#discussion_r524853231

##

File path: core/src/test/scala/unit/kafka/log/LogManagerTest.scala

##

@@ -83,6 +87,51 @@ class LogManagerTest {

log.appendAsLeader(TestUtils.singletonRecords("test".getBytes()),

leaderEpoch = 0)

}

+ /**

+ * Tests that all internal futures are completed before

LogManager.shutdown() returns to the

+ * caller during error situations.

+ */

+ @Test

+ def testHandlingExceptionsDuringShutdown(): Unit = {

+logManager.shutdown()

Review comment:

Thinking about it again, you are right. I have eliminated the need for

the `shutdown()` now by using a `LogManager` instance specific to the test.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-10062) Add a method to retrieve the current timestamp as known by the Streams app

[ https://issues.apache.org/jira/browse/KAFKA-10062?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233230#comment-17233230 ] Rohit Deshpande commented on KAFKA-10062: - Thank you [~wbottrell] > Add a method to retrieve the current timestamp as known by the Streams app > -- > > Key: KAFKA-10062 > URL: https://issues.apache.org/jira/browse/KAFKA-10062 > Project: Kafka > Issue Type: Improvement > Components: streams >Reporter: Piotr Smolinski >Assignee: William Bottrell >Priority: Major > Labels: needs-kip, newbie > > Please add to the ProcessorContext a method to retrieve current timestamp > compatible with Punctuator#punctate(long) method. > Proposal in ProcessorContext: > long getTimestamp(PunctuationType type); > The method should return time value as known by the Punctuator scheduler with > the respective PunctuationType. > The use-case is tracking of a process with timeout-based escalation. > A transformer receives process events and in case of missing an event execute > an action (emit message) after given escalation timeout (several stages). The > initial message may already arrive with reference timestamp in the past and > may trigger different action upon arrival depending on how far in the past it > is. > If the timeout should be computed against some further time only, Punctuator > is perfectly sufficient. The problem is that I have to evaluate the current > time-related state once the message arrives. > I am using wall-clock time. Normally accessing System.currentTimeMillis() is > sufficient, but it breaks in unit testing with TopologyTestDriver, where the > app wall clock time is different from the system-wide one. > To access the mentioned clock I am using reflection to access > ProcessorContextImpl#task and then StreamTask#time. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (KAFKA-10062) Add a method to retrieve the current timestamp as known by the Streams app

[ https://issues.apache.org/jira/browse/KAFKA-10062?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Rohit Deshpande reassigned KAFKA-10062: --- Assignee: Rohit Deshpande (was: William Bottrell) > Add a method to retrieve the current timestamp as known by the Streams app > -- > > Key: KAFKA-10062 > URL: https://issues.apache.org/jira/browse/KAFKA-10062 > Project: Kafka > Issue Type: Improvement > Components: streams >Reporter: Piotr Smolinski >Assignee: Rohit Deshpande >Priority: Major > Labels: needs-kip, newbie > > Please add to the ProcessorContext a method to retrieve current timestamp > compatible with Punctuator#punctate(long) method. > Proposal in ProcessorContext: > long getTimestamp(PunctuationType type); > The method should return time value as known by the Punctuator scheduler with > the respective PunctuationType. > The use-case is tracking of a process with timeout-based escalation. > A transformer receives process events and in case of missing an event execute > an action (emit message) after given escalation timeout (several stages). The > initial message may already arrive with reference timestamp in the past and > may trigger different action upon arrival depending on how far in the past it > is. > If the timeout should be computed against some further time only, Punctuator > is perfectly sufficient. The problem is that I have to evaluate the current > time-related state once the message arrives. > I am using wall-clock time. Normally accessing System.currentTimeMillis() is > sufficient, but it breaks in unit testing with TopologyTestDriver, where the > app wall clock time is different from the system-wide one. > To access the mentioned clock I am using reflection to access > ProcessorContextImpl#task and then StreamTask#time. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] kowshik commented on a change in pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

kowshik commented on a change in pull request #9596:

URL: https://github.com/apache/kafka/pull/9596#discussion_r524848584

##

File path: core/src/test/scala/unit/kafka/log/LogManagerTest.scala

##

@@ -83,6 +87,51 @@ class LogManagerTest {

log.appendAsLeader(TestUtils.singletonRecords("test".getBytes()),

leaderEpoch = 0)

}

+ /**

+ * Tests that all internal futures are completed before

LogManager.shutdown() returns to the

+ * caller during error situations.

+ */

+ @Test

+ def testHandlingExceptionsDuringShutdown(): Unit = {

+logManager.shutdown()

Review comment:

Yeah this explicit shutdown is needed to:

1) Re-create a new `LogManager` instance with multiple `logDirs` for this

test. This is different from the default one provided in `setUp()`.

2) Help do some additional checks post shutdown (towards the end of this

test).

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] kowshik commented on a change in pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

kowshik commented on a change in pull request #9596:

URL: https://github.com/apache/kafka/pull/9596#discussion_r524847888

##

File path: core/src/main/scala/kafka/log/LogManager.scala

##

@@ -477,27 +477,41 @@ class LogManager(logDirs: Seq[File],

jobs(dir) = jobsForDir.map(pool.submit).toSeq

}

+var firstExceptionOpt: Option[Throwable] = Option.empty

try {

for ((dir, dirJobs) <- jobs) {

-dirJobs.foreach(_.get)

+val errorsForDirJobs = dirJobs.map {

+ future =>

+try {

+ future.get

+ Option.empty

+} catch {

+ case e: ExecutionException =>

+error(s"There was an error in one of the threads during

LogManager shutdown: ${e.getCause}")

+Some(e.getCause)

+}

+}.filter{ e => e.isDefined }.map{ e => e.get }

+

+if (firstExceptionOpt.isEmpty) {

+ firstExceptionOpt = errorsForDirJobs.headOption

+}

-val logs = logsInDir(localLogsByDir, dir)

+if (errorsForDirJobs.isEmpty) {

+ val logs = logsInDir(localLogsByDir, dir)

-// update the last flush point

-debug(s"Updating recovery points at $dir")

-checkpointRecoveryOffsetsInDir(dir, logs)

+ // update the last flush point

+ debug(s"Updating recovery points at $dir")

+ checkpointRecoveryOffsetsInDir(dir, logs)

-debug(s"Updating log start offsets at $dir")

-checkpointLogStartOffsetsInDir(dir, logs)

+ debug(s"Updating log start offsets at $dir")

+ checkpointLogStartOffsetsInDir(dir, logs)

-// mark that the shutdown was clean by creating marker file

-debug(s"Writing clean shutdown marker at $dir")

-CoreUtils.swallow(Files.createFile(new File(dir,

Log.CleanShutdownFile).toPath), this)

+ // mark that the shutdown was clean by creating marker file

+ debug(s"Writing clean shutdown marker at $dir")

+ CoreUtils.swallow(Files.createFile(new File(dir,

Log.CleanShutdownFile).toPath), this)

+}

}

-} catch {

- case e: ExecutionException =>

-error(s"There was an error in one of the threads during LogManager

shutdown: ${e.getCause}")

-throw e.getCause

+ firstExceptionOpt.foreach{ e => throw e}

Review comment:

Great point. I've changed the code to do the same.

My understanding is that the exception swallow safety net exists inside

`KafkaServer.shutdown()` today, but it makes sense to also just log a warning

here instead instead of relying on the safety net:

https://github.com/apache/kafka/blob/bb34c5c8cc32d1b769a34329e34b83cda040aafc/core/src/main/scala/kafka/server/KafkaServer.scala#L732.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728643380 I think the large difference in latency in my test is due to the producer's buffer pool getting exhausted. I was looking at the "bufferpool-wait-ratio" metric exposed in the producer. With this patch, it was hovering around 0.6 while on trunk it remained around 0.01. I'll need to lower the throughput a little bit in order to get a better estimate of the regression. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

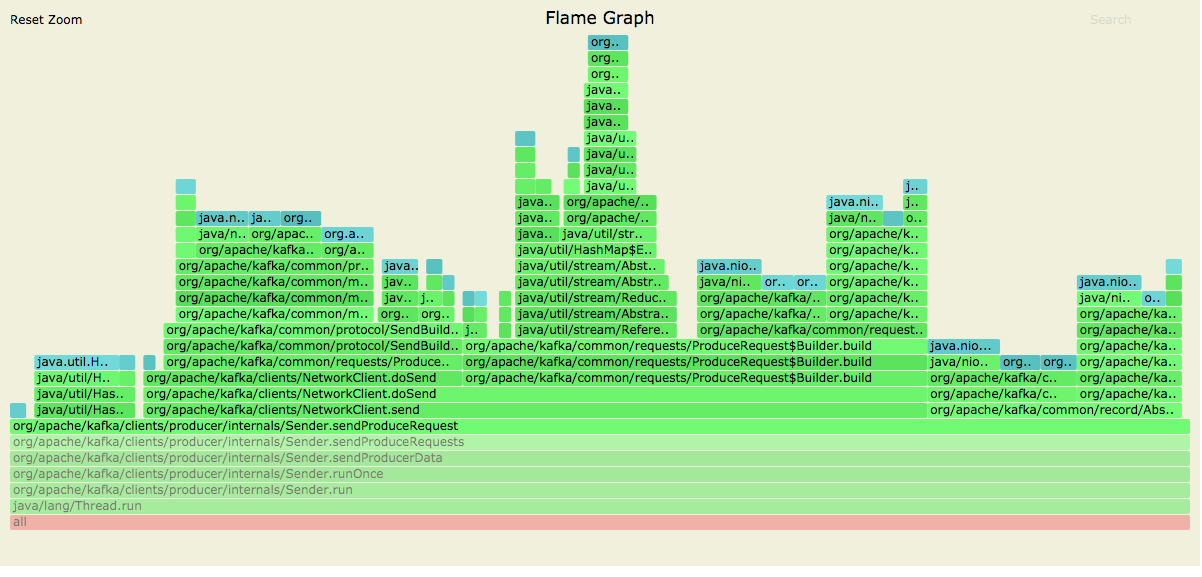

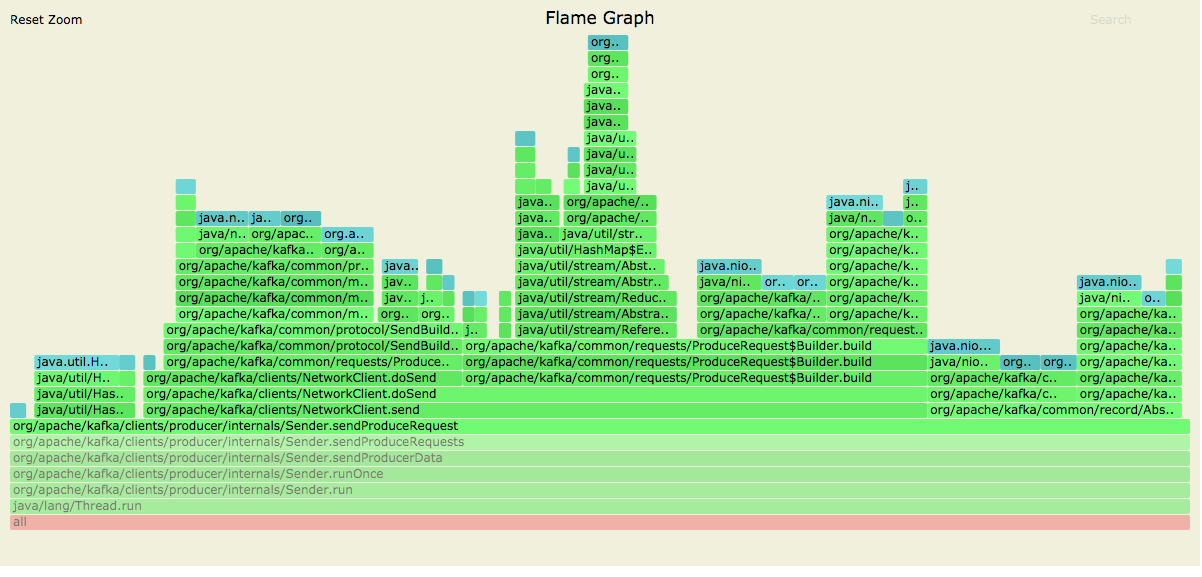

[GitHub] [kafka] hachikuji edited a comment on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji edited a comment on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728383115 Posting allocation flame graphs from the producer before and after this patch:   So we succeeded in getting rid of the extra allocations in the network layer! I generated these graphs using the producer performance test writing to a topic with 10 partitions on a cluster with a single broker. ``` > bin/kafka-producer-perf-test.sh --topic foo --num-records 25000 --throughput -1 --record-size 256 --producer-props bootstrap.servers=localhost:9092 ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9487: KAFKA-9331: Add a streams specific uncaught exception handler

ableegoldman commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r524824649

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamThread.java

##

@@ -559,18 +552,52 @@ void runLoop() {

}

} catch (final TaskCorruptedException e) {

log.warn("Detected the states of tasks " +

e.corruptedTaskWithChangelogs() + " are corrupted. " +

- "Will close the task as dirty and re-create and

bootstrap from scratch.", e);

+"Will close the task as dirty and re-create and

bootstrap from scratch.", e);

try {

taskManager.handleCorruption(e.corruptedTaskWithChangelogs());

} catch (final TaskMigratedException taskMigrated) {

handleTaskMigrated(taskMigrated);

}

} catch (final TaskMigratedException e) {

handleTaskMigrated(e);

+} catch (final UnsupportedVersionException e) {

Review comment:

Mm ok actually I think this should be fine. I was thinking of the

handler as just "swallowing" the exception, but in reality the user would still

let the current thread die and just spin up a new one in its place. And then

the new one would hit this UnsupportedVersionException and so on, until the

brokers are upgraded. So there shouldn't be any way to get into a bad state

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] twobeeb edited a comment on pull request #9589: KAFKA-10710 - Mirror Maker 2 - Create herders only if source->target.enabled=true

twobeeb edited a comment on pull request #9589: URL: https://github.com/apache/kafka/pull/9589#issuecomment-728593310 @ryannedolan @hachikuji If I understand correctly, when setting up a link A->B.enabled=true (with defaults settings regarding heartbeat), it creates a topic heartbeat which produces beats on B I figured it was the other way around, so that the topic could be picked up by replication process, leading to a replicated topic B.heartbeat (which we monitor actually). Looking into my configuration, I now understand that I was lucky because I have one link going up back up ``replica_OLS->replica_CENTRAL`` which is now the single emitter of beats (which are then replicated in every other cluster) Reading the KIP led me to interpret that the production of heartbeat would be done within the same herder (the source one) : > Internal Topics > MM2 emits a heartbeat topic in each source cluster, which is replicated to demonstrate connectivity through the connectors. Wouldn't it make more sense to produce the beats into the source side of the replication ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] gardnervickers commented on a change in pull request #9601: MINOR: Enable flexible versioning for ListOffsetRequest/ListOffsetResponse.

gardnervickers commented on a change in pull request #9601:

URL: https://github.com/apache/kafka/pull/9601#discussion_r524820388

##

File path: core/src/main/scala/kafka/api/ApiVersion.scala

##

@@ -424,6 +426,13 @@ case object KAFKA_2_7_IV2 extends DefaultApiVersion {

val id: Int = 30

}

+case object KAFKA_2_7_IV3 extends DefaultApiVersion {

Review comment:

Thanks, that makes more sense.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] twobeeb edited a comment on pull request #9589: KAFKA-10710 - Mirror Maker 2 - Create herders only if source->target.enabled=true

twobeeb edited a comment on pull request #9589: URL: https://github.com/apache/kafka/pull/9589#issuecomment-728593310 @ryannedolan @hachikuji If I understand correctly, when setting up a link A->B.enabled=true (with defaults settings regarding heartbeat), it creates a topic heartbeat which produces beats on B I figured it was the other way around, so that the topic could be picked up by replication process, leading to a replicated topic B.heartbeat (which we monitor actually). Looking into my configuration, I now understand that I was lucky because I have one link going up back up ``replica_OLS->replica_CENTRAL`` which is now the single emitter of beats (which are then replicated in every other cluster) Reading the KIP led me to interpret that the production of heartbeat would be done within the same herder (the source one) : > Internal Topics > MM2 emits a heartbeat topic in each source cluster, which is replicated to demonstrate connectivity through the connectors. Wouldn't it make more sense to produce the beats from the same "side" of the replication ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] twobeeb commented on pull request #9589: KAFKA-10710 - Mirror Maker 2 - Create herders only if source->target.enabled=true

twobeeb commented on pull request #9589: URL: https://github.com/apache/kafka/pull/9589#issuecomment-728593310 @ryannedolan @hachikuji If I understand correctly, when setting up a link A->B.enabled=true (with defaults settings regarding heartbeat), it creates a topic heartbeat which produces beats on B I figured it was the other way around, so that the topic could be picked up by replication process, leading to a replicated topic B.heartbeat (which we monitor actually). Looking into my configuration, I now understand that I was lucky because I have one link going up back up ``replica_OLS->replica_CENTRAL`` which is now the single emitter of beats (which are then replicated in every other cluster) Reading the KIP lead me to interpret that the production of heartbeat would be done within the same herder (the source one) : > Internal Topics > MM2 emits a heartbeat topic in each source cluster, which is replicated to demonstrate connectivity through the connectors. Wouldn't it make more sense to produce the beats from the same "side" of the replication ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9487: KAFKA-9331: Add a streams specific uncaught exception handler

ableegoldman commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r524819063

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamThread.java

##

@@ -559,18 +552,52 @@ void runLoop() {

}

} catch (final TaskCorruptedException e) {

log.warn("Detected the states of tasks " +

e.corruptedTaskWithChangelogs() + " are corrupted. " +

- "Will close the task as dirty and re-create and

bootstrap from scratch.", e);

+"Will close the task as dirty and re-create and

bootstrap from scratch.", e);

try {

taskManager.handleCorruption(e.corruptedTaskWithChangelogs());

} catch (final TaskMigratedException taskMigrated) {

handleTaskMigrated(taskMigrated);

}

} catch (final TaskMigratedException e) {

handleTaskMigrated(e);

+} catch (final UnsupportedVersionException e) {

Review comment:

Just to clarify I think it's ok to leave this as-is for now, since as

Walker said all handler options are fatal at this point

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9487: KAFKA-9331: Add a streams specific uncaught exception handler

ableegoldman commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r524817135

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -366,6 +374,63 @@ public void setUncaughtExceptionHandler(final

Thread.UncaughtExceptionHandler eh

}

}

+/**

+ * Set the handler invoked when an {@link

StreamsConfig#NUM_STREAM_THREADS_CONFIG internal thread}

+ * throws an unexpected exception.

+ * These might be exceptions indicating rare bugs in Kafka Streams, or they

+ * might be exceptions thrown by your code, for example a

NullPointerException thrown from your processor

+ * logic.

+ *

+ * Note, this handler must be threadsafe, since it will be shared among

all threads, and invoked from any

+ * thread that encounters such an exception.

+ *

+ * @param streamsUncaughtExceptionHandler the uncaught exception handler

of type {@link StreamsUncaughtExceptionHandler} for all internal threads

+ * @throws IllegalStateException if this {@code KafkaStreams} instance is

not in state {@link State#CREATED CREATED}.

+ * @throws NullPointerException if streamsUncaughtExceptionHandler is null.

+ */

+public void setUncaughtExceptionHandler(final

StreamsUncaughtExceptionHandler streamsUncaughtExceptionHandler) {

+final StreamsUncaughtExceptionHandler handler = exception ->

handleStreamsUncaughtException(exception, streamsUncaughtExceptionHandler);

+synchronized (stateLock) {

+if (state == State.CREATED) {

+Objects.requireNonNull(streamsUncaughtExceptionHandler);

+for (final StreamThread thread : threads) {

+thread.setStreamsUncaughtExceptionHandler(handler);

+}

+if (globalStreamThread != null) {

+globalStreamThread.setUncaughtExceptionHandler(handler);

+}

+} else {

+throw new IllegalStateException("Can only set

UncaughtExceptionHandler in CREATED state. " +

+"Current state is: " + state);

+}

+}

+}

+

+private StreamsUncaughtExceptionHandler.StreamThreadExceptionResponse

handleStreamsUncaughtException(final Throwable e,

+

final StreamsUncaughtExceptionHandler

streamsUncaughtExceptionHandler) {

+final StreamsUncaughtExceptionHandler.StreamThreadExceptionResponse

action = streamsUncaughtExceptionHandler.handle(e);

+switch (action) {

+case SHUTDOWN_CLIENT:

+log.error("Encountered the following exception during

processing " +

+"and the registered exception handler opted to \" +

action + \"." +

+" The streams client is going to shut down now. ", e);

+close(Duration.ZERO);

Review comment:

> Since the stream thread is alive when it calls close() there will not

be a deadlock anymore. So, why do we call close() with duration zero

@cadonna can you clarify? I thought we would still be in danger of deadlock

if we use the blocking `close()`, since `close()` will not return until every

thread has joined but the StreamThread that called `close()` would be stuck in

this blocking call and thus never stop/join

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9487: KAFKA-9331: Add a streams specific uncaught exception handler

ableegoldman commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r524814932

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamThread.java

##

@@ -559,18 +552,52 @@ void runLoop() {

}

} catch (final TaskCorruptedException e) {

log.warn("Detected the states of tasks " +

e.corruptedTaskWithChangelogs() + " are corrupted. " +

- "Will close the task as dirty and re-create and

bootstrap from scratch.", e);

+"Will close the task as dirty and re-create and

bootstrap from scratch.", e);

try {

taskManager.handleCorruption(e.corruptedTaskWithChangelogs());

} catch (final TaskMigratedException taskMigrated) {

handleTaskMigrated(taskMigrated);

}

} catch (final TaskMigratedException e) {

handleTaskMigrated(e);

+} catch (final UnsupportedVersionException e) {

Review comment:

That's a fair point about broker upgrades, but don't we require the

brokers to be upgraded to a version that supports EOS _before_ turning on

eos-beta?

Anyways I was wondering if there was something special about this exception

such that ignoring it could violate eos or corrupt the state of the program.

I'll ping the eos experts to assuage my concerns

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728545356 It would be helpful if someone can reproduce the tests I did to make sure it is not something funky in my environment. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ijuma commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

ijuma commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728531187 That is really weird. The difference seems significant enough that we need to understand it better before we can merge IMO. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on pull request #9583: [KAFKA-10705]: Make state stores not readable by others

ableegoldman commented on pull request #9583: URL: https://github.com/apache/kafka/pull/9583#issuecomment-728523131 I don't think so. It would be nice to have if you happen to end up cutting a new RC, but I wouldn't delay the ongoing release over this This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jolshan commented on pull request #9590: KAFKA-7556: KafkaConsumer.beginningOffsets does not return actual first offsets

jolshan commented on pull request #9590: URL: https://github.com/apache/kafka/pull/9590#issuecomment-728513284 Also looks like the test I added may be flaky, so I'll take a look at that. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728486374 Yeah, there is something strange going on, especially in regard to latency. Running the same producer performance test, I saw the following: ``` Patch: 25000 records sent, 1347222.297068 records/sec (328.91 MB/sec), 91.98 ms avg latency, 1490.00 ms max latency, 71 ms 50th, 242 ms 95th, 320 ms 99th, 728 ms 99.9th. Trunk: 25000 records sent, 1426264.954388 records/sec (348.21 MB/sec), 15.11 ms avg latency, 348.00 ms max latency, 3 ms 50th, 94 ms 95th, 179 ms 99th, 265 ms 99.9th. ``` I was able to reproduce similar results several times. Take this with a grain of salt, but from the flame graphs, I see the following differences: `RequestContext.parseRequest`: 1% -> 0.45% `RequestUtils.hasTransactionalRecords`: 0% -> 0.59% `RequestUtils.hasIdempotentRecords`: 0% -> 0.14% `KafkaApis.sendResponseCallback`: 3.20% -> 2.33% `KafkaApis.clearPartitionRecords`: 0% -> 0.16% I think `hasTransactionalRecords` and `hasIdempotentRecords` are the most obvious optimization targets (they also show up in allocations), but I do not think they explain the increase in latency. Just to be sure, I commented out these lines and I got similar results. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-10062) Add a method to retrieve the current timestamp as known by the Streams app

[ https://issues.apache.org/jira/browse/KAFKA-10062?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233170#comment-17233170 ] William Bottrell commented on KAFKA-10062: -- Go ahead and take over. I had left off at the KIP approval step. > Add a method to retrieve the current timestamp as known by the Streams app > -- > > Key: KAFKA-10062 > URL: https://issues.apache.org/jira/browse/KAFKA-10062 > Project: Kafka > Issue Type: Improvement > Components: streams >Reporter: Piotr Smolinski >Assignee: William Bottrell >Priority: Major > Labels: needs-kip, newbie > > Please add to the ProcessorContext a method to retrieve current timestamp > compatible with Punctuator#punctate(long) method. > Proposal in ProcessorContext: > long getTimestamp(PunctuationType type); > The method should return time value as known by the Punctuator scheduler with > the respective PunctuationType. > The use-case is tracking of a process with timeout-based escalation. > A transformer receives process events and in case of missing an event execute > an action (emit message) after given escalation timeout (several stages). The > initial message may already arrive with reference timestamp in the past and > may trigger different action upon arrival depending on how far in the past it > is. > If the timeout should be computed against some further time only, Punctuator > is perfectly sufficient. The problem is that I have to evaluate the current > time-related state once the message arrives. > I am using wall-clock time. Normally accessing System.currentTimeMillis() is > sufficient, but it breaks in unit testing with TopologyTestDriver, where the > app wall clock time is different from the system-wide one. > To access the mentioned clock I am using reflection to access > ProcessorContextImpl#task and then StreamTask#time. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10062) Add a method to retrieve the current timestamp as known by the Streams app

[ https://issues.apache.org/jira/browse/KAFKA-10062?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233158#comment-17233158 ] Rohit Deshpande commented on KAFKA-10062: - Thanks [~cadonna] I will wait for the response. > Add a method to retrieve the current timestamp as known by the Streams app > -- > > Key: KAFKA-10062 > URL: https://issues.apache.org/jira/browse/KAFKA-10062 > Project: Kafka > Issue Type: Improvement > Components: streams >Reporter: Piotr Smolinski >Assignee: William Bottrell >Priority: Major > Labels: needs-kip, newbie > > Please add to the ProcessorContext a method to retrieve current timestamp > compatible with Punctuator#punctate(long) method. > Proposal in ProcessorContext: > long getTimestamp(PunctuationType type); > The method should return time value as known by the Punctuator scheduler with > the respective PunctuationType. > The use-case is tracking of a process with timeout-based escalation. > A transformer receives process events and in case of missing an event execute > an action (emit message) after given escalation timeout (several stages). The > initial message may already arrive with reference timestamp in the past and > may trigger different action upon arrival depending on how far in the past it > is. > If the timeout should be computed against some further time only, Punctuator > is perfectly sufficient. The problem is that I have to evaluate the current > time-related state once the message arrives. > I am using wall-clock time. Normally accessing System.currentTimeMillis() is > sufficient, but it breaks in unit testing with TopologyTestDriver, where the > app wall clock time is different from the system-wide one. > To access the mentioned clock I am using reflection to access > ProcessorContextImpl#task and then StreamTask#time. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] ijuma commented on a change in pull request #9566: KAFKA-10618: Update to Uuid class

ijuma commented on a change in pull request #9566:

URL: https://github.com/apache/kafka/pull/9566#discussion_r524738967

##

File path: clients/src/test/java/org/apache/kafka/common/UuidTest.java

##

@@ -21,50 +21,50 @@

import static org.junit.Assert.assertEquals;

import static org.junit.Assert.assertNotEquals;

-public class UUIDTest {

+public class UuidTest {

@Test

public void testSignificantBits() {

-UUID id = new UUID(34L, 98L);

+Uuid id = new Uuid(34L, 98L);

assertEquals(id.getMostSignificantBits(), 34L);

assertEquals(id.getLeastSignificantBits(), 98L);

}

@Test

-public void testUUIDEquality() {

-UUID id1 = new UUID(12L, 13L);

-UUID id2 = new UUID(12L, 13L);

-UUID id3 = new UUID(24L, 38L);

+public void testUuidEquality() {

Review comment:

We don't have to specify it, but it would be good to ensure we have a

test for the actual hashCode we're implementing. At the moment, we are only

verifying that the hashCode is the same for two equal UUIDs and different for

two unequal UUIDs. One option would be to have a few tests where we verify that

the result is what we expect it to be.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #9570: KAFKA-9274: Handle TimeoutException on commit

vvcephei commented on a change in pull request #9570:

URL: https://github.com/apache/kafka/pull/9570#discussion_r524564694

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamTask.java

##

@@ -817,13 +817,15 @@ private void initializeMetadata() {

.filter(e -> e.getValue() != null)

.collect(Collectors.toMap(Map.Entry::getKey,

Map.Entry::getValue));

initializeTaskTime(offsetsAndMetadata);

-} catch (final TimeoutException e) {

-log.warn("Encountered {} while trying to fetch committed offsets,

will retry initializing the metadata in the next loop." +

-"\nConsider overwriting consumer config {} to a

larger value to avoid timeout errors",

-e.toString(),

-ConsumerConfig.DEFAULT_API_TIMEOUT_MS_CONFIG);

-

-throw e;

+} catch (final TimeoutException timeoutException) {

+log.warn(

+"Encountered {} while trying to fetch committed offsets, will

retry initializing the metadata in the next loop." +

+"\nConsider overwriting consumer config {} to a larger

value to avoid timeout errors",

+time.toString(),

+ConsumerConfig.DEFAULT_API_TIMEOUT_MS_CONFIG);

Review comment:

It might still be nice to see the stacktrace here (even if it also gets

logged elsewhere). If you want to do it, don't forget you have to change to

using `String.format` for the variable substitution.

I don't feel strongly in this case, so I'll defer to you whether you want to

do this or not.

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/TaskManager.java

##

@@ -1029,20 +1048,40 @@ int commit(final Collection tasksToCommit) {

return -1;

} else {

int committed = 0;

-final Map>

consumedOffsetsAndMetadataPerTask = new HashMap<>();

-for (final Task task : tasksToCommit) {

+final Map>

consumedOffsetsAndMetadataPerTask = new HashMap<>();

+final Iterator it = tasksToCommit.iterator();

+while (it.hasNext()) {

+final Task task = it.next();

if (task.commitNeeded()) {

final Map

offsetAndMetadata = task.prepareCommit();

if (task.isActive()) {

-consumedOffsetsAndMetadataPerTask.put(task.id(),

offsetAndMetadata);

+consumedOffsetsAndMetadataPerTask.put(task,

offsetAndMetadata);

}

+} else {

+it.remove();

}

}

-commitOffsetsOrTransaction(consumedOffsetsAndMetadataPerTask);

+final Set uncommittedTasks = new HashSet<>();

+try {

+commitOffsetsOrTransaction(consumedOffsetsAndMetadataPerTask);

+tasksToCommit.forEach(Task::clearTaskTimeout);

+} catch (final TaskTimeoutExceptions taskTimeoutExceptions) {

+final TimeoutException timeoutException =

taskTimeoutExceptions.timeoutException();

+if (timeoutException != null) {

+tasksToCommit.forEach(t ->

t.maybeInitTaskTimeoutOrThrow(time.milliseconds(), timeoutException));

+uncommittedTasks.addAll(tasksToCommit);

+} else {

+for (final Map.Entry

timeoutExceptions : taskTimeoutExceptions.exceptions().entrySet()) {

+final Task task = timeoutExceptions.getKey();

+task.maybeInitTaskTimeoutOrThrow(time.milliseconds(),

timeoutExceptions.getValue());

+uncommittedTasks.add(task);

+}

+}

+}

for (final Task task : tasksToCommit) {

-if (task.commitNeeded()) {

+if (!uncommittedTasks.contains(task)) {

++committed;

task.postCommit(false);

Review comment:

maybe we should move `clearTaskTimeout` here, in case some of the tasks

timed out, but not all?

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/TaskManager.java

##

@@ -1029,20 +1048,40 @@ int commit(final Collection tasksToCommit) {

return -1;

} else {

int committed = 0;

-final Map>

consumedOffsetsAndMetadataPerTask = new HashMap<>();

-for (final Task task : tasksToCommit) {

+final Map>

consumedOffsetsAndMetadataPerTask = new HashMap<>();

+final Iterator it = tasksToCommit.iterator();

Review comment:

I was initially worried about potential side-effects of removing from

the input collection, but on second thought, maybe it's reasonable (considering

the usage of this method) to assume that

[GitHub] [kafka] jolshan commented on a change in pull request #9566: KAFKA-10618: Update to Uuid class

jolshan commented on a change in pull request #9566:

URL: https://github.com/apache/kafka/pull/9566#discussion_r524727151

##

File path: clients/src/test/java/org/apache/kafka/common/UuidTest.java

##

@@ -21,50 +21,50 @@

import static org.junit.Assert.assertEquals;

import static org.junit.Assert.assertNotEquals;

-public class UUIDTest {

+public class UuidTest {

@Test

public void testSignificantBits() {

-UUID id = new UUID(34L, 98L);

+Uuid id = new Uuid(34L, 98L);

assertEquals(id.getMostSignificantBits(), 34L);

assertEquals(id.getLeastSignificantBits(), 98L);

}

@Test

-public void testUUIDEquality() {

-UUID id1 = new UUID(12L, 13L);

-UUID id2 = new UUID(12L, 13L);

-UUID id3 = new UUID(24L, 38L);

+public void testUuidEquality() {

Review comment:

We don't have that yet. I also didn't specify this behavior. Should I?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ijuma commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

ijuma commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728385188 Nice! So what's the reason for the small regression in the PR description? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji edited a comment on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji edited a comment on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728383115 Posting allocation flame graphs from the producer before and after this patch:   So we succeeded in getting rid of the extra allocations in the network layer! I generated these graphs using the producer performance test writing to a topic with 10 partitions on a cluster with a single broker. ``` > bin/kafka-producer-perf-test.sh --topic foo --num-records 25000 --throughput -1 --record-size 256 --producer-props bootstrap.servers=localhost:9092 ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ijuma commented on a change in pull request #9566: KAFKA-10618: Update to Uuid class

ijuma commented on a change in pull request #9566:

URL: https://github.com/apache/kafka/pull/9566#discussion_r524722302

##

File path: clients/src/test/java/org/apache/kafka/common/UuidTest.java

##

@@ -21,50 +21,50 @@

import static org.junit.Assert.assertEquals;

import static org.junit.Assert.assertNotEquals;

-public class UUIDTest {

+public class UuidTest {

@Test

public void testSignificantBits() {

-UUID id = new UUID(34L, 98L);

+Uuid id = new Uuid(34L, 98L);

assertEquals(id.getMostSignificantBits(), 34L);

assertEquals(id.getLeastSignificantBits(), 98L);

}

@Test

-public void testUUIDEquality() {

-UUID id1 = new UUID(12L, 13L);

-UUID id2 = new UUID(12L, 13L);

-UUID id3 = new UUID(24L, 38L);

+public void testUuidEquality() {

Review comment:

Can we add a test that verifies that the `hashCode` is the same for our

`Uuid` and Java's `UUID`? Or do we have that already?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

hachikuji commented on pull request #9401: URL: https://github.com/apache/kafka/pull/9401#issuecomment-728383115 Posting allocation flame graphs from the producer before and after this patch:   So we succeeded in getting rid of the extra allocations in the network layer! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (KAFKA-10729) KIP-482: Bump remaining RPC's to use tagged fields

[

https://issues.apache.org/jira/browse/KAFKA-10729?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Gardner Vickers updated KAFKA-10729:

Summary: KIP-482: Bump remaining RPC's to use tagged fields (was: KIP-482:

Bump remaining RPC's to use flexible versions)

> KIP-482: Bump remaining RPC's to use tagged fields

> --

>

> Key: KAFKA-10729

> URL: https://issues.apache.org/jira/browse/KAFKA-10729

> Project: Kafka

> Issue Type: Improvement

>Reporter: Gardner Vickers

>Assignee: Gardner Vickers

>Priority: Major

>

> With

> [KIP-482|https://cwiki.apache.org/confluence/display/KAFKA/KIP-482%3A+The+Kafka+Protocol+should+Support+Optional+Tagged+Fields],

> the Kafka protocol gained support for tagged fields.

> Not all RPC's were bumped to use flexible versioning and tagged fields. We

> should bump the remaining RPC's and provide a new IBP to take advantage of

> tagged fields via the flexible versioning mechanism.

>

> The RPC's which need to be bumped are:

>

> {code:java}

> AddOffsetsToTxnRequest

> AddOffsetsToTxnResponse

> AddPartitionsToTxnRequest

> AddPartitionsToTxnResponse

> AlterClientQuotasRequest

> AlterClientQuotasResponse

> AlterConfigsRequest

> AlterConfigsResponse

> AlterReplicaLogDirsRequest

> AlterReplicaLogDirsResponse

> DescribeClientQuotasRequest

> DescribeClientQuotasResponse

> DescribeConfigsRequest

> DescribeConfigsResponse

> EndTxnRequest

> EndTxnResponse

> ListOffsetRequest

> ListOffsetResponse

> OffsetForLeaderEpochRequest

> OffsetForLeaderEpochResponse

> ProduceRequest

> ProduceResponse

> WriteTxnMarkersRequest

> WriteTxnMarkersResponse

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (KAFKA-10729) KIP-482: Bump remaining RPC's to use flexible versions

Gardner Vickers created KAFKA-10729:

---

Summary: KIP-482: Bump remaining RPC's to use flexible versions

Key: KAFKA-10729

URL: https://issues.apache.org/jira/browse/KAFKA-10729

Project: Kafka

Issue Type: Improvement

Reporter: Gardner Vickers

Assignee: Gardner Vickers

With

[KIP-482|https://cwiki.apache.org/confluence/display/KAFKA/KIP-482%3A+The+Kafka+Protocol+should+Support+Optional+Tagged+Fields],

the Kafka protocol gained support for tagged fields.

Not all RPC's were bumped to use flexible versioning and tagged fields. We

should bump the remaining RPC's and provide a new IBP to take advantage of

tagged fields via the flexible versioning mechanism.

The RPC's which need to be bumped are:

{code:java}

AddOffsetsToTxnRequest

AddOffsetsToTxnResponse

AddPartitionsToTxnRequest

AddPartitionsToTxnResponse

AlterClientQuotasRequest

AlterClientQuotasResponse

AlterConfigsRequest

AlterConfigsResponse

AlterReplicaLogDirsRequest

AlterReplicaLogDirsResponse

DescribeClientQuotasRequest

DescribeClientQuotasResponse

DescribeConfigsRequest

DescribeConfigsResponse

EndTxnRequest

EndTxnResponse

ListOffsetRequest

ListOffsetResponse

OffsetForLeaderEpochRequest

OffsetForLeaderEpochResponse

ProduceRequest

ProduceResponse

WriteTxnMarkersRequest

WriteTxnMarkersResponse

{code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] junrao commented on a change in pull request #9596: KAFKA-10723: Fix LogManager shutdown error handling

junrao commented on a change in pull request #9596:

URL: https://github.com/apache/kafka/pull/9596#discussion_r524673732

##

File path: core/src/main/scala/kafka/log/LogManager.scala

##

@@ -477,27 +477,41 @@ class LogManager(logDirs: Seq[File],

jobs(dir) = jobsForDir.map(pool.submit).toSeq

}

+var firstExceptionOpt: Option[Throwable] = Option.empty

try {

for ((dir, dirJobs) <- jobs) {

-dirJobs.foreach(_.get)

+val errorsForDirJobs = dirJobs.map {

+ future =>

+try {

+ future.get

+ Option.empty

+} catch {

+ case e: ExecutionException =>

+error(s"There was an error in one of the threads during

LogManager shutdown: ${e.getCause}")

+Some(e.getCause)

+}

+}.filter{ e => e.isDefined }.map{ e => e.get }

+

+if (firstExceptionOpt.isEmpty) {

+ firstExceptionOpt = errorsForDirJobs.headOption

+}

-val logs = logsInDir(localLogsByDir, dir)

+if (errorsForDirJobs.isEmpty) {

+ val logs = logsInDir(localLogsByDir, dir)

-// update the last flush point

-debug(s"Updating recovery points at $dir")

-checkpointRecoveryOffsetsInDir(dir, logs)

+ // update the last flush point

+ debug(s"Updating recovery points at $dir")

+ checkpointRecoveryOffsetsInDir(dir, logs)

-debug(s"Updating log start offsets at $dir")

-checkpointLogStartOffsetsInDir(dir, logs)

+ debug(s"Updating log start offsets at $dir")

+ checkpointLogStartOffsetsInDir(dir, logs)

-// mark that the shutdown was clean by creating marker file

-debug(s"Writing clean shutdown marker at $dir")

-CoreUtils.swallow(Files.createFile(new File(dir,

Log.CleanShutdownFile).toPath), this)

+ // mark that the shutdown was clean by creating marker file

+ debug(s"Writing clean shutdown marker at $dir")

+ CoreUtils.swallow(Files.createFile(new File(dir,

Log.CleanShutdownFile).toPath), this)

+}

}

-} catch {

- case e: ExecutionException =>

-error(s"There was an error in one of the threads during LogManager

shutdown: ${e.getCause}")

-throw e.getCause

+ firstExceptionOpt.foreach{ e => throw e}

Review comment:

Hmm, since we are about to shut down the JVM, should we just log a WARN

here instead of throwing the exception?

##

File path: core/src/test/scala/unit/kafka/log/LogManagerTest.scala

##

@@ -83,6 +87,51 @@ class LogManagerTest {

log.appendAsLeader(TestUtils.singletonRecords("test".getBytes()),

leaderEpoch = 0)

}

+ /**

+ * Tests that all internal futures are completed before

LogManager.shutdown() returns to the

+ * caller during error situations.

+ */

+ @Test

+ def testHandlingExceptionsDuringShutdown(): Unit = {

+logManager.shutdown()

Review comment:

Hmm, do we need this given that we do this in tearDown() already?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jolshan commented on pull request #9590: KAFKA-7556: KafkaConsumer.beginningOffsets does not return actual first offsets

jolshan commented on pull request #9590: URL: https://github.com/apache/kafka/pull/9590#issuecomment-728360755 I've updated the code to delay creating a new segment until there is a non-compacted record. If a segment is never created in cleanSegments, the old segments are simply deleted rather than replaced. I had to change some code surrounding the transactionMetadata that allows a delay before updating the transactionIndex of a segment until the segment is actually created. Any aborted transactions will be added once the segment is created. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jolshan commented on a change in pull request #9590: KAFKA-7556: KafkaConsumer.beginningOffsets does not return actual first offsets

jolshan commented on a change in pull request #9590: URL: https://github.com/apache/kafka/pull/9590#discussion_r524659149 ## File path: core/src/main/scala/kafka/log/LogCleaner.scala ## @@ -711,6 +723,9 @@ private[log] class Cleaner(val id: Int, shallowOffsetOfMaxTimestamp = result.shallowOffsetOfMaxTimestamp, records = retained) throttler.maybeThrottle(outputBuffer.limit()) +if (newCanUpdateBaseOffset) + dest.updateBaseOffset(result.minOffset()) +newCanUpdateBaseOffset = false Review comment: I've updated to delete segments that end up being empty. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ryannedolan commented on pull request #9589: KAFKA-10710 - Mirror Maker 2 - Create herders only if source->target.enabled=true

ryannedolan commented on pull request #9589: URL: https://github.com/apache/kafka/pull/9589#issuecomment-728354718 > The code seems to explicitly allow the connector to be created even when the link is disabled. > @ryannedolan maybe you could clarify? My intention was to ensure that MirrorHeartbeatConnector always runs, even when a link/flow is not otherwise needed. This is because MirrorHeartbeatConnector is most useful when it emits to all clusters, not just those clusters targeted by some flow. For example, in a two-cluster environment with only A->B replicated, it is nice to have heartbeats emitted to A s.t. they get replicated to B. Without a Herder targeting A, there can be no MirrorHeartbeatConnector emitting heartbeats there, and B will see no heartbeats from A. I know that some vendors/providers use heartbeats in this way, e.g. for discovering which flows are active and healthy. And I know that some vendors/providers don't use heartbeats at all, or use something else to send them (instead of MirrorHeartbeatConnector). Hard to say whether anything would break if we nixed these extra herders without addressing the heartbeats that would go missing. IMO, we'd ideally skip creating the A->B herder whenever A->B.emit.heartbeats.enabled=false (defaults to true) and A->B.enabled=false (defaults to false). A top-level emit.heartbeats.enabled=false would then disable heartbeats altogether, which would trivially eliminate the extra herders. N.B. this would just be an optimization and wouldn't required a KIP, IMO. Ryanne This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-10722) Timestamped store is used even if not desired

[

https://issues.apache.org/jira/browse/KAFKA-10722?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17233116#comment-17233116

]

fml2 commented on KAFKA-10722:

--

OK, I accept that Kafka Streams needs timestamps for the internal processing.

But I still fail to see why all the users (clients) are imposed to use it. It

adds an additional (I assume, rarely needed) wrapping layer

(`ValueAndTimestamp` with the value within in being a `KeyValues` vs. just

`KeyValue`). But OK, I accepti it.

I can't see why a method can't be deprecated. Deprecation does not change the

API, the code will still work. Just the IDE will issue a warning alert if the

method is used.

And you are of course right that I can just use `Materialized.as("MyStore")`.

Actually this is what I did first. And got a `ClassCastException`. And started

to investigate the case. And wanted to guarantee that I get a non-timestamped

store – but could not get it. And hence this ticket :).

I also saw the upgrade note for 2.3.0. I understood the words "Some DSL

operators (for example KTables) are using those new stores." as "they can use

them" or "they use them internally" – but not as "you will always get the new

store type and should unwrap timestamped values". And "you might need to update

your code to cast to the correct type" did not sound very obligatory too.

Would it make sense to introduce the method "value()" (or similar) that would

return the real data – both for a `KeyValue` and a `ValueAndTimestamp`? This

would be confusing for `ValueAndTimestamp` though since `value` (the field)

would return a `KeyValue` but `value()` (the method) would return the value

part of the KeyValue.

Another note is that I could not find the explanation of the values of

timestamps used in Kafka Streams. I found out this is a millis epoch. But,

judging just by the type, it could have been the nano epoch. Using e.g.

`Instance` would eliminate the question. But this is spread over so many places

that I assume a change is not possible. Besides, this is another topic.

Thank you for your replies!

> Timestamped store is used even if not desired

> -

>

> Key: KAFKA-10722

> URL: https://issues.apache.org/jira/browse/KAFKA-10722

> Project: Kafka

> Issue Type: Bug

> Components: streams

>Affects Versions: 2.4.1, 2.6.0

>Reporter: fml2

>Priority: Major

>

> I have a stream which I then group and aggregate (this results in a KTable).

> When aggregating, I explicitly tell to materialize the result table using a

> usual (not timestamped) store.

> After that, the KTable is filtered and streamed. This stream is processed by

> a processor that accesses the store.

> The problem/bug is that even if I tell to use a non-timestamped store, a

> timestamped one is used, which leads to a ClassCastException in the processor

> (it iterates over the store and expects the items to be of type "KeyValue"

> but they are of type "ValueAndTimestamp").

> Here is the code (schematically).

> First, I define the topology:

> {code:java}

> KTable table = ...aggregate(

> initializer, // initializer for the KTable row

> aggregator, // aggregator

> Materialized.as(Stores.persistentKeyValueStore("MyStore")) // <--

> Non-Timestamped!

> .withKeySerde(...).withValueSerde(...));

> table.toStream().process(theProcessor);

> {code}

> In the class for the processor:

> {code:java}

> public void init(ProcessorContext context) {

>var store = context.getStateStore("MyStore"); // Returns a

> TimestampedKeyValueStore!

> }

> {code}

> A timestamped store is returned even if I explicitly told to use a

> non-timestamped one!

>

> I tried to find the cause for this behaviour and think that I've found it. It

> lies in this line:

> [https://github.com/apache/kafka/blob/cfc813537e955c267106eea989f6aec4879e14d7/streams/src/main/java/org/apache/kafka/streams/kstream/internals/KGroupedStreamImpl.java#L241]

> There, TimestampedKeyValueStoreMaterializer is used regardless of whether

> materialization supplier is a timestamped one or not.

> I think this is a bug.

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] ijuma commented on a change in pull request #9601: MINOR: Enable flexible versioning for ListOffsetRequest/ListOffsetResponse.

ijuma commented on a change in pull request #9601:

URL: https://github.com/apache/kafka/pull/9601#discussion_r524636462

##

File path: core/src/main/scala/kafka/api/ApiVersion.scala

##

@@ -424,6 +426,13 @@ case object KAFKA_2_7_IV2 extends DefaultApiVersion {

val id: Int = 30

}

+case object KAFKA_2_7_IV3 extends DefaultApiVersion {

Review comment:

2.7 has been branched. It should be 2.8, right?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] twobeeb commented on pull request #9589: KAFKA-10710 - Mirror Maker 2 - Create herders only if source->target.enabled=true

twobeeb commented on pull request #9589: URL: https://github.com/apache/kafka/pull/9589#issuecomment-728339571 Thank you for your help @hachikuji. I agree with your analysis and it kind of makes sense for most use-cases with 2-3 clusters as described in the original KIP. I also understand that the original intent was that the ``clusterPairs`` variable would not grow exponential thanks to the command line parameter ``--clusters`` which is target-based (not source-based). But this parameter doesn't help the business case we are implementing which can be better viewed as one "central cluster" and multiple "local clusters": - Some topics (_schema is a perfect example) must be replicated down to every local cluster, - and some "local" topics will be replicated up to the central cluster. As stated in the KAFKA-10710, I cherry picked this commit in 2.5.2 and we are now running this build of MirrorMaker in production, because we can't ramp up deployment with current code as it stands. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] wcarlson5 commented on a change in pull request #9487: KAFKA-9331: Add a streams specific uncaught exception handler

wcarlson5 commented on a change in pull request #9487:

URL: https://github.com/apache/kafka/pull/9487#discussion_r524540416

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamsPartitionAssignor.java

##

@@ -255,8 +255,9 @@ public ByteBuffer subscriptionUserData(final Set

topics) {

taskManager.processId(),

userEndPoint,

taskManager.getTaskOffsetSums(),

-uniqueField)

-.encode();

+uniqueField,

+(byte) assignmentErrorCode.get()

Review comment:

I guess I must have misunderstood your earlier comment. I thought you

wanted it to stay a byte so that is why I pushed back. But if you have no

objections I will just change it

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.