[GitHub] [spark] beliefer commented on a diff in pull request #39660: [SPARK-42128][SQL] Support TOP (N) for MS SQL Server dialect as an alternative to Limit pushdown

beliefer commented on code in PR #39660:

URL: https://github.com/apache/spark/pull/39660#discussion_r1083729512

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/JdbcDialects.scala:

##

@@ -544,6 +544,14 @@ abstract class JdbcDialect extends Serializable with

Logging {

if (limit > 0 ) s"LIMIT $limit" else ""

}

+ /**

+ * MS SQL Server version of `getLimitClause`.

+ * This is only supported by SQL Server as it uses TOP (N) instead.

+ */

+ def getTopExpression(limit: Integer): String = {

Review Comment:

I think we should define the different syntax by dialect themself. I exact

the API getSQLText so as some dialect could implement it in special way.

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/JdbcDialects.scala:

##

@@ -544,6 +544,14 @@ abstract class JdbcDialect extends Serializable with

Logging {

if (limit > 0 ) s"LIMIT $limit" else ""

}

+ /**

+ * MS SQL Server version of `getLimitClause`.

+ * This is only supported by SQL Server as it uses TOP (N) instead.

+ */

+ def getTopExpression(limit: Integer): String = {

Review Comment:

I think we should define the different syntax by dialect themself. I exact

the API `getSQLText` so as some dialect could implement it in special way.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic opened a new pull request, #39706: [SPARK-42158][SQL] Integrate `_LEGACY_ERROR_TEMP_1003` into `FIELD_NOT_FOUND`

itholic opened a new pull request, #39706: URL: https://github.com/apache/spark/pull/39706 ### What changes were proposed in this pull request? This PR proposes to integrate `_LEGACY_ERROR_TEMP_1003` into `FIELD_NOT_FOUND` ### Why are the changes needed? We should deduplicate the similar error classes into single error class by merging them. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Fixed exiting UTs. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on a diff in pull request #39508: [SPARK-41985][SQL] Centralize more column resolution rules

viirya commented on code in PR #39508: URL: https://github.com/apache/spark/pull/39508#discussion_r1083698974 ## sql/core/src/test/resources/sql-tests/inputs/group-by.sql: ## @@ -45,6 +45,15 @@ SELECT COUNT(DISTINCT b), COUNT(DISTINCT b, c) FROM (SELECT 1 AS a, 2 AS b, 3 AS SELECT a AS k, COUNT(b) FROM testData GROUP BY k; SELECT a AS k, COUNT(b) FROM testData GROUP BY k HAVING k > 1; +-- GROUP BY alias is not triggered if SELECT list has lateral column alias. +SELECT 1 AS x, x + 1 AS k FROM testData GROUP BY k; + +-- GROUP BY alias is not triggered if SELECT list has outer reference. +SELECT * FROM testData WHERE a = 1 AND EXISTS (SELECT a AS k GROUP BY k); + +-- GROUP BY alias inside subquery expression with conflicting outer reference +SELECT * FROM testData WHERE a = 1 AND EXISTS (SELECT 1 AS a GROUP BY a); + Review Comment: GROUP BY alias takes precedence than outer reference? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #39703: [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler

dongjoon-hyun commented on PR #39703: URL: https://github.com/apache/spark/pull/39703#issuecomment-1399888545 No problem. I totally understand your concern on the usage of template file. I'll also think about a new way. Thank you for your thoughtful review, @mridulm . -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on pull request #39703: [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler

mridulm commented on PR #39703: URL: https://github.com/apache/spark/pull/39703#issuecomment-1399887383 Looks like I misunderstood the PR, I see what you mean @dongjoon-hyun. I am not sure what is a good way to make progress here ... let me think about it more. +CC @tgravescs, @Ngone51 in case you have thoughts. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on a diff in pull request #39508: [SPARK-41985][SQL] Centralize more column resolution rules

viirya commented on code in PR #39508: URL: https://github.com/apache/spark/pull/39508#discussion_r1083695957 ## sql/core/src/test/resources/sql-tests/inputs/group-by.sql: ## @@ -45,6 +45,15 @@ SELECT COUNT(DISTINCT b), COUNT(DISTINCT b, c) FROM (SELECT 1 AS a, 2 AS b, 3 AS SELECT a AS k, COUNT(b) FROM testData GROUP BY k; SELECT a AS k, COUNT(b) FROM testData GROUP BY k HAVING k > 1; +-- GROUP BY alias is not triggered if SELECT list has lateral column alias. +SELECT 1 AS x, x + 1 AS k FROM testData GROUP BY k; + +-- GROUP BY alias is not triggered if SELECT list has outer reference. +SELECT * FROM testData WHERE a = 1 AND EXISTS (SELECT a AS k GROUP BY k); Review Comment: If it is not group by alias but group by outer reference, it works? From `ResolveReferencesInAggregate` seems so, just want to confirm. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #39703: [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler

dongjoon-hyun commented on code in PR #39703:

URL: https://github.com/apache/spark/pull/39703#discussion_r1083692686

##

core/src/main/scala/org/apache/spark/scheduler/SchedulableBuilder.scala:

##

@@ -86,10 +87,17 @@ private[spark] class FairSchedulableBuilder(val rootPool:

Pool, sc: SparkContext

logInfo(s"Creating Fair Scheduler pools from default file:

$DEFAULT_SCHEDULER_FILE")

Some((is, DEFAULT_SCHEDULER_FILE))

} else {

- logWarning("Fair Scheduler configuration file not found so jobs will

be scheduled in " +

-s"FIFO order. To use fair scheduling, configure pools in

$DEFAULT_SCHEDULER_FILE or " +

-s"set ${SCHEDULER_ALLOCATION_FILE.key} to a file that contains the

configuration.")

- None

+ val is =

Utils.getSparkClassLoader.getResourceAsStream(DEFAULT_SCHEDULER_TEMPLATE_FILE)

+ if (is != null) {

+logInfo("Creating Fair Scheduler pools from default template file:

" +

+ s"$DEFAULT_SCHEDULER_TEMPLATE_FILE.")

+Some((is, DEFAULT_SCHEDULER_TEMPLATE_FILE))

+ } else {

+logWarning("Fair Scheduler configuration file not found so jobs

will be scheduled in " +

+ s"FIFO order. To use fair scheduling, configure pools in

$DEFAULT_SCHEDULER_FILE " +

+ s"or set ${SCHEDULER_ALLOCATION_FILE.key} to a file that

contains the configuration.")

+None

+ }

Review Comment:

First of all, this is not a testing issue. As I wrote in the PR description,

our documentation is wrong. It says `spark.scheduler.mode=FAIR` will return a

FAIR scheduler. However, we are getting `FIFO` scheduler now.

> Note - if this is only for testing, we can special case it that way via

spark.testing

`None` is the previous behavior which ends up with `FIFO` scheduler with the

WARNING message, `23/01/22 14:47:38 WARN FairSchedulableBuilder: Fair Scheduler

configuration file not found so jobs will be scheduled in FIFO order. To use

fair scheduling, configure pools in fairscheduler.xml or set

spark.scheduler.allocation.file to a file that contains the configuration.`

> Instead, why not simply rely on returning None here ?

Got it. I understand your point about the `template` file. The reason why I

tried to use template file is that I cannot put the real `fairscheduler.xml`

file because it can be used already in the production.

> We should not be relying on template file - in deployments, template file

can be invalid - admin's are not expecting it to be read by spark.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #39555: [SPARK-42051][SQL] Codegen Support for HiveGenericUDF

LuciferYang commented on code in PR #39555:

URL: https://github.com/apache/spark/pull/39555#discussion_r1083687701

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala:

##

@@ -192,6 +194,48 @@ private[hive] case class HiveGenericUDF(

override protected def withNewChildrenInternal(newChildren:

IndexedSeq[Expression]): Expression =

copy(children = newChildren)

+ override protected def doGenCode(ctx: CodegenContext, ev: ExprCode):

ExprCode = {

+val refTerm = ctx.addReferenceObj("this", this)

+val childrenEvals = children.map(_.genCode(ctx))

+

+val setDeferredObjects = childrenEvals.zipWithIndex.map {

+ case (eval, i) =>

+val deferredObjectAdapterClz =

classOf[DeferredObjectAdapter].getCanonicalName

+s"""

+ |if (${eval.isNull}) {

+ | (($deferredObjectAdapterClz)

$refTerm.deferredObjects()[$i]).set(null);

+ |} else {

+ | (($deferredObjectAdapterClz)

$refTerm.deferredObjects()[$i]).set(${eval.value});

+ |}

+ |""".stripMargin

+}

+

+val resultType = CodeGenerator.boxedType(dataType)

+val resultTerm = ctx.freshName("result")

+ev.copy(code =

+ code"""

+ |${childrenEvals.map(_.code).mkString("\n")}

+ |${setDeferredObjects.mkString("\n")}

+ |$resultType $resultTerm = null;

+ |boolean ${ev.isNull} = false;

+ |try {

+ | $resultTerm = ($resultType) $refTerm.unwrapper().apply(

+ |$refTerm.function().evaluate($refTerm.deferredObjects()));

+ | ${ev.isNull} = $resultTerm == null;

+ |} catch (Throwable e) {

+ | throw QueryExecutionErrors.failedExecuteUserDefinedFunctionError(

Review Comment:

For safety, better to add a case check the exception scenario

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #39555: [SPARK-42051][SQL] Codegen Support for HiveGenericUDF

LuciferYang commented on code in PR #39555:

URL: https://github.com/apache/spark/pull/39555#discussion_r1083674070

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala:

##

@@ -154,17 +154,19 @@ private[hive] case class HiveGenericUDF(

function.initializeAndFoldConstants(argumentInspectors.toArray)

}

+ // Visible for codegen

@transient

- private lazy val unwrapper = unwrapperFor(returnInspector)

+ lazy val unwrapper = unwrapperFor(returnInspector)

@transient

private lazy val isUDFDeterministic = {

val udfType = function.getClass.getAnnotation(classOf[HiveUDFType])

udfType != null && udfType.deterministic() && !udfType.stateful()

}

+ // Visible for codegen

@transient

- private lazy val deferredObjects = argumentInspectors.zip(children).map {

case (inspect, child) =>

+ lazy val deferredObjects = argumentInspectors.zip(children).map { case

(inspect, child) =>

Review Comment:

ditto

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala:

##

@@ -192,6 +194,48 @@ private[hive] case class HiveGenericUDF(

override protected def withNewChildrenInternal(newChildren:

IndexedSeq[Expression]): Expression =

copy(children = newChildren)

+ override protected def doGenCode(ctx: CodegenContext, ev: ExprCode):

ExprCode = {

+val refTerm = ctx.addReferenceObj("this", this)

+val childrenEvals = children.map(_.genCode(ctx))

+

+val setDeferredObjects = childrenEvals.zipWithIndex.map {

+ case (eval, i) =>

+val deferredObjectAdapterClz =

classOf[DeferredObjectAdapter].getCanonicalName

+s"""

+ |if (${eval.isNull}) {

+ | (($deferredObjectAdapterClz)

$refTerm.deferredObjects()[$i]).set(null);

Review Comment:

The initial value of `func` is null.

`set(null)` seem to be a protective operation

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala:

##

@@ -154,17 +154,19 @@ private[hive] case class HiveGenericUDF(

function.initializeAndFoldConstants(argumentInspectors.toArray)

}

+ // Visible for codegen

@transient

- private lazy val unwrapper = unwrapperFor(returnInspector)

+ lazy val unwrapper = unwrapperFor(returnInspector)

Review Comment:

change to public should add type annotation

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala:

##

@@ -192,6 +194,48 @@ private[hive] case class HiveGenericUDF(

override protected def withNewChildrenInternal(newChildren:

IndexedSeq[Expression]): Expression =

copy(children = newChildren)

+ override protected def doGenCode(ctx: CodegenContext, ev: ExprCode):

ExprCode = {

+val refTerm = ctx.addReferenceObj("this", this)

+val childrenEvals = children.map(_.genCode(ctx))

+

+val setDeferredObjects = childrenEvals.zipWithIndex.map {

+ case (eval, i) =>

+val deferredObjectAdapterClz =

classOf[DeferredObjectAdapter].getCanonicalName

+s"""

+ |if (${eval.isNull}) {

+ | (($deferredObjectAdapterClz)

$refTerm.deferredObjects()[$i]).set(null);

+ |} else {

+ | (($deferredObjectAdapterClz)

$refTerm.deferredObjects()[$i]).set(${eval.value});

+ |}

+ |""".stripMargin

+}

+

+val resultType = CodeGenerator.boxedType(dataType)

+val resultTerm = ctx.freshName("result")

+ev.copy(code =

+ code"""

+ |${childrenEvals.map(_.code).mkString("\n")}

+ |${setDeferredObjects.mkString("\n")}

+ |$resultType $resultTerm = null;

+ |boolean ${ev.isNull} = false;

+ |try {

+ | $resultTerm = ($resultType) $refTerm.unwrapper().apply(

+ |$refTerm.function().evaluate($refTerm.deferredObjects()));

+ | ${ev.isNull} = $resultTerm == null;

+ |} catch (Throwable e) {

+ | throw QueryExecutionErrors.failedExecuteUserDefinedFunctionError(

Review Comment:

For safety, better to add a case check to exception scenario

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

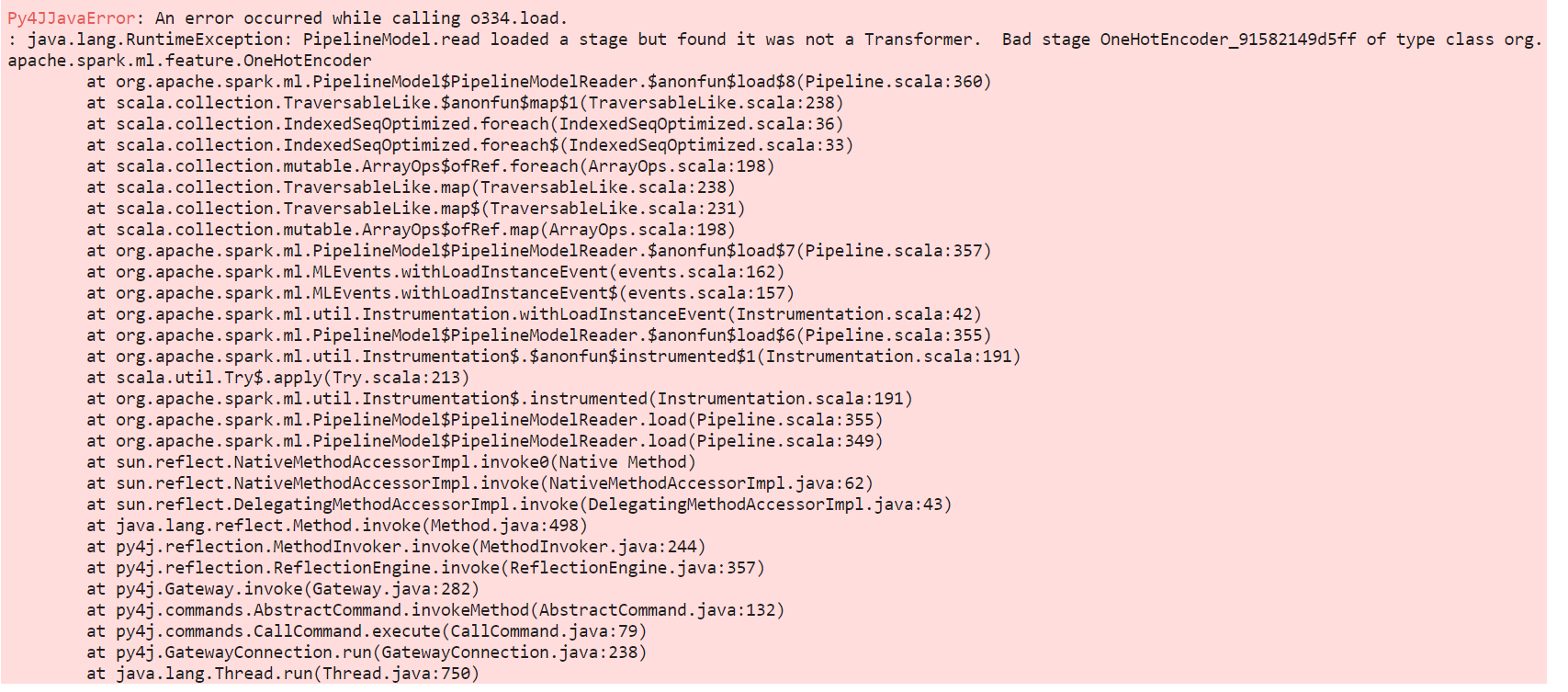

[GitHub] [spark] purple-dude commented on pull request #30889: [SPARK-33398] Fix loading tree models prior to Spark 3.0

purple-dude commented on PR #30889: URL: https://github.com/apache/spark/pull/30889#issuecomment-1399881202 Hi All, I have trained a random forest model in pyspark version 2.4 but I am unable to reload it in pyspark version 3.0.3 but it gives below error :  Please suggest how should I proceed ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #39703: [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler

dongjoon-hyun commented on code in PR #39703: URL: https://github.com/apache/spark/pull/39703#discussion_r1083690034 ## conf/fairscheduler-default.xml.template: ## @@ -0,0 +1,26 @@ + + + + + + +FAIR +1 +0 + + Review Comment: This is not for testing, @mridulm . As mentioned in https://github.com/apache/spark/pull/39703#pullrequestreview-1264907510, we already have a testing resource, `fairscheduler.xml`, not a template. In addition, the content of `conf/fairscheduler.xml.template` is not matched with the expected default behavior. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39705: [SPARK-41488][SQL] Assign name to _LEGACY_ERROR_TEMP_1176 (and 1177)

itholic commented on PR #39705: URL: https://github.com/apache/spark/pull/39705#issuecomment-1399864027 I referred to code path in `sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/unresolved.scala` below: ```scala case class GetViewColumnByNameAndOrdinal( viewName: String, colName: String, ordinal: Int, expectedNumCandidates: Int, // viewDDL is used to help user fix incompatible schema issue for permanent views // it will be None for temp views. viewDDL: Option[String]) ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] imhunterand commented on pull request #39566: Patched()Fix Protobuf Java vulnerable to Uncontrolled Resource Consumption

imhunterand commented on PR #39566: URL: https://github.com/apache/spark/pull/39566#issuecomment-1399849534 **Hi!** @everyone @apache any update is last week's ago for waited fixed. could you `merged` this pull-request as fixed/patched. Kind regards, -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39705: [SPARK-41488][SQL] Assign name to _LEGACY_ERROR_TEMP_1176

itholic commented on PR #39705: URL: https://github.com/apache/spark/pull/39705#issuecomment-1399837315 cc @srielau @MaxGekk @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39501: [SPARK-41295][SPARK-41296][SQL] Rename the error classes

itholic commented on PR #39501: URL: https://github.com/apache/spark/pull/39501#issuecomment-1399836266 @srowen Could you happen to help creating JIRA account for @NarekDW when you find some time?? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39501: [SPARK-41295][SPARK-41296][SQL] Rename the error classes

itholic commented on PR #39501: URL: https://github.com/apache/spark/pull/39501#issuecomment-1399836177 Oh, I just submit a PR for SPARK-41488, so please take a look SPARK-41302 when you have some time. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #39691: [SPARK-31561][SQL] Add QUALIFY clause

itholic commented on code in PR #39691:

URL: https://github.com/apache/spark/pull/39691#discussion_r1083665941

##

sql/core/src/test/resources/sql-tests/results/window.sql.out:

##

@@ -1342,3 +1342,139 @@ org.apache.spark.sql.AnalysisException

"windowName" : "w"

}

}

+

+

+-- !query

+SELECT val_long,

+ val_date,

+ max(val) OVER (partition BY val_date) AS m

+FROM testdata

+WHERE val_long > 2

+QUALIFY m > 2 AND m < 10

+-- !query schema

+struct

+-- !query output

+2147483650 2020-12-31 3

+2147483650 2020-12-31 3

+

+

+-- !query

+SELECT val_long,

+ val_date,

+ val

+FROM testdata QUALIFY max(val) OVER (partition BY val_date) >= 3

+-- !query schema

+struct

+-- !query output

+1 2017-08-01 1

+1 2017-08-01 3

+1 2017-08-01 NULL

+2147483650 2020-12-31 2

+2147483650 2020-12-31 3

+NULL 2017-08-01 1

+

+

+-- !query

+SELECT val_date,

+ val * sum(val) OVER (partition BY val_date) AS w

+FROM testdata

+QUALIFY w > 10

+-- !query schema

+struct

+-- !query output

+2017-08-01 15

+2020-12-31 15

+

+

+-- !query

+SELECT w.val_date

+FROM testdata w

+JOIN testdata w2 ON w.val_date=w2.val_date

+QUALIFY row_number() OVER (partition BY w.val_date ORDER BY w.val) IN (2)

+-- !query schema

+struct

+-- !query output

+2017-08-01

+2020-12-31

+

+

+-- !query

+SELECT val_date,

+ count(val_long) OVER (partition BY val_date) AS w

+FROM testdata

+GROUP BY val_date,

+ val_long

+HAVING Sum(val) > 1

+QUALIFY w = 1

+-- !query schema

+struct

+-- !query output

+2017-08-01 1

+2017-08-03 1

+2020-12-31 1

+

+

+-- !query

+SELECT val_date,

+ val_long,

+ Sum(val)

+FROM testdata

+GROUP BY val_date,

+ val_long

+HAVING Sum(val) > 1

+QUALIFY count(val_long) OVER (partition BY val_date) IN(SELECT 1)

+-- !query schema

+struct

+-- !query output

+2017-08-01 1 4

+2017-08-03 3 2

+2020-12-31 2147483650 5

+

+

+-- !query

+SELECT val_date,

+ val_long

+FROM testdata

+QUALIFY count(val_long) OVER (partition BY val_date) > 1 AND val > 1

+-- !query schema

+struct

+-- !query output

+2017-08-01 1

+2020-12-31 2147483650

+2020-12-31 2147483650

+

+

+-- !query

+SELECT val_date,

+ val_long

+FROM testdata

+QUALIFY val > 1

+-- !query schema

+struct<>

+-- !query output

+org.apache.spark.sql.AnalysisException

+{

+ "errorClass" : "_LEGACY_ERROR_TEMP_1032",

Review Comment:

Sure! Will follow-up after merging this PR jus in case to avoid unexpected

conflicts.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic opened a new pull request, #39705: [SPARK-41488][SQL] Assign name to _LEGACY_ERROR_TEMP_1176

itholic opened a new pull request, #39705: URL: https://github.com/apache/spark/pull/39705 ### What changes were proposed in this pull request? This PR proposes to assign name to _LEGACY_ERROR_TEMP_1176, "INCOMPATIBLE_VIEW_SCHEMA_CHANGE". ### Why are the changes needed? We should assign proper name to _LEGACY_ERROR_TEMP_* ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? `./build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite*` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on a diff in pull request #39703: [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler

mridulm commented on code in PR #39703:

URL: https://github.com/apache/spark/pull/39703#discussion_r1083660245

##

conf/fairscheduler-default.xml.template:

##

@@ -0,0 +1,26 @@

+

+

+

+

+

+

+FAIR

+1

+0

+

+

Review Comment:

There is a `conf/fairscheduler.xml.template` - why do we need this ?

If it is for testing, move it as a resource there instead of in conf ?

##

core/src/main/scala/org/apache/spark/scheduler/SchedulableBuilder.scala:

##

@@ -86,10 +87,17 @@ private[spark] class FairSchedulableBuilder(val rootPool:

Pool, sc: SparkContext

logInfo(s"Creating Fair Scheduler pools from default file:

$DEFAULT_SCHEDULER_FILE")

Some((is, DEFAULT_SCHEDULER_FILE))

} else {

- logWarning("Fair Scheduler configuration file not found so jobs will

be scheduled in " +

-s"FIFO order. To use fair scheduling, configure pools in

$DEFAULT_SCHEDULER_FILE or " +

-s"set ${SCHEDULER_ALLOCATION_FILE.key} to a file that contains the

configuration.")

- None

+ val is =

Utils.getSparkClassLoader.getResourceAsStream(DEFAULT_SCHEDULER_TEMPLATE_FILE)

+ if (is != null) {

+logInfo("Creating Fair Scheduler pools from default template file:

" +

+ s"$DEFAULT_SCHEDULER_TEMPLATE_FILE.")

+Some((is, DEFAULT_SCHEDULER_TEMPLATE_FILE))

+ } else {

+logWarning("Fair Scheduler configuration file not found so jobs

will be scheduled in " +

+ s"FIFO order. To use fair scheduling, configure pools in

$DEFAULT_SCHEDULER_FILE " +

+ s"or set ${SCHEDULER_ALLOCATION_FILE.key} to a file that

contains the configuration.")

+None

+ }

Review Comment:

We should not be relying on template file - in deployments, template file

can be invalid - admin's are not expecting it to be read by spark.

Instead, why not simply rely on returning `None` here ?

Note - if this is only for testing, we can special case it that way via

`spark.testing`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

itholic commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083661917

##

python/pyspark/sql/connect/client.py:

##

@@ -365,6 +385,15 @@ def __init__(

# Parse the connection string.

self._builder = ChannelBuilder(connectionString, channelOptions)

self._user_id = None

+self._retry_policy = {

+"max_retries": 15,

+"backoff_multiplier": 4,

+"initial_backoff": 50,

+"max_backoff": 6,

+}

Review Comment:

Thanks for the context

Looks good if it's enough to test!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39701: [SPARK-41489][SQL] Assign name to _LEGACY_ERROR_TEMP_2415

itholic commented on PR #39701: URL: https://github.com/apache/spark/pull/39701#issuecomment-1399797647 cc @MaxGekk @srielau @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39702: [SPARK-41487][SQL] Assign name to _LEGACY_ERROR_TEMP_1020

itholic commented on PR #39702: URL: https://github.com/apache/spark/pull/39702#issuecomment-1399797614 cc @MaxGekk @srielau @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39700: [SPARK-41490][SQL] Assign name to _LEGACY_ERROR_TEMP_2441

itholic commented on PR #39700: URL: https://github.com/apache/spark/pull/39700#issuecomment-1399797488 cc @MaxGekk @srielau @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083643562

##

python/pyspark/sql/connect/client.py:

##

@@ -365,6 +385,15 @@ def __init__(

# Parse the connection string.

self._builder = ChannelBuilder(connectionString, channelOptions)

self._user_id = None

+self._retry_policy = {

+"max_retries": 15,

+"backoff_multiplier": 4,

+"initial_backoff": 50,

+"max_backoff": 6,

+}

Review Comment:

These values modeled roughly after the GRPC retry policies. In this case

this gives us enough time for the system to be ready.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #39642: [SPARK-41677][CORE][SQL][SS][UI] Add Protobuf serializer for `StreamingQueryProgressWrapper`

LuciferYang commented on code in PR #39642:

URL: https://github.com/apache/spark/pull/39642#discussion_r1083643178

##

core/src/main/protobuf/org/apache/spark/status/protobuf/store_types.proto:

##

@@ -765,3 +765,54 @@ message PoolData {

optional string name = 1;

repeated int64 stage_ids = 2;

}

+

+message StateOperatorProgress {

+ optional string operator_name = 1;

+ int64 num_rows_total = 2;

+ int64 num_rows_updated = 3;

+ int64 all_updates_time_ms = 4;

+ int64 num_rows_removed = 5;

+ int64 all_removals_time_ms = 6;

+ int64 commit_time_ms = 7;

+ int64 memory_used_bytes = 8;

+ int64 num_rows_dropped_by_watermark = 9;

+ int64 num_shuffle_partitions = 10;

+ int64 num_state_store_instances = 11;

+ map custom_metrics = 12;

Review Comment:

[a904a27](https://github.com/apache/spark/pull/39642/commits/a904a27919a47cebf3784a8756f46b3237b4be46)

check/test all map

[699ebd1](https://github.com/apache/spark/pull/39642/commits/699ebd1e6c3905722d0b09ff11e5dccc31813d3c)

add `setJMapField` function to `Utils`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083643160

##

python/pyspark/sql/connect/client.py:

##

@@ -531,12 +560,16 @@ def _analyze(self, plan: pb2.Plan, explain_mode: str =

"extended") -> AnalyzeRes

req.explain.explain_mode = pb2.Explain.ExplainMode.CODEGEN

else: # formatted

req.explain.explain_mode = pb2.Explain.ExplainMode.FORMATTED

-

try:

-resp = self._stub.AnalyzePlan(req,

metadata=self._builder.metadata())

-if resp.client_id != self._session_id:

-raise SparkConnectException("Received incorrect session

identifier for request.")

-return AnalyzeResult.fromProto(resp)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+resp = self._stub.AnalyzePlan(req,

metadata=self._builder.metadata())

+if resp.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

Review Comment:

Done.

##

python/pyspark/sql/connect/client.py:

##

@@ -567,54 +602,48 @@ def _execute_and_fetch(

logger.info("ExecuteAndFetch")

m: Optional[pb2.ExecutePlanResponse.Metrics] = None

-

batches: List[pa.RecordBatch] = []

try:

-for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

-if b.client_id != self._session_id:

-raise SparkConnectException(

-"Received incorrect session identifier for request."

-)

-if b.metrics is not None:

-logger.debug("Received metric batch.")

-m = b.metrics

-if b.HasField("arrow_batch"):

-logger.debug(

-f"Received arrow batch rows={b.arrow_batch.row_count} "

-f"size={len(b.arrow_batch.data)}"

-)

-

-with pa.ipc.open_stream(b.arrow_batch.data) as reader:

-for batch in reader:

-assert isinstance(batch, pa.RecordBatch)

-batches.append(batch)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

+if b.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

Review Comment:

Done

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695: URL: https://github.com/apache/spark/pull/39695#discussion_r1083642374 ## python/pyspark/sql/tests/connect/test_connect_basic.py: ## @@ -2591,6 +2591,73 @@ def test_unsupported_io_functions(self): getattr(df.write, f)() +@unittest.skipIf(not should_test_connect, connect_requirement_message) +class ClientTests(unittest.TestCase): +def test_retry_error_handling(self): +# Helper class for wrapping the test. +class TestError(grpc.RpcError, Exception): +def __init__(self, code: grpc.StatusCode): +self._code = code + +def code(self): +return self._code + +def stub(retries, w, code): +w["counter"] += 1 +if w["counter"] < retries: +raise TestError(code) + +from pyspark.sql.connect.client import Retrying Review Comment: Done -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695: URL: https://github.com/apache/spark/pull/39695#discussion_r1083642191 ## python/pyspark/sql/connect/client.py: ## @@ -640,6 +669,136 @@ def _handle_error(self, rpc_error: grpc.RpcError) -> NoReturn: raise SparkConnectException(str(rpc_error)) from None +class RetryState: +""" +Simple state helper that captures the state between retries of the exceptions. It +keeps track of the last exception thrown and how many in total. when the task +finishes successfully done() returns True. +""" + +def __init__(self) -> None: +self._exception: Optional[BaseException] = None +self._done = False +self._count = 0 + +def set_exception(self, exc: Optional[BaseException]) -> None: +self._exception = exc +self._count += 1 + +def exception(self) -> Optional[BaseException]: +return self._exception + +def set_done(self) -> None: +self._done = True + +def count(self) -> int: +return self._count + +def done(self) -> bool: +return self._done + + +class AttemptManager: +""" +Simple ContextManager that is used to capture the exception thrown inside the context. +""" + +def __init__(self, check: Callable[..., bool], retry_state: RetryState) -> None: +self._retry_state = retry_state +self._can_retry = check + +def __enter__(self) -> None: +pass + +def __exit__( +self, +exc_type: Optional[Type[BaseException]], +exc_val: Optional[BaseException], +exc_tb: Optional[TracebackType], +) -> Optional[bool]: +if isinstance(exc_val, BaseException): +# Swallow the exception. +if self._can_retry(exc_val): +self._retry_state.set_exception(exc_val) +return True +# Bubble up the exception. +return False +else: +self._retry_state.set_done() +return None + + +class Retrying: +""" +This helper class is used as a generator together with a context manager to +allow retrying exceptions in particular code blocks. The Retrying can be configured +with a lambda function that is can be filtered what kind of exceptions should be +retried. + +In addition, there are several parameters that are used to configure the exponential +backoff behavior. + +An example to use this class looks like this: + +for attempt in Retrying(lambda x: isinstance(x, TransientError)): +with attempt: +# do the work. + +""" + +def __init__( +self, +can_retry: Callable[..., bool] = lambda x: True, +max_retries: int = 15, +initial_backoff: int = 50, +max_backoff: int = 6, +backoff_multiplier: float = 4.0, +) -> None: +self._can_retry = can_retry +self._max_retries = max_retries +self._initial_backoff = initial_backoff +self._max_backoff = max_backoff +self._backoff_multiplier = backoff_multiplier + +def __iter__(self) -> Generator[AttemptManager, None, None]: +""" +Generator function to wrap the exception producing code block. +Returns +--- + Review Comment: Done -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695: URL: https://github.com/apache/spark/pull/39695#discussion_r1083641679 ## python/pyspark/sql/connect/client.py: ## @@ -640,6 +669,136 @@ def _handle_error(self, rpc_error: grpc.RpcError) -> NoReturn: raise SparkConnectException(str(rpc_error)) from None +class RetryState: +""" +Simple state helper that captures the state between retries of the exceptions. It +keeps track of the last exception thrown and how many in total. when the task +finishes successfully done() returns True. +""" + +def __init__(self) -> None: +self._exception: Optional[BaseException] = None +self._done = False +self._count = 0 + +def set_exception(self, exc: Optional[BaseException]) -> None: +self._exception = exc +self._count += 1 + +def exception(self) -> Optional[BaseException]: +return self._exception + +def set_done(self) -> None: +self._done = True + +def count(self) -> int: +return self._count + +def done(self) -> bool: +return self._done + + +class AttemptManager: +""" +Simple ContextManager that is used to capture the exception thrown inside the context. +""" + +def __init__(self, check: Callable[..., bool], retry_state: RetryState) -> None: +self._retry_state = retry_state +self._can_retry = check + +def __enter__(self) -> None: +pass + +def __exit__( +self, +exc_type: Optional[Type[BaseException]], +exc_val: Optional[BaseException], +exc_tb: Optional[TracebackType], +) -> Optional[bool]: +if isinstance(exc_val, BaseException): +# Swallow the exception. +if self._can_retry(exc_val): +self._retry_state.set_exception(exc_val) +return True +# Bubble up the exception. +return False +else: +self._retry_state.set_done() +return None + + +class Retrying: +""" +This helper class is used as a generator together with a context manager to +allow retrying exceptions in particular code blocks. The Retrying can be configured +with a lambda function that is can be filtered what kind of exceptions should be +retried. + +In addition, there are several parameters that are used to configure the exponential +backoff behavior. + +An example to use this class looks like this: + +for attempt in Retrying(lambda x: isinstance(x, TransientError)): +with attempt: +# do the work. + Review Comment: Done -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083641393

##

python/pyspark/sql/connect/client.py:

##

@@ -567,54 +602,48 @@ def _execute_and_fetch(

logger.info("ExecuteAndFetch")

m: Optional[pb2.ExecutePlanResponse.Metrics] = None

-

batches: List[pa.RecordBatch] = []

try:

-for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

-if b.client_id != self._session_id:

-raise SparkConnectException(

-"Received incorrect session identifier for request."

-)

-if b.metrics is not None:

-logger.debug("Received metric batch.")

-m = b.metrics

-if b.HasField("arrow_batch"):

-logger.debug(

-f"Received arrow batch rows={b.arrow_batch.row_count} "

-f"size={len(b.arrow_batch.data)}"

-)

-

-with pa.ipc.open_stream(b.arrow_batch.data) as reader:

-for batch in reader:

-assert isinstance(batch, pa.RecordBatch)

-batches.append(batch)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

+if b.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

+)

+if b.metrics is not None:

+logger.debug("Received metric batch.")

+m = b.metrics

+if b.HasField("arrow_batch"):

+logger.debug(

+f"Received arrow batch

rows={b.arrow_batch.row_count} "

+f"size={len(b.arrow_batch.data)}"

+)

+

+with pa.ipc.open_stream(b.arrow_batch.data) as

reader:

+for batch in reader:

+assert isinstance(batch, pa.RecordBatch)

+batches.append(batch)

except grpc.RpcError as rpc_error:

self._handle_error(rpc_error)

-

assert len(batches) > 0

-

table = pa.Table.from_batches(batches=batches)

-

metrics: List[PlanMetrics] = self._build_metrics(m) if m is not None

else []

-

return table, metrics

def _handle_error(self, rpc_error: grpc.RpcError) -> NoReturn:

"""

Error handling helper for dealing with GRPC Errors. On the server

side, certain

exceptions are enriched with additional RPC Status information. These

are

unpacked in this function and put into the exception.

-

To avoid overloading the user with GRPC errors, this message explicitly

swallows the error context from the call. This GRPC Error is logged

however,

and can be enabled.

-

Review Comment:

done

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083641323

##

python/pyspark/sql/connect/client.py:

##

@@ -567,54 +602,48 @@ def _execute_and_fetch(

logger.info("ExecuteAndFetch")

m: Optional[pb2.ExecutePlanResponse.Metrics] = None

-

batches: List[pa.RecordBatch] = []

try:

-for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

-if b.client_id != self._session_id:

-raise SparkConnectException(

-"Received incorrect session identifier for request."

-)

-if b.metrics is not None:

-logger.debug("Received metric batch.")

-m = b.metrics

-if b.HasField("arrow_batch"):

-logger.debug(

-f"Received arrow batch rows={b.arrow_batch.row_count} "

-f"size={len(b.arrow_batch.data)}"

-)

-

-with pa.ipc.open_stream(b.arrow_batch.data) as reader:

-for batch in reader:

-assert isinstance(batch, pa.RecordBatch)

-batches.append(batch)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

+if b.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

+)

+if b.metrics is not None:

+logger.debug("Received metric batch.")

+m = b.metrics

+if b.HasField("arrow_batch"):

+logger.debug(

+f"Received arrow batch

rows={b.arrow_batch.row_count} "

+f"size={len(b.arrow_batch.data)}"

+)

+

+with pa.ipc.open_stream(b.arrow_batch.data) as

reader:

+for batch in reader:

+assert isinstance(batch, pa.RecordBatch)

+batches.append(batch)

except grpc.RpcError as rpc_error:

self._handle_error(rpc_error)

-

assert len(batches) > 0

-

table = pa.Table.from_batches(batches=batches)

-

metrics: List[PlanMetrics] = self._build_metrics(m) if m is not None

else []

-

return table, metrics

def _handle_error(self, rpc_error: grpc.RpcError) -> NoReturn:

"""

Error handling helper for dealing with GRPC Errors. On the server

side, certain

exceptions are enriched with additional RPC Status information. These

are

unpacked in this function and put into the exception.

-

To avoid overloading the user with GRPC errors, this message explicitly

swallows the error context from the call. This GRPC Error is logged

however,

and can be enabled.

-

Parameters

--

rpc_error : grpc.RpcError

RPC Error containing the details of the exception.

-

Review Comment:

Done

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083641243

##

python/pyspark/sql/connect/client.py:

##

@@ -567,54 +602,48 @@ def _execute_and_fetch(

logger.info("ExecuteAndFetch")

m: Optional[pb2.ExecutePlanResponse.Metrics] = None

-

batches: List[pa.RecordBatch] = []

try:

-for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

-if b.client_id != self._session_id:

-raise SparkConnectException(

-"Received incorrect session identifier for request."

-)

-if b.metrics is not None:

-logger.debug("Received metric batch.")

-m = b.metrics

-if b.HasField("arrow_batch"):

-logger.debug(

-f"Received arrow batch rows={b.arrow_batch.row_count} "

-f"size={len(b.arrow_batch.data)}"

-)

-

-with pa.ipc.open_stream(b.arrow_batch.data) as reader:

-for batch in reader:

-assert isinstance(batch, pa.RecordBatch)

-batches.append(batch)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+for b in self._stub.ExecutePlan(req,

metadata=self._builder.metadata()):

+if b.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

+)

+if b.metrics is not None:

+logger.debug("Received metric batch.")

+m = b.metrics

+if b.HasField("arrow_batch"):

+logger.debug(

+f"Received arrow batch

rows={b.arrow_batch.row_count} "

+f"size={len(b.arrow_batch.data)}"

+)

+

+with pa.ipc.open_stream(b.arrow_batch.data) as

reader:

+for batch in reader:

+assert isinstance(batch, pa.RecordBatch)

+batches.append(batch)

except grpc.RpcError as rpc_error:

self._handle_error(rpc_error)

-

assert len(batches) > 0

-

table = pa.Table.from_batches(batches=batches)

-

metrics: List[PlanMetrics] = self._build_metrics(m) if m is not None

else []

-

return table, metrics

def _handle_error(self, rpc_error: grpc.RpcError) -> NoReturn:

"""

Error handling helper for dealing with GRPC Errors. On the server

side, certain

exceptions are enriched with additional RPC Status information. These

are

unpacked in this function and put into the exception.

-

Review Comment:

Done.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on a diff in pull request #39695: [SPARK-42156] SparkConnectClient supports RetryPolicies now

grundprinzip commented on code in PR #39695:

URL: https://github.com/apache/spark/pull/39695#discussion_r1083640867

##

python/pyspark/sql/connect/client.py:

##

@@ -531,12 +560,16 @@ def _analyze(self, plan: pb2.Plan, explain_mode: str =

"extended") -> AnalyzeRes

req.explain.explain_mode = pb2.Explain.ExplainMode.CODEGEN

else: # formatted

req.explain.explain_mode = pb2.Explain.ExplainMode.FORMATTED

-

try:

-resp = self._stub.AnalyzePlan(req,

metadata=self._builder.metadata())

-if resp.client_id != self._session_id:

-raise SparkConnectException("Received incorrect session

identifier for request.")

-return AnalyzeResult.fromProto(resp)

+for attempt in Retrying(SparkConnectClient.retry_exception,

**self._retry_policy):

+with attempt:

+resp = self._stub.AnalyzePlan(req,

metadata=self._builder.metadata())

+if resp.client_id != self._session_id:

+raise SparkConnectException(

+"Received incorrect session identifier for

request."

Review Comment:

This is unchanged code from the old handling, but I can add the two IDs

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #39642: [SPARK-41677][CORE][SQL][SS][UI] Add Protobuf serializer for `StreamingQueryProgressWrapper`

LuciferYang commented on code in PR #39642:

URL: https://github.com/apache/spark/pull/39642#discussion_r1083634888

##

sql/core/src/test/scala/org/apache/spark/status/protobuf/sql/KVStoreProtobufSerializerSuite.scala:

##

@@ -271,4 +278,254 @@ class KVStoreProtobufSerializerSuite extends

SparkFunSuite {

assert(result.endTimestamp == input.endTimestamp)

}

}

+

+ test("StreamingQueryProgressWrapper") {

+val normalInput = {

+ val stateOperatorProgress0 = new StateOperatorProgress(

+operatorName = "op-0",

+numRowsTotal = 1L,

+numRowsUpdated = 2L,

+allUpdatesTimeMs = 3L,

+numRowsRemoved = 4L,

+allRemovalsTimeMs = 5L,

+commitTimeMs = 6L,

+memoryUsedBytes = 7L,

+numRowsDroppedByWatermark = 8L,

+numShufflePartitions = 9L,

+numStateStoreInstances = 10L,

+customMetrics = Map(

+ "custom-metrics-00" -> JLong.valueOf("10"),

+ "custom-metrics-01" -> JLong.valueOf("11")).asJava

+ )

+ val stateOperatorProgress1 = new StateOperatorProgress(

+operatorName = null,

+numRowsTotal = 11L,

+numRowsUpdated = 12L,

+allUpdatesTimeMs = 13L,

+numRowsRemoved = 14L,

+allRemovalsTimeMs = 15L,

+commitTimeMs = 16L,

+memoryUsedBytes = 17L,

+numRowsDroppedByWatermark = 18L,

+numShufflePartitions = 19L,

+numStateStoreInstances = 20L,

+customMetrics = Map(

+ "custom-metrics-10" -> JLong.valueOf("20"),

+ "custom-metrics-11" -> JLong.valueOf("21")).asJava

+ )

+ val source0 = new SourceProgress(

+description = "description-0",

+startOffset = "startOffset-0",

+endOffset = "endOffset-0",

+latestOffset = "latestOffset-0",

+numInputRows = 10L,

+inputRowsPerSecond = 11.0,

+processedRowsPerSecond = 12.0,

+metrics = Map(

+ "metrics-00" -> "10",

+ "metrics-01" -> "11").asJava

+ )

+ val source1 = new SourceProgress(

+description = "description-1",

+startOffset = "startOffset-1",

+endOffset = "endOffset-1",

+latestOffset = "latestOffset-1",

+numInputRows = 20L,

+inputRowsPerSecond = 21.0,

+processedRowsPerSecond = 22.0,

+metrics = Map(

+ "metrics-10" -> "20",

+ "metrics-11" -> "21").asJava

+ )

+ val sink = new SinkProgress(

+description = "sink-0",

+numOutputRows = 30,

+metrics = Map(

+ "metrics-20" -> "30",

+ "metrics-21" -> "31").asJava

+ )

+ val schema1 = new StructType()

+.add("c1", "long")

+.add("c2", "double")

+ val schema2 = new StructType()

+.add("rc", "long")

+.add("min_q", "string")

+.add("max_q", "string")

+ val observedMetrics = Map[String, Row](

+"event1" -> new GenericRowWithSchema(Array(1L, 3.0d), schema1),

+"event2" -> new GenericRowWithSchema(Array(1L, "hello", "world"),

schema2)

+ ).asJava

+ val progress = new StreamingQueryProgress(

+id = UUID.randomUUID(),

+runId = UUID.randomUUID(),

+name = "name-1",

+timestamp = "2023-01-03T09:14:04.175Z",

+batchId = 1L,

+batchDuration = 2L,

+durationMs = Map(

+ "duration-0" -> JLong.valueOf("10"),

+ "duration-1" -> JLong.valueOf("11")).asJava,

+eventTime = Map(

+ "eventTime-0" -> "20",

+ "eventTime-1" -> "21").asJava,

+stateOperators = Array(stateOperatorProgress0, stateOperatorProgress1),

+sources = Array(source0, source1),

+sink = sink,

+observedMetrics = observedMetrics

+ )

+ new StreamingQueryProgressWrapper(progress)

+}

+

+val withNullInput = {

+ val stateOperatorProgress0 = new StateOperatorProgress(

+operatorName = null,

+numRowsTotal = 1L,

+numRowsUpdated = 2L,

+allUpdatesTimeMs = 3L,

+numRowsRemoved = 4L,

+allRemovalsTimeMs = 5L,

+commitTimeMs = 6L,

+memoryUsedBytes = 7L,

+numRowsDroppedByWatermark = 8L,

+numShufflePartitions = 9L,

+numStateStoreInstances = 10L,

+customMetrics = null

+ )

+ val stateOperatorProgress1 = new StateOperatorProgress(

+operatorName = null,

+numRowsTotal = 11L,

+numRowsUpdated = 12L,

+allUpdatesTimeMs = 13L,

+numRowsRemoved = 14L,

+allRemovalsTimeMs = 15L,

+commitTimeMs = 16L,

+memoryUsedBytes = 17L,

+numRowsDroppedByWatermark = 18L,

+numShufflePartitions = 19L,

+numStateStoreInstances = 20L,

+customMetrics = null

+ )

+ val source0 = new SourceProgress(

+description = null,

+startOffset = null,

+endOffset = null,

+latestOffset = null,

+numInputRows = 10L,

+

[GitHub] [spark] LuciferYang commented on a diff in pull request #39642: [SPARK-41677][CORE][SQL][SS][UI] Add Protobuf serializer for `StreamingQueryProgressWrapper`

LuciferYang commented on code in PR #39642:

URL: https://github.com/apache/spark/pull/39642#discussion_r1083634709

##

sql/core/src/test/scala/org/apache/spark/status/protobuf/sql/KVStoreProtobufSerializerSuite.scala:

##

@@ -271,4 +278,254 @@ class KVStoreProtobufSerializerSuite extends

SparkFunSuite {

assert(result.endTimestamp == input.endTimestamp)

}

}

+

+ test("StreamingQueryProgressWrapper") {

+val normalInput = {

Review Comment:

Two objects are manually created due to many fields can be null

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wangyum commented on a diff in pull request #39691: [SPARK-31561][SQL] Add QUALIFY clause

wangyum commented on code in PR #39691:

URL: https://github.com/apache/spark/pull/39691#discussion_r1083632796

##

sql/core/src/test/resources/sql-tests/results/window.sql.out:

##

@@ -1342,3 +1342,139 @@ org.apache.spark.sql.AnalysisException

"windowName" : "w"

}

}

+

+

+-- !query

+SELECT val_long,

+ val_date,

+ max(val) OVER (partition BY val_date) AS m

+FROM testdata

+WHERE val_long > 2

+QUALIFY m > 2 AND m < 10

+-- !query schema

+struct

+-- !query output

+2147483650 2020-12-31 3

+2147483650 2020-12-31 3

+

+

+-- !query

+SELECT val_long,

+ val_date,

+ val

+FROM testdata QUALIFY max(val) OVER (partition BY val_date) >= 3

+-- !query schema

+struct

+-- !query output

+1 2017-08-01 1

+1 2017-08-01 3

+1 2017-08-01 NULL

+2147483650 2020-12-31 2

+2147483650 2020-12-31 3

+NULL 2017-08-01 1

+

+

+-- !query

+SELECT val_date,

+ val * sum(val) OVER (partition BY val_date) AS w

+FROM testdata

+QUALIFY w > 10

+-- !query schema

+struct

+-- !query output

+2017-08-01 15

+2020-12-31 15

+

+

+-- !query

+SELECT w.val_date

+FROM testdata w

+JOIN testdata w2 ON w.val_date=w2.val_date

+QUALIFY row_number() OVER (partition BY w.val_date ORDER BY w.val) IN (2)

+-- !query schema

+struct

+-- !query output

+2017-08-01

+2020-12-31

+

+

+-- !query

+SELECT val_date,

+ count(val_long) OVER (partition BY val_date) AS w

+FROM testdata

+GROUP BY val_date,

+ val_long

+HAVING Sum(val) > 1

+QUALIFY w = 1

+-- !query schema

+struct

+-- !query output

+2017-08-01 1

+2017-08-03 1

+2020-12-31 1

+

+

+-- !query

+SELECT val_date,

+ val_long,

+ Sum(val)

+FROM testdata

+GROUP BY val_date,

+ val_long

+HAVING Sum(val) > 1

+QUALIFY count(val_long) OVER (partition BY val_date) IN(SELECT 1)

+-- !query schema

+struct

+-- !query output

+2017-08-01 1 4

+2017-08-03 3 2

+2020-12-31 2147483650 5

+

+

+-- !query

+SELECT val_date,

+ val_long

+FROM testdata

+QUALIFY count(val_long) OVER (partition BY val_date) > 1 AND val > 1

+-- !query schema

+struct

+-- !query output

+2017-08-01 1

+2020-12-31 2147483650

+2020-12-31 2147483650

+

+

+-- !query

+SELECT val_date,

+ val_long

+FROM testdata

+QUALIFY val > 1

+-- !query schema

+struct<>

+-- !query output

+org.apache.spark.sql.AnalysisException

+{

+ "errorClass" : "_LEGACY_ERROR_TEMP_1032",

Review Comment:

+1 for follow-up.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wangyum commented on a diff in pull request #39691: [SPARK-31561][SQL] Add QUALIFY clause

wangyum commented on code in PR #39691: URL: https://github.com/apache/spark/pull/39691#discussion_r1083632654 ## docs/sql-ref-syntax-qry-select-qualify.md: ## @@ -0,0 +1,98 @@ +--- +layout: global +title: QUALIFY Clause +displayTitle: QUALIFY Clause +license: | + Licensed to the Apache Software Foundation (ASF) under one or more + contributor license agreements. See the NOTICE file distributed with + this work for additional information regarding copyright ownership. + The ASF licenses this file to You under the Apache License, Version 2.0 + (the "License"); you may not use this file except in compliance with + the License. You may obtain a copy of the License at + + http://www.apache.org/licenses/LICENSE-2.0 + + Unless required by applicable law or agreed to in writing, software + distributed under the License is distributed on an "AS IS" BASIS, + WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + See the License for the specific language governing permissions and + limitations under the License. +--- + +### Description + +The `QUALIFY` clause is used to filter the results of +[window functions](sql-ref-syntax-qry-select-window.md). To use QUALIFY, +at least one window function is required to be present in the SELECT list or the QUALIFY clause. Review Comment: OK. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39694: [SPARK-42152][BUILD][CORE][SQL][PYTHON][PROTOBUF] Use `_` instead of `-` for relocation package name

LuciferYang commented on PR #39694: URL: https://github.com/apache/spark/pull/39694#issuecomment-1399724538 also cc @srowen @dongjoon-hyun @HyukjinKwon -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39694: [SPARK-42152][BUILD][CORE][SQL][PYTHON][PROTOBUF] Use `_` instead of `-` for relocation package name

LuciferYang commented on PR #39694: URL: https://github.com/apache/spark/pull/39694#issuecomment-1399724327 @itholic Thanks for your suggestion, pr description has been updated -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39684: [SPARK-42140][CORE] Handle null string values in ApplicationEnvironmentInfoWrapper/ApplicationInfoWrapper

LuciferYang commented on PR #39684: URL: https://github.com/apache/spark/pull/39684#issuecomment-1399721398 thanks @gengliangwang -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39683: [SPARK-42144][CORE][SQL] Handle null string values in StageDataWrapper/StreamBlockData/StreamingQueryData

LuciferYang commented on PR #39683: URL: https://github.com/apache/spark/pull/39683#issuecomment-1399721161 thanks @gengliangwang -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun opened a new pull request, #39704: [MINOR][DOCS] Add all supported resource managers in `Scheduling Within an Application` section

dongjoon-hyun opened a new pull request, #39704: URL: https://github.com/apache/spark/pull/39704 … ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #39657: [SPARK-42123][SQL] Include column default values in DESCRIBE and SHOW CREATE TABLE output

AmplabJenkins commented on PR #39657: URL: https://github.com/apache/spark/pull/39657#issuecomment-1399670640 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #39660: [SPARK-42128][SQL] Support TOP (N) for MS SQL Server dialect as an alternative to Limit pushdown

AmplabJenkins commented on PR #39660: URL: https://github.com/apache/spark/pull/39660#issuecomment-1399670621 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on a diff in pull request #39660: [SPARK-42128][SQL] Support TOP (N) for MS SQL Server dialect as an alternative to Limit pushdown

srowen commented on code in PR #39660: URL: https://github.com/apache/spark/pull/39660#discussion_r1083570242 ## sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JDBCRDD.scala: ## @@ -307,11 +307,12 @@ private[jdbc] class JDBCRDD( "" } +val myTopExpression: String = dialect.getTopExpression(limit) // SQL Server Limit alternative Review Comment: Oops yes I'm talking about what you're talking about, typo - MS SQL Server I'm OK with it; the alternative is to somehow edit the SQL query down in the MS SQL dialect maybe, I haven't thought about it. It'd be nicer but not sure it's cleaner. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org